One of the most effective ways to grow your business is to gather large amounts of data and turn it into something useful that gives you an advantage over your competitors.

But how do you collect information from all over the web when the volume of data is so large? That's where large-scale web scraping comes in.

What is Large-Scale Web Scraping?

Large-scale web scraping means scraping millions of pages, often many of them at the same time. It can involve scraping thousands of web pages from large websites like Amazon, LinkedIn, or GitHub, or extracting content from thousands of different small websites simultaneously.

The process is automated, usually with a web scraper or crawler.

Here are the key components of large-scale web scraping:

- Data Extraction: The process of retrieving data from websites using web scraping tools like Crawlbase or programming libraries.

- Data Parsing: Data parsing is the process of structuring and cleaning the extracted data to make it usable for analysis.

- Data Storage: Storing the scraped data in databases or file systems for further processing and analysis.

- Data Analysis: Using statistical techniques and machine learning algorithms to gain insights from the extracted data.
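To make the four components concrete, here is a minimal, standard-library-only sketch of one pass through extraction, parsing, storage, and analysis. It is not how Crawlbase or any particular tool works. The function names (`extract`, `parse`, `store`, `analyze`), the `ProductParser` class, and the CSS class names `product`, `title`, and `price` are hypothetical; real sites use their own markup, so the parser would need to be adapted.

```python
import sqlite3
import urllib.request
from html.parser import HTMLParser


def extract(url, timeout=10):
    """Data extraction: download the raw HTML of one page."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


class ProductParser(HTMLParser):
    """Data parsing: collect title/price text from HYPOTHETICAL product markup
    (elements with class "product" containing "title" and "price" elements)."""

    def __init__(self):
        super().__init__()
        self.items = []     # one dict per element with class "product"
        self._field = None  # "title" or "price" while waiting for that element's text

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.items.append({})
        for field in ("title", "price"):
            if field in classes:
                self._field = field

    def handle_data(self, data):
        text = data.strip()
        if self._field and text and self.items:
            self.items[-1][self._field] = text
            self._field = None


def parse(html):
    """Turn raw HTML into clean (title, price) rows, skipping incomplete items."""
    parser = ProductParser()
    parser.feed(html)
    rows = []
    for item in parser.items:
        if "title" in item and "price" in item:
            price = float(item["price"].replace("$", "").replace(",", ""))
            rows.append((item["title"], price))
    return rows


def store(rows, conn):
    """Data storage: persist rows in a SQLite table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (title TEXT, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", rows)
    conn.commit()


def analyze(conn):
    """Data analysis: a trivial aggregate (row count and average price)."""
    return conn.execute("SELECT COUNT(*), AVG(price) FROM products").fetchone()
```

In use, you would chain the steps, for example `store(parse(extract(url)), conn)` for each listing URL, and then call `analyze(conn)`. At large scale each stage is usually split into its own service or worker pool, but the data flow stays the same.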

Large-Scale Web Scraping vs. Regular Web Scraping

Regular web scraping collects information from a small number of web pages or from a single website. It usually serves a specific task or project and relies on simpler tools. Large-scale web scraping is more ambitious: it gathers lots of data from many sources, or from a large part of a single website. That makes it more complicated, requiring advanced tools and techniques to handle massive amounts of data. In short, regular scraping suits smaller projects, while large-scale scraping is for handling huge datasets and extracting detailed insights.

What is Large-Scale Web Scraping used for?

With the vast amount of information available on the internet, web scraping allows us to extract data from websites and use it for various purposes, such as market research, competitive analysis, and data-driven decision making.

Imagine you want to scrape Amazon products in a category. The category has 20,000 listing pages with 20 items on each page, which adds up to 400,000 product pages to visit and collect data from. In simpler terms, that means making 400,000 HTTP GET requests.

If each web page takes 2.5 seconds to load, you'd spend 400,000 × 2.5 seconds = 1,000,000 seconds, which is more than 11 days just waiting for the pages to load. And keep in mind that this counts only loading time; extracting and saving data from each page would take even longer.
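The arithmetic above assumes pages are loaded one after another. A small sketch makes it easy to see how concurrency changes the picture. The figure of 50 concurrent workers is purely hypothetical, and the formula assumes perfect parallelism, ignoring rate limits, bandwidth, and retries, so real-world gains will be smaller.

```python
SECONDS_PER_DAY = 86_400


def estimate_days(pages, seconds_per_page, concurrent_workers=1):
    """Wall-clock days spent loading pages, assuming perfectly parallel workers."""
    total_seconds = pages * seconds_per_page / concurrent_workers
    return total_seconds / SECONDS_PER_DAY


print(f"sequential: {estimate_days(400_000, 2.5):.2f} days")      # 11.57 days
print(f"50 workers: {estimate_days(400_000, 2.5, 50):.2f} days")  # 0.23 days (about 5.6 hours)
```

This is why large-scale scrapers rely on concurrent requests and distributed workers rather than a single sequential loop.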

Large-scale web scraping lets you gather lots of data at low cost while saving a lot of time, so you can make smarter decisions, for example about product development.

Real-World Use Cases and Success Stories of Large-Scale Web Scraping

Large-scale web scraping has proven invaluable for many businesses and organizations. Companies in the e-commerce industry, for instance, use web scraping to gather product information and pricing data from competitors' websites. This allows them to analyze market trends, adjust their pricing strategies, and stay competitive.

E-commerce

One such success story is a major online retailer that used web scraping to monitor the prices of its competitors' products. By scraping prices from multiple e-commerce websites (the same approach works for sites such as Airbnb), they were able to identify pricing patterns and adjust their own prices accordingly. This not only helped them stay competitive but also increased their profit margins.

Finance

Another example is in the field of finance, where web scraping is used to gather news articles, social media sentiment, and financial data. This information is then used to make informed investment decisions and predict market trends. Large financial institutions, hedge funds, and trading firms heavily rely on web scraping for their data analysis needs.

For instance, a renowned investment firm utilized web scraping to collect news articles and social media sentiment related to specific stocks. By analyzing this data, they were able to identify emerging trends and sentiment shifts, allowing them to make timely investment decisions. This gave them a significant edge in the market and resulted in substantial profits.

Research and Development

Academic institutions and research organizations use web scraping to gather data from various sources, such as scientific journals and databases. This data is then used for analysis, hypothesis testing, and generating insights.

For example, a team of researchers used web scraping to collect data on climate change from multiple sources. By aggregating and analyzing this data, they were able to identify patterns and trends in temperature fluctuations, precipitation levels, and other climate variables. This research contributed to a better understanding of climate change and its impact on the environment.

Marketing

Large-scale web scraping is also used in marketing and lead generation. Companies use web scrapers to extract contact information such as email addresses, along with customer reviews and social media data from Instagram, Facebook, LinkedIn, Twitter, and similar platforms, to identify potential leads and target their marketing campaigns more effectively.

Take, for instance, a global digital marketing agency that used web scraping to extract customer reviews from Walmart. By analyzing these reviews, they were able to identify common pain points and preferences of their target audience. This allowed them to tailor their marketing strategies and improve customer satisfaction, resulting in increased sales and brand loyalty.

Challenges and Limitations in Large-Scale Web Scraping

Despite its advantages, large-scale web scraping comes with its own set of challenges and limitations.

Massive Amounts of Data:

One of the main challenges is the sheer volume of data that needs to be processed. With millions of web pages to scrape, it can be a daunting task to handle and process such massive amounts of data.

In order to overcome this challenge, it is important to have a robust and scalable infrastructure in place. This includes having powerful servers and cloud storage systems that can handle the high volume of data. Additionally, implementing efficient algorithms and data processing techniques can help optimize the scraping process and reduce the time required for data extraction.
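One simple example of an efficient data processing technique is writing results in batches instead of one row at a time. The sketch below streams records from any iterable (so the full dataset never has to sit in memory) and commits one SQLite transaction per batch. The `pages` table, its columns, and the default batch size of 1,000 are illustrative assumptions; a production system would more likely write to a server database or object storage, but the batching idea carries over.

```python
import sqlite3
from itertools import islice


def batched(iterable, size):
    """Yield lists of up to `size` items from `iterable` without loading it all."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch


def save_in_batches(records, conn, batch_size=1_000):
    """Insert (url, title) records in batches; returns the number of records processed."""
    conn.execute("CREATE TABLE IF NOT EXISTS pages (url TEXT PRIMARY KEY, title TEXT)")
    processed = 0
    for batch in batched(records, batch_size):
        with conn:  # one transaction (and one commit) per batch
            conn.executemany("INSERT OR IGNORE INTO pages VALUES (?, ?)", batch)
        processed += len(batch)
    return processed
```

`INSERT OR IGNORE` together with the primary key on `url` also makes re-running a partially failed job safe, since pages already saved are skipped instead of duplicated.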

Anti-Scraping Measures:

Another challenge in large-scale web scraping is dealing with websites that implement anti-scraping measures, such as CAPTCHAs, IP blocking, and other security mechanisms. Website owners put these measures in place to protect their data and prevent unauthorized access.

However, there are ways to work around these measures. One approach is distributed computing, where the scraping task is divided among multiple machines or servers. This allows parallel processing and can significantly speed up the scraping process. Additionally, proxy servers can help bypass IP blocking by routing scraping requests through different IP addresses.
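The combination of parallel requests and proxy rotation can be sketched with Python's standard library alone: a thread pool fetches many URLs concurrently, and each request goes through the next proxy in a round-robin list. This is a single-machine sketch, not a full distributed system, and the proxy addresses below are placeholders for whatever your provider supplies. `ProxyRotator`, `fetch_via_proxy`, and `crawl` are hypothetical names, and the `fetch` parameter exists so you can swap in a different HTTP client.

```python
import itertools
import threading
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Placeholder proxy endpoints (hypothetical); replace with your provider's addresses.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order; safe to share between threads."""

    def __init__(self, proxies):
        self._cycle = itertools.cycle(proxies)
        self._lock = threading.Lock()

    def get(self):
        with self._lock:
            return next(self._cycle)


def fetch_via_proxy(url, proxy, timeout=10):
    """Fetch `url` through an HTTP(S) proxy using only the standard library."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    )
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()


def crawl(urls, rotator, fetch=fetch_via_proxy, workers=8):
    """Fetch many URLs in parallel, each request using the next proxy in the rotation."""
    def task(url):
        return url, fetch(url, rotator.get())

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(task, urls))
```

Threads work well here because scraping is mostly waiting on the network. To scale beyond one machine, the same `crawl` loop would typically run on several workers that pull URLs from a shared queue.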

CAPTCHAs:

CAPTCHAs are designed to distinguish between humans and bots, and they often require users to solve puzzles or enter specific characters. To overcome this challenge, various techniques can be used, such as using OCR (Optical Character Recognition) to automatically solve CAPTCHAs or using third-party CAPTCHA solving services.

Legal and Ethical Aspects:

Moreover, large-scale web scraping requires careful consideration of legal and ethical aspects. It is important to respect the terms of service of websites and comply with any legal restrictions or guidelines. Scraping large amounts of data from a website without permission can lead to legal consequences and damage the reputation of the scraping project.
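A practical first step toward respecting a site's wishes is checking its robots.txt file before crawling. Python's `urllib.robotparser` can evaluate those rules; the sketch below parses an inline example so it runs offline. The robots.txt content and the user-agent string `MyScraperBot` are hypothetical, and note that robots.txt is only one signal: it does not replace reading the site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. Against a live site you would instead call
# RobotFileParser("https://<site>/robots.txt").read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_policy(robots_txt):
    """Parse robots.txt text into a RobotFileParser we can query."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


policy = build_policy(ROBOTS_TXT)
agent = "MyScraperBot"  # hypothetical user-agent string for your scraper
for url in ["https://example.com/products/1", "https://example.com/private/report"]:
    print(url, "allowed" if policy.can_fetch(agent, url) else "disallowed")
print("crawl delay (seconds):", policy.crawl_delay(agent))
```

A scraper would call `can_fetch` before queueing each URL and honor `crawl_delay` (when present) as the minimum pause between requests to that site.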

By having a robust infrastructure, implementing efficient algorithms, using distributed computing and proxy servers, handling CAPTCHAs effectively, and respecting legal and ethical considerations, it is possible to successfully scrape and process massive amounts of data from the web.

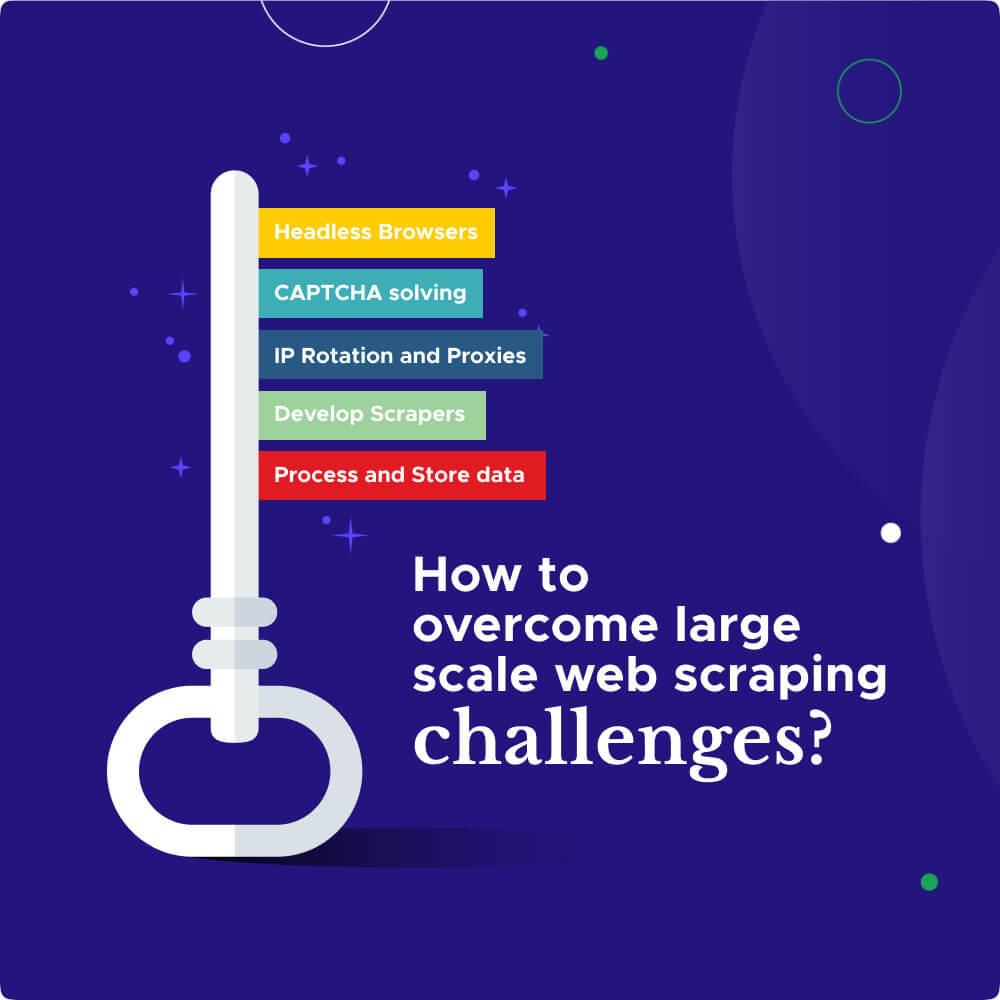

How to Overcome These Challenges?

To perform large-scale web scraping successfully, it is important to follow a systematic approach. Here are the key techniques and steps involved:

Headless Browsers:

Headless browsers run a real browser engine without a visible window, so they can render dynamic, JavaScript-heavy websites and retrieve data that is not present in the initial HTML. They can also mimic user interactions, including mouse movements and clicks.

CAPTCHA Solving:

CAPTCHAs are specifically designed to block automated scraping. However, you can get past them by using a CAPTCHA-solving service, either a third-party one or one built into your web scraping tool.

IP Rotation and Proxies:

Use a web scraping tool that works with proxy services. This helps you avoid getting blocked by the websites you are trying to scrape. For instance, rotating IP addresses spreads requests across many addresses, so a scraper can make more requests without hitting per-IP rate limits or being flagged as suspicious. Rotating residential proxies are recommended for best results.
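Proxy rotation pairs well with a complementary technique: backing off when a site signals that you are going too fast. The sketch below retries on HTTP 429 (Too Many Requests) and 503 (Service Unavailable) with exponential backoff plus random jitter, and gives up immediately on other errors. `fetch_with_backoff` and its parameters are hypothetical names; the `fetch` and `sleep` arguments exist so a different HTTP client (or a test double) can be plugged in.

```python
import random
import time
import urllib.error
import urllib.request

RETRYABLE = {429, 503}  # Too Many Requests, Service Unavailable


def default_fetch(url, timeout=10):
    """Plain standard-library GET request."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def fetch_with_backoff(url, fetch=default_fetch, max_retries=5,
                       base_delay=1.0, sleep=time.sleep):
    """Retry rate-limited requests, waiting base_delay * 2**attempt plus jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except urllib.error.HTTPError as err:
            # Re-raise non-retryable errors, or the last error once retries run out.
            if err.code not in RETRYABLE or attempt == max_retries:
                raise
            delay = base_delay * 2 ** attempt + random.uniform(0, base_delay)
            sleep(delay)
```

The jitter keeps many parallel workers from retrying in lockstep. A fuller version might also honor the `Retry-After` header that some servers send with a 429 response.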

Develop Scrapers:

Use the selected tools and libraries to develop web scrapers that can extract data from the target websites. Crawlbase Crawler is a well-known tool for large-scale data extraction and offers enterprise solutions for clients. Along with the Crawler, it provides a Smart Proxy solution and a Storage API, which make scraping large volumes of data easier to manage.

Process and Store Data:

Clean and structure the extracted data and store it in a suitable format for further analysis. You can also choose a web scraper that provides cloud storage services for the extracted data.
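One format that suits large scraping jobs is JSON Lines: one JSON object per line, so records can be appended as they arrive and read back one at a time without loading the whole file. Below is a minimal sketch with hypothetical helper names (`write_jsonl`, `read_jsonl`) and a temporary file standing in for real storage.

```python
import json
import os
import tempfile


def write_jsonl(records, path):
    """Append one JSON object per line; returns the number of records written."""
    count = 0
    with open(path, "a", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count


def read_jsonl(path):
    """Yield records one at a time, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            if line.strip():
                yield json.loads(line)


# Usage: write two cleaned records to a temporary file and read them back.
demo_path = os.path.join(tempfile.mkdtemp(), "products.jsonl")
write_jsonl([{"title": "Widget", "price": 19.99}, {"title": "Café mug", "price": 8.5}], demo_path)
print(list(read_jsonl(demo_path)))
```

Because each line is independent, JSON Lines files can be split, compressed, and uploaded to cloud storage in chunks, and most data processing frameworks can read them directly.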

Is Large-Scale Web Scraping Legal?

While web scraping offers numerous benefits, it is crucial to be aware of the legal and ethical considerations surrounding its practice.

Firstly, not all websites allow web scraping, and some may even explicitly prohibit it. It is essential to respect website owners’ terms of service and adhere to their scraping policies.

Secondly, web scraping should be done in a responsible and ethical manner. It is important to ensure that the scraping process does not disrupt the normal functioning of websites or violate users’ privacy.

Lastly, it is vital to comply with data protection and privacy regulations when handling scraped data. Organizations should handle the extracted data securely and responsibly, ensuring that personal and sensitive information is protected.
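As one illustration of protecting personal information, the sketch below removes email addresses from scraped text and replaces identifiers such as reviewer names with salted hashes, so records can still be grouped by person without storing who they are. This is a simplified example, not a compliance solution: the regex covers common email formats only, the hashed tokens are hard to reverse only while the salt stays secret, and the function names are hypothetical.

```python
import hashlib
import re

# Simplified pattern; it matches common addresses, not every valid email format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text):
    """Replace every email address in `text` with a placeholder."""
    return EMAIL_RE.sub("[email removed]", text)


def pseudonymize(value, salt):
    """Stable token for an identifier; keep `salt` secret so tokens can't be brute-forced."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]
```

For example, a scraped review could be stored as `{"reviewer": pseudonymize(name, SALT), "text": redact_emails(review_text)}`, where `SALT` is a secret kept outside the dataset.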

Strategies for Handling Big Data from Web Scraping

Large-scale web scraping often results in the collection of massive amounts of data, and processing and analyzing it can be a daunting task. Here are some strategies for handling and processing big data from web scraping:

Firstly, data preprocessing techniques such as data cleaning, data transformation, and data normalization can improve the quality and usability of the extracted data.
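Here is a small sketch of what cleaning, transformation, and normalization can look like for scraped product records: text is Unicode-normalized and whitespace collapsed, prices are converted from strings to numbers, URLs are canonicalized, and duplicates are dropped. The record fields (`url`, `title`, `price`) and the helper names are hypothetical, and `parse_price` assumes US-style number formatting ("." as decimal point, "," as thousands separator), which will not hold for every site.

```python
import re
import unicodedata
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url):
    """Lowercase scheme and host and drop the #fragment; path and query are kept as-is."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path, parts.query, ""))


def parse_price(text):
    """'$1,299.00' -> 1299.0. Assumes US-style formatting; returns None if no digits."""
    digits = re.sub(r"[^\d.]", "", text)
    return float(digits) if digits else None


def clean_record(raw):
    """Transform one raw record into normalized fields."""
    title = unicodedata.normalize("NFKC", raw["title"])  # e.g. non-breaking space -> space
    title = re.sub(r"\s+", " ", title).strip()           # collapse runs of whitespace
    return {"url": canonical_url(raw["url"]), "title": title, "price": parse_price(raw["price"])}


def clean_and_dedupe(records):
    """Clean every record and keep only the first one seen for each canonical URL."""
    unique = {}
    for raw in records:
        record = clean_record(raw)
        unique.setdefault(record["url"], record)
    return list(unique.values())
```

Deduplicating on a canonical URL matters at scale because the same product is often reachable through several links (different fragments, tracking anchors, or host capitalization).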

Secondly, using distributed computing frameworks such as Apache Hadoop or Apache Spark can help distribute the processing workload across multiple machines, enabling faster and more efficient data processing.

Furthermore, employing parallel processing techniques and utilizing cloud computing resources can significantly speed up data processing and analysis.

Final Words

Large-scale web scraping is a powerful technique that enables businesses and organizations to extract valuable insights from the vast amount of data available on the internet. By overcoming its challenges, understanding its importance, and following best practices, organizations can gain a competitive edge and make data-driven decisions in today's digital landscape.