Best Buy, founded in 1966 by Richard M. Schulze and Gary Smoliak, has become a dominant player in the electronics retail sector. What started as a small store in St. Paul, Minnesota, has now grown into a retail giant with over 1,000 stores across America. Best Buy offers a wide range of consumer electronics, appliances, and entertainment products, making it a one-stop shop for tech enthusiasts and everyday consumers alike.

As of December 2023, the website had recorded 131.9 million visits from around the globe, underlining its significance as a digital marketplace. The breadth of Best Buy’s product catalog and the dynamic nature of its website make it a compelling target for data extraction.

Why scrape data from Best Buy? Because of the insights waiting to be uncovered. With so many users navigating its digital aisles, Best Buy is a rich source of data on trends, pricing dynamics, and consumer preferences. Whether you’re a market researcher, a pricing strategist, or a tech enthusiast, the ability to scrape Best Buy gives you access to information that supports informed decision-making and strategic planning.

Table of Contents

- BestBuy.com SERP Layout

- Key Elements to Scrape

- Best Buy Data Use Cases

- Installing Python and Necessary Libraries

- Choosing a Development IDE

- Fetch HTML Using Requests Library

- Inspecting the Best Buy Website for CSS selectors

- Using BeautifulSoup for HTML Parsing

- Drawbacks of the DIY Approach

- Crawlbase Registration and API Token

- Accessing the Crawling API with Crawlbase Library

- Extracting Target Product Data Effortlessly

- Handling Pagination

Understanding the Best Buy Website

Best Buy’s website, BestBuy.com, presents a structured and dynamic landscape that holds valuable information for those venturing into web scraping.

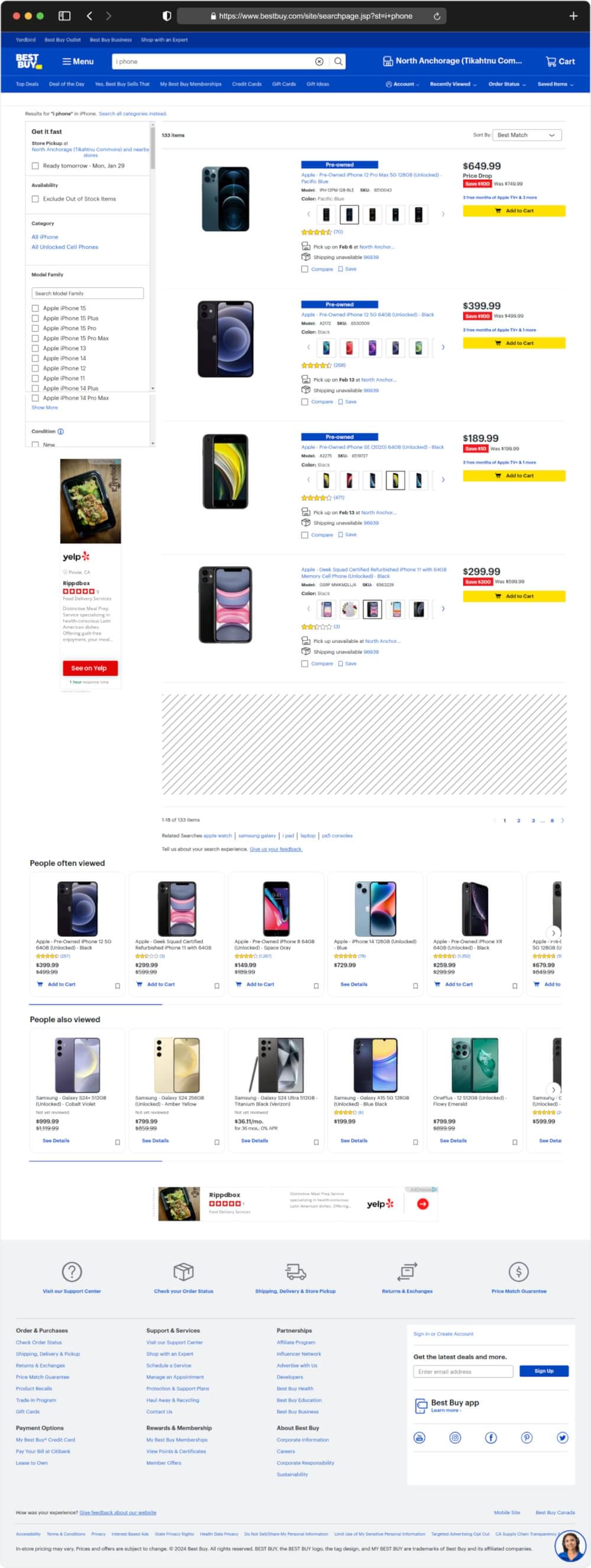

BestBuy.com SERP Layout

Think of BestBuy.com as a well-organized digital catalog. Like a newspaper with headlines, main stories, and side sections, Best Buy’s search engine results page (SERP) follows a carefully designed format:

- Product Showcase: Like the main stories in a newspaper, this section shows the most relevant and popular products for your search.

- Search Bar: This is like the big headline in a newspaper. You use it to type in what you want to find on BestBuy.com.

- Search Filters: These are like the organized sections on the side. They help you narrow down your search by letting you choose things like the brand, price range, and customer ratings. It makes it easier for you to find exactly what you want.

- People Also/Often Viewed: Shown alongside the main product showcase, this section lists other products that shoppers like you have viewed or bought. It’s like getting suggestions from other shoppers to help you discover new things.

- Footer: This is at the bottom, like the bottom part of a newspaper. It has links to different parts of the Best Buy website and information about policies and terms. It’s like the conclusion to your shopping journey, with everything you might need.

Understanding this layout equips our Best Buy scraper to navigate the virtual aisles efficiently.

Key Elements to Scrape

Now, armed with an understanding of Best Buy’s SERP Layout, let’s pinpoint the essential data points for extraction:

- Product Listings: The primary goal when scraping Best Buy is to obtain the list of products relevant to the search.

- Product Names: Just as a newspaper’s headlines provide a quick idea of the main stories, the product names serve as the titles of each listed item.

- Product Descriptions: Beneath each product name, users typically find a brief description or snippet that summarizes the product’s features, so there is no need to click through.

- Often Viewed Products: This section shows products that other shoppers have frequently viewed. It’s like a recommendation from the online community, providing users with additional options based on popular choices.

- Promotional Content: Occasionally, the initial results may include promotional content. Recognizing these as promotional and differentiating them from organic listings is crucial.

Understanding Best Buy’s SERP data points guides our scraping efforts, allowing us to gather pertinent information from Best Buy’s digital shelves efficiently.

Best Buy Data Use Cases

The data collected from Best Buy’s website can be put to use in many different ways. Here are some examples:

- Market Insights: Understand pricing trends, consumer preferences, and brand popularity for informed market decisions.

- Competitive Pricing: Stay competitive by gaining insights into competitors’ pricing strategies and market pricing dynamics.

- Tech Updates: Stay in the know about the latest gadgets, innovations, and product launches for tech enthusiasts.

- Consumer Behavior Analysis: Shape marketing strategies by analyzing how consumers interact with products and respond to promotions.

- Inventory Optimization: Efficiently manage inventory with real-time information on product availability, stock levels, and demand trends.

Understanding these use cases highlights the practicality and significance of web scraping in extracting actionable information from Best Buy’s digital marketplace.

Setting Up Your Environment

To kickstart your journey into web scraping, let’s set up an environment that streamlines the process. Here are the steps to get you started:

Installing Python and Necessary Libraries

Begin by installing Python, the language we’ll use for this scraping task. Visit the official Python website, download the latest version, and follow the installation instructions. Once Python is up and running, install the essential web scraping libraries:

- Requests Library: This versatile library simplifies HTTP requests, allowing you to retrieve web pages effortlessly. Install it using the following command:

```bash
pip install requests
```

- Beautiful Soup: A powerful HTML parser, Beautiful Soup aids in extracting data from HTML and XML files. Install it using the following command:

```bash
pip install beautifulsoup4
```

- Crawlbase Library: To use the Crawlbase Crawling API from Python, install the Crawlbase library with the following command:

```bash
pip install crawlbase
```
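Before writing any scraping code, it can help to confirm that all three packages are importable. The short script below is a minimal sketch that uses only the standard library’s `importlib.util.find_spec`; note that the `beautifulsoup4` package is imported as `bs4`. The `REQUIRED_MODULES` mapping and `check_installed` function are illustrative names, not part of any library.

```python
import importlib.util

# pip package name -> name used in "import" statements.
# Note: beautifulsoup4 is imported as "bs4".
REQUIRED_MODULES = {
    "requests": "requests",
    "beautifulsoup4": "bs4",
    "crawlbase": "crawlbase",
}


def check_installed(modules=REQUIRED_MODULES):
    """Return {pip_name: True/False} without actually importing the modules."""
    return {
        pip_name: importlib.util.find_spec(import_name) is not None
        for pip_name, import_name in modules.items()
    }


if __name__ == "__main__":
    for pip_name, ok in check_installed().items():
        status = "OK" if ok else f"missing (run: pip install {pip_name})"
        print(f"{pip_name}: {status}")
```

Any package reported as missing can be installed with the matching `pip install` command above.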

Choosing a Development IDE

Choosing the right Integrated Development Environment (IDE) can make your coding experience more enjoyable. Here are some options to consider:

- Visual Studio Code (VSCode): A user-friendly and feature-packed code editor. Get it from the official VSCode website.

- PyCharm: A robust Python IDE with advanced functionality. You can download the free Community Edition from the JetBrains website.

- Google Colab: An online platform that lets you write and run Python code collaboratively in the cloud. Access it through Google Colab.

Once you have Python installed and the required libraries set up, along with your chosen coding tool ready, you’re all set for a smooth journey to scrape Best Buy. Let’s now jump into the coding part and grab valuable data from BestBuy.com.

DIY Approach with Python

In our example, we’ll scrape the search results for “i phone” on the Best Buy website. Let’s break the process down into digestible steps:

Fetch HTML Using Requests Library

Start with the Requests library. This Python module sends HTTP requests to Best Buy’s servers on your behalf, so with a few lines of code you can fetch a page’s HTML content and lay the groundwork for data extraction.

```python
import requests
```

Open your preferred text editor or IDE, copy the code above, and save it in a Python file, for example bestbuy_scraper.py.

Execute the Script:

Open your terminal or command prompt, navigate to the folder where you saved bestbuy_scraper.py, and run the script with the following command:

```bash
python bestbuy_scraper.py
```

When you press Enter, the script sends a request to the Best Buy website, retrieves the HTML content, and prints it to your terminal.

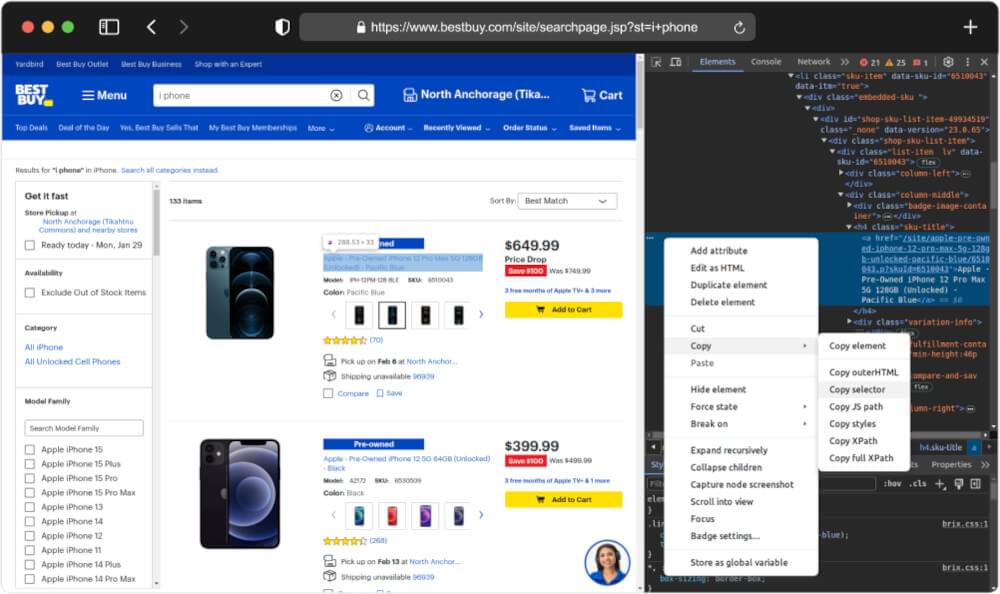
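As a minimal sketch of the fetch step described in this section, the code below builds the search URL and downloads the page with Requests. It assumes Best Buy’s search page is `https://www.bestbuy.com/site/searchpage.jsp` with the search term in the `st` query parameter, and it sends an illustrative browser-like `User-Agent` header; verify both in your browser. `build_search_url` and `fetch_html` are hypothetical helper names.

```python
from urllib.parse import urlencode

# Assumption: Best Buy's search page and its "st" (search term) parameter.
SEARCH_BASE = "https://www.bestbuy.com/site/searchpage.jsp"

# Assumption: an illustrative browser-like User-Agent; sites often treat
# the default python-requests agent differently from a real browser.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"
    )
}


def build_search_url(query):
    """Encode the search term into the URL, e.g. 'i phone' -> '...?st=i+phone'."""
    return f"{SEARCH_BASE}?{urlencode({'st': query})}"


def fetch_html(url, timeout=30):
    """Download a page with Requests and return its HTML as text."""
    import requests  # imported here so build_search_url works without Requests

    response = requests.get(url, headers=HEADERS, timeout=timeout)
    response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
    return response.text


# Example usage (needs network access):
# print(fetch_html(build_search_url("i phone")))
```

Using `urlencode` instead of string concatenation keeps the space in “i phone” and any special characters correctly escaped.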

Inspecting the Best Buy Website for CSS selectors

- Open Developer Tools: Right-click on the webpage in your browser and select ‘Inspect’ (or ‘Inspect Element’). This opens the Developer Tools, where you can explore the page’s HTML structure.

- Traverse HTML Elements: In the Developer Tools, navigate the HTML elements to locate the specific data you want to scrape. Look for distinctive IDs, classes, or tags associated with that information.

- Pinpoint CSS Selectors: Note down the CSS selectors that match the elements of interest. Your Python script will use these selectors to locate and extract the data.

Using BeautifulSoup for HTML Parsing

Once you have the HTML content and the CSS selectors, it’s time to bring in BeautifulSoup. This Python library parses the HTML into a navigable structure, making it easy to locate and extract the relevant information.

In this example, we will extract essential details such as the product title, rating, review count, price, and product page URL for every product listed on the specified Best Buy search page. Let’s extend our previous script to scrape this information from the HTML.

```python
import requests
```

This script uses the BeautifulSoup library to parse the HTML content of the response. It extracts the details we want from the HTML elements corresponding to each product in the search results. The extracted data is organized into a list of dictionaries, where each dictionary represents a single product. The script then prints the results as neatly formatted JSON.
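As an illustrative sketch of this parsing step, the code below selects each product card, reads the five fields listed above, and serializes the list as indented JSON. The CSS selectors in `SELECTORS` are hypothetical placeholders; confirm each one in Developer Tools, because Best Buy’s markup can change at any time. `parse_products`, `text_or_none`, and `to_json` are illustrative helper names, and BeautifulSoup is imported inside `parse_products` so the small helpers work without it.

```python
import json
from urllib.parse import urljoin

# Hypothetical CSS selectors: confirm each one in Developer Tools.
SELECTORS = {
    "item": "li.sku-item",
    "title": "h4.sku-title a",
    "rating": "div.c-ratings-reviews p.visually-hidden",
    "review_count": "span.c-reviews",
    "price": "div.priceView-customer-price span",
}
BASE_URL = "https://www.bestbuy.com"


def text_or_none(node):
    """Return a node's stripped text, or None when the selector matched nothing."""
    return node.get_text(strip=True) if node is not None else None


def parse_products(html):
    """Build one dict per product card found in a search-results page."""
    from bs4 import BeautifulSoup  # lazy import: the other helpers don't need bs4

    soup = BeautifulSoup(html, "html.parser")
    products = []
    for item in soup.select(SELECTORS["item"]):
        link = item.select_one(SELECTORS["title"])
        href = link.get("href") if link is not None else None
        products.append({
            "title": text_or_none(link),
            "rating": text_or_none(item.select_one(SELECTORS["rating"])),
            "review_count": text_or_none(item.select_one(SELECTORS["review_count"])),
            "price": text_or_none(item.select_one(SELECTORS["price"])),
            # urljoin keeps absolute links and resolves relative ones against the site.
            "url": urljoin(BASE_URL, href) if href else None,
        })
    return products


def to_json(products):
    """Format the results as indented JSON for printing."""
    return json.dumps(products, indent=2, ensure_ascii=False)


# Example usage (needs network access and the requests package):
# import requests
# html = requests.get(
#     "https://www.bestbuy.com/site/searchpage.jsp?st=i+phone",
#     headers={"User-Agent": "Mozilla/5.0"}, timeout=30,
# ).text
# print(to_json(parse_products(html)))
```

Returning `None` for a missing field, rather than raising, keeps one malformed product card from aborting the whole page.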

But will the HTML we receive contain the useful information? Let’s look at the output of the script above:

```json
[]
```

The output is an empty list because Best Buy uses JavaScript to generate the search results on its SERP dynamically. When you send a plain HTTP request to the Best Buy URL, the HTML response does not yet contain the product listings, since they are rendered later in the browser, so there is nothing for BeautifulSoup to extract.

Drawbacks of the DIY Approach

While the DIY approach to scraping Best Buy with Python provides hands-on experience, it comes with inherent drawbacks that can impact efficiency and scalability:

Limited Scalability:

- Inefficiency with Large Datasets: DIY scripts may encounter inefficiencies when handling extensive data extraction tasks, leading to performance issues.

- Resource Intensity: Large-scale scraping may strain system resources, affecting the overall performance of the scraping script.

- Rate Limiting and IP Blocking: Best Buy’s servers may impose rate limits, slowing down or blocking requests if they exceed a certain threshold. DIY approaches may struggle to handle rate limiting, leading to disruptions in data retrieval.
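One common way for a DIY scraper to cope with rate limiting is to retry with exponential backoff when the server answers HTTP 429 (Too Many Requests). The sketch below is a generic version of that technique, not a Best Buy-specific rule: `fetch` is any callable you supply that returns a `(status_code, body)` tuple, and `fetch_with_backoff` is a hypothetical helper name. Backoff only softens rate limits; it does nothing against IP blocking.

```python
import random
import time


def fetch_with_backoff(fetch, url, max_retries=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff while it returns HTTP 429.

    fetch must return a (status_code, body) tuple; injecting it keeps the
    retry logic independent of the HTTP library. sleep is injectable for testing.
    """
    for attempt in range(max_retries + 1):
        status, body = fetch(url)
        if status != 429:
            return status, body
        if attempt == max_retries:
            break  # out of retries: hand the last 429 back to the caller
        # Wait roughly 1s, 2s, 4s, ... plus jitter so parallel workers don't sync up.
        delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
        sleep(delay)
    return status, body
```

With Requests, `fetch` could be as simple as `lambda u: (r := requests.get(u, timeout=30)).status_code, r.text` wrapped in a small function.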

Handling Dynamic Content:

- Challenges with JavaScript-Driven Elements: DIY approaches may struggle to interact with dynamically loaded content that relies heavily on JavaScript.

- Incomplete Data Retrieval: In scenarios where dynamic content is prevalent, the DIY method may not capture the entirety of the information.

While the DIY approach provides valuable insights and a deeper understanding of web scraping fundamentals, these drawbacks emphasize the need for a more efficient and scalable solution. In the upcoming sections, we’ll explore the Crawlbase Crawling API—a powerful solution designed to overcome these limitations and streamline the Best Buy scraping process.

Using Crawlbase Crawling API for Best Buy

The Crawlbase Crawling API makes scraping Best Buy much simpler. It is designed to make web scraping easy and efficient for developers, and its parameters let us handle a variety of scraping tasks with little effort.

Here’s a step-by-step guide on harnessing the power of this dedicated API:

Crawlbase Registration and API Token

Fetching Best Buy data using a Crawling API begins with creating an account on the Crawlbase platform. Let’s guide you through the account setup process for Crawlbase:

- Navigate to Crawlbase: Open your web browser and head to the Crawlbase website’s Signup page to kickstart your registration journey.

- Provide Your Credentials: Enter your email address and craft a password for your Crawlbase account. Ensure you fill in the necessary details accurately.

- Verification Process: A verification email may land in your inbox after submitting your details. Look out for it and complete the verification steps outlined in the email.

- Log In: Once your account is verified, return to the Crawlbase website and log in using the credentials you just created.

- Secure Your API Token: Accessing the Crawlbase Crawling API requires an API token, which you can find in your account documentation.

Quick Note: Crawlbase provides two types of tokens: one for static websites and another for dynamic, JavaScript-driven websites. Because Best Buy renders its search results with JavaScript, we will use the JS token.

Bonus: Crawlbase offers an initial allowance of 1,000 free requests for the Crawling API, making it an ideal choice for our web scraping expedition.
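Rather than pasting the JS token into your scripts, you might read it from an environment variable. This minimal sketch assumes a variable named `CRAWLBASE_JS_TOKEN`; the variable name and the `load_js_token` helper are hypothetical, so use whatever fits your setup.

```python
import os

# Hypothetical variable name; choose any name that fits your setup.
TOKEN_ENV_VAR = "CRAWLBASE_JS_TOKEN"


def load_js_token(env_var=TOKEN_ENV_VAR):
    """Read the Crawlbase JS token from the environment instead of hard-coding it."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your Crawlbase JS token")
    return token
```

Keeping the token out of source files also keeps it out of version control.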

Accessing the Crawling API with Crawlbase Library

Integrate the Crawlbase library into your Python environment using the provided API token. The Crawlbase library acts as a bridge, connecting your Python scripts with the robust features of the Crawling API. The provided code snippet demonstrates how to initialize and utilize the Crawling API through the Crawlbase Python library.

```python
from crawlbase import CrawlingAPI
```

Detailed documentation for the Crawling API is available on the Crawlbase platform, along with documentation and additional usage examples for the Crawlbase Python library.
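As a minimal sketch of the initialization described above, the code below creates a `CrawlingAPI` client with your token and fetches a URL, passing the `ajax_wait` and `page_wait` options so JavaScript has time to render. It follows the usage pattern shown in the Crawlbase Python library’s examples (a `CrawlingAPI({'token': ...})` client whose `get(url, options)` returns a dict with `status_code` and `body`); check the library documentation for your installed version. `build_js_options` and `crawl` are hypothetical helper names, and the 5,000 ms wait is an illustrative default.

```python
def build_js_options(page_wait_ms=5000, ajax_wait=True):
    """Crawling API options that give JavaScript time to render the page.

    page_wait is in milliseconds; ajax_wait is passed as the string "true"/"false".
    """
    return {"ajax_wait": "true" if ajax_wait else "false", "page_wait": page_wait_ms}


def crawl(url, token, options=None):
    """Fetch url through the Crawling API; return the HTML text, or None on failure."""
    from crawlbase import CrawlingAPI  # lazy import: build_js_options needs no SDK

    api = CrawlingAPI({"token": token})  # use your JS token for Best Buy
    response = api.get(url, options or build_js_options())
    if response["status_code"] != 200:
        return None
    body = response["body"]
    # The body may arrive as bytes; decode it so it can be handed to a parser.
    return body.decode("utf-8", errors="replace") if isinstance(body, bytes) else body


# Example usage (needs network access and a valid JS token):
# html = crawl("https://www.bestbuy.com/site/searchpage.jsp?st=i+phone", "YOUR_JS_TOKEN")
# print(html[:500] if html else "Request failed")
```

If the results still come back incomplete, increasing `page_wait` is the first knob to try.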

Extracting Target Product Data Effortlessly

With the Crawlbase Crawling API, grabbing details about Best Buy products becomes simple. By using a JS token and tweaking API settings like ajax_wait and page_wait, we can handle JavaScript rendering. Let’s enhance our DIY script by bringing in the Crawling API.

```python
from crawlbase import CrawlingAPI
```

Example Output:

```json
[
```
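Putting the pieces together, the sketch below renders a search page through the Crawling API, parses the product cards with BeautifulSoup, and converts the displayed price into a number so it can feed pricing analyses. The search URL pattern, the CSS selectors, and the `parse_price` and `scrape_search` helpers are assumptions for illustration; the `CrawlingAPI` calls follow the pattern used in the Crawlbase Python library’s examples.

```python
import json
import re
from urllib.parse import urlencode, urljoin

# Assumptions: search URL pattern and CSS selectors; verify them in DevTools.
BASE_URL = "https://www.bestbuy.com"
SEARCH_URL = BASE_URL + "/site/searchpage.jsp?"
SELECTORS = {
    "item": "li.sku-item",
    "title": "h4.sku-title a",
    "price": "div.priceView-customer-price span",
}
# Crawling API options: wait for AJAX calls and give the page 5 s to render.
JS_OPTIONS = {"ajax_wait": "true", "page_wait": 5000}


def parse_price(text):
    """Turn display text such as '$1,099.99' into 1099.99 (None if no number)."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text or "")
    return float(match.group().replace(",", "")) if match else None


def scrape_search(query, token):
    """Render a Best Buy search page via the Crawling API and parse product cards."""
    from bs4 import BeautifulSoup
    from crawlbase import CrawlingAPI

    api = CrawlingAPI({"token": token})  # must be a JS token for rendering
    response = api.get(SEARCH_URL + urlencode({"st": query}), JS_OPTIONS)
    if response["status_code"] != 200:
        return []
    body = response["body"]
    html = body.decode("utf-8", errors="replace") if isinstance(body, bytes) else body

    soup = BeautifulSoup(html, "html.parser")
    results = []
    for item in soup.select(SELECTORS["item"]):
        link = item.select_one(SELECTORS["title"])
        price = item.select_one(SELECTORS["price"])
        href = link.get("href") if link else None
        results.append({
            "title": link.get_text(strip=True) if link else None,
            "url": urljoin(BASE_URL, href) if href else None,
            "price": parse_price(price.get_text() if price else None),
        })
    return results


# Example usage (needs network access and a valid JS token):
# print(json.dumps(scrape_search("i phone", "YOUR_JS_TOKEN"), indent=2))
```

Storing prices as numbers rather than display strings makes sorting and averaging straightforward later on.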

Handling Pagination

Gathering details from Best Buy’s search results means going through several pages, each showing a batch of product listings. To make sure we get all the needed info, we need to deal with pagination. This involves moving through the result pages and asking for more data when necessary.

On its website, Best Buy uses the cp query parameter to handle pagination; it specifies the current page number. For instance, &cp=1 requests the first page, and &cp=2 the second. This parameter lets us collect data from each page methodically and build a complete dataset for analysis.
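A minimal sketch of generating those page URLs is shown below. It assumes the search endpoint `https://www.bestbuy.com/site/searchpage.jsp` with the search term in `st`, and `page_urls` is a hypothetical helper; `urlencode` handles the escaping, so the query “i phone” becomes `st=i+phone`.

```python
from urllib.parse import urlencode

# Assumed search endpoint: "st" carries the search term, "cp" the page number.
SEARCH_BASE = "https://www.bestbuy.com/site/searchpage.jsp"


def page_urls(query, pages):
    """Return search URLs for pages 1..pages, setting cp=1, cp=2, ..."""
    return [
        f"{SEARCH_BASE}?{urlencode({'st': query, 'cp': page})}"
        for page in range(1, pages + 1)
    ]
```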

Let’s upgrade our current script to smoothly manage pagination.

```python
from crawlbase import CrawlingAPI
```
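The loop at the heart of a paginated scraper can be sketched independently of how each page is fetched. In this hypothetical `scrape_all_pages` helper, `fetch_page(page)` is any function you supply that crawls the search URL with `cp=page` and returns the parsed products, for example by combining the Crawling API call and the BeautifulSoup parsing from the earlier sections. It assumes that a page past the end returns no product cards; if Best Buy behaves differently for your query, `max_pages` acts as a hard stop.

```python
import time


def scrape_all_pages(fetch_page, max_pages=10, delay_seconds=1.0, sleep=time.sleep):
    """Collect products page by page until a page is empty or max_pages is reached.

    fetch_page(page_number) should return the list of products on that page.
    sleep is injectable so the loop can be tested without real waiting.
    """
    all_products = []
    for page in range(1, max_pages + 1):
        products = fetch_page(page)
        if not products:  # assumed signal that we ran past the last page
            break
        all_products.extend(products)
        if page < max_pages:
            sleep(delay_seconds)  # pace requests between pages
    return all_products
```

Injecting `fetch_page` keeps the stopping rule and pacing in one place, so switching between the DIY fetcher and the Crawling API does not touch the loop.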

Tip: Crawlbase offers numerous ready-to-use scrapers compatible with our Crawling API; explore the details in our documentation. We also build custom solutions tailored to your specific requirements, designed by our experienced team. This means you won’t have to constantly monitor website changes and CSS selectors: let Crawlbase take care of that for you, so you can concentrate on achieving your objectives. Reach out to us to get started.

Final Thoughts

When scraping Best Buy product data, simplicity and effectiveness matter. The DIY approach involves a learning curve and, as we saw, returns no products when the results are rendered with JavaScript, which makes the Crawlbase Crawling API the smarter choice. Set aside concerns about reliability and scalability and use the Crawlbase Crawling API for a straightforward, reliable, and scalable way to scrape Best Buy.


Web scraping may present challenges, and your success is paramount. Should you require additional guidance or encounter obstacles, don’t hesitate to reach out. Our dedicated team is here to support you on your journey through the world of web scraping. Happy scraping!

Frequently Asked Questions

Q. Is Web Scraping Legal for Best Buy?

Web scraping for Best Buy is generally legal when done responsibly and in compliance with the website’s terms of service. Ensure you review and adhere to Best Buy’s policies to maintain ethical scraping practices. Legal implications can arise if scraping leads to unauthorized access, excessive requests, or violates any applicable laws. It’s crucial to approach web scraping with respect for the website’s guidelines and applicable legal regulations.

Q. How Do I Manage Dynamic Content When Scraping Best Buy with the Crawlbase Crawling API?

Managing dynamic content is a critical aspect of scraping Best Buy with the Crawlbase Crawling API. The API is designed to handle dynamic elements loaded through JavaScript, ensuring comprehensive data retrieval. Utilize parameters such as page_wait and ajax_wait to navigate and capture dynamically generated content, ensuring that your scraping efforts cover all aspects of Best Buy’s web pages. This feature enhances the effectiveness of your scraping script, allowing you to obtain a complete dataset, including content that may load after the initial page load.

Q. Why Would Someone Scrape Product Data from Best Buy SERP?

Scraping product data from Best Buy SERP (Search Engine Results Page) serves various purposes. Businesses and researchers may scrape this data to monitor price fluctuations, analyze market trends, or gather competitive intelligence. It provides valuable insights into product availability, customer reviews, and overall market dynamics, aiding decision-making processes. The Crawlbase Crawling API facilitates this scraping seamlessly, ensuring reliable and efficient data extraction for diverse purposes.

Q. What Measures Does Crawlbase’s Crawling API Take to Avoid IP Blocking?

Crawlbase’s Crawling API incorporates several strategic measures to minimize the risk of IP blocking and ensure a seamless scraping experience:

- Intelligent IP Rotation: The API dynamically rotates IP addresses, preventing overuse of a single IP and reducing the likelihood of being blocked.

- Anti-Bot Measures Handling: Crawlbase is equipped to navigate anti-bot measures, enhancing anonymity and decreasing the chances of detection.

- Smart Rate Limiting: The API manages request rates intelligently, preventing disruptions due to rate limiting and ensuring a steady flow of data retrieval.

These features collectively contribute to a smoother, uninterrupted scraping process while mitigating the risk of website detection and IP blocking.