Web scraping has become an indispensable tool for individuals and businesses alike in today’s data-driven world. It allows us to extract valuable information from websites, turning unstructured data into actionable insights. Among the myriad websites available for scraping, Walmart, one of the world’s largest retailers, stands out as a valuable source of product data. Whether you’re an e-commerce entrepreneur looking to monitor competitor prices or a data enthusiast interested in analyzing market trends, scraping Walmart product pages can provide you with a wealth of data to work with.

In this step-by-step guide, we will explore the art and science of web scraping, focusing specifically on effectively scraping Walmart product pages. We’ll use the Python programming language, a versatile and widely-used tool in the world of web scraping, along with the Crawlbase Crawling API to streamline the process. By the end of this guide, you’ll be equipped with the knowledge and tools to collect product titles, prices, ratings, and other valuable data from Walmart’s vast online catalog.

Before exploring the technical aspects, let’s take a moment to understand why web scraping is essential and why scraping Walmart product pages, in particular, can be a game-changer for various purposes.

Table of Contents

- The importance of web scraping

- Why scrape Walmart?

- Tools and technologies used

- Installing Python and essential libraries

- Creating a virtual environment

- Obtaining a Crawlbase API token

- Layout of Walmart Search Page

- Inspecting HTML to Get CSS Selectors

- Utilizing the Crawlbase Python library

- Managing Parameters and Tailoring Responses

- Scraping Walmart Search Page

- Handling pagination on Search Page

- Storing scraped data in a CSV File

- Storing scraped data in a SQLite Database

1. Getting Started

In this data-driven world, the ability to gather and analyze information from the web has become a powerful asset. This is where web scraping, the process of extracting data from websites, comes into play. It serves as a bridge between the vast ocean of online information and your specific data needs. Whether you work in business or research, or are simply curious, web scraping empowers you to access, analyze, and utilize data that was once locked away in the depths of the internet.

The Importance of Web Scraping

Web scraping is a transformative technique that plays a pivotal role in the era of data-driven decision-making. It entails extracting data from websites, which can then be harnessed for many purposes across a wide range of domains. Whether you’re a business professional, data analyst, researcher, or simply an information enthusiast, web scraping can be a game-changer in your quest for data.

The significance of web scraping lies in its ability to convert unstructured web data into structured datasets amenable to analysis, visualization, and integration into applications. It empowers you to stay informed, make data-backed decisions, and gain a competitive edge in an increasingly digital world. By automating the data collection process, web scraping saves time and resources that would otherwise be spent on manual data entry and monitoring. It opens up new opportunities for research, market analysis, competitive intelligence, and innovation.

Why Scrape Walmart?

In the vast e-commerce landscape, Walmart stands as one of the giants. It boasts an extensive range of products, competitive pricing, and a large customer base. As a result, extracting data from Walmart’s website can provide invaluable insights for various purposes, from market research and price tracking to competitor analysis and inventory management.

- Competitive Intelligence: For businesses, monitoring competitors’ product listings, prices, and customer reviews on Walmart provides valuable market intelligence. You can adjust your pricing strategy, optimize product descriptions, or tailor your marketing efforts based on real-time data.

- Market Research: Web scraping allows you to track market trends and consumer preferences. You can identify emerging products, monitor pricing dynamics, and gain deeper insights into your target audience’s buying behavior.

- Inventory Management: Retailers can use scraped data to synchronize their inventory with Walmart’s product offerings. Real-time data on product availability and pricing ensures that your inventory remains competitive and up-to-date.

- Consumer Feedback: The reviews and ratings of products on Walmart’s platform are a goldmine of customer sentiment. Scraping this data enables you to understand what customers love or dislike about specific products, aiding in product development and enhancement.

- Price Monitoring: Walmart frequently adjusts its product prices to stay competitive. If you’re a retailer or reseller, monitoring these price fluctuations can help you make informed pricing decisions and remain competitive in the market.

2. Tools and Technologies Used

The following tools and technologies will facilitate our journey into the world of web scraping Walmart product pages:

- Python: Python is our programming language of choice due to its simplicity, versatility, and rich ecosystem of libraries. It provides us with the tools to write the scraping code and efficiently process the extracted data.

- Crawlbase Crawling API: While Python equips us with the scripting capabilities, we’ll rely on the Crawlbase Crawling API to fetch Walmart product pages with precision and reliability. Here’s why we’ve chosen to use this API:

- Efficiency: Crawlbase streamlines the process of making HTTP requests to websites, retrieving HTML content, and navigating through web pages. This efficiency is especially valuable when scraping data from large e-commerce websites like Walmart.

- Reliability: The Crawlbase Crawling API is designed to handle the intricacies of web scraping, such as handling cookies, managing headers, and dealing with anti-scraping measures. It ensures we can access the data we need consistently, without interruptions.

- Scalability: Whether you’re scraping a few product pages or thousands of them, Crawlbase offers scalability. The Crawling API can rotate IP addresses, providing anonymity and reducing the risk of being blocked by websites. It can handle both small-scale and large-scale web scraping projects with ease.

- Data Enrichment: Beyond basic HTML content, Crawlbase can extract additional data, such as JavaScript-rendered content, making it a robust choice for scraping dynamic websites.

Now that we’ve established the significance of web scraping and the specific advantages of scraping Walmart’s product pages, let’s dive deeper into the technical aspects. We’ll start by setting up our environment, obtaining the necessary tools, and preparing for the exciting journey of web scraping.

3. Setting Up

Before we embark on our web scraping journey to extract valuable data from Walmart product pages, setting up our environment is essential. This section will guide you through the necessary steps to prepare your system for web scraping. We’ll cover installing Python and essential libraries, creating a virtual environment, and obtaining a Crawlbase API token.

Installing Python and Essential Libraries

Python is the heart of our web scraping operation, and we’ll need a few essential libraries to make our task more manageable. Follow these steps to set up Python and the required libraries:

Python Installation: If you don’t already have Python installed on your system, you can download the latest version from the official Python website. Select the appropriate version for your operating system (Windows, macOS, or Linux).

Package Manager - pip: Python comes with a powerful package manager called pip. It allows you to easily install and manage Python packages. To check if you have pip installed, open your terminal or command prompt and run the following command:

pip --version

- Note: If pip is not installed, the Python installer typically includes it. You can also refer to the official pip documentation for installation instructions.

Essential Libraries: We’ll need three Python libraries for this project:

- Crawlbase Library: The Crawlbase Python library allows us to make HTTP requests to the Crawlbase Crawling API, simplifying the process of fetching web pages and handling the responses. To install it, use pip:

pip install crawlbase

- Beautiful Soup: Beautiful Soup is a Python library that makes it easy to parse HTML and extract data from web pages. To install it, use pip:

pip install beautifulsoup4

- Pandas Library: We’ll also use the Pandas library for efficient data storage and manipulation. Pandas provides powerful data structures and data analysis tools. To install Pandas, use pip:

pip install pandas

With Python and these essential libraries in place, we’re ready to move on to the next step: creating a virtual environment.

Creating a Virtual Environment

Creating a virtual environment is a best practice when working on Python projects. It allows you to isolate project-specific dependencies, preventing conflicts with system-wide packages. To create a virtual environment, follow these steps:

- Open a Terminal or Command Prompt: Launch your terminal or command prompt, depending on your operating system.

- Navigate to Your Project Directory: Use the cd command to navigate to the directory where you plan to work on your web scraping project. For example:

cd path/to/your/project/directory

- Create the Virtual Environment: Run the following command to create a virtual environment:

python -m venv walmart-venv

This command will create a folder named “walmart-venv” in your project directory, containing a clean Python environment.

- Activate the Virtual Environment: Depending on your operating system, use the appropriate command to activate the virtual environment:

- Windows:

walmart-venv\Scripts\activate

- macOS and Linux:

source walmart-venv/bin/activate

Once activated, your terminal prompt should change to indicate that you are now working within the virtual environment.

With your virtual environment set up and activated, you can install project-specific packages and work on your web scraping code in an isolated environment.

Obtaining a Crawlbase API Token

We’ll use the Crawlbase Crawling API to scrape data from websites efficiently. This API simplifies the process of making HTTP requests to websites, handling IP rotation, and managing web obstacles like CAPTCHAs. Here’s how to obtain your Crawlbase API token:

- Visit the Crawlbase Website: Go to the Crawlbase website in your web browser.

- Sign Up or Log In: If you don’t already have an account, sign up for a Crawlbase account. If you have an account, log in.

- Get Your API Token: Once you’re logged in, navigate to the documentation to get your API token. Crawlbase allows users to choose between two token types: a Normal (TCP) token and a JavaScript (JS) token. Opt for the Normal token when dealing with websites that exhibit minimal changes, such as static websites. However, if your target site relies on JavaScript to function or crucial data is generated through JavaScript on the user’s side, the JavaScript token becomes indispensable. For instance, when scraping data from Walmart, the JavaScript token is the key to accessing the desired information. You can get your API token here.

- Keep Your API Token Secure: Your API token is a valuable asset, so keep it secure. Please do not share it publicly, and avoid committing it to version control systems like Git. You’ll use this API token in your Python code to access the Crawlbase Crawling API.
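One common way to keep the token out of your source code is to read it from an environment variable. The following is a minimal sketch under that assumption; the variable name `CRAWLBASE_TOKEN` and the helper `load_crawlbase_token` are hypothetical names chosen for illustration, not part of the Crawlbase library.

```python
import os


def load_crawlbase_token(env_var="CRAWLBASE_TOKEN"):
    """Return the API token stored in an environment variable.

    The default variable name is a hypothetical choice for this sketch;
    use whatever name fits your own setup.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fail early with a clear message instead of sending an empty token.
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Crawlbase token."
        )
    return token
```

With this in place, the token can be passed along when the API client is created, for example `CrawlingAPI({'token': load_crawlbase_token()})`, and it never needs to appear in a file tracked by Git.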

With Python and essential libraries installed, a virtual environment created, and a Crawlbase API token in hand, you’re well-prepared to dive into web scraping Walmart product pages. In the next sections, we’ll explore the structure of Walmart pages and start making HTTP requests to retrieve the data we need.

4. Understanding Walmart Search Page Structure

In this section, we’ll dissect the layout of the Walmart website, identify the key data points we want to scrape, and explore how to inspect the page’s HTML to derive essential CSS selectors for data extraction. Understanding these fundamentals is essential as we embark on our journey to scrape Walmart product pages effectively.

Layout of a Walmart Search Page

To efficiently scrape data from Walmart’s search pages, it’s imperative to understand the carefully crafted layout of these pages. Walmart has designed its search results pages with user experience in mind, but this structured format also lends itself well to web scraping endeavors.

Here’s a detailed breakdown of the essential elements commonly found on a typical Walmart search page:

Search Bar: Positioned prominently at the top of the page, the search bar serves as the gateway to Walmart’s extensive product database. Shoppers use this feature to input their search queries and initiate product exploration.

Search Results Grid: The page presents a grid of product listings just below the search filters. Each listing contains vital information, including the product title, price, and ratings. These data points are the crown jewels of our web scraping mission.

Product Cards: Each product listing is encapsulated within a product card, making it a discrete entity within the grid. These cards usually include an image, the product’s title, price, and ratings. Extracting data from these cards is our primary focus during web scraping.

Pagination Controls: In situations where the search results extend beyond a single page, Walmart thoughtfully includes pagination controls at the bottom of the page. These controls allow users to navigate through additional result pages. As scrapers, we must be equipped to handle pagination for comprehensive data collection.

Filters and Sorting Options: Walmart provides users with various filters and sorting options to refine their search results. While these features are essential for users, they are rarely the focus of web scraping efforts.

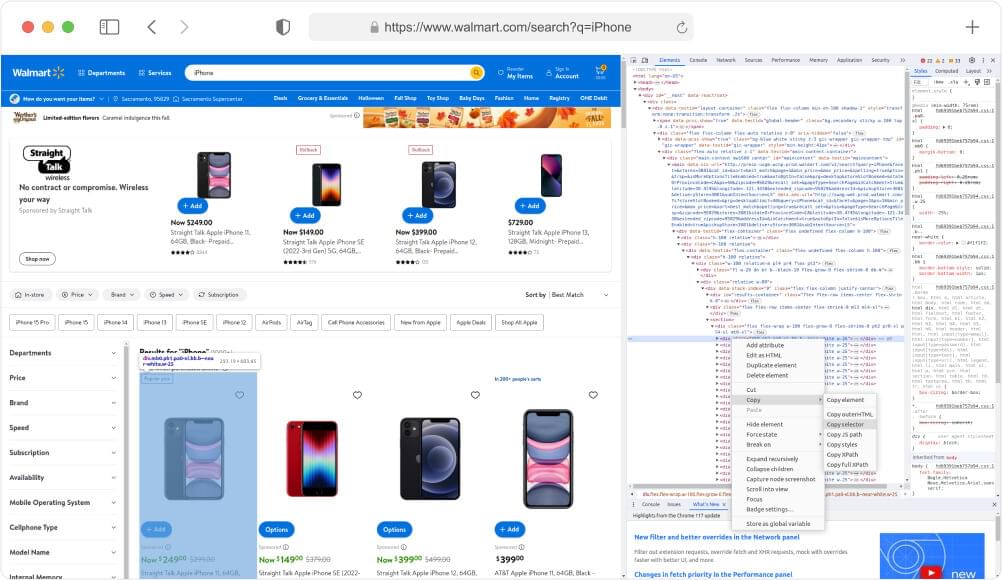

Inspecting HTML to Get CSS Selectors

To extract data from web pages, we must identify the HTML elements that hold the information we want. This is where the browser’s developer tools come to our rescue. Let’s outline how you can inspect the HTML structure and find the CSS selectors you need:

- Open the Web Page: Navigate to the Walmart website and open a search results page that interests you.

- Right-Click and Inspect: Right-click an element you wish to extract (e.g., a product card) and select “Inspect” or “Inspect Element” from the context menu. This opens the browser’s developer tools.

- Locate the HTML Source: Within the developer tools, you can see the HTML source code of the web page. Hover your cursor over elements in the HTML panel and the corresponding portions of the web page are highlighted.

- Identify CSS Selectors: To target a particular element, right-click it within the developer tools and choose “Copy” > “Copy selector.” This copies the CSS selector for that element to your clipboard, ready to use in your scraping code.

By inspecting the HTML and collecting these CSS selectors, you can target exactly the right elements when writing your scraper. In the following sections, these selectors are what we use to pull data out of Walmart’s web pages.

Now that we’ve explored the structure of the Walmart search page and learned how to find CSS selectors, we’re ready to put theory into practice. In the upcoming sections, we’ll use Python, the Crawlbase Crawling API, and Beautiful Soup to scrape data from Walmart’s search pages.

5. Building Your Walmart Scraper

Now that we’ve laid the foundation by understanding the importance of web scraping, setting up our environment, and exploring the intricate structure of Walmart’s website, it’s time to roll up our sleeves and build our Walmart scraper. In this section, we’ll guide you through the process step by step, utilizing the powerful Crawlbase Python library along with Python programming to make your web scraping journey a breeze.

Utilizing the Crawlbase Python Library

The Crawlbase Python library serves as a lightweight and dependency-free wrapper for Crawlbase APIs, streamlining the intricacies of web scraping. This versatile tool simplifies tasks like making HTTP requests to websites, adeptly handling IP rotation, and gracefully maneuvering through web obstacles, including CAPTCHAs. To embark on your web scraping journey with this library, you can seamlessly follow these steps:

- Import: To wield the formidable Crawling API from the Crawlbase library, you must commence by importing the indispensable CrawlingAPI class. This foundational step paves the way for accessing a range of Crawlbase APIs. Here’s a glimpse of how you can import these APIs:

from crawlbase import CrawlingAPI

- Initialization: With your Crawlbase API token securely in hand, the next crucial step involves initializing the CrawlingAPI class. This pivotal moment connects your code to the vast capabilities of Crawlbase:

api = CrawlingAPI({ 'token': 'YOUR_CRAWLBASE_TOKEN' })

- Sending a Request: Once your CrawlingAPI class stands ready with your Crawlbase API token, you’re poised to send requests to your target websites. Here’s a practical example of crafting a GET request tailored for scraping iPhone listings from Walmart’s search page:

response = api.get('https://www.walmart.com/search?q=iPhone')

With the Crawlbase Python library as your trusty companion, you can confidently embark on your web scraping odyssey. For a deeper dive into its capabilities, you can explore further details here.
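The three steps above can be wrapped in a small helper that also checks the outcome of the request. This is a sketch, not the library’s own API: `fetch_html` is a hypothetical name, and it assumes that `api.get()` returns a dict with a `status_code` entry and a `body` entry that may arrive as bytes.

```python
def fetch_html(api, url, options=None):
    """Fetch a page through a CrawlingAPI-like object and return its HTML.

    Assumption: api.get(url, options) returns a dict containing
    'status_code' (int) and 'body' (bytes or str).
    """
    # Pass a copy so the caller's options dict is never modified.
    response = api.get(url, dict(options or {}))
    if response["status_code"] != 200:
        raise RuntimeError(
            f"Request for {url} failed with status {response['status_code']}"
        )
    body = response["body"]
    # Decode bytes so the result can go straight into an HTML parser.
    if isinstance(body, bytes):
        return body.decode("utf-8", errors="replace")
    return body
```

Because the helper only relies on a `get` method, you can pass it the `api` object created above, or a stub object when testing your parsing code offline.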

Managing Parameters and Tailoring Responses

Before embarking on your web scraping journey, it’s crucial to understand how to effectively manage parameters and customize responses using the Crawlbase Crawling API. This flexibility empowers you to craft your requests precisely to meet your unique requirements, offering a truly customized and efficient scraping experience. Let’s delve into the intricacies of parameter management and response customization.

Parameter Management with Crawlbase Crawling API

The Crawlbase Crawling API offers a multitude of parameters at your disposal, enabling you to fine-tune your scraping requests. These parameters can be tailored to suit your unique needs, making your web scraping efforts more efficient and precise. You can explore the complete list of available parameters in the API documentation.

To illustrate parameter usage with a practical example, let’s say you want to scrape Walmart’s iPhone product listings. You can make a GET request to the Walmart search page while specifying parameters such as “user_agent” and “format”:

response = api.get('https://www.walmart.com/search?q=iPhone', {

In this example, we’ve set the “user_agent” parameter to mimic a specific browser user agent and chosen the “JSON” format for the response. These parameters let you tailor each request precisely to your requirements.
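As a rough illustration of how such an options dictionary might be assembled, the sketch below builds one from the two parameters mentioned here, `user_agent` and `format`. The helper name `build_request_options` is hypothetical, and any user agent string you pass in is your own choice rather than a value required by Crawlbase.

```python
def build_request_options(user_agent=None, response_format="html"):
    """Build the options dict passed as the second argument to api.get().

    Only the two parameters discussed in this section are handled;
    the helper itself is a hypothetical convenience, not a library call.
    """
    response_format = response_format.lower()
    if response_format not in ("html", "json"):
        raise ValueError("response_format must be 'html' or 'json'")
    options = {"format": response_format}
    if user_agent:
        # Only include the user agent when the caller supplies one.
        options["user_agent"] = user_agent
    return options
```

A call such as `api.get(url, build_request_options(user_agent=my_agent, response_format="json"))` then sends both parameters along with the request, where `my_agent` is whatever browser string you want to mimic.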

Customizing Response Formats

When interacting with Crawlbase, you have the flexibility to choose between two response formats: HTML and JSON. Your choice depends on your preferences and parsing needs.

HTML Response Format: If you select the HTML response format (which is the default), you’ll receive the raw HTML content of the web page as the response. Additionally, crucial response parameters will be conveniently added to the response headers for easy access. Here’s an example of what such a response might look like:

Headers:

JSON Response Format: Alternatively, you can opt for the JSON response format. In this case, you’ll receive a well-structured JSON object that your application can effortlessly process. This JSON object contains all the necessary information, including the response parameters. Here’s an example of a JSON response:

{

With the ability to manage parameters and customize response formats, you’re equipped with the tools to fine-tune your scraping requests and tailor the output to suit your project’s needs best. This level of control ensures a seamless and efficient web scraping experience, enabling you to extract the precise data you require from Walmart’s web pages.
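If you choose the JSON format, the response has to be decoded before the page HTML can be parsed. The sketch below shows one way to do that. The field names `original_status`, `pc_status`, `url`, and `body` are assumptions about the JSON layout, so check them against the API documentation before relying on them; `parse_json_response` is a hypothetical helper name.

```python
import json


def parse_json_response(raw_body):
    """Decode a JSON-format response into a small dict.

    Assumption: the JSON object carries 'original_status', 'pc_status',
    'url', and 'body' (the page HTML). Verify against the API docs.
    """
    text = raw_body.decode("utf-8") if isinstance(raw_body, bytes) else raw_body
    payload = json.loads(text)
    return {
        # Status values may arrive as strings, so normalize them to int.
        "original_status": int(payload.get("original_status", 0)),
        "pc_status": int(payload.get("pc_status", 0)),
        "url": payload.get("url"),
        "html": payload.get("body", ""),
    }
```

The `html` entry of the result can then be handed to the same parsing code you would use for an HTML-format response.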

Scraping Walmart Search Page

Now that we’ve armed ourselves with a solid understanding of web scraping fundamentals and how to harness the Crawlbase Python library effectively, it’s time to embark on the practical journey of scraping Walmart’s search page. In this developer-focused section, we’ll construct a Python script that adeptly captures product data from Walmart’s search results page.

This script encapsulates the essence of web scraping: making HTTP requests, parsing HTML content, and extracting the critical information we seek.

# Import necessary libraries

In this script, we import BeautifulSoup and the Crawlbase Python library. After initializing the CrawlingAPI class with your Crawlbase API token, we define the search query, construct the Walmart search page URL, and make a GET request using the Crawlbase API.

Upon a successful request (status code 200), we extract and parse the HTML content of the search page with BeautifulSoup. We then focus on product containers, extracting essential product details like title, price, and rating.

These details are organized into a list for further processing, and the script concludes by printing the scraped product data. This script provides a practical demonstration of extracting valuable information from Walmart’s search results page using web scraping techniques.
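To make the extraction step concrete without any extra installs, here is a simplified sketch that uses Python’s built-in `html.parser` module in place of Beautiful Soup. The markup it expects is hypothetical: a `data-item-id` attribute marks each product card, and `data-automation-id` values of `product-title`, `product-price`, and `product-rating` mark the fields. Walmart’s real attributes may differ and change over time, so treat these names as placeholders for the selectors you find in the developer tools.

```python
from html.parser import HTMLParser

# Hypothetical attribute values mapped to the output field names.
FIELDS = {
    "product-title": "title",
    "product-price": "price",
    "product-rating": "rating",
}


class ProductCardParser(HTMLParser):
    """Collect title, price, and rating from each product card."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "data-item-id" in attrs:
            # A new card starts; missing fields stay None.
            self.products.append({"title": None, "price": None, "rating": None})
        field = FIELDS.get(attrs.get("data-automation-id"))
        if field and self.products:
            self._field = field

    def handle_data(self, data):
        text = data.strip()
        if self._field and text:
            self.products[-1][self._field] = text

    def handle_endtag(self, tag):
        # Stop capturing once the current element closes.
        self._field = None


def extract_products(html):
    """Return a list of product dicts parsed from search-page HTML."""
    parser = ProductCardParser()
    parser.feed(html)
    parser.close()
    return parser.products
```

Products without a rating simply keep `None` in that field, which mirrors the need to handle cards where some details are absent.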

Example Output:

[

Handling pagination on the Search Page

Walmart search results are often paginated, meaning there are multiple pages of search results to navigate through. To scrape all the relevant data, we need to handle pagination by iterating through the pages and extracting data from each page.

Here’s an example of how to scrape search results from multiple pages on Walmart:

import json

This Python script efficiently scrapes product data from Walmart’s search result pages while seamlessly handling pagination. It accomplishes this through two core functions: get_total_pages and scrape_page.

The get_total_pages function fetches the total number of pages for a given search query by sending a GET request to the initial search page. It then parses the HTML content, extracting the last page number from the pagination list. This ensures that the script is aware of the number of pages it needs to scrape.

The scrape_page function handles the actual scraping of product data. It takes a specific search page URL as input, makes a GET request, and employs BeautifulSoup to extract product details such as title, price, and, optionally, the rating. It gracefully accounts for cases where products may not have a rating.

In the main function, the script defines the search query, constructs the URL for the initial search page, and calculates the total number of pages. It then iterates through each page, scraping product details and accumulating them in a list. Finally, it prints the collected product details in a neat JSON format. This approach allows for comprehensive data extraction from multiple search result pages, ensuring no product details are overlooked during pagination.
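The page loop described above can be sketched as follows. This is an illustration under stated assumptions: the `page` query parameter name is assumed rather than confirmed, `build_search_url` and `scrape_all_pages` are hypothetical helpers, and `scrape_page` is passed in as a function that takes a URL and returns a list of product dicts.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.walmart.com/search"


def build_search_url(query, page=1):
    """Build the search URL for a query and page number.

    Assumption: later pages are selected with a 'page' query parameter.
    """
    params = {"q": query}
    if page > 1:
        params["page"] = page
    return f"{BASE_URL}?{urlencode(params)}"


def scrape_all_pages(query, total_pages, scrape_page):
    """Call scrape_page(url) for every page and merge the results."""
    all_products = []
    for page in range(1, total_pages + 1):
        all_products.extend(scrape_page(build_search_url(query, page)))
    return all_products
```

Passing `scrape_page` as an argument keeps the loop independent of how each page is fetched, which makes it easy to test with a stub before spending API requests.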

Data Storage

After successfully scraping data from Walmart’s search pages, the next crucial step is storing this valuable information for future analysis and reference. In this section, we will explore two common methods for data storage: saving scraped data in a CSV file and storing it in an SQLite database. These methods allow you to organize and manage your scraped data efficiently.

Storing Scraped Data in a CSV File

CSV (Comma-Separated Values) is a widely used format for storing tabular data. It’s a simple and human-readable way to store structured data, making it an excellent choice for saving your scraped Walmart product data.

We’ll extend our previous web scraping script to include a step for saving the scraped data into a CSV file using the popular Python library, pandas. Here’s an updated version of the script:

import pandas as pd

In this updated script, we’ve introduced pandas, a powerful data manipulation and analysis library. After scraping and accumulating the product details in the all_product_details list, we create a pandas DataFrame from this data. Then, we use the to_csv method to save the DataFrame to a CSV file named “walmart_product_data.csv” in the current directory. Setting index=False ensures that we don’t save the DataFrame’s index as a separate column in the CSV file.

You can easily work with and analyze your scraped data by employing pandas. This CSV file can be opened in various spreadsheet software or imported into other data analysis tools for further exploration and visualization.
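If you would rather not depend on pandas for this step, the same file can be written with Python’s built-in `csv` module. The sketch below is an alternative to the pandas approach, not a copy of it; `save_to_csv` is a hypothetical helper, and it assumes each product is a dict with `title`, `price`, and `rating` keys.

```python
import csv


def save_to_csv(products, path="walmart_product_data.csv"):
    """Write a list of product dicts to a CSV file and return the path.

    Assumption: each dict uses the keys 'title', 'price', and 'rating'.
    Extra keys are ignored and missing values become empty cells.
    """
    fieldnames = ["title", "price", "rating"]
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(
            handle, fieldnames=fieldnames, extrasaction="ignore"
        )
        writer.writeheader()
        writer.writerows(products)
    return path
```

The resulting file has the same header row and one line per product, so it opens in spreadsheet software just like the pandas output.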

Storing Scraped Data in an SQLite Database

If you prefer a more structured and query-friendly approach to data storage, SQLite is a lightweight, serverless database engine that can be a great choice. You can create a database table to store your scraped data, allowing for efficient data retrieval and manipulation. Here’s how you can modify the script to store data in an SQLite database:

import json

In this updated code, we’ve added functions for creating the SQLite database and table (create_database) and saving the scraped data to the database (save_to_database). The create_database function checks if the database and table exist and creates them if they don’t. The save_to_database function inserts the scraped data into the ‘products’ table.

By running this code, you’ll store your scraped Walmart product data in an SQLite database named ‘walmart_products.db’. You can later retrieve and manipulate this data using SQL queries or access it programmatically in your Python projects.
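A minimal sketch of these two functions, using Python’s built-in `sqlite3` module, might look like the following. The function names and the ‘products’ table come from the description above, but the signatures and the column layout (`title`, `price`, `rating` stored as text) are assumptions made for illustration.

```python
import sqlite3


def create_database(path="walmart_products.db"):
    """Open the database and create the products table if it is missing."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS products (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            title TEXT,
            price TEXT,
            rating TEXT
        )
        """
    )
    conn.commit()
    return conn


def save_to_database(conn, products):
    """Insert product dicts into the products table; return the row count."""
    rows = [(p.get("title"), p.get("price"), p.get("rating")) for p in products]
    # Using the connection as a context manager commits on success.
    with conn:
        conn.executemany(
            "INSERT INTO products (title, price, rating) VALUES (?, ?, ?)",
            rows,
        )
    return len(rows)
```

Parameterized queries with `?` placeholders keep product titles containing quotes or other special characters from breaking the SQL statement.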

6. Conclusion

In this comprehensive exploration of web scraping, we’ve delved into the immense potential of harnessing data from the web. Web scraping, the art of extracting information from websites, has become an indispensable tool for businesses, researchers, and curious minds. It serves as a bridge between the boundless expanse of online data and specific data needs, enabling users to access, analyze, and utilize information previously hidden within the depths of the internet.

Our focus sharpened on the importance of web scraping, particularly when applied to a retail giant like Walmart. We unveiled how scraping Walmart’s website can provide a wealth of insights, from competitive intelligence and market research to efficient inventory management and consumer sentiment analysis. This powerhouse of data can revolutionize decision-making across industries.

We equipped ourselves with the technical know-how to establish the environment needed for web scraping. From setting up Python and essential libraries to obtaining a Crawlbase Crawling API token, we laid a robust foundation. We navigated the intricate web structure of Walmart, honing our skills in inspecting HTML for CSS selectors that would become our tools for data extraction.

Practicality met theory as we built a Walmart scraper using Python and Crawlbase Crawling API. This dynamic script captured product data from Walmart’s search results pages while adeptly handling pagination. Lastly, we understood the importance of data storage, offering insights into saving scraped data in both CSV files and SQLite databases, empowering users to effectively manage and analyze their troves of scraped information.

Web scraping is more than a technological feat; it’s a gateway to informed decisions, innovation, and market advantage in today’s data-driven landscape. Armed with this knowledge, you’re poised to unlock the potential of web scraping, revealing the valuable nuggets of data that can reshape your business strategies or fuel your research endeavors. Happy scraping!

7. Frequently Asked Questions

Q. How do I choose the right data to scrape from Walmart’s search pages?

Selecting the right data to scrape from Walmart’s search pages depends on your specific goals. Common data points include product titles, prices, ratings, and links. Consider which information is most relevant to your project, whether it’s competitive pricing analysis, product research, or trend monitoring. The blog provides insights into the key data points you can extract for various purposes.

Q. Can I scrape Walmart’s search pages in real time for pricing updates?

Yes, you can scrape Walmart’s search pages to monitor real-time pricing updates. Web scraping allows you to track changes in product prices, which can be valuable for price comparison, adjusting your own pricing strategy, or notifying you of price drops or increases. The blog introduces the technical aspects of scraping Walmart’s search pages, which you can adapt for real-time monitoring.
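One simple way to adapt the scraper for monitoring is to compare the prices from two scraping runs. The sketch below assumes you have already reduced each run to a dict mapping product title to a numeric price; `detect_price_changes` is a hypothetical helper, and matching products by title is a simplification.

```python
def detect_price_changes(previous, current):
    """Compare two {title: price} snapshots and list the changed prices.

    Products that appear in only one snapshot are skipped, since there
    is no earlier or later price to compare against.
    """
    changes = []
    for title, new_price in current.items():
        old_price = previous.get(title)
        if old_price is not None and old_price != new_price:
            changes.append(
                {
                    "title": title,
                    "old_price": old_price,
                    "new_price": new_price,
                    # Negative values are price drops.
                    "change": round(new_price - old_price, 2),
                }
            )
    return changes
```

Running the scraper on a schedule and feeding consecutive snapshots into a function like this gives you a list of price drops and increases to act on.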

Q. How can I handle pagination when scraping Walmart’s search results?

Handling pagination in web scraping is crucial when dealing with multiple pages of search results. You can navigate through Walmart’s search result pages by incrementing the page number in the URL and making subsequent HTTP requests. The script can be designed to continue scraping data from each page until no more pages are left to scrape, ensuring you collect comprehensive data from the search results.

Q. What are the common challenges in web scraping?

Web scraping can be challenging due to several factors:

- Website Structure: Websites often change their structure, making it necessary to adapt scraping code.

- Anti-Scraping Measures: Websites may employ measures like CAPTCHAs, IP blocking, or session management to deter scrapers.

- Data Quality: Extracted data may contain inconsistencies or errors that require cleaning and validation.

- Ethical Concerns: Scraping should be done ethically, respecting the website’s terms and privacy laws.
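On the data quality point, scraped prices and ratings usually arrive as display strings and need to be normalized before analysis. The following sketch shows one possible cleaning step. The helper names are hypothetical, and the input strings in the usage note are illustrative formats rather than guaranteed Walmart output.

```python
import re

# Matches a number with optional thousands separators and decimals.
_NUMBER = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text):
    """Return the first number in a price string as a float, or None."""
    match = _NUMBER.search(text or "")
    return float(match.group(0).replace(",", "")) if match else None


def parse_rating(text):
    """Return the first number in a rating string as a float, or None."""
    match = re.search(r"\d+(?:\.\d+)?", text or "")
    return float(match.group(0)) if match else None


def clean_product(product):
    """Normalize one scraped product dict into typed values."""
    return {
        "title": (product.get("title") or "").strip(),
        "price": parse_price(product.get("price")),
        "rating": parse_rating(product.get("rating")),
    }
```

For example, `clean_product({"title": " Phone A ", "price": "$199.00"})` yields a stripped title, a price of `199.0`, and `None` for the missing rating, which makes inconsistent rows easy to spot and filter.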