Scrape Walmart prices easily and turn the internet into your personal shopping assistant. Whether you’re comparing prices, running a business, or just love gathering data, knowing how to pull prices from a huge retailer like Walmart is incredibly useful. It allows you to make better buying decisions, stay updated on market trends, and find the best deals out there.

This article shows a simple way to collect Walmart’s pricing data using web scraping techniques. With Python and the Crawlbase Crawling API, you’ll learn how to get the prices you need without fuss. By the end of this read, you’ll be equipped to gather Walmart prices quickly and effortlessly.

Ready to get started? We’re about to make web scraping as easy as shopping online. Welcome to straightforward tips on how to access Walmart’s pricing information!

Table Of Contents

- The Power of Data Extraction

- Overview of Walmart and its product prices

- Installing Python and necessary libraries

- Selecting the right Integrated Development Environment (IDE)

- Signing up for the Crawlbase Crawling API and obtaining API credentials

- Exploring the components of Walmart product pages

- Identifying the price elements for scraping

- Overview of Crawlbase Crawling API

- Benefits of using Crawlbase Crawling API

- How to use the Crawlbase Python library

- Initiating HTTP requests to Walmart product pages

- Inspecting HTML to determine the location of price data

- Extracting price information from HTML

- Extracting Multiple Product Pages from Search Results

- Saving scraped price data in a CSV file

- Storing data in an SQLite database for further analysis

Getting Started

In this section, we’ll lay the foundation for our journey into the web scraping world, specifically focusing on Walmart’s product prices. We’ll begin by introducing “The Power of Data Extraction” and then provide an overview of Walmart and its product pricing data.

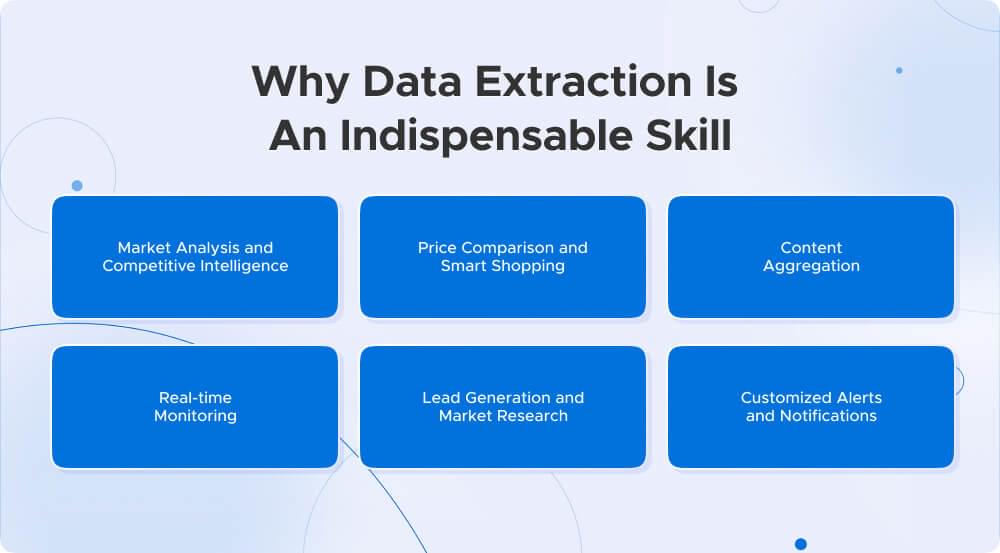

The Power of Data Extraction

Data extraction, often referred to as web scraping in the online realm, is a process that allows you to collect and organize vast amounts of data from websites in an automated and systematic manner. Think of it as your digital assistant, tirelessly gathering, categorizing, and presenting valuable information from the depths of the internet. The applications of data extraction are as diverse as the data itself, and their impact can be transformational in various aspects of modern life.

Here’s why data extraction is an indispensable skill:

- Market Analysis and Competitive Intelligence: Data extraction is a business’s strategic asset. It enables you to keep a watchful eye on competitors’ prices, product offerings, and customer reviews. This knowledge is vital for making informed decisions, such as setting competitive prices or fine-tuning your marketing strategies.

- Price Comparison and Smart Shopping: Data extraction helps you easily navigate the e-commerce landscape as a consumer. You can effortlessly compare prices of products across multiple online retailers, ensuring you get the best deals and save your hard-earned money.

- Content Aggregation: Content creators and researchers benefit from data extraction by automating the collection of articles, blog posts, news, or research data. This process streamlines research and content creation, freeing time for more creative and analytical tasks.

- Real-time Monitoring: Data extraction allows you to monitor dynamic data sources continuously. This is crucial for staying up-to-date with rapidly changing information, such as stock prices, weather updates, or social media trends.

- Lead Generation and Market Research: Businesses can generate potential leads by scraping data from various sources, such as business directories or social media profiles. Such data can be used for targeted marketing campaigns or market research.

- Customized Alerts and Notifications: Data extraction can power custom alerts and notifications for specific events or data changes, ensuring you are informed promptly when something significant happens.

Overview of Walmart and Its Product Prices

Walmart needs no introduction. It’s one of the world’s largest retail giants, with a significant online presence offering diverse products. Walmart’s product prices hold immense importance for both shoppers and businesses. As a shopper, you can explore a wide array of items at Walmart, and being able to scrape and compare prices can lead to cost savings. For businesses, tracking and analyzing Walmart’s prices can provide a competitive advantage and support pricing strategies.

Manually collecting pricing data from Walmart’s website can take time and effort. This is where data extraction, specifically web scraping, comes to the rescue. In this blog, we’ll delve into how to scrape Walmart’s product prices using Python and the Crawlbase Crawling API, simplifying the process and making it highly efficient.

Now that we’ve explored the power of data extraction and have an overview of Walmart’s product prices, let’s proceed to set up our environment for the exciting world of web scraping.

Setting Up Your Environment

Before diving into the exciting world of web scraping Walmart’s prices, we must prepare our environment. This includes installing the necessary tools, selecting the right Integrated Development Environment (IDE), and obtaining the essential API credentials.

Installing Python and Necessary Libraries

Python is the programming language of choice for web scraping due to its versatility and wealth of libraries. If you don’t already have Python installed on your system, you can download it from the official website at python.org. Once Python is up and running, the next step is to ensure you have the required libraries for our web scraping project. We’ll primarily use three main libraries:

- Crawlbase Python Library: This library is the heart of our web scraping process. It allows us to make HTTP requests to Walmart’s product pages using the Crawlbase Crawling API. To install it, you can use the “pip” command with:

    pip install crawlbase

- Beautiful Soup 4: Beautiful Soup is a Python library that simplifies the parsing of HTML content from web pages. It’s an indispensable tool for extracting data. Install it with:

    pip install beautifulsoup4

- Pandas: Pandas is a powerful data manipulation and analysis library in Python. We’ll use it to store and manage the scraped price data efficiently. You can install Pandas with:

    pip install pandas

Having these libraries in place sets us up for a smooth web scraping experience.
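Before moving on, it can help to confirm that the three packages are importable. The snippet below is a minimal sketch, not part of the original tutorial. The helper name `missing_packages` is our own, and it relies on the fact that the pip name `beautifulsoup4` is imported as `bs4`.

```python
from importlib.util import find_spec

# pip package name -> module name used in "import ..." statements.
# Note that beautifulsoup4 is imported as "bs4".
REQUIRED = {
    "crawlbase": "crawlbase",
    "beautifulsoup4": "bs4",
    "pandas": "pandas",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose module cannot be found."""
    return [pip_name for pip_name, module in required.items()
            if find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        print("All required libraries are installed.")
```

If anything is reported as missing, rerun the matching `pip install` command from the list above.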

Selecting the Right Integrated Development Environment (IDE)

While you can write Python code in a simple text editor, an Integrated Development Environment (IDE) can significantly enhance your development experience. It provides features like code highlighting, auto-completion, and debugging tools, making your coding more efficient. Here are a few popular Python IDEs to consider:

- PyCharm: PyCharm is a robust IDE with a free Community Edition. It offers features like code analysis, a visual debugger, and support for web development.

- Visual Studio Code (VS Code): VS Code is a free, open-source code editor developed by Microsoft. Its extensive extension library makes it versatile for various programming tasks, including web scraping.

- Jupyter Notebook: Jupyter Notebook is excellent for interactive coding and data exploration and is commonly used in data science projects.

- Spyder: Spyder is an IDE designed for scientific and data-related tasks, providing features like a variable explorer and an interactive console.

Signing Up for the Crawlbase Crawling API and Obtaining API Credentials

To make our web scraping project successful, we’ll leverage the power of the Crawlbase Crawling API. This API is designed to handle complex web scraping scenarios like Walmart’s product pages efficiently. It simplifies accessing web content while bypassing common challenges such as JavaScript rendering, CAPTCHAs, and anti-scraping measures.

One of the notable features of the Crawlbase Crawling API is IP rotation, which helps prevent IP blocks and CAPTCHA challenges. By rotating IP addresses, the API ensures that your web scraping requests appear as if they come from different locations, making it more challenging for websites to detect and block scraping activities.

Here’s how to get started with the Crawlbase Crawling API:

- Visit the Crawlbase Website: Open your web browser and navigate to the Crawlbase signup page to begin the registration process.

- Provide Your Details: You’ll be asked to provide your email address and create a password for your Crawlbase account. Fill in the required information.

- Verification: After submitting your details, you may need to verify your email address. Check your inbox for a verification email from Crawlbase and follow the provided instructions.

- Login: Once your account is verified, return to the Crawlbase website and log in using your newly created credentials.

- Access Your API Token: You’ll need an API token to use the Crawlbase Crawling API. You can find your API tokens on your Crawlbase dashboard.

Note: Crawlbase offers two types of tokens, one for static websites and another for dynamic or JavaScript-driven websites. Since we’re scraping Walmart, which relies on JavaScript for dynamic content loading, we’ll opt for the JavaScript Token. Crawlbase generously offers an initial allowance of 1,000 free requests for the Crawling API, making it an excellent choice for our web scraping project.
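Once you have a token, it is usually safer to keep it out of your source code. Here is one possible way to do that, assuming you export the JavaScript token in an environment variable. The variable name `CRAWLBASE_JS_TOKEN` and the helper `load_token` are our own choices for illustration, not something Crawlbase requires.

```python
import os


def load_token(env_var="CRAWLBASE_JS_TOKEN"):
    """Read the Crawlbase token from an environment variable.

    The variable name is an assumption made for this sketch; use any
    name you like as long as it matches what you export in your shell.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Crawlbase token."
        )
    return token


# Typical usage later in the tutorial (requires the crawlbase package):
#   from crawlbase import CrawlingAPI
#   api = CrawlingAPI({"token": load_token()})
```

Failing early with a clear message saves you from spending requests on calls that would be rejected for a missing token.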

Now that we’ve set up our environment, we’re ready to dive deeper into understanding Walmart’s website structure and effectively use the Crawlbase Crawling API for our web scraping endeavor.

Understanding Walmart’s Website Structure

Before we jump into the exciting world of web scraping Walmart prices, it’s essential to understand how Walmart’s website is structured. Understanding the layout and components of Walmart’s product pages is critical to identifying the elements we want to scrape, especially the price information.

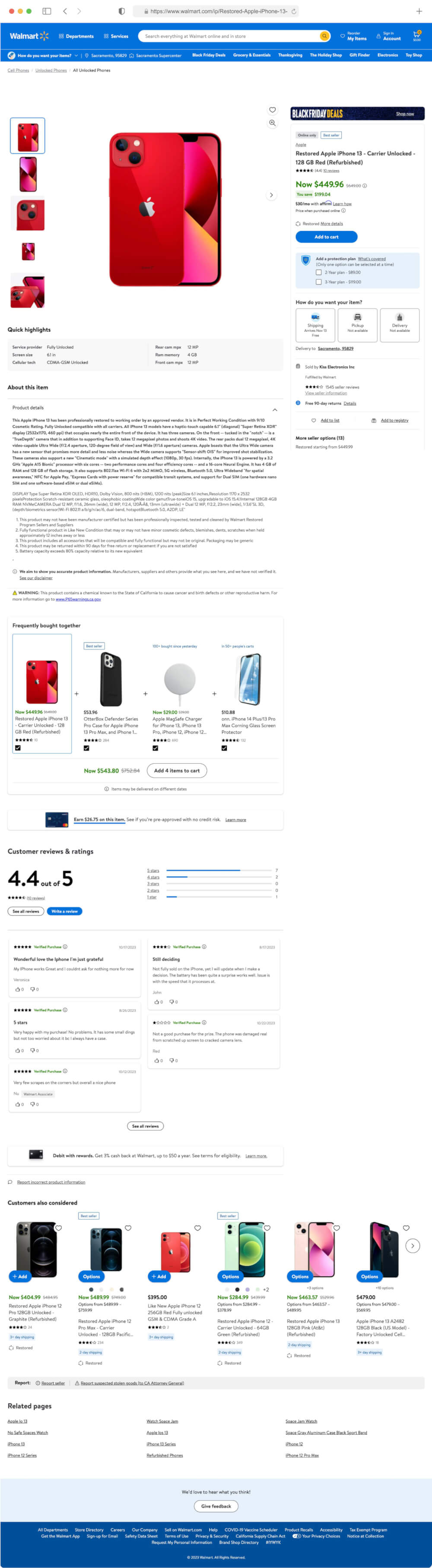

Exploring the Components of Walmart Product Pages

Walmart’s product pages are like treasure troves of valuable information. Each page is meticulously designed to provide customers with a comprehensive product view. We must break down these pages into their essential components as web scrapers. Here are some key components commonly found on Walmart product pages:

- Product Title: The name of the product is usually displayed prominently. This title serves as an identifier and is essential for categorizing the products.

- Product Images: Images play a crucial role in online shopping. Walmart showcases product images from various angles to help customers visualize the item.

- Price Information: The price of the product is a critical element that shoppers and web scrapers are interested in. It provides insights into the cost of the product and is vital for price tracking and analysis.

- Product Description: A detailed product description offers additional information about the item. This information can be valuable for potential buyers in making informed decisions.

- Customer Reviews and Ratings: Customer feedback in the form of reviews and ratings can help us gauge the quality of the product. Web scraping these reviews can provide insights into customer satisfaction.

- Seller Information: Knowing who the seller is and their location can be valuable for market analysis and understanding the product’s source.

Identifying the Price Elements for Scraping

Since our primary focus is on scraping price data from Walmart product pages, we need to identify the specific elements related to pricing. Here are the key elements that we’ll target for extraction:

- Product Price: This is the current price of the product. It’s crucial for tracking and comparing prices over time and for budget-conscious shoppers.

- Discounted price (if applicable): If Walmart offers any discounts or special offers, we’ll aim to scrape the discounted price, providing insights into cost savings.

- Price Unit: Some products are sold in various units, such as pounds, ounces, or liters. Scraping the price unit allows us to understand how the product is priced.
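To keep these three price elements together while scraping, you might model them as a small record. The sketch below is one possible shape. The class name `PriceRecord`, its field names, and the sample values are illustrative assumptions, not Walmart's own data model.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class PriceRecord:
    """One scraped product price; all field names are illustrative."""
    title: str
    price: float                              # current listed price
    discounted_price: Optional[float] = None  # only set when a discount is shown
    unit: Optional[str] = None                # e.g. "lb", "oz", "l"

    @property
    def savings(self) -> float:
        """Difference between the listed and discounted price, or 0.0."""
        if self.discounted_price is None:
            return 0.0
        return round(self.price - self.discounted_price, 2)


# Made-up sample values, purely for demonstration.
record = PriceRecord("Example Ground Coffee", 9.98, discounted_price=7.48, unit="oz")
print(asdict(record))
print(record.savings)
```

Keeping the discounted price and unit optional reflects the fact that not every product page shows them.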

With a clear understanding of Walmart’s website structure and the elements we intend to scrape, we’re well-prepared for the next steps in our web scraping journey. We’ll learn how to effectively use the Crawlbase Crawling API to collect the price data we need.

Introduction to Crawlbase Crawling API

Now that we have a solid grasp of Walmart’s website structure, it’s time to introduce a powerful tool that makes our web scraping journey smoother: the Crawlbase Crawling API. In this section, we’ll cover the basics of the API, its benefits, and how to harness its capabilities using the Crawlbase Python library.

Overview of Crawlbase Crawling API

The Crawlbase Crawling API is a versatile web scraping tool designed to tackle complex web scraping scenarios easily. It’s a game-changer for web scrapers dealing with dynamic websites like Walmart, where data is loaded and modified using JavaScript.

This API simplifies accessing web content: it renders JavaScript and returns the resulting HTML, ready for parsing. IP rotation is one of its standout features. Because requests are spread across many IP addresses, they appear to come from different locations, which makes it harder for websites to detect and block scraping activity and reduces IP blocks and CAPTCHA challenges.

Benefits of Using Crawlbase Crawling API

Why should you consider using the Crawlbase Crawling API for web scraping? Here are some of the key advantages it offers:

- JavaScript Rendering: Many modern websites, including Walmart, rely heavily on JavaScript for content rendering. The Crawlbase API can handle these dynamic elements, ensuring you get the fully loaded web page.

- Simplified Requests: The API abstracts away the complexities of handling HTTP requests, cookies, and sessions. You can focus on crafting your scraping logic while the API handles the technical details.

- Data Structure: The data you receive from the API is typically well-structured, making it easier to parse and extract the information you need.

- Scalability: The Crawlbase Crawling API supports scalable web scraping by handling multiple requests simultaneously. This is particularly advantageous when dealing with large volumes of data.

- Reliability: The API is designed to be reliable and provide consistent results, which is crucial for any web scraping project.

How to Use the Crawlbase Python Library

The Crawlbase Python library is a lightweight and dependency-free wrapper for the Crawlbase APIs. This library streamlines various aspects of web scraping, making it an excellent choice for projects like scraping Walmart prices.

Here’s how you can get started with the Crawlbase Python library:

Import the Library: To use the Crawlbase Crawling API from Python, begin by importing the CrawlingAPI class from the library. Here’s how to import it:

    from crawlbase import CrawlingAPI

Initialization: Once you have your Crawlbase API token in hand, the next crucial step is to initialize the CrawlingAPI class. This connection enables your code to leverage the vast capabilities of Crawlbase:

    api = CrawlingAPI({ 'token': 'YOUR_CRAWLBASE_TOKEN' })

Sending Requests: With the CrawlingAPI class ready and your Crawlbase API token securely set, you’re poised to send requests to your target websites. Here’s a practical example of crafting a GET request tailored for scraping Walmart product pages:

    response = api.get('https://www.walmart.com/product-page-url')
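After sending a request, you will want to check whether it succeeded before parsing anything. The sketch below assumes the response is a dictionary with `status_code` and `body` keys, where `body` holds the page as bytes. That matches our understanding of the Crawlbase Python library, but treat it as an assumption and verify it against the library version you install. The helper name `html_from_response` is our own.

```python
def html_from_response(response):
    """Return the page HTML as text, or None if the request did not succeed.

    Assumes a Crawlbase-style response dict with 'status_code' and 'body'.
    """
    if response.get("status_code") != 200:
        return None
    body = response.get("body", b"")
    # The body may arrive as bytes; decode it so it can be parsed as text.
    if isinstance(body, bytes):
        return body.decode("utf-8", errors="replace")
    return str(body)


# Typical usage with the api object created above:
#   html = html_from_response(api.get("https://www.walmart.com/product-page-url"))
#   if html is None:
#       print("Request failed, try again later")
```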

With the Crawlbase Crawling API and the Crawlbase Python library at your disposal, you have the tools you need to embark on your web scraping adventure efficiently. In the following sections, we’ll dive into the specifics of web scraping Walmart prices, from making HTTP requests to extracting price data and storing it for analysis.

Web Scraping Walmart Prices

We’re about to dive into the heart of our web scraping journey: extracting Walmart product prices. This section will cover the step-by-step process of web scraping Walmart’s product pages. This includes making HTTP requests, analyzing HTML, extracting price information, and handling multiple product pages with pagination.

Initiating HTTP Requests to Walmart Product Pages

The first step in scraping Walmart prices is initiating HTTP requests to the product pages you want to gather data from. We’ll use the Crawlbase Crawling API to make this process more efficient and to handle dynamic content loading on Walmart’s website.

    from crawlbase import CrawlingAPI

By sending an HTTP request to a Walmart product page, we retrieve the raw HTML content of that specific page. This HTML will be the source of the price data we’re after. The Crawlbase API ensures the page is fully loaded, including any JavaScript-rendered elements, which is crucial for scraping dynamic content.

Output Preview:

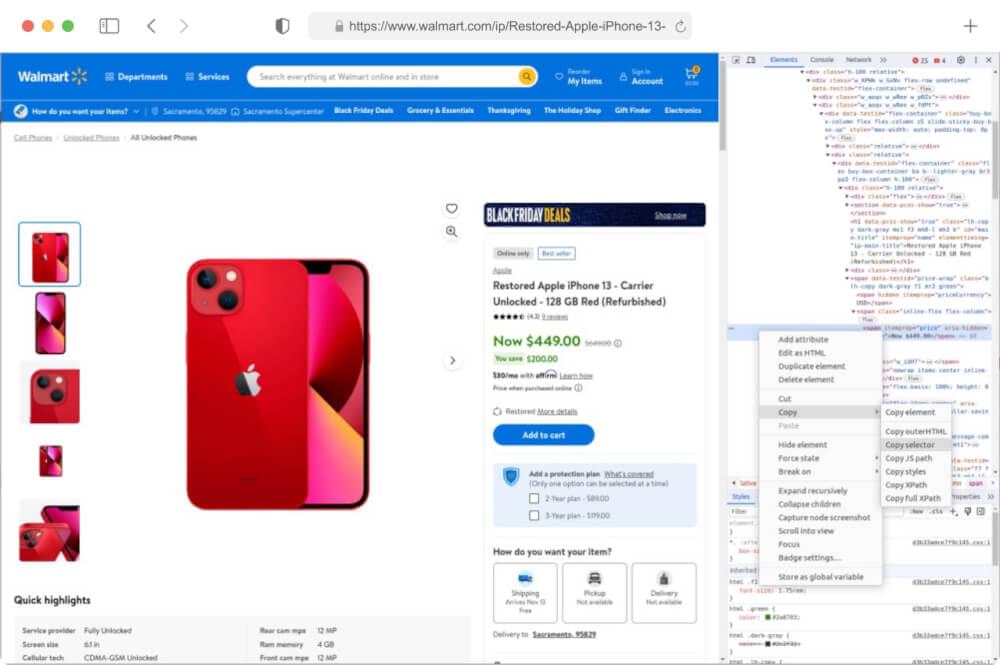
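Requests to dynamic pages can fail occasionally, so a small retry wrapper is often useful. The following is a sketch under stated assumptions, not the original script from this post. The function name `fetch_html`, the retry count, and the backoff values are our own. The `page_wait` and `ajax_wait` options are Crawling API parameters intended for JavaScript-heavy pages, but the values shown here are only guesses to tune for your use case.

```python
import time


def fetch_html(api, url, options=None, retries=3, backoff=2.0):
    """Fetch a page through a Crawlbase-style client, retrying on failure.

    `api` is any object with a get(url, options) method that returns a dict
    containing 'status_code' and 'body' (assumed response shape).
    """
    # Assumed option values: wait for JavaScript and AJAX content to load.
    options = options or {"page_wait": 5000, "ajax_wait": "true"}
    last_status = None
    for attempt in range(1, retries + 1):
        response = api.get(url, options)
        last_status = response.get("status_code")
        if last_status == 200:
            body = response.get("body", b"")
            if isinstance(body, bytes):
                return body.decode("utf-8", errors="replace")
            return str(body)
        if attempt < retries:
            # Wait a little longer after each failed attempt.
            time.sleep(backoff * attempt)
    raise RuntimeError(f"Giving up on {url}: last status code was {last_status}")


# Typical usage (requires the crawlbase package and a valid token):
#   from crawlbase import CrawlingAPI
#   api = CrawlingAPI({"token": "YOUR_CRAWLBASE_TOKEN"})
#   html = fetch_html(api, "https://www.walmart.com/product-page-url")
```

Passing the client in as an argument keeps the function easy to test with a stand-in object, without spending real requests.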

Inspecting HTML to Determine the Location of Price Data

To extract data from web pages, we must first identify the HTML elements that hold the information we want. This is where the browser’s developer tools come to our rescue. Let’s outline how you can inspect the HTML structure and unearth those precious CSS selectors:

- Open the Web Page: Navigate to the Walmart website and open either a search page or a product page that interests you.

- Right-Click and Inspect: Employ your right-clicking prowess on an element you wish to extract (e.g., a product card) and select “Inspect” or “Inspect Element” from the context menu. This mystical incantation will conjure the browser’s developer tools.

- Locate the HTML Source: Within the confines of the developer tools, the HTML source code of the web page will lay bare its secrets. Hover your cursor over various elements in the HTML panel and witness the corresponding portions of the web page magically illuminate.

- Identify CSS Selectors: To liberate data from a particular element, right-click on it within the developer tools and gracefully choose “Copy” > “Copy selector.” This elegant maneuver will transport the CSS selector for that element to your clipboard, ready to be wielded in your web scraping incantations.
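Once you have copied a selector, you can try it out with Beautiful Soup on a small piece of HTML before running it against a live page. The example below is a minimal sketch. The HTML snippet and both selectors are made up for illustration, since Walmart’s real markup differs and changes over time.

```python
from bs4 import BeautifulSoup

# A tiny, hypothetical product snippet; real Walmart HTML looks different.
SAMPLE_HTML = """
<div class="product">
  <h1 id="main-title">Example Coffee Maker</h1>
  <span itemprop="price">Now $24.99</span>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# select_one returns the first element matching a CSS selector, or None.
title = soup.select_one("h1#main-title")
price = soup.select_one('span[itemprop="price"]')

print(title.get_text(strip=True))  # Example Coffee Maker
print(price.get_text(strip=True))  # Now $24.99
```

Selectors copied from the developer tools are often long and brittle. Shortening them to a stable id or attribute, as in this sketch, tends to survive small layout changes better.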

Now that we’ve looked closely at how Walmart’s website is built and learned how to find the needed parts, it’s time to put our knowledge into action. In the next steps, we’ll start coding and use Python, the Crawlbase Crawling API, and Beautiful Soup to extract information from Walmart’s product pages.

Extracting Price Information from HTML

With the price elements identified, we can now write Python code to extract the price information from the HTML content. Beautiful Soup is a valuable tool here, allowing us to navigate the HTML structure and retrieve the data we need. For this example, we will extract the product’s title, price, discounted price (if any), and rating details. Let us extend our previous script and scrape this information from the HTML.

    # Import necessary libraries

This Python script uses the Beautiful Soup library for HTML parsing and the CrawlingAPI class from the Crawlbase library for web interaction. After initializing the API with a token and configuring options, it constructs a URL for a specific product page, makes a GET request, and parses the HTML content. The script then extracts product information, such as the product name, price, discount (if available), and rating, and presents these details in a structured JSON format. Error handling is included to address any exceptions that might occur during the scraping process. This code example illustrates how to automate the collection of data from web pages and format it for further analysis or storage.

Example Output:

    {
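Price text scraped from a page usually arrives as a string such as "Now $24.99", so it helps to normalize it into a number before building the JSON output. The snippet below is a small sketch of that step. The helper name `parse_price`, the regular expression, and the sample values are our own assumptions, not the original script’s code.

```python
import json
import re

# Matches a dollar amount such as "$24.99" or "$1,299.00".
PRICE_RE = re.compile(r"\$\s*(\d[\d,]*(?:\.\d+)?)")


def parse_price(text):
    """Extract the first dollar amount from text as a float, or None."""
    match = PRICE_RE.search(text or "")
    if match is None:
        return None
    return float(match.group(1).replace(",", ""))


# Made-up scraped strings, purely for demonstration.
product = {
    "title": "Example Coffee Maker",
    "price": parse_price("Was $29.99"),
    "discounted_price": parse_price("Now $24.99"),
    "rating": "4.5 stars",
}
print(json.dumps(product, indent=2))
```

Returning `None` when no amount is found lets you record a missing discount without stopping the whole run.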

Extracting Multiple Product Pages from Search Results

We often need to scrape multiple product pages from search results to build a comprehensive dataset of Walmart prices. This involves handling the pagination of search results and iterating through the pages to reach additional product listings. Extracting product page URLs from search results is covered in our dedicated blog post, How to Scrape Walmart Search Pages.

Once you have collected the list of product page URLs, return here and apply the instructions in this blog to each URL to scrape its price data. With this combined knowledge, you’ll be well-equipped to scrape Walmart prices efficiently.
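With a list of product page URLs in hand, one way to process them is to run your single-page scraper over the list and collect failures separately. The sketch below uses a thread pool from the standard library. The function name `scrape_many` and the `scrape_one` callback are hypothetical, where `scrape_one` stands for whatever function you wrote to scrape a single product page.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_many(urls, scrape_one, max_workers=4):
    """Run scrape_one(url) for every URL and collect results and failures.

    Returns (results, failures): a list of scraped items and a dict that
    maps each failed URL to its error message.
    """
    results, failures = [], {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(scrape_one, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results.append(future.result())
            except Exception as exc:  # keep going if one page fails
                failures[url] = str(exc)
    return results, failures


# Typical usage, assuming scrape_product(url) returns a dict of product details:
#   products, failed = scrape_many(product_urls, scrape_product)
```

Keep `max_workers` modest so you stay within your plan’s request limits, and retry the URLs in `failures` later instead of losing them.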

In the next section, we’ll discuss efficiently storing the scraped price data. Let’s keep the momentum going as we explore this exciting web scraping journey.

Storing the Scraped Price Data

After successfully scraping data from Walmart’s product pages, the next crucial step is storing this valuable information for future analysis and reference. In this section, we will explore two common methods for data storage: saving scraped data in a CSV file and storing it in an SQLite database. These methods allow you to organize and manage your scraped data efficiently.

Saving Scraped Price Data in a CSV File

CSV (Comma-Separated Values) is a widely used format for storing tabular data. It’s a simple and human-readable way to store structured data, making it an excellent choice for saving your scraped Walmart product data.

We’ll extend our previous web scraping script to include a step for saving the scraped data into a CSV file using the popular Python library, pandas. Here’s an updated version of the script:

    import pandas as pd

In this updated script, we’ve introduced pandas, a powerful data manipulation and analysis library. After scraping and accumulating the product details, we create a pandas DataFrame from this data. Then, we use the to_csv method to save the DataFrame to a CSV file named “walmart_product_data.csv” in the current directory. Setting index=False ensures that we don’t save the DataFrame’s index as a separate column in the CSV file.

You can easily work with and analyze your scraped data by employing pandas. This CSV file can be opened in various spreadsheet software or imported into other data analysis tools for further exploration and visualization.
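The storage step described above can be sketched in a few lines. This is an illustrative example with made-up product values, not the full script from this post. It only demonstrates building a DataFrame, calling `to_csv` with `index=False`, and reading the file back to check it.

```python
import pandas as pd

# Made-up scraped records, purely for demonstration.
products = [
    {"title": "Example Coffee Maker", "price": 24.99,
     "discounted_price": 19.99, "rating": 4.5},
    {"title": "Example Toaster", "price": 18.50,
     "discounted_price": None, "rating": 4.1},
]

# Build a DataFrame and write it without the index column.
df = pd.DataFrame(products)
df.to_csv("walmart_product_data.csv", index=False)

# Read the file back to confirm what was saved.
loaded = pd.read_csv("walmart_product_data.csv")
print(loaded)
```

A missing discounted price is written as an empty cell and comes back as `NaN` when the file is read again.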

Storing Data in an SQLite Database for Further Analysis

If you prefer a more structured and query-friendly approach to data storage, SQLite is a lightweight, serverless database engine that can be a great choice. You can create a database table to store your scraped data, allowing for efficient data retrieval and manipulation. Here’s how you can modify the script to store data in an SQLite database:

    import json

In this updated code, we’ve added functions for creating the SQLite database and table (create_database) and saving the scraped data to the database (save_to_database). The create_database function checks if the database and table exist and creates them if they don’t. The save_to_database function inserts the scraped data into the ‘products’ table.

By running this code, you’ll store your scraped Walmart product data in an SQLite database named ‘walmart_products.db’. You can later retrieve and manipulate this data using SQL queries or access it programmatically in your Python projects.
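To make the database step concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The function names follow the ones mentioned above, but their bodies, the table columns, and the sample product are our own assumptions, so adapt the schema to the fields you actually scrape.

```python
import sqlite3


def create_database(db_path="walmart_products.db"):
    """Open the database and create the products table if it is missing."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS products (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            title TEXT NOT NULL,
            price REAL,
            discounted_price REAL,
            rating REAL
        )
        """
    )
    conn.commit()
    return conn


def save_to_database(conn, product):
    """Insert one product dict; every column name must be a key in the dict."""
    conn.execute(
        "INSERT INTO products (title, price, discounted_price, rating) "
        "VALUES (:title, :price, :discounted_price, :rating)",
        product,
    )
    conn.commit()


# ":memory:" keeps this demo off disk; pass a file path to persist the data.
conn = create_database(":memory:")
save_to_database(conn, {"title": "Example Coffee Maker", "price": 24.99,
                        "discounted_price": 19.99, "rating": 4.5})
rows = conn.execute("SELECT title, price FROM products").fetchall()
print(rows)
```

Using named placeholders instead of string formatting lets SQLite handle quoting, which avoids broken inserts when a product title contains quotes.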

Final Words

This guide equips you with the knowledge and tools to seamlessly scrape Walmart prices using Python and the Crawlbase Crawling API. Whether diving into web scraping for the first time or expanding your expertise, the principles outlined here provide a solid foundation. If you’re eager to explore scraping on other e-commerce platforms such as Amazon, eBay, or AliExpress, our additional guides await your discovery.

Web scraping may pose challenges, and we understand the importance of a supportive community. If you seek further guidance or encounter obstacles, don’t hesitate to reach out. The Crawlbase support team is here to assist you in overcoming any challenges and ensuring a successful web scraping journey.

Frequently Asked Questions

Q. What Are the Benefits of Using the Crawlbase Crawling API?

The Crawlbase Crawling API is a powerful tool that simplifies web scraping, particularly for dynamic websites like Walmart. It offers benefits such as IP rotation, JavaScript rendering, and handling common web scraping challenges like CAPTCHAs. With the Crawlbase Crawling API, you can access web content efficiently, retrieve structured data, and streamline the web scraping process. It is a reliable choice for web scraping projects that require data extraction from complex and dynamic websites.

Q. What Are Some Common Challenges in Web Scraping Walmart Prices?

Like any scraping endeavor, web scraping Walmart prices comes with its share of challenges. One common hurdle is handling dynamic content and JavaScript rendering on Walmart’s website. Prices and product details often load dynamically, requiring careful consideration of the page’s structure. Additionally, Walmart may implement anti-scraping measures, necessitating strategies like rotating IP addresses and using headers to mimic human-like browsing behavior. Another challenge is managing pagination, especially when dealing with extensive product listings. Efficiently navigating multiple pages and extracting the desired price data requires meticulous attention to HTML structure and pagination patterns. Staying informed about potential changes in Walmart’s website layout is crucial for maintaining a reliable scraping process over time.

Q. Can I Scrape Other Data from Walmart Using the Crawlbase Crawling API?

Yes, the Crawlbase Crawling API is versatile and can be used to scrape various types of data from Walmart, not limited to prices. You can customize your web scraping project to extract product descriptions, ratings, reviews, images, and other relevant information. The API’s ability to handle dynamic websites ensures you can access the data you need for your specific use case.

Q. Are There Any Alternatives to Storing Data in CSV or SQLite?

While storing data in CSV or SQLite formats is common and effective, alternative storage options are available depending on your project’s requirements. You can explore other database systems like MySQL or PostgreSQL for more extensive data storage and retrieval capabilities. For scalable and secure data storage, you can consider cloud-based storage solutions such as Amazon S3, Google Cloud Storage, or Microsoft Azure. The choice of data storage method depends on your specific needs and preferences.