Accessing and analyzing data from the web has become an integral part of many tasks these days, from market research to academic studies. HTML tables are a common way websites organize and present data, making them a valuable resource for data extraction. Whether you’re a researcher, analyst, or enthusiast, knowing how to scrape tables from websites opens up a world of information at your fingertips.

This blog will guide you through three different methods to scrape tables from websites, catering to various preferences and skill levels. We’ll explore using Google Sheets, the R language with the rvest package, and Python, each offering its own advantages and capabilities. By the end, you’ll have the tools and knowledge to extract valuable data tables from the web efficiently and effectively.

Now, let’s get into the intricacies of HTML tables and why they are essential for web scraping.

Table Of Contents

- What are HTML tables and why they are important?

- How to Use Google Sheets to Extract HTML Tables Data

- Extracting Website Tables Data with R’s rvest Package

- How to Scrape Tables from a Website Python

- Final Thoughts

- Frequently Asked Questions (FAQs)

What are HTML tables and why they are important?

HTML tables are structured elements used on web pages to organize and display data in a tabular format. They consist of rows and columns, with each cell containing information relevant to its corresponding row and column intersection. These tables play a crucial role in presenting data on websites, making it easier for users to comprehend and analyze information.

How to Use Google Sheets to Extract HTML Tables Data

Using Google Sheets to extract table data is a convenient and straightforward method that allows you to gather information from websites without the need for complex coding or software. Here’s how you can do it:

- Open Google Sheets: Start by opening a new or existing Google Sheets document.

- Access the ImportHTML Function: Google Sheets provides a built-in function called ImportHTML, which allows you to import tables and lists from HTML pages on the web. To access this function, click on a cell where you want the imported data to appear.

- Enter the Function: In the selected cell, type “=IMPORTHTML(“ followed by the URL of the webpage containing the table you want to extract. Then, specify whether you want to import a table or a list, and the index number of the table (if there are multiple tables on the page). e.g.

=IMPORTHTML("https://en.wikipedia.org/wiki/Wonders_of_the_World","table",1)

- Press Enter: After entering the function, press Enter, and Google Sheets will automatically import the table data from the specified URL and display it in the selected cell.

- Adjust Formatting: Once the data is imported, you may need to adjust the formatting, such as resizing columns or formatting cells, to make the information more readable and organized.

- Refresh Data (Optional): Google Sheets allows you to set the imported data to automatically refresh at regular intervals. This ensures that your spreadsheet always reflects the latest information from the webpage.

By following these steps, you can easily extract table data from websites using Google Sheets. This method is particularly useful for users who prefer a user-friendly interface and do not have advanced coding skills. Additionally, Google Sheets offers collaboration features, allowing multiple users to work on the same spreadsheet simultaneously, making it a convenient choice for team projects and data analysis tasks.

Extracting Website Tables Data with R’s rvest Package

Using R language along with the rvest package provides a powerful way to scrape tables from websites. Here’s how you can do scrape table from Website r.

- Install the rvest Package: If you haven’t already installed the rvest package, you can do so by running the following command in your R console:

1 | install.packages("rvest") |

- Load the rvest Package: Once installed, you need to load the rvest package into your R environment using the following command:

1 | library(rvest){% asset_img "why-scrape-html-tables.jpg" "Why Scrape HTML Tables 'Why Scrape HTML Tables'" %} |

Specify the URL: Next, specify the URL of the webpage containing the table you want to scrape.

Use the html_table Function: The html_table function from the rvest package allows you to extract tables from HTML pages. Use this function along with the

read_htmlfunction to read the HTML content of the webpage and convert it into a data frame containing the table data.

1 | # Replace 'url' with the URL of the webpage containing the table |

Access the Table Data: Once you’ve extracted the table data, you can access it like any other data frame in R. Use indexing or column names to access specific rows or columns of the table.

Optional: Data Cleaning: Depending on the structure of the table and your specific requirements, you may need to perform some data cleaning or manipulation to prepare the data for analysis. This may include removing unwanted rows or columns, converting data types, or handling missing values.

By following these steps, you can easily scrape tables from websites using R language and the rvest package. This method is particularly useful for R users who prefer working with the R programming language and want to automate the process of extracting data from websites for analysis and visualization.

Complete Working Example:

1 | # Install and load the rvest package |

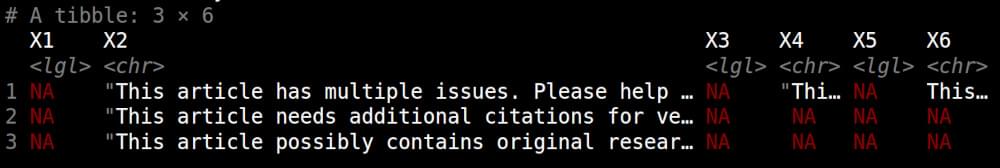

Example Output:

How to Scrape Tables from a Website Python

Python is a powerful programming language with libraries like Requests, BeautifulSoup and Pandas that make web scraping tasks relatively straightforward. Head into the steps below to scrape table from website Python.

- Install Necessary Libraries: First, make sure you have the requests and BeautifulSoup libraries installed. If not, you can install them using pip:

1 | pip install requests beautifulsoup4 pandas |

- Import Libraries

In your Python script, import the required libraries:

1 | from bs4 import BeautifulSoup |

- Fetch the Webpage: Use the requests library to send a GET request to the URL of the webpage containing the table you want to scrape. This will retrieve the HTML content of the webpage.

1 | url = "https://www.example.com/table-page" |

- Parse HTML Content: Next, use BeautifulSoup to parse the HTML content of the webpage and navigate to the table you want to scrape. You can use BeautifulSoup’s find or find_all methods to locate the table based on its HTML structure.

1 | soup = BeautifulSoup(html_content, "html.parser") |

- Extract Table Data: Iterate through the tables and convert them into Pandas DataFrames for easy manipulation:

1 | table_data = [] |

- Save or Manipulate Data

You can now save the scraped table data to a CSV file or perform further analysis and manipulatSave or Manipulate Dataion using Pandas:

1 | for idx, df in enumerate(table_data): |

- Optional: Data Cleaning: Depending on your requirements, you may need to perform some data cleaning or manipulation on the extracted data. This could include removing unwanted rows or columns, converting data types, or handling missing values.

By following these steps, you can scrape tables from websites using Python. This approach is versatile and can be used to extract data from a wide range of websites with tables, making it a valuable tool for data collection and analysis tasks.

Complete Working Example:

1 | from bs4 import BeautifulSoup |

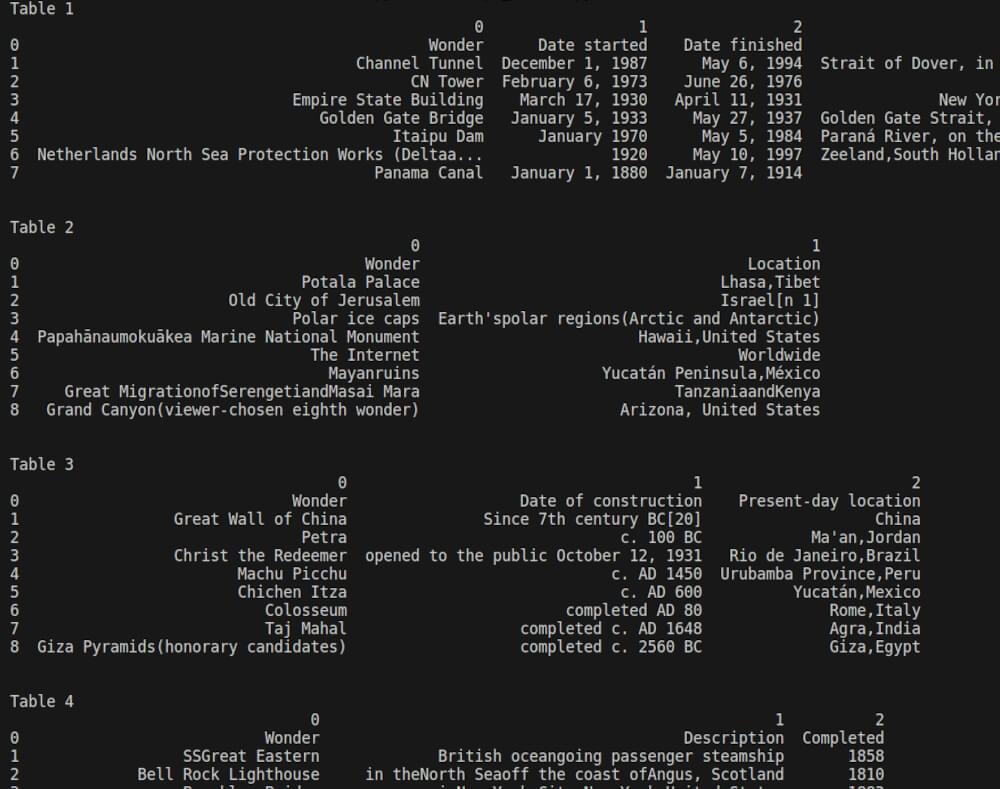

Example Output:

Final Thoughts

Scraping tables from websites can be a valuable skill for extracting and analyzing data efficiently. Whether you’re using Google Sheets, R language with the rvest package, or Python libraries like BeautifulSoup and Pandas, the process allows you to gather insights from various online sources. By understanding HTML structures and utilizing the right tools, you can automate data extraction tasks and streamline your workflow.

If you are interested to learn more about web scraping, read our following guides.

📜 Web Scrape Wikipedia

📜 How to Scrape Google Scholar Results

📜 How to Scrape Quora

📜 How to Scrape websites with Chatgpt

📜 Playwright Web Scraping

📜 How to Scrape TikTok comments

Keep exploring different scraping methods and stay updated with best practices to make the most out of web scraping for your projects. If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions (FAQs)

Q. Is it legal to scrape tables from websites for data extraction?

While web scraping itself is not illegal, it’s essential to scrape responsibly and abide by the website’s terms of service and copyright laws. Avoid scraping sensitive or copyrighted data without permission and refrain from overloading the website’s servers with excessive requests. Always check the website’s robots.txt file for scraping permissions and consider obtaining consent if necessary.

Q. What are the common challenges in scraping tables from websites?

Scraping tables from websites can encounter challenges like inconsistent HTML structures, dynamic content loading via JavaScript, and anti-scraping measures such as CAPTCHA and IP blocking. However, with the right tools and techniques, these challenges can be overcome by understanding the website’s structure and using methods like browser automation and proxy rotation.

Q. How can I unblock websites while scraping HTML tables?

Unblocking websites during HTML table scraping can be challenging due to security measures like IP blocking or CAPTCHA. One effective solution is to use Crawlbase’s Crawling API. Crawlbase offers a reliable solution to bypass blocks and access website content seamlessly. With its rotating proxies and user-agent strings, Crawlbase ensures smooth scraping operations while respecting website terms of service. By integrating Crawlbase’s API into your scraping scripts, you can overcome blocking measures and extract HTML table data efficiently.

Q. How can I handle dynamic content when scraping tables from websites?

Dynamic content, such as data loaded through JavaScript or AJAX calls, can pose challenges for traditional web scraping techniques. To handle dynamic content effectively, consider using headless browsers like Selenium WebDriver, which can execute JavaScript and render web pages as a real browser would. You can also used 3rd party APIs like Crawlbase’s Crawling API to handle dynamic content.

Alternatively, analyze the network requests made by the webpage to identify the API endpoints responsible for fetching data dynamically and directly scrape from those endpoints.