ChatGPT web scraping is getting quite popular these days. Developers want to learn how to scrape websites using ChatGPT, so we have created a simple guide to ChatGPT scraping that streamlines your web scraping process. ChatGPT is built on OpenAI’s GPT family of large language models.

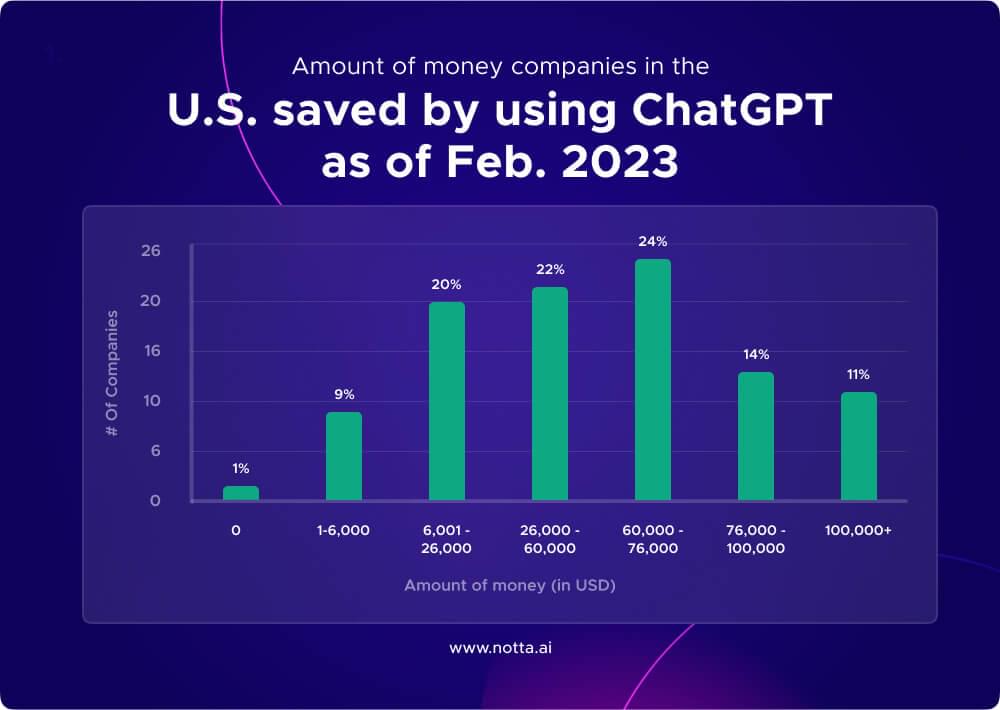

Many companies now use ChatGPT in their day-to-day tasks. The accompanying graph shows the amount of money U.S. companies saved in February 2023 using ChatGPT.

In this guide, we break everything down, from signing up to writing prompts and checking the code ChatGPT generates. For tougher web pages, we also share some pro tips to improve your scraping and help you where other developers run into problems.

Let’s get started!

Table Of Contents

- Set up a ChatGPT Account

- Locate Elements for Scraping

- Craft the ChatGPT Prompt

- Review and Test the Generated Code

- Requesting Code Editing Assistance

- Linting

- Optimizing Code Efficiency

- Implementing Pagination Strategies

- Finding Solutions for Dynamically Rendered Content with ChatGPT

- Understanding ChatGPT’s Limitations and Workarounds

- Final Thoughts

- Frequently Asked Questions

There’s a lot of curiosity about what ChatGPT can and can’t do. One question that pops up often is whether ChatGPT can scrape websites. So let’s answer that first.

1. Can ChatGPT Scrape Websites?

ChatGPT can’t scrape websites on its own. Scraping means pulling information from websites automatically, and ChatGPT, as used in this guide, doesn’t browse the internet. Instead, it relies on the vast amount of data it has been trained on to generate responses.

While ChatGPT might not have this superpower built-in, it can still be incredibly helpful.

For example, if you need to scrape a website using Python, ChatGPT can provide you with code snippets and point you in the direction of powerful web scraping libraries like Beautiful Soup or Scrapy.

2. How to Use ChatGPT for Web Scraping

Web scraping with ChatGPT starts with setting up your ChatGPT account and writing detailed prompts that describe what you need to scrape. Here are the steps to guide you through the process:

Step 1: Set up a ChatGPT Account

Go to ChatGPT’s login page and press the “Sign up” button to register. You can sign up with an email address, or alternatively, use your Google, Microsoft or Apple account to sign up. Once you’ve signed in, you will see the ChatGPT interface.

Step 2: Locate Elements for Scraping

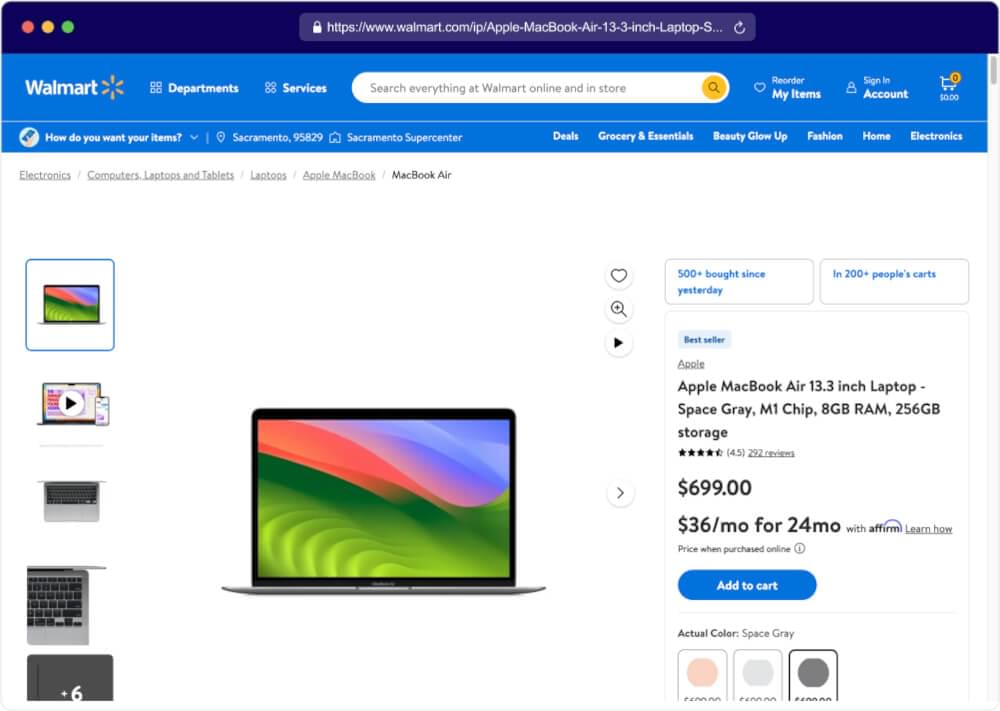

First, find the elements of the source page that you want to scrape. Let’s take a Walmart product page as an example.

Say you’re interested in extracting product information such as product titles, prices, and customer ratings.

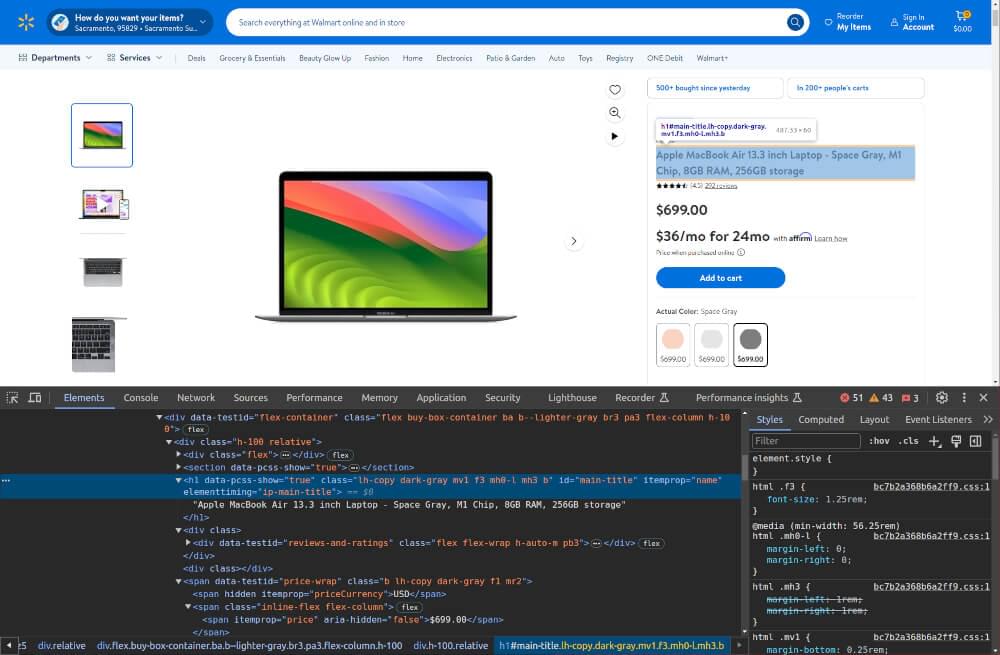

Simply navigate to the Walmart website, right-click on the desired elements (e.g., product titles, prices), and select “Inspect” to view the HTML code. Find the unique CSS selector which targets the needed element.
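Before handing a selector to ChatGPT, it can help to confirm that it really matches what you expect. The sketch below checks an attribute-based selector against a saved HTML snippet using only Python's standard library. The sample markup and the `data-automation-id` values are hypothetical stand-ins, not Walmart's actual markup, and `AttrTextCollector` and `select_text` are illustrative names.

```python
from html.parser import HTMLParser

VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}  # tags without a closing tag


class AttrTextCollector(HTMLParser):
    """Collect the text of every element whose attribute `attr` equals `value`."""

    def __init__(self, attr, value):
        super().__init__()
        self.attr = attr
        self.value = value
        self.depth = 0      # greater than 0 while inside a matching element
        self.matches = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # nested tag inside a match
        elif dict(attrs).get(self.attr) == self.value:
            self.depth = 1
            self.matches.append("")

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.matches[-1] += data


def select_text(html, attr, value):
    """Return the whitespace-normalized text of each matching element."""
    parser = AttrTextCollector(attr, value)
    parser.feed(html)
    parser.close()
    return [" ".join(text.split()) for text in parser.matches]


# Hypothetical snippet saved from the browser's "Inspect" panel.
SAMPLE_HTML = """
<div class="item">
  <span data-automation-id="product-title">Acme <b>Kettle</b></span>
  <div data-automation-id="product-price">$24.99</div>
</div>
"""

titles = select_text(SAMPLE_HTML, "data-automation-id", "product-title")
prices = select_text(SAMPLE_HTML, "data-automation-id", "product-price")
print(titles, prices)
```

If BeautifulSoup is installed, a CSS attribute selector such as `soup.select('[data-automation-id="product-title"]')` performs the same kind of check in one line.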

Step 3: Craft the ChatGPT Prompt

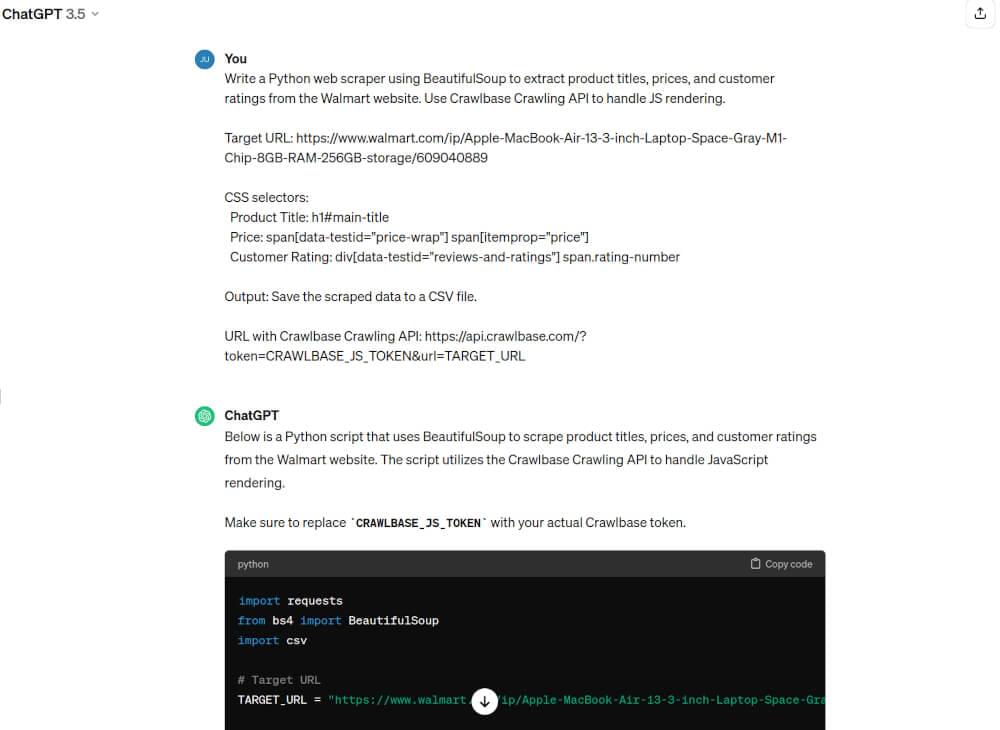

Now that you have everything required, write a clear and concise prompt for ChatGPT. Include your programming language, the libraries you need, such as BeautifulSoup, and your desired output file format. Since we have selected Walmart, which uses JS rendering, we will use the Crawlbase Crawling API to handle it. You can read about it here. A sample prompt is as follows:

    Write a Python web scraper using BeautifulSoup to extract product titles, prices, and customer ratings from the Walmart website. Use Crawlbase Crawling API to handle JS rendering.

When you give ChatGPT clear instructions together with the correct CSS selectors, it is far more likely to return code snippets tailored to your scraping task.
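If you write prompts like this often, a small template keeps them consistent. The following is a minimal sketch, not a required format: `build_scraper_prompt` is a hypothetical helper, and the URL and CSS selectors are placeholders that you would replace with the ones you found while inspecting the page.

```python
def build_scraper_prompt(language, library, url, fields, output_format, extra=()):
    """Assemble a scraping prompt; `fields` maps a field name to its CSS selector."""
    lines = [
        f"Write a {language} web scraper using {library} for {url}.",
        "Extract the following fields with these CSS selectors:",
    ]
    lines += [f"- {name}: {selector}" for name, selector in fields.items()]
    lines.append(f"Save the results to a {output_format} file.")
    lines += list(extra)  # any additional instructions, one per line
    return "\n".join(lines)


prompt = build_scraper_prompt(
    language="Python",
    library="BeautifulSoup",
    url="https://www.example.com/products",  # placeholder URL
    fields={                                  # placeholder selectors
        "title": "span.title",
        "price": "span.price",
        "rating": "span.rating",
    },
    output_format="CSV",
    extra=["Use Crawlbase Crawling API to handle JS rendering."],
)
print(prompt)
```

Listing each field next to its selector leaves less for ChatGPT to guess about the page structure.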

Here’s a snapshot of the ChatGPT prompt.

Step 4: Review and Test the Generated Code

In our Walmart example, the code generated should be reviewed and tested to ensure it scrapes product details correctly. Confirm that the code generated from ChatGPT for scraping is ideal for your needs and that it does not have extra unnecessary packages or libraries.
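One way to check for unnecessary packages is to list what the generated script imports before you run it. The sketch below does this with Python's `ast` module, which parses the code without executing it. The `GENERATED` string is a made-up stand-in for ChatGPT's output, and `EXPECTED` is an assumption about which packages you asked for.

```python
import ast


def imported_modules(source):
    """Return the top-level module names imported by `source`."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            modules.add(node.module.split(".")[0])
    return modules


# Made-up example of generated code; only the import lines matter here.
GENERATED = '''
import csv
import requests
from bs4 import BeautifulSoup
import pandas as pd
'''

EXPECTED = {"csv", "requests", "bs4"}  # the packages the prompt asked for
unexpected = imported_modules(GENERATED) - EXPECTED
print(sorted(unexpected))
```

Anything left in `unexpected` is worth questioning: either ask ChatGPT to remove it or confirm that the script really needs it.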

Then, copy the custom code and run it to ascertain its suitability.

    import requests

Note: Please make sure you have the BeautifulSoup library and the requests library installed before executing the code. You can do this by launching the terminal and typing:

    pip install beautifulsoup4 requests

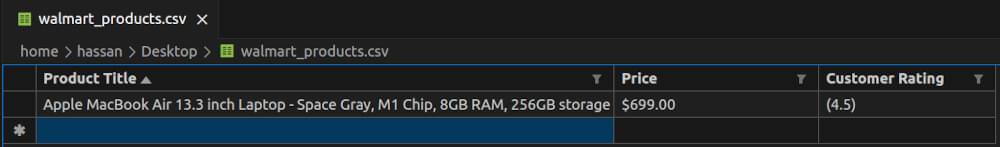

Here is the snapshot of the walmart_products.csv file generated after executing the code:

By following these steps of ChatGPT scraping, you’ll be well-equipped to efficiently scrape websites with ChatGPT tailored to your specific needs. Let’s move on to the next steps of ChatGPT data scraping.

3. Tips and Tricks for Using ChatGPT Like a Pro

Here are some tips and tricks to optimize your ChatGPT web scraping experience:

Requesting Code Editing Assistance

If the generated code does not meet your specifications or gives an unexpected result, you can ask ChatGPT to edit it. All you have to do is pinpoint the changes you want, for example scraping the elements in a different order or refining a part of the code. ChatGPT can also recommend better-suited code or propose changes on its own.

Linting

Code quality is another aspect to keep in mind as you go about web scraping. You can simplify your code and keep it free of syntax errors by asking ChatGPT to follow best practices and a coding style guide. Ask ChatGPT to adhere to a specific coding standard. Optionally, add “lint the code” to the additional instructions in your prompt.
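Even when you ask ChatGPT to lint its output, a quick local check is cheap. The sketch below only verifies that a piece of generated code parses, using the standard library; a full linter such as flake8 or pylint reports far more. The file name and both code samples are hypothetical.

```python
import ast


def syntax_problems(source, filename="generated_scraper.py"):
    """Return a list of syntax error messages for `source` (empty if it parses)."""
    try:
        ast.parse(source, filename=filename)
    except SyntaxError as err:
        return [f"{filename}:{err.lineno}: {err.msg}"]
    return []


good = "def parse(html):\n    return html.strip()\n"
bad = "def parse(html)\n    return html.strip()\n"  # missing colon

print(syntax_problems(good))
print(syntax_problems(bad))
```

If the list is not empty, paste the message back into ChatGPT and ask for a corrected version.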

Optimizing Code Efficiency

Efficiency is everything in web scraping. This is even more critical when the datasets are large or the target site is intricate. To improve here, ask ChatGPT for advice on optimizing your code. In particular, you can ask about the most suitable frameworks and packages to speed up scraping, and about using caching, concurrency, or parallel processing to cut down the number of redundant network connections.
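As a rough illustration of caching combined with concurrency, the sketch below fetches a list of URLs with a thread pool and remembers pages it has already fetched. `fake_fetch` is a stand-in for a real network call so that the example runs offline; the URLs and the worker count are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

CALLS = []  # records real fetches so the effect of caching is visible


def fake_fetch(url):
    """Stand-in for a network request; returns fake HTML."""
    CALLS.append(url)
    return f"<html>{url}</html>"


@lru_cache(maxsize=256)
def cached_fetch(url):
    """Fetch a URL once and serve repeats from the in-memory cache."""
    return fake_fetch(url)


def fetch_all(urls, max_workers=4):
    """Fetch unique URLs concurrently and return pages in the original order."""
    unique = list(dict.fromkeys(urls))  # drop duplicates, keep order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pages = dict(zip(unique, pool.map(cached_fetch, unique)))
    return [pages[url] for url in urls]


urls = [
    "https://example.com/p/1",
    "https://example.com/p/2",
    "https://example.com/p/1",  # duplicate, fetched only once
]
pages = fetch_all(urls)
print(len(pages), "pages returned,", len(CALLS), "network calls made")
```

Threads suit this kind of work because scraping mostly waits on the network. Keep `max_workers` modest so you do not overload the target site.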

Implementing Pagination Strategies

Many sites split their results across several pages. With techniques such as iterating through pages, adjusting page parameters, and using scroll parameters to retrieve all relevant data, you can streamline pagination and ensure complete data extraction from paginated web pages.
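The page-iteration approach can be sketched as a loop that increases a page parameter until a page comes back empty. The parameter name `page`, the base URL, and `fake_fetch_items` are assumptions for illustration; a real site may use a different parameter, an offset, or a "next" link instead.

```python
from urllib.parse import urlencode


def page_url(base_url, page, **params):
    """Build the URL for one results page, e.g. ...?q=kettle&page=2."""
    return f"{base_url}?{urlencode({**params, 'page': page})}"


def scrape_all_pages(base_url, fetch_items, max_pages=50, **params):
    """Collect items page by page until a page comes back empty."""
    items = []
    for page in range(1, max_pages + 1):
        batch = fetch_items(page_url(base_url, page, **params))
        if not batch:
            break  # no more results
        items.extend(batch)
    return items


# Offline stand-in for "download the page and parse its items".
FAKE_SITE = {1: ["kettle A", "kettle B"], 2: ["kettle C"]}


def fake_fetch_items(url):
    page = int(url.rsplit("page=", 1)[1])
    return FAKE_SITE.get(page, [])


results = scrape_all_pages("https://example.com/search", fake_fetch_items, q="kettle")
print(results)
```

The `max_pages` cap is a safety net in case a site never returns an empty page.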

By incorporating these pro tips into your scraping workflow, you can enhance your scraping experience and achieve more accurate and efficient results.

4. Finding Solutions for Dynamically Rendered Content with ChatGPT

Navigating websites with dynamically rendered content can pose challenges for web scrapers. However, with ChatGPT’s assistance, you can effectively extract data from these types of web pages. Here are some techniques to handle dynamically rendered content:

Using Headless Browsers

Headless browsers allow you to interact with web pages programmatically without the need for a graphical user interface. ChatGPT can provide guidance on leveraging headless browsers to scrape dynamically rendered content. By simulating user interactions and executing JavaScript code, headless browsers enable you to access and extract data from dynamically generated elements on the page.

Utilizing Dedicated APIs

Dedicated APIs, such as the Crawlbase Crawling API, offer an alternative approach to scraping dynamically rendered content. These APIs provide structured access to web data, allowing you to retrieve dynamic content in a reliable and efficient manner. ChatGPT can help you explore the capabilities of dedicated APIs and integrate them into your web scraping workflow for enhanced efficiency and scalability.
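Rendering APIs of this kind are typically called by passing the target page's URL, percent-encoded, as a query parameter along with an access token. The sketch below shows only that URL-building step. The endpoint `https://api.example.com/render` and the parameter names `token`, `url`, and `wait_ms` are hypothetical placeholders, not Crawlbase's actual interface, so check the provider's documentation for the real values.

```python
from urllib.parse import parse_qs, urlencode, urlparse

API_ENDPOINT = "https://api.example.com/render"  # hypothetical endpoint


def build_api_url(target_url, token, wait_ms=None):
    """Build a request URL that asks the API to fetch and render `target_url`."""
    params = {"token": token, "url": target_url}
    if wait_ms is not None:
        params["wait_ms"] = wait_ms  # hypothetical "wait for JS" option
    return f"{API_ENDPOINT}?{urlencode(params)}"  # urlencode escapes the target URL


api_url = build_api_url("https://www.example.com/item?id=1", token="YOUR_TOKEN")
print(api_url)

# Round trip: decoding the query string gives back the original target URL.
decoded = parse_qs(urlparse(api_url).query)
print(decoded["url"][0])
```

Encoding matters here: without it, the target URL's own `?` and `&` characters would be read as part of the API request.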

Parsing Dynamic HTML

ChatGPT can offer suggestions on parsing dynamic HTML content to extract the information you need. By analyzing the structure of the web page and identifying dynamic elements, you can use parsing techniques to extract relevant data. ChatGPT can provide guidance on selecting appropriate parsing methods and libraries to effectively scrape dynamically rendered content.
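One parsing approach that often works on dynamic pages, assuming the site ships its data as JSON inside a `<script type="application/json">` tag, is to read that JSON directly instead of the rendered elements. The sketch below uses only the standard library. The sample page and its `products` structure are invented for illustration.

```python
import json
import re

# Matches the body of <script ... type="application/json" ...> blocks.
SCRIPT_JSON = re.compile(
    r'<script[^>]*\btype="application/json"[^>]*>(.*?)</script>',
    re.DOTALL | re.IGNORECASE,
)


def embedded_json(html):
    """Return every JSON payload found in application/json script tags."""
    payloads = []
    for raw in SCRIPT_JSON.findall(html):
        try:
            payloads.append(json.loads(raw))
        except json.JSONDecodeError:
            continue  # skip blocks that are not valid JSON
    return payloads


# Invented page: the visible markup is empty until JavaScript runs.
SAMPLE = '''
<html><body>
<div id="root"></div>
<script id="page-data" type="application/json">
{"products": [{"title": "Acme Kettle", "price": 24.99}]}
</script>
</body></html>
'''

data = embedded_json(SAMPLE)
titles = [product["title"] for product in data[0]["products"]]
print(titles)
```

When a page does not embed its data this way, a headless browser or a rendering API is the more reliable route.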

Automating Interactions

In some cases, automating interactions with web pages may be necessary to access dynamically rendered content. ChatGPT can provide recommendations on automating interactions using simulated user actions. By simulating clicks, scrolls, and other interactions, you can navigate through dynamic elements on the page and extract the desired data.

With ChatGPT’s assistance, handling dynamically rendered content becomes more manageable. By implementing these techniques, you can overcome the challenges associated with scraping dynamic web pages and extract valuable data for your projects.

5. Understanding ChatGPT’s Limitations and Workarounds

As powerful as ChatGPT is, it’s essential to be aware of its limitations to effectively navigate the web scraping process. Here’s a closer look at some common challenges and potential workarounds when using ChatGPT for web scraping:

Peculiarities with ChatGPT

ChatGPT, powered by large language models such as GPT-3.5 and GPT-4, may sometimes return responses that are factually incorrect or inconsistent with reality. This phenomenon, known as “hallucination,” can affect the accuracy of the generated code snippets. To mitigate this issue, it’s crucial to review and verify the ChatGPT response and the resulting code before executing it.

Handling Anti-Scraping Measures

Many websites implement strong security measures, such as CAPTCHAs and request rate limiting, to prevent automated scrapers from accessing their content. As a result, simple ChatGPT-generated scrapers may encounter difficulties when attempting to scrape these sites. However, there are workarounds available, such as Crawlbase’s Crawling API. This API provides features like IP rotation and bypassing CAPTCHAs, helping to minimize the chances of triggering automated bot detection.
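For rate limiting in particular, a simple scraper can at least back off and retry instead of hammering the site. The sketch below retries on HTTP 429 and 503 with exponentially growing delays. `fetch` is any function you supply that returns a `(status, body)` pair, and the fake responses exist only so that the example runs offline.

```python
import time


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) -> (status, body); retry on 429/503 with exponential backoff."""
    for attempt in range(max_attempts):
        status, body = fetch(url)
        if status not in (429, 503):
            return status, body
        if attempt < max_attempts - 1:
            sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    return status, body  # give up and report the last response


# Offline demo: two "too many requests" responses, then success.
responses = iter([(429, ""), (429, ""), (200, "<html>ok</html>")])
delays = []  # record the waits instead of actually sleeping

result = fetch_with_backoff(
    lambda url: next(responses),
    "https://example.com/p/1",
    sleep=delays.append,
)
print(result, delays)
```

Backoff does not get around CAPTCHAs or IP blocks; for those, the workarounds mentioned above still apply.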

Addressing Hardware Limitations

While ChatGPT simplifies the process of writing web scrapers, it only generates code: it does not supply infrastructure such as web proxies or the computing resources needed for more scalable scraping operations. This limitation may pose challenges when dealing with large-scale web scraping projects or complex web scraping tasks. To overcome this limitation, consider optimizing your code for efficiency, leveraging caching techniques, and minimizing unnecessary network calls.

6. Final Thoughts

Using ChatGPT for web scraping has revolutionized the process, making it easier and more accessible than ever before. While ChatGPT simplifies the creation of web scrapers, it’s essential to acknowledge its limitations.

Despite its capabilities, ChatGPT may occasionally produce unexpected results due to inherent peculiarities of its generative AI model. Additionally, it doesn’t provide direct assistance in bypassing CAPTCHAs or offer web proxies for more scalable scraping.

If you found this guide helpful, be sure to explore our blogs for additional resources and tutorials. Whether you’re a beginner learning the basics of web scraping or an expert seeking advanced techniques to overcome anti-bot systems, we have something to offer everyone.

7. Frequently Asked Questions

Q. Can ChatGPT scrape websites directly?

No, ChatGPT is not designed to directly scrape data from websites. Instead, it assists in generating code for web scraping based on provided instructions and prompts. ChatGPT can help streamline the process of creating web scraping scripts by generating Python code snippets tailored to specific scraping tasks.

Q. How can I ensure my web scraping activities remain anonymous?

Maintaining anonymity while web scraping involves several strategies:

- Use of Proxies: Utilize a proxy server to hide your IP address and location, reducing the risk of detection by websites.

- IP Rotation: Rotate IP addresses to prevent websites from identifying patterns associated with scraping activity.

- User-Agent Spoofing: Mimic legitimate user agents to make scraping requests appear as organic user traffic.

- Request Rate Limiting: Implement scraping logic to mimic human behavior, such as pacing requests and avoiding rapid or excessive scraping activity.
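Two of these strategies, user-agent rotation and request pacing, can be sketched as a generator that plans each request. The user-agent strings are examples only, the delay range is an arbitrary assumption, and `request_plan` is a hypothetical helper. Proxy or IP rotation would follow the same pattern with a list of proxy addresses.

```python
import itertools
import random

# Example user-agent strings; use ones that match real, current browsers.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]


def request_plan(urls, min_delay=1.0, max_delay=3.0, rng=random):
    """Yield (url, headers, delay): a rotated User-Agent and a random pause per request."""
    agents = itertools.cycle(USER_AGENTS)
    for url in urls:
        headers = {"User-Agent": next(agents)}
        yield url, headers, rng.uniform(min_delay, max_delay)


urls = [f"https://example.com/p/{n}" for n in range(1, 4)]
plan = list(request_plan(urls, rng=random.Random(0)))  # seeded for repeatability

for url, headers, delay in plan:
    # A real scraper would call time.sleep(delay) and then send the request.
    print(f"{url} wait={delay:.2f}s agent={headers['User-Agent'][:25]}...")
```

Randomized delays look less mechanical than a fixed interval and also reduce the load you place on the site.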

Q. Can AutoGPT do web scraping?

Yes, AutoGPT can perform web scraping tasks. AutoGPT is an open-source autonomous agent built on top of GPT (Generative Pre-trained Transformer) models. Instead of answering one prompt at a time like ChatGPT, it breaks a goal into steps and works through them with little human intervention. Given a scraping goal, it can generate Python code to extract data from websites. As with ChatGPT, review the code and the results it produces.

Q. Can ChatGPT analyze a webpage?

Yes, ChatGPT can analyze a webpage when you give it the page’s content, for example by pasting the HTML or text into the chat. You can then ask questions or give it tasks related to that page, and it will assist you in understanding or manipulating the information it contains. For instance, you can ask ChatGPT to summarize the content, extract specific data, or even analyze the sentiment of the text. ChatGPT can be a helpful tool for processing and interpreting information from websites.

Q. Can GPT-4 read HTML?

Yes, GPT-4 has the ability to understand HTML. GPT-4, like its predecessors, is a powerful language model trained on a vast amount of text data, which includes HTML code. As a result, it can comprehend and work with HTML code just like a human can. This means that GPT-4 can interpret HTML tags, structure, and content, allowing it to process and manipulate web pages effectively. Whether it’s extracting specific elements from HTML or generating HTML code itself, GPT-4 can handle various tasks related to HTML processing.

Q. Does ChatGPT scrape the internet?

Nope, ChatGPT doesn’t do that! ChatGPT is designed to respect people’s privacy and only uses the information it was trained on, like books, websites, and other texts, up until January 2022. So, ChatGPT can’t access or scrape the internet for new information. ChatGPT is like a library book, filled with knowledge up to a certain date, but it can’t check out anything new!