If your project involves financial data such as news, reports, stock quotes, or anything related to financial management, Yahoo Finance remains a top choice for data extraction. With over 335 million visitors in March 2024, it leads other established players in the market such as USA Today (203 million), Business Insider (154 million), and Bloomberg (67 million).

Ready to automate your data collection from Yahoo Finance? This article will guide you through the process step by step. We’ll show you exactly how to use the Crawlbase API with Python to build a powerful and efficient scraper. Follow along and see how easy it can be.

Table of Contents

1. Project Scope

2. Prerequisites of Scraping Financial Data

3. Installing Dependencies

4. Scraping Yahoo Finance Webpage

5. Scraping the Title from Yahoo Finance HTML using bs4

6. How to Scrape Financial Prices

7. Scraping the Price change

8. Scraping Stock Closing Date on Yahoo Finance

9. Complete the Yahoo Finance Scraper

10. Conclusion

11. Frequently Asked Questions

1. Project Scope

The scope of this project includes the development of a web scraping tool built with the Python programming language, Crawlbase’s Crawling API, and the BeautifulSoup library. Our primary objective is to extract specific data from pages on the Yahoo Finance website and present it in a clean format. The focus is on stock market information such as company names, stock prices, price changes, and closing dates.

Objectives of Scraping Financial Data from Yahoo

Web Scraping Tool Development: Develop a Python-based web scraping tool that extracts data from targeted Yahoo Finance pages and presents it in a readable format. Our target site relies on AJAX to load and update data dynamically, so we need a tool that can process JavaScript.

Crawlbase API Integration: Integrate the Crawling API into the scraping tool to retrieve the HTML content of the target pages more efficiently. The Crawling API’s JavaScript rendering will load and process the dynamic content. It also helps us avoid IP blocks and CAPTCHAs.

Data Parsing: Utilize the BeautifulSoup library to parse the HTML content, remove unwanted information, and extract clean and relevant data with precise selectors.

Data Export: Export the extracted data into a structured format, specifically JSON, for further analysis and utilization.

This guide will equip you with the knowledge to build a web scraper for Yahoo Finance stock data. With this project, you can unlock valuable insights that you can use for various purposes like market research, analysis, and more.

2. Prerequisites of Scraping Financial Data

As a good practice, developers should always review a project’s requirements first and understand the essentials before moving on to the actual coding phase. Here are the important foundations for this project:

Basic Python Knowledge

Since we will be using Python and BeautifulSoup, you should have a basic understanding of the Python programming language. If this is your first time, we encourage you to enroll in a basic course, or at least watch some video tutorials and do some basic coding exercises, before attempting to build your own scraper.

Python installed

If Python isn’t already installed on your system, head over to the official Python website and download the latest version. Follow the installation instructions provided to set up Python on your machine.

About IDEs

There are several Integrated Development Environments (IDEs) available for Python that you can use for this project, each with its own features and advantages. Here are some popular options:

PyCharm: Developed by JetBrains, PyCharm is a powerful and feature-rich IDE with intelligent code completion, code analysis, and debugging capabilities. It comes in two editions: Community (free) and Professional (paid).

Visual Studio Code (VS Code): Developed by Microsoft, VS Code is a lightweight yet powerful IDE with extensive support for Python development through extensions. It offers features like IntelliSense, debugging, and built-in Git integration.

JupyterLab: JupyterLab is an interactive development environment that allows you to create and share documents containing live code, equations, visualizations, and narrative text. It’s particularly well-suited for data science and research-oriented projects.

Sublime Text: Sublime Text is a lightweight and fast text editor known for its speed and simplicity. It offers a wide range of plugins and customization options, making it suitable for Python development when paired with the right plugins.

You have the flexibility to use any of these IDEs to interact with the Crawling API or any other web service using HTTP requests. Choose the one that you’re most comfortable with and that best fits your workflow and project requirements.

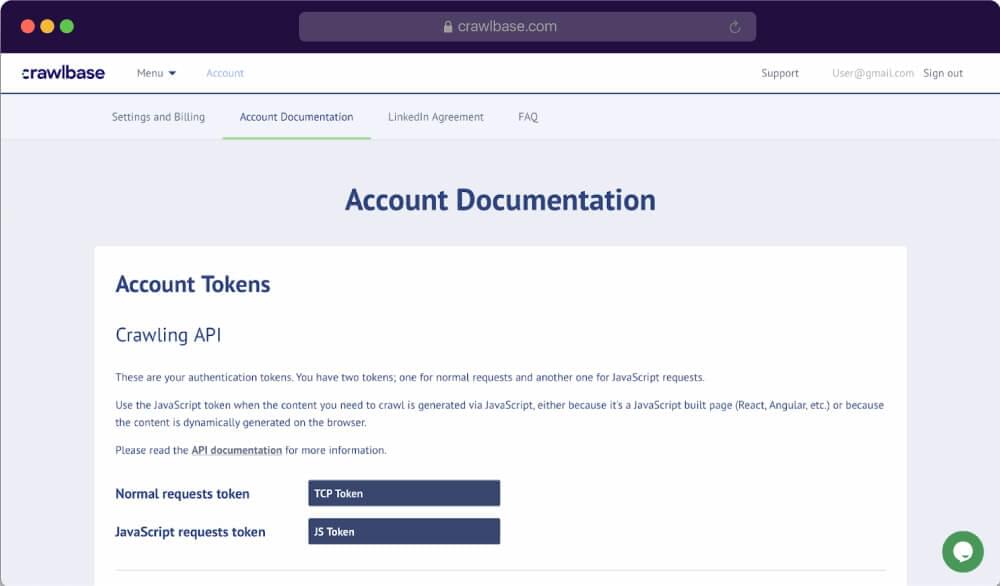

Crawlbase API Account

The Crawling API from Crawlbase is at the center of this project. Make sure you have an account and your account tokens before starting this guide, so that the coding phase goes smoothly.

Simply sign up for an account and go to your account documentation to get your tokens. For this project, we will use the JavaScript request token to crawl the Yahoo Finance pages.
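Hard-coding the token in scraper.py makes it easy to leak if you share or commit the file. A common alternative is to read the token from an environment variable, as in the minimal sketch below. The variable name `CRAWLBASE_JS_TOKEN` and the helper `get_api_token` are hypothetical names chosen for this example. They are not part of the Crawlbase library.

```python
import os


def get_api_token(env_var="CRAWLBASE_JS_TOKEN"):
    """Read the Crawlbase JavaScript token from an environment variable.

    CRAWLBASE_JS_TOKEN is a hypothetical name; use whatever suits your setup.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fail early with a clear message instead of sending an unauthenticated request
        raise RuntimeError(f"Set the {env_var} environment variable to your Crawlbase JS token")
    return token
```

On macOS/Linux you could set the variable with `export CRAWLBASE_JS_TOKEN=your_token` before running the script. You would then call `get_api_token()` wherever the code needs your token.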

3. Installing Dependencies

Once Python is installed and you’ve figured out what IDE you prefer, it’s time to install the necessary packages for our project. In programming, a package is a collection of related modules or classes that are organized together to provide a set of functionalities. Packages help in organizing code into hierarchical namespaces, which makes it easier to manage and reuse code across different projects.

To install a package, open your command prompt (Windows) or terminal (macOS/Linux). Create a directory where you want to store your Python code, then use the pip command to install the packages as shown below:

```bash
pip install crawlbase
pip install beautifulsoup4
```

The commands above will install the following:

Crawlbase Python library: A lightweight, dependency-free Python class that acts as a wrapper for the Crawlbase API. Because it is packaged this way, you can easily integrate various Crawlbase APIs, including the Crawling API, into your project.

BeautifulSoup4: A Python library used for web scraping. It extracts data from HTML and XML files, which makes it easier to parse and navigate the document’s structure. Beautiful Soup provides a simple interface for working with HTML and XML documents by transforming the raw markup into a navigable parse tree.

Additionally, we will use the json module to export the data to a JSON file. It is part of Python’s standard library, so there is nothing extra to install.
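Before writing the scraper, you can confirm that the packages are importable. The sketch below uses only the standard library’s `importlib.util.find_spec`. Note that the import names (`crawlbase`, `bs4`) differ from the pip package name `beautifulsoup4`. The helper name `missing_packages` is our own.

```python
import importlib.util


def missing_packages(modules):
    """Return the top-level import names from `modules` that Python cannot find."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


# beautifulsoup4 is imported as "bs4"; json ships with Python
missing = missing_packages(["crawlbase", "bs4", "json"])
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All dependencies are available.")
```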

4. Scraping Yahoo Finance webpage

Now it’s time to write our code. We will first write code that crawls the complete HTML source of our target web page. In this step, we will use the Crawlbase package.

Start by opening your preferred text editor or IDE and creating a new Python file. For the purpose of this guide, let’s create a file named scraper.py from your terminal/console:

```bash
touch scraper.py
```

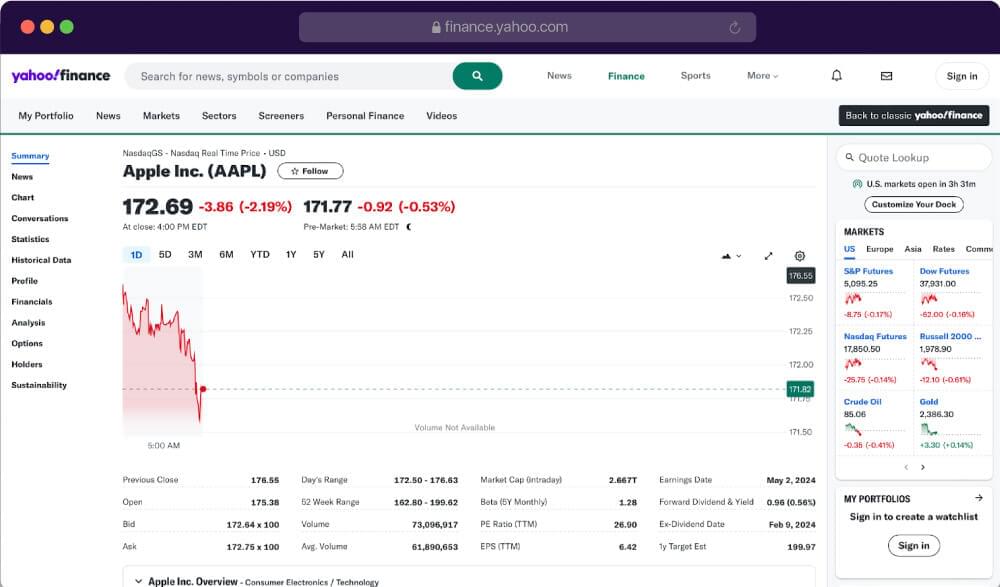

For demonstration, we will be targeting this Yahoo Finance page.

Copy the complete code below and read the explanation to understand each section:

```python
from crawlbase import CrawlingAPI
```

How Scraping Yahoo Finance Works:

We’ve imported the CrawlingAPI class from the crawlbase module. This class allows interaction with the Crawlbase API for web crawling purposes.

The crawl function takes two parameters: page_url (the URL of the page to crawl) and api_token (the API token used to authenticate requests to the Crawlbase API).

The code is then wrapped in a try block to handle potential errors. If any errors occur during execution, they’re caught and handled in the except block.

An instance of the CrawlingAPI class is created with the provided API token and the get method is used to make a GET request to the specified page_url. The response from the API is stored in the response variable.

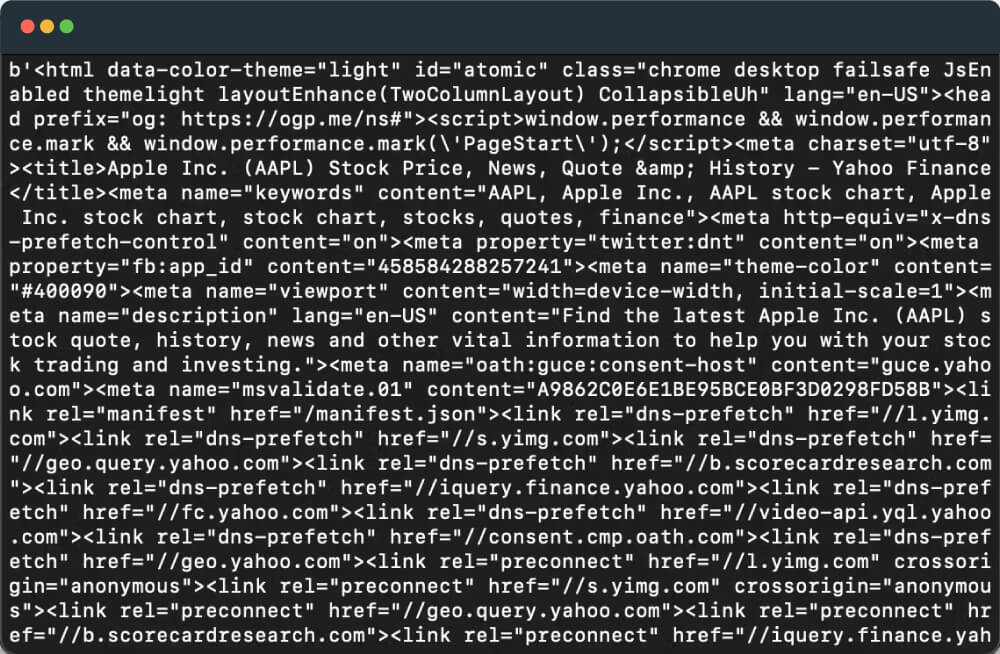

The script also checks if the HTTP status code of the response is 200, which indicates that the request was successful. If the request was successful, the body of the response (HTML source code) is printed to the console. If the request fails or any exceptions occur during execution, error messages are printed to the console.
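For reference, here is a minimal sketch of a `crawl` function that follows the behavior described above. It creates a `CrawlingAPI` instance with `{"token": api_token}`, calls `get`, and checks `response["status_code"]`.

Two details are our own assumptions rather than the guide’s code:

- The function returns the HTML body instead of printing it, so later steps can reuse it.
- It accepts an optional `api` argument, so you can pass in an existing client or a stand-in object when testing.

The `options` dict is forwarded to `get`. The Crawling API documents parameters such as `page_wait` for JavaScript-token requests, but check the current docs before relying on any specific one.

```python
def crawl(page_url, api_token, api=None, options=None):
    """Fetch page_url through the Crawlbase Crawling API.

    Returns the HTML body on HTTP 200; otherwise prints an error and returns None.
    """
    try:
        if api is None:
            # Import only when a real client is needed, so a stand-in `api`
            # works even where crawlbase is not installed
            from crawlbase import CrawlingAPI

            api = CrawlingAPI({"token": api_token})

        # Extra Crawling API parameters (e.g. {"page_wait": 2000}) go in `options`;
        # confirm parameter names against the Crawling API documentation
        response = api.get(page_url, options or {})

        if response["status_code"] == 200:
            return response["body"]
        print(f"Request failed with status code {response['status_code']}")
    except Exception as error:
        print(f"An error occurred while crawling {page_url}: {error}")
    return None
```

With your JavaScript token, you would call it as `html = crawl(page_url, "YOUR_JS_TOKEN")` and then hand `html` to BeautifulSoup.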

Let’s try to execute the code. You can go to your console again and enter the command below:

```bash
python scraper.py
```

If successful, you will get a response similar to this:

5. Scraping the Title from Yahoo Finance HTML using bs4

In this section, we will focus on scraping content from the HTML source code we obtained by crawling the Yahoo Finance page. We’ll start by importing the Beautiful Soup library to parse the HTML, so that we can later present the extracted data in JSON format.

```python
from crawlbase import CrawlingAPI
```

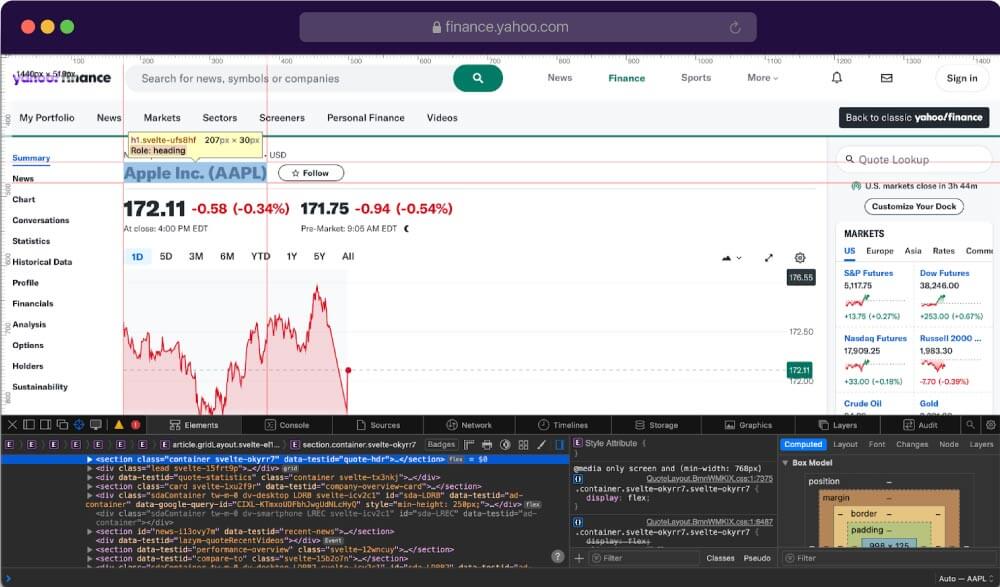

Next, we need to locate the data we want to extract, starting with the company name, or title. Inspect the structure of the webpage with your browser’s developer tools: highlight the title, right-click, and select the “Inspect” option. You can also view the page source.

Once you’ve located the title element, use a BeautifulSoup selector to extract the data. Here’s how you can write the code:

```python
def scrape_data(response):
```

The code starts by trying to parse the HTML content of the web page. It uses the BeautifulSoup constructor, passing the HTML content (response['body']) and the parser type ('html.parser').

Inside the try block, the function attempts to extract specific data from the parsed HTML. It tries to find an <h1> element with a class name 'svelte-ufs8hf' using the select_one method provided by Beautiful Soup.

Once the element is found, it retrieves the text content of the <h1> element and assigns it to the variable title. If the <h1> element is not found, title is set to None.

In case of an error, it prints an error message to the console and returns an empty dictionary as a fallback.
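The title extraction described above can be sketched as a small standalone function. The selector `h1.svelte-ufs8hf` comes from this guide. Class names like this are generated by Yahoo’s front-end build and may change, so we added a plain `h1` fallback for robustness; that fallback is our own assumption. The function name `scrape_title` is hypothetical.

```python
from bs4 import BeautifulSoup

# Selector from this guide first, then a generic fallback (our assumption)
TITLE_SELECTORS = ("h1.svelte-ufs8hf", "h1")


def scrape_title(html):
    """Return the page title text, or None if no selector matches."""
    soup = BeautifulSoup(html, "html.parser")
    for selector in TITLE_SELECTORS:
        element = soup.select_one(selector)
        if element is not None:
            return element.get_text(strip=True)
    return None
```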

6. How to Scrape Financial Prices

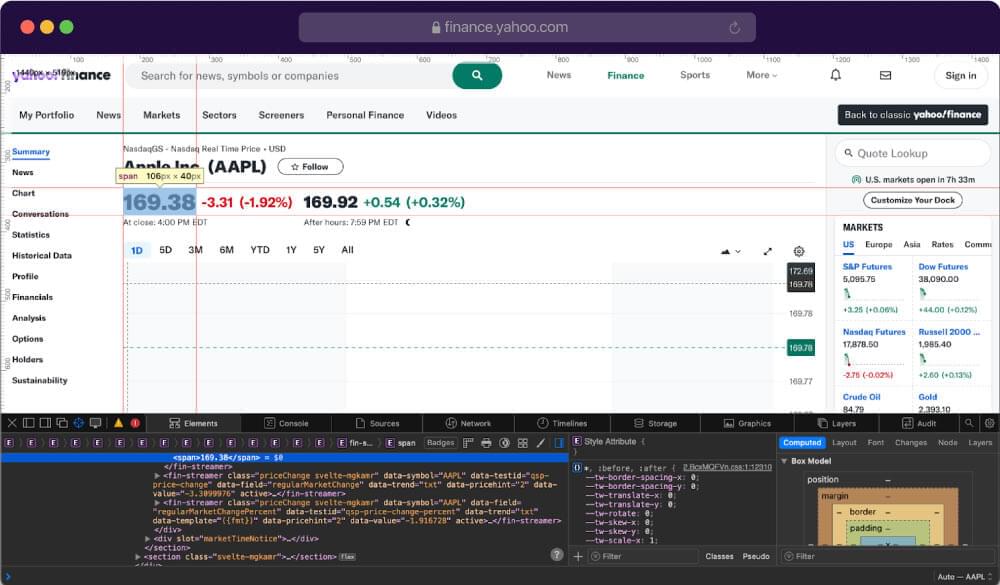

The next piece of data we want to extract from the Yahoo Finance page is the price: the most recent trading price of the financial asset. Start by highlighting the price and inspecting it, as shown in the screenshot:

Write the code to extract the Price element:

```python
def scrape_data(response):
```

As with the title, this code extracts a specific element from the complete HTML source code and discards any data that is irrelevant to our project.
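A minimal sketch of the price step follows. The selector `fin-streamer[data-testid="qsp-price"]` is a hypothetical placeholder, not one confirmed by this guide, so replace it with whatever your own inspection shows. The small `select_text` helper (our own name) returns `None` when nothing matches, mirroring how the title code handles a missing element.

```python
from bs4 import BeautifulSoup

# Hypothetical selector: replace it with the one your own inspection shows
PRICE_SELECTOR = 'fin-streamer[data-testid="qsp-price"]'


def select_text(soup, selector):
    """Return the stripped text of the first element matching selector, or None."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element is not None else None


def scrape_price(html):
    """Parse the HTML and return the price text, or None if it isn't found."""
    soup = BeautifulSoup(html, "html.parser")
    return select_text(soup, PRICE_SELECTOR)
```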

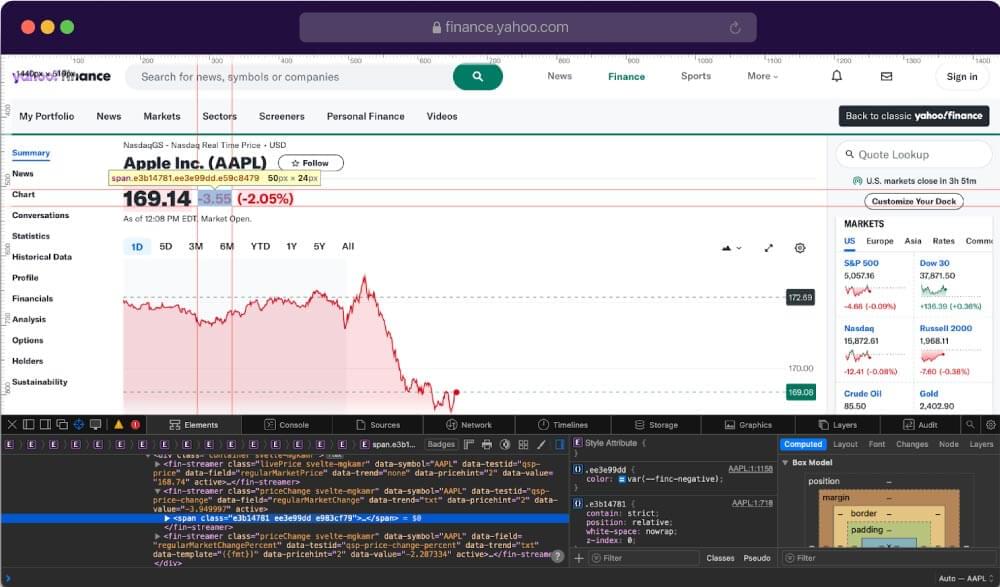

7. Scraping the Price change

Our next target data would be the Price change. This value represents the change in the price of a financial asset, such as a stock, from its previous close.

Again, highlight the price change and inspect it to get the appropriate selector for the element.

```python
def scrape_data(response):
```
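The change is scraped as display text, so converting it to numbers makes it easier to analyze. This sketch assumes the text looks like `+1.23 (+0.75%)`: an absolute change followed by a percentage in parentheses. If the page splits these values into separate elements or formats them differently, adjust the regular expression. `parse_change` is a hypothetical helper name.

```python
import re

# Assumed format: "<abs change> (<percent change>%)", e.g. "+1.23 (+0.75%)"
CHANGE_PATTERN = re.compile(
    r"(?P<abs>[+-]?\d[\d,]*\.?\d*)\s*\(?(?P<pct>[+-]?\d[\d,]*\.?\d*)%\)?"
)


def _to_float(value):
    """Convert display text such as "+1,234.50" to a float."""
    return float(value.replace(",", ""))


def parse_change(text):
    """Return (absolute change, percent change) as floats, or None if no match."""
    match = CHANGE_PATTERN.search(text or "")
    if match is None:
        return None
    return _to_float(match.group("abs")), _to_float(match.group("pct"))
```

For example, `parse_change("-2.10 (-1.27%)")` returns `(-2.1, -1.27)`.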

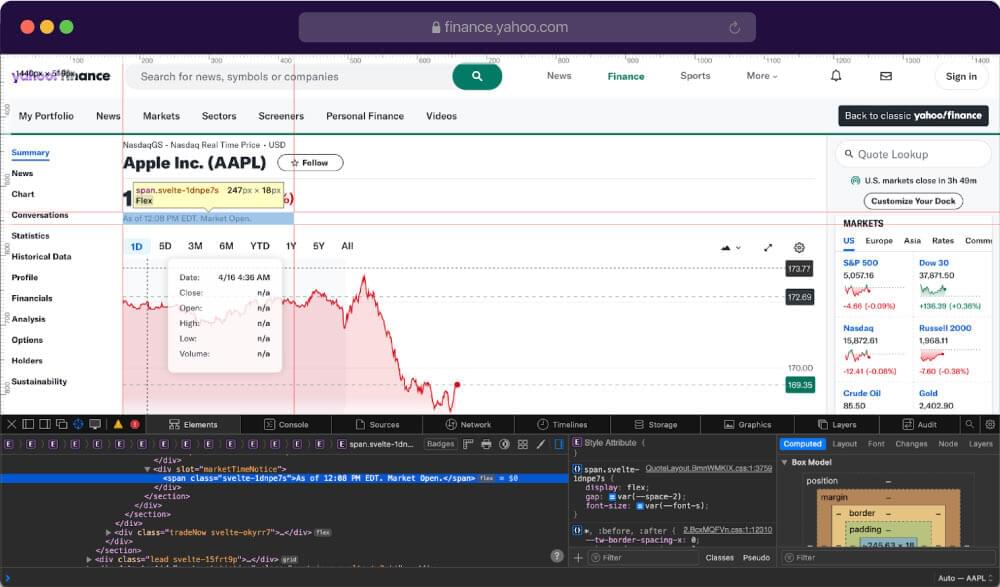

8. Scraping Stock Closing Date on Yahoo Finance

Lastly, we will scrape the closing date. This is the date to which the displayed closing price of a financial asset (such as a stock) applies. For example, if you see “At close” followed by the date “April 19, 2024,” the price shown is the asset’s closing price on April 19, 2024.

If the page shows “Market Open” instead, the market is currently trading. In that case, the displayed price is the latest intraday price rather than a closing price.

Highlight the date, right-click, and select “Inspect” to get the associated selector. Then write the code to extract the data using BeautifulSoup once again.

```python
def scrape_data(response):
```
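The same element can read “At close” or “Market Open”, so it helps to record which one you scraped alongside the date. This sketch assumes status text such as `At close: April 19, 2024` or `As of 10:15 AM EDT. Market Open.` and extracts dates written as `Month D, YYYY`. The exact wording on the live page may differ, so treat the pattern as a starting point. `parse_market_status` is a hypothetical helper.

```python
import re

# Matches dates written like "April 19, 2024"
DATE_PATTERN = re.compile(r"[A-Z][a-z]+ \d{1,2}, \d{4}")


def parse_market_status(text):
    """Classify a status line as closed/open/unknown and extract a date if present."""
    text = text or ""
    lowered = text.lower()
    if "at close" in lowered:
        status = "closed"
    elif "market open" in lowered:
        status = "open"
    else:
        status = "unknown"
    match = DATE_PATTERN.search(text)
    return {"status": status, "date": match.group(0) if match else None}
```

For instance, `parse_market_status("At close: April 19, 2024")` returns `{'status': 'closed', 'date': 'April 19, 2024'}`.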

9. Complete the Yahoo Finance Scraper

With a selector written for each target data point, it’s time to combine the code and put our scraper into action. For your convenience, we’ve compiled the code below and added a few lines that save the response as a JSON file. Feel free to copy it and save it to your local machine:

```python
from crawlbase import CrawlingAPI
```
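The following sketch shows one way the pieces could fit together. A single `scrape_data` function looks up each field with BeautifulSoup, and a `save_json` helper writes the result with the standard `json` module. Unlike the guide’s `scrape_data(response)`, this version takes the HTML string directly, for example `response['body']` from the Crawling API. Only `h1.svelte-ufs8hf` comes from this guide. The other selectors in `SELECTORS` are hypothetical placeholders, so replace them with the ones you found while inspecting the page.

```python
import json

from bs4 import BeautifulSoup

# Only the title selector comes from this guide; the rest are hypothetical
# placeholders to replace with the selectors you found via Inspect.
SELECTORS = {
    "title": "h1.svelte-ufs8hf",
    "price": 'fin-streamer[data-testid="qsp-price"]',
    "change": 'fin-streamer[data-testid="qsp-price-change"]',
    "closing_date": 'div[slot="marketTimeNotice"] span',
}


def scrape_data(html):
    """Extract each field in SELECTORS; fields whose element is missing become None."""
    soup = BeautifulSoup(html, "html.parser")
    data = {}
    for field, selector in SELECTORS.items():
        element = soup.select_one(selector)
        data[field] = element.get_text(strip=True) if element is not None else None
    return data


def save_json(data, path="yahoo_finance.json"):
    """Write the scraped fields to a UTF-8 JSON file and return the path."""
    with open(path, "w", encoding="utf-8") as file:
        json.dump(data, file, indent=2, ensure_ascii=False)
    return path
```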

Execute the code to get the response. Use the command below:

```bash
python scraper.py
```

If successful, it should provide a similar output as shown below:

```json
{
```

There it is. The JSON-formatted output makes the data easy to reuse. Use it to analyze the stock market, compare prices, and so on. The choice is yours.
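The scraped values are stored as display text, so you’ll typically convert them before comparing prices. This sketch assumes the saved JSON object stores the price as text under a `price` key; adjust the key to match your output. `load_price` is a hypothetical helper name.

```python
import json


def load_price(path):
    """Return the 'price' field of a saved JSON file as a float, or None if empty."""
    with open(path, encoding="utf-8") as file:
        data = json.load(file)
    price = data.get("price")
    if not price:
        return None
    # Display text may include thousands separators, e.g. "1,234.56"
    return float(str(price).replace(",", ""))
```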

10. Conclusion

Congratulations! You’ve completed a comprehensive guide on how to efficiently build a scraper for Yahoo Finance using Python, Crawlbase API, and BeautifulSoup. You’ve learned how to extract clean and useful data from web pages and customize it for your projects or analysis.

The code shared in this guide is available to everyone. We encourage you to actively engage with it, as it can be useful to all sorts of developers, data scientists, and curious learners alike. You are free to modify and tailor the code to suit your specific requirements. Tweak it for automation, scrape data from other websites, extract different types of information, or add new functions.

We hope this guide serves its purpose and has equipped you with the skills and tools necessary to utilize web scraping effectively in your projects. Happy scraping, and may your data adventures lead you to new discoveries and insights!

If you are looking for other projects like this one, you may also want to check out the following:

Playwright Web Scraping 2024 - Tutorial

Is there something you would like to know more about? Our support team would be glad to help. Please send us an email.

11. Frequently Asked Questions

Is web scraping legal for scraping data from Yahoo Finance?

Yes, web scraping itself is not inherently illegal, but it’s important to review and comply with the terms of service of the website you’re scraping. Like many other websites, Yahoo Finance may have specific terms and conditions regarding web scraping activities. Make sure to familiarize yourself with these terms to avoid any legal issues.

How do I scrape data from Yahoo Finance?

- Identify the data to scrape and inspect the website

- Select a scraping tool or library to extract data from the web pages

- Use the chosen scraping tool to send an HTTP GET request to the target URL

- Parse the HTML content of the web page using the scraping tool’s parsing capabilities

- Depending on your needs, you can store the scraped data in a file, database, or data structure for later analysis or use it directly in your application.
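As an example of the last step, here is a sketch that stores scraped records in a SQLite database using Python’s built-in `sqlite3` module. The table name `quotes` and its columns are assumptions for illustration, so match them to the fields your scraper produces. Missing fields are stored as NULL.

```python
import sqlite3

# Illustrative schema: one TEXT column per scraped field
COLUMNS = ("title", "price", "change", "closing_date")


def store_records(records, db_path=":memory:"):
    """Insert scraped records (dicts) into a 'quotes' table; return the open connection."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS quotes "
        "(title TEXT, price TEXT, change TEXT, closing_date TEXT)"
    )
    # Fill in None for any field a record lacks so every named parameter is bound
    rows = [{column: record.get(column) for column in COLUMNS} for record in records]
    conn.executemany(
        "INSERT INTO quotes VALUES (:title, :price, :change, :closing_date)", rows
    )
    conn.commit()
    return conn
```

To keep the data between runs, pass a file name such as `"quotes.db"` instead of `":memory:"`.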

What tools and libraries can I use for scraping Yahoo Finance?

There are several tools and libraries available for web scraping in Python, including BeautifulSoup, Scrapy, and Selenium. Additionally, you can utilize APIs such as Crawlbase API for easier access to web data. Choose the tool or library that best fits your project requirements and technical expertise.