Redfin.com is a real estate website filled with valuable information about homes, apartments, and properties all across the United States & Canada. Every month, millions of people visit Redfin to browse listings, check out neighborhoods, and dream about their next move. With millions of properties listed and years of data under its belt, Redfin is a big deal in the real estate business.

But how can regular folks like us get this data? Well, that’s where web scraping comes in.

In this guide, we’re going to show you how to dig deep into Redfin and pull out all sorts of useful information about properties.

Table Of Contents

- Why Scrape Redfin Property Data?

- What Can We Scrape from Redfin?

- Environment Setup for Redfin Scraping

- How to Scrape Redfin Property Pages

- Scrape Redfin Rental Property Pages

- Scrape Redfin Sales Property Pages

- Utilizing Sitemap Feeds for New and Updated Listings

- Implementing Redfin Feed Scraper in Python

- Overview of Redfin Anti-Scraping Measures

- Using Crawlbase Crawling API for Smooth Redfin Scraping

Why Scrape Redfin Property Data?

Scraping Redfin property data provides access to valuable insights and opportunities in real estate. It enables users to extract information on property listings, prices, and market trends, empowering informed decision-making and competitive advantage.

Whether you’re an investor, homeowner, or researcher, scraping Redfin offers direct access to relevant data, facilitating analysis and strategic planning.

What Can We Scrape from Redfin?

When it comes to scraping Redfin, the possibilities are vast and varied. With a Redfin scraper, you can collect everything from property search results to detailed listings for homes up for sale or rent.

Whether you’re interested in exploring properties for sale, searching for a rental, or eyeing investment opportunities, Redfin provides access to comprehensive information on property listings, prices, and market trends. Plus, you can also dig into info about land for sale, upcoming open house events, and even find details about real estate agents in a given area.

While our focus in this guide will be on scraping real estate property rent, sale, and search pages, it’s important to note that the techniques and strategies we’ll cover can be easily adapted to extract data from other pages across the Redfin platform.

Let’s create a custom Redfin scraper for each one.

Environment Setup for Redfin Scraping

The first step in setting up a custom Redfin scraper is making sure all the required libraries are installed, so let’s go ahead.

Python Setup: Begin by confirming whether Python is installed on your system. Open your terminal or command prompt and enter the following command to check the Python version:

python --version

If Python is not installed, download the latest version from the official Python website and follow the installation instructions provided.

Creating Environment: For managing project dependencies and ensuring consistency, it’s recommended to create a virtual environment. Navigate to your project directory in the terminal and execute the following command to create a virtual environment named redfin_env:

python -m venv redfin_env

Activate the virtual environment by running the appropriate command based on your operating system:

On Windows:

redfin_env\Scripts\activate

On macOS/Linux:

source redfin_env/bin/activate

Installing Libraries: With your virtual environment activated, install the required Python libraries for web scraping. Execute the following commands to install the requests and beautifulsoup4 libraries:

pip install requests
pip install beautifulsoup4
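To confirm the installs worked, you can check that both packages are importable from the active environment. This is a minimal sketch using only the standard library; the helper name missing_packages is illustrative and not part of any library. Note that beautifulsoup4 is imported under the name bs4.

```python
import importlib.util


def missing_packages(module_names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


# beautifulsoup4 is installed under the import name "bs4".
missing = missing_packages(["requests", "bs4"])
print("Missing packages:", missing or "none")
```

If the list is not empty, re-run the pip commands with the virtual environment activated.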

Choosing IDE: Selecting a suitable Integrated Development Environment (IDE) is crucial for efficient coding. Consider popular options such as PyCharm, Visual Studio Code, or Jupyter Notebook. Install your preferred IDE and ensure it’s configured to work with Python.

Once your environment is ready, you’ll be all set to use Python to scrape Redfin’s big collection of real estate data.

How to Scrape Redfin Property Pages

When it comes to scraping Redfin property pages, there are two main types to focus on: rental property pages and sales property pages. Let’s break down each one:

Scrape Redfin Rental Property Pages

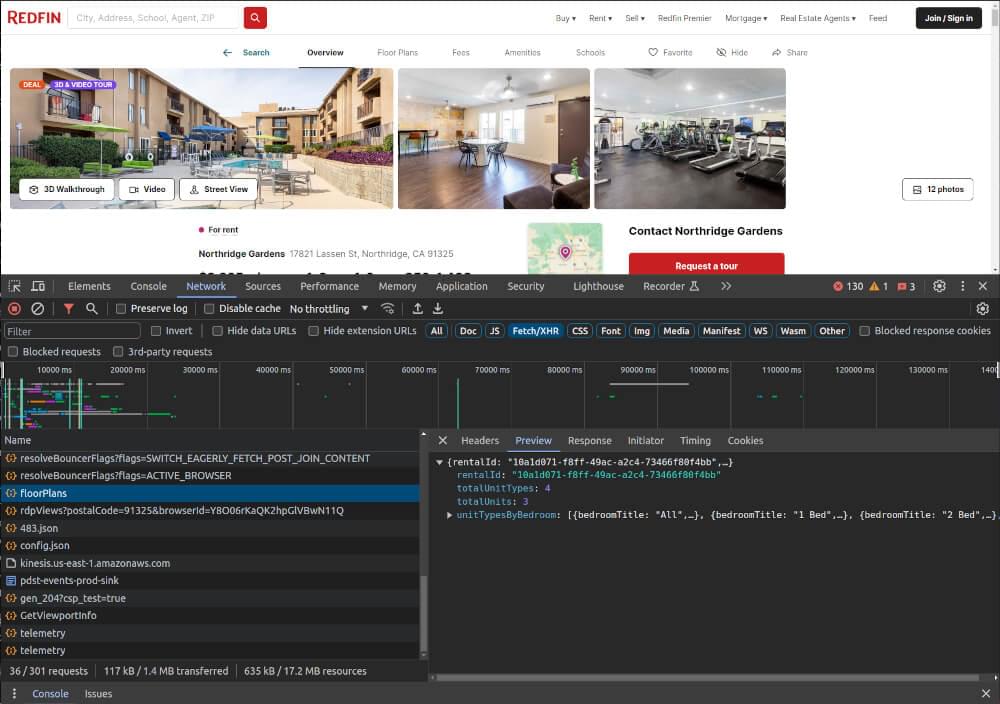

Scraping rental property pages from Redfin involves utilizing a private API employed by the website. To initiate this process, follow these steps:

- Identify Property Page for Rent: Navigate to any property page on Redfin that is available for rent.

- Access Browser Developer Tools: Open the browser’s developer tools by pressing the F12 key and navigate to the Network tab.

- Filter Requests: Filter requests by selecting Fetch/XHR requests.

- Reload the Page: Refresh the page to observe the requests sent from the browser to the server.

Among the requests, focus on identifying the floorPlans request, which contains the relevant property data. This request is typically sent to a specific API URL, such as:

https://www.redfin.com/stingray/api/v1/rentals/rental_id/floorPlans

The rental_id in the API URL represents the unique identifier for the rental property. To extract this data programmatically, Python can be used along with libraries like requests and BeautifulSoup. Below is a simplified example demonstrating how to scrape rental property pages using Python:

import requests

In this example, the scrape_rental_property function extracts the rental ID from the HTML of the property page and constructs the corresponding API URL. Subsequently, it sends a request to the API URL to retrieve the property data in JSON format.
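The flow described above can be sketched roughly as follows. Two things here are assumptions rather than confirmed facts: that the rental ID appears after "rent/" in the page's og:image meta tag, and that the session object passed in behaves like a requests.Session. To stay runnable without extra installs, the sketch uses the standard library's html.parser in place of BeautifulSoup.

```python
from html.parser import HTMLParser

API_TEMPLATE = "https://www.redfin.com/stingray/api/v1/rentals/{rental_id}/floorPlans"


class _OgImageFinder(HTMLParser):
    """Remembers the content of the first <meta property="og:image"> tag."""

    def __init__(self):
        super().__init__()
        self.og_image = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and attributes.get("property") == "og:image":
            if self.og_image is None:
                self.og_image = attributes.get("content")


def extract_rental_id(html):
    """Return the rental ID found in the page HTML, or None if it is absent.

    Assumption: the ID is the path segment right after "rent/" in og:image.
    """
    finder = _OgImageFinder()
    finder.feed(html)
    if not finder.og_image or "rent/" not in finder.og_image:
        return None
    return finder.og_image.split("rent/")[1].split("/")[0]


def build_floor_plans_url(rental_id):
    """Fill the rental ID into the floorPlans API URL."""
    return API_TEMPLATE.format(rental_id=rental_id)


def scrape_rental_property(url, session):
    """Fetch a rental page, derive its API URL, and return the API's JSON data.

    `session` is expected to behave like requests.Session (an assumption).
    """
    page = session.get(url, timeout=30)
    page.raise_for_status()
    rental_id = extract_rental_id(page.text)
    if rental_id is None:
        raise ValueError(f"No rental ID found in {url}")
    api_response = session.get(build_floor_plans_url(rental_id), timeout=30)
    api_response.raise_for_status()
    return api_response.json()
```

In practice you would call scrape_rental_property(url, requests.Session()); keeping the ID extraction in its own function makes it easy to adjust if the page markup differs from the assumption.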

Example Output:

{

Scrape Redfin Sales Property Pages

Scraping sales property pages with a Redfin scraper means parsing the page HTML with CSS selectors (or XPath), as there’s no dedicated API for fetching the data. Below is a simplified example demonstrating how to scrape Redfin sales property pages using Python with the requests and BeautifulSoup libraries:

import requests

In this example, the parse_property_for_sale function extracts property data from the HTML content of sales property pages using BeautifulSoup and returns it as a JSON object. Then, the scrape_property_for_sale function iterates over a list of property page URLs, retrieves their HTML content using requests, and parses the data using the parse_property_for_sale function.
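A rough sketch of this two-function structure is shown below. The class names in FIELD_CLASSES are placeholders, not Redfin's confirmed markup, so inspect a live listing page and replace them. To stay runnable without extra installs, the sketch swaps BeautifulSoup for the standard library's html.parser, and it assumes the session argument behaves like a requests.Session.

```python
from html.parser import HTMLParser

# Hypothetical field -> CSS class mapping; replace with classes from a live page.
FIELD_CLASSES = {
    "address": "street-address",
    "price": "price",
    "description": "remarks",
}

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class ClassTextCollector(HTMLParser):
    """Collect the text of the first element that carries each wanted class."""

    def __init__(self, field_classes):
        super().__init__()
        self._class_to_field = {cls: field for field, cls in field_classes.items()}
        self.fields = {field: None for field in field_classes}
        self._active = None  # field currently being captured
        self._depth = 0      # nesting depth inside the captured element
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._active is not None:
            self._depth += 1
            return
        for cls in (dict(attrs).get("class") or "").split():
            field = self._class_to_field.get(cls)
            if field and self.fields[field] is None:
                self._active, self._depth, self._chunks = field, 1, []
                break

    def handle_endtag(self, tag):
        if self._active is None or tag in VOID_TAGS:
            return
        self._depth -= 1
        if self._depth == 0:
            # Collapse runs of whitespace into single spaces.
            self.fields[self._active] = " ".join("".join(self._chunks).split())
            self._active = None

    def handle_data(self, data):
        if self._active is not None:
            self._chunks.append(data)


def parse_property_for_sale(html, field_classes=FIELD_CLASSES):
    """Return a dict of field name -> text (None when a field is not found)."""
    collector = ClassTextCollector(field_classes)
    collector.feed(html)
    return collector.fields


def scrape_property_for_sale(urls, session):
    """Fetch each listing URL and parse it; `session` acts like requests.Session."""
    results = []
    for url in urls:
        response = session.get(url, timeout=30)
        response.raise_for_status()
        results.append(parse_property_for_sale(response.text))
    print(f"Scraped {len(results)} property listings for sale")
    return results
```

With BeautifulSoup installed, the collector class could be replaced by one soup.select_one call per field; the overall shape of the two functions stays the same.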

Example Output:

Scraped 2 property listings for sale

How to Scrape Redfin Search Pages

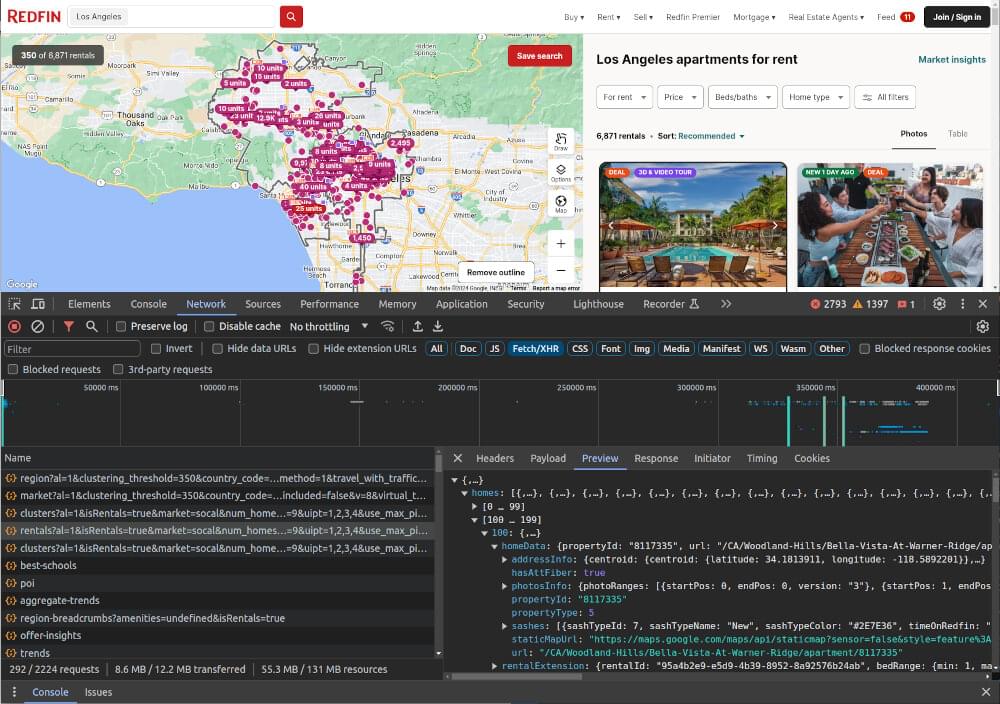

When you want to scrape Redfin data from its search pages, you can do so by tapping into their private search API, which provides the information you need in JSON format. Here’s how you can find and access this API:

- Go to any search page on redfin.com.

- Press the F12 key to open the browser developer tools and switch to the Network tab.

- Search for the location (e.g. Los Angeles).

- In the Network tab, filter the requests by Fetch/XHR and look for the request whose response contains the search results.

By following these steps, you’ll find the API request responsible for fetching data related to your specified search area.

To actually scrape Redfin search results, you’ll need to grab the API URL from the recorded requests and use it to retrieve all the search data in JSON format. Here’s a simple Python script to help you do that:

import requests

In this script, the scrape_search function sends a request to the search API URL and then extracts the relevant JSON data from the API response. Executing this code provides us with property data retrieved from all pagination pages of the search results.
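A minimal sketch of such a scrape_search function is shown below. It rests on assumptions you should verify against the request you recorded: that the response body may start with a "{}&&" prefix before the JSON, that listings sit under payload and then homes, and that the session argument behaves like a requests.Session. The API URL itself is whatever you copied from the Network tab.

```python
import json

# Assumption: the API may prepend this guard prefix to its JSON body.
JSON_PREFIX = "{}&&"


def parse_search_response(text):
    """Strip the optional prefix, decode the JSON, and return the list of homes."""
    if text.startswith(JSON_PREFIX):
        text = text[len(JSON_PREFIX):]
    data = json.loads(text)
    # Assumption: listings are stored under payload -> homes.
    return (data.get("payload") or {}).get("homes", [])


def scrape_search(api_url, session):
    """Request the search API URL and return the parsed listings."""
    response = session.get(api_url, timeout=30)
    response.raise_for_status()
    return parse_search_response(response.text)
```

If the recorded request is paginated, call scrape_search once per page URL and concatenate the returned lists.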


Track Redfin Listing Changes Feeds

Keeping up-to-date with the newest developments in Redfin listings is essential for a range of purposes, whether you’re buying, selling, or simply interested in real estate. Here’s a simple way to stay informed about these updates:

Utilizing Sitemap Feeds for New and Updated Listings

Redfin offers sitemap feeds that provide information about both new listings and updates to existing ones. These feeds, namely newest and latest, are invaluable resources for anyone looking to stay informed about the dynamic real estate market. Here’s what each of these feeds signals:

- Newest: Signals when new listings are posted.

- Latest: Signals when listings are updated or changed.

By scraping these sitemaps, you can retrieve the URL of the listing along with the timestamp indicating when it was listed or updated. Here’s a snippet of what you might find in these sitemaps:

<url>

Note: The timezone used in these sitemaps is UTC-8, as indicated by the last number in the datetime string.
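Because the timestamp carries its own UTC offset, it can be parsed into a timezone-aware datetime and normalized to UTC for comparison with other data. The timestamp below is a hypothetical value in ISO 8601 form, not one taken from a real feed, and the helper name to_utc is illustrative.

```python
from datetime import datetime, timezone


def to_utc(lastmod):
    """Parse an ISO 8601 timestamp with an offset and convert it to UTC."""
    return datetime.fromisoformat(lastmod).astimezone(timezone.utc)


# Hypothetical <lastmod> value with a -08:00 offset.
sample = "2023-12-01T00:53:20.426-08:00"
print(to_utc(sample).isoformat())  # 2023-12-01T08:53:20.426000+00:00
```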

Implementing Redfin Feed Scraper in Python

To scrape these Redfin feeds and retrieve the URLs and timestamps of the recent property listings, you can use Python along with the requests library. Here’s a Python script to help you achieve this:

import requests

Running this script will provide you with the URLs and dates of the recently added property listings on Redfin. Once you have this information, you can further utilize your Redfin scraper to extract property datasets from these URLs.
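As a hedged sketch of the feed-parsing step, the code below reads a sitemap document with the standard library's xml.etree.ElementTree and returns a dict mapping each listing URL to its timestamp. It assumes the feed follows the standard sitemap schema (url, loc, and lastmod elements in the sitemaps.org namespace) and that the session argument behaves like a requests.Session; the feed URL is passed in by the caller.

```python
import xml.etree.ElementTree as ET

# Namespace used by the standard sitemap protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_feed(xml_data):
    """Return {listing_url: lastmod} for every <url> entry in the sitemap."""
    root = ET.fromstring(xml_data)
    results = {}
    for url_node in root.findall("sm:url", SITEMAP_NS):
        loc = url_node.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = url_node.findtext("sm:lastmod", default="", namespaces=SITEMAP_NS).strip()
        if loc:
            results[loc] = lastmod
    return results


def scrape_feed(feed_url, session):
    """Download a sitemap feed and parse it; `session` acts like requests.Session."""
    response = session.get(feed_url, timeout=30)
    response.raise_for_status()
    # Pass bytes so the XML declaration's encoding is honored.
    return parse_feed(response.content)
```

The keys of the returned dict can then be fed to the property scrapers described earlier.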

Example Output:

{

Bypass Redfin Blocking with Crawlbase

When you scrape Redfin data at scale, blocking measures can become a hurdle. With the right approach, however, you can get past CAPTCHAs and blocks. Let’s see how Crawlbase’s custom Redfin scraper helps with that.

Overview of Redfin Anti-Scraping Measures

Redfin employs various anti-scraping measures to protect its data from being harvested by automated bots. These measures may include IP rate limiting, CAPTCHAs, and user-agent detection. To bypass these obstacles, it’s essential to adopt strategies that mimic human browsing behavior and rotate IP addresses effectively.
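Two of these ideas, pacing requests and varying the user agent, can be sketched in a few lines. The user-agent strings are example values you should replace with current ones, the delay bounds are arbitrary, and the session argument is assumed to behave like a requests.Session. IP rotation is not covered here since it depends on your proxy provider.

```python
import random
import time

# Example browser user-agent strings; replace them with up-to-date values.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]


def pick_headers():
    """Build request headers with a randomly chosen user agent."""
    return {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",
    }


def polite_get(session, url, min_delay=2.0, max_delay=5.0, sleep=time.sleep):
    """Wait a random interval, then send the request with rotated headers."""
    sleep(random.uniform(min_delay, max_delay))
    return session.get(url, headers=pick_headers(), timeout=30)
```

The sleep parameter exists only so the delay can be replaced in tests; in normal use, leave it at its default.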

Using Crawlbase Crawling API for Smooth Redfin Scraping

Crawlbase offers a comprehensive solution for scraping data from Redfin without triggering blocking mechanisms. By leveraging Crawlbase’s Crawling API, you gain access to a pool of residential IP addresses, ensuring smooth and uninterrupted scraping operations. Additionally, Crawlbase handles user-agent rotation and CAPTCHA solving, further enhancing the scraping process.

Crawlbase provides its own Python library for its customers. You just need to replace the requests library with the crawlbase library to send requests. Install it with the pip install crawlbase command. You need an access token to authenticate, which you can obtain after creating an account.

Here’s an example function that uses the Crawling API from the Crawlbase library to send requests.

from crawlbase import CrawlingAPI
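A hedged sketch of such a helper is shown below. It assumes the client's get method returns a dict with "status_code" and "body" keys and that the body may arrive as bytes; check the library documentation to confirm both. The helper name crawl and the token placeholder are illustrative. The client is passed in as an argument so the function can be exercised without network access.

```python
# Typical setup (requires `pip install crawlbase` and an account token):
#   from crawlbase import CrawlingAPI
#   api = CrawlingAPI({"token": "YOUR_CRAWLBASE_TOKEN"})
#   html = crawl(api, property_page_url)


def crawl(api, page_url):
    """Fetch page_url through a CrawlingAPI-like client and return the page text.

    Assumes api.get() returns a dict with "status_code" and "body" keys.
    """
    response = api.get(page_url)
    status = response.get("status_code")
    if status != 200:
        raise RuntimeError(f"Request failed with status {status} for {page_url}")
    body = response["body"]
    # The body may arrive as bytes; decode it so parsers receive text.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

The returned text can be handed to the same parsing functions used with the requests library.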

Note: The first 1,000 Crawling API requests are free of cost, and no credit card is required. See the Crawling API documentation for details.

With our API, you can execute scraping tasks with confidence, knowing that your requests are indistinguishable from genuine user interactions. This approach not only enhances scraping efficiency but also minimizes the risk of being detected and blocked by Redfin’s anti-scraping mechanisms.

Final Thoughts

Scraping data from Redfin can be a valuable tool for various purposes, such as market analysis, property valuation, and real estate monitoring. By using web scraping techniques and tools like the Redfin scraper, people and companies can collect useful information about the real estate market.

However, it’s essential to approach web scraping ethically and responsibly, respecting the terms of service and privacy policies of the websites being scraped. Additionally, considering the potential for IP blocking and other obstacles, it’s wise to use anti-blocking techniques like rotating proxies and changing user-agent strings. One way to tackle these blocking measures is to use the Crawlbase Crawling API.

If you’re interested in learning how to scrape data from other real estate websites, check out our helpful guides below.

📜 How to Scrape Realtor.com

📜 How to Scrape Zillow

📜 How to Scrape Airbnb

📜 How to Scrape Booking.com

📜 How to Scrape Expedia

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy scraping!

Frequently Asked Questions (FAQs)

Q. Can I scrape data from Redfin legally?

Scraping publicly available data from Redfin is possible, but it’s essential to do so responsibly and ethically. Redfin’s terms of service prohibit automated scraping, so review their policies and understand the risk before you start. To reduce the chance of legal issues, consider the following:

- Respect Redfin’s robots.txt file, which outlines the parts of the site that are off-limits to crawlers.

- Scrape only publicly available data and avoid accessing any private or sensitive information.

- Limit the frequency of your requests to avoid overloading Redfin’s servers.

- If possible, obtain explicit permission from Redfin before scraping their site extensively.
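The robots.txt check in the first point can be automated with Python’s built-in urllib.robotparser. The sketch below parses rules from a string so it runs offline; the sample rules are hypothetical and do not reflect Redfin’s actual robots.txt, and the helper name build_robot_checker is illustrative.

```python
from urllib.robotparser import RobotFileParser


def build_robot_checker(robots_txt, user_agent="*"):
    """Return a function that reports whether a URL may be fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    def allowed(url):
        return parser.can_fetch(user_agent, url)

    return allowed


# Hypothetical rules for illustration only.
SAMPLE_RULES = """\
User-agent: *
Disallow: /private/
"""

allowed = build_robot_checker(SAMPLE_RULES)
print(allowed("https://www.redfin.com/private/page"))
print(allowed("https://www.redfin.com/city/1"))
```

For a live check, download the site’s robots.txt first and pass its text to build_robot_checker before queuing any URLs.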

Q. How can I prevent my scraping efforts from being blocked by Redfin?

To prevent your scraping efforts from being blocked by Redfin, you can employ several anti-blocking measures:

- Use rotating residential proxies to avoid detection and prevent IP blocking.

- Use a pool of user-agent strings to mimic human browsing behavior and avoid detection by Redfin’s anti-scraping mechanisms.

- Implement rate-limiting to control the frequency of your requests and avoid triggering Redfin’s automated detection systems.

- Consider using a service like Crawlbase Crawling API, which provides tools and features specifically designed to circumvent blocking measures and ensure smooth scraping operations.

Q. What tools and libraries can I use to scrape data from Redfin?

You can use various tools and libraries to scrape data from Redfin, including:

- Python: Libraries like Requests and BeautifulSoup provide powerful capabilities for sending HTTP requests and parsing HTML content.

- Scrapy: A web crawling and scraping framework that simplifies the process of extracting data from websites.

- Crawlbase: A comprehensive web scraping platform that offers features like rotating proxies, user-agent rotation, and anti-blocking measures specifically designed to facilitate smooth and efficient scraping from Redfin and other websites.

Q. Is web scraping from Redfin worth the effort?

Web scraping from Redfin can be highly valuable for individuals and businesses looking to gather insights into the real estate market. By extracting data on property listings, prices, trends, and more, you can gain valuable information for investment decisions, market analysis, and competitive research. However, it’s essential to approach scraping ethically, respecting the terms of service of the website and ensuring compliance with legal and ethical standards. Additionally, leveraging tools like the Crawlbase Crawling API can help streamline the scraping process and mitigate potential obstacles such as IP blocking and anti-scraping measures.