When it comes to the real estate industry, having access to accurate and up-to-date data can give you a competitive edge. One platform that has become a go-to source for real estate data is Zillow. With its vast database of property listings, market trends, and neighborhood information, Zillow has become a treasure trove of valuable data for homebuyers, sellers, and real estate professionals.

Zillow, boasting impressive site statistics, records millions of visits daily and hosts a staggering number of property listings. With a user-friendly interface and a diverse range of features, Zillow attracts a substantial audience seeking information on real estate trends and property details.

Real estate professionals rely heavily on accurate and comprehensive data to make informed decisions. Whether it’s researching market trends, evaluating property prices, or identifying investment opportunities, having access to reliable data is crucial. But manually extracting data from Zillow can be a tedious and time-consuming task. That’s where data scraping comes into play. Data scraping from Zillow empowers real estate professionals with the ability to collect and analyze large amounts of data quickly and efficiently, saving both time and effort.

Come along as we explore the world of Zillow data scraping using Python. We’ll kick off with a commonly used approach, understand its limitations, and then delve into the efficiency of the Crawlbase Crawling API. Join us on this adventure through the intricacies of web scraping on Zillow!

Table of Contents

- Zillow’s Search Paths

- Key data points available on Zillow

- Installing Python

- Installing essential libraries

- Choosing a suitable Development IDE

- Utilizing Python’s requests library

- Inspect the Zillow Page for CSS selectors

- Parsing HTML with BeautifulSoup

- Drawbacks and challenges of the Common approach

- Crawlbase Registration and API Token

- Accessing the Crawling API with Crawlbase Library

- Scraping Property pages URL from SERP

- Handling pagination for extensive data retrieval

- Scraping Data from Zillow Property Page URLs

- Saving Scraped Zillow Data in a Database

- Advantages of using Crawlbase’s Crawling API for Zillow Scraping

Understanding Zillow Scraping Set-up

Zillow offers a user-friendly interface and a vast database of property listings. With Zillow, you can easily search for properties based on your desired location, price range, and other specific criteria. The platform provides detailed property information, including the number of bedrooms and bathrooms, square footage, and even virtual tours or 3D walkthroughs in some cases.

Moreover, Zillow goes beyond just property listings. It also provides valuable insights into neighborhoods and market trends. You can explore the crime rates, school ratings, and amenities in a particular area to determine if it aligns with your preferences and lifestyle. Zillow’s interactive mapping tools allow you to visualize the proximity of the property to nearby amenities such as schools, parks, and shopping centers.

Zillow’s Search Paths

Zillow offers various search filters, such as price range, property type, number of bedrooms, and more. By using these filters effectively, you can narrow down your search and extract the specific data you need. Zillow's search result page (SERP) URLs are organized into distinct sections based on the kind of listing being queried. Here are the main categories:

- Sale Listings: https://www.zillow.com/{location}/sale/?searchQueryState={...}

- Sold Properties: https://www.zillow.com/{location}/sold/?searchQueryState={...}

- Rental Listings: https://www.zillow.com/{location}/rentals/?searchQueryState={....}

These URLs represent specific sections of Zillow’s database, allowing users to explore properties available for sale, recently sold properties, or rental listings in a particular location.
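To make the URL pattern concrete, here is a minimal sketch that assembles a search URL from a location slug and a listing category. It assumes that searchQueryState carries a percent-encoded JSON object; the slug columbia-heights-washington-dc and the keys inside example_state are illustrative assumptions, so copy the real values from a live Zillow URL in your browser.

```python
import json
from urllib.parse import quote

BASE_URL = "https://www.zillow.com"
CATEGORIES = {"sale", "sold", "rentals"}  # path segments from the list above


def build_search_url(location_slug, category="sale", query_state=None):
    """Return a Zillow search URL for a location slug and listing category."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    url = f"{BASE_URL}/{location_slug}/{category}/"
    if query_state:
        # Assumption: searchQueryState holds a JSON object, so it is
        # serialized compactly and percent-encoded before being appended.
        encoded = quote(json.dumps(query_state, separators=(",", ":")))
        url += f"?searchQueryState={encoded}"
    return url


# Hypothetical filter payload, for illustration only.
example_state = {"pagination": {"currentPage": 2}}
print(build_search_url("columbia-heights-washington-dc", "sale", example_state))
```

Keeping URL construction in one helper means the rest of the scraper only deals with a slug and a category.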

Key Data Points That Can be Scraped on Zillow

When scraping data from Zillow, it’s crucial to identify the key data points that align with your objectives. Zillow provides a vast array of information, ranging from property details to market trends. Some of the essential data points you can extract from Zillow include:

- Property Details: Includes detailed information about the property, such as square footage, the number of bedrooms and bathrooms, and the type of property (e.g., single-family home, condo, apartment).

- Price History: Tracks the historical pricing information for a property, allowing users to analyze price trends and fluctuations over time.

- Zillow Zestimate: Zillow’s proprietary home valuation tool that provides an estimated market value for a property based on various factors. It offers insights into a property’s potential worth.

- Neighborhood Information: Offers data on the neighborhood, including nearby schools, amenities, crime rates, and other relevant details that contribute to a comprehensive understanding of the area.

- Local Market Trends: Provides insights into the local real estate market, showcasing trends such as median home prices, inventory levels, and the average time properties spend on the market.

- Comparable Home Sales: Allows users to compare a property’s details and pricing with similar homes in the area, aiding in market analysis and decision-making.

- Rental Information: For rental properties, Zillow includes details such as monthly rent, lease terms, and amenities, assisting both renters and landlords in making informed choices.

- Property Tax Information: Offers data on property taxes, helping users understand the tax implications associated with a particular property.

- Home Features and Amenities: Lists specific features and amenities available in a property, providing a detailed overview for potential buyers or tenants.

- Interactive Maps: Utilizes maps to display property locations, neighborhood boundaries, and nearby points of interest, enhancing spatial understanding.

Understanding and leveraging these key data points on Zillow is essential for anyone involved in real estate research, whether it be for personal use, investment decisions, or market analysis.

How to Scrape Zillow with Python

Setting up a conducive Python environment is the foundational step for efficient real estate data scraping from Zillow. Here’s a brief guide to getting your Python environment ready:

Installing Python

Begin by installing Python on your machine. Visit the official Python website (https://www.python.org/) to download the latest version compatible with your operating system.

During installation, ensure you check the box that says “Add Python to PATH” to make Python accessible from any command prompt window.

Once Python is installed, open a command prompt or terminal window and verify the installation using the following command:

python --version

Installing Essential Libraries

For web scraping, you’ll need to install essential libraries like requests for making HTTP requests and beautifulsoup4 for parsing HTML. To leverage the Crawlbase Crawling API seamlessly, install the Crawlbase Python library as well. Use the following commands:

pip install requests
pip install beautifulsoup4
pip install crawlbase

Choosing a Suitable Development IDE:

Selecting the right Integrated Development Environment (IDE) can greatly enhance your coding experience. There are several IDEs to choose from; here are a few popular ones:

- PyCharm: A powerful and feature-rich IDE specifically designed for Python development. It offers intelligent code assistance, a visual debugger, and built-in support for web development.

- VSCode (Visual Studio Code): A lightweight yet powerful code editor that supports Python development. It comes with a variety of extensions, making it customizable to your preferences.

- Jupyter Notebook: Ideal for data analysis and visualization tasks. Jupyter provides an interactive environment and is widely used in data science projects.

- Spyder: A MATLAB-like IDE that is well-suited for scientific computing and data analysis. It comes bundled with the Anaconda distribution.

Choose an IDE based on your preferences and the specific requirements of your real estate data scraping project. Ensure the selected IDE supports Python and provides the features you need for efficient coding and debugging.

Create a Zillow Scraper

In this section, we’ll walk through the common approach to creating a Zillow scraper using Python. This method involves using the requests library to fetch web pages and BeautifulSoup for parsing HTML to extract the desired information.

In our example, let’s focus on scraping properties for sale in “Columbia Heights, Washington, DC”. Let’s break down the process into digestible chunks:

Utilizing Python’s Requests library for Zillow web scraping

The requests library allows us to send HTTP requests to Zillow’s servers and retrieve the HTML content of web pages. Here’s a code snippet to make a request to the Zillow website:

import requests
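A minimal sketch of such a request is shown below. The search URL follows the /{location}/sale/ pattern described earlier, with an assumed slug, and the User-Agent value is a placeholder. The http_get parameter is a convenience added for this sketch: it defaults to requests.get but lets you pass a stub when testing without network access.

```python
# Placeholder User-Agent; substitute one that matches your own client.
HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; zillow-scraper-example)"}


def fetch_html(url, http_get=None, timeout=30):
    """Fetch a page and return its HTML text, raising on a non-200 response."""
    if http_get is None:
        import requests  # third-party: pip install requests
        http_get = requests.get
    response = http_get(url, headers=HEADERS, timeout=timeout)
    if response.status_code != 200:
        raise RuntimeError(f"Request failed with status {response.status_code}")
    return response.text


def main():
    # Assumed slug for "Columbia Heights, Washington, DC".
    url = "https://www.zillow.com/columbia-heights-washington-dc/sale/"
    print(fetch_html(url))
```

To run this as a script, add a call to main() at the bottom of the file.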

Open your preferred text editor or IDE, copy the provided code, and save it in a Python file. For example, name it zillow_scraper.py.

Run the Script:

Open your terminal or command prompt and navigate to the directory where you saved zillow_scraper.py. Execute the script using the following command:

python zillow_scraper.py

As you hit Enter, your script will come to life, sending a request to the Zillow website, retrieving the HTML content and displaying it on your terminal.

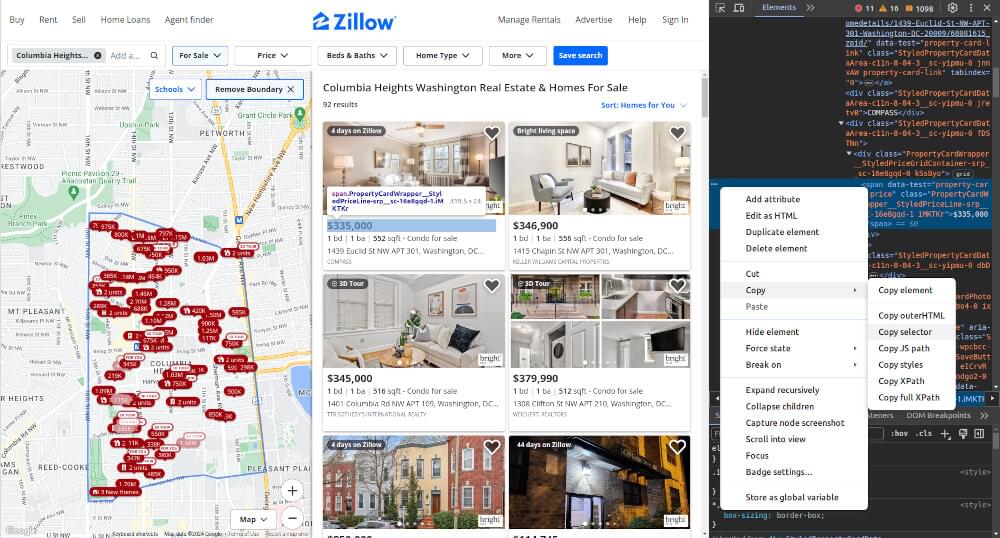

Inspect the Zillow Page for CSS selectors

With the HTML content obtained from the page, the next step is to analyze the webpage and pinpoint the location of data points we need.

- Open Developer Tools: Simply right-click on the webpage in your browser and choose ‘Inspect’ (or ‘Inspect Element’). This will reveal the Developer Tools, allowing you to explore the HTML structure.

- Traverse HTML Elements: Once in the Developer Tools, explore the HTML elements to locate the specific data you want to scrape. Look for unique identifiers, classes, or tags associated with the desired information.

- Pinpoint CSS Selectors: Take note of the CSS selectors that correspond to the elements you’re interested in. These selectors serve as essential markers for your Python script, helping it identify and gather the desired data.

Parsing HTML with BeautifulSoup

Once we’ve fetched the HTML content from Zillow using the requests library and CSS selectors are in our hands, the next step is to parse this content and extract the information we need. This is where BeautifulSoup comes into play, helping us navigate and search the HTML structure effortlessly.

In our example, we’ll grab the web link to each property listed on the chosen Zillow search page. Afterwards, we’ll use these links to extract key details about each property. Now, let’s enhance our existing script to gather this information directly from the HTML.
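The link extraction step can be sketched as follows. The CSS selector here is an assumption about how property cards are marked up, so replace it with whatever you find in Developer Tools on the live page. The sample HTML is made up so the function can be tried offline.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup  # third-party: pip install beautifulsoup4

# Assumed selector; verify it against the live page before relying on it.
PROPERTY_LINK_SELECTOR = (
    'article[data-test="property-card"] a[data-test="property-card-link"]'
)


def extract_property_urls(html, base_url="https://www.zillow.com"):
    """Return the unique property-page URLs found in a search results page."""
    soup = BeautifulSoup(html, "html.parser")
    urls = []
    for link in soup.select(PROPERTY_LINK_SELECTOR):
        href = link.get("href")
        if not href:
            continue
        absolute = urljoin(base_url, href)  # resolve relative links
        if absolute not in urls:  # a card may link to the same page twice
            urls.append(absolute)
    return urls


# Made-up markup that mimics two property cards.
sample_html = """
<article data-test="property-card">
  <a data-test="property-card-link" href="/homedetails/example-1/">Photo</a>
  <a data-test="property-card-link" href="/homedetails/example-1/">Address</a>
</article>
<article data-test="property-card">
  <a data-test="property-card-link"
     href="https://www.zillow.com/homedetails/example-2/">Address</a>
</article>
"""
print(extract_property_urls(sample_html))
```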

import requests

But will the HTML we receive using requests contain the required information? Let’s see the output of the above script:

[

You will observe that the output only captures a portion of the anticipated results. This limitation arises because Zillow utilizes JavaScript/Ajax to dynamically load search results on its SERP page. When you make an HTTP request to the Zillow URL, the HTML response lacks a significant portion of the search results, resulting in the absence of valuable information. The dynamically loaded content is not present in the initial HTML response, making it challenging to retrieve the complete set of data through a static request.

While using Python’s requests library and BeautifulSoup is a straightforward way to scrape Zillow, it comes with drawbacks and challenges such as rate limiting, IP blocking, and an inability to handle dynamically loaded content.

Scrape Zillow with Crawlbase

Now, let’s explore a more advanced and efficient method for Zillow scraping using the Crawlbase Crawling API. This approach offers several advantages over the common method and addresses its limitations. Its parameters allow us to handle various scraping tasks effortlessly.

Here’s a step-by-step guide on harnessing the power of this dedicated API:

Crawlbase Account Creation and API Token Retrieval

Extracting Zillow data through the Crawlbase Crawling API starts with creating an account on the Crawlbase platform. Here are the steps for creating an account and obtaining your API token:

- Visit Crawlbase: Launch your web browser and go to the Signup page on the Crawlbase website to commence your registration.

- Input Your Credentials: Provide your email address and create a secure password for your Crawlbase account. Accuracy in filling in the required details is crucial.

- Verification Steps: Upon submitting your details, check your inbox for a verification email. Complete the steps outlined in the email to verify your account.

- Log into Your Account: Once your account is verified, return to the Crawlbase website and log in using the credentials you established.

- Obtain Your API Token: Accessing the Crawlbase Crawling API necessitates an API token, which you can locate in your account documentation.

Quick Note: Crawlbase offers two types of tokens – one tailored for static websites and another designed for dynamic or JavaScript-driven websites. Since our focus is on scraping Zillow, we will utilize the JS token. As an added perk, Crawlbase extends an initial allowance of 1,000 free requests for the Crawling API, making it an optimal choice for our web scraping endeavor.

Accessing the Crawling API with Crawlbase Library

The Crawlbase library in Python facilitates seamless interaction with the API, allowing you to integrate it into your Zillow scraping project effortlessly. The provided code snippet demonstrates how to initialize and use the Crawling API through the Crawlbase Python library.

from crawlbase import CrawlingAPI

Detailed documentation of the Crawling API is available on the Crawlbase platform. The Crawlbase Python library has its own documentation, which includes additional usage examples.
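As a rough sketch of this initialization and a single request, the helpers below create a client and return the decoded page body. They assume the library returns a dictionary with status_code and body keys, which is how we understand its response format; confirm this against the library documentation. The token string is a placeholder, and the helper names make_api and fetch_with_crawlbase are ours, not part of the library.

```python
def make_api(token):
    """Create a Crawling API client (requires: pip install crawlbase)."""
    from crawlbase import CrawlingAPI  # imported here so the helper below can be tested with a stub
    return CrawlingAPI({"token": token})


def fetch_with_crawlbase(api, url, options=None):
    """Return the decoded page body, or raise if the crawl did not succeed."""
    response = api.get(url, options or {})
    # Assumed response shape: {"status_code": int, "body": bytes, ...}
    if response["status_code"] != 200:
        raise RuntimeError(f"Crawl failed with status {response['status_code']}")
    body = response["body"]
    return body.decode("utf-8") if isinstance(body, bytes) else body


def main():
    api = make_api("YOUR_CRAWLBASE_JS_TOKEN")  # placeholder token
    # Assumed slug for "Columbia Heights, Washington, DC".
    url = "https://www.zillow.com/columbia-heights-washington-dc/sale/"
    print(fetch_with_crawlbase(api, url))
```

Passing the client in as an argument keeps the fetch logic independent of how the token is stored.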

Scraping Property Pages URL from SERP

To extract all the URLs of property pages from Zillow’s SERP, we’ll enhance our common script by bringing in the Crawling API. Zillow, like many modern websites, employs dynamic elements that load asynchronously through JavaScript. We’ll incorporate the ajax_wait and page_wait parameters to ensure our script captures all relevant property URLs.
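As a small illustration of these two parameters, the helper below builds the options dictionary that is passed as the second argument to the Crawling API's get call. It assumes page_wait is expressed in milliseconds and that ajax_wait is sent as the string "true" or "false"; check both against the Crawlbase documentation. The helper name js_render_options is ours.

```python
def js_render_options(page_wait_ms=5000, ajax_wait=True, **extra):
    """Build Crawling API options that give JavaScript content time to load."""
    if page_wait_ms < 0:
        raise ValueError("page_wait_ms must be non-negative")
    options = {
        "ajax_wait": "true" if ajax_wait else "false",  # wait for AJAX calls
        "page_wait": page_wait_ms,  # extra delay before the HTML is captured
    }
    options.update(extra)  # any further API parameters, e.g. country
    return options


print(js_render_options())
print(js_render_options(page_wait_ms=8000, country="US"))
```

A longer page_wait gives the results more time to render but makes each request slower, so start small and increase it only if listings are missing.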

from crawlbase import CrawlingAPI

Example Output:

[

Handling Pagination for Extensive Data Retrieval

To ensure comprehensive data retrieval from Zillow, we need to address pagination. Zillow organizes search results across multiple pages, each identified by a page number in the URL. Zillow employs the {pageNo}_p path parameter for pagination management. Let’s modify our existing script to handle pagination and collect property URLs from multiple pages.

from crawlbase import CrawlingAPI

The first function, fetch_html, is designed to retrieve the HTML content of a given URL using an API, with the option to specify parameters. It incorporates a retry mechanism, attempting the request up to a specified number of times (default is 2) in case of errors or timeouts. The function returns the decoded HTML content if the server responds with a success status (HTTP 200), and if not, it raises an exception with details about the response status.

The second function, get_property_urls, aims to collect property URLs from multiple pages on a specified website. It first fetches the HTML content of the initial page to determine the total number of available pages. Then, it iterates through the pages, fetching and parsing the HTML to extract property URLs. The maximum number of pages to scrape is determined by the minimum of the total available pages and the specified maximum pages parameter. The function returns a list of property URLs collected from the specified number of pages.
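The two functions described above can be sketched as follows. This is one possible shape under stated assumptions, not the exact listing. The api object is anything with a get(url, options) method that returns a dictionary with status_code and body. The extract_urls and count_pages callables are hypothetical hooks that you supply to parse a page's HTML, and page 1 is assumed to be the base URL without a {pageNo}_p segment.

```python
import time


def fetch_html(api, url, options=None, max_retries=2, delay=1.0):
    """Fetch a page through the API client, retrying on errors or non-200 replies."""
    last_error = None
    for attempt in range(1, max_retries + 1):
        try:
            response = api.get(url, options or {})
            if response["status_code"] == 200:
                return response["body"].decode("utf-8")
            last_error = RuntimeError(f"status {response['status_code']}")
        except Exception as exc:  # e.g. network errors or timeouts
            last_error = exc
        if attempt < max_retries:
            time.sleep(delay)  # brief pause before the next attempt
    raise RuntimeError(
        f"Failed to fetch {url} after {max_retries} attempts: {last_error}"
    ) from last_error


def page_url(base_url, page_no):
    """Build the URL for a results page using the '{pageNo}_p' path segment."""
    if page_no == 1:
        return base_url
    return f"{base_url.rstrip('/')}/{page_no}_p/"


def get_property_urls(api, base_url, extract_urls, count_pages,
                      max_pages=1, options=None):
    """Collect property URLs from up to max_pages result pages."""
    first_html = fetch_html(api, base_url, options)
    # Never request more pages than the site reports or the caller allows.
    last_page = min(count_pages(first_html), max_pages)
    urls = list(extract_urls(first_html))
    for page_no in range(2, last_page + 1):
        html = fetch_html(api, page_url(base_url, page_no), options)
        urls.extend(extract_urls(html))
    return urls
```

Because the first page is fetched once and reused for both the page count and its own listings, a run over N pages costs exactly N requests when no retries are needed.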

Scraping Data from Zillow Property Page URLs

Now that we have a comprehensive list of property page URLs, the next step is to extract the necessary data from each property page. Let’s enhance our script to navigate through these URLs and gather relevant details such as property type, address, price, size, bedrooms & bathrooms count, and other essential data points.
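One way to structure this step is a table of field names mapped to CSS selectors, applied to each property page in turn. Every selector below is a hypothetical placeholder, as is the sample markup; replace them with the selectors you find when inspecting a real property page. The fetch argument is any callable that takes a URL and returns that page's HTML.

```python
from bs4 import BeautifulSoup  # third-party: pip install beautifulsoup4

# Hypothetical selectors; replace each with one taken from the live page.
FIELD_SELECTORS = {
    "address": "h1.address",
    "price": "span.price",
    "bedrooms": "span.beds",
    "bathrooms": "span.baths",
}


def parse_property(html, url, selectors=None):
    """Extract one record from a property page; missing fields become None."""
    soup = BeautifulSoup(html, "html.parser")
    record = {"url": url}
    for field, selector in (selectors or FIELD_SELECTORS).items():
        element = soup.select_one(selector)
        record[field] = element.get_text(strip=True) if element else None
    return record


def scrape_properties_data(urls, fetch, selectors=None):
    """Fetch each property page with fetch(url) and parse it into a record."""
    return [parse_property(fetch(url), url, selectors) for url in urls]


# Made-up markup used only to exercise the parser offline.
sample_html = """
<h1 class="address">123 Example St NW, Washington, DC</h1>
<span class="price">$500,000</span>
<span class="beds">2</span>
"""
print(parse_property(sample_html, "https://www.zillow.com/homedetails/example-1/"))
```

Returning None for a missing field, rather than raising, lets a long crawl continue when a single page lacks one of the data points.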

from crawlbase import CrawlingAPI

This script introduces the scrape_properties_data function, which retrieves the HTML content from each property page URL and extracts details we need. Adjust the data points based on your requirements, and further processing can be performed as needed.

Example Output:

[

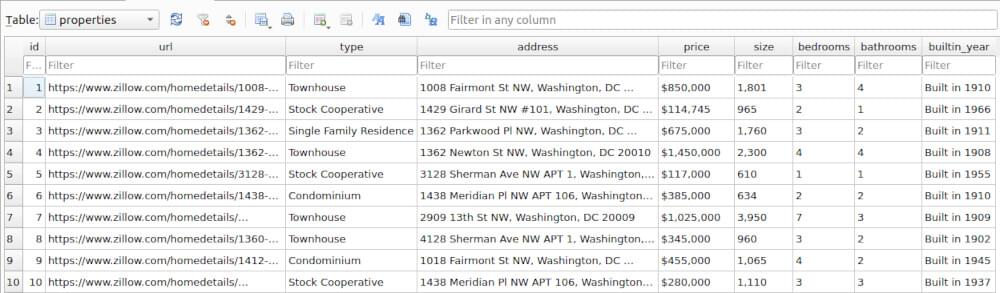

Saving Scraped Zillow Data in a Database

Once you’ve successfully extracted the desired data from Zillow property pages, it’s a good practice to store this information systematically. One effective way is by utilizing a SQLite database to organize and manage your scraped real estate data. Below is an enhanced version of the script to integrate SQLite functionality and save the scraped data:

from crawlbase import CrawlingAPI

This script introduces two functions: initialize_database to set up the SQLite database table, and insert_into_database to insert each property’s data into the database. The SQLite database file (zillow_properties_data.db) will be created in the script’s directory. Adjust the table structure and insertion logic based on your specific data points.
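A minimal sketch of these two functions, using Python's built-in sqlite3 module, might look like this. The column list is an assumption based on the data points mentioned earlier, and the sketch makes url unique so that re-scraping a property replaces its row instead of duplicating it. The demo at the bottom uses an in-memory database so that running it leaves no file behind.

```python
import sqlite3

# Assumed columns; align these with the fields your scraper actually extracts.
COLUMNS = ("url", "property_type", "address", "price", "size",
           "bedrooms", "bathrooms")


def initialize_database(db_path="zillow_properties_data.db"):
    """Open the database and create the properties table if it is missing."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS properties (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            url TEXT UNIQUE,
            property_type TEXT,
            address TEXT,
            price TEXT,
            size TEXT,
            bedrooms TEXT,
            bathrooms TEXT
        )
        """
    )
    conn.commit()
    return conn


def insert_into_database(conn, property_data):
    """Insert one property record, replacing any existing row with the same URL."""
    values = [property_data.get(column) for column in COLUMNS]
    placeholders = ", ".join("?" for _ in COLUMNS)
    with conn:  # commits on success, rolls back on error
        conn.execute(
            f"INSERT OR REPLACE INTO properties ({', '.join(COLUMNS)}) "
            f"VALUES ({placeholders})",
            values,
        )


demo = initialize_database(":memory:")
insert_into_database(demo, {"url": "https://www.zillow.com/homedetails/example-1/",
                            "price": "$500,000"})
print(demo.execute("SELECT url, price FROM properties").fetchall())
```

Values are bound through ? placeholders rather than string formatting, so scraped text containing quotes cannot break the SQL statement.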

properties Table Snapshot:

Advantages of using Crawlbase’s Crawling API for Zillow Scraping

Scraping real estate data from Zillow becomes more efficient with Crawlbase’s Crawling API. Here’s why it stands out:

- Efficient Dynamic Content Handling: Crawlbase’s API adeptly manages dynamic content on Zillow, ensuring your scraper captures all relevant data, even with delays or dynamic changes.

- Minimized IP Blocking Risk: Crawlbase reduces the risk of IP blocking by allowing you to switch IP addresses, enhancing the success rate of your Zillow scraping project.

- Tailored Scraping Settings: Customize API requests with settings like user_agent, format, and country for adaptable and efficient scraping based on specific needs.

- Pagination Made Simple: Crawlbase simplifies pagination handling with parameters like ajax_wait and page_wait, ensuring seamless navigation through Zillow’s pages for extensive data retrieval.

- Tor Network Support: For added privacy, Crawlbase supports the Tor network via the tor_network parameter, enabling secure scraping of onion websites.

- Asynchronous Crawling: The API supports asynchronous crawling with the async parameter, enhancing the efficiency of large-scale Zillow scraping tasks.

- Autoparsing for Data Extraction: Use the autoparse parameter for simplified data extraction in JSON format, reducing post-processing efforts.

In summary, Crawlbase’s Crawling API streamlines Zillow scraping with efficiency and adaptability, making it a robust choice for real estate data extraction projects.

Potential Use Cases of Zillow Real Estate Data

Identifying Market Trends: Zillow data allows real estate professionals to identify market trends, such as price fluctuations, demand patterns, and popular neighborhoods. This insight aids in making informed decisions regarding property investments and sales strategies.

Property Valuation and Comparisons: Analyzing Zillow data enables professionals to assess property values and make accurate comparisons. This information is crucial for determining competitive pricing, understanding market competitiveness, and advising clients on realistic property valuations.

Targeted Marketing Strategies: By delving into Zillow data, real estate professionals can tailor their marketing strategies. They can target specific demographics, create effective advertising campaigns, and reach potential clients who are actively searching for properties matching certain criteria.

Investment Opportunities: Zillow data provides insights into potential investment opportunities. Real estate professionals can identify areas with high growth potential, emerging trends, and lucrative opportunities for property development or investment.

Client Consultations and Recommendations: Armed with comprehensive Zillow data, professionals can provide clients with accurate and up-to-date information during consultations. This enhances the credibility of recommendations and empowers clients to make well-informed decisions.

Final Thoughts

In the world of real estate data scraping from Zillow, simplicity and effectiveness play a vital role. While the common approach may serve its purpose, the Crawlbase Crawling API emerges as a smarter choice. Say goodbye to challenges and embrace a streamlined, reliable, and scalable solution with the Crawlbase Crawling API for Zillow scraping.

For those eager to explore data scraping from various platforms, feel free to dive into our comprehensive guides:

📜 How to Scrape Amazon

📜 How to Scrape Airbnb Prices

📜 How to Scrape Booking.com

📜 How to Scrape Expedia

Happy scraping! If you encounter any hurdles or need guidance, our dedicated team is here to support you on your journey through the realm of real estate data.

Frequently Asked Questions (FAQs)

Q1: Can you Scrape Zillow?

Web scraping is a complex legal area. While Zillow’s terms of service generally allow browsing, systematic data extraction may be subject to restrictions. It is advisable to review Zillow’s terms and conditions, including the robots.txt file. Always respect the website’s policies and consider the ethical implications of web scraping.

Q2: Can I use scraped Zillow data for commercial purposes?

The use of scraped data, especially for commercial purposes, depends on Zillow’s policies. It is important to carefully review and adhere to Zillow’s terms of service, including any guidelines related to data usage and copyright. Seeking legal advice is recommended if you plan to use the scraped data commercially.

Q3: Are there any limitations to using the Crawlbase Crawling API for Zillow scraping?

While the Crawlbase Crawling API is a robust tool, users should be aware of certain limitations. These may include rate limits imposed by the API, policies related to API usage, and potential adjustments needed due to changes in the structure of the target website. It is advisable to refer to the Crawlbase documentation for comprehensive information on API limitations.

Q4: How can I handle dynamic content on Zillow using the Crawlbase Crawling API?

The Crawlbase Crawling API provides mechanisms to handle dynamic content. Parameters such as ajax_wait and page_wait are essential tools for ensuring the API captures all relevant content, even if the web pages undergo dynamic changes during the scraping process. Adjusting these parameters based on the website’s behavior helps in effective content retrieval.