When it comes to Amazon SERP scraping, you have plenty of tools at your disposal. Next.js stands out among them: its server-side rendering and file-based routing make it a strong choice for building a scraper with its own web interface.

In this blog, we’ll provide you with a step-by-step guide to creating your very own web-based Amazon SERP scraper. With an easy-to-use user interface and the combined power of Next.js and Crawlbase, we’ll help you steer clear of common web scraping challenges like IP bans and CAPTCHAs.

We have built a ready-to-use Amazon SERP scraper to meet your business needs. You can try it now.


Table of Contents

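I. The Relevance of Crawlbase in SERP Scraping

II. Understanding Next.js

III. Project Scope

IV. Prerequisites

V. Next.js Project Setup

VI. Scraping Amazon SERP Page using Crawlbase

VII. Mapping Product List: Creating Essential Frontend Components

VIII. Taking Your Next.js Project Live: Deployment Essentials

IX. Final Thoughts

X. Frequently Asked Questions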

I. The Relevance of Crawlbase in SERP Scraping

By scraping Amazon’s SERPs, you can find out what products are popular, keep an eye on what your competitors are charging, and make your own product listings better so more people can see them.

That said, extracting valuable data from Amazon’s SERPs is a tough task due to various challenges and limitations. Amazon uses techniques like CAPTCHAs and dynamic data loading to hinder scraping. This is where Crawlbase steps in.

Crawlbase, a web crawling and scraping platform, is built for these Amazon SERP challenges. It streamlines data extraction by handling CAPTCHAs, proxy rotation, and content parsing for you. With Crawlbase, scraping becomes more efficient and reliable, ensuring a smoother path to obtaining valuable Amazon data.

However, even with Crawlbase in your corner, your scraping workflow benefits from Next.js, a versatile web framework. But what does Next.js really bring to the table? Let’s discuss it further.

II. Understanding Next.js

Next.js is a framework that empowers developers to construct full-stack web applications using React. With React for building user interfaces and Next.js for additional features and optimizations, it’s a dynamic combination.

Under the hood, Next.js takes care of the nitty-gritty technical details. It automatically handles tasks like bundling and compiling your code, freeing you from the hassle of extensive configuration. This allows you to focus on the creative aspect of building your web application.

Whether you’re an individual developer or part of a larger team, Next.js is a valuable asset. It enables you to create interactive, dynamic, and fast React applications, making it a fitting choice for web scraping tasks where you need efficient data extraction and the creation of user-friendly interfaces.

Simply put, Next.js provides developers with the essential tools and proven methods needed to create up-to-date web applications. When combined with Crawlbase, it forms a strong foundation for web development and is an excellent option for challenging web scraping tasks, such as extracting Amazon search results.

III. Project Scope

In this project, we aim to build an Amazon SERP scraper. To accomplish this, we will do the following:

1. Build an Amazon SERP Scraper: We will create a custom scraper to extract data from Amazon’s SERP. It will be designed for easy and efficient use.

2. Utilize Crawlbase’s Crawling API: To tackle common web scraping challenges, we’ll make full use of Crawlbase’s Crawling API. The Crawling API utilizes rotating proxies and advanced algorithms to bypass IP bans and CAPTCHAs.

3. Optimize Efficiency with Next.js: Next.js will be our go-to framework for streamlining the web scraping process. Its features, such as server-side rendering, will boost efficiency.

4. Prioritize User-Friendliness: We’ll ensure that the tool offers an intuitive user interface, making the web scraping process straightforward for all users.

5. Overcome Common Challenges: Our goal is to handle typical web scraping hurdles effectively. Next.js and Crawlbase will be instrumental in this process.

Upon project completion, you’ll have the knowledge and tools to engage in web scraping confidently, making Amazon data accessible in a user-friendly and efficient way.

IV. Prerequisites

Before we head into the steps to build our own Amazon SERP scraper with Next.js and Crawlbase, there are a few prerequisites and environment setup steps to take care of. Here’s what you’ll need:

1. Node.js Installed, Version 18.17 or Later: To run our project successfully, make sure Node.js 18.17 or later is installed on your system; the latest release also works. Node.js is the runtime that will execute our JavaScript code.

2. Basic Knowledge of ReactJS: Familiarity with ReactJS is a plus. While we’ll guide you through the project step by step, having a foundational understanding of React will help you grasp the concepts more easily.

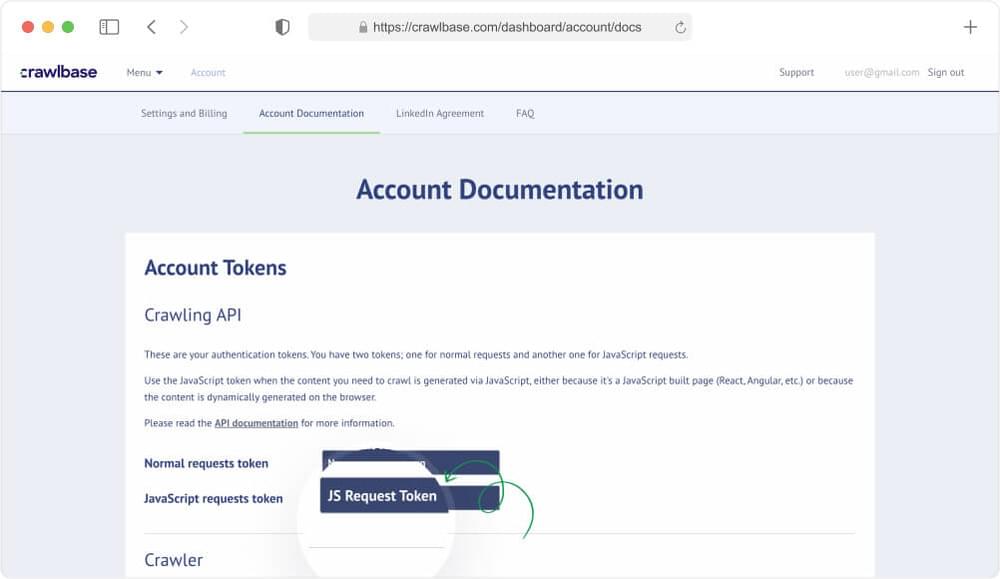

3. Crawlbase JavaScript Token: To fully leverage Crawlbase’s Crawling API, you’ll need access to a Crawlbase Token. In the context of our project, we’ll be using the JavaScript token. This specific token enables the API to utilize Crawlbase’s headless browser infrastructure for rendering JavaScript content. If you don’t have one, you can obtain it from the account documentation.
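The prerequisites above can be checked with a small preflight script before you start. This is only a sketch: the `CRAWLBASE_JS_TOKEN` environment variable name and both helper functions are assumptions made for illustration, not something Crawlbase or Next.js requires.

```javascript
// Preflight check: a minimal sketch, not part of the original project.
// Assumption: the token is exposed through a hypothetical CRAWLBASE_JS_TOKEN env var.

const MIN_NODE = [18, 17, 0];

// Returns true when `version` (e.g. "20.11.1" or "v18.17.0") is >= `min`.
function meetsMinimumNode(version, min = MIN_NODE) {
  const parts = version.replace(/^v/, '').split('.').map(Number);
  for (let i = 0; i < min.length; i += 1) {
    const current = parts[i] || 0;
    if (current > min[i]) return true;
    if (current < min[i]) return false;
  }
  return true; // every component is equal to the minimum
}

// Collects human-readable problems instead of exiting, so the caller decides what to do.
function checkPrerequisites(nodeVersion, env) {
  const problems = [];
  if (!meetsMinimumNode(nodeVersion)) {
    problems.push(`Node.js 18.17 or later is required, found ${nodeVersion}`);
  }
  if (!env.CRAWLBASE_JS_TOKEN) {
    problems.push('CRAWLBASE_JS_TOKEN is not set');
  }
  return problems;
}

const problems = checkPrerequisites(process.versions.node, process.env);
problems.forEach((problem) => console.warn(problem));
```

Keeping the token in an environment variable rather than in the source file is one common way to avoid committing it to a repository.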

V. Next.js Project Setup

With our web scraping foundation firmly established, it’s now time to get our Next.js project up and running. This is where the magic happens, allowing us to create the pages we need for scraping in a highly efficient manner.

Thanks to Next.js Server-Side Rendering (SSR), these pages can be rendered directly on the server, making them ideal for our web scraping efforts.

To kickstart our Next.js project, you can simply execute a straightforward command. Here’s how to do it:

Step 1: Open your terminal or command prompt.

Step 2: Run the following command:

    npx create-next-app@latest

This command will swiftly set up the boilerplate for our Next.js project, providing us with a solid foundation to begin our web scraping journey.

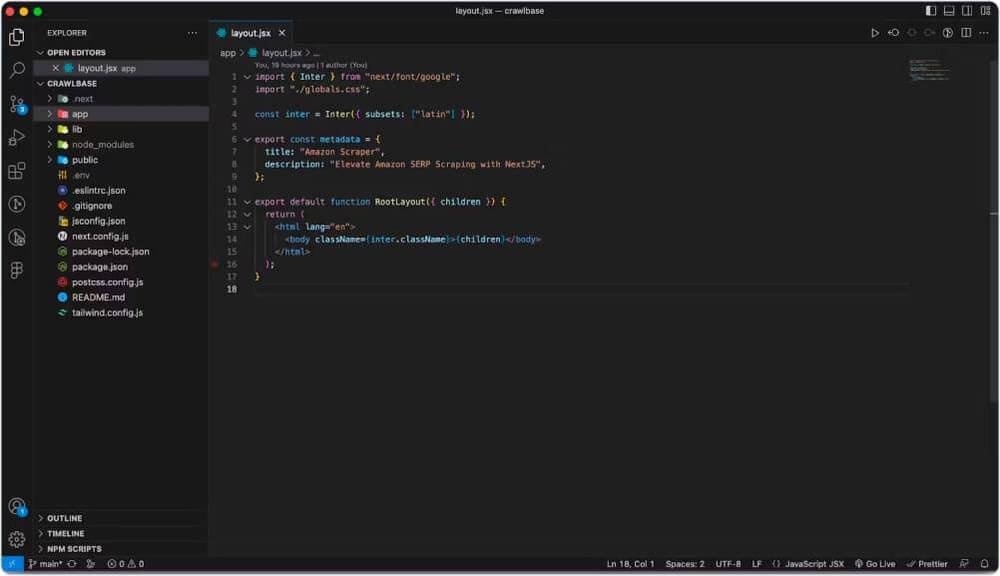

After initiating the Next.js project, answer the setup prompts to match this tutorial. We’ll opt for JavaScript instead of TypeScript and skip the ESLint configuration. However, make sure you select “Yes” for Tailwind, as we’re using Tailwind CSS to style our frontend.

By customizing the project configuration to your needs, you can tailor it to the precise goals of your web scraping project. With these selections, you’ll receive a boilerplate that aligns perfectly with our Next.js-based web scraper.

Now, we need to install the Crawlbase Node.js package, which will enable us to easily integrate the Crawling API into our Next.js code and fetch the scraped products from Amazon’s Search Engine Results Page (SERP). To do this, run the following command to install the package:

    npm i crawlbase

This command ensures that we have Crawlbase integrated into our project, ready to help us with our web scraping tasks.

Next, let’s run our Next.js project to see it in action. Execute the following command:

    npm run dev

This command starts the development server and serves the application with its default layout at localhost:3000.

VI. Scraping Amazon SERP Page using Crawlbase

Finally, we are ready to scrape Amazon SERP results. Our objective is to configure Crawlbase to extract valuable data from Amazon’s web pages. This will include essential information like product listings and prices.

Let’s create an index.js file inside the “actions” folder. This file will serve as the command center for executing the Crawlbase Crawling API to fetch scraped products. Copy the code below and save it as actions/index.js:

    'use server';

In this code, we’re setting the stage for our Amazon SERP scraper. We import the necessary modules and create an instance of the Crawling API with your Crawlbase JavaScript token.

The scrapeAmazonProducts function is the star of the show. It takes a product string as input and uses it to make a request to Amazon’s SERP. The response, containing valuable product data, is then processed and returned.
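Since the listing above shows only its first line, here is a rough sketch of the logic that paragraph describes. It is not the original code. The Amazon search URL format, the `amazon-serp` scraper option, and the `json.body.products` response shape are assumptions, and the API client is passed in as a parameter so the sketch runs without a token or network access.

```javascript
// A sketch of the server action's logic with the API client injected.
// Assumptions (not confirmed by the truncated listing): the Amazon search URL
// format, the 'amazon-serp' scraper option, and the response shape.

// Builds the Amazon search URL for a query, encoding spaces and symbols.
function buildSearchUrl(product) {
  return `https://www.amazon.com/s?k=${encodeURIComponent(product.trim())}`;
}

// `client` is any object with an async get(url, options) method. In the
// project this would be `new CrawlingAPI({ token })` from the crawlbase package.
async function scrapeAmazonProducts(product, client) {
  if (!product || !product.trim()) {
    throw new Error('A non-empty search term is required');
  }
  const response = await client.get(buildSearchUrl(product), {
    scraper: 'amazon-serp',
  });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  // Hypothetical response shape: parsed products under json.body.products.
  const products = response.json?.body?.products;
  return Array.isArray(products) ? products : [];
}

// Stub client for trying the function without a token or network access.
const stubClient = {
  async get(url, options) {
    return {
      statusCode: 200,
      json: { body: { products: [{ name: 'Sample', url, scraper: options.scraper }] } },
    };
  },
};

scrapeAmazonProducts('iPhone 15 pro max', stubClient).then((products) => {
  console.log(products[0].url);
});
```

In the actual actions/index.js, the file would start with the `'use server'` directive and export the function, so that the request and the token stay on the server.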

This is just the first part of our project.

Next, we have to map the code to our frontend project.

Head to the next section of the blog to find out how.

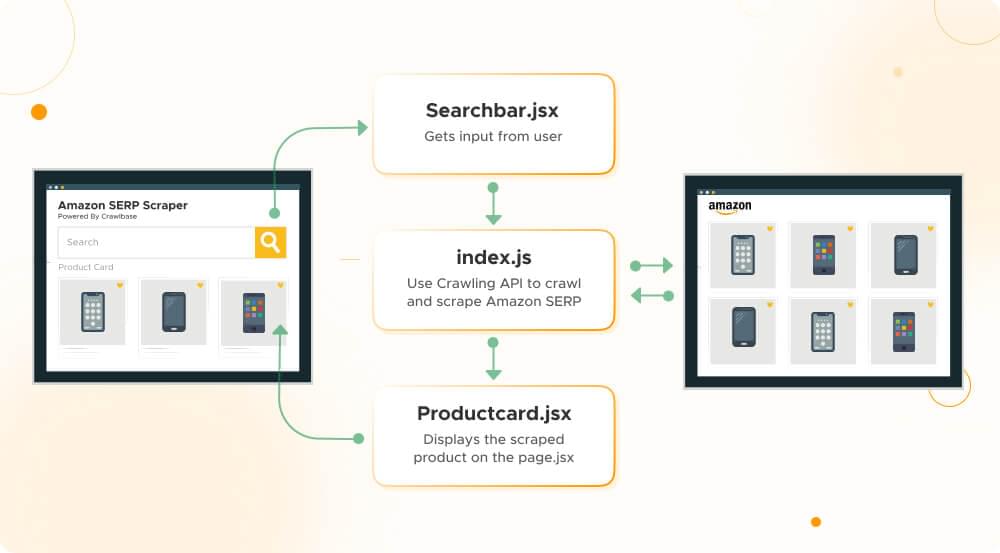

VII. Mapping Product List: Creating Essential Frontend Components

After successfully fetching data from the Amazon SERP, the next step is to map and present this data on the frontend. This involves organizing and structuring the data to make it more accessible for your project. You should consider transforming the data into JSON objects or other data structures that fit your project’s needs.
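One way to do this mapping is a small normalization step that runs before the data reaches the components. The sketch below is illustrative only: every field name (`name`, `price`, `image`, `url`, `category`, `customerReviews`) is an assumption about what the scraper returns, so adjust them to the actual response.

```javascript
// Normalizes one raw product entry into the shape the UI expects.
// All field names here are illustrative assumptions about the scraper output.
function normalizeProduct(raw) {
  return {
    name: String(raw.name ?? 'Untitled product').trim(),
    price: raw.price ?? 'Price unavailable',
    image: raw.image ?? '',
    url: raw.url ?? '#',
    category: raw.category ?? 'Uncategorized',
    customerReviews: raw.customerReviews ?? 'No reviews',
  };
}

// Drops entries that are not objects, then normalizes the rest.
function mapProducts(rawProducts) {
  if (!Array.isArray(rawProducts)) return [];
  return rawProducts
    .filter((item) => item !== null && typeof item === 'object')
    .map(normalizeProduct);
}

const sample = mapProducts([
  { name: '  Phone case ', price: '$9.99', url: 'https://www.amazon.com/dp/EXAMPLE' },
  null,
]);
console.log(JSON.stringify(sample, null, 2));
```

Filling in defaults at this stage means the card component never has to guard against missing fields.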

In this section of the blog, we’ll create three important files to make it all happen:

First: ProductCard.jsx

Under the components folder, we’ll save the code below in a file named ProductCard.jsx. This component will play a crucial role in presenting individual product details, including images, prices, categories, and customer reviews.

components/ProductCard.jsx

    import React from 'react';

Explanation:

- This code defines the structure of the `ProductCard` component, which is responsible for rendering product information.

- The component accepts a `product` object as a prop, containing data like image, price, category, and customer reviews.

- It creates a visual representation of the product with features like an image, title, price, category, and customer reviews.

- The product’s name is made into a clickable link, allowing users to access more details by opening the product’s Amazon page in a new tab.

By creating this component, we’re establishing the foundation for presenting Amazon product listings in a user-friendly and organized manner on the frontend of our web scraping project.

Second: Searchbar.jsx

Another essential component to be saved under the components folder is Searchbar.jsx. This component will handle the search functionality, allowing users to enter keywords of their product queries.

components/Searchbar.jsx

    'use client';

Explanation:

- The code defines a React functional component named `Searchbar` that renders a search input field and a search button. This component is intended to be a part of the user interface.

- The `'use client'` directive marks this as a client-side component, which is typical for frontend components that respond to user input.

- The component accepts several props:

  - `handleSubmit`: A function to handle the form submission.
  - `searchPrompt`: The current value of the search input.
  - `setSearchPrompt`: A function to update the search input value.
  - `isLoading`: A boolean indicating whether a search is in progress.

- Within the component’s `return` block, it renders an HTML `form` element that triggers the `handleSubmit` function when submitted.

- Inside the form, there is:

  - A `label` element for accessibility with an icon that’s visually hidden but conveys the purpose of the input field.

  - A search input field that:

    - Displays the current search value (`searchPrompt`).
    - Calls `setSearchPrompt` to update the search value when changed.
    - Disables spellcheck.
    - Has various styles for appearance, border, and focus states.

  - A search `button`:

    - It’s conditionally disabled when `isLoading` is `true`.
    - Has styling for its appearance and behavior (colors, focus effects, etc.).
    - Displays “Searching…” when `isLoading` is `true`, and “Search” otherwise.

This component is a fundamental part of the user interface for initiating a search operation, and it communicates with the rest of the application through the provided props.

Third: page.jsx

In the “app” folder of your project (create it if it does not already exist), save the code below as “page.jsx”. If create-next-app already generated a default page file there, replace it. This is where we’ll bring everything together, utilizing the ProductCard and Searchbar components to create a seamless and interactive user experience.

The main state for the search component resides here, and we will pass all the necessary methods from this component to its children.

app/page.jsx

    'use client';

Explanation:

- This code defines a React functional component named `Home`, which represents the main page of your application.

- `'use client'` indicates that this component runs on the client side, which is typical for frontend components that hold state and handle user input.

- It imports other components and modules, including `Searchbar`, `ProductCard`, `useState` from React, and `scrapeAmazonProducts` from the `actions` module.

- The component’s state is managed using the `useState` hook. It initializes state variables such as `searchPrompt`, `isLoading`, `fetchProducts`, and `isError`.

- The `handleSubmit` function is an asynchronous function that is executed when the form is submitted. It’s responsible for handling the search operation:

  - It sets `isLoading` to `true` to indicate that a search is in progress.
  - Clears any previous search results by setting `fetchProducts` to an empty array and resetting `isError` to `false`.
  - Calls the `scrapeAmazonProducts` function, which fetches the data from Amazon, with the current `searchPrompt`.
  - If the operation is successful, it updates `fetchProducts` with the retrieved data and resets `isError` to `false`.
  - If an error occurs during the operation, it sets `isError` to `true`, clears the search results, and logs the error to the console.
  - Finally, it sets `isLoading` to `false` when the search operation is complete.

- The `return` block contains the JSX code for rendering the component. It includes:

  - A container div with various styles and classes.
  - A navigation section with a title that mentions “Amazon Scraper” and a link to “Crawlbase.”
  - A `Searchbar` component, which renders the search form.
  - A section for displaying the scraped products, with conditional rendering based on the length of `fetchProducts`.
  - In case of an error, it displays an error message.
  - A grid for displaying product cards using the `ProductCard` component. The product cards are mapped over the `fetchProducts` array.
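The `handleSubmit` flow described above can be sketched without React as a plain function whose state setters are passed in. This is an illustration rather than the original page.jsx: the `createHandleSubmit` and `getSearchPrompt` names are hypothetical, and the `preventDefault()` call is an assumption about how the form submission is handled.

```javascript
// A framework-free sketch of the handleSubmit flow described above.
// The setters stand in for the React state setters returned by useState;
// `scrape` stands in for scrapeAmazonProducts.
function createHandleSubmit({ scrape, getSearchPrompt, setIsLoading, setFetchProducts, setIsError }) {
  return async function handleSubmit(event) {
    // Assumption: stop the browser from reloading the page on form submission.
    if (event && typeof event.preventDefault === 'function') event.preventDefault();

    setIsLoading(true); // a search is now in progress
    setFetchProducts([]); // clear previous results
    setIsError(false);
    try {
      const products = await scrape(getSearchPrompt());
      setFetchProducts(products);
      setIsError(false);
    } catch (error) {
      setIsError(true);
      setFetchProducts([]);
      console.error(error);
    } finally {
      setIsLoading(false); // runs on success and on failure
    }
  };
}

// Minimal in-memory state to exercise the handler outside React.
const state = { isLoading: false, fetchProducts: [], isError: false };
const handleSubmit = createHandleSubmit({
  scrape: async (query) => [{ name: `Result for ${query}` }],
  getSearchPrompt: () => 'iPhone 15 pro max',
  setIsLoading: (value) => { state.isLoading = value; },
  setFetchProducts: (value) => { state.fetchProducts = value; },
  setIsError: (value) => { state.isError = value; },
});

handleSubmit({ preventDefault() {} }).then(() => console.log(state));
```

Resetting `isLoading` in the `finally` branch keeps the search button from staying disabled when a request fails.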

Code Execution

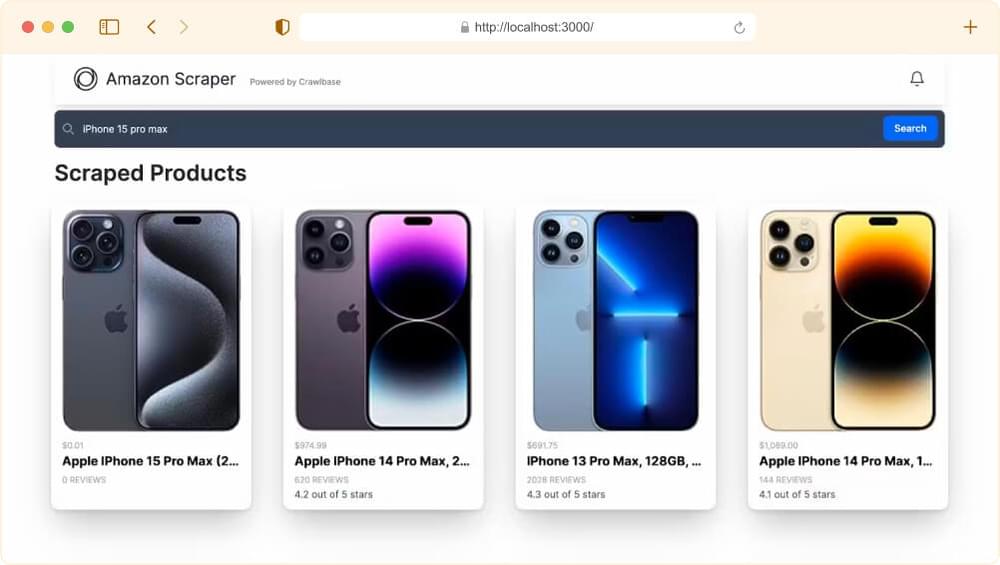

Now, it’s time to put our web scraper project into action. To run it, you can either execute the command below or refresh the localhost:3000 window in your browser to view the results:

    npm run dev

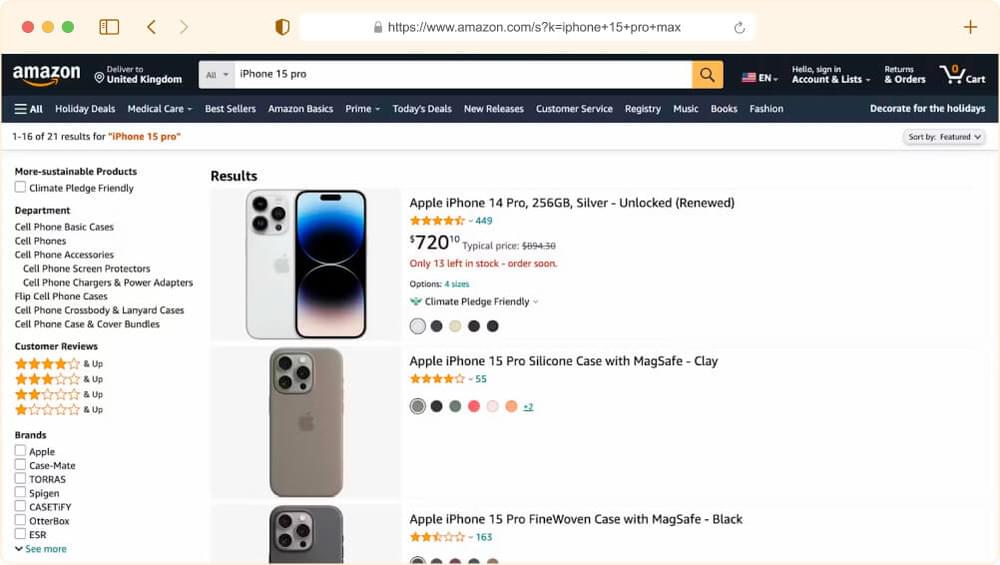

Let’s conduct a test search. For instance, let’s look up ‘iPhone 15 pro max’ and see what the scraper brings back.

VIII. Taking Your Next.js Project Live: Deployment Essentials

When it’s time to share your Next.js project with the world, deploying it from your local development environment to a live website accessible anywhere is the next crucial step. You’ll typically need to follow these steps:

1. Choose a Hosting Provider: Select a web hosting provider where you can deploy your Next.js application. Common choices include Vercel, Netlify, AWS, and many others. The choice depends on your project’s requirements and your familiarity with the platform.

2. Set Up an Account: Create an account on your chosen hosting platform if you still need to do so.

3. Prepare Your Next.js App:

- Make sure your project is fully functional and working as expected on your local machine (`localhost:3000`).

- Ensure all necessary dependencies and scripts are properly defined in your project’s `package.json`.

4. Initialize a Repository: Initialize a version control system (e.g., Git) for your project if you haven’t already. Create a .gitignore file to exclude unnecessary files (such as node_modules and the .next build output), then commit your changes.

5. Deploy to Hosting Provider:

- Different hosting providers have different deployment methods, but they often involve linking your hosting account to your Git repository.

- Push your code to a Git repository (GitHub, GitLab, Bitbucket, etc.) if you’re not already using one. Make sure the repository is public or accessible to the hosting provider.

- Link your hosting account to your Git repository, and configure deployment settings (e.g., specifying the build command).

- Trigger the deployment process.

6. Build Process: During deployment, the hosting platform will typically run the build process to compile your Next.js app into a production-ready form. This might include transpilation, bundling, and optimization.

7. Domain Configuration: If you have a custom domain, configure it to point to your deployed application. This involves setting up DNS records.

8. Monitor Deployment: Keep an eye on the deployment process via the hosting provider’s dashboard. Check for any errors or warnings during the build process.

9. Testing: Once the deployment is complete, test your live application on the provided URL to ensure everything works as expected.

10. Scaling: Depending on your hosting provider and your app’s requirements, you can scale your application to handle more traffic if needed.

Remember that the exact steps and processes can vary depending on your chosen hosting provider. Check their documentation for detailed instructions on how to deploy a Next.js application.

This transition from local development to a global online presence is a pivotal moment in sharing your Next.js project with users anywhere.

IX. Final Thoughts

As we conclude, remember that web scraping is always evolving. Businesses need dependable, efficient, and ethical ways to collect data. Next.js and Crawlbase are leading this change, offering the tools to access the growing world of web data.

Let’s recap the key takeaways from this blog and see how this transformative combination can elevate your web scraping projects.

Key Takeaways:

- Next.js, a Game-Changer: We’ve learned that Next.js is not just transforming web development; it’s revolutionizing web scraping as well. Its server-side rendering (SSR) capability makes it ideal for scraping data, and its dynamic nature offers the flexibility you need.

- Crawlbase’s Power: By integrating Crawlbase’s Crawling API with Next.js, we’ve harnessed a headless browser infrastructure that mitigates common web scraping challenges like IP bans and CAPTCHAs. This opens up opportunities for more extensive data extraction and analysis.

Benefits of Next.js and Crawlbase:

The benefits are clear. Using Next.js and Crawlbase for Amazon SERP scraping offers:

- Efficiency: With Next.js, you can render pages on the server, ensuring faster data retrieval. Crawlbase provides a diverse range of features and rotating proxies that mimic human behavior, making your web scraping smoother and more efficient.

- Reliability: Both Next.js and Crawlbase bring reliability to your web scraping projects. The combination allows you to access real-time data without worrying about IP bans or CAPTCHAs, ensuring you can rely on the results for data-driven decisions.

Exploration Awaits:

Now that you’ve witnessed the power of Next.js and Crawlbase in action, it’s time for you to explore these tools for your web scraping projects. If you want to learn more about scraping data from Amazon, you can find helpful tutorials in the links below:

How to Scrape Amazon Buy Box Data

How to Scrape Amazon Best Sellers

How to Scrape Amazon PPC AD Data

We also have a wide collection of similar tutorials for scraping data from other E-commerce websites, such as Walmart, eBay, and AliExpress.

If you have any questions or need help, please feel free to contact us. Our support team will be happy to assist.

X. Frequently Asked Questions

Q: Can I use this method to scrape other e-commerce websites, not just Amazon?

A: Yes, the techniques discussed in this blog can be adapted for scraping other e-commerce websites with similar structures and challenges, like eBay and AliExpress. You may need to adjust your scraping strategy and tools to match the specific site’s requirements.

Q: What are the potential risks of web scraping, and how can I mitigate them?

A: Web scraping comes with potential risks, including legal consequences, the threat of IP bans, and the possibility of disrupting the target website’s functionality. Therefore, it’s crucial to follow ethical practices and use reliable scrapers like Crawlbase.

Crawlbase helps mitigate the risk of IP bans by employing a vast pool of rotating residential proxies and a smart system that acts like a person when browsing a site. This helps us follow the website’s rules while collecting data anonymously and efficiently. It’s also important to limit how fast we collect data to avoid putting too much load on the website’s server and keep our web scraping efforts in good shape.
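As a rough illustration of limiting request speed, the sketch below runs a list of async tasks one at a time with a fixed pause between them. The helper names and the 200 ms delay are arbitrary choices for the example, not a Crawlbase recommendation.

```javascript
// Runs async tasks one at a time with a fixed pause between them.
// A minimal sketch of request pacing; the delay value is an arbitrary example.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

async function runWithDelay(tasks, delayMs) {
  const results = [];
  for (let i = 0; i < tasks.length; i += 1) {
    if (i > 0) await sleep(delayMs); // pause between requests, not before the first
    results.push(await tasks[i]());
  }
  return results;
}

// Example: three placeholder "searches" spaced 200 ms apart.
const queries = ['laptop', 'headphones', 'monitor'];
const tasks = queries.map((query) => async () => `scraped: ${query}`);

runWithDelay(tasks, 200).then((results) => console.log(results));
```

In a real project, each task would wrap a call to the scraping function instead of returning a placeholder string.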

Q: Are there any alternatives to Next.js and the Crawling API for Amazon SERP scraping?

A: Yes, several alternatives exist, such as Python-based web scraping libraries like BeautifulSoup and Scrapy. The Crawling API itself is also not tied to Next.js: it is a subscription-based service focused on delivering scraped data, so it can be called from other stacks as well.