To compete with the top sellers on Amazon, it helps to know what is selling well right now. With Crawlbase’s Amazon Best Sellers scraper and this guide, you can collect Best Sellers data such as product names, rankings, and prices, and use it to understand why those products lead their categories.


Table of Contents

- Why scrape Amazon Best Sellers?

- Understanding the Amazon Best Sellers Page

  - Exploring the Structure of the Page

  - Identifying the Data to Scrape

- Setting Up Your Development Environment

  - Installing Python

  - Installing Necessary Python Libraries

- Scrape Amazon Best Sellers with Crawlbase Crawling API

  - Creating a Python Script File

  - Creating a Crawlbase Account

  - Fetching HTML using the Crawling API

  - Crawlbase “amazon-best-sellers” scraper

- Best Practices and Ethical Considerations

  - Legal and Ethical Aspects

  - Respectful Scraping Practices

  - Scraping Frequency and Volume

- Real-World Applications and Use Cases with Amazon Best Seller Scraper

  - Price Monitoring

  - Market Research

  - Competitive Analysis

- Final Words

- Frequently Asked Questions

Why scrape Amazon Best Sellers?

Scraping Amazon Best Sellers offers several valuable insights and benefits, making it a compelling choice for various purposes. Here are some reasons why you might want to scrape Amazon Best Sellers:

Market Research: Amazon’s Best Sellers list provides a real-time snapshot of what products are currently popular and in demand. By scraping this data, you can gain valuable market insights, identify trends, and understand consumer preferences.

Competitive Analysis: Tracking the best-selling products in your niche or industry can help you monitor your competitors. By regularly scraping Amazon Best Sellers, you can keep an eye on which products are performing well and adapt your own strategies accordingly.

Product Selection: If you’re an e-commerce entrepreneur or considering launching a new product, scraping Amazon Best Sellers can help you identify potentially lucrative product categories or niches. It can inform your product selection and business decisions.

Pricing Strategies: Knowing which products are popular and how their prices fluctuate over time can assist in optimizing your pricing strategies. You can adjust your pricing to remain competitive or maximize profits.

Content Creation: If you run a content-driven website or blog, the Best Sellers data can be a valuable source of information for creating content that resonates with your audience. You can write product reviews, buying guides, or curated lists based on the most popular products.

E-commerce Optimization: If you’re an Amazon seller, scraping Best Sellers data can help you fine-tune your product listings, keywords, and marketing efforts. You can also identify potential complementary products to upsell or cross-sell.

Product Availability: Tracking best-selling products can help you stay informed about product availability. This is especially relevant during peak shopping seasons like the holidays when popular items may quickly go out of stock.

Educational and Research Purposes: Web scraping projects, including scraping Amazon Best Sellers, can serve as a valuable learning experience for Python developers and data enthusiasts. It provides an opportunity to apply web scraping techniques in a real-world context.

Data for Analytics: The scraped data can be used for in-depth data analysis, visualization, and modeling. It can help you uncover patterns and correlations within the e-commerce landscape.

Decision-Making: The insights gathered from Amazon Best Sellers can inform critical business decisions, including inventory management, marketing strategies, and diversification of product offerings.

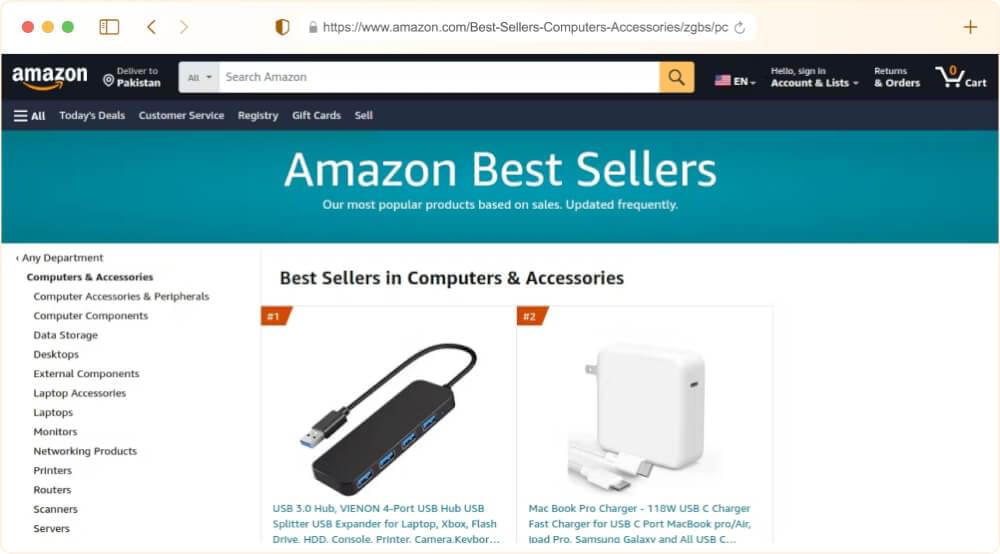

Understanding the Amazon Best Sellers Page

Amazon’s Best Sellers page is a rich source of valuable data, but before you can scrape it effectively, it’s important to understand its structure and identify the specific data you want to extract. This section will guide you through this process.

Exploring the Structure of the Page

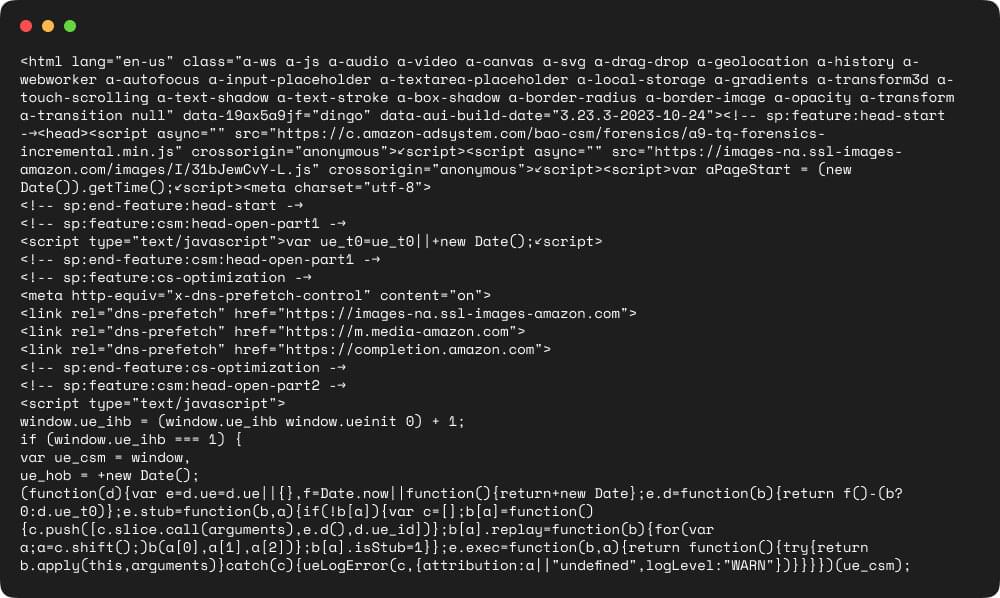

To scrape the Amazon Best Sellers page, you need to familiarize yourself with the page’s HTML structure. This understanding is crucial for locating and extracting the desired information. Here’s what you should do:

- View Page Source: Right-click on the webpage and select “View Page Source” or “Inspect” in your web browser. This will open the HTML source code of the page.

- Navigate Through Elements: In the HTML source code, explore the elements that make up the page. Look for patterns, classes, and IDs that define the various sections and data points. Pay attention to the hierarchy of elements to identify key structures.

- Identify Data Containers: Locate the HTML elements that enclose the Best Sellers data. These containers typically include product titles, rankings, prices, and other relevant details.

- Pagination Elements: If the Best Sellers page has multiple pages, find the elements that allow you to navigate between them. Understanding how pagination works is important for scraping data from multiple pages.

Identifying the Data to Scrape

Once you’ve familiarized yourself with the page’s structure, you can pinpoint the specific data elements you want to scrape. Consider the following steps:

- Rankings and Titles: Determine how product rankings and titles are structured. Look for HTML elements, classes, or tags that contain this information. For example, product titles are often found within `<a>` tags or specific `<div>` elements.

- Price and Seller Information: Identify the HTML elements that hold the price, seller information, and availability status. Prices are typically enclosed within `<span>` or `<div>` elements with specific classes.

- Product Categories: Find the elements that indicate the category or department to which each product belongs. Categories can provide valuable context to the Best Sellers data.

- Reviews and Ratings: Locate the elements that display product reviews, ratings, and customer feedback. These are often found in specific `<div>` or `<span>` elements.

- Additional Data: Depending on your specific use case, you may want to scrape additional information such as product images, product descriptions, or customer reviews. Identify the relevant elements for these data points. If you work with product images, it is important that they focus on the product itself, ensuring clarity and professionalism. By using a background remover, you can eliminate distractions and highlight the product, making it more appealing to potential customers.

Understanding the structure and data placement within the Amazon Best Sellers page is essential before you proceed with web scraping. It ensures that your scraping script can accurately target and extract the information you need. In the next sections, we will delve into the actual Python code and libraries used to scrape this data effectively.
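As a rough illustration of the inspection steps above, the sketch below pulls a rank, title, and price out of a small hand-written HTML fragment using Python’s built-in `html.parser` module. The class names (`item`, `rank`, `title`, `price`) and the sample markup are hypothetical; Amazon’s real markup differs and changes over time, so treat them as placeholders for whatever you find when inspecting the page.

```python
from html.parser import HTMLParser

# Hypothetical markup: these class names are placeholders, not Amazon's real ones.
SAMPLE_HTML = """
<div class="item"><span class="rank">#1</span>
  <a class="title" href="/dp/EXAMPLE1">Example Laptop Stand</a>
  <span class="price">$19.99</span></div>
<div class="item"><span class="rank">#2</span>
  <a class="title" href="/dp/EXAMPLE2">Example USB Hub</a>
  <span class="price">$24.50</span></div>
"""

FIELDS = {"rank", "title", "price"}


class BestSellerParser(HTMLParser):
    """Collects one dict per product from elements whose class is in FIELDS."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field whose text we expect next

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "item" in classes:
            self.products.append({})  # a new product container starts here
        for name in classes:
            if name in FIELDS and self.products:
                self._field = name

    def handle_data(self, data):
        # Store the first non-empty text that follows a field's opening tag.
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()
            self._field = None


def parse_products(html):
    parser = BestSellerParser()
    parser.feed(html)
    return parser.products


if __name__ == "__main__":
    for product in parse_products(SAMPLE_HTML):
        print(product)
```

The parser only tracks class names, which keeps the sketch short. A real page usually needs more robust selectors, and a dedicated parsing library may be a better fit.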

Setting Up Your Development Environment

Setting up a proper development environment is the first step in your journey to scrape Amazon Best Sellers using Python. This section will guide you through the necessary steps.

Installing Python

Python is a popular programming language for web scraping. If you don’t already have Python installed, follow these steps:

- Visit the Python website: Go to the official Python website at python.org.

- Choose the Python version: Download the latest version of Python, which is typically recommended. Ensure that you choose a version compatible with your operating system (Windows, macOS, or Linux).

- Install Python: Run the installer you downloaded and follow the installation instructions. Make sure to check the option to add Python to your system’s PATH, as it simplifies command-line usage.

- Verify installation: Open your terminal or command prompt and enter `python --version` or `python3 --version` to confirm that Python is installed correctly.

Installing Necessary Python Libraries

You’ll need specific Python libraries to perform web scraping effectively. Install these libraries using Python’s package manager, pip. Open your terminal or command prompt and run these commands:

Crawlbase: Crawlbase has a Python library designed to simplify web crawling tasks by acting as a convenient wrapper for the Crawlbase API. This lightweight library is intended to streamline the process of accessing and utilizing the features provided by the Crawlbase API, making it easier for developers to incorporate web crawling and data extraction into their Python applications.

To get started with Crawlbase, you can install it using the following command:

    pip install crawlbase

Other libraries: Depending on your specific project requirements, you may need additional libraries for tasks like data storage, data analysis, or automation. Install them as needed.

Scrape Amazon Best Sellers with Crawlbase Crawling API

Creating a Python Script File

You can generate a Python script file named "amazon-scraper.py" by executing the following command:

    touch amazon-scraper.py

This command will generate an empty Python script file with the name “amazon-scraper.py” in your present directory. After creating this file, you can open and modify it to compose your Python code for scraping Amazon pages.

Creating a Crawlbase Account

To utilize the Crawlbase Crawling API, it’s essential to have a Crawlbase account. If you don’t already have one, you can create an account by following these straightforward steps:

- Start by opening the Crawlbase sign-up page to create a new Crawlbase account.

- Fill in the necessary information, which includes your name, email address, and a secure password of your choice.

- To verify your email address, check your inbox for a verification link, and click on it. This step confirms your email and account.

- Once your email is successfully verified, you can access your Crawlbase dashboard, where you’ll be able to manage your API access, monitor your crawling activities and obtain your private token.

Fetching HTML using the Crawling API

After obtaining your API credentials, installing the Python library, and creating your “amazon-scraper.py” file, the next step is to select the Amazon Best Sellers page you intend to scrape. This example uses the Amazon Best Sellers in Computers & Accessories page. It presents a wide array of elements for extraction and showcases an up-to-date list of the most popular computer and accessory products on Amazon, which makes it a good starting point for your web scraping project.

To set up the Crawlbase Crawling API, you need to define the required parameters and endpoint. Make sure you have already created the “amazon-scraper.py” file, as described in the previous section. Then copy the script below into this file and run it in your terminal with the command `python amazon-scraper.py`.

    from crawlbase import CrawlingAPI

The provided script illustrates how to use Crawlbase’s Crawling API to access and extract data from an Amazon Best Sellers page. It configures the API token, specifies the URL of interest, and sends a GET request. When you execute this code, the unprocessed HTML content of the designated Amazon page is printed to the console.
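The flow described here (configure the token, pick the URL, send a GET request, print the HTML) can be sketched roughly as follows. This is a minimal sketch, not the original script. It assumes that `CrawlingAPI` accepts a `{"token": ...}` dictionary and that `api.get(url)` returns a dictionary with `status_code` and `body` keys. The `fetch_html` helper, the `CRAWLBASE_TOKEN` environment variable, and the exact Best Sellers URL are illustrative assumptions.

```python
import os


def fetch_html(api, url):
    """Return the page HTML as text, or None if the request did not succeed.

    `api` is expected to behave like crawlbase.CrawlingAPI: api.get(url)
    returns a dict with 'status_code' and 'body' keys (assumption).
    """
    response = api.get(url)
    if response["status_code"] != 200:
        print(f"Request failed with status {response['status_code']}")
        return None
    body = response["body"]
    # The body may arrive as bytes; decode it so it prints as text.
    if isinstance(body, bytes):
        body = body.decode("utf-8", errors="replace")
    return body


def main(token):
    # Imported here so fetch_html can be tried without the package installed.
    from crawlbase import CrawlingAPI

    api = CrawlingAPI({"token": token})
    # Assumed URL for Best Sellers in Computers & Accessories.
    url = "https://www.amazon.com/Best-Sellers-Computers-Accessories/zgbs/pc"
    html = fetch_html(api, url)
    if html is not None:
        print(html)


if __name__ == "__main__":
    token = os.environ.get("CRAWLBASE_TOKEN")  # hypothetical variable name
    if token:
        main(token)
    else:
        print("Set CRAWLBASE_TOKEN to your Crawlbase token before running.")
```

Reading the token from an environment variable keeps it out of the script file. Pasting the token directly into the `CrawlingAPI` call works too, if you prefer.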

Crawlbase “amazon-best-sellers” scraper

In the previous example, we learned how to obtain the basic structure of an Amazon Best Sellers page, which essentially provides us with the HTML content of the page. However, there are situations where we’re not interested in the raw HTML data but rather in extracting specific and important information from the page. Fortunately, Crawlbase’s Crawling API includes a built-in Amazon scraper called “amazon-best-sellers”. It is designed to extract valuable content from Amazon Best Sellers pages.

To enable this functionality when using the Crawling API in Python, include a “scraper” parameter with the value “amazon-best-sellers” in your code. With this parameter, the API returns the pertinent page content in JSON format. These adjustments are made in the existing file, “amazon-scraper.py”. Let’s review the following example for a better understanding:

    from crawlbase import CrawlingAPI

JSON Response:

    {
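A minimal sketch of the scraper request described in this section, under the same assumptions as before: `api.get(url, options)` accepts an options dictionary and returns `status_code` and `body`. The `fetch_best_sellers` helper and the `CRAWLBASE_TOKEN` variable are illustrative names. The sketch does not assume any particular field names in the JSON; it only decodes and pretty-prints whatever comes back.

```python
import json
import os


def fetch_best_sellers(api, url):
    """Request the page through the 'amazon-best-sellers' scraper and parse the JSON.

    Returns the decoded JSON as Python objects, or None if the request failed.
    """
    response = api.get(url, {"scraper": "amazon-best-sellers"})
    if response["status_code"] != 200:
        print(f"Request failed with status {response['status_code']}")
        return None
    body = response["body"]
    if isinstance(body, bytes):
        body = body.decode("utf-8", errors="replace")
    return json.loads(body)


def main(token):
    # Imported here so the helper can be tried without the package installed.
    from crawlbase import CrawlingAPI

    api = CrawlingAPI({"token": token})
    # Assumed URL for Best Sellers in Computers & Accessories.
    url = "https://www.amazon.com/Best-Sellers-Computers-Accessories/zgbs/pc"
    data = fetch_best_sellers(api, url)
    if data is not None:
        print(json.dumps(data, indent=2))


if __name__ == "__main__":
    token = os.environ.get("CRAWLBASE_TOKEN")  # hypothetical variable name
    if token:
        main(token)
    else:
        print("Set CRAWLBASE_TOKEN to your Crawlbase token before running.")
```

Once you have seen the real response for your page, you can index into the decoded dictionary to pick out the fields you need.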

Best Practices and Ethical Considerations

Scraping Amazon Best Sellers data or any other website comes with certain responsibilities and ethical considerations. Adhering to best practices ensures that you operate within the bounds of the law and maintain respect for the websites you scrape. In this section, we will explore the legal, ethical, and practical aspects of web scraping.

Legal and Ethical Aspects

When scraping Amazon Best Sellers or any website, it’s crucial to consider the legal and ethical implications. Here are some key aspects to keep in mind:

- Respect Terms of Service: Always review and comply with Amazon’s Terms of Service and any relevant legal regulations regarding web scraping. Violating these terms could lead to legal consequences.

- Robots.txt: Check if Amazon’s robots.txt file specifies any rules for web crawlers. Respect the rules outlined in this file. Some websites may explicitly disallow web scraping in certain areas.

- Data Usage: Be mindful of how you intend to use the scraped data. Ensure that your use complies with copyright, data protection, and privacy laws. Avoid data misuse, such as spamming or unauthorized reselling.
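The robots.txt check mentioned above can be automated with Python’s standard `urllib.robotparser` module. The sketch below parses a small, made-up robots.txt so it runs offline; the rules, the `my-scraper` user agent, and the example URLs are hypothetical and do not reflect Amazon’s actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_text):
    """Create a parser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def is_allowed(parser, user_agent, url):
    """Return True if the rules permit `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(SAMPLE_ROBOTS)
    for url in ("https://example.com/bestsellers", "https://example.com/private/data"):
        print(url, "->", is_allowed(rules, "my-scraper", url))
```

To check a live site instead, call `set_url()` with the site’s robots.txt address and then `read()` on the `RobotFileParser`, rather than passing in sample text.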

Respectful Scraping Practices

To maintain ethical web scraping practices and minimize disruption to websites, follow these guidelines:

- User-Agent Header: Set an appropriate User-Agent header in your HTTP requests. A descriptive user agent identifies your scraper to site operators, while a user agent that resembles a typical web browser is less likely to be rejected outright.

- Respect Robots.txt: If Amazon’s robots.txt file permits web crawling, respect the rules and crawl delay specified. Don’t scrape pages disallowed in robots.txt.

- Avoid Overloading Servers: Limit the frequency and volume of your requests to Amazon’s servers. Excessive scraping can strain the website’s resources and lead to temporary or permanent IP bans.

- Use a Proxy Pool: Employ a rotating proxy pool to distribute your requests across multiple IP addresses. This helps prevent IP bans and allows for more extensive scraping.

- Handle Errors Gracefully: Build error-handling mechanisms into your scraping script to deal with temporary connectivity issues and server errors. This prevents unnecessary website strain.
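One common way to handle errors gracefully is to retry with exponential backoff, so a temporary failure does not turn into a burst of repeated requests. The sketch below is a generic pattern, not part of the Crawlbase library. The `fetch` argument is any function you supply that takes a URL and raises `ConnectionError` or `TimeoutError` on temporary failures.

```python
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it succeeds or the attempts run out.

    Waits base_delay, then 2x, then 4x, ... seconds between attempts.
    """
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except (ConnectionError, TimeoutError) as error:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller see the error
            delay = base_delay * (2 ** attempt)
            print(f"Attempt {attempt + 1} failed ({error}); retrying in {delay:.1f}s")
            sleep(delay)
```

The `sleep` parameter exists so the waiting can be replaced in tests. In normal use you can leave it at its default.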

Scraping Frequency and Volume

The frequency and volume of your scraping activities play a significant role in your ethical and practical approach:

- Scraping Frequency: Avoid making requests at high frequencies, especially on websites like Amazon. Frequent requests can lead to your IP address being temporarily or permanently blocked.

- Batch Scraping: Instead of scraping continuously, schedule scraping sessions during off-peak hours. This reduces the impact on Amazon’s servers and minimizes disruption for other users.

- Data Retention: Only store and retain the data you need for your intended purpose. Discard unnecessary data promptly and responsibly.

- Regular Maintenance: Monitor and maintain your scraping script to adapt to changes in Amazon’s website structure or anti-scraping measures. Regularly review and update your code to ensure it remains effective.
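To keep request frequency under control, a script can enforce a minimum interval between consecutive requests. The `Throttle` class below is a hypothetical helper written for this guide, not a library API, and the five-second interval is an arbitrary example value.

```python
import time


class Throttle:
    """Enforces a minimum interval (in seconds) between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


if __name__ == "__main__":
    throttle = Throttle(min_interval=5.0)  # example value: one request every 5s
    for page in ("page-1", "page-2"):
        throttle.wait()
        print("would request", page)
```

Calling `wait()` right before each request is enough. The first call returns immediately, and later calls pause only when requests would otherwise come too close together.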

Adhering to legal and ethical principles and respecting the rules and guidelines set by websites like Amazon is essential for responsible web scraping. It helps maintain a positive online ecosystem and ensures the longevity of your web scraping projects.

Real-World Applications and Use Cases with Amazon Best Seller Scraper

Web scraping Amazon Best Sellers data can be applied in various real-world scenarios to gain insights and make informed decisions. Here are some practical use cases:

Price Monitoring

- Dynamic Pricing Strategy: E-commerce businesses can scrape Amazon Best Sellers to monitor price changes and adjust their own pricing strategies in real-time. By tracking price fluctuations of popular products, businesses can stay competitive and maximize profits.

- Price Comparison: Consumers can use scraped data to compare prices across different sellers and platforms, ensuring they get the best deals on in-demand products.
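As a small illustration of price monitoring, the sketch below compares two price snapshots and reports which products changed and by what percentage. The product IDs and prices are made-up sample data. In practice, each snapshot would come from a separate scraping run.

```python
def price_changes(previous, current):
    """Compare two {product_id: price} snapshots.

    Returns {product_id: (old_price, new_price, percent_change)} for products
    present in both snapshots whose price changed.
    """
    changes = {}
    for product_id, new_price in current.items():
        old_price = previous.get(product_id)
        # Skip new products, unchanged prices, and zero prices (no valid ratio).
        if not old_price or old_price == new_price:
            continue
        percent = round((new_price - old_price) / old_price * 100, 2)
        changes[product_id] = (old_price, new_price, percent)
    return changes


if __name__ == "__main__":
    # Made-up sample snapshots.
    yesterday = {"A1": 20.00, "B2": 50.00, "C3": 10.00}
    today = {"A1": 18.00, "B2": 50.00, "D4": 5.00}
    for product_id, (old, new, pct) in price_changes(yesterday, today).items():
        print(f"{product_id}: {old} -> {new} ({pct:+}%)")
```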

Market Research

- Trend Analysis: Marketers and researchers can analyze Amazon Best Sellers data to identify emerging trends and consumer preferences. This information can guide product development and marketing campaigns.

- Product Launch Insights: Individuals planning to launch new products can assess the Best Sellers data to determine market demand and potentially underserved niches.

- Geographic Insights: By analyzing regional Best Sellers data, businesses can tailor their offerings to specific geographic markets and understand regional buying habits.
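For trend analysis, one simple signal is how each product’s rank moves between two scraping runs. The sketch below assumes each snapshot is a list of product names ordered by rank; the names are made up.

```python
def rank_movements(previous, current):
    """Compare two rank-ordered lists of product names (index 0 is rank 1).

    Returns {name: movement} for every product in `current`, where a positive
    number means the product climbed that many places, a negative number means
    it dropped, and "new" means it was not in the previous list.
    """
    old_rank = {name: index + 1 for index, name in enumerate(previous)}
    movements = {}
    for index, name in enumerate(current):
        new = index + 1
        old = old_rank.get(name)
        movements[name] = "new" if old is None else old - new
    return movements


if __name__ == "__main__":
    last_week = ["Example Mouse", "Example Keyboard", "Example Webcam"]
    this_week = ["Example Keyboard", "Example Mouse", "Example Headset"]
    print(rank_movements(last_week, this_week))
```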

Competitive Analysis

- Competitor Benchmarking: Businesses can monitor the Best Sellers data of their competitors to gain insights into their strategies and product performance. This information can inform their own decision-making.

- Product Line Expansion: Scraping Amazon Best Sellers can help businesses identify gaps in their product lines and discover new opportunities for expansion based on best-selling categories and trends.

- Market Share Analysis: By comparing their product sales to the Best Sellers data, companies can gauge their market share and identify areas for growth or improvement.

Final Words

That brings us to the end of another tutorial on scraping Amazon Best Sellers in Python. For more guides like this one, check out the links below:

📜 How to Scrape Amazon Reviews

📜 How to Scrape Amazon Search Pages

📜 How to Scrape Amazon Product Data

We also have guides on scraping Amazon prices, Amazon PPC ads, Amazon ASINs, Amazon reviews, Amazon images, and Amazon data in Ruby.

In addition, our extensive tutorial library covers a wide range of similar guides for other e-commerce platforms, providing insights into scraping product data from Walmart, eBay, AliExpress, and more.

If you’ve got any questions or need a hand, just drop us a line. We’re right here to help! 😊

Frequently Asked Questions

Is it legal to scrape Amazon?

The legality of scraping Amazon depends on factors like terms of service, copyright, data privacy, and the purpose. Violating Amazon’s terms can lead to legal action. Scraping public data may be more acceptable, but it’s still complex. Consider jurisdiction-specific laws, respect robots.txt, and consult a legal expert. Be cautious, as scraping for commercial or competitive purposes can pose legal risks.

How does Amazon detect scraping?

Amazon uses various methods to detect scraping activities on its website:

Rate Limiting: Amazon monitors the rate at which requests are made to their servers. Unusually high request frequencies from a single IP address or user agent may trigger suspicion.

CAPTCHA Challenges: Amazon may present CAPTCHA challenges to users who exhibit scraping-like behavior. Scrapers often struggle to solve these challenges, while regular users can.

User Behavior Analysis: Amazon analyzes user behavior patterns, including click patterns, session durations, and navigation paths. Deviations from normal user behavior may raise red flags.

API Usage: If you’re using Amazon’s official APIs, they can monitor usage and detect unusual or excessive API requests.

Session Data: Amazon may analyze session data, such as cookies and session tokens, to identify automated scripts.

IP Blocking: Amazon can block IP addresses that exhibit scraping behavior, making it difficult for scrapers to access their site.

User-Agent Analysis: Amazon can scrutinize the User-Agent header in HTTP requests to spot non-standard or suspicious user agents.

It’s important to note that Amazon continually evolves its methods for detecting and preventing scraping, so scrapers must adapt and be cautious to avoid detection.

Can I scrape Amazon best sellers for any product category?

You can scrape Amazon Best Sellers for many product categories, as Amazon provides Best Sellers lists for various departments and subcategories. However, the availability and organization of Best Sellers may vary. Some categories may have more detailed subcategories with their own Best Sellers lists. Keep in mind that Amazon may apply rate limits or restrictions, and scraping large amounts of data may be subject to legal and ethical considerations. It’s essential to review Amazon’s terms of service, adhere to their policies, and respect their guidelines when scraping data from specific categories.

How can you use Amazon Best Sellers data?

Amazon Best Sellers data is valuable for market research, competitive analysis, and product strategy. You can use it to identify popular products, understand market trends, and analyze competitors. This data helps in making informed decisions about product selection, pricing strategies, and content optimization. It’s also useful for planning inventory, focusing on high-demand items, and optimizing advertising efforts.

How can I handle potential IP blocking or CAPTCHA challenges while scraping Amazon?

Handling potential IP blocking and CAPTCHA challenges while scraping Amazon is essential to ensure uninterrupted data extraction. Here are some strategies to address these issues:

- Rotate IP Addresses: Use a rotating proxy service that provides a pool of IP addresses. This helps distribute requests across different IPs, making it harder for Amazon to block your access.

- User-Agent Randomization: Vary the User-Agent header in your HTTP requests to mimic different web browsers or devices. This can make it more challenging for Amazon to detect automated scraping.

- Delay Requests: Introduce delays between your requests to simulate more human-like browsing behavior. Amazon is more likely to flag or block rapid-fire, automated requests.

- CAPTCHA Solvers: Consider using CAPTCHA-solving services or libraries like 2Captcha or Anti-CAPTCHA to handle CAPTCHA challenges. These services use human workers to solve CAPTCHAs in real-time.

- Session Management: Maintain a session and use cookies to replicate the behavior of a real user. Ensure that your scraping script retains and reuses cookies across requests.

- Headers and Referrers: Set appropriate headers, including the Referer header, in your requests to mimic a typical browsing session. The headers your own browser sends to Amazon are a useful reference.

- Proxy Rotation: If you’re using proxies, periodically rotate them to avoid being flagged. Some IP rotation services offer automatic rotation.

- Handle CAPTCHAs: When you encounter CAPTCHAs, your script should be designed to recognize and trigger CAPTCHA-solving mechanisms automatically. After solving, continue the scraping process.

- Avoid Aggressive Scraping: Don’t overload Amazon’s servers with too many requests in a short period. Make your scraping script more gradual and respectful of their server resources.

- Use Headless Browsers: Consider driving a headless browser with an automation tool like Selenium, using a real user profile, to interact with Amazon’s site. This can be more resistant to detection.
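Two of the strategies above, delaying requests and varying the User-Agent header, can be sketched with the standard library alone. The helper names and the sample user-agent strings are illustrative placeholders. The base delay and jitter values are arbitrary examples rather than recommended settings.

```python
import itertools
import random


def jittered_delay(base, jitter, rng=random):
    """Return `base` seconds plus a random extra of up to `jitter` seconds."""
    return base + rng.uniform(0, jitter)


def header_cycle(user_agents):
    """Yield request headers forever, cycling through the given User-Agent strings."""
    for agent in itertools.cycle(user_agents):
        yield {"User-Agent": agent}


if __name__ == "__main__":
    # Placeholder strings; substitute user agents appropriate for your project.
    headers = header_cycle(["example-agent/1.0", "example-agent/2.0"])
    for _ in range(3):
        print(next(headers), f"wait {jittered_delay(2.0, 1.5):.2f}s")
```

Pass the yielded dictionary as the headers of each request, and sleep for the returned delay between requests.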

What is an Amazon Best Sellers scraper?

An Amazon Best Seller scraper is a software tool or program that extracts data from Amazon’s Best Sellers list. It collects information about the top-selling products in various categories, such as product names, prices, and rankings. This data is often used for market research, competitive analysis, and tracking trends on the Amazon platform.

How do I find best sellers on Amazon?

To find Amazon’s best sellers, visit the Amazon website. Navigate to a specific category and select “Best Sellers.” You can also filter by subcategories and time frames. Use the search bar to find specific products or categories. Third-party tools like Jungle Scout or Helium 10 offer more insights. Read reviews and ratings for customer feedback, and consider seasonal variations when making decisions.