Saving a product name and price from Amazon is straightforward: simply select the name and the price of any product and store them wherever you want. But what if you have hundreds or even thousands of product names and prices to save? Will the same trick work? Not for us, at least!

In this article, we will discuss Amazon’s structure and the amazing benefits of scraping Amazon for different businesses. Most importantly, we will show you how to quickly build a simple scraper with some Ruby libraries to crawl a product name and price from Amazon, which can be applied to hundreds of Amazon products.

Understand Amazon HTML Structure for Efficient Scraping

Amazon’s HTML structure is complex and multifaceted, designed to accommodate a vast variety of products, categories, user interactions, and dynamic content. While the specific structure can differ across different pages and sections of the site, there are some common elements and patterns:

- Header and Navigation: Typically includes the Amazon logo, search bar, navigation menus for different categories, account options, and links to various sections like Prime, Cart, and Orders.

- Product Listings: Divided into grids or lists displaying product images, titles, prices, ratings, and brief descriptions. Each product is enclosed within HTML tags that help structure and organize these details.

- Product Pages: These contain more detailed information about a particular product, such as its description, specifications, customer reviews, seller details, and related products. The HTML structure here often involves nested elements to organize diverse content.

- Forms and Input Fields: Amazon’s site incorporates various forms for user interactions, such as login, registration, address entry, payment details, and reviews. These forms are structured using HTML tags to collect and process user data.

- Dynamic Content and JavaScript: Amazon utilizes dynamic content loading techniques with JavaScript. This can complicate the HTML structure as some content may not be directly visible in the initial HTML source but rather generated dynamically after page load.

- Footer: Contains links to important sections like About Us, Careers, Privacy Policy, Help & Customer Service, and additional navigational elements.

To understand Amazon’s HTML structure, you need to identify these elements, their hierarchical relationships, and the specific HTML tags, classes, and IDs used to mark and organize different parts of the page. A good grip on the structure makes scraping more effective, because a Ruby crawler for Amazon can then target and extract exactly the data you want.

Crawling Amazon with Crawlbase

Let’s create a file named amazon_scraper.rb, which will contain our Ruby code.

Let’s also install our two requirements by running the following commands at your command prompt:

gem install crawlbase
gem install nokogiri

Now it’s time to start coding. Let’s write our code in the amazon_scraper.rb file, starting by loading the HTML page of one Amazon product URL using the Crawlbase Ruby library. We need to initialize the library and create a worker with our token. For Amazon, we should use the normal token. Make sure to replace it with the actual token from your account.

require 'crawlbase'
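Only the first line of this listing is shown, so here is a minimal sketch of what the loading step might look like. It assumes the Crawlbase gem exposes `Crawlbase::API.new(token: ...)` and that `get(url)` returns a response with `status_code` and `body`; please check the gem's README before relying on that. The `fetch_html` helper, the token placeholder, and the product URL are hypothetical names used only for illustration.

```ruby
# Hypothetical helper: fetches a page through any client that responds to
# `get(url)` and returns an object exposing `status_code` and `body`.
def fetch_html(client, url)
  response = client.get(url)
  unless response.status_code == 200
    raise "Request for #{url} failed with status #{response.status_code}"
  end

  response.body
end

# Assumed real-world usage (needs the crawlbase gem and a valid token):
#   require 'crawlbase'
#   api  = Crawlbase::API.new(token: 'YOUR_NORMAL_TOKEN')
#   html = fetch_html(api, 'https://www.amazon.com/dp/PRODUCT_ID')
```

Passing the client in as an argument keeps the helper independent of the library, so it can be tried with a stand-in object before spending real requests.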

We are now loading the URL, but we are not doing anything with the result. So it’s now time to start scraping the name and the price of the product.

Scraping Amazon data

We will use the Ruby Nokogiri library that we installed earlier to parse the resulting HTML and extract only the name and price of the Amazon product.

Let’s write the code that parses an HTML body and scrapes the product name and price.

require 'nokogiri'

The full code should look like the following:

require 'crawlbase'

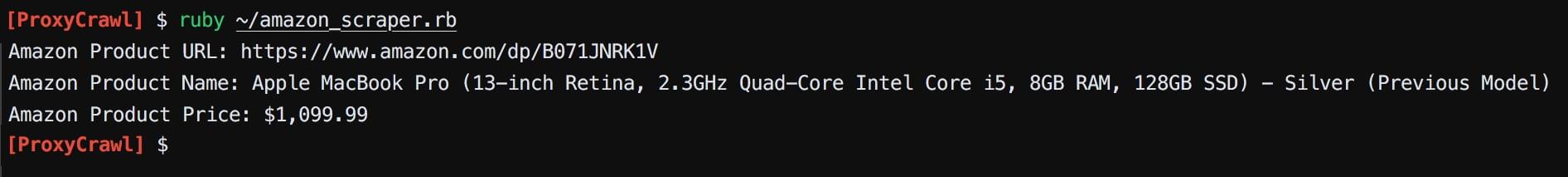

Running the script should now print the scraped Amazon product name and price in the command prompt.

The code is ready, and you can quickly scrape an Amazon product to get its name and price. The results appear in the console, and from there you can save them to a database, a file, or anywhere else. That is up to you.
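To apply the same idea to many products and keep the results, one option is to loop over a list of URLs and write one JSON object per line to a file. The sketch below assumes nothing about Crawlbase or Nokogiri: the block passed to `scrape_all` stands in for the fetch-and-parse step, and the helper names, URLs, and output file name are all hypothetical.

```ruby
require 'json'
require 'tmpdir'

# Hypothetical helper: runs the given block for each URL and collects the
# results, recording an error message instead of aborting the whole batch.
def scrape_all(urls)
  urls.map do |url|
    yield(url).merge(url: url)
  rescue StandardError => e
    { url: url, error: e.message }
  end
end

# Hypothetical helper: writes one JSON object per line (JSON Lines).
def save_as_json_lines(records, path)
  File.open(path, 'w') do |file|
    records.each { |record| file.puts(JSON.generate(record)) }
  end
end

# Stand-in for the real fetch-and-parse step so the sketch runs offline.
urls = %w[https://example.com/product-a https://example.com/product-b]
records = scrape_all(urls) do |url|
  raise 'page not available' if url.end_with?('product-b')

  { name: 'Example product', price: '$19.99' }
end

output_path = File.join(Dir.tmpdir, 'amazon_products.jsonl')
save_as_json_lines(records, output_path)
puts "Saved #{records.size} records to #{output_path}"
```

Recording failures next to successes makes it easy to retry only the URLs that did not work.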

We hope you enjoyed this tutorial, and we hope to see you soon at Crawlbase.

Benefits of Amazon Scraping You Need to Know

As an online retailer, you must be aware of the vast amount of information that Amazon holds within its digital space: comprehensive product listings, customer reviews and ratings, exclusive deals, and the latest industry news. With all this information consolidated in one platform, it becomes an enticing opportunity for sellers and vendors.

Therefore, scraping data from Amazon has immense benefits for businesses. Amazon data scraping simplifies the often difficult and time-consuming task of extracting e-commerce data from various sources. Instead of navigating multiple websites to gather scattered information, you can draw on the extensive spectrum of data that Amazon brings together. This consolidation streamlines the extraction process, offering a comprehensive view of the market within a single platform.

Keep reading to explore the multi-dimensional benefits of a Ruby crawler for Amazon and how it can transform your business strategies.

Check Out Rival Products

To accurately shape your business choices, you must examine your competition. Scrape Amazon data on competing products to craft effective marketing tactics and make informed decisions. Given Amazon’s comprehensive repository of product listings, consistently scraping your competitors’ Amazon stores allows for continual comparison and monitoring of any changes. You’ll probably find a majority of your competitors on Amazon, so a Ruby Amazon product scraper facilitates in-depth competitor product analysis.

Gather Product Reviews

It’s a necessity for businesses to stay updated on their product performance in the market. For Amazon merchants, achieving higher sales relies on ensuring the prime placement of their products in relevant searches. A practical method to gauge product performance is to collect product reviews and conduct sentiment analysis. These reviews typically lie in the spectrum from positive to neutral to negative. Scraping Amazon data enables dealers to point out the factors that influence product rankings, empowering them to design winning strategies to enhance their rankings. With product review data, Amazon sellers can strategize improvements in their products, customer service, and more.
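As a rough illustration of the positive, neutral, and negative spectrum, the sketch below buckets reviews by their star rating. This is only a star-rating proxy, not text-based sentiment analysis. The thresholds, the helper names, and the sample reviews are all assumptions made up for the example.

```ruby
# Hypothetical helper: maps a 1-5 star rating to a coarse sentiment label.
# The thresholds are an assumption, not an Amazon convention.
def sentiment_for(stars)
  if stars >= 4
    :positive
  elsif stars == 3
    :neutral
  else
    :negative
  end
end

# Counts how many reviews fall into each sentiment bucket.
def sentiment_summary(reviews)
  reviews.map { |review| sentiment_for(review[:stars]) }.tally
end

# Made-up sample data standing in for scraped reviews.
sample_reviews = [
  { stars: 5, text: 'Works perfectly' },
  { stars: 4, text: 'Good value' },
  { stars: 3, text: 'Average' },
  { stars: 1, text: 'Stopped working after a week' }
]

summary = sentiment_summary(sample_reviews)
puts summary
```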

Scrape Customer Profile Information

Every business has a unique target audience, and for e-commerce, scraping customer profiles gives an incredible opportunity for lead generation. However, Amazon strictly safeguards its customers’ personal information, which may create challenges for data collection. Amazon sellers try to focus on gathering databases of consumers who’ve purchased their products.

By observing customers’ shopping behaviors, sellers can create attractive combo items, ultimately boosting sales. An alternative approach is scraping Amazon’s list of top reviewers. It allows you to potentially invite these people to review your existing products or extend invitations for future product launches. Given the extensive nature of the top reviewer list—sometimes comprising up to 10,000 individuals—web scraping becomes a time-saving solution to extract this data.

Amazon’s policy regarding customer information protection poses obstacles, forcing sellers to strategize alternative methods for gathering consumer databases. Scraping data from Amazon, using tools like a Ruby scraper, becomes instrumental in this process. Sellers use these tools to navigate the platform, saving time and effort while extracting customer profile data for marketing strategies.

Collect Competing Product Reviews

Continuously monitoring your competitors’ activities is just as necessary as overseeing your own. Getting into your competitors’ review sections on Amazon’s website gives you the most relevant data. Analyzing what aspects people dislike the most about their products provides a significant foundation for establishing your competitive edge. Identify these areas of dissatisfaction and get an opportunity for differentiation and improvement.

Moreover, analyzing what customers love the most about your competitors reveals the specific areas where they outperform your offerings. This data can guide strategic enhancements to reinforce your competitive stance.

Crawl and Collect Own Product Reviews

Maintain a keen awareness of your product performance in the market. Amazon’s transparent review section serves as a valuable resource for insights into product performance. Analyze your product reviews on Amazon to comprehend the strengths and weaknesses of your offers. This information provides a comprehensive understanding of positive attributes and areas requiring improvement.

Customer reviews show you their pain points and areas that require attention. These reviews are a blueprint for improvements, indicating a clear path for product refinement and customer experience enhancement. Additionally, they provide beneficial pointers for improving customer service standards.

Gather Market Data

For sellers aiming to pinpoint their most profitable niche, a comprehensive study of market data is imperative. This exploration reveals the most sought-after products, builds a deeper understanding of Amazon’s category structure, and shows how products align with the existing market landscape. Regularly scraping data from best-selling and top-rated products unveils trends, including products losing their top-selling positions. This data, extracted by scraping competing products on Amazon, becomes a valuable resource for sellers, guiding adjustments in their internal assortment and optimizing manufacturing resources.

Scrape Amazon data using tools like a Ruby web scraper to understand product demand dynamics, identify emerging trends, and strategically align your products and offers with the shifting market preferences.
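One way to spot products losing their top-selling positions is to compare two snapshots of a best-seller list taken at different times. The sketch below assumes each snapshot is a hash from a product identifier to its rank (1 is best). The `falling_products` helper and the sample identifiers are hypothetical.

```ruby
# Hypothetical helper: compares two best-seller snapshots (product ID => rank)
# and returns the products whose rank got worse or that left the list.
def falling_products(previous, current)
  previous.filter_map do |id, old_rank|
    new_rank = current[id]
    dropped_out = new_rank.nil? # missing from the newer snapshot
    { id: id, from: old_rank, to: new_rank } if dropped_out || new_rank > old_rank
  end
end

# Made-up snapshots standing in for two scraping runs.
last_week = { 'PRODUCT-A' => 1, 'PRODUCT-B' => 2, 'PRODUCT-C' => 3 }
this_week = { 'PRODUCT-A' => 1, 'PRODUCT-B' => 5, 'PRODUCT-D' => 2 }

falling = falling_products(last_week, this_week)
falling.each do |change|
  puts "#{change[:id]}: #{change[:from]} -> #{change[:to] || 'off the list'}"
end
```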

Evaluate Pricing Data

Scraping prices from Amazon offers several advantages. Competitor pricing analysis helps you understand pricing trends and design an optimal pricing strategy. A well-crafted pricing strategy amplifies profits and fortifies your company’s competitiveness. For e-commerce data extraction, price scraping is considered one of the most important steps.
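Scraped prices usually arrive as text, so a competitor comparison starts with converting them to numbers. The sketch below converts price strings to integer cents and summarizes where your own price sits among competitors. It assumes a dot as the decimal separator and at most two decimal digits. The helper names and sample prices are hypothetical.

```ruby
# Hypothetical helper: converts a price string such as "$1,299.99" to cents.
# Assumes a dot as decimal separator and at most two decimal digits.
def price_to_cents(text)
  match = text.delete(',').match(/(\d+)(?:\.(\d{1,2}))?/)
  raise ArgumentError, "No price found in #{text.inspect}" unless match

  match[1].to_i * 100 + match[2].to_s.ljust(2, '0').to_i
end

# Summarizes competitor prices (in cents) relative to your own price.
def price_position(own_cents, competitor_cents)
  sorted = competitor_cents.sort
  {
    lowest: sorted.first,
    highest: sorted.last,
    median: sorted[sorted.size / 2], # upper median for even counts
    cheaper_competitors: sorted.count { |cents| cents < own_cents }
  }
end

# Made-up competitor prices standing in for scraped values.
competitor_prices = ['$18.49', '$24.50', '$21.00'].map { |text| price_to_cents(text) }
position = price_position(price_to_cents('$22.00'), competitor_prices)
puts position
```

Working in integer cents avoids the rounding surprises that floating-point arithmetic can introduce when comparing prices.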

Collect Data on Global Selling Products and Prices

Amazon’s global operations and international shipping give you an opportunity to explore international sales avenues by scraping product data for overseas shipping. This analysis allows you to compare product prices across markets and identify regions where prices are comparatively higher. With this comparative price data, you can strategically expand your presence into more lucrative markets.

Assess Offers with Amazon Data Scraping

For buyers, offers stand out as the most attractive aspect of e-commerce platforms. To craft a successful marketing strategy for your products, you need to see what offers your competitors present. Scrape Amazon data to get a detailed overview of competitor offers and deals, real-time cost tracking, and seasonal variations. This data can help you improve your products, deals, and offers, leading to greater customer satisfaction.

Discover Target Audience

If you are a seller in a specific product category, you need to identify and reach your target market to make informed decisions. Scraping customer preferences from Amazon provides first-hand information about the customer base. Despite Amazon’s strict customer profile protection measures, sellers can devise strategies, like using a Ruby web scraper, to gather profiles of their customers. This collected customer data can be used to analyze their shopping patterns and behaviors.

Bottom Line

In this blog, we’ve explored the structure of Amazon and the many benefits of Amazon data scraping using a Ruby web scraper. We have also shown you an easy way to scrape Amazon data using Crawlbase. Once you start using these tools, Ruby opens up many possibilities. A Ruby scraper is also convenient to extend, whether you are tweaking existing code or introducing new functionality. Ruby is a productive language that lets you build solutions quickly, and it makes the journey from data scraping to feature-rich applications smooth and efficient.