So, you have a business and want to crawl LinkedIn to gather data for your marketing team. You want to scrape hundreds of company pages or even user profiles. Will you do it manually and spend precious hours, days, and resources on it? Here at Crawlbase, we say you don't have to. Our Crawling API, with its built-in LinkedIn data scraper, can help you reach your goals faster.

In this guide, we will build a simple Ruby scraper that crawls Amazon’s LinkedIn company page. You can then apply the same code to any company of your choice.

Setting Up the Scraper

Before writing any Ruby code, prepare the following:

- The API URL: https://api.crawlbase.com

- The scraper parameter: scraper=linkedin-company

- Your Crawlbase token

- The LinkedIn company URL

Crawling LinkedIn with Crawlbase

Now, let’s create a file named linkedin.rb to hold our Ruby code.

Open the file you created. First, we load the required library and use Ruby’s URI module to combine the API URL, your token, and the target URL into a single request. Don’t forget to add the linkedin-company scraper parameter as well.

Your normal Crawlbase token works for LinkedIn; just make sure to replace the placeholder with the actual token from your account.

```ruby
require 'net/http'
```
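A minimal sketch of this first part follows, assuming the Crawling API reads the token, the target url, and the scraper name as query parameters on https://api.crawlbase.com, as listed in the setup section. The constant names, the crawl_uri helper, and the CRAWLBASE_TOKEN environment variable are illustrative choices, not part of Crawlbase’s API.

```ruby
require 'net/http' # Net::HTTP sends the request in the next step
require 'uri'

# Crawling API endpoint, as listed in the setup section.
API_URL = 'https://api.crawlbase.com'

# Illustrative: read the token from an environment variable instead of
# hard-coding it; the fallback is a placeholder to replace with your token.
TOKEN = ENV.fetch('CRAWLBASE_TOKEN', 'YOUR_TOKEN')

# The company page to crawl and the built-in scraper to apply to it.
LINKEDIN_URL = 'https://www.linkedin.com/company/amazon'
SCRAPER = 'linkedin-company'

# Hypothetical helper: combine the endpoint and query parameters into one URI.
# URI.encode_www_form percent-encodes the LinkedIn URL for the query string.
def crawl_uri(token, target_url, scraper)
  uri = URI(API_URL)
  uri.query = URI.encode_www_form(token: token, url: target_url, scraper: scraper)
  uri
end

uri = crawl_uri(TOKEN, LINKEDIN_URL, SCRAPER)
```

Because the LinkedIn URL is percent-encoded, its slashes and colon travel safely inside the url parameter instead of being mistaken for part of the API path.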

With the first part done, let’s write the rest of the code. Here we send a request to the URI we built, read the HTTP status code, and pass the parsed body to JSON.pretty_generate so the code prints a more readable JSON body.

The full code should now look like this:

```ruby
require 'net/http'
```
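Putting both parts together, the complete linkedin.rb could look like the sketch below, under the same assumptions about the token, url, and scraper query parameters. The pretty_body helper is a hypothetical addition that falls back to the raw body when the response is not JSON, and the script skips the request while the token is still the placeholder.

```ruby
require 'net/http'
require 'uri'
require 'json'

API_URL = 'https://api.crawlbase.com'
TOKEN = ENV.fetch('CRAWLBASE_TOKEN', 'YOUR_TOKEN') # replace with your token
LINKEDIN_URL = 'https://www.linkedin.com/company/amazon'
SCRAPER = 'linkedin-company'

# Build the Crawling API request URI from its query parameters.
def crawl_uri(token, target_url, scraper)
  uri = URI(API_URL)
  uri.query = URI.encode_www_form(token: token, url: target_url, scraper: scraper)
  uri
end

# Re-indent a JSON string for display; return the raw body unchanged if it
# is not valid JSON (for example, an HTML error page).
def pretty_body(body)
  JSON.pretty_generate(JSON.parse(body))
rescue JSON::ParserError
  body
end

if TOKEN == 'YOUR_TOKEN'
  warn 'Set CRAWLBASE_TOKEN (or edit TOKEN) before running the request.'
else
  response = Net::HTTP.get_response(crawl_uri(TOKEN, LINKEDIN_URL, SCRAPER))
  puts "Response HTTP Status Code: #{response.code}"
  puts "Response HTTP Body: #{pretty_body(response.body)}"
end
```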

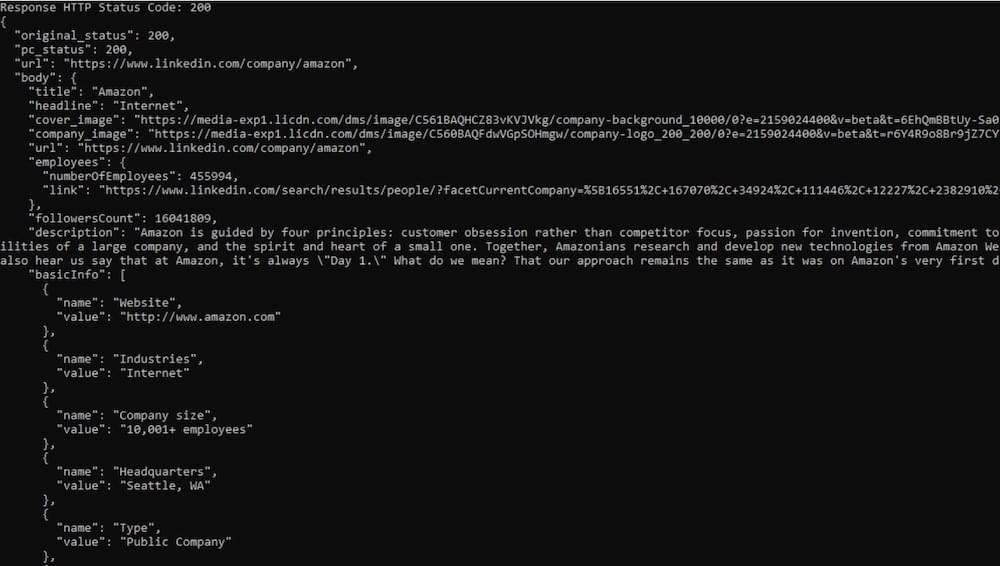

Now save your work and run the code with ruby linkedin.rb. The scraper returns parsed data like the following:

(Example output)

We’re all set! Now it’s your turn to use this code however you like. Just be sure to replace the LinkedIn URL with the page you want to scrape. Alternatively, you can use our Crawling API Ruby library. Also remember that we have two LinkedIn scrapers: linkedin-profile for individual profiles and linkedin-company for company pages.
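Because the two scrapers correspond to two kinds of LinkedIn URLs, a small helper can pick the right one automatically. This is a hypothetical sketch (scraper_for is not a Crawlbase function) that assumes profile URLs use the /in/ path and company pages use /company/, which is LinkedIn’s usual URL layout.

```ruby
require 'uri'

# Hypothetical helper: choose the Crawlbase LinkedIn scraper from the URL path.
# Assumes profiles look like /in/<slug> and company pages like /company/<slug>.
def scraper_for(linkedin_url)
  case URI(linkedin_url).path
  when %r{\A/in/} then 'linkedin-profile'
  when %r{\A/company/} then 'linkedin-company'
  else
    raise ArgumentError, "no LinkedIn scraper for #{linkedin_url}"
  end
end

puts scraper_for('https://www.linkedin.com/company/amazon') # prints linkedin-company
```

Raising an error for any other path keeps the script from silently sending a URL that neither scraper is meant to parse.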

How and where would you use the information you extracted? It’s all up to you.

We hope you enjoyed this tutorial, and we hope to see you soon at Crawlbase. Happy crawling!

5 Reasons to Choose Crawlbase

When accessing websites for data, Crawlbase is often the best choice. Here’s why:

- Broad Access: The Crawling API provides access to almost any website. You can easily change your IP address, cookies, and other browser data, making it easier to gather the information you need.

- Maintaining Anonymity: With the Crawling API, your anonymity is preserved. You can change your IP address and clear cookies, helping keep your online activities private.

- Automated Security: The Crawling API comes with automated security measures, including rotating IPs and cookie management. This built-in protection improves your web scraping experience while keeping your data safe.

- Faster Scraping: With automated security and broad access, the Crawling API offers higher scraping speeds, so you can gather data more quickly and efficiently.

- Enhanced Security: Automated security measures and IP rotation also make your online activities more secure. Your anonymity is protected, and your data remains safe from potential threats.

The Crawling API is a reliable and secure choice for web scraping, providing the tools and features you need to access websites, collect data, and protect your online privacy.

Let’s Clarify the Legal Aspect of Ruby Web Scraping on LinkedIn

You might wonder whether it is legal to scrape data from LinkedIn. Opinions differ, so let’s address the question as plainly as possible.

LinkedIn is a platform where professionals connect and share information. It holds a vast amount of data, and people or businesses may want to collect this data for various purposes. However, LinkedIn has specific rules in place to protect its users’ data, both public and private.

LinkedIn’s rules prohibit using automated methods such as scraping, crawling, or data mining to collect data from its platform. To enforce these rules, LinkedIn employs technical safeguards such as rate limits, CAPTCHAs, and IP blocking. These measures are designed to ensure that only authorized users access the platform and its data.

Publicly accessible data is a different matter. LinkedIn’s policies on their own do not make scraping publicly accessible data illegal. Neither EU nor U.S. law forbids scraping publicly accessible data outright, even if a company’s policy opposes it, although EU data protection rules such as the GDPR still apply to any personal data you collect, public or not.

It’s worth noting that the U.S. Supreme Court has narrowed how the Computer Fraud and Abuse Act applies to accessing information in databases, but it hasn’t directly ruled on scraping. As a result, accessing LinkedIn and gathering publicly available information is generally considered legal in the U.S., but the question is not fully settled, so always stay within the boundaries of the law.

To be on the safe side, collect only publicly accessible data and comply with the laws that apply to you.

What Types of Data Can You Crawl from LinkedIn?

LinkedIn offers a wealth of data that you can scrape for various purposes. Here are some of the primary data points you can collect using a Ruby LinkedIn scraper:

- LinkedIn Profiles: This includes the profiles of individual LinkedIn users, which often contain valuable professional information.

- LinkedIn Groups: Information about LinkedIn groups can be crawled, helping you gain insights into different communities.

- LinkedIn Company Profiles: With a Ruby scraper, you can extract data from the profiles of companies on LinkedIn, which can be useful for business-related purposes.

- Job Listings: A Ruby LinkedIn scraper can extract job listings to provide information about available job opportunities and the companies offering them.

- Search Results: Data from search results on LinkedIn can help you find specific profiles or information related to your search queries.

The scope of data you can collect with a Ruby scraper extends beyond these categories. To strengthen your outreach efforts, consider gathering additional data points such as:

- Full Name and Job Title: Knowing a person’s name and job title is essential for personalized outreach.

- Educational Background, Location, and Contact Details: Collecting information about a person’s education, location, and contact details, including work and personal email addresses and mobile numbers, can help you establish direct communication.

- Company Information: Gathering data about a prospect’s company, such as the company name, domain, size, industry, location, and founding year, can help you design your strategic approach.

- Social Media Profiles: Find links to a prospect’s other social media profiles on platforms like Facebook, Twitter, and GitHub.

- Connection Count and Sales Navigator URL: These details offer insight into a prospect’s network and can be valuable for sales and outreach efforts.

By collecting this wide range of data points with a Ruby LinkedIn scraper, you can make your outreach efforts highly targeted and effective.

The Advantages of LinkedIn Web Scraping with Ruby

LinkedIn is a robust networking platform that connects job seekers and businesses. However, manually extracting data from LinkedIn is laborious and time-consuming. This is where a Ruby scraper proves valuable.

Automation of LinkedIn Data Extraction

A LinkedIn web scraper written in Ruby automates the collection of data from LinkedIn profiles, eliminating manual copying and pasting. With a Ruby LinkedIn scraper, you can extract data from many profiles in a single run. This data can then be organized into structured formats or exported in multiple forms, freeing your time for other essential tasks.
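As an illustration of the export step, the sketch below writes scraped records to CSV, a format that spreadsheets and most email marketing or CRM tools can import. It assumes each record is already a Ruby hash (for example, a parsed JSON body from the Crawling API); records_to_csv and the field names in the example are hypothetical, not the scraper’s documented output schema.

```ruby
require 'csv'

# Hypothetical helper: turn an array of hashes into CSV text, keeping only
# the columns you list, in that order. Missing fields become empty cells.
def records_to_csv(records, columns)
  CSV.generate do |csv|
    csv << columns
    records.each do |record|
      csv << columns.map { |col| record[col] }
    end
  end
end

rows = [
  { 'name' => 'Example Co', 'industry' => 'Software' },
  { 'name' => 'Sample Ltd', 'industry' => 'Retail' }
]
puts records_to_csv(rows, %w[name industry])
```

Ruby’s csv library quotes values that contain commas or quotes automatically, so company names such as "Example, Inc." stay in a single cell.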

Targeted Searches

LinkedIn web scraping with Ruby lets you conduct precise searches based on specific criteria. For instance, you can search for professionals in a particular industry, location, or job function. This targeted approach helps you identify the right people to connect with on LinkedIn and expand your network.

LinkedIn Data Analysis

A Ruby web scraper can extract a wide variety of information from LinkedIn profiles, including job titles, company affiliations, and educational backgrounds. You can analyze this data to learn about your target audience on LinkedIn: for example, which industries they work in, what job titles they hold, and which companies employ them. These insights help you refine your marketing messages and outreach efforts.
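The kind of analysis described above can start with a simple frequency count. This sketch assumes the scraped records are Ruby hashes and uses hypothetical field names such as industry; field_counts is an illustrative helper, not part of any library.

```ruby
# Hypothetical helper: count how often each value of a field appears across
# records, most common first (ties broken alphabetically). Records missing
# the field are skipped.
def field_counts(records, field)
  records.filter_map { |r| r[field] }
         .tally
         .sort_by { |value, count| [-count, value] }
         .to_h
end

audience = [
  { 'industry' => 'Software', 'title' => 'Engineer' },
  { 'industry' => 'Retail', 'title' => 'Manager' },
  { 'industry' => 'Software', 'title' => 'Designer' },
  { 'title' => 'Founder' }
]

# prints "Software: 2" then "Retail: 1"
field_counts(audience, 'industry').each { |industry, count| puts "#{industry}: #{count}" }
```

The same call with 'title' or a company field answers the other questions in the paragraph, such as which job titles are most common.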

Building a Robust Database

Data collected through LinkedIn web scraping with Ruby can be used to build a comprehensive database of leads for targeted email or LinkedIn outreach campaigns. With the right scraping tools, you can gather large amounts of data in far less time than manual collection requires.

Boosting Lead Generation

Ruby web scraping can also improve lead generation. By extracting data from LinkedIn profiles, you can identify potential customers and reach out to them with customized marketing messages. This, in turn, helps you generate more leads on LinkedIn and grow your business.

Establishing an Automated Outreach Process

A Ruby LinkedIn scraper can be integrated with other tools to build an automated outreach process. For example, you can extract data from LinkedIn profiles with a Ruby scraper and import it into an email marketing or CRM tool. This lets you run automated, targeted email campaigns to your LinkedIn connections, saving time and helping you maintain those connections.

Enhancing Email Marketing

For businesses that want to refine their email marketing strategies, a LinkedIn web scraper written in Ruby is especially valuable. Data scraped from LinkedIn profiles and Sales Navigator can be used to segment email lists and create targeted campaigns. This data lets you send personalized, relevant emails, which can increase open and click-through rates. LinkedIn data scraping also helps you expand your email lists with up-to-date, relevant, high-quality leads.

Competitive Research

LinkedIn web scraping with Ruby is a potent tool for monitoring competitors. By scraping data from competitors’ profiles or LinkedIn pages, you can gain insight into their target audience and content engagement, and even identify key personnel within competing organizations. This information can inform your LinkedIn marketing strategy and put you ahead of the competition. Additionally, a LinkedIn post scraper lets you analyze the content competitors share and how much engagement it gets, helping you improve the quality and relevance of your own content.

How Can You Safely Extract Data with a Ruby LinkedIn Scraper?

LinkedIn web scraping with Ruby can offer valuable data for businesses and researchers. However, it’s important to conduct this process ethically and in compliance with LinkedIn’s terms of service. To reduce the risk of running into problems or getting banned from the platform, follow these best practices:

- Control Request Frequency: To avoid overloading LinkedIn’s servers, limit the frequency of your data requests and set appropriate time intervals between each request. This helps maintain a reasonable pace.

- Mimic Human Behavior: When scraping data, mimic human behavior by using a web browser commonly employed by users. Make requests at a natural and realistic pace to avoid raising suspicion.

- Respect Privacy: Do not attempt to access private profiles or any data that is not publicly available. This ensures that you respect the privacy of LinkedIn users.

- Ethical Use: Commit to using the scraped data for ethical purposes and avoid any unethical or harmful practices. Uphold the privacy and integrity of LinkedIn users.
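The first practice, controlling request frequency, can be sketched as a helper that pauses between requests. each_with_delay is hypothetical, and the two-second delay is a placeholder to tune for your own use; a fixed pause is simply the easiest policy to reason about.

```ruby
# Hypothetical helper: run the block once per URL, pausing between requests
# so they are spread out instead of sent in a burst. `sleeper` is injectable
# so tests can record the pauses instead of actually sleeping.
def each_with_delay(urls, delay_seconds, sleeper: ->(s) { sleep(s) })
  results = []
  urls.each_with_index do |url, i|
    sleeper.call(delay_seconds) if i.positive? # no wait before the first request
    results << yield(url)
  end
  results
end

urls = %w[
  https://www.linkedin.com/company/amazon
  https://www.linkedin.com/company/microsoft
]
# Replace the block body with your actual API request.
statuses = each_with_delay(urls, 2) { |url| "queued #{url}" }
puts statuses
```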

By following these guidelines, web developers can extract data from LinkedIn responsibly and ethically while reducing the risk of running into issues or violating the platform’s rules.

Bottom Line!

In conclusion, LinkedIn web scraping with Ruby opens up many possibilities for professionals and businesses. We hope this guide has prepared you to extract data while adhering to ethical practices and LinkedIn’s policies.

Ruby’s versatility as a programming language, combined with its capable scraping libraries, helps you access valuable data, build targeted databases, and enhance your marketing and outreach efforts. With the right tools and techniques, you can streamline lead generation, refine email marketing campaigns, and gain a competitive edge through data-driven decision-making.

With a Ruby web scraper, you can explore opportunities for personal and professional growth and stay at the forefront of data extraction technology. The skills you gain here can help you find job opportunities, expand your network, drive business growth, and more.

Try a Ruby scraper to extract data from LinkedIn, build connections, and gather the data you need to fuel your business!