X.com (formerly Twitter) is still a great platform for real-time information and public sentiment analysis. With millions of users posting daily, X.com is a treasure trove for anyone looking for insights into trends, opinions, and behavior. Despite recent changes to the platform, scraping tweet data from X.com can still be very valuable for researchers, marketers, and developers.

According to recent stats, X.com has over 500 million tweets per day and 611 million monthly active users. It’s a goldmine of real-time data and a natural target for web scraping projects that collect information on trending topics, user sentiment, and more.

Let’s get started on how to scrape Twitter tweet pages with Python. We’ll show you how to set up your environment, build a Twitter scraper, and optimize your scraping process with Crawlbase Smart Proxy.

Table Of Contents

- Why Scrape X.com Tweet Pages?

- Setting Up the Environment

- Scraping X.com Tweet Pages

- How X.com Renders Data

- Creating Tweet Page Scraper

- Parsing Tweet Dataset

- Saving Data

- Complete Code

- Optimizing with Crawlbase Smart Proxy

- Why Use Crawlbase Smart Proxy?

- Integrating Crawlbase Smart Proxy with Playwright

- Benefits of Using Crawlbase Smart Proxy

- Final Thoughts

- Frequently Asked Questions

Why Scrape Twitter (X.com) Tweet Pages?

Scraping tweet pages can provide immense value for various applications. Here are a few reasons why you might want to scrape X.com:

- Trend Analysis: With millions of tweets daily, X.com is a goldmine for trending and emerging topics. Scraping tweets can help you track hashtags, topics, and events in real time.

- Sentiment Analysis: Tweets contain public opinions and sentiments about products, services, political events, and more. Businesses and researchers can gain insights into public sentiment and make informed decisions.

- Market Research: Companies can use tweet data to understand consumer behavior, preferences, and feedback. This is useful for product development, marketing strategies, and customer service improvements.

- Academic Research: Scholars and researchers use tweet data for various academic purposes like studying social behavior, political movements and public health trends. X.com data can be a rich dataset for qualitative and quantitative research.

- Content Curation: Content creators and bloggers can use scraped tweet data to curate relevant and trending content for their audience. This can help in generating fresh, up-to-date content that resonates with readers.

- Monitoring and Alerts: Scraping tweets can be used to monitor specific keywords, hashtags, or user accounts for important updates or alerts. This is useful for tracking industry news, competitor activities, or any specific topic of interest.

X.com tweet pages hold a lot of data that can be used for many purposes. Below, we will walk you through setting up your environment, creating a Twitter scraper, and optimizing your scraping process using Crawlbase Smart Proxy.

Setting Up the Environment

Before we scrape Twitter pages, we need to set up our development environment. This involves installing the libraries and tools the scraper depends on. Here’s how you can get started:

Install Python

If you still need to install Python, download and install it from the official Python website. Make sure to add Python to your system’s PATH during installation.

Install Required Libraries

We’ll be using playwright for browser automation and pandas, a popular library for data manipulation and analysis. Install these libraries using pip:

pip install playwright pandas

Set Up Playwright

Playwright requires a one-time setup to install browser binaries. Run the following command to complete the setup:

python -m playwright install
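If you want to confirm that both libraries are importable before moving on, a small check like the one below can help. This is only a sketch: the helper name missing_packages is our own, and it checks the Python packages only, not the browser binaries that Playwright downloads.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the top-level package names that cannot be found for import."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(["playwright", "pandas"])
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("playwright and pandas are importable.")
```

If anything is reported as missing, re-run the pip command above in the same Python environment that your IDE uses.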

Set Up Your IDE

Using a good IDE (Integrated Development Environment) can make a big difference to your development experience. Some popular IDEs for Python development are:

- PyCharm: A powerful and popular IDE with many features for professional developers. It is available from the JetBrains website.

- VS Code: A lightweight and flexible editor with great Python support. It is available from the official Visual Studio Code website.

- Jupyter Notebook: An open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and narrative text. Install it using pip install notebook.

Create the Twitter Scraper Script

Next, we’ll create a script named tweet_page_scraper.py in your preferred IDE. We will write our Python code in this script to scrape tweet pages from X.com.

Now that your environment is set up, let’s start building the Twitter scraper. In the next section, we will look at how X.com renders data and how we can scrape tweet details.

Scraping Twitter Tweet Pages

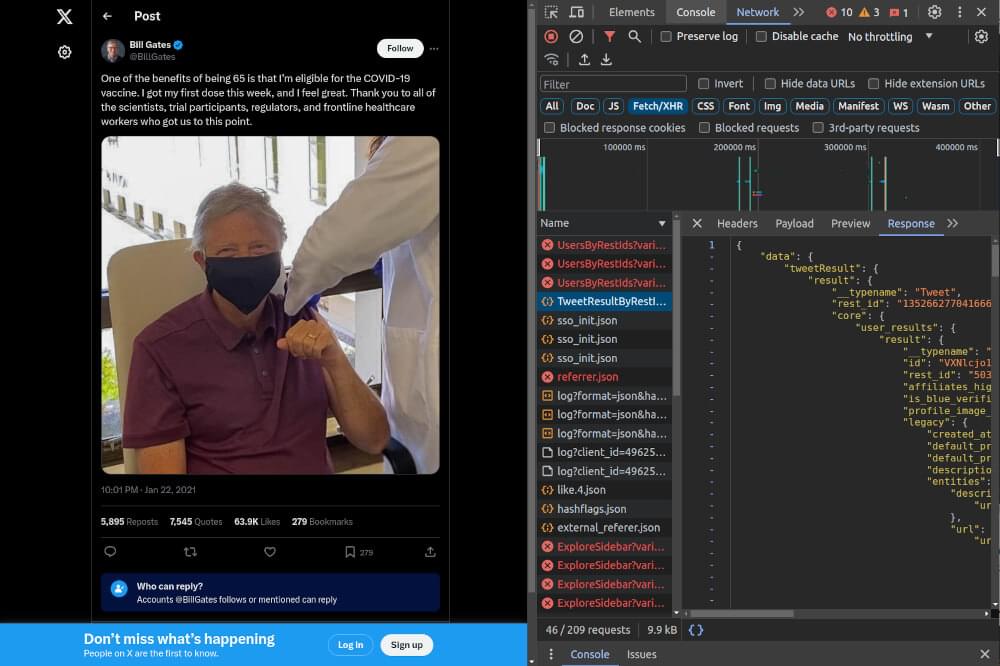

How X.com Renders Data

To scrape Twitter (X.com) tweet pages effectively, it’s essential to understand how X.com renders its data.

X.com is a JavaScript-heavy application that loads content dynamically through background requests, known as XHR (XMLHttpRequest) requests. When you visit a tweet page, the initial HTML loads, and then JavaScript fetches the tweet details through these XHR requests. To scrape this data, we will use a headless browser to capture these requests and extract the data.

Creating Tweet Page Scraper

To create a scraper for X.com tweet pages, we will use Playwright, a browser automation library. This scraper will load the tweet page, capture the XHR requests, and extract the tweet details from these requests.

Here’s the code to create the scraper:

from playwright.sync_api import sync_playwright

The intercept_response function filters these requests, specifically looking for URLs containing “TweetResultByRestId” and returning their JSON content. The main function, scrape_tweet, launches a headless browser session, navigates to the specified tweet URL, and captures the necessary data from the responses. It then extracts the tweet details from the background XHR requests and returns them as a dictionary.
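The flow described above can be sketched roughly as follows. Treat this as a minimal sketch under stated assumptions, not the exact scraper: the helper is_tweet_response and the timeout_ms parameter are our own additions, the CSS selector and the data -> tweetResult -> result JSON path are assumptions about the current page and payload, and we also accept "fetch" responses alongside "xhr" in case the page uses the Fetch API.

```python
from typing import Optional

TWEET_ENDPOINT_MARKER = "TweetResultByRestId"  # substring named in the text above


def is_tweet_response(url: str, resource_type: str) -> bool:
    """True for background (XHR/fetch) responses that look like the tweet endpoint."""
    return resource_type in ("xhr", "fetch") and TWEET_ENDPOINT_MARKER in url


def scrape_tweet(url: str, timeout_ms: int = 30_000) -> Optional[dict]:
    """Load a tweet page headlessly and return the captured tweet JSON, if any."""
    # Imported here so the helper above stays usable without Playwright installed.
    from playwright.sync_api import sync_playwright

    captured = []

    def intercept_response(response):
        # Keep only the background responses that match the tweet endpoint.
        if is_tweet_response(response.url, response.request.resource_type):
            captured.append(response)

    with sync_playwright() as pw:
        browser = pw.chromium.launch(headless=True)
        page = browser.new_page()
        page.on("response", intercept_response)
        page.goto(url)
        # Assumed selector for a rendered tweet; adjust if the markup differs.
        page.wait_for_selector("[data-testid='tweet']", timeout=timeout_ms)

        result = None
        for response in captured:
            payload = response.json()
            # Assumed JSON layout: data -> tweetResult -> result.
            result = payload.get("data", {}).get("tweetResult", {}).get("result")
            if result:
                break
        browser.close()
    return result
```

Calling scrape_tweet with a tweet URL should return a nested dictionary, or None if no matching response was captured before the selector appeared.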

Example Output:

{

Parsing Tweet Dataset

The JSON data we capture from X.com’s XHR requests can be quite complex. We will parse this JSON data to extract key information such as the tweet content, author details, and engagement metrics.

Here’s a function to parse the tweet data:

def parse_tweet(data: dict) -> dict:
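As a rough illustration of what such a parsing function might look like, here is a minimal sketch. The field names it reads (legacy, full_text, favorite_count, core.user_results.result.legacy, views.count, and so on) are assumptions about the shape of the captured JSON, so check them against a real response before relying on them. Missing fields simply come back as None.

```python
def parse_tweet(data: dict) -> dict:
    """Flatten the nested tweet JSON into a small dictionary of key fields."""
    # Assumed location of the tweet's own fields.
    legacy = data.get("legacy", {})
    # Assumed location of the author's profile fields.
    user = (
        data.get("core", {})
        .get("user_results", {})
        .get("result", {})
        .get("legacy", {})
    )
    return {
        "text": legacy.get("full_text"),
        "created_at": legacy.get("created_at"),
        "likes": legacy.get("favorite_count"),
        "retweets": legacy.get("retweet_count"),
        "replies": legacy.get("reply_count"),
        "quotes": legacy.get("quote_count"),
        "views": data.get("views", {}).get("count"),
        "author_name": user.get("name"),
        "author_handle": user.get("screen_name"),
        "author_followers": user.get("followers_count"),
    }
```

Using .get() with empty-dictionary defaults keeps the function from raising a KeyError when X.com omits or renames part of the payload.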

Saving Data

Finally, we’ll save the parsed tweet data to a CSV file using the pandas library for easy analysis and storage.

Here’s the function to save the data:

import pandas as pd
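A minimal sketch of the saving step, assuming the parsed tweets are plain dictionaries with matching keys. The function name save_to_csv and its parameters are our own choices for illustration.

```python
import pandas as pd


def save_to_csv(tweets: list, filename: str) -> None:
    """Write a list of parsed tweet dictionaries to a CSV file."""
    # Each dictionary becomes one row; its keys become the column headers.
    df = pd.DataFrame(tweets)
    # index=False leaves out the DataFrame's row index column.
    df.to_csv(filename, index=False, encoding="utf-8")
```

For a single tweet, wrap it in a list first, for example save_to_csv([parsed_tweet], "tweet_data.csv").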

Complete Code

Here is the complete code combining all the steps:

from playwright.sync_api import sync_playwright

By following these steps, you can effectively scrape and save tweet data from X.com using Python. In the next section, we’ll look at how to optimize this process with Crawlbase Smart Proxy to handle anti-scraping measures.

Optimizing with Crawlbase Smart Proxy

When scraping X.com, you may run into anti-scraping measures like IP blocking and rate limiting. To get around these restrictions, using a proxy like Crawlbase Smart Proxy can be very effective. Crawlbase Smart Proxy rotates IP addresses and manages request rates so your scraping stays undetected and uninterrupted.

Why Use Crawlbase Smart Proxy?

- IP Rotation: Crawlbase rotates IP addresses for each request, making it difficult for X.com to detect and block your scraper.

- Request Management: Crawlbase handles request rates to avoid triggering anti-scraping mechanisms.

- Reliability: Using a proxy service ensures consistent and reliable access to data, even for large-scale scraping projects.

Integrating Crawlbase Smart Proxy with Playwright

To integrate Crawlbase Smart Proxy with our existing Playwright setup, we need to configure the proxy settings. Here’s how you can do it:

Sign Up for Crawlbase: First, sign up for an account on Crawlbase and obtain your API token.

Configure Proxy in Playwright: Update the Playwright settings to use the Crawlbase Smart Proxy.

Here’s how you can configure Playwright to use Crawlbase Smart Proxy:

from playwright.sync_api import sync_playwright

In this updated script, we’ve added the CRAWLBASE_PROXY variable containing the proxy server details. When launching the Playwright browser, we include the proxy parameter to route all requests through Crawlbase Smart Proxy.
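The proxy configuration can be sketched along these lines. This is a sketch with assumptions: the helper names build_proxy_settings and open_page_via_proxy are our own, the host and port are placeholders you need to take from your Crawlbase dashboard, and we assume the API token is passed as the proxy username with an empty password.

```python
def build_proxy_settings(token: str, host: str, port: int) -> dict:
    """Build the proxy dictionary that Playwright's launch() accepts."""
    return {
        "server": f"http://{host}:{port}",
        "username": token,  # assumption: the token acts as the proxy username
        "password": "",
    }


def open_page_via_proxy(url: str, proxy: dict) -> str:
    """Open a page through the proxy and return its title."""
    # Imported here so the helper above stays usable without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as pw:
        # The proxy argument routes all browser traffic through the proxy server.
        browser = pw.chromium.launch(headless=True, proxy=proxy)
        page = browser.new_page()
        page.goto(url)
        title = page.title()
        browser.close()
    return title
```

You could then define CRAWLBASE_PROXY = build_proxy_settings("YOUR_TOKEN", "PROXY_HOST", 8012), replacing all three placeholder values with your own, and pass it wherever the browser is launched.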

Benefits of Using Crawlbase Smart Proxy

- Enhanced Scraping Efficiency: By rotating IP addresses, Crawlbase helps maintain high scraping efficiency without interruptions.

- Increased Data Access: Avoiding IP bans ensures continuous access to X.com tweet data.

- Simplified Setup: Integrating Crawlbase with Playwright is straightforward and requires minimal code changes.

By using Crawlbase Smart Proxy, you can optimize your X.com scraping process, ensuring reliable and efficient data collection. In the next section, we’ll conclude our guide and answer some frequently asked questions about scraping X.com tweet pages.

Scrape Twitter (X.com) with Crawlbase

Scraping Twitter tweet pages can be a great way to get data for research, analysis, and other purposes. By understanding how X.com renders data and using Playwright for browser automation, you can extract tweet details. Adding Crawlbase Smart Proxy to the mix makes your scraping more robust by helping you get past anti-scraping measures and keep data collection uninterrupted.

If you’re looking to expand your web scraping capabilities, consider exploring the following guides on scraping other social media platforms.

📜 How to Scrape Facebook

📜 How to Scrape LinkedIn

📜 How to Scrape Reddit

📜 How to Scrape Instagram

📜 How to Scrape YouTube

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions

Q: Is web scraping X.com legal?

Whether scraping X.com is legal depends on the website’s terms of service, whether the data being scraped is publicly available, and how you use that data. You must review X.com’s terms of service to ensure you comply with their policies. Scraping publicly available data for personal use is less likely to be an issue. Scraping for commercial use without permission from the website can lead to serious legal problems. To avoid legal risks, it’s highly recommended to consult a lawyer before doing extensive web scraping.

Q: Why should I use a headless browser like Playwright for scraping X.com?

X.com is a JavaScript-heavy website that loads content through background requests (XHR), so it’s hard to scrape with traditional HTTP requests. A browser automation library like Playwright, driving a headless browser, is built to handle this kind of complexity. Playwright can execute JavaScript, render web pages like a real browser, and capture the background requests that contain the data you want. This suits X.com well, as it allows you to extract data from dynamically loaded content.

Q: What is Crawlbase Smart Proxy, and why should I use it?

Crawlbase Smart Proxy is a proxy service that supports web scraping by rotating IP addresses and managing request rates. This helps you avoid IP blocking and rate limiting, which are common issues in web scraping. By distributing your requests across multiple IP addresses, Crawlbase Smart Proxy helps your scraping stay undetected and uninterrupted. This means more consistent and reliable access to data from websites like X.com.

Q: How do I handle large JSON datasets from X.com scraping?

Large JSON datasets from X.com scraping can be messy and hard to manage. To handle them, you can use Python’s json module to parse and reshape the data into a more manageable format. This means extracting only the most important fields and organizing the data in a simpler structure, so you can focus on the data that matters and streamline your processing. Data manipulation libraries like pandas can also make cleaning, transforming, and analyzing big datasets more efficient.
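As one possible approach, the sketch below streams records one at a time and keeps only the fields you name, so the full dataset never has to sit in memory. It assumes the scraped tweets were stored in JSON Lines format (one JSON object per line), which is our assumption and not something the scraper above produces by default. The function name iter_slim_records is hypothetical.

```python
import json
from typing import Iterable, Iterator


def iter_slim_records(lines: Iterable[str], fields: list) -> Iterator[dict]:
    """Yield one reduced dictionary per non-empty JSON line."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        # Keep only the requested fields; absent ones become None.
        yield {field: record.get(field) for field in fields}
```

Because an open file is an iterable of lines, you can pass a file handle directly, and the resulting generator can be fed to pandas.DataFrame for further analysis.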