Clutch.co is a platform that connects businesses with service providers through company profiles, client reviews, and market reports. With thousands of companies listed, it is a valuable source of business services data for lead generation and market research. A Clutch.co scraper lets you collect this data and use it to make informed business decisions.

In this blog, we will walk you through the process of creating a Python Clutch.co scraper. We will cover everything from setting up your environment to optimizing your scraper with the Crawlbase Crawling API.

Table of Contents

- Why Scrape Clutch.co?
- Key Data Points on Clutch.co
- Setting Up Your Environment
  - Installing Python
  - Required Python Libraries
- Building the Clutch.co Scraper
  - Inspecting Clutch.co Web Pages
  - Writing the Clutch.co Scraper
  - Extracting Business Services Data
  - Handling Pagination
  - Saving Data to CSV
  - Complete Code
- Optimizing Clutch.co Scraper with Crawlbase Crawling API
- Final Thoughts
- Frequently Asked Questions

Why Scrape Clutch.co?

Scraping Clutch.co has many benefits, especially for businesses that want to use data strategically. Here are the reasons to use a Clutch.co scraper:

Comprehensive Business Services Data:

Clutch.co has profiles of over 150,000 service providers across industries. Scraping this data allows you to get information on competitors and potential partners.

Client Reviews and Ratings:

Client feedback is a key indicator of service quality. Scraping reviews and ratings helps you evaluate businesses and make better decisions about collaborations or investments.

Lead Generation:

Scraping contact information and service details from Clutch.co can power your lead generation efforts. This data helps you find potential clients or partners and streamline your outreach.

Market Analysis:

You can see market trends, pricing strategies, and service offerings by scraping data from multiple service providers. This is useful for developing a competitive strategy and positioning your business.

Customized Data Extraction:

A Python Clutch.co scraper allows for custom data extraction. You can target specific categories, regions, or service types and tailor the data to your business needs.

Efficiency and Automation:

Automating the data extraction process saves time and resources. Instead of collecting data manually, a scraper can collect large amounts of data quickly and accurately.

By using a Python Clutch.co scraper, businesses can gain a competitive edge through informed decision-making and efficient data management.

Key Data Points on Clutch.co

Scraping Clutch.co gives you access to a lot of valuable data. The scraper built in this guide collects the following data points for each listed company:

- Company name
- Website URL
- Rating
- Number of reviews
- Services offered
- Location

Using a Python Clutch.co scraper, you can collect this data and organize it to make better business decisions. It will boost your lead generation and give you a clearer view of the competition.

Setting Up Your Environment

To build a Clutch.co scraper, you first need to set up your environment. Follow these steps to get started.

Installing Python

Before you can scrape Clutch.co data, you need to have Python installed on your machine. Python is a powerful and versatile programming language that is well suited to web scraping tasks.

Download Python: Go to the Python website and download the latest version of Python.

Install Python: Follow the installation instructions for your operating system. On Windows, make sure to check the box that adds Python to your system PATH. To confirm that Python is installed correctly, open your terminal or command prompt and run the following command:

```bash
python --version
```

Required Python Libraries

Once Python is installed, you need to install the libraries that will help you build your Clutch.co scraper: requests, BeautifulSoup, and pandas.

Install Requests: This library allows you to send HTTP requests to Clutch.co and receive responses.

```bash
pip install requests
```

Install BeautifulSoup: This library helps you parse HTML and extract data from web pages.

```bash
pip install beautifulsoup4
```

Install Pandas: This library is useful for organizing and saving scraped data into a CSV file.

```bash
pip install pandas
```

These libraries give you the tools you need to scrape Clutch.co data. With your environment set up, you can focus on writing the code for your Clutch.co scraper and on extracting business services data for lead generation.

Next, we’ll get into building the Clutch.co scraper by inspecting Clutch.co web pages to see the structure of the data we need to scrape.

Building the Clutch.co Scraper

In this section, we will build our Clutch.co scraper. We’ll inspect Clutch.co web pages, write the Python script, extract key business services data, handle pagination, and save the data to a CSV file.

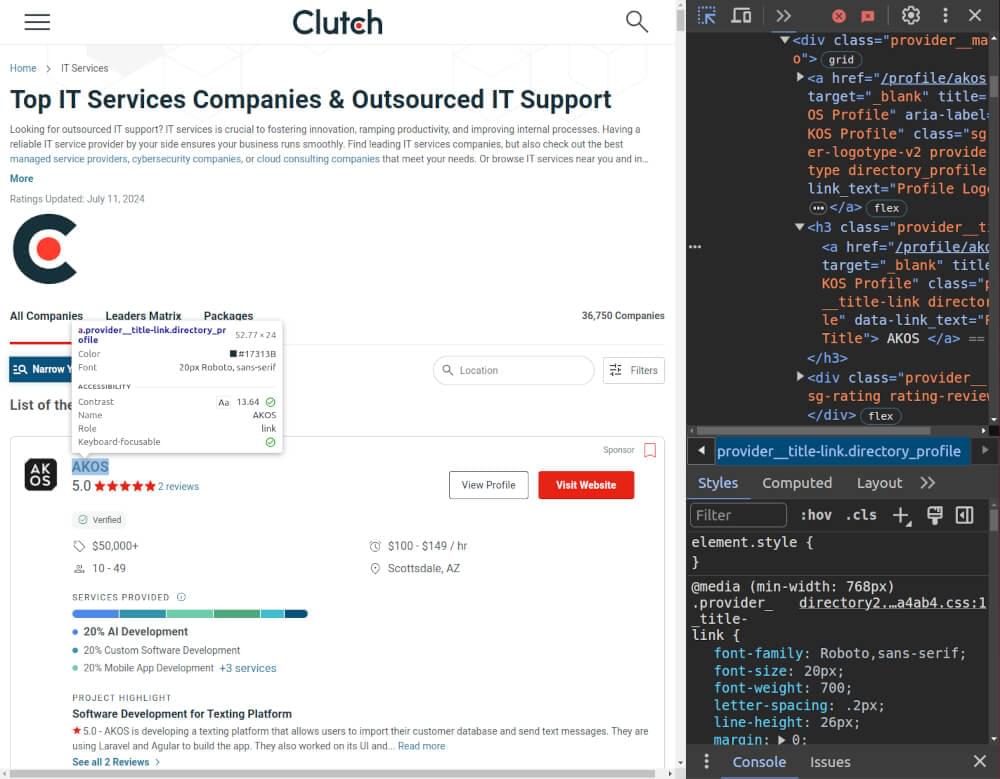

Inspecting Clutch.co Web Pages

First, we need to understand the structure of Clutch.co web pages. Visit a page listing businesses and use your browser’s developer tools (usually opened with F12) to inspect the HTML structure.

Identify the elements that contain the data you want to scrape, such as Company Name, Website URL, Rating, Number of Reviews, Services Offered, and Location.

Writing the Clutch.co Scraper

Now, let’s write the Python script to scrape Clutch.co data. We will use the requests library to fetch the HTML content and BeautifulSoup to parse it.

```python
import requests
```

The script includes a fetch_html function that retrieves the HTML content from a given URL and checks if the request was successful by verifying the status code. If successful, it returns the HTML content; otherwise, it prints an error message.
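A minimal sketch of fetch_html as just described, assuming the requests library is installed. The browser-like User-Agent in HEADERS and the timeout argument are assumptions added for this sketch, not part of the tutorial; even with them, Clutch.co may still answer with a 403.

```python
import requests

# ASSUMPTION: a browser-like User-Agent; the default requests one is easy to flag as a bot.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0 Safari/537.36"
    )
}


def fetch_html(url, timeout=30):
    """Return the page's HTML if the server answers 200 OK, otherwise None."""
    try:
        response = requests.get(url, headers=HEADERS, timeout=timeout)
    except requests.RequestException as exc:
        # Network-level failure: DNS error, refused connection, timeout, etc.
        print(f"Request to {url} failed: {exc}")
        return None
    if response.status_code == 200:
        return response.text
    # Non-200 answers (e.g. a 403 from Cloudflare) are reported, not parsed.
    print(f"Failed to fetch {url}: HTTP {response.status_code}")
    return None
```

Returning None instead of raising lets the calling loop decide whether to stop or skip a page.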

The parse_html function then processes this HTML content. It creates a BeautifulSoup object to parse the HTML and initializes an empty list to store the extracted data. The function selects the relevant HTML elements containing the company details using CSS selectors. For each company, it extracts the name, website URL, rating, number of reviews, services offered, and location. It also ensures that any extra whitespace within the extracted text is cleaned up using regular expressions. Finally, it compiles this data into a dictionary for each company and appends it to the data list. The resulting list of dictionaries containing structured information about each company is then returned.
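A minimal sketch of parse_html along the lines described above. The CSS selectors in SELECTORS are hypothetical placeholders, because Clutch.co's real class names are not listed in this guide and change over time; replace them with the ones you identified in your browser's developer tools. The helper names clean and text_of are also illustrative.

```python
import re

from bs4 import BeautifulSoup

# HYPOTHETICAL selectors: replace with the ones you find in developer tools.
SELECTORS = {
    "card": "li.provider-row",        # one element per company listing
    "name": "h3.company-name a",
    "website": "a.website-link",
    "rating": "span.rating",
    "reviews": "a.reviews-count",
    "services": "div.service-focus",
    "location": "span.locality",
}


def clean(text):
    """Collapse runs of whitespace (spaces, tabs, newlines) into single spaces."""
    return re.sub(r"\s+", " ", text).strip()


def text_of(card, selector):
    """Cleaned text of the first match inside `card`, or "" if nothing matches."""
    element = card.select_one(selector)
    return clean(element.get_text()) if element else ""


def parse_html(html):
    """Extract one dictionary of company details per listing card."""
    soup = BeautifulSoup(html, "html.parser")
    data = []
    for card in soup.select(SELECTORS["card"]):
        website = card.select_one(SELECTORS["website"])
        data.append({
            "Company Name": text_of(card, SELECTORS["name"]),
            "Website URL": website.get("href", "") if website else "",
            "Rating": text_of(card, SELECTORS["rating"]),
            "Number of Reviews": text_of(card, SELECTORS["reviews"]),
            "Services Offered": text_of(card, SELECTORS["services"]),
            "Location": text_of(card, SELECTORS["location"]),
        })
    return data
```

Missing elements yield empty strings instead of raising, so one incomplete listing does not abort the whole page.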

Handling Pagination

Clutch.co lists businesses across multiple pages, so to scrape all of them we need to handle pagination. Clutch.co selects the page through a page query parameter (for example, &page=2 appended to a listing URL).

```python
def scrape_clutch_data(base_url, pages):
    ...
```
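A sketch of scrape_clutch_data under stated assumptions. To keep the block self-contained, it takes the fetch and parse functions as arguments (in the tutorial's script these would be fetch_html and parse_html), and the page_url helper and the start_page and delay parameters are hypothetical additions. This guide does not say whether Clutch.co numbers its first page 0 or 1, so check the pager links before relying on start_page=1.

```python
import time
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def page_url(base_url, page):
    """Return base_url with its page query parameter set (or replaced) to `page`."""
    parts = urlparse(base_url)
    query = dict(parse_qsl(parts.query))
    query["page"] = str(page)
    return urlunparse(parts._replace(query=urlencode(query)))


def scrape_clutch_data(base_url, pages, fetch, parse, start_page=1, delay=2.0):
    """Fetch and parse up to `pages` listing pages; return all records in order.

    fetch(url) -> HTML string or None; parse(html) -> list of dicts.
    ASSUMPTION: start_page=1; confirm against Clutch.co's pager links.
    """
    all_data = []
    for page in range(start_page, start_page + pages):
        url = page_url(base_url, page)
        html = fetch(url)
        if html is None:
            # Request failed (e.g. a 403 block): stop instead of retrying blindly.
            break
        rows = parse(html)
        if not rows:
            # An empty page usually means we ran past the last page of results.
            break
        all_data.extend(rows)
        time.sleep(delay)  # pause between page requests to be polite
    return all_data
```

Building the URL with urllib.parse instead of string concatenation sets the page parameter correctly whether or not the listing URL already has a query string.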

Saving Data to CSV

Once we have scraped the data, we can save it to a CSV file using the pandas library.

```python
# Saving data to CSV
```
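A minimal sketch of the saving step with pandas, assuming the scraped data is a list of dictionaries like the one parse_html returns. The function name save_to_csv is hypothetical; the default filename matches the clutch_data.csv file shown in this guide.

```python
import pandas as pd


def save_to_csv(data, filename="clutch_data.csv"):
    """Save a list of company dicts to CSV; the dict keys become column headers."""
    if not data:
        print("No data to save.")
        return None
    df = pd.DataFrame(data)
    df.to_csv(filename, index=False)  # index=False drops the pandas row-number column
    print(f"Saved {len(df)} rows to {filename}")
    return df
```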

Complete Code

Here is the complete code for the Clutch.co scraper:

```python
import requests
```

Note: Clutch.co may detect and block your requests with a 403 status due to Cloudflare protection. To bypass this, consider using the Crawlbase Crawling API.

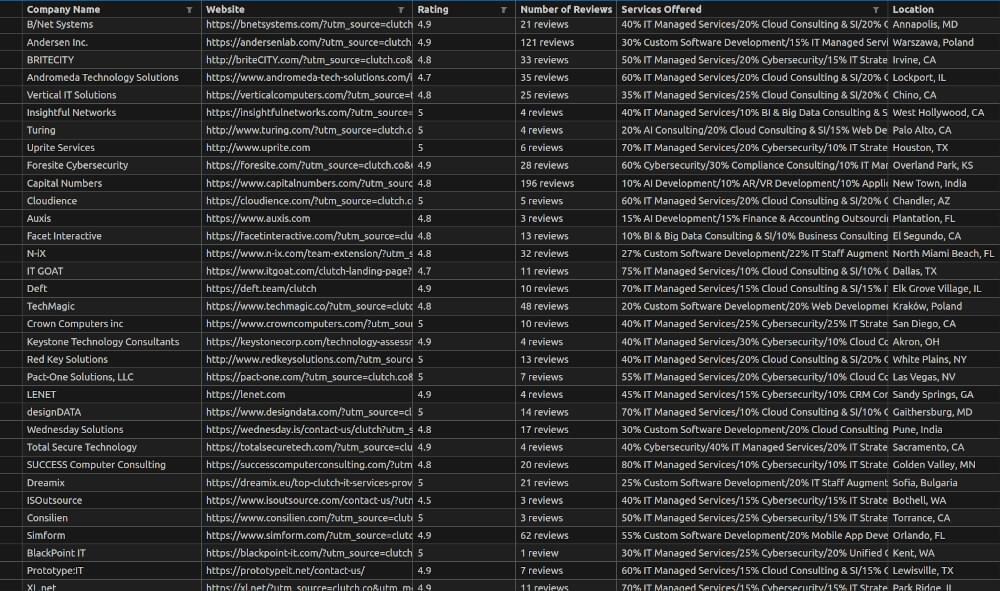

clutch_data.csv File Snapshot:

In this section, we showed how to build a Clutch.co scraper using Python. We covered inspecting web pages, writing the scraping script, handling pagination, and saving data to a CSV file. This Clutch.co scraper can be used to extract business services data for lead generation and other purposes.

Optimizing Clutch.co Scraper with Crawlbase Crawling API

To make our Clutch.co scraper more efficient and robust, we can integrate the Crawlbase Crawling API. It handles IP rotation and helps get past restrictions such as the Cloudflare blocks mentioned above, which makes complex scraping tasks easier to manage. Below are the steps to optimize your Clutch.co scraper using Crawlbase.

Installing Crawlbase Library: First, you need to install the Crawlbase library. You can do this using pip:

```bash
pip install crawlbase
```

After installing the library, you can use Crawlbase to handle the requests and scrape Clutch.co data more efficiently.

Import Libraries: In addition to the previous libraries, import Crawlbase.

```python
from bs4 import BeautifulSoup
from crawlbase import CrawlingAPI
```

Set Up Crawlbase API: Initialize the Crawlbase Crawling API with your token. You can get one by creating an account on Crawlbase. Crawlbase provides two types of tokens: a Normal Token for static websites and a JavaScript (JS) Token for handling dynamic or browser-based requests. For Clutch.co, you need the JS Token. The first 1,000 requests are free to get you started, with no credit card required.

```python
crawling_api = CrawlingAPI({'token': 'CRAWLBASE_JS_TOKEN'})
```

Define Function to Make Requests: Create a function to handle requests using Crawlbase.

```python
def make_crawlbase_request(url):
    ...
```
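A sketch of make_crawlbase_request, assuming the crawlbase package's CrawlingAPI.get(url, options) returns a dictionary with status_code and body keys, as in Crawlbase's own examples. Unlike a one-argument version, this sketch takes the CrawlingAPI client as a second parameter so it can be exercised without a token. The ajax_wait and page_wait options are Crawling API parameters for JS-token requests, but the values below are assumptions to tune; check the current Crawlbase documentation.

```python
def make_crawlbase_request(url, api, options=None):
    """Fetch `url` through the Crawlbase Crawling API; return the HTML or None.

    `api` is expected to be a crawlbase.CrawlingAPI created with your JS token.
    """
    if options is None:
        # ASSUMPTION: example JS-rendering options; tune or drop as needed.
        options = {"ajax_wait": "true", "page_wait": 5000}
    response = api.get(url, options)
    if response["status_code"] == 200:
        body = response["body"]
        # The body may come back as bytes; decode it so BeautifulSoup gets text.
        return body.decode("utf-8") if isinstance(body, bytes) else body
    print(f"Failed to fetch {url}: status {response['status_code']}")
    return None
```

In a script, you would pass the crawling_api client created in the previous step, e.g. html = make_crawlbase_request(listing_url, crawling_api), where listing_url is a hypothetical Clutch.co listing URL.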

Modify Scraper to Use Crawlbase: Update the scraper to use the Crawlbase request function.

```python
# Function to parse HTML and extract data
```

By using the Crawlbase Crawling API with your Python Clutch.co scraper, you can handle complex tasks and reduce the risk of IP bans. This lets you scrape Clutch.co data more efficiently and makes your business services and lead generation data collection more reliable and scalable.

Final Thoughts

A Clutch.co scraper can be a powerful tool for gathering business services data and generating leads. By using Python and libraries like BeautifulSoup and requests, you can extract valuable information about companies, ratings, reviews, and more. Integrating the Crawlbase Crawling API can further optimize your scraper, making it more efficient and reliable.

Building a Clutch.co scraper not only helps in collecting data but also in analyzing industry trends and competitor insights. This information can be crucial for making informed business decisions and driving growth.

If you’re looking to expand your web scraping capabilities, consider exploring the following guides on scraping other important websites.

📜 How to Scrape Google Finance

📜 How to Scrape Google News

📜 How to Scrape Google Scholar Results

📜 How to Scrape Google Search Results

📜 How to Scrape Google Maps

📜 How to Scrape Yahoo Finance

📜 How to Scrape Zillow

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions

Q: Is Clutch.co scraping legal?

Scraping Clutch.co must comply with its terms of service. Scraping publicly available data is generally more acceptable for personal use, research, and non-commercial purposes, while scraping for commercial purposes or in violation of the website’s terms can lead to legal issues. Always review the terms of service and privacy policy of the website you are scraping, and make sure you are not infringing on any user’s rights or violating data protection laws.

Q: How can I scrape data from Clutch.co in Python?

To scrape data from Clutch.co in Python, first install the necessary libraries, such as requests and BeautifulSoup. Write a script that sends HTTP requests to Clutch.co, gets the HTML, and uses BeautifulSoup to parse it. Extract company details, ratings, and reviews by targeting specific HTML elements. Use loops to handle pagination and scrape multiple pages. For large-scale scraping, integrate the Crawlbase Crawling API to boost performance and reduce the risk of IP bans.

Q: How can I scrape comments from Clutch.co in Python?

To scrape comments from Clutch.co, follow these steps:

- Inspect the Page: Use your browser’s developer tools to check the HTML structure of the comments section on company profile pages. Note down the CSS selector for the elements containing the comments.

- Fetch the HTML: Use libraries like requests or urllib to send a request to the Clutch.co URL and get the HTML of the page.

- Parse the HTML: Use the BeautifulSoup library to parse the HTML and extract comments using the CSS selectors you noted.

- Handle Pagination: Find the link to the next page of comments and repeat the process to scrape all pages.

- Follow the Rules: Make sure to comply with Clutch.co’s terms of service to avoid any legal issues.
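The steps above can be sketched as follows, assuming page fetching is done by a fetch(url) callable you supply (for example, fetch_html or make_crawlbase_request from the tutorial) that returns HTML or None. REVIEW_SELECTOR and NEXT_PAGE_SELECTOR are hypothetical placeholders for the selectors you note in the first step, and max_pages is an added safety limit.

```python
import re
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# HYPOTHETICAL selectors: replace with the ones you noted in developer tools.
REVIEW_SELECTOR = "div.review-text"
NEXT_PAGE_SELECTOR = "a.next-page"


def extract_reviews(html, page_url):
    """Return (list of review texts, absolute URL of the next page or None)."""
    soup = BeautifulSoup(html, "html.parser")
    reviews = [re.sub(r"\s+", " ", el.get_text()).strip()
               for el in soup.select(REVIEW_SELECTOR)]
    next_link = soup.select_one(NEXT_PAGE_SELECTOR)
    # Resolve relative links such as "?page=1" against the current page URL.
    next_url = urljoin(page_url, next_link["href"]) if next_link and next_link.get("href") else None
    return reviews, next_url


def scrape_reviews(start_url, fetch, max_pages=10):
    """Follow "next page" links from start_url, collecting reviews from each page."""
    reviews, url, seen = [], start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)  # guards against pagination links that loop back
        html = fetch(url)
        if html is None:
            break
        page_reviews, url = extract_reviews(html, url)
        reviews.extend(page_reviews)
    return reviews
```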