The Office Depot Corporation, which also owns the brands OfficeMax and Grand & Toy, is one of the largest American office supply retailers and one of the largest employers of Americans. Currently, the company has more than 1,400 stores and more than 38,000 employees, generating over $11 billion annually through its operations. The company’s website offers a wide variety of products, from office chairs to desks, stationery items, school supplies, and much more, all at a reasonable price.

This article will teach you how to scrape Office Depot search and product pages using Python for your business needs.

Table Of Contents

- Why Scrape Office Depot?

- Setting Up Your Environment

- Scraping Office Depot Search Pages

  - Creating Office Depot SERP Scraper

  - Handling Pagination

  - Storing Scraped Data

  - Complete Code

- Scraping Office Depot Product Pages

  - Creating Office Depot Product Page Scraper

  - Storing Scraped Data

  - Complete Code

- Handling Anti-Scraping Measures with Crawlbase

- Final Thoughts

- Frequently Asked Questions

Why Scrape Office Depot?

Scraping Office Depot can be very useful if you’re involved in eCommerce, market research, or price comparison. Here are some reasons to scrape Office Depot:

Price Monitoring

In an eCommerce business, keeping an eye on competitors’ prices is key. By scraping Office Depot, you can monitor prices in near real time and adjust your own pricing accordingly.

Product Availability

Scraping Office Depot allows you to monitor stock levels. This can be crucial for inventory management so you never run out of popular products or overstock unpopular ones.

Market Research

Collecting data on product trends, customer reviews, and ratings can give you insights into consumer behavior and market demand. This will help you with product development and marketing.

Competitor Analysis

Knowing what products your competitors are offering, at what price, and how often they change their stock can help you with your business strategy.

Data-Driven Decisions

By scraping data you can make decisions based on real-time information. This will help you optimize sales, improve customer satisfaction and ultimately increase revenue.

Trend Analysis

Scraping data regularly allows you to see trends over time. Whether it’s a product category that’s become more popular or seasonal changes in demand, trend analysis will help you stay ahead of the game.

Automated Data Collection

Manual data collection is time-consuming and error-prone. Web scraping automates this process so you have accurate, up-to-date information without constant manual effort.

In summary, scraping Office Depot gives you data you can use to improve your business, raise customer satisfaction, and stay ahead of the competition. Whether you’re a small business or a large corporation, web scraping can be a game changer.

Setting Up Your Environment

Before you start scraping Office Depot, make sure you set up your environment first. This will ensure you have all the tools and libraries to scrape efficiently. Follow these steps:

Install Python

First, make sure you have Python installed on your machine. Python is a great language for web scraping because it’s easy and has powerful libraries. You can download Python from the official website: python.org.

Install Required Libraries

Next, install the libraries you need for web scraping. The main libraries you’ll need are requests for making HTTP requests and BeautifulSoup for parsing HTML. You might also want to install pandas for data storage and manipulation.

Open your terminal or command prompt and run the following command (BeautifulSoup is published on PyPI as beautifulsoup4):

pip install requests beautifulsoup4 pandas

Set Up a Virtual Environment (Optional)

Setting up a virtual environment is a good practice to manage your project dependencies separately. This step is optional but recommended.

Create a virtual environment by running:

python -m venv myenv

Activate the virtual environment:

- On Windows:

myenv\Scripts\activate

- On macOS and Linux:

source myenv/bin/activate

Install Crawlbase (Optional)

If you plan to handle anti-scraping measures and need a more robust solution, consider using Crawlbase. Crawlbase provides rotating proxies and other tools to help you scrape data without getting blocked.

You can sign up for Crawlbase and get started by visiting Crawlbase.

To install the Crawlbase library, use the following command:

pip install crawlbase

Now you have your environment set up for web scraping Office Depot data with Python. With the tools and libraries installed, let’s get into extracting various data from the website.

Scraping Office Depot Search Pages

Scraping search pages from Office Depot breaks down into three parts: creating the SERP scraper, handling pagination, and storing the scraped data.

Creating Office Depot SERP Scraper

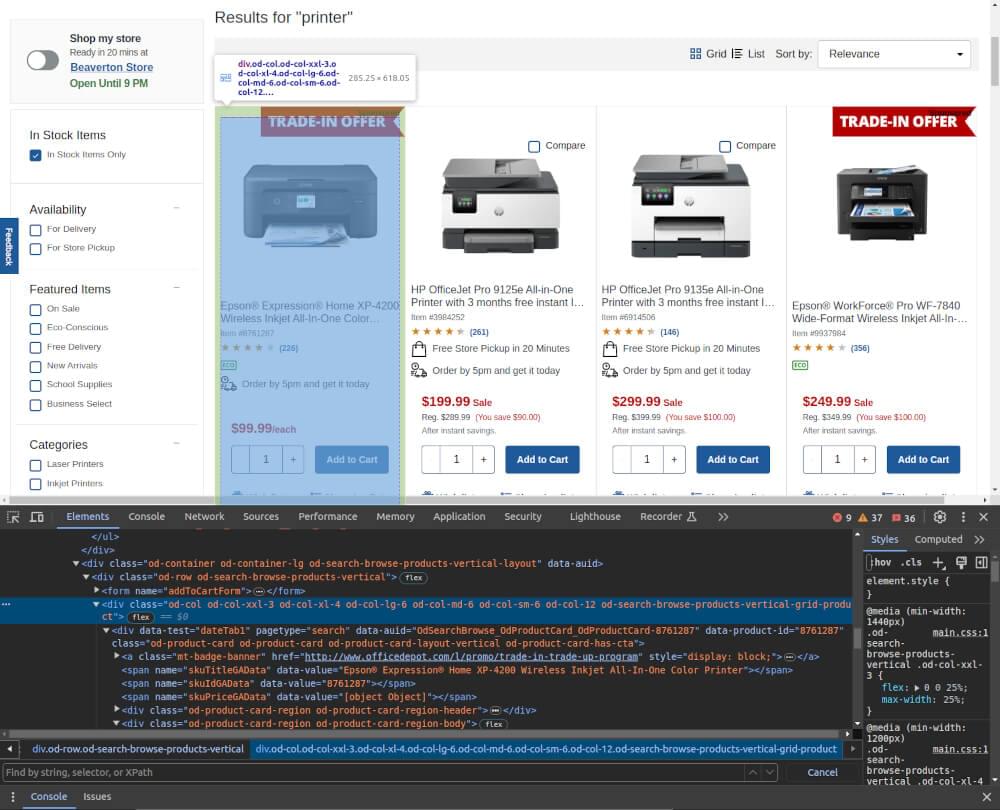

To start, we need to create a scraper that can extract product details from a single search results page. For this example, we will scrape the results for the search query “printer”. Identify the elements containing the details you need by inspecting the page in your browser and noting their CSS selectors.

The key details we’ll extract include the product title, price, rating, review count, item number, eco-friendliness, and the product page link.

Let’s create two functions, one to fetch the page content and another to extract the product details from each listing.

import requests

This code will help you extract the necessary details from the search results page. The get_page_content function fetches the HTML content of the page, and the extract_product_details function parses this content to extract the product details based on the identified CSS selectors.
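A minimal sketch of these two functions might look like the following. Every CSS selector in it (`div.product-card`, `a.product-title`, `span.eco-badge`, and so on) is a placeholder assumed for illustration, not Office Depot’s actual markup, and `text_or_none` is a hypothetical helper; swap in the selectors you noted while inspecting the page.

```python
import requests
from bs4 import BeautifulSoup

# Placeholder selectors: assumptions for illustration, not the site's real markup.
CARD_SELECTOR = "div.product-card"
ECO_BADGE_SELECTOR = "span.eco-badge"
SELECTORS = {
    "title": "a.product-title",
    "price": "span.price",
    "rating": "span.rating",
    "review_count": "span.review-count",
    "item_number": "span.item-number",
}


def get_page_content(url, headers=None, timeout=30):
    """Fetch a page and return it parsed, or None on a non-200 response."""
    response = requests.get(url, headers=headers, timeout=timeout)
    if response.status_code != 200:
        return None
    return BeautifulSoup(response.text, "html.parser")


def text_or_none(parent, selector):
    """Return the stripped text of the first match, or None if nothing matches."""
    node = parent.select_one(selector)
    return node.get_text(strip=True) if node else None


def extract_product_details(soup):
    """Build one dict per product card found on a search results page."""
    products = []
    for card in soup.select(CARD_SELECTOR):
        product = {name: text_or_none(card, css) for name, css in SELECTORS.items()}
        # The product page link is read from the title anchor's href attribute.
        link = card.select_one(SELECTORS["title"])
        product["link"] = link.get("href") if link else None
        # Eco-friendliness is treated as "a badge element is present".
        product["eco_friendly"] = card.select_one(ECO_BADGE_SELECTOR) is not None
        products.append(product)
    return products
```

Returning `None` for a missing field, rather than raising, keeps one incomplete listing from stopping the whole page.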

Handling Pagination

Next, we’ll handle pagination to scrape multiple pages of search results. We’ll define a function that iterates through the search results pages up to a specified number of pages.

def scrape_all_pages(base_url, headers, max_pages):
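One way to sketch this loop is shown below. It assumes the page number is passed as a query parameter named `page` (check the real URL in your browser), and it adds two arguments that are not part of the signature above, `fetch_page` and `parse_page`, so the fetching and parsing functions can be passed in. `build_page_url` is a hypothetical helper.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def build_page_url(base_url, page, page_param="page"):
    """Return base_url with the page query parameter set to `page`."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query[page_param] = str(page)  # the parameter name is an assumption
    return urlunsplit(parts._replace(query=urlencode(query)))


def scrape_all_pages(base_url, headers, max_pages, fetch_page, parse_page):
    """Collect results from pages 1..max_pages, stopping at the first empty page."""
    all_products = []
    for page in range(1, max_pages + 1):
        soup = fetch_page(build_page_url(base_url, page), headers)
        if soup is None:
            break  # the request failed, so stop instead of requesting more pages
        products = parse_page(soup)
        if not products:
            break  # an empty page usually means we ran past the last page
        all_products.extend(products)
    return all_products
```

Stopping at the first failed or empty page avoids requesting pages that do not exist when `max_pages` is larger than the real number of result pages.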

Storing Scraped Data

Finally, we need to store the scraped data in a CSV file for further analysis or use. We’ll use the pandas library for this purpose.

import pandas as pd
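As a minimal sketch, assuming the scraped results are a list of dictionaries with one dictionary per product, the storage step can be as short as the function below. The name `save_to_csv` is a placeholder chosen for this example.

```python
import pandas as pd


def save_to_csv(products, filename="office_depot_products.csv"):
    """Write a list of product dicts to a CSV file and return the row count."""
    df = pd.DataFrame(products)
    # index=False keeps pandas' row index out of the file.
    df.to_csv(filename, index=False)
    return len(df)
```

Each dictionary key becomes a column, so fields missing from some products simply show up as empty cells.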

Complete Code

Here’s the complete code with all the functions and the main execution block:

import requests

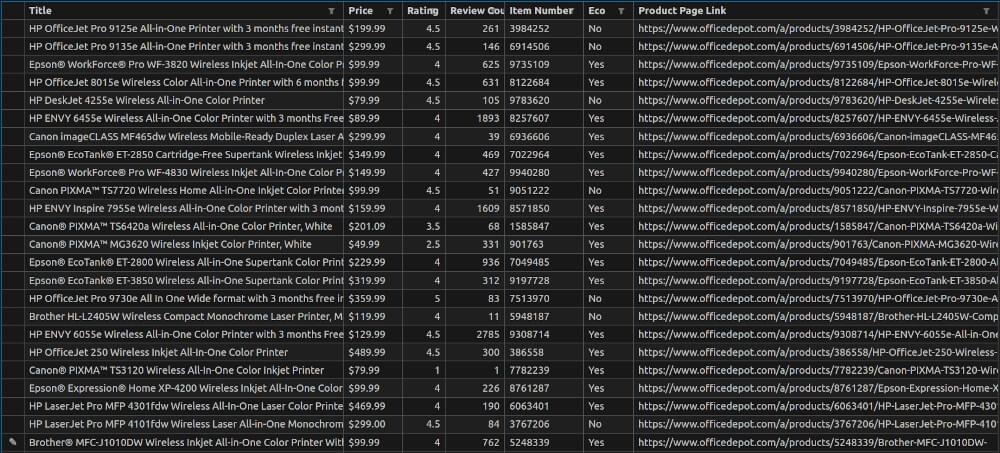

office_depot_products.csv Snapshot:

This code gives you a solid base for scraping product details from Office Depot SERP, pagination and storing the data.

Scraping Office Depot Product Pages

Scraping individual product pages from Office Depot allows you to get detailed information about a specific item. This section walks you through creating a scraper for Office Depot product pages and storing the scraped data, and provides the full code for reference.

Creating Office Depot Product Page Scraper

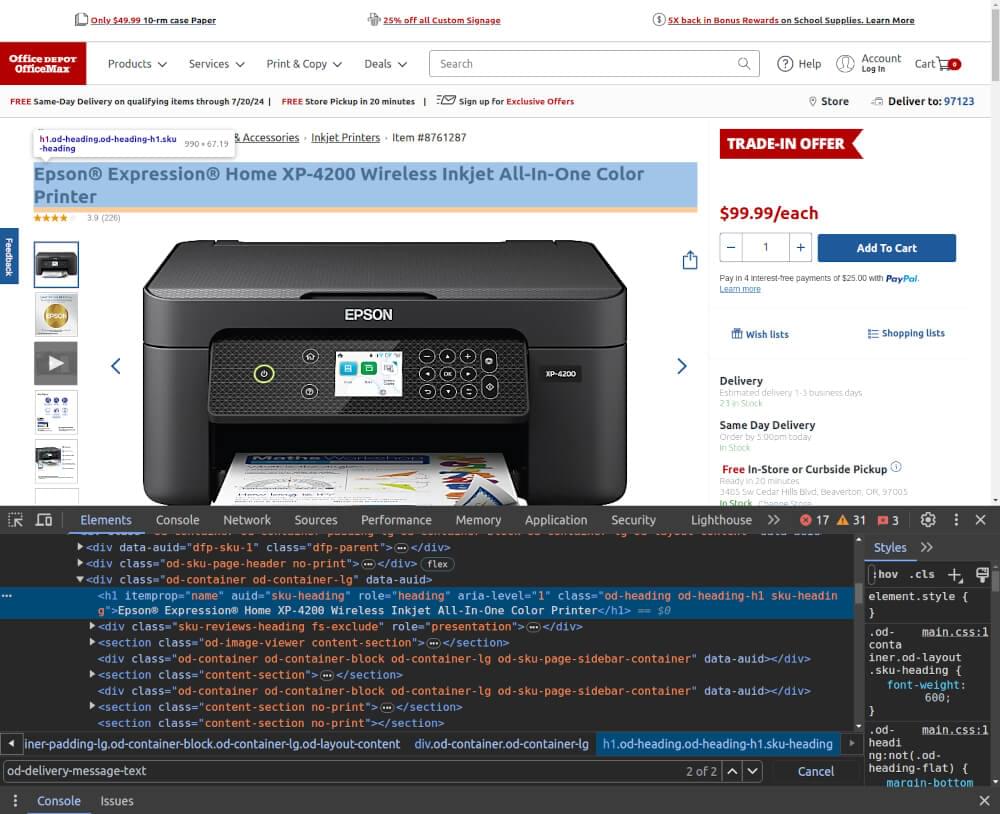

To scrape a product page from Office Depot, you need to identify and extract specific details such as the product title, price, description, specs and availability. Here’s how you can do that.

As an example, we’ll use the product page for the Epson Expression Home XP-4200. First, inspect the product page in your browser to locate the CSS selectors for the details you need.

Let’s create two functions, one to fetch the page content and another to extract the product details using the identified CSS selectors.

import requests
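The extraction half could be sketched as follows. It takes already-parsed HTML, so it can be paired with whatever fetch function you use. All selectors (`h1.product-title`, `table.specs tr`, and the rest) are placeholders assumed for illustration rather than Office Depot’s real markup, and the specs are assumed to sit in a two-column table.

```python
from bs4 import BeautifulSoup


def extract_product_details(soup):
    """Pull title, price, description, availability, and specs from a product page."""

    def text_of(selector):
        # Stripped text of the first match, or None when the element is missing.
        node = soup.select_one(selector)
        return node.get_text(strip=True) if node else None

    # Read the specs table as name/value pairs, skipping rows without two cells.
    specs = {}
    for row in soup.select("table.specs tr"):
        cells = row.find_all(["th", "td"])
        if len(cells) == 2:
            specs[cells[0].get_text(strip=True)] = cells[1].get_text(strip=True)

    return {
        "title": text_of("h1.product-title"),
        "price": text_of("span.price"),
        "description": text_of("div.product-description"),
        "availability": text_of("div.availability"),
        "specs": specs,
    }
```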

Storing Scraped Data

After extracting the product details, you need to store the data in a structured format, such as a CSV file or a database. Here, we’ll demonstrate storing the data in a CSV file using pandas.

import pandas as pd
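A product record often contains nested data, such as a dictionary of specs, which does not map cleanly onto a single CSV cell. One possible approach, sketched here under the assumption that the record is a dictionary with an optional `specs` key, is to serialize the nested part as JSON before writing. The function name `save_product` is a placeholder.

```python
import json

import pandas as pd


def save_product(product, filename="product_data.csv"):
    """Write one product dict to CSV, storing nested specs as a JSON string."""
    row = dict(product)  # copy so the caller's dict is left unchanged
    row["specs"] = json.dumps(row.get("specs") or {}, sort_keys=True)
    pd.DataFrame([row]).to_csv(filename, index=False)
    return row
```

When reading the file back, `json.loads` on the `specs` column restores the original dictionary.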

Complete Code

Below is the complete code for scraping a product page from Office Depot, extracting the necessary details, and storing the data using pandas.

import requests

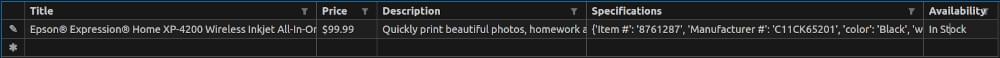

product_data.csv file Snapshot:

This code gives you a solid base for scraping product details from Office Depot product page, extracting various elements and storing the data.

Handling Anti-Scraping Measures with Crawlbase

When scraping websites like Office Depot, you may encounter anti-scraping measures such as IP blocking, CAPTCHA challenges, and rate limiting. Using Crawlbase’s Crawling API will help you navigate these obstacles.

Why Use Crawlbase?

Crawlbase helps you get past anti-scraping measures by providing rotating proxies and handling the restrictions that websites place on automated access. This keeps your scraping tasks from being interrupted and lets you fetch data efficiently without getting blocked.

Integrating Crawlbase with Your Scraper

To integrate Crawlbase with your scraping script, follow these steps:

Set Up Crawlbase: First, sign up for Crawlbase and obtain your API token.

Modify Your Scraping Script: Use Crawlbase’s Crawling API to fetch web pages. Replace direct HTTP requests with Crawlbase’s API calls in your code.

Update Your Fetch Function: Modify your page-fetching function to use Crawlbase for requests. Make sure your function handles responses and extracts content correctly. You can integrate Crawlbase into your existing scraping script by replacing your standard HTTP request method with Crawlbase’s API call. Here’s an example:

import requests
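A hedged sketch of such a fetch function is shown below. It assumes the response shape used in Crawlbase’s published Python examples, a dictionary with a `pc_status` header and a bytes `body`; confirm this against the current library documentation. The names `make_crawlbase_client` and `fetch_html` are placeholders chosen for this example.

```python
def make_crawlbase_client(token):
    """Create a Crawling API client (requires `pip install crawlbase`)."""
    # Imported inside the function so this module still loads without the package.
    from crawlbase import CrawlingAPI

    return CrawlingAPI({"token": token})


def fetch_html(api, url):
    """Return the page HTML as text, or None if Crawlbase reports a failure."""
    response = api.get(url)
    # pc_status is assumed to hold the Crawlbase status code as a string.
    if response["headers"].get("pc_status") != "200":
        return None
    return response["body"].decode("utf-8")
```

To use it, create the client once with your API token and pass it to `fetch_html` wherever the script previously called `requests.get`; the returned HTML can then be parsed with BeautifulSoup as before.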

By integrating Crawlbase into your scraping process, you can manage anti-scraping measures and get data from Office Depot and other sites consistently and efficiently.

Scrape Office Depot with Crawlbase

Scraping Office Depot with Python is a great way to get insights and data for various applications, such as price monitoring, market analysis, and inventory tracking. By setting up a robust Python scraping environment and using libraries like Requests and BeautifulSoup, you can easily extract the necessary data from both search results pages and product pages.

Use Crawlbase’s Crawling API to get past IP blocking and CAPTCHAs and keep your scraper running smoothly.

If you’d like to read more guides like this one, we recommend the following:

📜 How to Scrape Best Buy Product Data

📜 How to Scrape Stackoverflow

📜 How to Scrape Target.com

📜 How to Scrape AliExpress Search Page

Should you have questions or concerns about Crawlbase, feel free to contact the support team.

Frequently Asked Questions

Q. Is it legal to scrape Office Depot?

Web scraping can be legal depending on the website’s terms of service, the data being scraped, and how the data is used. Review Office Depot’s terms of service and ensure compliance. Scraping for personal use or public data is less likely to be an issue, while scraping for commercial use without permission can lead to legal problems. It’s advisable to consult a lawyer before engaging in extensive web scraping.

Q. Why should I use rotating proxies when scraping eCommerce websites like Office Depot?

Using rotating proxies when scraping eCommerce websites is key to avoiding IP blocking and access restrictions. Rotating proxies distribute your requests across multiple IP addresses, making it harder for the website to detect and block your scraper. This helps keep data collection uninterrupted and your scraper reliable. Crawlbase offers a rotating proxy service that simplifies this process, handling anti-scraping measures for you and integrating easily with your scraping scripts.

Q. How can I handle pagination while scraping Office Depot?

Handling pagination is important if you want to scrape all the data from Office Depot search results. To paginate, create a loop that goes through each page by modifying the URL with the page number parameter. This way, your scraper collects data from multiple pages, not just the first one. Use a function to fetch each page’s content and extract the required data, then combine the results into one dataset.