The eCommerce sector is highly competitive, and merchants need to monitor their competitors’ websites constantly to stay ahead. Whether you need to track prices, monitor stock levels, or gather customer reviews, having access to this data can provide valuable insights. However, many eCommerce sites have measures in place to prevent automated data scraping. This is where rotating proxies come into play.

Rotating proxies let you scrape data from websites with a much lower risk of getting blocked. Because your IP address changes with each request, your traffic looks like it comes from many different visitors rather than a single automated client, which helps you avoid detection.

In this guide, we’ll delve into the basics of rotating proxies for web scraping, explaining what they are and why they’re crucial for successfully scraping data from eCommerce websites. We’ll also provide practical instructions on how to use rotating proxies to maximize your scraping efforts.

Let’s dive in!

Table of Contents

- How Rotating Proxies Work

- Key Features of Rotating Proxies

- Choosing a Proxy Provider

- Configuring Your Scraper

- Managing IP Rotation

- Scraping Product Information

- Scraping Prices

- Scraping Reviews

- Scraping Stock Availability

- Best Practices for Using Rotating Proxies

- Troubleshooting Common Issues

- Final Thoughts

- Frequently Asked Questions

What are Rotating Proxies?

Rotating proxies are a type of proxy server setup that assigns a new IP address for each connection made to the target website. This is known as IP rotation. When you use rotating proxies, each request you send to the website comes from a different IP address, making it appear as if the requests are coming from various users around the world.

How Rotating Proxies Work

When you connect to a website using a rotating proxy, your request is routed through a pool of IP addresses. Each time you make a new request, a different IP address from this pool is used. This makes it harder for websites to detect and block your scraping activity because your requests don’t seem to be coming from a single source.
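The pool idea described above can be sketched in a few lines. This is only an illustration, not how any particular provider implements rotation: the addresses are placeholder TEST-NET values, and `PROXY_POOL` and `rotating_proxies` are hypothetical names.

```python
from itertools import cycle

# Hypothetical proxy endpoints (documentation-only TEST-NET addresses).
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def rotating_proxies(pool):
    """Return an endless iterator that walks the pool in round-robin order."""
    return cycle(pool)


rotation = rotating_proxies(PROXY_POOL)

# Each simulated request takes the next proxy, so consecutive
# requests leave from different IP addresses.
assignments = [next(rotation) for _ in range(4)]
```

After three requests the pool wraps around, so the fourth request reuses the first address. A larger pool simply lengthens the cycle.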

Key Features of Rotating Proxies

- Automatic IP Rotation: IP addresses change automatically based on predefined rules, such as after a certain number of requests or a set time period.

- Anonymity: Rotating proxies hide your real IP address, providing anonymity for your web activities.

- Reliability: By using multiple IP addresses, rotating proxies ensure continuous access to target websites without interruptions.

Rotating proxies are essential tools for anyone needing to scrape data efficiently and effectively while minimizing the risk of being detected or blocked.
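To make the "predefined rules" idea concrete, here is a minimal sketch of a rotator that switches proxies after a fixed number of requests or after a time limit, whichever comes first. The class name `RuleBasedRotator` and its parameters are assumptions made for illustration; managed services usually apply such rules on their side.

```python
import time


class RuleBasedRotator:
    """Switch to the next proxy after `max_requests` uses or `max_age` seconds."""

    def __init__(self, pool, max_requests=5, max_age=60.0, clock=time.monotonic):
        if not pool:
            raise ValueError("pool must contain at least one proxy")
        self._pool = list(pool)
        self._max_requests = max_requests
        self._max_age = max_age
        self._clock = clock  # injectable so the time rule can be tested
        self._index = 0
        self._uses = 0
        self._since = clock()

    def current(self):
        """Return the proxy for the next request, rotating first if a rule fired."""
        expired = self._clock() - self._since >= self._max_age
        exhausted = self._uses >= self._max_requests
        if expired or exhausted:
            self._index = (self._index + 1) % len(self._pool)
            self._uses = 0
            self._since = self._clock()
        self._uses += 1
        return self._pool[self._index]
```

Calling `current()` before every request is enough. The rotator keeps its own counters, so the scraping code does not need to track them.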

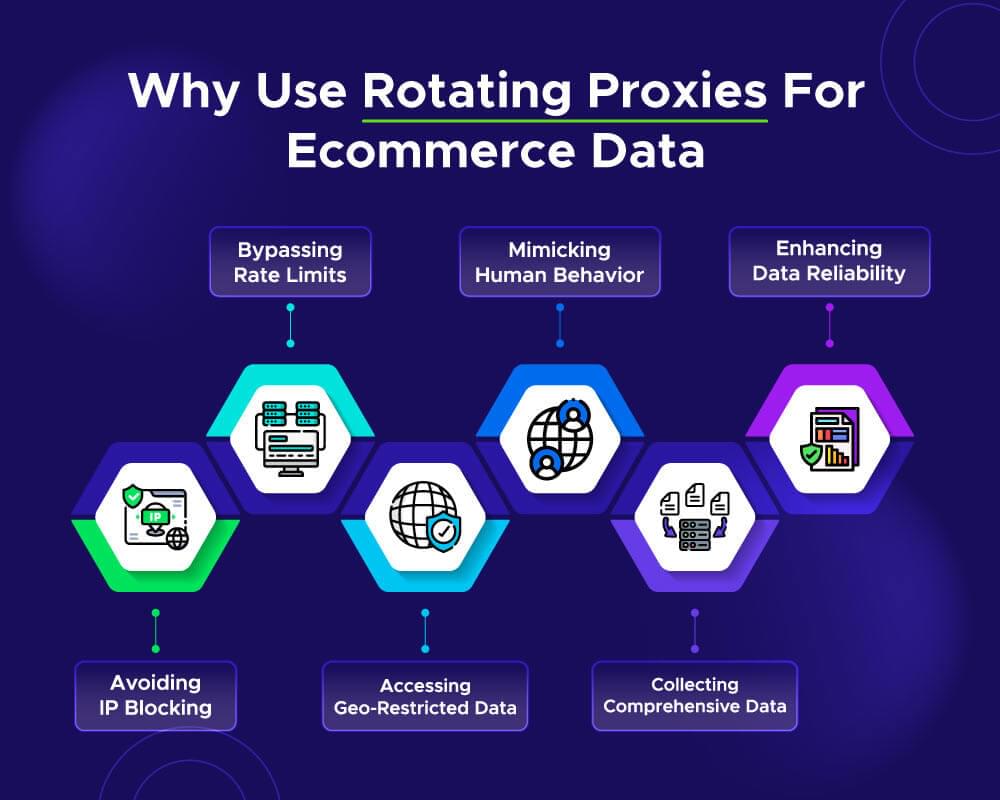

Why You Should Use Rotating Proxies for Web Scraping eCommerce Data

Using rotating proxies for eCommerce data scraping is essential for several reasons. Rotating proxies ensure that your data collection efforts are efficient, reliable, and uninterrupted. Here are the key benefits of using rotating proxies for scraping eCommerce data:

Avoiding IP Blocking

When you scrape eCommerce websites, sending too many requests from the same IP address can lead to IP blocking. Websites often have security measures to detect and block IPs that make frequent requests. By using a rotating proxy, each request comes from a different IP address, reducing the risk of being blocked.

Bypassing Rate Limits

Many eCommerce sites impose rate limits, restricting the number of requests an IP address can make within a certain period. Rotating proxies help bypass these limits by distributing requests across multiple IP addresses. This allows you to gather data more quickly without interruptions.
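As a rough illustration of distributing requests across addresses, the sketch below hands out the proxy that has been idle longest and reports how long to wait so that no single address exceeds a chosen per-IP interval. `PerProxyThrottle` and `min_interval` are hypothetical names, and the real limit of any given site is something you would have to determine yourself.

```python
class PerProxyThrottle:
    """Spread requests over a pool while spacing out the use of each proxy."""

    def __init__(self, pool, min_interval):
        # Earliest time (in seconds) at which each proxy may be used again.
        self._next_free = {proxy: 0.0 for proxy in pool}
        self._min_interval = min_interval

    def acquire(self, now):
        """Return (proxy, wait_seconds) for the proxy that is free soonest."""
        proxy = min(self._next_free, key=self._next_free.get)
        wait = max(0.0, self._next_free[proxy] - now)
        # Reserve the proxy: its next slot starts after this request's slot.
        self._next_free[proxy] = max(now, self._next_free[proxy]) + self._min_interval
        return proxy, wait
```

With two proxies and a two-second interval, the first two requests at time zero go out immediately through different proxies, and the third has to wait two seconds. Aggregate throughput therefore grows roughly in proportion to the pool size.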

Accessing Geo-Restricted Data

Some eCommerce websites display different content based on the user’s location. Rotating proxies can provide IP addresses from various regions, enabling you to access geo-restricted data. This is particularly useful for price comparison and market research, as you can see prices and products available in different locations.

Mimicking Human Behavior

Websites are designed to detect and block automated scraping activities. Using rotating proxies makes your requests appear as if they are coming from different users around the world. This mimics natural human behavior, making it less likely for your scraping activities to be detected and blocked.

Collecting Comprehensive Data

To make informed business decisions, you need comprehensive and accurate eCommerce data. Rotating proxies ensure that you can continuously scrape data from multiple sources without disruptions. This allows you to collect large volumes of data, including product details, prices, reviews, and stock availability, giving you a complete picture of the market.

Enhancing Data Reliability

Using rotating proxies improves the reliability of the data you collect. Since requests come from various IP addresses, the chances of being blocked are minimized, ensuring a steady flow of information. Reliable data is crucial for accurate analysis and decision-making in eCommerce.

Using rotating proxies is essential for anyone looking to scrape eCommerce data effectively and efficiently. By incorporating rotating proxies into your scraping strategy, you can achieve better results with fewer obstacles.

Setting Up Your Environment

To start using rotating proxies for eCommerce data scraping, you’ll need to set up your environment with the necessary tools and libraries. Here’s a step-by-step guide:

- Install Python: Make sure Python is installed on your system. You can check by running the following command in your terminal:

python --version

- Set Up a Virtual Environment: Create a virtual environment to manage your project dependencies. Navigate to your project directory and run:

python -m venv ecommerce_scraper

Activate the environment:

On Windows:

ecommerce_scraper\Scripts\activate

On macOS/Linux:

source ecommerce_scraper/bin/activate

- Install Required Libraries: Install the necessary libraries using pip:

pip install requests beautifulsoup4

- Requests: A popular library for making HTTP requests.

- BeautifulSoup4: A library for parsing HTML and extracting data from web pages.

For secure access to your proxy credentials, consider using a password manager to store and protect sensitive information like API keys and access tokens. Ensuring secure password practices can help prevent unauthorized access and keep your data safe during scraping sessions.
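One common way to keep credentials out of the script itself is to read them from environment variables at runtime. The sketch below assumes hypothetical variable names (`PROXY_USERNAME`, `PROXY_PASSWORD`, `PROXY_HOST`, `PROXY_PORT`); use whatever names and values your provider and deployment setup call for.

```python
import os
from urllib.parse import quote


def proxy_url_from_env(env=os.environ):
    """Build an authenticated proxy URL from environment variables."""
    try:
        user = env["PROXY_USERNAME"]
        host = env["PROXY_HOST"]
        port = env["PROXY_PORT"]
    except KeyError as missing:
        raise RuntimeError(f"Missing environment variable: {missing.args[0]}") from None
    password = env.get("PROXY_PASSWORD", "")

    # Percent-encode credentials so characters such as '@' or ':' in a
    # password cannot be mistaken for URL delimiters.
    credentials = quote(user, safe="")
    if password:
        credentials += ":" + quote(password, safe="")
    return f"http://{credentials}@{host}:{port}"
```

The function accepts any mapping, so it can be exercised with a plain dictionary instead of the real environment.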

Implementing Rotating Proxies

Implementing rotating proxies effectively is crucial for successful web scraping. This section will guide you through choosing a proxy provider, configuring your scraper, and managing IP rotation.

Choosing a Proxy Provider

When it comes to selecting a proxy provider, reliability and performance are key. A good proxy provider offers a large pool of IP addresses, fast connection speeds, and robust customer support. Crawlbase is known for its robust rotating proxy services.

For this guide, we recommend using Crawlbase’s Smart Proxy service. Sign up now and get your Smart Proxy credentials.

Why Choose Crawlbase Smart Proxy?

- Large IP Pool: Access to a vast number of IP addresses to minimize the risk of being blocked.

- Automatic IP Rotation: Simplifies the process by automatically rotating IP addresses.

- High Speed: Ensures fast and efficient data retrieval.

- Reliable Support: Provides assistance if you encounter any issues.

Configuring Your Scraper

Once you have chosen your proxy provider, the next step is to configure your web scraper to use these proxies. Here’s how to set up your Python scraper with Crawlbase Smart Proxy:

Set Up Proxy Credentials

Get your proxy credentials (URL, username, and password) from Crawlbase.

Configure Requests to Use Proxies

Here’s an example of configuring the requests library to use Crawlbase Smart Proxy:

import requests
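A minimal sketch of this configuration, under stated assumptions: the proxy URL is a placeholder (substitute the endpoint and credentials from your provider), and `build_proxies` and `fetch` are hypothetical helper names. The `session` argument is expected to be a `requests.Session()`, whose `get` method accepts the `proxies` and `timeout` keyword arguments used here.

```python
def build_proxies(proxy_url):
    """Return the scheme-to-proxy mapping that `requests` expects."""
    return {"http": proxy_url, "https": proxy_url}


def fetch(session, url, proxy_url, timeout=30):
    """GET `url` through the proxy and return the response body as text.

    `session` is any object with a requests-style `get` method,
    for example `requests.Session()`.
    """
    response = session.get(url, proxies=build_proxies(proxy_url), timeout=timeout)
    response.raise_for_status()  # raise on 4xx/5xx instead of returning an error page
    return response.text
```

With `requests` installed, a call would look like `fetch(requests.Session(), "https://example.com", "http://USERNAME:PASSWORD@proxy.example.com:8000")`. Passing the session in keeps the helper easy to test without network access.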

Managing IP Rotation

Managing IP rotation is essential to avoid being detected and blocked by the website you are scraping. Here’s how to handle IP rotation with Crawlbase:

Automatic IP Rotation

Crawlbase’s Smart Proxy service automatically rotates IP addresses for you. This means you don’t need to manually switch IPs during your scraping sessions.

Manual IP Rotation (Optional)

If you have multiple proxy server IP addresses and want to rotate them manually, you can do so using a list of proxies and a random selection method:

import random
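A hedged sketch of the list-plus-random-selection approach follows. The addresses are placeholders, and `pick_proxy` with its `exclude` parameter is a hypothetical helper rather than part of any library.

```python
import random

# Placeholder proxy addresses; replace with the servers you actually control.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def pick_proxy(pool, exclude=(), rng=random):
    """Pick a random proxy, skipping any in `exclude` when alternatives exist."""
    candidates = [proxy for proxy in pool if proxy not in exclude]
    if not candidates:
        candidates = list(pool)  # everything was excluded, so fall back to the full pool
    proxy = rng.choice(candidates)
    # Same mapping shape that requests accepts via its `proxies` argument.
    return {"http": proxy, "https": proxy}
```

The returned mapping can be passed as `proxies=` to `requests.get`. Adding a proxy that just failed to `exclude` avoids retrying through the same address immediately.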

By following these steps, you will be able to implement rotating proxies effectively in your web scraping projects. This ensures you can gather eCommerce data efficiently while minimizing the risk of being blocked.

Extracting eCommerce Data

Extracting eCommerce data from websites like Amazon can provide valuable insights for price comparison, market research, and competitive analysis. In this section, we will cover how to scrape product information, prices, reviews, and stock availability using rotating proxies. For our example, we will use an Amazon product page.

Scraping Product Information

Scraping product information is essential for gathering details such as the product name, description, and specifications. Use Crawlbase Smart Proxy for IP rotation to avoid getting blocked by Amazon. Here’s how to do it:

import requests
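As an illustration of the parsing step only, the sketch below pulls text out of elements by their `id`. It uses the standard library's `html.parser` in place of BeautifulSoup so that it runs without extra installs; with BeautifulSoup the equivalent lookup would be `soup.find(id="productTitle")`. The element ids `productTitle` and `productDescription` are assumptions about the page markup and may change, and `TextById` and `parse_product_info` are hypothetical names.

```python
from html.parser import HTMLParser


class TextById(HTMLParser):
    """Collect the text inside elements whose id is in `wanted_ids`."""

    VOID_TAGS = {"br", "img", "input", "hr", "meta", "link"}

    def __init__(self, wanted_ids):
        super().__init__()
        self._wanted = set(wanted_ids)
        self._active_id = None  # id of the element currently being captured
        self._depth = 0         # nesting depth inside that element
        self.found = {}

    def handle_starttag(self, tag, attrs):
        if tag in self.VOID_TAGS:
            return  # void elements have no closing tag, so they do not nest
        if self._active_id is not None:
            self._depth += 1
            return
        element_id = dict(attrs).get("id")
        if element_id in self._wanted:
            self._active_id = element_id
            self._depth = 1
            self.found.setdefault(element_id, [])

    def handle_endtag(self, tag):
        if self._active_id is None or tag in self.VOID_TAGS:
            return
        self._depth -= 1
        if self._depth == 0:
            self._active_id = None

    def handle_data(self, data):
        if self._active_id is not None:
            self.found[self._active_id].append(data)


def parse_product_info(html):
    """Return {element id: whitespace-normalized text} for the assumed ids."""
    parser = TextById({"productTitle", "productDescription"})
    parser.feed(html)
    parser.close()
    return {key: " ".join(" ".join(parts).split()) for key, parts in parser.found.items()}
```

The HTML passed to `parse_product_info` would be the body of a response fetched through the proxy. Ids that are missing from the page are simply absent from the result.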

Scraping Prices

Scraping prices allows you to monitor pricing trends and competitive pricing strategies.

def scrape_price(url):
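Once the price text has been located on the page, it usually needs converting into a number before it can be compared over time. The following sketch assumes US-style formatting (comma as thousands separator, dot as decimal point) and a small set of currency symbols; `parse_price` is a hypothetical helper.

```python
import re
from decimal import Decimal

# Currency symbol, optional space, then digits with optional commas and decimals.
PRICE_PATTERN = re.compile(r"(?P<symbol>[$€£])\s*(?P<amount>\d[\d,]*(?:\.\d+)?)")


def parse_price(text):
    """Return (currency_symbol, Decimal amount), or None if no price is found."""
    match = PRICE_PATTERN.search(text)
    if match is None:
        return None
    # Drop thousands separators before converting; Decimal avoids float rounding.
    amount = Decimal(match.group("amount").replace(",", ""))
    return match.group("symbol"), amount
```

Using `Decimal` rather than `float` keeps amounts exact, which matters when you later compute price differences. Formats such as `1.299,99` are not handled by this pattern.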

Scraping Reviews

Scraping reviews helps you understand customer sentiment and product performance.

def scrape_reviews(url):
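After review elements have been extracted, a quick summary of the star ratings gives a first signal of customer sentiment. This sketch assumes rating strings of the form "4.0 out of 5 stars", which is an assumption about the scraped text, and `summarize_ratings` is a hypothetical helper.

```python
import re
from statistics import mean

# Assumed rating format, e.g. "4.0 out of 5 stars".
RATING_PATTERN = re.compile(r"(\d(?:\.\d)?) out of 5 stars")


def summarize_ratings(rating_texts):
    """Return the number of parsable ratings and their average."""
    ratings = []
    for text in rating_texts:
        match = RATING_PATTERN.search(text)
        if match:
            ratings.append(float(match.group(1)))
    if not ratings:
        return {"count": 0, "average": None}
    return {"count": len(ratings), "average": round(mean(ratings), 2)}
```

Strings that do not match the assumed format are skipped rather than treated as errors, since scraped pages often contain reviews without a visible rating.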

Scraping Stock Availability

Scraping stock availability helps you track product inventory levels and availability status.

def scrape_stock_status(url):
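Availability text tends to be free-form, so it helps to map it onto a few fixed states before storing it. The phrases matched below ("in stock", "only N left", "unavailable") are assumptions about typical wording, and `classify_availability` is a hypothetical helper.

```python
import re


def classify_availability(text):
    """Map free-form availability text to a coarse stock status."""
    normalized = " ".join(text.lower().split())
    if not normalized:
        return "unknown"
    # Check negative phrases first so "out of stock" is not read as "in stock".
    if "out of stock" in normalized or "unavailable" in normalized:
        return "out_of_stock"
    if re.search(r"only \d+ left", normalized):
        return "low_stock"
    if "in stock" in normalized:
        return "in_stock"
    return "unknown"
```

Returning `"unknown"` for unrecognized text makes unexpected page changes visible in your data instead of silently counting them as in stock.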

By following these steps, you can efficiently extract eCommerce data from Amazon using rotating proxies. This approach helps ensure continuous access to data while minimizing the risk of getting blocked. Whether you’re scraping product information, prices, reviews, or stock availability, using rotating proxies and IP rotation is key to successful and scalable web scraping.

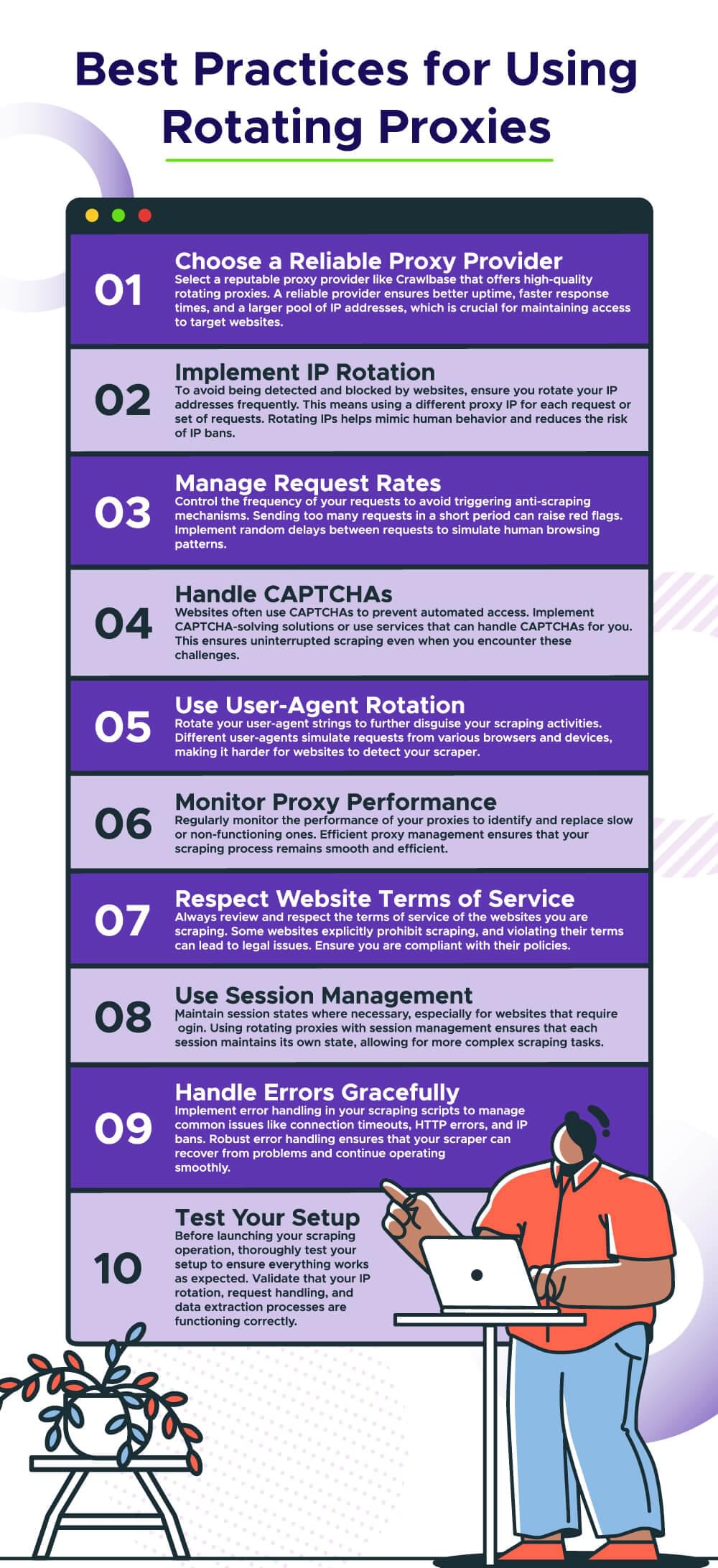

Best Practices for Using Rotating Proxies

Using rotating proxies effectively requires following best practices to ensure smooth and successful web scraping. Here are some essential tips for optimizing your use of rotating proxies:

By following these best practices, you can effectively use rotating proxies to scrape eCommerce data. This approach helps you avoid detection, manage IP rotation efficiently, and ensure a seamless and scalable scraping operation. Whether you are gathering product information, prices, reviews, or stock availability, using rotating proxies wisely is key to successful web scraping.

Troubleshooting Common Issues

While using rotating proxies for web scraping, you may encounter some common issues that can disrupt your scraping process. Here are solutions to troubleshoot these issues effectively:

IP Blocking

Issue: Some websites may block your proxy IP addresses, preventing access to their content.

Solution: Rotate your proxy IPs frequently to avoid detection and blocking. Use a large pool of diverse IP addresses to minimize the risk of being blocked.

CAPTCHA Challenges

Issue: Websites may present CAPTCHA challenges to verify if the user is human, disrupting automated scraping processes.

Solution: Implement CAPTCHA-solving services like Crawlbase or tools that can handle CAPTCHAs automatically. Ensure seamless CAPTCHA resolution to continue scraping without interruptions.

Slow Response Times

Issue: Slow response times from proxies can slow down your scraping process and impact efficiency.

Solution: Monitor the performance of your proxies and replace slow or unreliable ones. Use proxy providers that offer fast and reliable connections to minimize delays.
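One simple way to monitor proxies is to record how long each request takes and filter out addresses whose recent average is too slow. `LatencyTracker`, the window size, and the threshold are all hypothetical choices for this sketch.

```python
from collections import defaultdict, deque
from statistics import mean


class LatencyTracker:
    """Keep a rolling window of response times per proxy."""

    def __init__(self, window=20):
        # Each proxy keeps only its most recent `window` samples.
        self._samples = defaultdict(lambda: deque(maxlen=window))

    def record(self, proxy, seconds):
        self._samples[proxy].append(seconds)

    def healthy(self, pool, max_average):
        """Return proxies whose recent average latency is within the limit.

        Proxies without any samples yet are kept so they get a chance to be tried.
        """
        keep = []
        for proxy in pool:
            samples = self._samples.get(proxy)
            if not samples or mean(samples) <= max_average:
                keep.append(proxy)
        return keep
```

Timing each request with `time.monotonic()` before and after the call and passing the difference to `record` is enough to feed the tracker.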

Connection Timeouts

Issue: Connection timeouts occur when the proxy server fails to establish a connection with the target website.

Solution: Adjust timeout settings in your scraping scripts to allow for longer connection attempts. Implement retry mechanisms to handle connection failures gracefully.
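A retry mechanism can be sketched as a small wrapper with exponential backoff. Here `fetch` is any callable you supply that performs the request (with its own timeout) and raises on failure. The wrapper catches `OSError`, which is the base class of the built-in `TimeoutError` and `ConnectionError` and also of `requests.exceptions.RequestException`. The name `fetch_with_retries` and its defaults are assumptions.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call `fetch(url)`, retrying on network errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError as error:
            last_error = error
            if attempt < attempts - 1:
                # Wait 1x, 2x, 4x ... the base delay between attempts.
                sleep(base_delay * 2 ** attempt)
    raise last_error
```

Doubling the delay gives a struggling proxy or target time to recover. Once the attempts are used up, the last error is re-raised so the caller can decide whether to switch proxies.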

Blocked Ports or Protocols

Issue: Some proxies may have restrictions on certain ports or protocols, limiting their compatibility with certain websites.

Solution: Choose proxies that support the protocols and ports required for your scraping tasks. Verify compatibility with target websites before initiating scraping operations.

Proxy Authentication Errors

Issue: Incorrect proxy authentication credentials can lead to authentication errors and failed connections.

Solution: Double-check the authentication credentials provided by your proxy provider. Ensure that the username and password are correctly configured in your scraping scripts.

Proxy Blacklisting

Issue: Proxies may get blacklisted by websites due to abusive or suspicious behavior, leading to blocked access.

Solution: Rotate proxy IPs frequently and avoid aggressive scraping behaviors to prevent blacklisting. Choose reputable proxy providers to minimize the risk of IP blacklisting.

Script Errors

Issue: Errors in your scraping scripts can cause scraping failures and disrupt the data extraction process.

Solution: Debug your scraping scripts thoroughly to identify and fix any errors. Test your scripts on smaller datasets or sample pages before scaling up to larger scraping tasks.

Compliance with Website Policies

Issue: Scraping activities may violate the terms of service of websites, leading to legal issues or IP bans.

Solution: Review and comply with the terms of service of the websites you are scraping. Respect robots.txt files and scraping guidelines to avoid legal consequences and maintain a positive reputation.
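Checking robots.txt can be automated with Python's standard `urllib.robotparser` module. The sketch below parses robots.txt content you have already downloaded; the user agent string and URLs in the usage note are placeholders, and `is_allowed` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Return True if `robots_txt` permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

For example, with the rules `User-agent: *` and `Disallow: /private/`, a URL under `/private/` is rejected while a product page elsewhere on the site is allowed. Running this check before queuing a URL keeps disallowed paths out of your crawl.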

Proxy Provider Support

Issue: Lack of support from your proxy provider can hinder troubleshooting efforts and delay issue resolution.

Solution: Choose proxy providers that offer responsive customer support and technical assistance. Reach out to your proxy provider for help in troubleshooting issues and resolving technical challenges.

By addressing these common issues proactively and implementing effective solutions, you can ensure a smooth and successful experience when using rotating proxies for web scraping. Stay vigilant, monitor your scraping processes regularly, and be prepared to troubleshoot and resolve any issues that arise.

Final Thoughts

Using rotating proxies is a powerful strategy for scraping eCommerce data. By rotating IP addresses, you can avoid detection, reduce the risk of being blocked, and gather data more efficiently. This method is particularly useful for heavily protected websites like Amazon, where repeated requests from a single static IP address are easily detected and blocked. Implementing rotating proxies involves choosing a reliable proxy provider, configuring your scraper correctly, and managing IP rotation effectively.

Rotating proxies enhance your scraping capabilities, but staying compliant with web scraping guidelines still depends on how you scrape. Invest in reliable proxy services like Crawlbase, follow best practices, and enjoy seamless eCommerce data scraping.

If you are interested in learning more about web scraping using proxies, read the following guides:

📜 Scraping Instagram Using Smart Proxy

📜 Scraping Amazon ASIN at Scale Using Smart Proxy

📜 How to Use AliExpress Proxy for Data Scraping

📜 Scraping Walmart with Firefox Selenium and Smart Proxy

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions

Q. How do you use rotating proxies?

To use rotating proxies, you need to integrate a proxy service into your web scraping script. This service will automatically switch between different IP addresses, making your requests appear as if they are coming from multiple locations. This helps avoid detection and blocking. Most proxy providers offer an API that you can easily integrate with your scraping tools.

Q. What are rotating proxies?

Rotating proxies are proxy servers that automatically change the IP address after each request or a set interval. This rotation helps in distributing the web requests across multiple IP addresses, reducing the chance of getting blocked by websites. Rotating proxies are crucial for scraping data from sites with strict anti-scraping measures.

Q. How do you rotate proxies in Selenium with Python?

To rotate proxies in Selenium using Python, you can use a list of proxy IPs and configure Selenium to use a new proxy for each browser instance. Here’s a simple example:

from selenium import webdriver

This script sets up a proxy for Selenium WebDriver and rotates it by selecting a new proxy IP from the list for each session.
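A minimal sketch of per-session rotation, assuming Chrome and Selenium 4: each new browser instance is started with Chrome's `--proxy-server` flag pointing at a randomly chosen proxy. The helper names are hypothetical, and the proxies are assumed to be plain `host:port` endpoints, since as far as we know this flag does not carry a username and password.

```python
import random


def chrome_proxy_argument(proxy):
    """Return the Chrome command-line flag that routes traffic through `proxy`."""
    return f"--proxy-server={proxy}"


def new_driver(proxy_pool, rng=random):
    """Start a Chrome session that uses a randomly chosen proxy from the pool."""
    # Imported lazily so the helper above works without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument(chrome_proxy_argument(rng.choice(proxy_pool)))
    return webdriver.Chrome(options=options)
```

Calling `new_driver(pool)` for each scraping session and `driver.quit()` afterwards gives every session its own exit IP. Authenticated proxies generally need a different mechanism, such as IP allow-listing with the provider.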