Web scraping sometimes involves extracting data from dynamic content. This can be a daunting task, especially for non-technical professionals. Scraping dynamic content also demands more precision than traditional web scraping, because most dynamic content is loaded through JavaScript, which makes the information harder to pull.

Libraries like Selenium and Beautiful Soup can scrape dynamic content efficiently, and Crawlbase offers crawling solutions that handle dynamic content as a service. This article will teach you how to scrape dynamic content, particularly JS-rendered pages, using Selenium and Beautiful Soup.

Here’s a detailed breakdown of what we’ll cover:

Table Of Contents

- What is Dynamic Content?

- Examples of JS Rendered Pages

- Overview of Selenium

- Overview of Beautiful Soup

- Installing Selenium and WebDriver

- Installing Beautiful Soup

- Launching a Browser with Selenium

- Navigating and Interacting with Web Pages

- Handling JavaScript Rendered Elements

- Integrating Beautiful Soup with Selenium

- Parsing HTML Content

- Extracting Relevant Information

- Dealing with Timeouts and Delays

- Managing Session and Cookies

- Bypassing Anti-Scraping Mechanisms

- Overview of Crawlbase Crawling API

- Benefits of Using Crawlbase

- How to Integrate Crawlbase in Your Projects

- Comparison with Selenium and Beautiful Soup

Understanding Dynamic Content

What is Dynamic Content?

For the purpose of this article, dynamic content is web content that varies based on demographic information, user interests, user behavior, time of day, and so on. It differs from static content (which stays the same for all users) because it is generated on the fly, usually with some JavaScript. Examples range from personalized product recommendations on e-commerce websites to live updates on social media feeds.

With dynamic content web pages, you are often presented with the basic structure at first. The remainder of the content is subsequently loaded by JavaScript, which gets data from a server and then displays it on the page. This is one of the reasons why conventional web scraping methods do not always do well; they can only retrieve the static HTML and often miss out on the dynamically loaded items. Tools that can interact with and execute JavaScript on the page are needed to scrape dynamic content effectively.
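To make the gap concrete, here is a minimal sketch using only Python's standard library. The two HTML strings are invented for illustration: one stands for the skeleton a plain HTTP client might receive, the other for the same page after a browser has run its scripts. The `comments` id and the helper names are assumptions, not taken from any real site.

```python
from html.parser import HTMLParser

# Hypothetical markup: roughly what a plain HTTP client receives from a
# JavaScript-driven page before any script has run.
STATIC_SHELL = """
<html><body>
  <div id="comments"></div>
  <script src="/static/app.js"></script>
</body></html>
"""

# The same (hypothetical) page after the browser has executed its scripts.
RENDERED_PAGE = """
<html><body>
  <div id="comments"><p>Nice video!</p><p>Great tips.</p></div>
</body></html>
"""


class DivTextCollector(HTMLParser):
    """Collect the text found inside the <div> that carries a given id."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.depth = 0      # nesting level of <div>s inside the target
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag != "div":
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.depth = 1

    def handle_endtag(self, tag):
        if tag == "div" and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if self.depth and text:
            self.chunks.append(text)


def text_in_div(html, target_id):
    """Return the non-empty text chunks inside <div id=target_id>."""
    collector = DivTextCollector(target_id)
    collector.feed(html)
    return collector.chunks
```

Calling `text_in_div(STATIC_SHELL, "comments")` finds nothing to extract, while the rendered version of the page yields the comment text. That difference is why a tool that executes JavaScript has to run before the parser does.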

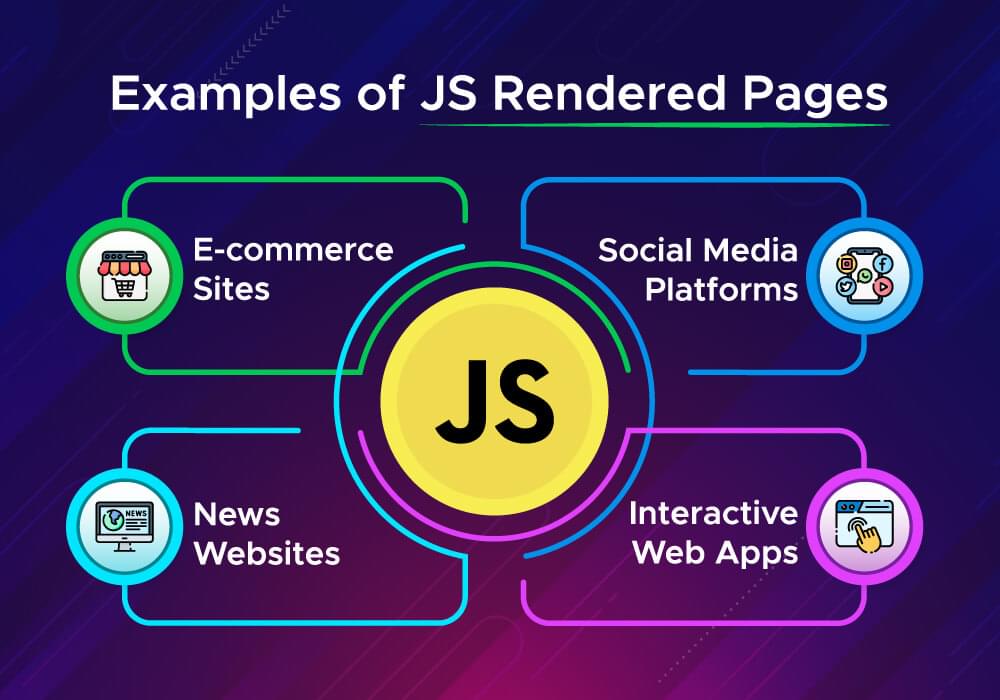

Examples of JS Rendered Pages

- E-commerce Sites: E-commerce sites, such as Amazon or eBay, use dynamic content to display product listings, prices, and reviews. Content differs for each search query and user, and changes in real time as stock is updated.

- Social Media Platforms: Platforms such as Facebook, Twitter, and Instagram rely heavily on dynamic content. JavaScript loads user feeds, comments, and likes, creating a live view for each logged-in user.

- News Websites: News websites use dynamic content to load articles, headlines, and breaking news updates, so readers get the most recent information without refreshing the page.

- Interactive Web Apps: Web apps such as Google Maps or online spreadsheets (such as Google Sheets) use dynamic content, updating maps, data, and other elements in real time based on user input.

Now that you know how dynamic content works and can recognize JS-rendered pages, you are better prepared to scrape them. You can scrape dynamic content from many sites efficiently by using Selenium for navigation and interaction and Beautiful Soup for data extraction.

Tools for Scraping Dynamic Content

When it comes to scraping dynamic content from the web, having the right tools at your disposal is essential. Two popular tools that are widely used for this purpose are Selenium and Beautiful Soup.

Overview of Selenium

Selenium is a powerful automation tool mainly used for testing web applications. However, it can do a lot more than just test, so it is a good option for dynamic web scraping. With Selenium, you can programmatically control web browsers and interact with JavaScript-rendered pages as an actual user would.

Using Selenium, you can start an actual browser, go to specific web pages, interact with elements on the page, and even run JavaScript. This makes it a perfect tool for scraping sites with a lot of non-static content that JavaScript loads after the initial DOM. Selenium supports multiple programming languages (Python, Java, JavaScript), which makes it accessible to developers with different skills.

Overview of Beautiful Soup

Beautiful Soup, on the other hand, is a Python library that lets us parse HTML and XML documents easily. Although it cannot interact with web pages like Selenium, it is much faster at extracting data from the HTML content that Selenium retrieves.

Once Selenium has finished loading a webpage and rendering the dynamic content, you can process the HTML with Beautiful Soup to get only the needed information. Beautiful Soup offers tools for navigating and searching a parsed HTML tree, including methods for finding specific elements based on their tags, attributes, or CSS selectors.

Combining Selenium for dynamic content interaction and Beautiful Soup for data extraction, you can build robust web scraping solutions capable of handling even the most complex and dynamic web pages.

Setting Up Your Environment

Before you can start scraping dynamic content from the web, you need to set up your environment by installing the tools and dependencies you will use. Ensure that Python and pip are installed on your system. Here, we will show you how to install Selenium, WebDriver, and Beautiful Soup.

Installing Selenium and WebDriver

- Install Selenium: First, you’ll need to install the Selenium library using pip, the Python package manager. Open your command line interface and run the following command:

    pip install selenium

- Download WebDriver: WebDriver is a tool used by Selenium to control web browsers. You’ll need to download the appropriate WebDriver for the browser you intend to automate. You can download WebDriver here.

Note: Starting with Selenium 4.6.0, Selenium Manager is built in and will automatically download the necessary drivers without any prompts. For example, on Mac or Linux, if the drivers are not found in the PATH, they will be downloaded to the ~/.cache/selenium folder.

Installing Beautiful Soup

Beautiful Soup can be installed using pip just like Selenium. Run the following command in your command line interface:

    pip install beautifulsoup4

With Selenium and WebDriver installed, you’ll be able to automate web browsers and interact with dynamic content. Similarly, Beautiful Soup will enable you to parse HTML and extract data from web pages. Once your environment is set up, you’ll be ready to dive into scraping dynamic content using these powerful tools.

Using Selenium for Dynamic Content

Selenium is a multipurpose tool that lets you interact with a browser and grab the data you require, which makes it ideal for scraping dynamic content. This section covers how to use Selenium to drive the browser: launching it, navigating web pages, and handling JavaScript-rendered elements.

Launching a Browser with Selenium

To start scraping dynamic content with Selenium, you need to launch a web browser first. Selenium supports multiple browsers, including Chrome, Firefox, and Safari. Here’s how you can launch a Chrome browser using Selenium in Python:

    from selenium import webdriver
    driver = webdriver.Chrome()
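For scraping jobs you will often want to control how the browser starts. The sketch below is one possible setup, not the only one: the helper names `chrome_arguments` and `launch_chrome` and the window size are illustrative choices, and `--headless=new` assumes a reasonably recent Chrome.

```python
def chrome_arguments(headless=True):
    """Return command-line switches for Chrome (illustrative choices)."""
    args = ["--window-size=1920,1080"]
    if headless:
        args.append("--headless=new")  # run without opening a window
    return args


def launch_chrome(headless=True):
    """Start Chrome with the switches above and return the WebDriver."""
    # Imported here so chrome_arguments() stays usable without Selenium.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in chrome_arguments(headless):
        options.add_argument(arg)
    return webdriver.Chrome(options=options)
```

A typical session calls `driver = launch_chrome()`, does its work, and ends with `driver.quit()` so the browser process does not linger.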

Navigating and Interacting with Web Pages

Once you’ve launched a browser with Selenium, you can navigate to web pages and interact with their elements. Here’s how you can navigate to a webpage and interact with elements like buttons, forms, and links:

    # Navigate to a webpage
    driver.get("https://example.com")
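As a rough sketch of a complete interaction, the helper below opens a page, types into a field, and clicks a button. The function name `fill_and_submit` is hypothetical, and the locators are passed in by the caller because they depend entirely on the target page.

```python
def fill_and_submit(driver, url, field_locator, text, button_locator):
    """Open `url`, type `text` into one element, then click another.

    Locators are (strategy, value) tuples such as (By.NAME, "q").
    """
    driver.get(url)                               # load the page
    field = driver.find_element(*field_locator)   # e.g. a search box
    field.clear()
    field.send_keys(text)
    driver.find_element(*button_locator).click()  # e.g. a submit button
    return driver.current_url                     # where the click led
```

With a real driver, a call might look like `fill_and_submit(driver, "https://example.com", (By.NAME, "q"), "shoes", (By.CSS_SELECTOR, "button[type=submit]"))`, where `By` comes from `selenium.webdriver.common.by` and both locators are placeholders.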

Handling JavaScript Rendered Elements

One of the key advantages of Selenium is its ability to handle JavaScript rendered elements. This allows you to interact with dynamic content that is loaded after the initial page load. Here’s how you can wait for a specific element to appear on the page before interacting with it:

    from selenium.webdriver.common.by import By
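One common way to do this is Selenium's `WebDriverWait` combined with an expected condition. The wrapper below is a small sketch; the name `wait_for_element` and the 10-second default are assumptions chosen for illustration.

```python
def wait_for_element(driver, locator, timeout=10):
    """Block until `locator` matches an element, then return that element.

    Raises selenium.common.exceptions.TimeoutException if nothing matches
    within `timeout` seconds.
    """
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    wait = WebDriverWait(driver, timeout)
    return wait.until(EC.presence_of_element_located(locator))
```

A call such as `wait_for_element(driver, (By.ID, "comments"))` returns as soon as the element is in the DOM, so the script does not sleep longer than it needs to. The `comments` id is a placeholder.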

In the next section, we’ll explore how to integrate Beautiful Soup with Selenium for data extraction from JS rendered pages.

Extracting Data with Beautiful Soup

Beautiful Soup is a Python library that excels at parsing HTML and extracting data from web pages. When used with Selenium, it becomes a powerful tool for scraping dynamic content. In this section, we’ll explore how to integrate Beautiful Soup with Selenium, parse HTML content, and extract relevant information from JS-rendered pages.

Integrating Beautiful Soup with Selenium

Integrating Beautiful Soup with Selenium is straightforward and allows you to leverage the strengths of both libraries. You can use Beautiful Soup to parse the HTML content of web pages obtained using Selenium. Let’s take a TikTok video URL as an example and scrape the comments, which are loaded dynamically.

    from selenium import webdriver
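The hand-off point between the two libraries is `driver.page_source`, which returns the HTML as the browser currently sees it. A minimal sketch of that step follows; `rendered_html` and its `ready` parameter are hypothetical names, and any wait condition you pass depends on the page being scraped.

```python
def rendered_html(driver, url, ready=None, timeout=10):
    """Load `url` in the browser and return the HTML after rendering.

    `ready` is an optional wait condition (any callable that takes the
    driver), for example EC.presence_of_element_located(...).
    """
    driver.get(url)
    if ready is not None:
        from selenium.webdriver.support.ui import WebDriverWait
        WebDriverWait(driver, timeout).until(ready)
    return driver.page_source  # DOM serialized as the browser sees it now
```

The returned string can be passed straight to `BeautifulSoup(html, "html.parser")`.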

Parsing HTML Content

Now that you have the page source, use Beautiful Soup to parse the HTML content:

    # Parse the HTML content with Beautiful Soup
    soup = BeautifulSoup(driver.page_source, "html.parser")

Extracting Relevant Information

To extract comments from the TikTok video, identify the HTML structure of the comments section. Inspect the page to find the relevant tags and classes. In the example below, we have used the latest selectors available at the time of writing this blog.

    # Scrape comments listing
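Because the site's markup changes often, treat the following as a sketch of the extraction step rather than working TikTok code. The selector in `COMMENT_SELECTOR` is a made-up placeholder, and `clean_text` and `extract_comments` are illustrative helper names.

```python
import re

# Hypothetical selector: inspect the live page to find the current one.
COMMENT_SELECTOR = "div.comment-item span.comment-text"


def clean_text(raw):
    """Collapse runs of whitespace and trim both ends."""
    return re.sub(r"\s+", " ", raw).strip()


def extract_comments(html, selector=COMMENT_SELECTOR):
    """Return the cleaned text of every element matching `selector`."""
    # Third-party dependency: pip install beautifulsoup4
    from bs4 import BeautifulSoup

    soup = BeautifulSoup(html, "html.parser")
    texts = (clean_text(node.get_text()) for node in soup.select(selector))
    return [text for text in texts if text]
```

Keeping the selector in one constant means that when the page layout changes, only that line needs updating.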

In the next section, we will talk about some common issues people face while scraping dynamic content.

Handling Common Issues

While scraping dynamic content from web pages, you may encounter a number of challenges that slow down your scraping activities. In this section, we will cover some of the common problems concerning timeouts and latency, session and cookie management, and overcoming anti-scraping mechanisms.

Dealing with Timeouts and Delays

Dynamic content often requires waiting for JavaScript to load elements on the page. If your scraper doesn’t wait long enough, it might miss important data.

Implicit Waits: Selenium provides implicit waits to set a default waiting time for all elements.

    driver.implicitly_wait(10)  # Wait up to 10 seconds for elements to appear

Explicit Waits: For more control, use explicit waits to wait for specific conditions.

    from selenium.webdriver.common.by import By
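Explicit waits also accept your own conditions: any callable that takes the driver and returns a truthy value once the page is ready. The sketch below waits until a minimum number of elements has loaded and falls back to an empty list on timeout. The names `at_least` and `wait_for_items` and the 15-second default are illustrative.

```python
def at_least(locator, count):
    """Build a wait condition: true once `count` elements match `locator`."""
    def condition(driver):
        elements = driver.find_elements(*locator)
        # WebDriverWait keeps polling while the condition returns a falsy value
        return elements if len(elements) >= count else False
    return condition


def wait_for_items(driver, locator, count, timeout=15):
    """Return the matched elements, or [] if they never show up in time."""
    from selenium.common.exceptions import TimeoutException
    from selenium.webdriver.support.ui import WebDriverWait

    try:
        return WebDriverWait(driver, timeout).until(at_least(locator, count))
    except TimeoutException:
        return []
```

Returning an empty list instead of raising lets a long scraping run skip a slow page and continue with the next one.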

Managing Session and Cookies

Websites often use sessions and cookies to keep track of users. Managing these can be crucial for scraping dynamic content, especially if you need to log in or maintain a session.

Storing Cookies: After logging in, save the cookies to use them in subsequent requests.

    cookies = driver.get_cookies()
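To reuse a session across runs, the cookies have to be written somewhere. Here is a small sketch that stores them as JSON; the helper name `save_cookies` and the file path are assumptions.

```python
import json
from pathlib import Path


def save_cookies(cookies, path):
    """Write a list of cookie dicts (as returned by get_cookies) to JSON."""
    Path(path).write_text(json.dumps(cookies, indent=2), encoding="utf-8")
    return len(cookies)  # number of cookies written
```

After logging in, `save_cookies(driver.get_cookies(), "cookies.json")` captures the session. Treat that file like a password, since anyone holding it can reuse the login.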

Loading Cookies: Before making a request, load the cookies to maintain the session.

    for cookie in cookies:
        driver.add_cookie(cookie)
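One detail is easy to miss: Selenium only accepts a cookie for the domain that is currently open, so the page has to be loaded before the cookies are added. The sketch below assumes the cookies were saved earlier as a JSON list of dicts; `restore_cookies` is a hypothetical helper name.

```python
import json
from pathlib import Path


def restore_cookies(driver, url, path):
    """Load cookies saved as a JSON list and replay them into `driver`."""
    cookies = json.loads(Path(path).read_text(encoding="utf-8"))
    driver.get(url)              # a cookie can only be set for the open domain
    for cookie in cookies:
        driver.add_cookie(cookie)
    driver.refresh()             # reload so the page picks up the session
    return len(cookies)
```

If the site still shows a login page after the refresh, the saved cookies have probably expired and the login step needs to run again.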

Bypassing Anti-Scraping Mechanisms

Many websites employ anti-scraping mechanisms to prevent automated access. Here are some strategies to bypass these measures:

Randomizing User-Agent: Change the User-Agent header to mimic different browsers.

    from selenium import webdriver
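A simple way to vary the header is to pick a User-Agent at random each time a browser is launched. In this sketch the strings in `USER_AGENTS` are examples only and would need to be kept current, and the function names are illustrative. Chrome's `--user-agent` switch is used to apply the value.

```python
import random

# Example strings only; keep such a list current in real use.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
]


def user_agent_argument(rng=random):
    """Pick one User-Agent at random and format it as a Chrome switch."""
    return f"--user-agent={rng.choice(USER_AGENTS)}"


def chrome_with_random_user_agent():
    """Launch Chrome with a randomly chosen User-Agent header."""
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument(user_agent_argument())
    return webdriver.Chrome(options=options)
```

The User-Agent is fixed for the lifetime of a browser instance, so rotating it means starting a new driver.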

Using Proxies: Rotate IP addresses using proxies to avoid detection.

    chrome_options = webdriver.ChromeOptions()
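Rotation can be as simple as cycling through a list and passing the next address to Chrome's `--proxy-server` switch. The addresses below are placeholders from a reserved documentation range, not working proxies, and the helper names are assumptions.

```python
from itertools import cycle

# Placeholder addresses from the documentation range (RFC 5737).
PROXIES = ["203.0.113.10:8080", "203.0.113.11:8080", "203.0.113.12:8080"]
_proxy_pool = cycle(PROXIES)


def next_proxy_argument(pool=_proxy_pool):
    """Return a Chrome switch pointing at the next proxy in the rotation."""
    return f"--proxy-server=http://{next(pool)}"


def chrome_through_next_proxy():
    """Launch Chrome with its traffic routed through the next proxy."""
    from selenium import webdriver

    chrome_options = webdriver.ChromeOptions()
    chrome_options.add_argument(next_proxy_argument())
    return webdriver.Chrome(options=chrome_options)
```

This switch does not carry credentials, so proxies that require a username and password need a different setup.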

Human-like Interactions: Introduce random delays between actions to simulate human behavior.

    import time
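A small helper keeps the pauses irregular. The bounds here are arbitrary defaults and `human_pause` is a hypothetical name.

```python
import random
import time


def human_pause(low=1.0, high=3.0):
    """Sleep for a random duration between low and high seconds."""
    delay = random.uniform(low, high)
    time.sleep(delay)
    return delay  # returned so callers can log how long they waited
```

Calling `human_pause()` between clicks or page loads avoids the perfectly regular timing that makes automated traffic easy to spot.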

By understanding and addressing these common issues, you can enhance your ability to scrape dynamic content effectively. With these strategies, you can navigate the complexities of JS rendered pages and ensure your scraping efforts are successful. Next, we’ll explore an alternative approach to scraping dynamic content using the Crawlbase Crawling API.

Crawlbase Crawling API: An Alternative Approach

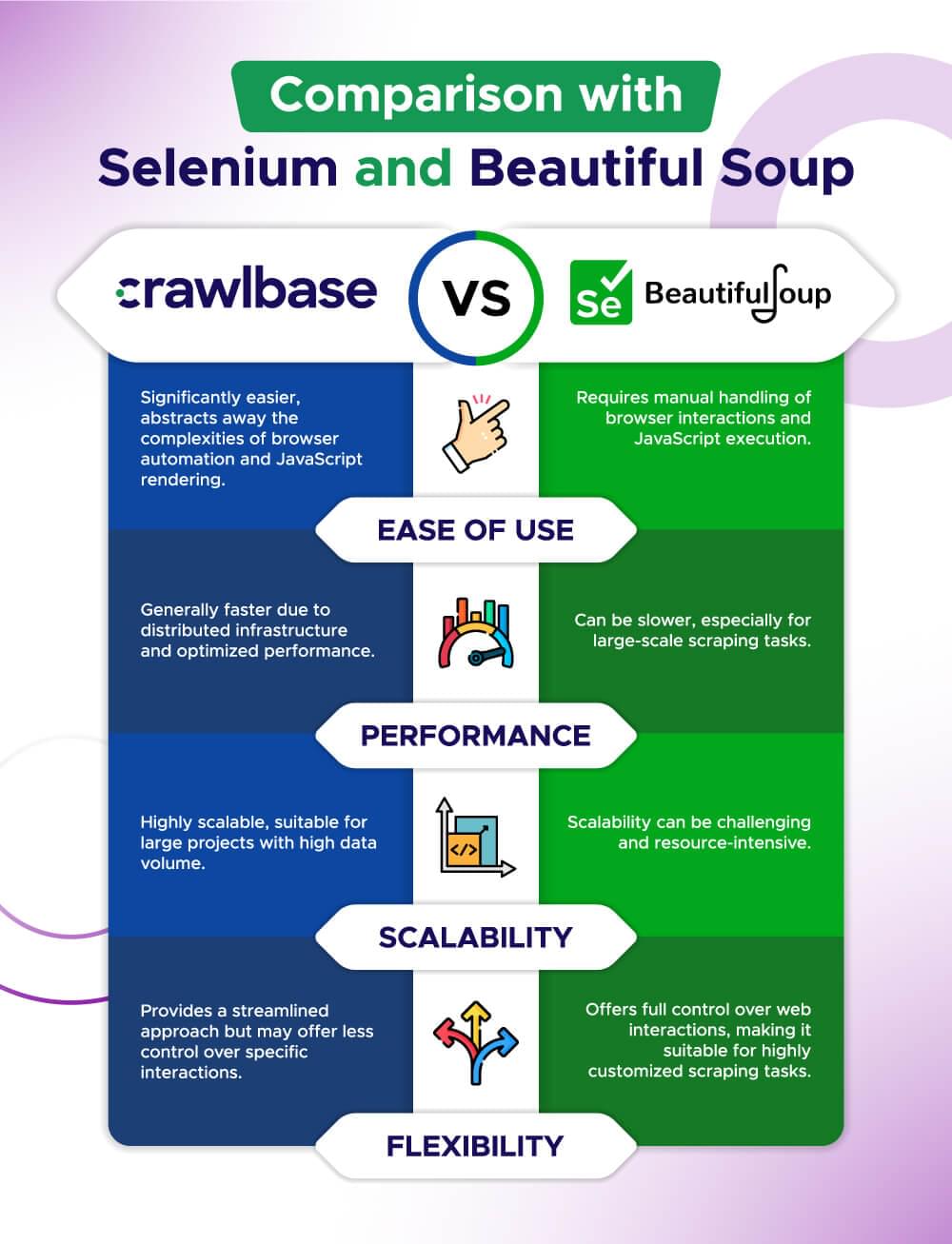

While Selenium and Beautiful Soup are powerful methods for scraping dynamic content, the Crawlbase Crawling API is a robust web scraping service designed to handle complex web pages, including those with dynamic content and JavaScript-rendered elements. It abstracts much of the complexity of scraping, allowing you to focus on extracting the data you need without dealing directly with browser automation.

Benefits of Using Crawlbase

- Ease of Use: Crawlbase simplifies the scraping process by handling JavaScript rendering, session management, and other complexities behind the scenes.

- Scalability: It can handle large-scale scraping tasks efficiently, making it suitable for projects that require data from multiple sources.

- Reliability: Crawlbase is designed to bypass common anti-scraping measures, ensuring consistent access to data.

- Speed: Crawlbase performs scraping tasks faster than traditional methods through a distributed infrastructure.

How to Integrate Crawlbase in Your Projects

Integrating Crawlbase into your project is straightforward. Here’s how you can get started:

- Sign Up and Get JS Token: First, sign up for a Crawlbase account and obtain your JS Token.

- Install the Crawlbase Library: If you haven’t already, install the crawlbase library.

    pip install crawlbase

- Use Crawlbase API: Here’s a basic example of how to use the Crawlbase Crawling API to scrape dynamic content from a webpage.

    from crawlbase import CrawlingAPI
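A hedged sketch of such a script follows. It assumes the `crawlbase` package exposes `CrawlingAPI({"token": ...})` with a `get(url, options)` method that returns a dict containing `status_code` and `body`, and that `ajax_wait`, `page_wait`, and `device` are accepted request options; check the current Crawlbase documentation before relying on any of these. The comment selector is a made-up placeholder.

```python
import json

# Hypothetical selector for the text of one comment.
COMMENT_SELECTOR = "div.comment-item span.comment-text"

# Assumed Crawling API options; the values are examples.
OPTIONS = {
    "ajax_wait": "true",   # wait for AJAX content to finish loading
    "page_wait": 5000,     # extra wait in milliseconds
    "device": "mobile",    # request the mobile rendering
}


def fetch_html_crawlbase(api, url, options=None):
    """Fetch `url` through the Crawling API; return the HTML or None."""
    response = api.get(url, options or OPTIONS)
    if response["status_code"] != 200:
        return None
    body = response["body"]
    # The body may arrive as bytes; decode it before parsing.
    return body.decode("utf-8") if isinstance(body, bytes) else body


def scrape_comment_content(node):
    """Return the text of a single comment element."""
    return node.get_text(strip=True)


def main(js_token, url):
    """Fetch the page, extract the comments, and print them as JSON."""
    from bs4 import BeautifulSoup
    from crawlbase import CrawlingAPI

    api = CrawlingAPI({"token": js_token})
    html = fetch_html_crawlbase(api, url)
    if html is None:
        print("Request failed")
        return []
    soup = BeautifulSoup(html, "html.parser")
    comments = [scrape_comment_content(n) for n in soup.select(COMMENT_SELECTOR)]
    print(json.dumps(comments, indent=2, ensure_ascii=False))
    return comments
```

Running it is a matter of calling `main("YOUR_JS_TOKEN", video_url)` with your own token and the page you want to scrape.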

The script starts by importing the necessary libraries and initializing the Crawlbase CrawlingAPI object with authentication details. It configures options to wait for AJAX content, set a user agent, and specify a mobile device. The fetch_html_crawlbase function fetches the HTML content of the TikTok page using Crawlbase and checks the response status. If successful, it returns the HTML content. The scrape_comment_content function uses BeautifulSoup to extract the text of each comment. In the main function, the script fetches and parses the HTML content, scrapes the list of comments, and prints them in JSON format. When executed, the script runs the main function to perform the scraping and display the results.

Comparison with Selenium and Beautiful Soup

The Crawlbase Crawling API simplifies the process of scraping dynamic content, especially for projects that require scalability and speed.

Final Thoughts

Scraping dynamic content can seem daunting at first, but with the right tools and techniques, it becomes a manageable task. Using Selenium for dynamic content and Beautiful Soup for parsing HTML enables you to scrape JS-rendered pages effectively and extract valuable information. Selenium allows you to navigate and interact with web pages just like a human user, making it ideal for dealing with JavaScript-rendered elements. Beautiful Soup complements this by providing a powerful and easy-to-use tool for parsing and extracting data from the HTML content that Selenium retrieves.

The Crawlbase Crawling API offers an excellent alternative for those who seek simplicity and scalability. It handles many of the complexities of scraping dynamic content, allowing you to focus on what matters most: extracting the data you need.

If you are interested in learning more about web scraping, read our following guides.

📜 cURL for Web Scraping with Python, JAVA, and PHP

📜 How to Bypass CAPTCHAS in Web Scraping

📜 How to Scrape websites with Chatgpt

📜 Scrape Tables From Websites

📜 How to Scrape Redfin Property Data

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Thank you for following along with this guide.

Frequently Asked Questions

Q. How to scrape dynamically generated content?

To scrape dynamically generated content, you need tools that can handle JavaScript-rendered pages. Selenium is a popular choice for this purpose. It allows you to automate web browsers and interact with web elements as a human would. By using Selenium, you can load the entire page, including the dynamic content, before extracting the required data.

If you want to scrape data on a large scale without getting blocked, you can consider using APIs like the Crawlbase Crawling API.

Q. How to get dynamic content in Python?

Getting dynamic content in Python can be achieved with Selenium. Launch the desired browser with the appropriate browser options. Then, navigate to the webpage, interact with the necessary elements to load the dynamic content, and finally use a library like Beautiful Soup to parse and extract the data.

Here’s a simple example:

    from selenium import webdriver
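Put together, the whole flow fits in one short function. This is a compact sketch under the assumption that Chrome is installed locally. `scrape_texts` is an illustrative name, and the URL and CSS selector you pass in depend on the page you are scraping.

```python
def scrape_texts(url, css_selector, timeout=10):
    """Render `url` in headless Chrome and return text matching a selector."""
    from bs4 import BeautifulSoup
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Wait until JavaScript has added at least one matching element
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, css_selector))
        )
        soup = BeautifulSoup(driver.page_source, "html.parser")
        return [node.get_text(strip=True) for node in soup.select(css_selector)]
    finally:
        driver.quit()  # always release the browser, even on errors
```

The `try`/`finally` block matters in practice: without it, every failed run leaves a browser process behind.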

If you don’t want to do things manually and want to scrape data at large scale, you can consider using the Crawlbase Crawling API.

Q. How to Extract Dynamic Data from a Website?

To extract dynamic data from a website, follow these steps:

- Use Selenium or Third-Party APIs: Utilize tools like Selenium / Puppeteer or third-party APIs such as the Crawlbase Crawling API to load the webpage. These tools can handle JavaScript rendering, ensuring all dynamic content is displayed.

- Retrieve the Page Source: Once the dynamic content is fully loaded, retrieve the page source. This includes all the HTML, CSS, and JavaScript that make up the rendered content.

- Parse and Extract Data: Use a parsing library or tool, such as Beautiful Soup in Python, to analyze the HTML and extract the required information. These tools allow you to locate specific elements within the HTML and pull out the relevant data.

By using tools that handle dynamic content and HTML parsing, or opting for a comprehensive solution like the Crawlbase Crawling API, you can effectively scrape dynamic content from websites that use JavaScript to render data.

Q. How to Scrape a Dynamic URL?

Scraping a dynamic URL involves retrieving data from web pages where the content changes or updates dynamically, often due to JavaScript. Here’s a simple guide:

- Set Up: Ensure you have the necessary tools, such as Selenium / Puppeteer or APIs like Crawlbase Crawling API.

- Access the URL: Use your chosen method to access the dynamic URL.

- Handle Dynamism: If the content changes based on user interaction or time, ensure your scraping method accommodates this. Tools like Selenium often have features to wait for elements to load or change.

- Extract Data: Once the dynamic content is loaded, extract the data you need using your scraping tool.

- Handle Errors: Be prepared for potential errors, such as timeouts or missing data, and handle them gracefully in your scraping code.

By following these steps, you can effectively scrape dynamic content from any URL, regardless of how it’s generated or updated.
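For the error-handling step, one common pattern is to retry a failed action a few times with a growing pause. The helper below is a generic sketch that is not tied to any scraping library; `with_retries` and its defaults are illustrative choices.

```python
import time


def with_retries(action, attempts=3, base_delay=1.0, retry_on=(Exception,)):
    """Call `action()` until it succeeds or `attempts` runs out.

    The wait doubles after each failure: base_delay, then 2x, then 4x.
    The last failure is re-raised so the caller can log it or skip the page.
    """
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))
```

With Selenium, `retry_on` would typically be narrowed to `TimeoutException` so that genuine bugs are not retried silently.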