Online presence is almost ubiquitous in today’s world. Everything is available online, even information on where to find the best products, so people tend to look online before going to a store. Even though newspapers, magazines, television, radio, and billboards are arguably still necessary, they are no longer enough on their own. A good marketing strategy alone will not keep you ahead of the competition either.

From layout to content, your site must be better than your competitors’. If it isn’t, your company will slip into obscurity, which isn’t good for business. This is where SEO (search engine optimization) comes in: SEO tools and techniques increase your online visibility. SEO starts with keywords, backlinks, and images and extends to layout and categorization (usability). Website crawlers are among these tools.

What is a Web Crawler?

All search engines use crawlers, also called spiders or robots (bots). Website crawlers scan websites, reading their content (and other information) so that search engines can index them. Site owners usually submit new or recently modified pages and sites so that search engine indexes stay up to date.

The web crawler reads internal links and backlinks to determine the site’s full scope, crawling each page one by one. Crawlers can also be configured to read only specific pages. Website crawlers regularly update search engine indexes, but they do not have unrestricted access to websites. Search engines require crawlers to follow the “rules of politeness”: servers tell crawlers which files to exclude (usually through a robots.txt file), and crawlers cannot bypass a site’s firewall.
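To make the link-reading step concrete, here is a minimal Python sketch (standard library only) of how a crawler might pull links out of a page and split them into internal links (same host) and external ones. The class and function names are hypothetical, and a real crawler would also deal with `<base>` tags and duplicate URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects absolute http(s) URLs from the <a href> tags of one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links such as "/about" against the page URL.
                absolute = urljoin(self.base_url, value)
                # Skip mailto:, javascript: and other non-web links.
                if urlparse(absolute).scheme in ("http", "https"):
                    self.links.append(absolute)


def split_links(page_url, html):
    """Return (internal, external) links; internal means same host as page_url."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    collector.close()
    host = urlparse(page_url).netloc
    internal = [u for u in collector.links if urlparse(u).netloc == host]
    external = [u for u in collector.links if urlparse(u).netloc != host]
    return internal, external
```

A crawler would typically add the internal links to its queue of pages to visit next and record the external ones separately.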

Last but not least, search engines require crawlers to use a specialized algorithm. The crawler creates search strings of operators and keywords, which are used to build the search engine’s index of websites and pages. Crawlers are also instructed to wait between successive requests to a server so that they do not slow the site down for real (human) visitors.
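The politeness rules can be sketched with Python’s built-in `urllib.robotparser`, which reads robots.txt rules, including an optional `Crawl-delay` value. In this illustrative sketch, the `PoliteScheduler` class and its one-second default delay are assumptions, not any search engine’s actual policy; the clock is injectable so the waiting logic can be tested without sleeping.

```python
import time
from urllib.robotparser import RobotFileParser


class PoliteScheduler:
    """Applies one host's robots.txt rules and spaces out requests to it."""

    def __init__(self, robots_lines, user_agent, default_delay=1.0, clock=time.monotonic):
        self.rules = RobotFileParser()
        self.rules.parse(robots_lines)  # lines of an already-downloaded robots.txt
        self.user_agent = user_agent
        # Prefer the site's own Crawl-delay; fall back to our assumed default.
        declared = self.rules.crawl_delay(user_agent)
        self.delay = float(declared) if declared is not None else default_delay
        self.clock = clock
        self.last_request = None

    def allowed(self, url):
        """True if robots.txt permits this user agent to fetch the URL."""
        return self.rules.can_fetch(self.user_agent, url)

    def wait_time(self):
        """Seconds to wait before the next request to this host."""
        if self.last_request is None:
            return 0.0
        elapsed = self.clock() - self.last_request
        return max(0.0, self.delay - elapsed)

    def record_request(self):
        """Call right after each request so the next wait is measured from it."""
        self.last_request = self.clock()
```

In a crawl loop you would fetch a URL only if `allowed(url)` is true, call `time.sleep(scheduler.wait_time())` first, and call `record_request()` afterwards.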

Benefits of Website Crawler

Search engines use indexes to create search engine results pages (SERPs). Without an index, results would be far slower: the search engine would have to examine every website and page (or other data) associated with the search term each time someone searched. Ideally, results are ordered so that the most relevant information comes first, based on signals such as internal links and backlinks.
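An inverted index is the classic data structure behind this speed-up: it maps each word to the pages containing it, so answering a query becomes a few lookups instead of a scan of every page. The toy Python version below is only a sketch (no stemming, ranking, or link analysis), and the sample URLs are made up.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-case words; a real engine would also stem and drop stop words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return pages containing every query word (a simple AND query)."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results
```

For example, after indexing `{"/a": "Best running shoes", "/b": "Running tips for beginners"}`, a search for "running" returns both pages, while "best running" returns only `/a`.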

Without a website crawler, a query typed into a search bar could take minutes (or even hours) to return results. Crawlers clearly benefit users, but what about site owners and managers?

Using the algorithm described above, the website crawler collects this information from websites and builds a database of search strings, which combine keywords and operators (and are backed up per IP address). This database is then uploaded to the search engine index to give sites a fair (but relevance-based) opportunity to appear in results.

Crawlers review business sites and include them in SERPs based on how relevant their content is, so being crawled also helps your SEO ranking. Sites (and pages) that are updated regularly have a better chance of being found online, without this affecting their current search engine rankings.

20 Best Web Crawling Tools for Efficient Data Extraction

To help you select the best tool for your needs, we have compiled a list of the 20 best web crawling tools.

1. Crawlbase

Crawlbase lets you crawl and scrape websites anonymously. Its user guide makes it easy for people without technical skills to get started. You can scrape data from both large and small sources, and Crawlbase supports a wide range of websites and platforms. Scrapers looking for high-quality data and online anonymity favor this tool.

With Crawlbase, you can scrape and crawl websites without running your own servers or infrastructure. Its Crawling API can collect data from LinkedIn, Facebook, Yahoo, Google, Instagram, and Amazon within minutes. New users get 1,000 free requests when they sign up. Because CAPTCHAs are resolved for you, users are not blocked.

The Crawlbase Crawling API makes it easy to extract data from dynamic sites through its user-friendly interface. The service is designed for safe and secure web crawling, and scrapers and crawlers remain anonymous while using it. It also protects scrapers against IP leaks, proxy failures, browser crashes, CAPTCHAs, and website bans.
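For developers, the Crawling API is called over plain HTTP. The sketch below assumes the request format described in Crawlbase’s documentation at the time of writing (a GET request to `https://api.crawlbase.com/` with a `token` parameter and a URL-encoded `url` parameter); check the current docs before relying on it. The helper names and the token are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint; confirm it against Crawlbase's current API documentation.
CRAWLBASE_ENDPOINT = "https://api.crawlbase.com/"


def crawlbase_request_url(token, target_url):
    """Build a request URL; urlencode percent-encodes the target page URL."""
    return CRAWLBASE_ENDPOINT + "?" + urlencode({"token": token, "url": target_url})


def fetch_via_crawlbase(token, target_url, timeout=60):
    """Fetch a page through the API and return the response body as text."""
    with urlopen(crawlbase_request_url(token, target_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Encoding the target URL matters because its own `?` and `&` characters would otherwise be read as parameters of the API request.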

2. DYNO Mapper

DYNO Mapper focuses strongly on sitemap creation (a sitemap lets crawlers know which pages a site contains). Enter any site’s URL (Uniform Resource Locator), such as www.example.com, and it instantly discovers the site’s pages and creates a map of them.

DYNO Mapper offers three packages, each allowing you to scan a different number of pages and projects (sites). If you need to monitor your own site and a few competitors, the Standard package is the right fit. The Organization or Enterprise package is recommended for higher-education institutions and large companies.
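A sitemap itself is a simple XML file defined by the sitemaps.org protocol. As a rough illustration of what a sitemap generator produces (this is not DYNO Mapper’s code), the Python sketch below builds a minimal sitemap containing only `<loc>` entries; real sitemaps often add optional tags such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML listing the given page URLs (special characters are escaped)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page
    return ET.tostring(urlset, encoding="unicode")
```

The resulting file is usually saved as `sitemap.xml` at the site root and submitted to search engines.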

3. Screaming Frog

Screaming Frog offers many SEO tools, and its SEO Spider is one of the best. It shows broken links, temporary redirects, and where your site needs improvement. To get the most out of SEO Spider, you’ll need to upgrade to the paid version.

The free version limits how many pages you can crawl and lacks features included in the paid version, where crawl size depends only on available memory. The paid version also adds Google Analytics integration, crawl configuration options, and free technical support. Many of the world’s biggest sites, including Apple, Disney, and Google, use Screaming Frog, and its regular appearances on top SEO blogs help promote the SEO Spider.
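To illustrate the kind of grouping an audit tool reports, here is a hypothetical Python helper that sorts crawled URLs by HTTP status code. It is not how Screaming Frog works internally, and the category names are our own; it simply assumes you already have (URL, status) pairs from a crawl.

```python
def classify_status(status):
    """Map an HTTP status code to a simple audit category."""
    if 200 <= status < 300:
        return "ok"
    if status in (301, 308):
        return "permanent redirect"
    if status in (302, 303, 307):
        # These redirects are not permanent, so link equity may not be passed on.
        return "temporary redirect"
    if 400 <= status < 500:
        return "broken"
    if 500 <= status < 600:
        return "server error"
    return "other"


def audit(results):
    """Group (url, status) pairs from a crawl by category."""
    report = {}
    for url, status in results:
        report.setdefault(classify_status(status), []).append(url)
    return report
```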

4. Lumar

Lumar says it isn’t a “one size fits all tool”; instead, it offers a variety of solutions that can be combined or used separately according to your requirements. These include crawling your site regularly (which can be automated), recovering from Google’s Panda and/or Penguin penalties, and comparing your site with your competitors’.

5. Apify

Apify extracts sitemaps and data from websites and quickly delivers them in a readable format (it claims to do so in seconds, which is impressive, to say the least).

You can use this tool to improve or rebuild your site, especially if you monitor your competitors. Although Apify is geared toward developers (it requires some JavaScript knowledge), it offers tools to help everyone use it. Because it is cloud-based, you can use it straight from your browser, with no plugins or extra tools required.
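Going the other way, extracting the page list from an existing sitemap takes only a few lines of Python. This generic sketch (not Apify’s API) reads every `<loc>` entry from a sitemap or sitemap index using the standard sitemaps.org namespace; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text):
    """Return every <loc> URL listed in a sitemap (or a sitemap index)."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall(".//sm:loc", NS) if loc.text]
```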

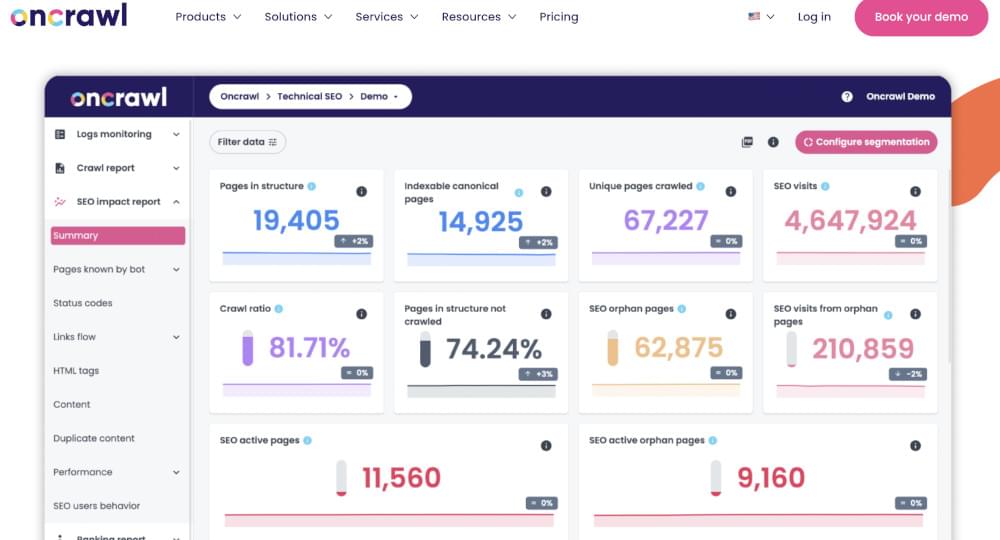

6. Oncrawl

Using semantic data algorithms and daily monitoring, Oncrawl can read an entire site, whereas Google understands only a portion of it. The service includes SEO audits, which can help you optimize your site for search engines and identify what works and what doesn’t.

By tracking your SEO and usability, you can see how your traffic (number of visitors) is affected. Oncrawl also shows how well Google’s crawler can read your site and lets you control what gets read and what doesn’t.

7. Nokogiri

Nokogiri lets Ruby developers work efficiently with XML and HTML; strictly speaking, it is a parsing library that Ruby crawlers build on rather than a crawler on its own. Its API lets you read, edit, update, and query documents simply and intuitively. For speed and standards compliance, it relies on native parsers such as libxml2 (C) and, on JRuby, Xerces (Java).

8. Netpeak Spider

With Netpeak Spider, you can run daily SEO audits, find faults quickly, conduct systematic analyses, and scrape websites. Because it uses RAM efficiently, this crawler can analyze huge websites (millions of pages). Crawl data can easily be exported to and imported from CSV files.

Netpeak Spider offers four search types for scraping emails, names, and other information: ‘Contains,’ ‘RegExp,’ ‘CSS Selector,’ and ‘XPath.’
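The first two search types are easy to picture in code. This hypothetical Python sketch shows a case-insensitive ‘Contains’ check and a ‘RegExp’ search that extracts email addresses; the simple email pattern is an assumption and will miss some valid addresses. CSS Selector and XPath searches need an HTML parsing library, so they are left out here.

```python
import re

# A deliberately simple email pattern; real-world address syntax is looser.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def contains(text, needle):
    """'Contains'-style search: case-insensitive substring match."""
    return needle.lower() in text.lower()


def extract_emails(text):
    """'RegExp'-style search: unique email addresses in order of appearance."""
    found = []
    for match in EMAIL_RE.findall(text):
        if match not in found:
            found.append(match)
    return found
```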

9. OpenSearchServer

OpenSearchServer is a free, open-source web crawler and search engine: an all-in-one solution that is also extremely powerful. Choosing it has many advantages.

OpenSearchServer is highly rated online and is one of the most popular options in online reviews. It lets you create your own indexing method and offers comprehensive search features.

10. Helium Scraper

Helium Scraper is designed for visual web scraping and excels in situations where there is little correlation between the pieces of data being scraped. It requires no coding or configuration and may be enough for basic crawling needs. Templates for specific crawling requirements can also be downloaded online.

11. GNU Wget

GNU Wget is file retrieval software that can download files over HTTP, HTTPS, FTP, and FTPS.

One of its distinctive features is support for NLS-based (Native Language Support) message files, which let it display its messages in many languages. It can also convert absolute links in downloaded documents into relative links, so the local copy can be browsed offline.
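Wget exposes this link conversion through its `--convert-links` (`-k`) option. The Python function below is only a sketch of the underlying idea, rewriting a same-site absolute link relative to the page that contains it; the function name is hypothetical, and it ignores query strings, fragments, and trailing slashes.

```python
import posixpath
from urllib.parse import urlparse


def to_relative(page_url, link_url):
    """Rewrite an absolute same-site link relative to the page that contains it."""
    page, link = urlparse(page_url), urlparse(link_url)
    if page.netloc != link.netloc:
        return link_url  # Leave links to other sites untouched.
    page_dir = posixpath.dirname(page.path) or "/"
    # Simplification: query strings and fragments are dropped here.
    return posixpath.relpath(link.path or "/", start=page_dir)
```

For example, a link from `https://www.example.com/docs/guide/intro.html` to `https://www.example.com/docs/images/logo.png` becomes `../images/logo.png`.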

12. 80Legs

80Legs was founded in 2009 on the premise that web data should be accessible to everyone. The company initially provided web crawling services to many clients. As its customer base grew, it developed a scalable, productized platform that lets users build and run their own web crawls.

13. Import.io

Import.io makes it easy to automate the crawling of online data and integrate it into your apps or websites. You can scrape millions of web pages without writing a single line of code, and a public API lets you control Import.io programmatically and access data automatically.

14. Webz

Thanks to numerous filters covering a wide range of sources, the Webz crawler is an outstanding tool for crawling data and extracting keywords in various languages and domains.

Users can also access data from the Webz archive. Webz’s crawl data covers a total of 80 languages. Users can also search and index the structured data Webz crawls, and scraped datasets can be exported in XML, JSON, or RSS format.

15. Norconex

If you are looking for an open-source crawler for business use, Norconex offers multiple web crawler features and can crawl any kind of web material. You can integrate this full-featured collector into your app or use it standalone.

This tool can crawl millions of pages on a single average-capacity server. It also provides a variety of tools for manipulating metadata and content, and it can grab a page’s background image as well as its featured image. It runs on any operating system.

16. Dexi.io

Dexi.io is a browser-based web crawling tool that can scrape data from any website. To create a scraping task, you can use one of three robots: the Extractor, the Crawler, and the Pipelines.

You can export the extracted data directly to JSON or CSV files through Dexi.io’s server, or store it on Dexi.io’s servers for two weeks before it is archived. Its paid services can meet real-time data requirements.
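Exporting scraped records to JSON or CSV is straightforward with Python’s standard library. This generic sketch (not Dexi.io’s export code) assumes each record is a dictionary and that every record has the same keys as the first one.

```python
import csv
import io
import json


def to_json(records):
    """Serialize scraped records (a list of dicts) as a JSON array."""
    return json.dumps(records, ensure_ascii=False, indent=2)


def to_csv(records):
    """Serialize records as CSV, using the first record's keys as the header row."""
    if not records:
        return ""
    buffer = io.StringIO()
    # DictWriter raises ValueError if a later record has keys missing from the header.
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```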

17. Zyte

Zyte’s cloud-based data extraction tool helps thousands of developers obtain useful information. Its open-source tool makes visual scraping possible without any coding knowledge.

The tool also includes a proxy rotator that lets users crawl large or bot-protected websites without being caught by bot countermeasures. Through a simple HTTP API, you can crawl from multiple IP addresses and locales without the hassle of maintaining proxy servers.
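Zyte handles proxy rotation for you behind its API. To show the general idea only, here is a round-robin proxy rotator built on `itertools.cycle` and `urllib.request.ProxyHandler`; the proxy addresses are placeholders, and you should only route traffic through proxies you are authorized to use.

```python
import itertools
from urllib.request import ProxyHandler, build_opener

# Hypothetical proxy addresses; replace them with proxies you are allowed to use.
PROXIES = [
    "http://proxy1.example:8080",
    "http://proxy2.example:8080",
    "http://proxy3.example:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order, one per request."""

    def __init__(self, proxies):
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        """Return the next proxy and an opener that sends requests through it."""
        proxy = next(self._cycle)
        # Route both HTTP and HTTPS traffic through the chosen proxy.
        handler = ProxyHandler({"http": proxy, "https": proxy})
        return proxy, build_opener(handler)
```

Each request would then use `proxy, opener = rotator.next_opener()` followed by `opener.open(url, timeout=30)`.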

18. Apache Nutch

Apache Nutch is undoubtedly one of the best open-source web crawlers available. It is a highly scalable and flexible open-source project for web data extraction and data mining.

Hundreds of users around the world use Apache Nutch, including data analysts, scientists, developers, and web text mining experts. Nutch is a cross-platform Java application. It can run on multiple systems simultaneously, and it is most powerful when used in a Hadoop cluster.
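Distributing a crawl across a cluster usually starts with partitioning the URL list. Nutch’s fetch-list generation can group URLs by host (or by domain or IP), which keeps each host on a single worker so politeness delays are easier to enforce. The Python sketch below is a simplified, hypothetical illustration of host-based partitioning, not Nutch’s Java implementation; it uses `zlib.crc32` because Python’s built-in `hash()` for strings is randomized between processes.

```python
import zlib
from urllib.parse import urlparse


def partition_for(url, num_partitions):
    """Assign a URL to a partition using a stable hash of its host name."""
    host = urlparse(url).netloc.lower()
    return zlib.crc32(host.encode("utf-8")) % num_partitions


def partition_urls(urls, num_partitions):
    """Group URLs so that all pages from one host land in the same partition."""
    parts = [[] for _ in range(num_partitions)]
    for url in urls:
        parts[partition_for(url, num_partitions)].append(url)
    return parts
```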

19. ParseHub

ParseHub is a great web crawler that can also gather data from websites that rely on AJAX, JavaScript, cookies, and similar technologies. It uses machine learning to read, evaluate, and convert web content into useful information.

ParseHub offers a desktop application for Windows, Mac OS X, and Linux, and a web app is also available in the browser. The free plan limits you to five projects, while paid membership levels let you set up at least 20 scraping projects.

20. ZenRows

ZenRows offers a web scraping API designed for developers who need to extract data from online sources efficiently. It stands out for its advanced anti-bot features, including rotating proxies, headless browser capabilities, and CAPTCHA resolution. The platform supports scraping popular websites such as YouTube, Zillow, and Indeed, and offers tutorials for various programming languages to make it easier to use.

Conclusion

Data crawling has been used in information systems for years. Since manually copying and pasting data is not always feasible, crawling is an invaluable technology, especially when dealing with large datasets.

Companies and developers can use Crawlbase to crawl websites anonymously. Thanks to its user guides, even people without technical skills can use Crawlbase effectively. It can crawl any data source, large or small, and supports multiple platforms and websites. These features make Crawlbase the top tool on the list above.

Crawling websites is valuable because it lets you identify trends and analyze data. Exploring, reorganizing, and sorting that data requires pulling it into a database. Scraping websites through data crawling is best done by someone with the right skill set and expertise.