Scraping data from OpenSea is super useful, especially if you’re into NFTs (Non-Fungible Tokens), which have taken off in the last few years. NFTs are unique digital assets, such as art, collectibles, and virtual goods, secured on a blockchain. As one of the largest NFT marketplaces, OpenSea lists millions of NFTs across many categories, which makes it a go-to source for collectors, investors, and developers. Whether you’re tracking trends, prices, or specific collections, having this data is gold.

However, OpenSea loads most of its data with JavaScript, so traditional scraping, which only downloads the initial HTML, won’t capture it. That’s where the Crawlbase Crawling API comes in: it renders JavaScript-heavy pages, which makes it a great fit for scraping OpenSea data.

In this post, we’ll show you how to scrape OpenSea data, including collection pages and individual NFT detail pages, using Python and the Crawlbase Crawling API. Let’s get started!

Here’s a short, step-by-step tutorial on how to scrape NFT data from the OpenSea website:

Table of Contents

- Why Scrape OpenSea for NFT Data?
- What Data Can You Extract From OpenSea?
- OpenSea Scraping with Crawlbase Crawling API
- Setting Up Your Python Environment
  - Installing Python and Required Libraries
  - Choosing an IDE
- Scraping OpenSea Collection Pages
  - Inspecting the HTML for CSS Selectors
  - Writing the Collection Page Scraper
  - Handling Pagination in Collection Pages
  - Storing Data in a CSV File
  - Complete Code Example
- Scraping OpenSea NFT Detail Pages
  - Inspecting the HTML for CSS Selectors
  - Writing the NFT Detail Page Scraper
  - Storing Data in a CSV File
  - Complete Code Example
- Optimize OpenSea NFT Data Scraping
- Frequently Asked Questions

Why Scrape OpenSea for NFT Data?

Scraping OpenSea can help you track and analyze valuable NFT data, including prices, trading volumes, and ownership information. Whether you’re an NFT collector, a developer building NFT-related tools, or an investor looking to understand market trends, extracting data from OpenSea gives you the insights you need to make informed decisions.

Here are some reasons why scraping OpenSea is important:

- Track NFT Prices: Monitor individual NFT prices or an entire collection over time.

- Analyze Trading Volumes: Understand how in-demand certain NFTs are based on sales and trading volumes.

- Discover Trends: Find out which NFT collections and tokens are the hottest in real time.

- Monitor NFT Owners: Scrape ownership data to see who owns specific NFTs or how many tokens a wallet owns.

- Automate Data Collection: Instead of checking OpenSea manually, you can collect the data automatically and save it in formats like CSV or JSON.

OpenSea’s website uses JavaScript rendering, so scraping it can be tricky. But with the Crawlbase Crawling API, you can work around this and extract the data easily.

What Data Can You Extract From OpenSea?

When scraping OpenSea, it’s important to know what data to focus on. The platform has a ton of information about NFTs, and extracting the right data will help you track performance, analyze trends, and make informed decisions. Here’s what to extract:

- NFT Name: The unique name of each NFT, which often reflects its branding or its collection’s theme.

- Collection Name: The NFT collection to which the individual NFT belongs. Collections usually represent sets or series of NFTs.

- Price: The NFT listing price. This is important for understanding market trends and determining the value of NFTs.

- Last Sale Price: The price the NFT last sold for. It provides a historical reference point for the NFT’s market performance.

- Owner: The NFT’s present holder (usually a wallet address).

- Creator: The artist or creator of the NFT. Creator information is important for tracking provenance and originality.

- Number of Owners: Some NFTs (for example, ERC-1155 tokens issued in multiple editions) have multiple owners, which indicates how widely held the token is.

- Rarity/Attributes: Many NFTs have traits that make them unique and more desirable.

- Trading Volume: The overall volume of sales and transfers of the NFT or the entire collection.

- Token ID: The unique identifier for the NFT on the blockchain, useful for tracking specific tokens across platforms.
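If you plan to collect these fields from both collection and detail pages, it helps to define them once. The sketch below uses a hypothetical `NFTRecord` dataclass whose field names are our own choice, not an OpenSea or Crawlbase schema; every field except the name is optional because not every page exposes every value.

```python
from dataclasses import asdict, dataclass, field
from typing import Dict, Optional


@dataclass
class NFTRecord:
    """Hypothetical record for one NFT; field names are illustrative only."""
    name: str
    collection: Optional[str] = None
    price: Optional[str] = None            # kept as text, e.g. "0.5 ETH"
    last_sale_price: Optional[str] = None
    owner: Optional[str] = None            # usually a wallet address
    creator: Optional[str] = None
    num_owners: Optional[int] = None
    attributes: Dict[str, str] = field(default_factory=dict)  # trait name -> value
    trading_volume: Optional[str] = None
    token_id: Optional[str] = None         # text: token IDs are uint256 and can be huge


record = NFTRecord(name="Example #1", collection="Example Collection", token_id="1")
row = asdict(record)  # plain dict with one key per field, in declaration order
```

`asdict` turns a record into a plain dict, which fits naturally into a CSV writer or a pandas DataFrame.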

OpenSea Scraping with Crawlbase Crawling API

The Crawlbase Crawling API makes OpenSea data scraping easy. Since OpenSea uses JavaScript to load its content, traditional scraping methods that only fetch the raw HTML will miss most of the data. The Crawlbase API, however, renders pages like a real browser, so you can get all the data you need.

Why Use Crawlbase Crawling API for OpenSea

- Handles Dynamic Content: The Crawlbase Crawling API renders JavaScript-heavy pages, and its wait options let you make sure NFT data (prices, ownership) has loaded before the HTML is returned.

- IP Rotation: Crawlbase rotates IP addresses to reduce the chance of being blocked by OpenSea’s security, so you can scrape multiple pages with less risk of running into rate limits or bans.

- Fast Performance: Crawlbase is fast and efficient for scraping large data volumes, which saves you time, especially when you have many NFTs and collections.

- Customizable Requests: You can adjust headers, cookies and other parameters to fit your scraping needs and get the data you want.

- Scroll-Based Pagination: Crawlbase can scroll the page for you, so collection pages load more items without you having to scroll or click through them manually.

Crawlbase Python Library

Crawlbase also provides a Python library that makes it easy to integrate Crawlbase products into your projects. You’ll need an access token, which you get by signing up with Crawlbase.

Here’s an example of sending a request to the Crawlbase Crawling API:

```python
from crawlbase import CrawlingAPI
```
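A minimal sketch of the request flow is shown below. It assumes, as in the Crawlbase Python library, that `CrawlingAPI.get(url, options)` returns a dict with a `status_code` and a `body` holding the raw page bytes. The helper name `fetch_html` is hypothetical, and it receives the client as a parameter so it can be tried without network access.

```python
from typing import Any, Mapping, Optional


def fetch_html(api: Any, url: str, options: Optional[Mapping[str, str]] = None) -> str:
    """Fetch `url` through a Crawlbase-style client and return the page HTML.

    `api` is assumed to behave like crawlbase.CrawlingAPI: api.get(url, options)
    returns a dict containing 'status_code' and 'body'.
    """
    response = api.get(url, dict(options or {}))
    status = response["status_code"]
    if status != 200:
        raise RuntimeError(f"Crawlbase request for {url} failed with status {status}")
    body = response["body"]
    # The library returns raw bytes; decode them so a parser receives text.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

In a real script, you would pass `CrawlingAPI({'token': 'YOUR_JS_TOKEN'})` as `api`, using your JavaScript token since OpenSea renders its content in the browser.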

Note: Crawlbase provides two types of tokens: a Normal Token for static sites and a JavaScript (JS) Token for dynamic or browser-rendered content, which is necessary for scraping OpenSea. Crawlbase also offers 1,000 free requests to help you get started, and you can sign up without a credit card. For more details, check the Crawlbase Crawling API documentation.

In the next section, we’ll set up your Python environment for scraping OpenSea effectively.

Setting Up Your Python Environment

Before scraping data from OpenSea, you need to set up your Python environment. This setup will ensure you have all the necessary tools and libraries to make your scraping process smooth and efficient. Here’s how to do it:

Installing Python and Required Libraries

Install Python: Download Python from the official website and follow the installation instructions. On Windows, make sure to check “Add Python to PATH” during installation.

Set Up a Virtual Environment (optional but recommended): This keeps your project organized. Run these commands in your terminal:

```bash
cd your_project_directory
```

Install Required Libraries: Run the following command to install necessary libraries:

```bash
pip install beautifulsoup4 crawlbase pandas
```

- beautifulsoup4: For parsing and extracting data from HTML.

- crawlbase: For using the Crawlbase Crawling API.

- pandas: For handling and saving data in CSV format.
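A quick way to confirm the installation is to check that each module can be found. This sketch uses the standard library's `importlib.util.find_spec`; note that it checks import names, and the beautifulsoup4 package is imported as `bs4`.

```python
import importlib.util

# Import names, not PyPI names: beautifulsoup4 installs the `bs4` module.
REQUIRED_MODULES = ["bs4", "crawlbase", "pandas"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are installed.")
```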

Choosing an IDE

Select an Integrated Development Environment (IDE) to write your code. Popular options include:

- Visual Studio Code: Free and lightweight, with Python support.

- PyCharm: A feature-rich IDE for Python.

- Jupyter Notebook: Great for interactive coding and data analysis.

Now that your Python environment is set up, you’re ready to start scraping OpenSea collection pages. In the next section, we will inspect the HTML for CSS selectors.

Scraping OpenSea Collection Pages

In this section, we will scrape collection pages from OpenSea. Collection pages show various NFTs grouped under specific categories or themes. To do this efficiently, we will go through the following steps:

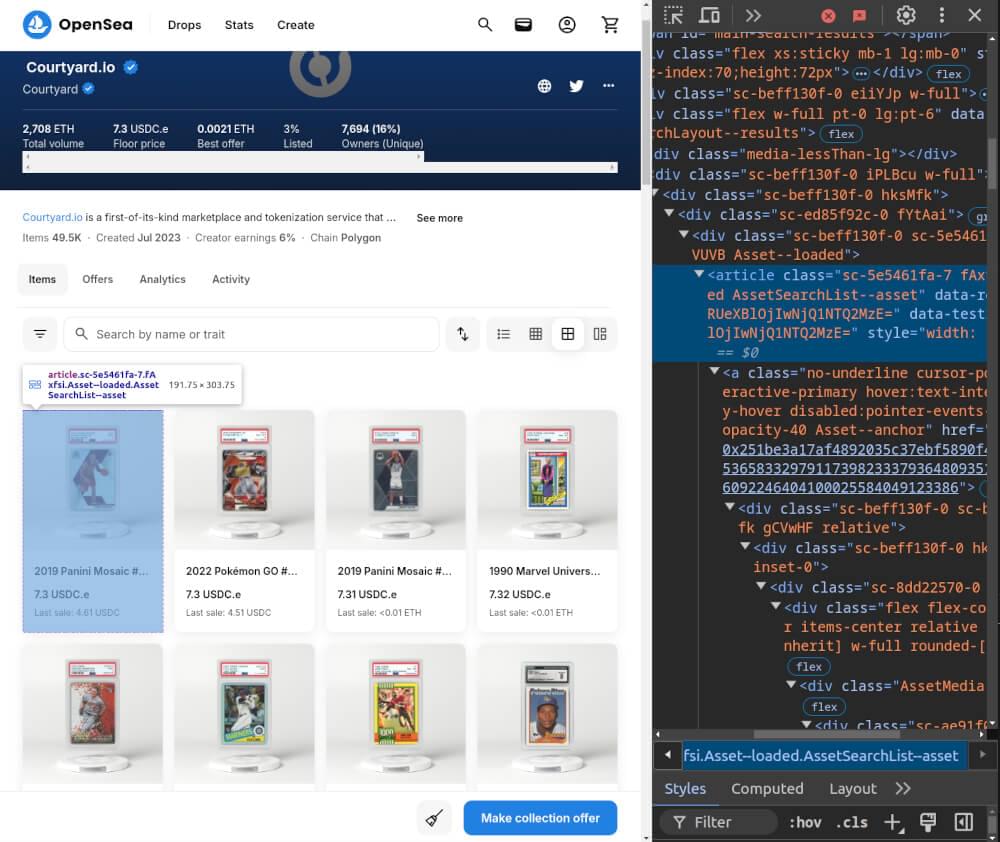

Inspecting the HTML for CSS Selectors

Before we write our scraper, we need to understand the HTML structure of OpenSea collection pages. Here’s how to find the CSS selectors:

- Open the Collection Page: Go to the OpenSea website and navigate to any collection page.

- Inspect the Page: Right-click on the page and select “Inspect” or press Ctrl + Shift + I to open the Developer Tools.

- Find Relevant Elements: Look for the elements that contain the NFT details. Common data points are:

  - Title: In a `<span>` with `data-testid="ItemCardFooter-name"`.
  - Price: Located within a `<div>` with `data-testid="ItemCardPrice"`, specifically in a nested `<span>` with `data-id="TextBody"`.
  - Image URL: In an `<img>` tag, with the image source in the `src` attribute.
  - Link: The NFT detail page link is in an `<a>` tag with the class `Asset--anchor`.

Writing the Collection Page Scraper

Now that we have the CSS selectors, we can write our scraper. We will use the Crawlbase Crawling API to handle JavaScript rendering through its ajax_wait and page_wait parameters. Below is the implementation of the scraper:

```python
from crawlbase import CrawlingAPI
```

Here we initialize the Crawlbase Crawling API and create a function, make_crawlbase_request, to fetch the collection page. The function tells Crawlbase to wait for any AJAX requests to complete and to give the page 5 seconds to fully render before the HTML is passed to the scrape_opensea_collection function.
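The request step described here could look roughly like the sketch below. The option names `ajax_wait` and `page_wait` come from this section; we assume, per the Crawlbase Crawling API documentation, that `page_wait` is given in milliseconds, so 5 seconds becomes `5000`. The `render_options` helper is hypothetical, and the Crawlbase client is passed in as `api` rather than created inside the function.

```python
from typing import Any, Dict, Optional


def render_options(page_wait_ms: int = 5000, ajax_wait: bool = True) -> Dict[str, str]:
    """Build Crawling API options that let JavaScript content load before capture."""
    if page_wait_ms < 0:
        raise ValueError("page_wait_ms must be non-negative")
    return {
        "ajax_wait": "true" if ajax_wait else "false",
        "page_wait": str(page_wait_ms),  # assumed to be milliseconds
    }


def make_crawlbase_request(api: Any, url: str, page_wait_ms: int = 5000) -> Optional[str]:
    """Return the rendered HTML of `url`, or None if the request failed."""
    response = api.get(url, render_options(page_wait_ms))
    if response["status_code"] != 200:
        return None
    body = response["body"]
    # Crawlbase returns bytes; decode so BeautifulSoup receives text.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

Returning `None` on failure (instead of raising) lets a caller skip a bad page and keep going.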

In scrape_opensea_collection, we parse the HTML with BeautifulSoup and extract details about each NFT item using the CSS selectors we defined earlier. We get the title, price, image URL and link for each NFT and store this in a list which is returned to the caller.
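Here is one possible sketch of `scrape_opensea_collection` using BeautifulSoup and the selectors from the inspection step. One assumption is purely hypothetical: the article doesn't name the element that wraps each NFT card, so `CARD_SELECTOR = "article"` is a placeholder to replace with whatever container you find in DevTools. Relative links are resolved against `https://opensea.io` with `urljoin`.

```python
from typing import Dict, List, Optional
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://opensea.io"

# Selectors from the inspection step; OpenSea's markup changes often, so re-check them.
SELECTORS = {
    "title": 'span[data-testid="ItemCardFooter-name"]',
    "price": 'div[data-testid="ItemCardPrice"] span[data-id="TextBody"]',
    "image": "img",
    "link": "a.Asset--anchor",
}

# Hypothetical: the card container is not given in the article; confirm it in DevTools.
CARD_SELECTOR = "article"


def _text(node, selector: str) -> Optional[str]:
    found = node.select_one(selector)
    return found.get_text(strip=True) if found else None


def scrape_opensea_collection(html: str, card_selector: str = CARD_SELECTOR) -> List[Dict[str, Optional[str]]]:
    """Parse one collection page into a list of {title, price, image_url, link} dicts."""
    soup = BeautifulSoup(html, "html.parser")
    items = []
    for card in soup.select(card_selector):
        image = card.select_one(SELECTORS["image"])
        link = card.select_one(SELECTORS["link"])
        href = link.get("href") if link else None
        items.append({
            "title": _text(card, SELECTORS["title"]),
            "price": _text(card, SELECTORS["price"]),
            "image_url": image.get("src") if image else None,
            # Card links are usually relative, so resolve them against the site root.
            "link": urljoin(BASE_URL, href) if href else None,
        })
    return items
```

Missing elements become `None` rather than raising, so one malformed card doesn't stop the whole page.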

Handling Pagination in Collection Pages

OpenSea uses scroll-based pagination, so more items load as you scroll down the page. We can handle this with the scroll and scroll_interval parameters, so we don’t need to manage pagination explicitly.

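```python
# Scroll for 20 seconds so more collection items load before the HTML is returned
options = {'scroll': 'true', 'scroll_interval': '20'}
```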

With these options, the crawler keeps scrolling for 20 seconds before returning the page, so more items get loaded.

Storing Data in a CSV File

After we scrape the data, we can store it in a CSV file, a common format that’s easy to analyze later. Here’s how:

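```python
def save_data_to_csv(data, filename='opensea_data.csv'):
    pd.DataFrame(data).to_csv(filename, index=False)  # assumes `import pandas as pd` at the top
```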
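If you’d rather not depend on pandas for this step, the standard library’s `csv` module can do the same job. The sketch below is an alternative to a pandas-based version, not the original implementation; it builds the header from every key that appears in the data, so rows with missing fields are written with empty cells.

```python
import csv
from typing import Dict, List


def save_data_to_csv(data: List[Dict[str, object]], filename: str = "opensea_data.csv") -> int:
    """Write a list of dicts to `filename` and return the number of rows written."""
    if not data:
        return 0
    # Union of keys in first-seen order, so rows with missing fields still fit the header.
    fieldnames = list(dict.fromkeys(key for row in data for key in row))
    with open(filename, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)  # missing keys become ""
        writer.writeheader()
        writer.writerows(data)
    return len(data)
```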

Complete Code Example

Here’s the complete code that combines all the steps:

```python
from crawlbase import CrawlingAPI
```

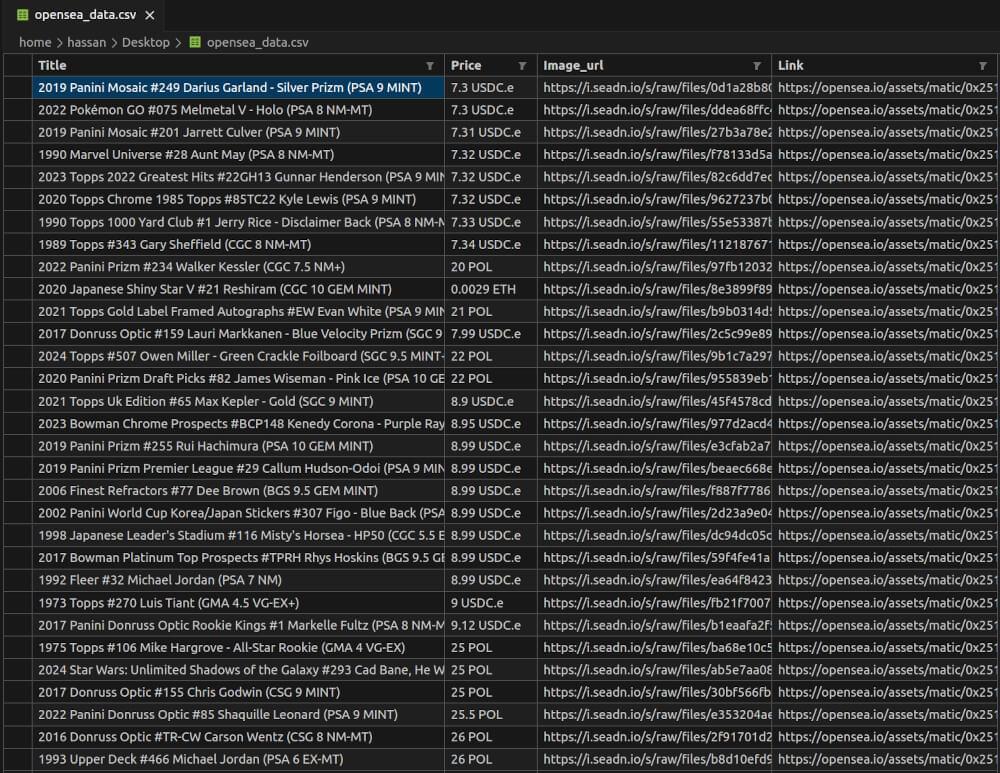

opensea_data.csv Snapshot:

Scraping OpenSea NFT Detail Pages

In this section, we will learn how to scrape NFT detail pages on OpenSea. Each NFT has its own detail page that has more information such as title, description, price history and other details. We will follow these steps:

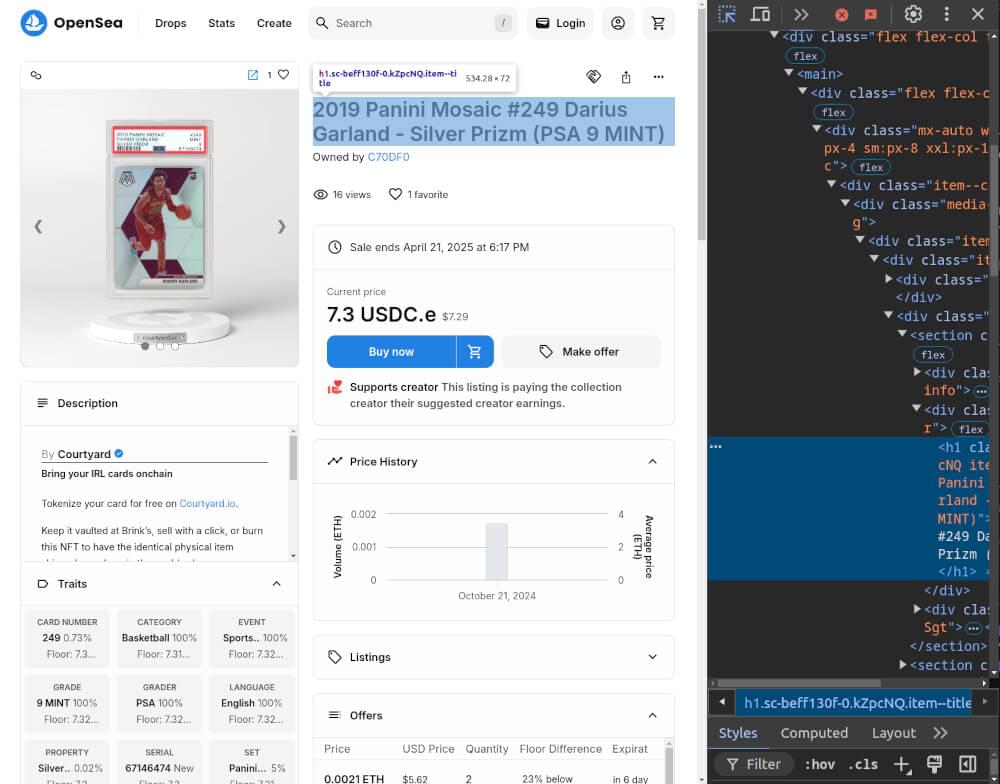

Inspecting the HTML for CSS Selectors

Before we write our scraper, we need to find the HTML structure of the NFT detail pages. Here’s how to do it:

- Open an NFT Detail Page: Go to OpenSea and open any NFT detail page.

- Inspect the Page: Right-click on the page and select “Inspect” or press Ctrl + Shift + I to open the Developer Tools.

- Locate Key Elements: Search for the elements that hold the NFT details. Here are the common data points to look for:

  - Title: In an `<h1>` tag with class `item--title`.
  - Description: In a `<div>` tag with class `item--description`.
  - Price: In a `<div>` tag with class `Price--amount`.
  - Image URL: In an `<img>` tag inside a `<div>` with class `media-container`.
  - Link to the NFT page: The current URL of the NFT detail page.
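A minimal parser for the detail page, assuming the selectors listed above are still current (OpenSea changes its markup regularly, so treat them as a starting point). The function name `scrape_nft_detail` is hypothetical; it takes the page URL as an argument because, as noted above, the link is simply the URL you requested.

```python
from typing import Dict, Optional

from bs4 import BeautifulSoup

# Selectors from the inspection step; verify them in DevTools before use.
DETAIL_SELECTORS = {
    "title": "h1.item--title",
    "description": "div.item--description",
    "price": "div.Price--amount",
    "image": "div.media-container img",
}


def scrape_nft_detail(html: str, url: str) -> Dict[str, Optional[str]]:
    """Parse an NFT detail page; `url` is the page's own address, used as the link."""
    soup = BeautifulSoup(html, "html.parser")

    def text_of(key: str) -> Optional[str]:
        node = soup.select_one(DETAIL_SELECTORS[key])
        # Join nested text (e.g. several <p> tags) with single spaces.
        return node.get_text(" ", strip=True) if node else None

    image = soup.select_one(DETAIL_SELECTORS["image"])
    return {
        "title": text_of("title"),
        "description": text_of("description"),
        "price": text_of("price"),
        "image_url": image.get("src") if image else None,
        "link": url,
    }
```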

Writing the NFT Detail Page Scraper

Now that we have our CSS selectors, we can write our scraper. We’ll use the Crawlbase Crawling API to render JavaScript. Below is an example of how to scrape data from an NFT detail page:

```python
from crawlbase import CrawlingAPI
```
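Once a single detail page works, you will usually want to scrape a list of them. The hypothetical `scrape_many` helper below takes the fetch and parse steps as plain functions (for example, a Crawlbase request wrapper and a BeautifulSoup parser), skips pages whose request failed, and pauses between requests so you don’t flood the site. The 2-second default delay is an arbitrary choice, not a documented Crawlbase or OpenSea limit.

```python
import time
from typing import Callable, Dict, Iterable, List, Optional


def scrape_many(
    urls: Iterable[str],
    fetch: Callable[[str], Optional[str]],
    parse: Callable[[str, str], Dict],
    delay_seconds: float = 2.0,
) -> List[Dict]:
    """Fetch and parse each URL in turn, skipping failures and pausing between requests."""
    results = []
    for index, url in enumerate(urls):
        if index and delay_seconds > 0:
            time.sleep(delay_seconds)  # be gentle with the target site
        html = fetch(url)
        if html is None:
            continue  # request failed; move on to the next URL
        results.append(parse(html, url))
    return results
```

Passing `fetch` and `parse` in as functions keeps the loop independent of Crawlbase and of the page layout, so either can change without touching it.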

Storing Data in a CSV File

Once we have scraped the NFT details, we can save them in a CSV file. This allows us to easily analyze the data later. Here’s how to do it:

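```python
def save_nft_data_to_csv(data, filename='opensea_nft_data.csv'):
    pd.DataFrame(data).to_csv(filename, index=False)  # assumes `import pandas as pd` at the top
```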
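If you scrape detail pages in batches across several runs, appending to the CSV can be more convenient than overwriting it. This sketch (hypothetical `append_nft_data_to_csv`, using the standard-library `csv` module instead of pandas) writes the header only when the file is new or empty and assumes every row shares the keys of the first row; it doesn’t check that an existing file has the same columns.

```python
import csv
import os
from typing import Dict, List


def append_nft_data_to_csv(data: List[Dict[str, object]], filename: str = "opensea_nft_data.csv") -> None:
    """Append rows to `filename`, writing the header only if the file is new or empty."""
    if not data:
        return
    fieldnames = list(data[0].keys())
    write_header = not os.path.exists(filename) or os.path.getsize(filename) == 0
    with open(filename, "a", newline="", encoding="utf-8") as f:
        # DictWriter raises ValueError if a row has keys missing from fieldnames.
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        if write_header:
            writer.writeheader()
        writer.writerows(data)
```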

Complete Code Example

Here’s the complete code that combines all the steps for scraping NFT detail pages:

```python
from crawlbase import CrawlingAPI
```

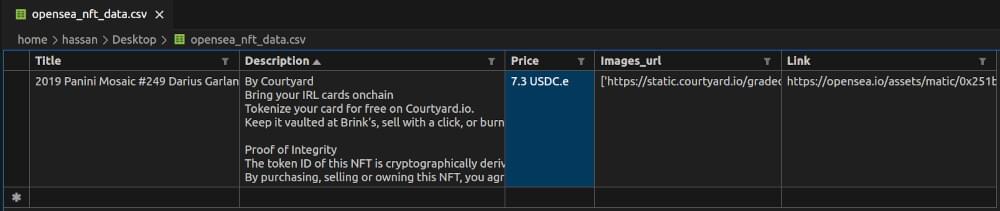

opensea_nft_data.csv Snapshot:

Optimize OpenSea NFT Data Scraping

Scraping OpenSea opens up a whole world of NFT and market data. In this blog post, we covered how to scrape OpenSea using Python and the Crawlbase Crawling API. By understanding the layout of the site and using the right tools, you can gain valuable insights while keeping ethics in mind.

As you go deeper into your scraping projects, remember to store the data in human-readable formats, like CSV files, to make analysis a breeze. The NFT space is moving fast, and keeping up with new trends and technologies will help you get the most out of your data collection efforts. With the right mindset and tools, you can find some great insights in the NFT market.

If you want to do more web scraping, check out our guides on scraping other key websites.

📜 How to Scrape Monster.com

📜 How to Scrape Groupon

📜 How to Scrape TechCrunch

📜 How to Scrape Clutch.co

If you have any questions or want to give feedback, our support team can help you with web scraping. Happy scraping!

Frequently Asked Questions

Q. Why should I web scrape OpenSea?

Web scraping is a way to automatically extract data from websites. By scraping OpenSea, you can grab important information about NFTs, such as their prices, descriptions, and images. This data helps you analyze market trends, track specific collections or compare prices across NFTs. Overall, web scraping provides valuable insights that can enhance your understanding of the NFT marketplace.

Q. Is it legal to scrape data from OpenSea?

Web scraping is a legal gray area. Some websites allow data collection for personal use, but policies vary, so always read OpenSea’s terms of service before you start. Make sure your scraping activities comply with the website’s policies and copyright laws. Ethical scraping means using the data responsibly and not flooding the website’s servers with requests.

Q. What tools do I need to start scraping OpenSea?

To start scraping OpenSea, you’ll need a few tools. Install Python and libraries like BeautifulSoup and pandas for data parsing and manipulation. You’ll also use the Crawlbase Crawling API to handle dynamic content and JavaScript rendering on OpenSea. With these tools in place, you’ll be ready to scrape and analyze NFT data.