Many modern websites use JavaScript to load their content, which makes them harder to scrape. If you rely on traditional tools like requests and BeautifulSoup, you will miss any information that only appears after the page’s JavaScript has run.

This article explores how to scrape JavaScript-rendered pages. We’ll go over static vs. dynamic content, the challenges of scraping dynamic pages, and the tools available. We’ll focus on Pyppeteer, a Python library that controls a headless Chrome browser so you can work with JavaScript-rendered content.

We’ll walk you through a hands-on example of using Pyppeteer to pull product details from a dynamic web page. You’ll also get tips on solving common issues and making your scraping more efficient with Crawlbase Smart AI Proxy.

Table of Contents

- Static vs. JavaScript-Rendered Pages
- Challenges of Scraping Dynamic Content
- Popular Tools for Scraping JavaScript-Rendered Pages in Python
  - Selenium
  - Playwright
  - Pyppeteer
- Practical Example: Using Pyppeteer to Scrape Dynamic Content
  - Prerequisites
  - Setting Up the Python Environment
  - Inspecting a JavaScript-Rendered Page for Selectors
  - Creating a Scraper Using Pyppeteer to Extract Product Details
    - Create the Scraper Script
    - Define the Scraper Function
    - Run the Scraper
    - Complete Script Example
- Common Pitfalls and Troubleshooting Tips
  - Handling Delays and Timeouts
  - Resolving Errors Related to Page Loading and Rendering
- Optimizing with Crawlbase Smart AI Proxy
- Final Thoughts
- Frequently Asked Questions

Static vs. JavaScript-Rendered Pages

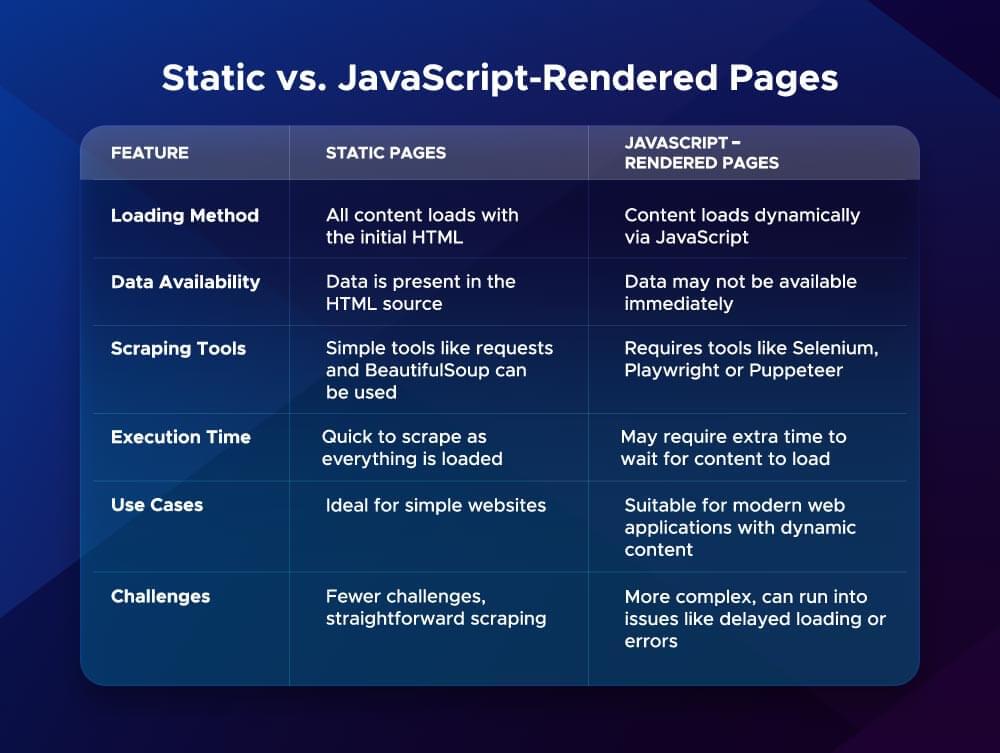

When it comes to web scraping, understanding the difference between static and JavaScript-rendered pages is key.

Static pages are straightforward: the server sends all of their content in the initial HTML response. You can fetch these pages with requests and parse them with BeautifulSoup because the data is already in the HTML.

On the other hand, JavaScript-rendered pages load their content dynamically. The initial HTML may contain little more than a page skeleton and some script tags. Once the page loads, JavaScript runs in the browser, requests more data from the server, and inserts it into the page. A plain HTTP request only sees that initial HTML, so the data you want may not be there.

For instance, if you’re scraping product details from an e-commerce site, you might find that the product listings only appear after the page’s scripts have run. In such cases, traditional scraping methods won’t work, and you need a tool that can execute JavaScript, such as a headless browser.
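A quick way to tell which kind of page you are dealing with is to fetch the raw HTML (for example with `requests.get(url).text`) and check whether a value you can see in the browser appears in it. Below is a minimal, standard-library sketch of that check. `visible_in_raw_html` is a hypothetical helper name, and it deliberately ignores text inside `<script>`, `<style>`, and `<noscript>` tags, since that text is not rendered as page content.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collect text that sits outside <script>, <style> and <noscript> tags."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self.depth = 0  # how many skipped tags we are currently inside
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if not self.depth:
            self.chunks.append(data)


def visible_in_raw_html(html, expected_text):
    """True if expected_text is already visible in the server's HTML (no JavaScript needed)."""
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    return expected_text in " ".join(parser.chunks)
```

If the value only shows up inside a `<script>` tag (for instance in an embedded JSON blob), the page builds that content client-side, and you will need a browser-based tool or a parser for that embedded data.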

Challenges of Scraping Dynamic Content

Scraping JavaScript-rendered pages has its own set of challenges. Here are the main ones:

Content Loading Times

JavaScript loads content asynchronously, so elements might not be available immediately. Scraping too soon can result in missing data.

Changing Element Selectors

Websites change their design frequently, which can change the HTML structure and the selectors your scraper depends on. If your scraper relies on fragile selectors, such as auto-generated class names, it will break when the markup changes.
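One way to soften selector breakage is to try a list of selectors in order of preference, starting with stable attributes and falling back to looser ones. Here is a minimal sketch, assuming a Pyppeteer `page` object (any object with an async `querySelector` method works); `first_match` and the example selectors are hypothetical.

```python
async def first_match(page, selectors):
    """Return (selector, element) for the first selector that matches, else (None, None).

    `page` can be a Pyppeteer Page, whose querySelector() coroutine returns
    an ElementHandle or None.
    """
    for selector in selectors:
        element = await page.querySelector(selector)
        if element is not None:
            return selector, element
    return None, None
```

For example, `await first_match(page, ['[data-automation-id="product-price"]', ".price"])` prefers the attribute selector and only falls back to the class. Logging which selector matched tells you when the preferred one has stopped working.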

Captchas and Anti-Scraping Measures

Many sites have CAPTCHAs and IP blocking to prevent scraping. Navigating these while adhering to terms of service can be tricky.

Handling Pagination

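Dynamic pages often split results across multiple pages or load more items through infinite scrolling or “Load more” buttons. Your scraper has to navigate through those pages, or trigger those interactions, to collect all the data.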
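When the page number appears in the URL, you can generate the page URLs up front and visit each one in turn. This is a minimal sketch, assuming the site uses a `page` query parameter (check the address bar after clicking through to the second page); `page_urls` is a hypothetical helper.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_urls(base_url, pages, param="page"):
    """Yield one URL per results page by setting `param` (assumed to be 'page')."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))  # keep existing parameters such as q=...
    for number in range(1, pages + 1):
        query[param] = str(number)
        yield urlunsplit(parts._replace(query=urlencode(query)))
```

Infinite scroll has no URL to change. There you would instead scroll the page, for example with `await page.evaluate("window.scrollTo(0, document.body.scrollHeight)")`, and wait for new items to appear.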

Resource Intensive

Rendering JavaScript means running a full browser, which uses far more CPU and memory than a plain HTTP request. As a result, scraping takes longer and consumes more resources.
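A common way to cut that cost in Pyppeteer is request interception: abort requests for images, fonts, and other assets you don’t need. This is a minimal sketch. The set of blocked resource types is an assumption, and blocking stylesheets can occasionally change how a page lays out or lazy-loads content, so test it on your target site.

```python
import asyncio

# Assumed list of resource types that rarely carry the data being scraped.
BLOCKED_TYPES = {"image", "media", "font", "stylesheet"}


def should_block(resource_type):
    return resource_type in BLOCKED_TYPES


async def enable_resource_blocking(page):
    """Abort requests for heavy assets on a Pyppeteer page before navigating."""
    await page.setRequestInterception(True)

    async def handle(request):
        if should_block(request.resourceType):
            await request.abort()
        else:
            await request.continue_()

    # Schedule the coroutine so the event callback itself stays synchronous.
    page.on("request", lambda request: asyncio.ensure_future(handle(request)))
```

Call `await enable_resource_blocking(page)` right after `browser.newPage()` and before `page.goto(...)`.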

Popular Tools for Scraping JavaScript-Rendered Pages in Python

When scraping JavaScript-rendered pages, the tool you choose matters. Here are three popular options: Selenium, Playwright, and Pyppeteer.

Selenium

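Selenium is a long-established browser automation framework that is widely used for web scraping. It controls real web browsers and can simulate user interactions such as clicking and typing, which makes it well suited to scraping dynamic content. Selenium supports multiple languages, including Python.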

Pros:

- Versatility: Works with many browsers (Chrome, Firefox, Safari).

- Maturity: Widely used and well proven for testing web applications.

- Wide Community Support: Many tutorials and resources are available.

Use Cases: Selenium is good for projects that need browser automation like logging in to websites or filling out forms.

Playwright

Playwright is a newer browser automation library, developed by Microsoft, that is gaining popularity for scraping dynamic content. It supports multiple languages, including Python, and is known for its speed.

Pros:

- Cross-Browser Testing: Works with Chromium, Firefox, and WebKit.

- Automatic Waiting: Reduces manual delays in scripts.

- Easy Setup: Simple to install and use.

Use Cases: Playwright is good for projects that need speed and reliability, especially testing web applications.

Pyppeteer

Pyppeteer is an unofficial Python port of Puppeteer, a popular Node.js library. It lets you control Chrome or Chromium, typically in headless mode.

Pros:

- Headless Mode: Runs without a visible browser window by default, which saves resources.

- Built-in Utilities: Simplifies tasks like taking screenshots and generating PDFs.

- JavaScript Execution: Interacts with JavaScript-heavy pages efficiently.

Use Cases: Pyppeteer is good for scraping data from modern websites with heavy JavaScript usage, like e-commerce platforms.

In summary, each tool has its strengths and best-use scenarios. Depending on your project requirements, you can choose the one that fits your needs best.

Practical Example: Using Pyppeteer to Scrape Dynamic Content

In this section, we will go through a practical example of using Pyppeteer to scrape dynamic content from a JavaScript-rendered web page. We will cover everything from setting up your environment to extracting product details.

Prerequisites

- Python 3.7+ installed on your system

- Basic understanding of Python and HTML

Setting Up the Python Environment

Before we dive into scraping, let’s prepare our Python environment.

Create a new project directory:

```bash
mkdir pyppeteer_scraper
cd pyppeteer_scraper
```

Set up and activate a virtual environment:

```bash
python -m venv venv
source venv/bin/activate  # on Windows: venv\Scripts\activate
```

Install the necessary packages:

```bash
pip install pyppeteer
```

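Note: If you run into issues, make sure you are using the correct Python version and check for installation errors. The first time Pyppeteer launches a browser, it downloads a compatible Chromium build; you can run `pyppeteer-install` to download it ahead of time.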

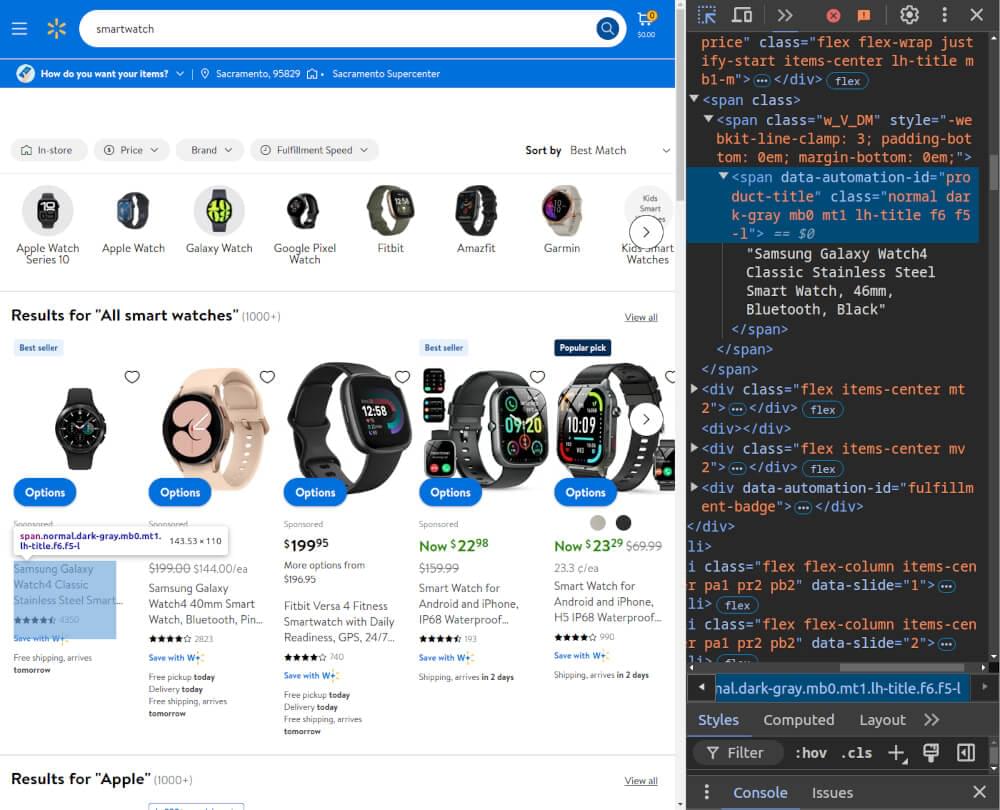

Inspecting a JavaScript-Rendered Page for Selectors

Once you have your environment set up, you will want to find the selectors for the elements you want to scrape. Here’s how:

- Open your browser and go to the target page. For this example, we will use the Walmart URL below, as Walmart also uses JavaScript rendering.

https://www.walmart.com/search?q=smartwatch

- Right-click on the product title and select Inspect to open the Developer Tools.

- Hover over elements in the HTML structure to see the associated selectors. Look for class names or unique attributes that stay stable across page loads, such as `data-*` attributes. Auto-generated class names often change between deployments, so avoid relying on them.
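To find candidate `data-*` hooks quickly, you can save the rendered HTML (in Pyppeteer, `await page.content()` returns it) and count which `data-*` attributes repeat. Below is a minimal standard-library sketch; `data_attribute_selectors` is a hypothetical helper. Attributes that repeat once per product card are good selector candidates.

```python
from collections import Counter
from html.parser import HTMLParser


class _DataAttributeCounter(HTMLParser):
    """Count data-* attributes, both by name and by name="value" pair."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name.startswith("data-"):
                self.counts[f"[{name}]"] += 1
                if value:
                    self.counts[f'[{name}="{value}"]'] += 1


def data_attribute_selectors(html, top=10):
    """Return the most common data-* selectors as (selector, count) pairs."""
    parser = _DataAttributeCounter()
    parser.feed(html)
    parser.close()
    return parser.counts.most_common(top)
```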

Creating a Scraper Using Pyppeteer to Extract Product Details

Create the Scraper Script

Create a new Python file named scraper.py and start by adding the following imports:

```python
import asyncio
from pyppeteer import launch
```

Define the Scraper Function

Next, we’ll define a function that handles the scraping process. This function will launch a browser, navigate to the desired URL with a custom User-Agent and headers, and extract the product details.

```python
async def scrape_product_details(url):
    ...  # launch the browser, open the URL, extract product details
```
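Here is a minimal sketch of such a function. The CSS selectors, the User-Agent string, and the `clean_products` helper are assumptions for illustration. Confirm the selectors in DevTools, because Walmart changes its markup, and be aware that it may serve a bot check to automated browsers, in which case `waitForSelector` times out. The sketch waits with `networkidle2` and `waitForSelector` before extracting, then reads every card’s title and price in a single `querySelectorAllEval` call.

```python
# Example values (assumptions): verify the selectors in DevTools before relying on them.
ITEM_SELECTOR = "div[data-item-id]"
TITLE_SELECTOR = '[data-automation-id="product-title"]'
PRICE_SELECTOR = '[data-automation-id="product-price"]'
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)

# Runs inside the page: map every product card to its title and price text.
EXTRACT_JS = """(items, titleSel, priceSel) => items.map(item => {
    const title = item.querySelector(titleSel);
    const price = item.querySelector(priceSel);
    return {
        title: title ? title.innerText : null,
        price: price ? price.innerText : null,
    };
})"""


def clean_products(raw_items):
    """Trim whitespace and drop cards that have no title."""
    products = []
    for item in raw_items:
        title = (item.get("title") or "").strip()
        price = (item.get("price") or "").strip()
        if title:
            products.append({"title": title, "price": price or None})
    return products


async def scrape_product_details(url):
    # Imported here so clean_products() can be reused without Pyppeteer installed.
    from pyppeteer import launch

    browser = await launch(headless=True)
    try:
        page = await browser.newPage()
        # Custom User-Agent and headers so requests look less like a default headless browser.
        await page.setUserAgent(USER_AGENT)
        await page.setExtraHTTPHeaders({"Accept-Language": "en-US,en;q=0.9"})
        # "networkidle2": navigation ends once at most 2 connections stay open for 500 ms.
        await page.goto(url, waitUntil="networkidle2", timeout=60000)
        # Wait until JavaScript has rendered at least one product card.
        await page.waitForSelector(ITEM_SELECTOR, timeout=30000)
        raw_items = await page.querySelectorAllEval(
            ITEM_SELECTOR, EXTRACT_JS, TITLE_SELECTOR, PRICE_SELECTOR
        )
    finally:
        await browser.close()

    products = clean_products(raw_items)
    for product in products:
        print(f"{product['title']} | {product['price']}")
    return products
```

To try it, run `asyncio.run(scrape_product_details("https://www.walmart.com/search?q=smartwatch"))`. Importing Pyppeteer at the top of the file instead works just as well; the local import only keeps the parsing helper usable on its own.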

Run the Scraper

Add this to the end of your scraper.py file so the scraper runs when you execute the script directly:

```python
if __name__ == "__main__":
    asyncio.run(scrape_product_details("https://www.walmart.com/search?q=smartwatch"))
```

Complete Script Example

Here’s what the complete scraper.py file should look like:

```python
import asyncio
from pyppeteer import launch
```

When you run the script, it will open a headless browser, navigate to the specified URL, and print the product titles and prices to the console.

Common Pitfalls and Troubleshooting Tips

When scraping websites that use JavaScript to load content, you might run into some common issues. Here are a few tips to help you troubleshoot.

Handling Delays and Timeouts

Web scraping can be tricky because pages take time to load. If your scraper tries to access elements before they have loaded, it will either raise an error or return empty results. Here are some ways to handle delays:

- Use Fixed Wait Times: You can add a fixed wait before your scraper starts looking for elements, giving the page time to load. This is simple but brittle: if the wait is too short, content may still be missing, and if it is too long, every page wastes time. For example, you can use `asyncio.sleep`:

```python
await asyncio.sleep(5)  # inside an async function: wait 5 seconds
```

- Dynamic Waiting: Instead of relying solely on fixed waits, use dynamic waits such as `waitForSelector`, which pauses the script until the given element appears on the page or a timeout expires.
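Pyppeteer’s `waitForSelector` and `waitForFunction` already implement dynamic waiting. The sketch below shows the same idea as a plain asyncio helper, which can be handy for conditions you want to check from Python, such as a minimum number of product cards. `wait_until` is a hypothetical name, not a Pyppeteer method.

```python
import asyncio
import time


async def wait_until(condition, timeout=10.0, interval=0.25):
    """Poll an async condition until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = await condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise asyncio.TimeoutError(f"condition not met within {timeout} s")
        await asyncio.sleep(interval)  # back off briefly instead of busy-waiting
```

For example, `await wait_until(lambda: page.querySelector("div[data-item-id]"), timeout=15)` returns the first matching element as soon as it exists, instead of always sleeping for a fixed time.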

Resolving Errors Related to Page Loading and Rendering

You may run into errors due to page loading or rendering issues. Here are some common problems and how to fix them:

- Check the URL: Make sure you are navigating to the correct URL. A typo will give you a “page not found” error.

- Inspect Page Elements: Use your browser’s developer tools to re-inspect the elements you are scraping. If the HTML structure has changed, update your selectors.

- Network Issues: If your internet connection is slow or unstable, pages may load slowly or not at all. Try testing your scraper on a faster connection or increasing your timeouts.

- Handle JavaScript Errors: Some websites will block scrapers or have JavaScript errors that prevent the page from rendering. If your scraper is not working, check the console for JavaScript errors and adjust your scraping strategy.

- Use Error Handling: Use try-except blocks in your code to catch and handle errors. This way, your scraper will keep running even if it hits a minor issue.
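Try-except blocks become more useful when combined with retries for transient failures such as timeouts or dropped connections. This is a minimal sketch assuming exponential backoff; `with_retries` is a hypothetical helper, not part of Pyppeteer. By default it retries on any exception, so consider narrowing `retry_on` (for example to `pyppeteer.errors.TimeoutError`) to avoid retrying genuine bugs.

```python
import asyncio
import logging


async def with_retries(make_attempt, attempts=3, base_delay=1.0, retry_on=(Exception,)):
    """Await make_attempt() up to `attempts` times, doubling the pause after each failure."""
    for attempt in range(1, attempts + 1):
        try:
            return await make_attempt()
        except retry_on as exc:
            if attempt == attempts:
                raise  # out of attempts: let the caller see the last error
            delay = base_delay * 2 ** (attempt - 1)
            logging.warning("Attempt %d failed (%s); retrying in %.1f s", attempt, exc, delay)
            await asyncio.sleep(delay)
```

For example, `await with_retries(lambda: page.goto(url, timeout=60000))` retries navigation up to three times, waiting 1 s and then 2 s between attempts.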

Optimizing with Crawlbase Smart AI Proxy

Crawlbase Smart AI Proxy helps you scrape faster and more reliably by routing requests through a pool of IP addresses, which makes it much harder for websites to block you. The service helps prevent IP blocks, speeds up data collection by letting you send requests in parallel, and gives you access to region-specific content.

To use Crawlbase Smart AI Proxy, sign up for an account and get your proxy credentials. Then, configure your scraper to route its requests through Crawlbase. Here’s a quick example in Python:

```python
proxy_url = 'http://_USER_TOKEN_:@smartproxy.crawlbase.com:8012'
```

Replace _USER_TOKEN_ with your Crawlbase token. By integrating Crawlbase Smart AI Proxy into your workflow, you can improve efficiency and reduce the chances of interruptions from IP blocks, enabling more successful data extraction.
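Pyppeteer drives Chrome, which does not accept credentials inside its `--proxy-server` flag. A sketch of wiring the proxy URL into the browser therefore splits it into a server address and credentials that are passed to `page.authenticate`. Setting `ignoreHTTPSErrors=True` is an assumption based on the proxy handling HTTPS traffic itself, so check Crawlbase’s documentation for the recommended settings. `split_proxy_url` and `fetch_via_proxy` are hypothetical helpers.

```python
from urllib.parse import urlsplit


def split_proxy_url(proxy_url):
    """Split 'scheme://user:pass@host:port' into a server string and a credentials dict."""
    parts = urlsplit(proxy_url)
    server = f"{parts.scheme}://{parts.hostname}:{parts.port}"
    credentials = {"username": parts.username or "", "password": parts.password or ""}
    return server, credentials


async def fetch_via_proxy(url, proxy_url):
    """Render `url` in headless Chrome routed through the proxy and return the HTML."""
    from pyppeteer import launch  # local import keeps split_proxy_url() usable on its own

    server, credentials = split_proxy_url(proxy_url)
    browser = await launch(
        headless=True,
        args=[f"--proxy-server={server}"],
        ignoreHTTPSErrors=True,  # assumption: the proxy presents its own TLS certificate
    )
    try:
        page = await browser.newPage()
        await page.authenticate(credentials)  # answers the proxy's authentication challenge
        await page.goto(url, waitUntil="networkidle2", timeout=90000)
        return await page.content()
    finally:
        await browser.close()
```

Usage: `html = asyncio.run(fetch_via_proxy("https://www.walmart.com/search?q=smartwatch", proxy_url))`.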

Final Thoughts

Scraping JavaScript-rendered pages can be challenging but fun. With the right tool, such as Pyppeteer, Selenium, or Playwright, you can do it. In this blog, we covered the differences between static and JavaScript-rendered pages, common challenges, and a practical Pyppeteer example to help you get started.

Use optimization techniques like Crawlbase Smart AI Proxy to scrape faster and avoid IP bans. As you start web scraping, remember to respect the terms of service of the websites you target. Follow best practices and you’ll get the data you need while keeping good relations with web services. Happy scraping!

Frequently Asked Questions

Q. What is a JavaScript-rendered page?

A JavaScript-rendered page is a web page that loads content dynamically using JavaScript. Unlike static pages, which include all their content in the initial HTML, JavaScript-rendered pages load data after the initial page load. This makes them harder to scrape because the content is not in the page source right away.

Q. Why do I need a special tool to scrape JavaScript-rendered pages?

Tools like Selenium, Pyppeteer, and Playwright are needed to scrape JavaScript-rendered pages because they control a real browser that executes the page’s JavaScript, just as it would for a human visitor. They let you wait for the page to load and render its content before extracting data. Without them, you might miss important information or get incomplete results.

Q. How can I avoid getting blocked while scraping?

To avoid getting blocked, you can use techniques like rotating user agents, adding delays between requests, and using proxies. Tools like Crawlbase Smart AI Proxy can help you manage your IPs, making it less likely that websites will detect and block your scraping activities. Always remember to follow the website’s terms of service to keep your scraping ethical.
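The first two techniques can be combined in a small planning step. This is a minimal sketch; the User-Agent strings are illustrative values, and `polite_plan` is a hypothetical helper that pairs each URL with the next User-Agent in rotation and a random pause.

```python
import itertools
import random

# Illustrative desktop User-Agent strings; replace them with current ones you trust.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]


def polite_plan(urls, user_agents=USER_AGENTS, min_delay=2.0, max_delay=5.0, rng=random):
    """Return (url, user_agent, delay_seconds) tuples with rotating agents and random pauses."""
    agents = itertools.cycle(user_agents)
    return [(url, next(agents), rng.uniform(min_delay, max_delay)) for url in urls]
```

In a Pyppeteer loop, you would call `await page.setUserAgent(ua)` and `await page.goto(url)` for each entry, then `await asyncio.sleep(delay)` before the next one.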