In our modern world, information is everywhere. And when it comes to finding out what people think, Yelp.com stands tall. It’s not just a place to find good food or services; it’s a goldmine of opinions and ratings from everyday users. But how can we dig deep and get all this valuable info out? That’s where this blog steps in.

Scraping Yelp might seem tricky, but with the power of Python, a friendly and popular coding language, and the help of the Crawlbase Crawling API, it becomes a breeze. Together, we’ll look at how Yelp’s pages are structured, build a Yelp scraper to grab their data, and store that data for future use. So, whether you’re just starting out or you’ve scraped a bit before, this guide is packed with easy steps and smart tips to make the most of Yelp’s rich data.

Table of Contents

- Why is Yelp Data Scraping Special?

- Using Python for Web Scraping Yelp

- Installing and Setting up Necessary Libraries

- Choosing the Right Development IDE

- Introduction to Crawlbase and its Features

- How this API Simplifies Web Scraping Tasks

- Getting a Token for Crawlbase Crawling API

- Crawlbase Python library

- Crafting the Right URL for Targeted Searches

- How to Use Crawlbase Python Library to Fetch Web Content

- Inspecting HTML to Get CSS Selectors

- Parsing HTML with BeautifulSoup

- Incorporating Pagination: Scraping Multiple Pages Efficiently

- Storing Data into CSV File

- Storing Data into SQLite Database

- How to Use Scraped Yelp Data for Business or Research

Why Scrape Yelp?

Let’s start by talking about how useful scraping Yelp can be. Yelp is a place where people leave reviews about restaurants, shops, and more, and web scraping lets us gather that information automatically. Instead of manually going through pages and pages of content, a web scraping tool extracts the data you need, saving time and effort.

Why is Yelp Data Scraping Special?

Yelp isn’t just another website; it’s a platform built on people’s voices and experiences. Every review, rating, and comment on Yelp represents someone’s personal experience with a business. This collective feedback paints a detailed picture of consumer preferences, business reputations, and local trends. For businesses, understanding this feedback can lead to improvements and better customer relationships. For researchers, it provides a real-world dataset to analyze consumer behavior, preferences, and sentiments. Moreover, entrepreneurs can use scraped Yelp data to spot gaps in the market or to validate business ideas. Essentially, Yelp data is a window into the pulse of local communities and markets, making it a vital resource for various purposes.

Yelp Scraping Libraries and Tools

In order to scrape Yelp with Python, having the right tools at your fingertips is crucial. Setting up your environment properly ensures a smooth scraping experience. Let’s walk through the initial steps to get everything up and running.

Using Python for Web Scraping Yelp

Python, known for its simplicity and versatility, is a popular choice for web scraping tasks. Its rich ecosystem offers a plethora of libraries tailored for scraping, data extraction, and analysis. One such powerful tool is BeautifulSoup4 (often abbreviated as BS4), a library that aids in pulling data out of HTML and XML files. Coupled with Crawlbase, which simplifies the scraping process by handling the intricacies of web interactions, and Pandas, a data manipulation library that structures scraped data into readable formats, you have a formidable toolkit for any scraping endeavor.

Installing and Setting up Necessary Libraries

To equip your Python environment for scraping tasks, follow these pivotal steps:

- Python: If Python isn’t already on your system, visit the official website to download and install the appropriate version for your OS. Follow the installation instructions to get Python up and running.

- Pip: As Python’s package manager, Pip facilitates the installation and management of libraries. While many Python installations come bundled with Pip, ensure it’s available in your setup.

- Virtual Environment: Adopting a virtual environment is a prudent approach. It creates an isolated space for your project, ensuring dependencies remain segregated from other projects. To initiate a virtual environment, execute:

```bash
python -m venv myenv
```

Open your command prompt or terminal and use the command for your platform to activate the environment:

```
myenv\Scripts\activate        # Windows
source myenv/bin/activate     # macOS/Linux
```

- Install Required Libraries: The next step is to install the essential libraries. Open your command prompt or terminal and run the following commands:

```bash
pip install beautifulsoup4
pip install crawlbase pandas matplotlib
```

Once these libraries are installed, you’re all set to embark on your web scraping journey. Ensuring that your environment is correctly set up not only paves the way for efficient scraping but also ensures that you harness the full potential of the tools at your disposal.

Choosing the Right Development IDE

Selecting the right Integrated Development Environment (IDE) can significantly boost productivity. While you can write Python code in a simple text editor, a dedicated IDE offers features like code completion, debugging tools, and version control integration.

Some popular IDEs for Python development include:

- Visual Studio Code (VS Code): VS Code is a free, open-source code editor developed by Microsoft. It has a vibrant community and a wide range of extensions, including strong support for Python development.

- PyCharm: PyCharm is an IDE by JetBrains, known for its intelligent coding assistance and robust Python support.

- Sublime Text: Sublime Text is a lightweight and customizable text editor popular among developers for its speed and extensibility.

Choose an IDE that suits your preferences and workflow.

Utilizing Crawlbase Crawling API

The Crawlbase Crawling API stands as a versatile solution tailored for navigating the complexities of web scraping, particularly in scenarios like Yelp, where dynamic content demands adept handling. This API serves as a game-changer, simplifying access to web content, rendering JavaScript, and presenting HTML content ready for parsing.

How this API Simplifies Web Scraping Tasks

At its core, web scraping involves fetching data from websites. However, the real challenge lies in navigating through the maze of web structures, handling potential pitfalls like CAPTCHAs, and ensuring data integrity. Crawlbase simplifies these tasks by offering:

- JavaScript Rendering: Many modern websites rely heavily on JavaScript for dynamic content loading. The Crawlbase API can render these pages, giving you access to content that only appears after scripts run.

- Simplified Requests: The API abstracts away the intricacies of managing HTTP requests, cookies, and sessions. This allows you to concentrate on refining your scraping logic, while the API handles the technical nuances seamlessly.

- Well-Structured Data: The data obtained through the API is typically well structured, which streamlines parsing and extraction, so you can efficiently retrieve the business information you need from Yelp.

- Scalability: The Crawlbase Crawling API supports scalable scraping by efficiently managing multiple requests concurrently. This scalability is particularly advantageous when dealing with the large number of listings and reviews on Yelp.

Note: The Crawlbase Crawling API offers a multitude of parameters at your disposal, enabling you to fine-tune your scraping requests. These parameters can be tailored to suit your unique needs, making your web scraping efforts more efficient and precise. You can explore the complete list of available parameters in the API documentation.

Getting a Token for Crawlbase Crawling API

To access the Crawlbase Crawling API, you’ll need an API token. Here’s a simple guide to obtaining one:

- Visit the Crawlbase Website: Open your web browser and navigate to the Crawlbase signup page to begin the registration process.

- Provide Your Details: You’ll be asked to provide your email address and create a password for your Crawlbase account. Fill in the required information.

- Verification: After submitting your details, you may need to verify your email address. Check your inbox for a verification email from Crawlbase and follow the provided instructions.

- Login: Once your account is verified, return to the Crawlbase website and log in using your newly created credentials.

- Access Your API Token: You’ll need an API token to use the Crawlbase Crawling API. You can find your API tokens in your Crawlbase account dashboard.

Note: Crawlbase offers two types of tokens: one for static websites (the Normal Token) and another for dynamic, JavaScript-driven websites (the JavaScript Token). For this Yelp scraper, we’ll use the Normal Token. Crawlbase offers an initial allowance of 1,000 free requests for the Crawling API, making it a good fit for our web scraping project.
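Rather than hardcoding your token in a script you might share or commit, you can read it from an environment variable. Here is a minimal sketch. The variable name CRAWLBASE_TOKEN and the helper get_crawlbase_token are hypothetical choices of ours, not something Crawlbase requires:

```python
import os


def get_crawlbase_token(env_var: str = "CRAWLBASE_TOKEN") -> str:
    """Read the Crawlbase token from an environment variable (hypothetical name)."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fail early with a clear message instead of sending unauthenticated requests.
        raise RuntimeError(f"Set the {env_var} environment variable to your Crawlbase token.")
    return token
```

You could then create the client with CrawlingAPI({'token': get_crawlbase_token()}) and keep the token itself out of your source files.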

Crawlbase Python library

The Crawlbase Python library offers a simple way to interact with the Crawlbase Crawling API. You can use this lightweight and dependency-free Python class as a wrapper for the Crawlbase API. To begin, initialize the Crawling API class with your Crawlbase token. Then, you can make GET requests by providing the URL you want to scrape and any desired options, such as custom user agents or response formats. For example, you can scrape a web page and access its content like this:

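```python
from crawlbase import CrawlingAPI
response = CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'}).get('https://www.example.com')  # page HTML is in response['body']
```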

This library simplifies the process of fetching web data and is particularly useful for scenarios where dynamic content, IP rotation, and other advanced features of the Crawlbase APIs are required.
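When you call the client from several places, it helps to wrap the request in one function that checks the status and decodes the body. Here is a minimal sketch. fetch_html is a hypothetical helper, and it assumes only what the library’s usage shows: a get(url, options) method that returns a dict with 'status_code' and 'body' keys. It accepts any such client, which also makes it easy to test without network access:

```python
from typing import Any, Dict, Optional


def fetch_html(client: Any, url: str, options: Optional[Dict[str, Any]] = None) -> Optional[str]:
    """Fetch a page through a Crawlbase-style client and return decoded HTML, or None.

    `client` is anything with a get(url, options) method that returns a dict
    with 'status_code' and 'body' keys, such as crawlbase.CrawlingAPI.
    """
    response = client.get(url, options or {})
    # Treat anything other than HTTP 200 as a failed fetch.
    if response.get("status_code") != 200:
        return None
    body = response.get("body", b"")
    # The body may arrive as bytes; decode it, replacing any invalid byte sequences.
    if isinstance(body, bytes):
        return body.decode("utf-8", errors="replace")
    return body
```

With the real library, pass CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'}) as the client. The optional dictionary carries Crawling API parameters such as a custom user agent. Check the API documentation for the exact parameter names.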

How to Create Yelp Scraper With Python

Yelp offers a plethora of information, and Python’s robust libraries enable us to extract this data efficiently. Let’s delve into the intricacies of fetching and parsing Yelp data using Python.

Crafting the Right URL for Targeted Searches

To retrieve specific data from Yelp, it’s crucial to frame the right search URL. For instance, if we’re keen on scraping Italian restaurants in San Francisco, our Yelp URL would resemble:

```
https://www.yelp.com/search?find_desc=Italian+Restaurants&find_loc=San+Francisco%2C+CA
```

Here:

- find_desc pinpoints the business category.

- find_loc specifies the location.
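Rather than hand-encoding spaces and commas, you can let Python build the query string. Here is a minimal sketch using the standard library’s urllib.parse.urlencode. build_search_url is a hypothetical helper name:

```python
from urllib.parse import urlencode

YELP_SEARCH_BASE = "https://www.yelp.com/search"


def build_search_url(description: str, location: str) -> str:
    """Build a Yelp search URL from a business category and a location."""
    params = {"find_desc": description, "find_loc": location}
    # urlencode escapes spaces as '+' and commas as '%2C', matching the URL above.
    return f"{YELP_SEARCH_BASE}?{urlencode(params)}"
```

For example, build_search_url("Italian Restaurants", "San Francisco, CA") reproduces the San Francisco URL shown above, and switching city or cuisine only changes the arguments.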

How to Use Crawlbase Python Library to Fetch Web Content

Crawlbase provides an efficient way to obtain web content. By integrating it with Python, our scraping endeavor becomes more streamlined. A snippet for fetching Yelp’s content would be:

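```python
from crawlbase import CrawlingAPI
print(CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'}).get('https://www.yelp.com/search?find_desc=Italian+Restaurants&find_loc=San+Francisco%2C+CA')['body'].decode('utf-8'))
```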

To initiate the Yelp scraping process, follow these straightforward steps:

- Create the Script: Begin by creating a new Python script file. Name it yelp_scraping.py.

- Paste the Code: Copy the previously provided code and paste it into your newly created yelp_scraping.py file.

- Execution: Open your command prompt or terminal.

- Run the Script: Navigate to the directory containing yelp_scraping.py and execute the script using the following command:

```bash
python yelp_scraping.py
```

Upon execution, the HTML content of the page will be displayed in your terminal.

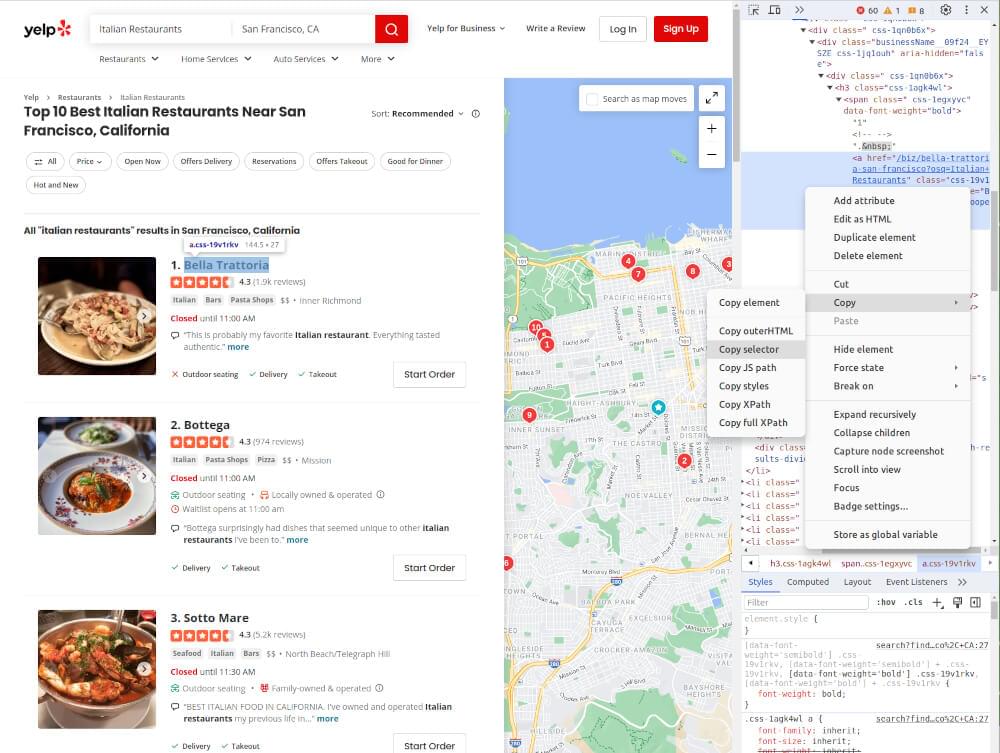

Inspecting HTML to Get CSS Selectors

After gathering the HTML content from the search results page, the next step is to study its layout and find the specific data we want. Your browser’s developer tools make this much easier. First, inspect the HTML layout to identify the elements you’re interested in. Then, find the CSS selectors that target them. Here’s how:

- Open the Web Page: Navigate to the Yelp search results page you want to scrape.

- Right-Click and Inspect: Right-click an element you want to extract and select “Inspect” or “Inspect Element” from the context menu. This opens the browser’s developer tools.

- Locate the HTML Source: The developer tools display the page’s HTML source. As you hover over elements in the HTML panel, the corresponding parts of the page are highlighted.

- Identify CSS Selectors: To target a particular element, right-click it in the developer tools and choose “Copy” > “Copy selector.” This copies the element’s CSS selector to your clipboard, ready to use in your scraper.

Once you have these selectors, you can proceed to structure your Yelp scraper to extract the required information effectively.

Parsing HTML with BeautifulSoup

After fetching the raw HTML content, the subsequent challenge is to extract meaningful data. This is where BeautifulSoup comes into play. It’s a Python library that parses HTML and XML documents, providing tools for navigating the parsed tree and searching within it.

Using BeautifulSoup, you can pinpoint specific HTML elements and extract the required information. In our Yelp example, BeautifulSoup helps extract restaurant names, ratings, review counts, addresses, price range, and popular items from the fetched page.

```python
from crawlbase import CrawlingAPI
from bs4 import BeautifulSoup
```

This Python script uses the CrawlingAPI from the crawlbase library to fetch web content. The main functions are:

- fetch_yelp_page(url): Retrieves HTML content from a given URL using Crawlbase.

- extract_restaurant_info(listing_card): Parses a single restaurant’s details from its HTML card.

- extract_restaurants_info(html_content): Gathers all restaurant details from the entire Yelp page’s HTML.

When run directly, it fetches Italian restaurant data from Yelp in San Francisco and outputs it as formatted JSON.

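To make the parsing step concrete, here is a minimal sketch of how extract_restaurant_info and extract_restaurants_info could be written with BeautifulSoup’s CSS-selector methods, select and select_one. The selectors are hypothetical placeholders. Yelp’s real class names are auto-generated and change over time, so substitute the ones you copied from the developer tools. Only four of the fields are shown here. Address and popular items follow the same pattern:

```python
from bs4 import BeautifulSoup

# Hypothetical selectors for illustration only; replace them with the
# selectors you copied from your browser's developer tools.
CARD_SELECTOR = "div[data-testid='serp-ia-card']"
FIELD_SELECTORS = {
    "name": "h3 a",
    "rating": "span.rating",
    "review_count": "span.review-count",
    "price_range": "span.price-range",
}


def extract_restaurant_info(listing_card):
    """Parse one listing card into a dict; fields that are missing become None."""
    info = {}
    for field, selector in FIELD_SELECTORS.items():
        element = listing_card.select_one(selector)
        info[field] = element.get_text(strip=True) if element else None
    return info


def extract_restaurants_info(html_content):
    """Parse every listing card found on a search results page."""
    soup = BeautifulSoup(html_content, "html.parser")
    return [extract_restaurant_info(card) for card in soup.select(CARD_SELECTOR)]
```

Passing the returned list to json.dumps(..., indent=2) gives formatted JSON output. Returning None for missing fields keeps one odd listing from crashing the whole page.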

Incorporating Pagination for Yelp

Pagination is crucial when scraping platforms like Yelp that display results across multiple pages. Each page typically contains a subset of results, and without handling pagination, you’d only scrape the data from the initial page. To retrieve comprehensive data, it’s essential to iterate through each page of results.

To achieve this with Yelp, we’ll utilize the URL parameter &start= which specifies the starting point for the displayed results on each page.

Let’s update the existing code to incorporate pagination:

```python
from crawlbase import CrawlingAPI
from bs4 import BeautifulSoup
```

In the updated code, we loop through the range of start values (0, 10, 20, …, 50) to fetch data from each page of Yelp’s search results. We then extend the all_restaurants_data list with the data from each page. Remember to adjust the range if you want to scrape more or fewer results.
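The pagination loop can be sketched on its own. In this minimal version, page_urls and scrape_all_pages are hypothetical names. The base URL is assumed to already contain a query string, and the page size of 10 matches the start values above. The fetch and parse arguments stand in for functions like fetch_yelp_page and extract_restaurants_info. The one-second pause is our own assumption, not a limit published by Yelp:

```python
import time
from typing import Callable, List, Optional


def page_urls(base_url: str, pages: int, page_size: int = 10) -> List[str]:
    """One URL per results page, appending Yelp's &start= offset (0, 10, 20, ...)."""
    return [f"{base_url}&start={offset}" for offset in range(0, pages * page_size, page_size)]


def scrape_all_pages(
    base_url: str,
    pages: int,
    fetch: Callable[[str], Optional[str]],
    parse: Callable[[str], List[dict]],
    delay_seconds: float = 1.0,
) -> List[dict]:
    """Fetch and parse each results page, pausing between requests."""
    all_restaurants_data: List[dict] = []
    for index, url in enumerate(page_urls(base_url, pages)):
        if index and delay_seconds:
            time.sleep(delay_seconds)  # pause between requests to go easy on Yelp's servers
        html = fetch(url)
        if html is None:
            continue  # skip a page that failed to download instead of aborting the run
        all_restaurants_data.extend(parse(html))
    return all_restaurants_data
```

Calling page_urls(url, 6) yields the six offsets 0 through 50. Increase pages to go deeper into the results.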

Storing and Analyzing Yelp Data

Once you’ve successfully scraped data from Yelp, the next crucial steps involve storing this data for future use and extracting insights from it. The data you’ve collected can be invaluable for various applications, from business strategies to academic research. This section will guide you on how to store your scraped Yelp data efficiently and the potential applications of this data.

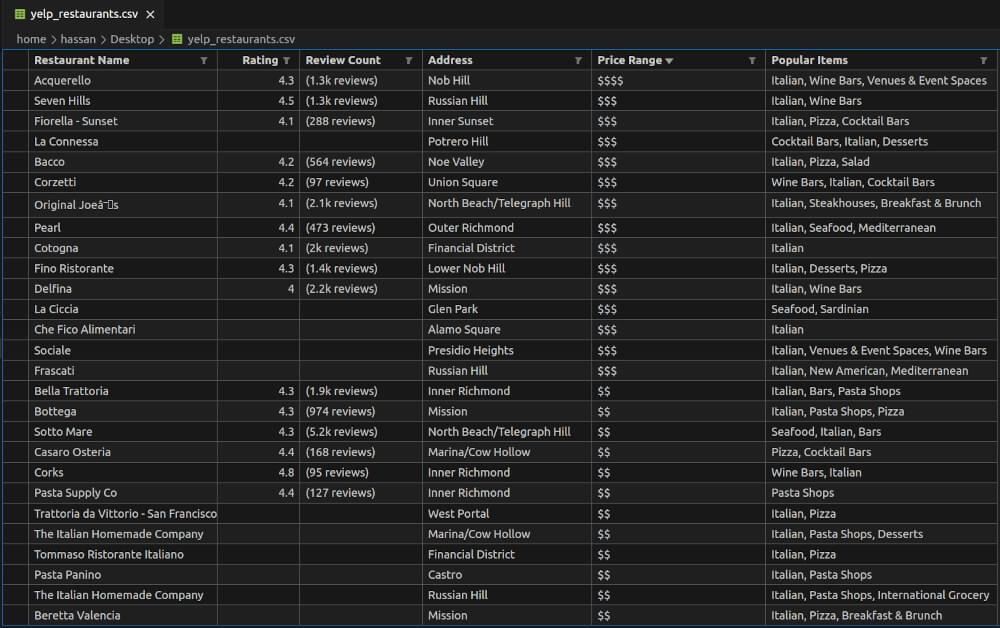

Storing Data into CSV File

CSV (comma-separated values) is a widely recognized file format for tabular data. It offers a simple and efficient way to archive and share your Yelp data. Python’s pandas library provides a user-friendly interface for data operations, including writing data to a CSV file.

Let’s update the previous script to incorporate this change:

```python
from crawlbase import CrawlingAPI
import pandas as pd
```

The save_to_csv function uses pandas to convert a given data list into a DataFrame and then saves it as a CSV file with the provided filename.
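With pandas, this step usually comes down to pd.DataFrame(data).to_csv(filename, index=False). If you would rather avoid the pandas dependency, the standard library’s csv.DictWriter can do the same job. This is a swapped-in technique, not the script’s own code, and save_to_csv_stdlib is a hypothetical name. Here is a minimal sketch:

```python
import csv
from typing import Dict, List


def save_to_csv_stdlib(data: List[Dict], filename: str) -> None:
    """Write a list of dicts to CSV, one column per key."""
    if not data:
        return
    # Collect every key that appears in any row, keeping first-seen order.
    fieldnames = list(dict.fromkeys(key for row in data for key in row))
    with open(filename, "w", newline="", encoding="utf-8") as f:
        # Rows missing a key get an empty cell (DictWriter's default restval).
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(data)
```

Gathering field names from every row, rather than only the first, keeps columns like price range even when the first listing lacks them.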

yelp_restaurants.csv Preview:

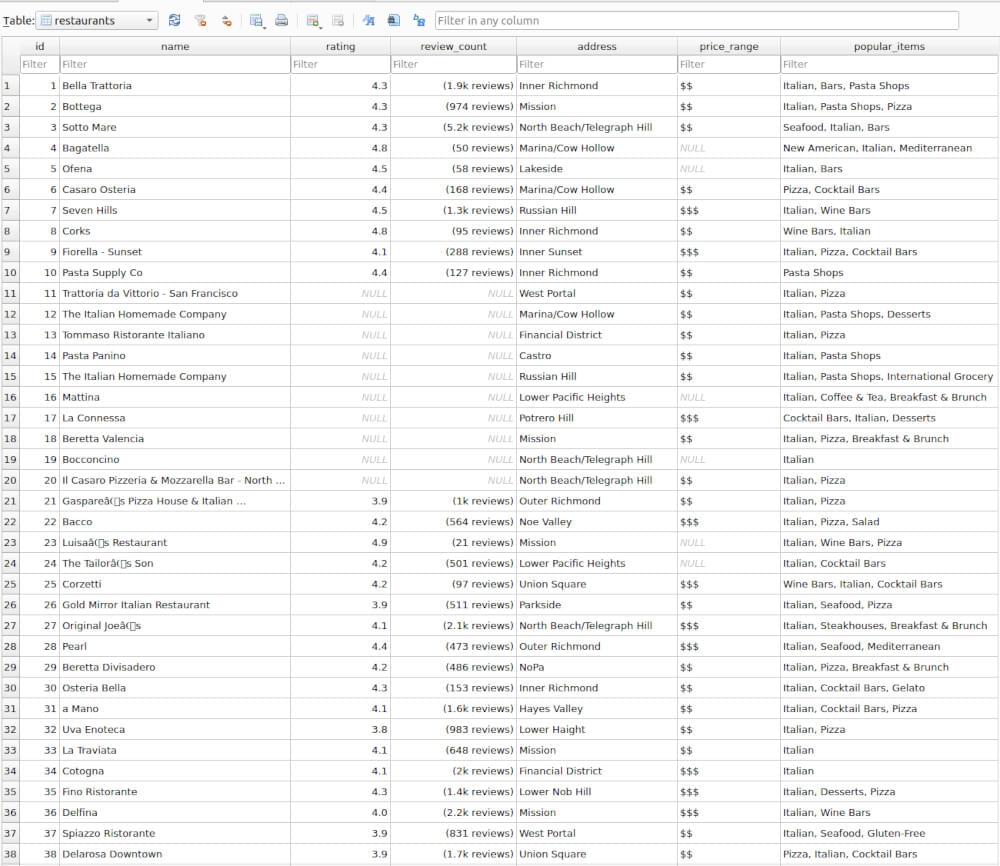

Storing Data into SQLite Database

SQLite is a lightweight disk-based database that doesn’t require a separate server process. It’s ideal for smaller applications or when you need a standalone database. Python’s sqlite3 library allows you to interact with SQLite databases seamlessly.

Let’s update the previous script to incorporate this change:

```python
import sqlite3
```

The save_to_database function stores data from a list into an SQLite database. It first connects to the database and ensures a table named “restaurants” exists with specific columns. Then, it inserts each restaurant’s data from the list into this table. After inserting all data, it saves the changes and closes the database connection.
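Here is a minimal sketch of one possible shape for save_to_database. The column list is a hypothetical schema based on the fields this guide extracts. Values go through ? placeholders so sqlite3 handles quoting. Because SQLite has no list type, popular items are joined into a single string:

```python
import sqlite3
from typing import Dict, List

# Hypothetical schema based on the fields extracted earlier.
COLUMNS = ["name", "rating", "review_count", "address", "price_range", "popular_items"]


def save_to_database(data: List[Dict], db_path: str) -> None:
    """Create the restaurants table if needed and insert one row per restaurant."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS restaurants ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT, rating REAL, "
            "review_count INTEGER, address TEXT, price_range TEXT, popular_items TEXT)"
        )
        rows = []
        for item in data:
            items = item.get("popular_items")
            # Store a list of popular items as one comma-separated string.
            if isinstance(items, (list, tuple)):
                items = ", ".join(items)
            rows.append(tuple(item.get(c) for c in COLUMNS[:-1]) + (items,))
        # Column names are fixed constants; only the values are parameterized.
        placeholders = ", ".join("?" for _ in COLUMNS)
        insert_sql = f"INSERT INTO restaurants ({', '.join(COLUMNS)}) VALUES ({placeholders})"
        conn.executemany(insert_sql, rows)
        conn.commit()
    finally:
        conn.close()
```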

restaurants Table Preview:

How to Use Scraped Yelp Data for Business or Research

Yelp, with its vast repository of reviews, ratings, and other business-related information, offers a goldmine of insights for businesses and researchers alike. Once you’ve scraped this data, the next step is to analyze and visualize it, unlocking actionable insights.

Business Insights:

- Competitive Analysis: By analyzing ratings and reviews of competitors, businesses can identify areas of improvement in their own offerings.

- Customer Preferences: Understand what customers love or dislike about similar businesses and tailor your strategies accordingly.

- Trend Spotting: Identify emerging trends in the market by analyzing review patterns and popular items.

Research Opportunities:

- Consumer Behavior: Dive deep into customer reviews to understand purchasing behaviors, preferences, and pain points.

- Market Trends: Monitor changes in consumer sentiments over time to identify evolving market trends.

- Geographical Analysis: Compare business performance, ratings, and reviews across different locations.

Visualizing the Data:

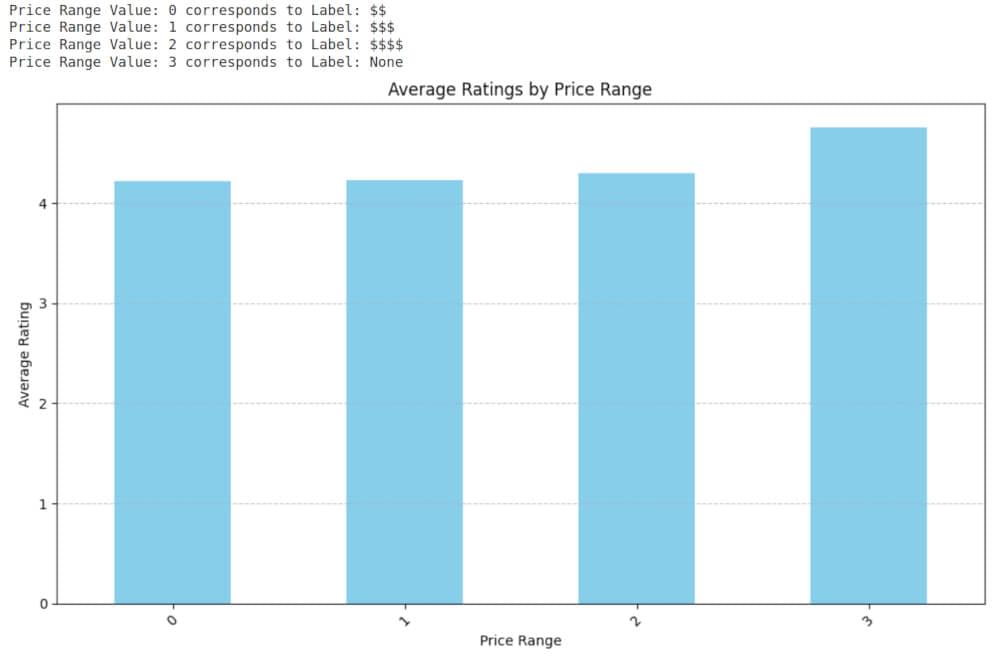

To make the data more digestible and engaging, visualizations play a crucial role. Let’s consider a simple example: a bar graph showcasing how average ratings vary across different price ranges.

```python
import matplotlib.pyplot as plt
```

Output Graph:

This graph can be a useful tool for understanding customer perceptions based on pricing and can guide pricing strategies or marketing efforts for restaurants.
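Whatever plotting code you use, a bar graph like this rests on a simple group-by: the mean rating for each price range. Here is a minimal sketch of that step in plain Python. It assumes each record is a dict with 'price_range' (such as '$$') and 'rating' keys, and average_rating_by_price is a hypothetical name:

```python
from collections import defaultdict
from typing import Dict, List


def average_rating_by_price(data: List[Dict]) -> Dict[str, float]:
    """Average the rating for each price range, skipping rows missing either field."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for row in data:
        price, rating = row.get("price_range"), row.get("rating")
        if not price or rating is None:
            continue
        totals[price] += float(rating)  # ratings may arrive as strings like "4.5"
        counts[price] += 1
    # Sort by the number of '$' signs so bars run from cheapest to priciest.
    return {price: totals[price] / counts[price] for price in sorted(totals, key=len)}
```

The result plugs into matplotlib directly, for example plt.bar(list(averages), list(averages.values())).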

Build a Yelp Scraper with Crawlbase

This guide has given you the basic know-how and tools to scrape Yelp search listings using Python and the Crawlbase Crawling API. Whether you’re new to this or have some experience, the ideas explained here provide a strong starting point for your efforts.

As you continue your web scraping journey, remember the versatility of these skills extends beyond Yelp. Explore our additional guides for platforms like Expedia, DeviantArt, Airbnb, and Glassdoor, broadening your scraping expertise.

Web scraping presents challenges, and our commitment to your success goes beyond this guide. If you encounter obstacles or seek further guidance, the Crawlbase support team is ready to assist. Your success in web scraping is our priority, and we look forward to supporting you on your scraping journey.

Frequently Asked Questions

Q. Is Yelp.com legal to scrape?

Web scraping activities often tread a fine line in terms of legality and ethics. When it comes to platforms like Yelp, it’s crucial to first consult Yelp’s terms of service and robots.txt file. These documents provide insights into what activities the platform permits and restricts. Additionally, while Yelp’s content is publicly accessible, the manner and volume in which you access it can be deemed as abusive or malicious. Furthermore, scraping personal data from users’ reviews or profiles may violate privacy regulations in various jurisdictions. Always prioritize understanding and complying with both the platform’s guidelines and applicable laws.

Q. How often should I update my scraped Yelp data?

The frequency of updating your scraped data hinges on the nature of your project and the dynamism of the data on Yelp. If your aim is to capture real-time trends, user reviews, or current pricing information, more frequent updates might be necessary. However, excessively frequent scraping can strain Yelp’s servers and potentially get your IP address blocked. It’s advisable to strike a balance: determine the criticality of timely updates for your project while being respectful of Yelp’s infrastructure. Monitoring Yelp’s update frequencies or setting up alerts for significant changes can also guide your scraping intervals.

Q. Can I use scraped Yelp data for commercial purposes?

Using scraped data from platforms like Yelp for commercial endeavors poses intricate challenges. Yelp’s terms of service explicitly prohibit scraping, and using their data without permission for commercial gain can lead to legal repercussions. It’s paramount to consult with legal professionals to understand the nuances of data usage rights and intellectual property laws. If there’s a genuine need to leverage Yelp’s data commercially, consider reaching out to Yelp’s data partnerships or licensing departments. They might provide avenues for obtaining legitimate access or partnership opportunities. Always prioritize transparency, ethical data usage, and obtaining explicit permissions to mitigate risks.

Q. How can I ensure the accuracy of my scraped Yelp data?

Ensuring the accuracy and reliability of scraped data is foundational for any data-driven project. When scraping from Yelp or similar platforms, begin by implementing robust error-handling mechanisms in your scraping scripts. These mechanisms can detect and rectify common issues like connection timeouts, incomplete data retrievals, or mismatches. Regularly validate the extracted data against the live source, ensuring that your scraping logic remains consistent with any changes on Yelp’s website. Additionally, consider implementing data validation checks post-scraping to catch any anomalies or inconsistencies. Periodic manual reviews or cross-referencing with trusted secondary sources can act as further layers of validation, enhancing the overall quality and trustworthiness of your scraped Yelp dataset.
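As one example of a post-scraping validation check, here is a minimal sketch that flags suspicious records. The field names (name, rating, review_count) and the 0 to 5 rating range are assumptions based on Yelp’s star ratings, and validate_restaurant is a hypothetical helper:

```python
from typing import Dict, List


def validate_restaurant(row: Dict) -> List[str]:
    """Return a list of problems found in one scraped record (empty means it looks fine)."""
    problems = []
    if not row.get("name"):
        problems.append("missing name")
    rating = row.get("rating")
    if rating is not None:
        try:
            value = float(rating)
        except (TypeError, ValueError):
            problems.append(f"rating is not a number: {rating!r}")
        else:
            # Yelp ratings are star values, assumed here to lie between 0 and 5.
            if not 0 <= value <= 5:
                problems.append(f"rating out of range: {value}")
    count = row.get("review_count")
    if count is not None and not str(count).isdigit():
        problems.append(f"review_count is not a non-negative integer: {count!r}")
    return problems
```

Records that return a non-empty list can be logged and re-checked against the live page rather than silently stored.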