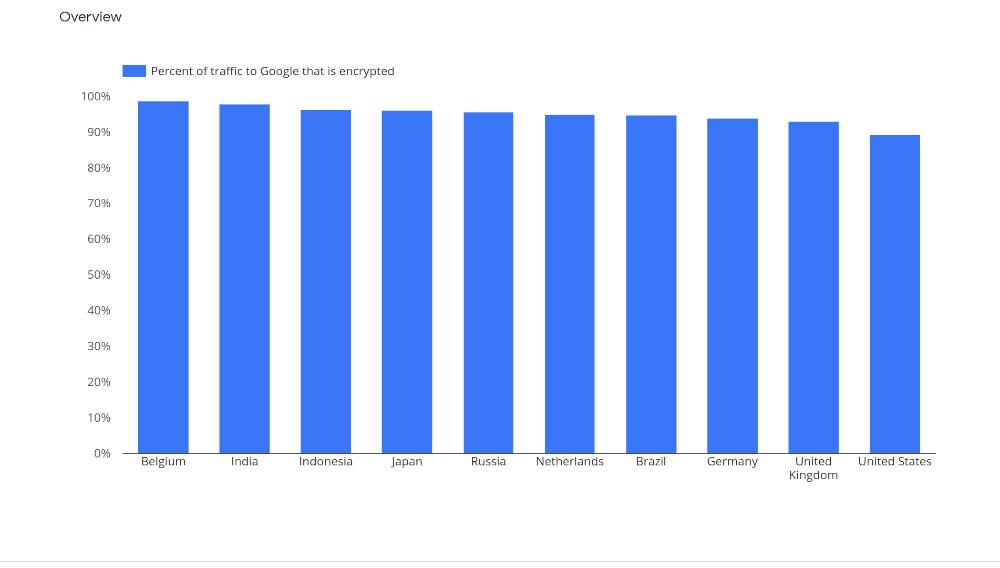

Communication technology is advancing at an electrifying pace, and data communication keeps reaching new horizons. Choosing between HTTP and HTTPS proxies is one way to bridge the gap between fluent communication and data security on the Internet, and many studies have found that proxies play a vital role in transmitting data more securely. According to Google’s Transparency Report, as of January 2022, 95.1% of all web traffic was protected by HTTPS, and this number is expected to keep increasing.

Another report by W3Techs shows that as of January 2021, 81.8% of all websites were using HTTPS. This number is expected to continue to grow as more websites adopt HTTPS and new technologies are developed to make it even more secure.

Many users place their trust in systems they do not control and do not fully understand. Digital certificates signed by certificate authorities and intermediate authorities provide the foundation for secure communication on the Internet.

As HTTPS use grows, it is effectively becoming the default: major browsers already support HTTP/2 only over TLS, that is, only for HTTPS sites. It is also essential to remember that HTTP proxy servers, which act as “stepping stones” between web browsers and servers, are a particularly significant class of system from the standpoint of security and privacy.

Understanding HTTP Proxy

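So what is an HTTP proxy? An HTTP proxy is a server that sits between a client, usually a web browser, and the web servers it talks to. The browser sends its HTTP requests to the proxy, which forwards them on the client’s behalf and relays the responses back. The proxy can be set manually in the browser or operating system, or configured automatically when a computer or browser is set up on a network.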

An HTTP proxy intercepts communication sent over the Hypertext Transfer Protocol (HTTP). If the user does not define a proxy address, the system falls back to the proxy settings provided by the operating system.

Here is what happens in practice. A user types a web address into the browser and presses Enter. The browser generates a web request, which is sent as a series of data packets over the Transmission Control Protocol (TCP). Instead of going directly to the web server, these packets first reach the HTTP proxy. The proxy then sends the request on to the target website from its own IP address, so the website sees the proxy’s address rather than the user’s.
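To make the hop through the proxy concrete, here is a minimal Python sketch of the request a browser sends in each case. Under HTTP/1.1, a request sent directly to a server names only the path (origin-form), while a request sent to an HTTP proxy carries the full URL (absolute-form) so the proxy knows where to forward it. The `build_request` helper is hypothetical, and it only builds the bytes; it does not open a connection.

```python
from urllib.parse import urlsplit


def build_request(url: str, via_proxy: bool) -> bytes:
    """Build a minimal HTTP/1.1 GET request for `url` (hypothetical helper).

    Direct requests use origin-form ("/path"); requests sent to an HTTP proxy
    use absolute-form (the full URL) so the proxy can find the target server.
    """
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    target = url if via_proxy else path
    lines = [
        f"GET {target} HTTP/1.1",
        f"Host: {parts.netloc}",
        "Connection: close",
        "",  # blank line ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


if __name__ == "__main__":
    print(build_request("http://example.com/index.html", via_proxy=False).decode())
    print(build_request("http://example.com/index.html", via_proxy=True).decode())
```

The only difference between the two requests is the request line; the `Host` header is the same in both.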

When Should I Use The HTTP Proxy?

The following are some of the reasons why HTTP proxies are used:

- Anonymize connections by removing identifying information

- Filter content according to your preferences

- Help maintain the security of your data
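As a rough illustration of the first two reasons, the sketch below shows how a proxy might strip identifying headers before forwarding a request and refuse requests to blocked hosts. The header list and the `BLOCKED_HOSTS` set are assumptions chosen for illustration, not a complete anonymization or filtering policy; real proxies are configured quite differently.

```python
# Hypothetical proxy-side helpers: anonymize outgoing headers, filter by host.
IDENTIFYING_HEADERS = {"x-forwarded-for", "forwarded", "via", "cookie", "referer"}
BLOCKED_HOSTS = {"ads.example.net", "tracker.example.org"}  # assumed blocklist


def anonymize(headers: dict[str, str]) -> dict[str, str]:
    """Return a copy of `headers` without headers that identify the client."""
    return {k: v for k, v in headers.items() if k.lower() not in IDENTIFYING_HEADERS}


def is_allowed(host: str) -> bool:
    """Allow the request unless the host or one of its parent domains is blocked."""
    labels = host.lower().rstrip(".").split(".")
    # e.g. "cdn.ads.example.net" -> {"cdn.ads.example.net", "ads.example.net", ...}
    candidates = {".".join(labels[i:]) for i in range(len(labels))}
    return candidates.isdisjoint(BLOCKED_HOSTS)
```

Checking parent domains means a blocked domain also covers its subdomains, which is a common (but not universal) filtering choice.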

HTTPS Proxies

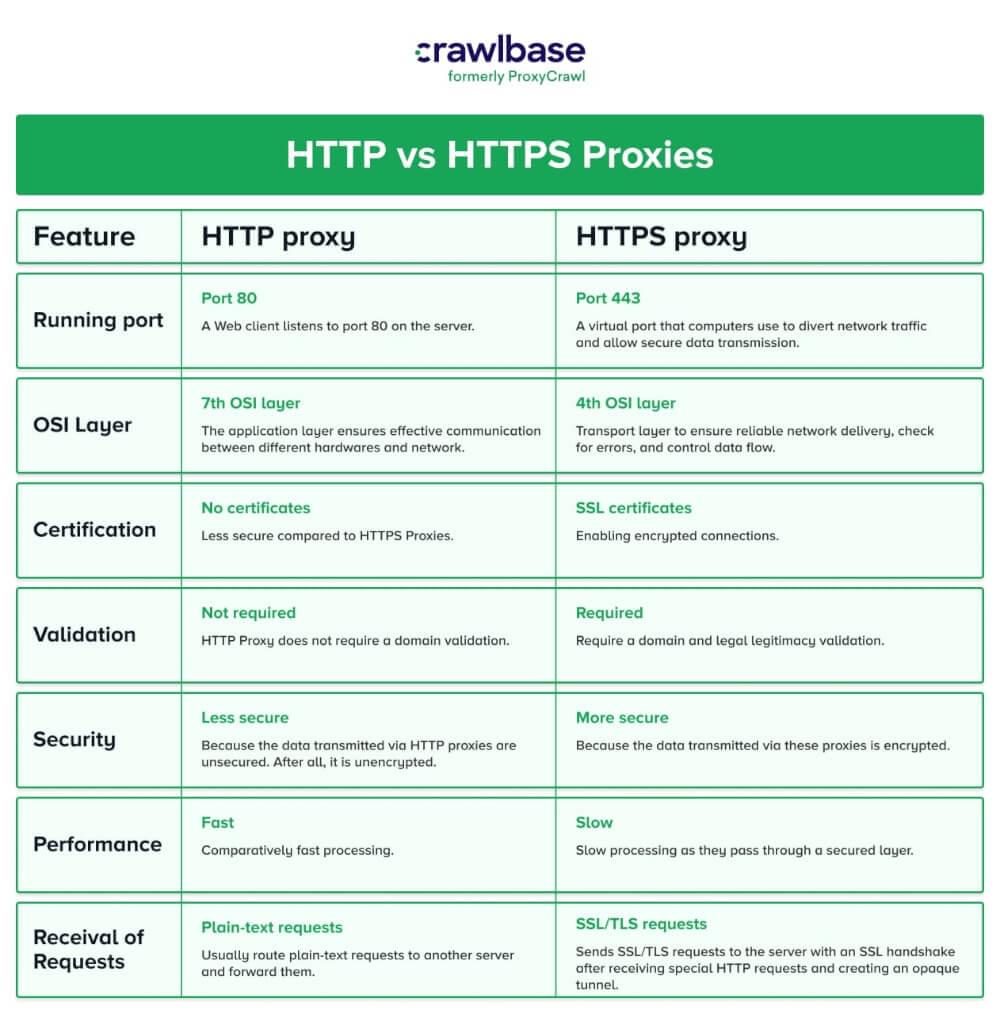

An HTTPS connection encrypts data using Transport Layer Security (TLS), the successor to the Secure Sockets Layer (SSL) protocol. This makes it safer than plain HTTP. As security requirements increase, more and more websites, especially banks and online payment sites, are switching from HTTP to HTTPS. HTTPS uses port 443 by default.

Proxy servers that handle HTTPS requests from clients are usually called HTTPS proxy servers. They work much like HTTP proxy servers; the main difference is which protocols they support. Both kinds can cache content downloaded from the Internet (an HTTPS proxy can do so only if it decrypts the traffic), which speeds up browsing and reduces network traffic.

Are HTTPS Proxies Secure?

HTTPS lets clients and servers communicate and conduct transactions securely. An HTTPS proxy can be used to protect a web server that sits behind a firewall appliance such as a WatchGuard Firebox, or to examine HTTPS traffic on your network. By default, HTTPS clients open TCP (Transmission Control Protocol) connections to port 443, the port most HTTPS servers use.

With HTTPS, a digital certificate validates the identity of the web server and authenticates the exchange of the shared key that encrypts the connection. The browser encrypts the requests users make, and the web server returns encrypted pages. Because the traffic is encrypted, the Firebox must decrypt it before it can examine it. After analyzing the content, the Firebox re-encrypts the traffic with a certificate and sends it on.
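On the client side, the certificate check described above is what a TLS library performs by default. The minimal sketch below uses Python’s standard `ssl` module: `ssl.create_default_context()` loads the system’s trusted certificate authorities and requires both a valid certificate chain and a matching hostname. The helper names `make_client_context` and `fetch_peer_subject` are hypothetical, and the network call needs Internet access.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """TLS context that verifies the server certificate and hostname."""
    ctx = ssl.create_default_context()  # loads trusted CA certificates
    # Both settings are already the defaults; set explicitly for clarity.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def fetch_peer_subject(host: str, port: int = 443) -> dict:
    """Connect to host:port over TLS and return the verified certificate's subject.

    Raises ssl.SSLCertVerificationError if the certificate is untrusted or
    does not match `host`.
    """
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=10) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            cert = tls.getpeercert()
            # "subject" is a tuple of RDNs, each a tuple of (key, value) pairs.
            return dict(rdn[0] for rdn in cert["subject"])


# Usage (needs network access): fetch_peer_subject("example.com")
```

An inspecting proxy such as the one described above works only if clients trust the proxy’s own certificate, which is why that certificate has to be distributed to users.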

To protect a web server that accepts requests from an external network, we recommend importing the web server’s current certificate so the HTTPS proxy can use it; alternatively, you can use the default certificate your Firebox creates. If you use the HTTPS proxy to examine web traffic from users on your network, export the Firebox’s default certificate and distribute it to all users so they don’t receive browser warnings about untrusted certificates.

If HTTPS clients or servers in your organization use ports other than 443, experts recommend creating a custom policy for each of those ports. The HTTPS proxy policy can serve as a template.

What Is An SSL Proxy?

Secure Sockets Layer (SSL) is a protocol used to encrypt Internet communications; its modern successor is Transport Layer Security (TLS), and the two names are often used interchangeably. SSL/TLS combines privacy, authentication, confidentiality, and data integrity. Its level of security comes from the use of certificates and public/private key pairs.

An SSL proxy server is a proxy that uses the Secure Sockets Layer (SSL) protocol. It encrypts and decrypts communications between a client and a server without either party being aware that a proxy is in use.

Because HTTPS is HTTP carried over SSL/TLS, an SSL proxy is also known as an HTTPS proxy. A transparent SSL proxy performs SSL encryption and decryption between the client and the server. When SSL forward proxy is enabled, administrators gain deeper insight into application usage. In a nutshell, an HTTPS proxy speaks the HTTP protocol and communicates over SSL.

What is the difference between HTTP and HTTPS?

The main difference between HTTPS (https://) and HTTP (http://) is that HTTPS transmits data securely: it uses the Transport Layer Security (TLS) protocol to encrypt data and ensure secure communication. HTTPS is the combination of HTTP, the protocol used for transmitting data on the web, and TLS. In short, HTTPS is HTTP running on top of the TLS protocol.
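One visible consequence of HTTPS being HTTP on top of TLS is that the two schemes use different default ports: 80 for `http://` and 443 for `https://`. The small sketch below (the `connection_plan` helper is hypothetical) shows how a client might decide which port to connect to and whether to start a TLS handshake.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def connection_plan(url: str) -> tuple[str, int, bool]:
    """Return (host, port, use_tls) for an http:// or https:// URL."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    if scheme not in DEFAULT_PORTS:
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    port = parts.port or DEFAULT_PORTS[scheme]  # an explicit port wins
    return parts.hostname, port, scheme == "https"
```

For example, `connection_plan("https://example.com:8443/")` keeps the explicit port 8443 but still requires TLS.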

Can I Use an HTTP Proxy for HTTPS?

It is possible to use an HTTP proxy for HTTPS traffic, but the proxy adds no security or encryption of its own. It simply relays the HTTPS traffic, which the client and server have already encrypted, between them.

Beyond creating a secure communication channel over an insecure one, communication engineers must weigh the benefits of HTTP vs HTTPS proxies, because an HTTPS proxy uses SSL/TLS to protect the traffic it carries.

The simple answer to the question “Do HTTP proxies work with HTTPS?” is yes: HTTPS requests can be sent through an HTTP proxy that supports it, or through a SOCKS proxy. The difficulty is that an ordinary HTTP proxy reads the requests it forwards, and letting it see HTTPS contents would defeat SSL/TLS’s goal of preventing eavesdropping and man-in-the-middle attacks. Consequently, a special technique is needed to proxy HTTPS through a simple HTTP proxy.

What is a Secure Way To Use An HTTP Proxy for HTTPS Requests?

An HTTP proxy can be turned into a TCP proxy with a special HTTP method called CONNECT. Not all HTTP proxies support it, but many do now. After a CONNECT request, the proxy opens a TCP connection to the target server and simply forwards bytes back and forth; it cannot read the HTTP content, because that content is encrypted by TLS between the client and the server. The proxy thus facilitates communication between client and server while the data stays secure. Because a SOCKS proxy works at a lower level, it can also proxy HTTPS without difficulty; SOCKS5 proxies can relay both TCP and UDP traffic.
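The CONNECT exchange is simple enough to sketch with Python’s standard library. The client asks the proxy to open a TCP connection to the target host and port; if the proxy replies with a 2xx status, the socket becomes a blind tunnel and the client runs its TLS handshake with the target server through it, so the proxy only relays encrypted bytes. The helper names and the proxy address in the usage comment are hypothetical placeholders. In production code, `http.client.HTTPSConnection.set_tunnel` performs the same exchange.

```python
import socket
import ssl


def build_connect_request(host: str, port: int = 443) -> bytes:
    """CONNECT request asking an HTTP proxy to open a TCP tunnel to host:port."""
    authority = f"{host}:{port}"
    return f"CONNECT {authority} HTTP/1.1\r\nHost: {authority}\r\n\r\n".encode("ascii")


def tunnel_established(response_head: bytes) -> bool:
    """True if the proxy's status line reports success (any 2xx code)."""
    status_line = response_head.split(b"\r\n", 1)[0]
    parts = status_line.split()
    return len(parts) >= 2 and parts[0].startswith(b"HTTP/") and parts[1][:1] == b"2"


def open_https_via_proxy(proxy: tuple[str, int], host: str, port: int = 443) -> ssl.SSLSocket:
    """Open a TLS connection to host:port through an HTTP proxy using CONNECT."""
    raw = socket.create_connection(proxy, timeout=10)
    raw.sendall(build_connect_request(host, port))
    head = b""
    while b"\r\n\r\n" not in head:  # read the proxy's response headers
        chunk = raw.recv(4096)
        if not chunk:
            raw.close()
            raise ConnectionError("proxy closed the connection")
        head += chunk
    if not tunnel_established(head):
        raw.close()
        raise ConnectionError(head.split(b"\r\n", 1)[0].decode(errors="replace"))
    # From here on the proxy just relays bytes; TLS runs end to end with `host`.
    ctx = ssl.create_default_context()
    return ctx.wrap_socket(raw, server_hostname=host)


# Usage (placeholder proxy address):
# tls = open_https_via_proxy(("proxy.example.internal", 3128), "example.com")
```

Note that certificate verification happens against `host`, not the proxy, which is what keeps the proxy out of the encrypted conversation.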

Pros and cons of using HTTP and HTTPS

HTTP is a widely-used protocol for transmitting data on the web. It has the advantage of being simple and efficient, but it does not provide any built-in security features to protect data from being intercepted or tampered with during transmission.

HTTPS (HTTP Secure) is an extension of HTTP that uses the SSL/TLS protocol to encrypt data and provide secure communication. It has the advantage of protecting sensitive information such as login credentials and credit card details, but it can slow down page loading because of the additional SSL/TLS handshake and encryption.

This comparative analysis of HTTP vs HTTPS proxies is set against a decade of growing demand for ways around censorship and for anonymity, in which the two key players, users and free proxies, have been hard to study. ProxyTorrent, a system that monitors several thousand free proxies on a daily basis, helped change that: a study based on 10 months of data covering 180,000 free proxies examined how these proxies are used in the wild, with users sharing anonymous statistics in exchange for access to free, safe proxies. The pros and cons of using HTTP and HTTPS are as follows:

1. Analyzing HTTP – Pros of HTTP

a. Scheme of IP Addressing

HTTP uses a user-friendly addressing scheme. Resources are identified by URLs containing recognizable hostnames, and the Domain Name System (DNS) maps those names to IP addresses. This lets the public engage with the Internet through memorable names instead of numeric IP addresses.
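The step of turning a recognizable name into an IP address is ordinary DNS resolution, which any HTTP client performs before it connects. A minimal sketch with Python’s standard library follows; the `resolve` helper is hypothetical, and resolving a real hostname requires network access.

```python
import socket
from urllib.parse import urlsplit


def resolve(url: str) -> list[str]:
    """Return the unique IP addresses that the URL's hostname resolves to."""
    parts = urlsplit(url)
    port = parts.port or (443 if parts.scheme == "https" else 80)
    infos = socket.getaddrinfo(parts.hostname, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    addresses: list[str] = []
    for *_, sockaddr in infos:
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses


# Example: resolve("https://example.com/") returns that site's current IPv4/IPv6 addresses.
```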

b. Adaptability to change

HTTP is flexible: whenever an application needs additional functionality, it can download extensions or plugins over HTTP and display the relevant data.

c. Protecting your data

In the original HTTP model (HTTP/1.0), each file is downloaded over its own connection, which is closed once that file has been transferred. HTTP is also “stateless”: the server treats every request independently and keeps no memory of earlier ones. Both properties are part of why HTTP is considered simple and efficient. Because each such connection carries only a single element of the web page, exposure during any one transmission is limited.

However, requesting and transferring each file separately slows page loading. HTTP/1.1 reduces this cost with persistent (keep-alive) connections, and browser caching, gzip compression, and the HTTP/2 protocol help further.

HTTPS adds TLS (Transport Layer Security), which encrypts the data in transit. Because setting up a TLS session is relatively expensive, browsers keep the encrypted connection open and reuse it for many requests, and major browsers support HTTP/2 only over TLS. In practice, this lets HTTPS sites transfer multiple files and resources efficiently over a single connection while keeping the data encrypted.

Overall, while plain HTTP may have the advantage of limiting what is exposed on any single connection, HTTPS provides an added layer of security through encryption and, combined with HTTP/2, can also make web pages load faster.
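The difference between opening a connection per file and keeping one connection open can be observed with Python’s standard library. The sketch below starts a throwaway local HTTP/1.1 server (an assumption made so the example runs offline), sends two requests over one `http.client.HTTPConnection`, and records the client port the server saw for each request. Identical ports mean both requests reused the same TCP connection. The helper names are hypothetical.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

seen_client_ports: list[int] = []


class KeepAliveHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # enables persistent connections

    def do_GET(self):
        seen_client_ports.append(self.client_address[1])
        body = b"ok"
        self.send_response(200)
        # Content-Length lets the client know where the body ends and reuse the socket.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # keep the example quiet
        pass


def two_requests_one_connection() -> list[int]:
    """Send two GETs over a single HTTPConnection; return the client ports seen."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), KeepAliveHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
        for path in ("/a.css", "/b.js"):
            conn.request("GET", path)
            response = conn.getresponse()
            response.read()  # drain the body before reusing the connection
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return list(seen_client_ports)
```

Setting `protocol_version = "HTTP/1.0"` on the handler makes the server close the connection after each response, which is the per-file model described above.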

d. Negligible latency

With plain HTTP, the only handshake is the TCP handshake that establishes the connection; there is no additional TLS handshake before a request can be sent. As a result, connection latency is significantly lower.
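A back-of-the-envelope model makes the latency difference concrete. Assuming a fresh connection, no DNS lookup, and no session resumption, the TCP handshake costs one round trip (RTT) before the client can send data, a full TLS 1.2 handshake adds two more, and a TLS 1.3 handshake adds one; the request and response then take one further round trip. The function below is a hypothetical helper that deliberately ignores bandwidth, server processing time, TCP Fast Open, and TLS 0-RTT.

```python
# Round trips needed before application data can flow (full handshakes only).
HANDSHAKE_RTTS = {"http": 1, "https-tls1.2": 1 + 2, "https-tls1.3": 1 + 1}


def time_to_first_byte_ms(rtt_ms: float, mode: str) -> float:
    """Estimate time until the first response byte arrives on a new connection.

    Simplified model: handshake round trips + 1 round trip for request/response.
    """
    return (HANDSHAKE_RTTS[mode] + 1) * rtt_ms


for mode in HANDSHAKE_RTTS:
    print(mode, time_to_first_byte_ms(50, mode))
```

With a 50 ms round trip, the model gives 100 ms for plain HTTP, 200 ms for HTTPS with TLS 1.2, and 150 ms with TLS 1.3. Once a connection is open and reused, this setup cost is paid only once.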

e. Achieving accessibility

When an HTTP page is loaded for the first time, it is stored in the browser’s cache, so the content loads faster on subsequent visits.
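Whether a cached copy can be reused without contacting the server is governed by response headers such as `Cache-Control: max-age`. A simplified freshness check is sketched below; it ignores `Expires`, heuristic freshness, and the `Age` header, and the helper names are hypothetical.

```python
import re
from typing import Optional


def max_age_seconds(cache_control: str) -> Optional[int]:
    """Extract max-age from a Cache-Control header.

    Returns None if max-age is absent, or if no-store/no-cache forbids
    reusing the response without revalidating it first.
    """
    directives = [d.strip().lower() for d in cache_control.split(",")]
    if "no-store" in directives or "no-cache" in directives:
        return None
    for d in directives:
        m = re.fullmatch(r"max-age=(\d+)", d)
        if m:
            return int(m.group(1))
    return None


def is_fresh(stored_at: float, now: float, cache_control: str) -> bool:
    """True if a response stored at `stored_at` (seconds) can still be reused at `now`."""
    max_age = max_age_seconds(cache_control)
    return max_age is not None and (now - stored_at) < max_age
```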

2. Analyzing HTTP – Cons of HTTP

a. Data integrity

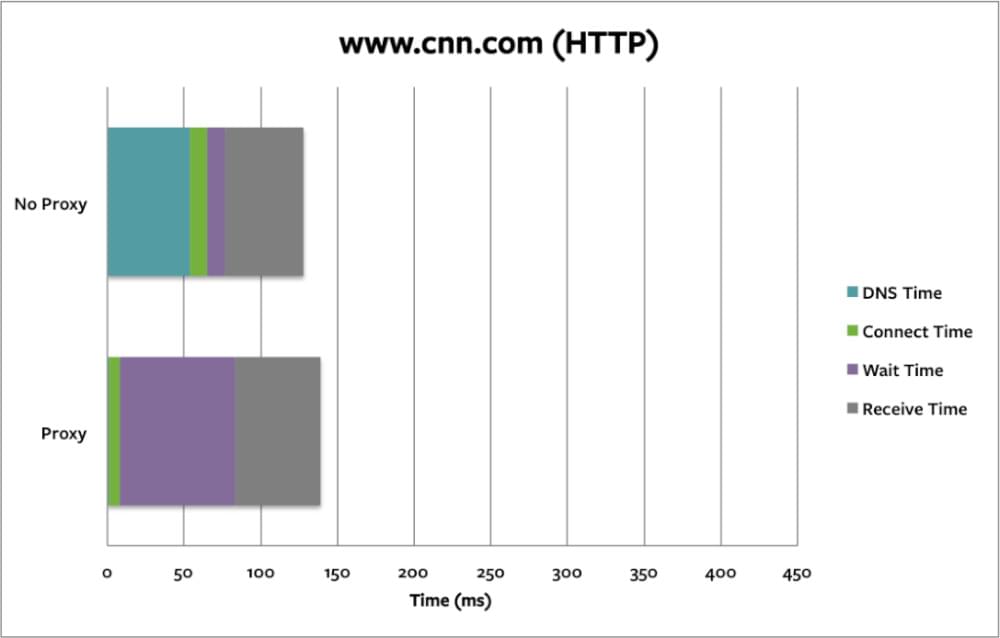

Because HTTP does not use encryption, anyone positioned between the client and the server can alter the content, which puts the integrity of HTTP data at risk. The figure above compares loading the CNN website with and without an HTTP proxy; with the proxy, wait time increases significantly while DNS time decreases.
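HTTPS closes this gap by authenticating the data it sends, so tampering is detected. TLS does this with its own mechanisms (typically AEAD ciphers such as AES-GCM); the sketch below uses an HMAC from Python’s standard library as a simpler stand-in to show the idea. The shared key is a placeholder; in TLS, keys are negotiated during the handshake.

```python
import hashlib
import hmac

KEY = b"placeholder-shared-key"  # assumption: in TLS, keys come from the handshake


def seal(message: bytes) -> tuple[bytes, bytes]:
    """Return the message together with an authentication tag computed over it."""
    tag = hmac.new(KEY, message, hashlib.sha256).digest()
    return message, tag


def verify(message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(KEY, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


body, tag = seal(b"<p>Transfer $10 to Alice</p>")
print(verify(body, tag))                                   # True: untouched message
print(verify(b"<p>Transfer $10,000 to Mallory</p>", tag))  # False: altered in transit
```

Without the key, an attacker who alters the message cannot produce a matching tag, so the receiver rejects the change.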

b. Protecting the privacy of your personal information

Privacy is another problem with HTTP. Attackers who intercept a request can view all the content on the web page and can also gather confidential information such as usernames and passwords.
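To see how little protection plain HTTP offers, consider HTTP Basic authentication: the browser sends the username and password in an `Authorization` header that is only Base64-encoded, not encrypted. Anyone who captures the request can reverse it, as this sketch with made-up credentials shows. The helper names are hypothetical.

```python
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Build the Authorization header value a browser sends for Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def recover_credentials(header_value: str) -> tuple[str, str]:
    """What an eavesdropper can do with a captured header: simply decode it."""
    scheme, _, token = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic auth header")
    user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return user, password


captured = basic_auth_header("alice", "s3cret")
print(captured)                       # Basic YWxpY2U6czNjcmV0
print(recover_credentials(captured))  # ('alice', 's3cret')
```

Over HTTPS the same header travels inside the encrypted TLS connection, so an eavesdropper on the network never sees it.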

c. Availability of servers

Clients may keep a connection open even after they have received all the data they need. While the connection stays open, it ties up server resources, which can make the server less available to other clients during that period.

d. Administrative overhead

Transmitting a web page over HTTP can require multiple connections, and setting up and managing each one burdens the link with administrative overhead.

e. Support for Internet of Things (IoT) devices

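Compared with lightweight protocols designed for constrained devices, HTTP uses more system resources, which means higher power consumption. Many of today’s IoT devices are part of wireless sensor networks, where those costs can make HTTP unsuitable.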

3. Analyzing HTTPS – Pros of HTTPS

a. The encryption procedure

HTTPS is known for encrypting data: everything it transports is encrypted, which keeps the information highly secure. Even if attackers intercept the traffic, they cannot read or misuse it without the encryption keys.

b. Safeguarding User Data

A comparison of HTTP vs HTTPS proxies suggests that HTTP tends to leave data stored on the client system, whereas HTTPS is designed to keep less data there. In public spaces, this makes the information less vulnerable to theft.

c. Assuring correctness

The certificate must match the website it is presented for; if it does not, or the connection is otherwise not secure, the browser notifies the user. In this way, HTTPS helps ensure that users’ data is sent securely to the correct site and not to an invalid one, which builds trust with potential clients.
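The browser’s check that a certificate matches the site is essentially a comparison between the hostname and the names listed in the certificate. The sketch below is a simplified version of that rule: an exact match, or a single `*` wildcard covering exactly one leftmost label, compared case-insensitively. Real TLS libraries apply additional restrictions, so treat the hypothetical `cert_name_matches` as an illustration, not a replacement for the verification the `ssl` module performs.

```python
def cert_name_matches(hostname: str, cert_name: str) -> bool:
    """Simplified certificate name check (exact or leftmost-label wildcard)."""
    host_labels = hostname.lower().rstrip(".").split(".")
    name_labels = cert_name.lower().rstrip(".").split(".")
    if len(host_labels) != len(name_labels):
        return False  # a wildcard never spans more than one label
    first, *rest = name_labels
    if first == "*":
        # Wildcard must cover a non-empty label and sit above at least two labels.
        return len(rest) >= 2 and bool(host_labels[0]) and host_labels[1:] == rest
    return host_labels == name_labels
```

So a certificate for `*.example.com` covers `www.example.com` but not `example.com` itself or `a.b.example.com`.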

d. Incorporating data validation into your workflow

HTTPS uses handshakes to validate data. The TLS handshake authenticates the parties involved (at minimum the server, and optionally the client), and every piece of data transferred afterwards is checked for integrity. Validation succeeds only if the data arrives unaltered; otherwise, the connection is aborted.

e. Efficacy and reliability

When data reliability is the criterion in comparing HTTP vs HTTPS proxies, users generally prefer HTTPS. The padlock icon shown in the browser’s address bar for HTTPS pages gives visitors confidence that the site is secure.

f. HTTPS is Ideal for SEO

Google uses HTTPS as one of its ranking signals, although a lightweight one, so all else being equal, an HTTPS site may rank slightly higher than an equivalent HTTP site.

4. Analyzing HTTPS – Cons of HTTPS

a. HTTPS is a bit costly

Switching to HTTPS requires an SSL certificate, and many hosting providers and certificate authorities charge an annual fee to issue and renew one. Free SSL certificates are also available, for example from Let’s Encrypt, although they offer only domain validation and must be renewed more often, typically with automated tooling.

b. Performance

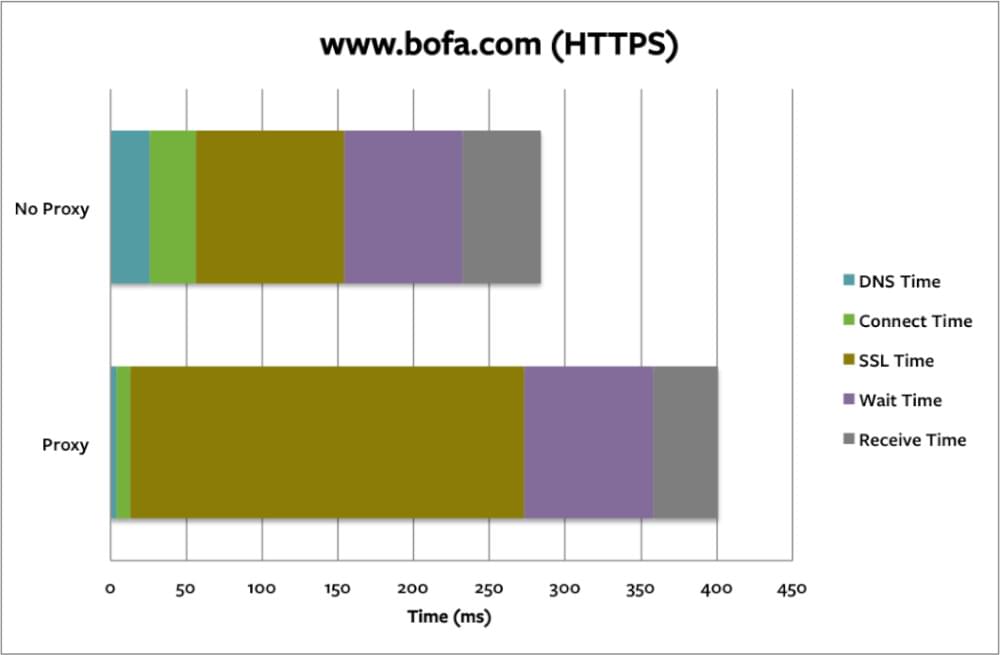

Encrypting and decrypting data with HTTPS requires extra computation, and the TLS handshake adds round trips before the first request can be sent. Consequently, response times can be longer, making a website slower. For the Bank of America website, ThousandEyes measurements (shown above) reveal a greatly increased SSL time.

c. Keeping data in the cache

HTTPS can cause caching problems for some content. Because the content is encrypted, ISPs and other shared intermediaries cannot cache it, and public caching of such content is unlikely to return in the future.

d. Achieving accessibility

A firewall or proxy system may block access to HTTPS sites, either intentionally or unintentionally; for example, administrators may have forgotten to allow HTTPS traffic.

Do HTTP Proxies Work With HTTPS?

Yes. You can request both HTTP and HTTPS resources through a proxy, thanks to modern proxy servers that keep you secure while providing a stable connection.

When Should I Use an HTTP Proxy?

HTTP proxies have many use cases. One of the main ones is hiding the user’s real IP address: requests go out through the proxy, so websites see the proxy’s IP address instead of the user’s. A proxy can also filter HTTP or HTTPS content, which helps prevent malware, spyware, and ransomware attacks on both the server and user sides. Finally, it promotes security by examining the headers of the content’s source, so that, together with a firewall, suspicious content can be kept from reaching the user.

- To hide your real IP address

- To help prevent malware, spyware, and ransomware attacks

- To add a layer of security

- To examine the headers of the content’s source so that suspicious content does not reach the user through the firewall
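The last point, examining headers before content reaches the user, can be sketched as a simple check on response headers. The blocked content types and file extensions below are an assumed, deliberately short policy for illustration; real filtering proxies combine header checks with content scanning and reputation data.

```python
# Hypothetical policy: block executable downloads by Content-Type or filename.
BLOCKED_TYPES = {"application/x-msdownload", "application/x-dosexec"}
BLOCKED_EXTENSIONS = (".exe", ".scr", ".bat")


def is_suspicious(headers: dict[str, str]) -> bool:
    """Return True if response headers suggest content the proxy should block."""
    lowered = {k.lower(): v for k, v in headers.items()}
    # Compare the media type only, ignoring parameters such as "; charset=utf-8".
    content_type = lowered.get("content-type", "").split(";")[0].strip().lower()
    if content_type in BLOCKED_TYPES:
        return True
    disposition = lowered.get("content-disposition", "").lower()
    # e.g. 'attachment; filename="invoice.exe"'
    filename = disposition.partition("filename=")[2].strip().strip('"')
    return filename.endswith(BLOCKED_EXTENSIONS)
```

Headers are easy for a malicious server to falsify, which is why a check like this is only a first line of defense.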

Summing Up

Crawlbase Smart Proxy provides intelligent web proxies combined with additional features such as rotating residential proxies. The pros and cons discussed in this article cover almost every aspect of HTTP vs. HTTPS proxies and offer insight into selecting an intelligent proxy and proxy provider for your desired result. When choosing a proxy server, users should consider both what they will use it for and the outcome they expect from it.