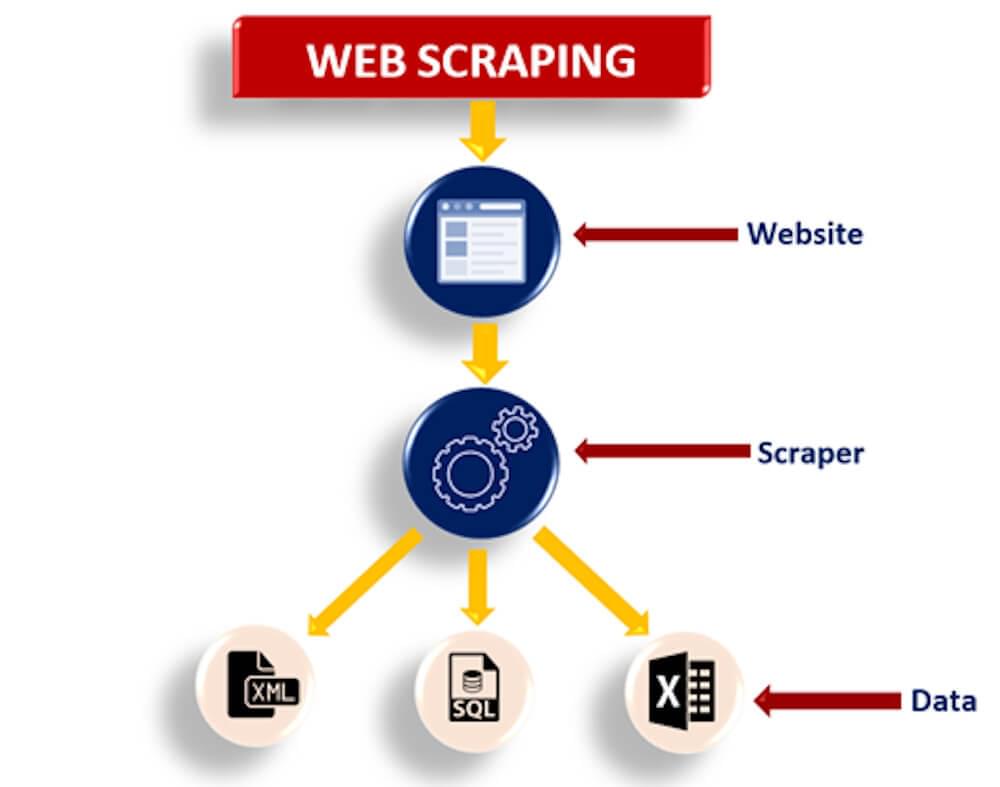

Web scraping is a technique used to extract large amounts of content from web pages so that the information can be saved to local storage, a database, or a tabular spreadsheet. Other terms used for web scraping include Screen Scraping, Web Data Extraction, and Web Harvesting. Rather than copying information from websites by hand, web scraping automates the process.

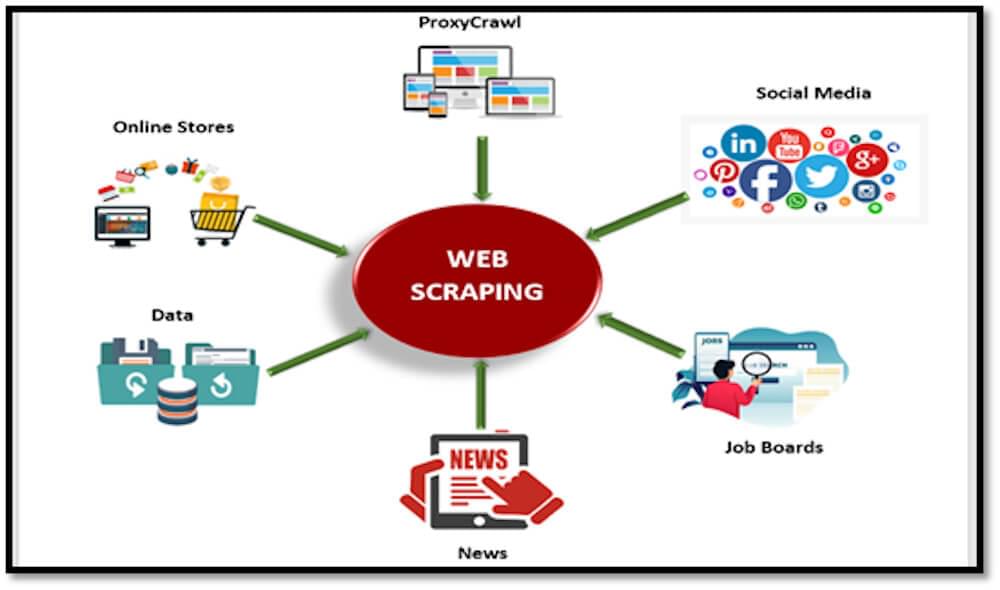

Web scraping is primarily used to gather large volumes of data from websites. But why would anyone need to extract so much information from websites? To answer that, let us look at some of its uses:

- Web scraping is used to gather information from online shopping sites and use it to compare product prices.

- Many organizations use email as a way of promoting their products. Web scraping is often used to collect email addresses and then send bulk emails.

- It is used to gather information from social media sites like Twitter to discover what's trending.

- Web scraping is also an efficient way to gather enormous amounts of data (statistics, general information, temperatures, and so forth) from websites, which is then analyzed for surveys, website feedback, or R&D.

Approaches used for Data Scraping

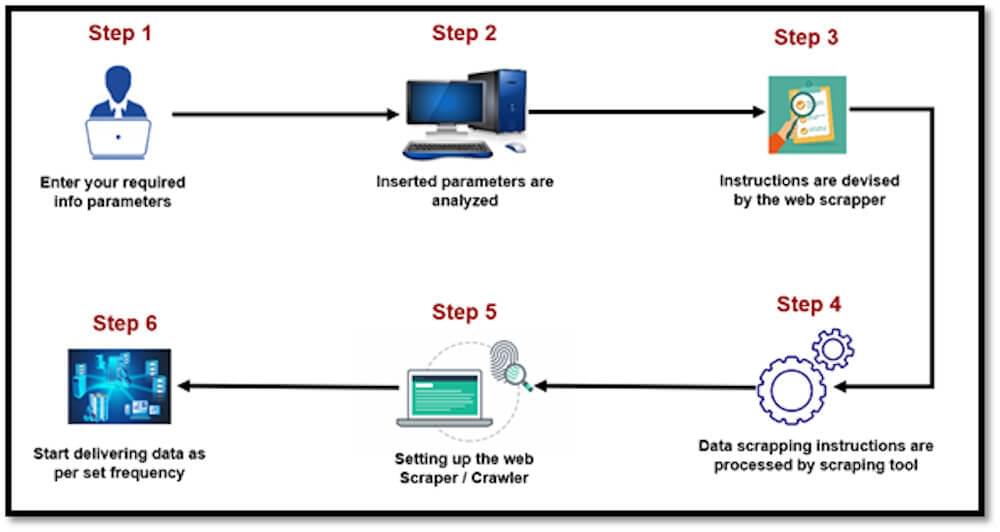

The Internet is an enormous store of the world's data, whether it is text, media, or information in some other format. Every page displays information in one form or another. Access to this information is essential to the success of most businesses in the modern world. Unfortunately, most of this information is not openly available for download: most websites do not give you the option to save the information they display to your local storage or to your own site. Web scraping can be done using either of the following two methods:

- Scraping web data by ready-made web scraping tools

- Web scraping via programming languages

Both methods are useful, depending on the situation in which they are applied. We will go through each of them in this blog to give a clear understanding of which approach to adopt in which scenario.

Scraping Data via Web Scraping Tools

Web scraping tools are developed specifically for extracting data from websites. They are also called web harvesting tools or web data extraction tools. These tools are useful for anyone trying to collect some form of data from the Internet. Web scraping is a modern data entry method that does not require tedious typing or copying and pasting.

1. Octoparse

Octoparse is a web scraping tool that is easy to use for both coders and non-coders, and it is popular for eCommerce data scraping. It can scrape web data at a large scale (up to millions of records) and store it in structured files such as Excel, CSV, or JSON for download. It offers a free plan as well as a trial for paid subscriptions.

2. Scraping-Bot

Scraping-Bot.io is an efficient tool for scraping data from a URL. It provides APIs adapted to your scraping needs: a generic API to retrieve the raw HTML of a page, an API specialized in scraping retail websites, and an API to scrape property listings from real estate websites.

3. xtract.io

xtract.io is flexible data extraction software that can be customized to scrape and structure web data, social media posts, PDFs, text documents, historical data, and even emails into a consumable, business-ready format.

4. Agenty

Agenty is Robotic Process Automation software for data scraping, text extraction, and OCR. It lets you create an agent with just a few mouse clicks. The application helps you reuse all your processed data for your analysis.

5. Import.io

This web scraping tool helps you build datasets by importing data from a specific web page and exporting it to CSV. It is one of the best data scraping tools, and it lets you integrate data into applications using APIs and webhooks.

6. Webhose.io

Webhose.io provides direct access to structured, real-time data gathered by crawling a large number of websites. It also gives you access to historical feeds covering more than ten years of data.

7. Dexi Intelligent

Dexi Intelligent is a web scraping tool that lets you turn unlimited web data into immediate business value. It helps you reduce costs and saves valuable time for your organization.

8. ParseHub

ParseHub is a free web scraping tool. This advanced web scraper makes extracting data as easy as clicking on the data you need. It is one of the best data scraping tools, and it lets you download your scraped data in any format for analysis.

9. Data Streamer

This tool helps you fetch social media content from across the web. It is one of the best web scrapers, letting you extract critical metadata using natural language processing.

10. FMiner

FMiner is another popular tool for web scraping, data extraction, web crawling, screen scraping, and web macro support on Windows and Mac OS.

11. Content Grabber

This is a powerful big data solution for reliable web data extraction. It is one of the best web scraping tools for scaling your organization's data collection, and it offers easy-to-use features such as a visual point-and-click editor.

12. Mozenda

Mozenda lets you extract text, images, and PDF content from web pages. It is one of the best web scrapers for organizing and preparing data files for publishing.

Scraping Web Data via Programming Languages

Gathering data from websites through an automated process is known as web scraping. Some websites explicitly prohibit users from scraping their data with automated tools. However, there are several ways to overcome such barriers and build your own web scraper from the ground up. Here is a rundown of techniques:

1. Crawlbase

The Crawlbase API is one of the most popular web scraping APIs, helping developers and organizations scrape websites safely. It returns the HTML needed to scrape JavaScript-built web pages, manages automated browsers, avoids human verification tests such as CAPTCHAs, and also handles proxy management.
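To make the API idea concrete, here is a minimal Python sketch that routes a page request through Crawlbase using only the standard library. It assumes the Crawling API is called as `https://api.crawlbase.com/?token=...&url=...`; treat that endpoint and its parameter names as assumptions to confirm against Crawlbase's documentation. `YOUR_TOKEN` and the target URL are placeholders.

```python
import urllib.parse
import urllib.request

# Assumption: base URL of the Crawlbase Crawling API, taking `token` and `url`
# query parameters. Verify against the official documentation before use.
CRAWLBASE_ENDPOINT = "https://api.crawlbase.com/"


def build_crawlbase_url(token: str, target_url: str) -> str:
    """Return the API URL that asks Crawlbase to fetch `target_url` for us."""
    # urlencode percent-encodes the target URL, so its own '?' and '&'
    # do not get confused with the API's query parameters.
    query = urllib.parse.urlencode({"token": token, "url": target_url})
    return f"{CRAWLBASE_ENDPOINT}?{query}"


def fetch_via_crawlbase(token: str, target_url: str, timeout: float = 60.0) -> str:
    """Download the page HTML through the API (makes a real network request)."""
    with urllib.request.urlopen(build_crawlbase_url(token, target_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    # Placeholder token and URL; only builds the request URL, no network call.
    print(build_crawlbase_url("YOUR_TOKEN", "https://www.example.com/item?id=1"))
```

Encoding the target URL is the important design point: without it, any query string on the target page would be parsed as extra API parameters.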

2. Manual

This is how most ordinary users get information from the Internet. You like an article, so you copy and paste it into a Word document on your desktop. This is manual, and therefore slow and inefficient. It also only works for small chunks of data that contain basic text. If you want to save images and other types of data, it may not work well.

3. Regular Expressions

In this approach, you define a pattern, known as a regular expression, that you want to match in a text string, and then search the string for matches. Regular expressions are used heavily in search engines, and they come into play whenever you are parsing string data. They are a fundamental tool and can handle basic extraction requirements.
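As a small illustration, the sketch below uses Python's `re` module to pull product links, names, and prices out of an HTML string. The markup and class names are hypothetical, and the pattern is tied to that exact layout: regex extraction is quick for simple, stable pages but breaks easily when the HTML changes.

```python
import re

# Hypothetical HTML snippet standing in for a downloaded product listing.
SAMPLE_HTML = """
<ul>
  <li class="item"><a href="/p/1">Blue mug</a> <span class="price">$12.50</span></li>
  <li class="item"><a href="/p/2">Red mug</a> <span class="price">$9.99</span></li>
</ul>
"""

# Named groups capture the link target, link text, and numeric price.
# The non-greedy .*? stops at the first closing </a> tag.
ITEM_PATTERN = re.compile(
    r'<a href="(?P<href>[^"]+)">(?P<name>.*?)</a>\s*'
    r'<span class="price">\$(?P<price>\d+(?:\.\d+)?)</span>'
)


def extract_items(html: str) -> list[dict]:
    """Return one dict per match, with href, name, and price as a float."""
    return [
        {"href": m["href"], "name": m["name"], "price": float(m["price"])}
        for m in ITEM_PATTERN.finditer(html)
    ]


if __name__ == "__main__":
    for item in extract_items(SAMPLE_HTML):
        print(item)
```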

4. DOM Parsing

With the help of web browsers, programs can access the dynamic content that client-side scripts have generated. A tree-structured representation of a parsed page, known as the Document Object Model (DOM), gives access to individual parts of the page while scraping data. For example, an HTML or XML document is converted into a DOM, which describes the document's structure and how each part of it can be accessed. PHP provides a DOM extension for this purpose.
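The same idea can be sketched in Python with the standard library's `xml.dom.minidom`, which implements the W3C DOM interfaces (`getElementsByTagName`, `getAttribute`, `childNodes`). One caveat: minidom is a strict XML parser, so this minimal example assumes well-formed XHTML; typical real-world HTML usually needs an HTML-aware parser instead. The markup below is hypothetical.

```python
from xml.dom import minidom

# Hypothetical, well-formed XHTML fragment. minidom rejects malformed HTML
# (unclosed tags, HTML-only entities such as &nbsp;), so this is XML-only.
XHTML = """<html><body>
  <div class="product"><h2>Blue mug</h2><span class="price">12.50</span></div>
  <div class="product"><h2>Red mug</h2><span class="price">9.99</span></div>
</body></html>"""


def text_of(node) -> str:
    """Concatenate the text of all descendant text nodes."""
    return "".join(
        child.data if child.nodeType == child.TEXT_NODE else text_of(child)
        for child in node.childNodes
    )


def products_from_dom(markup: str) -> list[tuple[str, str]]:
    """Parse markup into a DOM tree and walk it to collect (name, price) pairs."""
    document = minidom.parseString(markup)
    results = []
    for div in document.getElementsByTagName("div"):
        # getAttribute returns "" when the attribute is missing.
        if div.getAttribute("class") != "product":
            continue
        name = text_of(div.getElementsByTagName("h2")[0])
        price = text_of(div.getElementsByTagName("span")[0])
        results.append((name, price))
    return results


if __name__ == "__main__":
    print(products_from_dom(XHTML))
```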

Useful Programming Languages to Scrape Website Data

1. Web Scraping with Python

Imagine that you need to pull a large amount of data from websites, and you have to do it as quickly as possible. In this scenario, web scraping is the answer: it makes this work simple and fast. In Python, libraries such as Beautiful Soup, as well as frameworks like Scrapy, are available to help you achieve your goals.
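Beautiful Soup and Scrapy are third-party packages; to keep this example dependency-free, here is a minimal sketch of the same fetch-and-parse workflow using only the standard library (`urllib.request` and `html.parser`). It collects every link on a page and resolves relative URLs. The `User-Agent` value is an arbitrary placeholder, and Beautiful Soup would let you express the parsing step more concisely.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collect absolute URLs from every <a href="..."> tag seen while parsing."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/about" against the page URL.
                self.links.append(urljoin(self.base_url, href))


def extract_links(html: str, base_url: str) -> list[str]:
    """Parse an HTML string and return the links it contains, in order."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def fetch_html(url: str, timeout: float = 30.0) -> str:
    """Download a page (real network request); the User-Agent is a placeholder."""
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


if __name__ == "__main__":
    sample = '<p><a href="/about">About</a> <a href="https://example.org/">Ext</a></p>'
    print(extract_links(sample, "https://example.com/"))
```

For a live page, you would combine the two helpers, for example `extract_links(fetch_html(url), url)`.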

2. Web Scraping with JavaScript/Node.js

JavaScript has become one of the most popular and widely used languages, thanks to the huge improvements it has seen and the introduction of the Node.js runtime. Whether you are building a web or a mobile application, JavaScript now has the right tools. It supports multiple APIs and offers scraping libraries that help with web data scraping.

These are just two examples of programming languages that are widely used for web scraping. To better understand how you can build a scraper from scratch, we have prepared a short guide below.

Prerequisites for Web Scraping with the Scrapy Crawlbase Middleware

- eBay Product Page URL

- Required libraries and API integrations in Python

- Crawlbase API token

Use Scrapy and Crawlbase to Scrape Data from an eBay Product Page

We will write the code for our main spider in a ‘main.py’ file in the ‘/root/spiders’ folder, starting by importing the relevant modules.

```python
from scrapy_crawlbase import CrawlbaseRequest
```

Next, we have an optional ‘items.py’ file in the root folder (‘/root/items.py’), where we define the models for our scraped items.

```python
# See documentation in:
```
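The original items listing survives only as its first comment line. As a hedged illustration of what an item model could look like, here is a sketch using a standard-library dataclass, which Scrapy accepts as an item type alongside `scrapy.Item` subclasses. The class name and fields (`url`, `title`, `price`, `image_urls`) are hypothetical, not taken from the original project.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EbayProductItem:
    """Hypothetical item model for one scraped product page.

    Scrapy supports dataclass instances as items, so this needs no
    scrapy.Item subclass. All field names here are illustrative.
    """

    url: str
    title: Optional[str] = None
    price: Optional[str] = None  # raw price text, to be cleaned later
    image_urls: list[str] = field(default_factory=list)


if __name__ == "__main__":
    print(EbayProductItem(url="https://www.example.com/itm/1", title="Blue mug"))
```

Using `default_factory=list` gives each item its own list, avoiding the shared-mutable-default pitfall.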

Next, we configure the Scrapy middleware in the ‘middlewares.py’ file in the root folder.

```python
# See documentation in:
```
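The middleware listing is also truncated. To show the hook that a downloader middleware implements, here is a minimal sketch: Scrapy calls `process_request(request, spider)` for each outgoing request, and returning `None` lets processing continue. The class name and the `EXTRA_DEFAULT_HEADERS` setting are hypothetical; for plain default headers, Scrapy's built-in `DEFAULT_REQUEST_HEADERS` setting already covers this, so the example only illustrates the mechanism.

```python
class ExtraHeadersMiddleware:
    """Hypothetical downloader middleware that adds default request headers."""

    def __init__(self, headers=None):
        self.headers = headers or {"Accept-Language": "en-US,en;q=0.9"}

    @classmethod
    def from_crawler(cls, crawler):
        # Read a hypothetical dict setting; an empty dict falls back to defaults.
        return cls(crawler.settings.getdict("EXTRA_DEFAULT_HEADERS") or None)

    def process_request(self, request, spider):
        for name, value in self.headers.items():
            # setdefault keeps any header the spider already set explicitly.
            request.headers.setdefault(name, value)
        # Returning None tells Scrapy to keep processing this request.
        return None
```

To activate it, the class would be listed in `DOWNLOADER_MIDDLEWARES` in ‘settings.py’ with a priority number.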

We then configure the item pipeline in the ‘pipelines.py’ file in the root folder.

```python
# Define your item pipelines here
```
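Only the first comment of the pipeline file survives. As a hedged sketch of what a pipeline does, the class below turns raw price text (for example `"US $1,234.50"`) into a `Decimal`. Scrapy calls `process_item(item, spider)` for every scraped item and passes the returned item to the next pipeline. The class name is hypothetical, and the sketch assumes the spider yields plain dicts with a `"price"` key.

```python
import re
from decimal import Decimal, InvalidOperation


class PriceCleanupPipeline:
    """Hypothetical pipeline that normalizes a raw price string to a Decimal.

    Assumes items are dicts; non-parsable prices become None.
    """

    # Digits with optional thousands separators and an optional decimal part.
    PRICE_RE = re.compile(r"[\d,]+(?:\.\d+)?")

    def process_item(self, item, spider):
        raw = item.get("price")
        if raw:
            match = self.PRICE_RE.search(raw)
            try:
                item["price"] = Decimal(match.group().replace(",", "")) if match else None
            except InvalidOperation:
                # e.g. the regex matched only a stray comma.
                item["price"] = None
        return item
```

A pipeline is enabled by adding its import path to the `ITEM_PIPELINES` dict in ‘settings.py’, for example `{"EbayScraper.pipelines.PriceCleanupPipeline": 300}`, where the module path is an assumption about the project layout.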

Finally, we add the scraper’s settings to the ‘settings.py’ file in the root folder, passing in the Crawlbase token and configuring the Scrapy spider.

```python
# Scrapy settings for EbayScraper project
```
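The settings listing is truncated as well. As a hedged sketch only, a ‘settings.py’ excerpt that enables the Crawlbase middleware might look like this. The setting names `CRAWLBASE_ENABLED` and `CRAWLBASE_TOKEN`, the middleware path `scrapy_crawlbase.CrawlbaseMiddleware`, and the priority `610` are assumptions to verify against the middleware's README. Reading the token from an environment variable (a hypothetical choice here) keeps it out of source control.

```python
# Hypothetical excerpt of settings.py for the EbayScraper project.
import os

BOT_NAME = "EbayScraper"

# Assumed setting names for the scrapy-crawlbase middleware; verify in its README.
CRAWLBASE_ENABLED = True
CRAWLBASE_TOKEN = os.environ.get("CRAWLBASE_TOKEN", "")

# Register the (assumed) Crawlbase downloader middleware; lower numbers run
# closer to the engine, higher numbers closer to the downloader.
DOWNLOADER_MIDDLEWARES = {
    "scrapy_crawlbase.CrawlbaseMiddleware": 610,
}

# Standard Scrapy setting: respect robots.txt rules.
ROBOTSTXT_OBEY = True
```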

Output

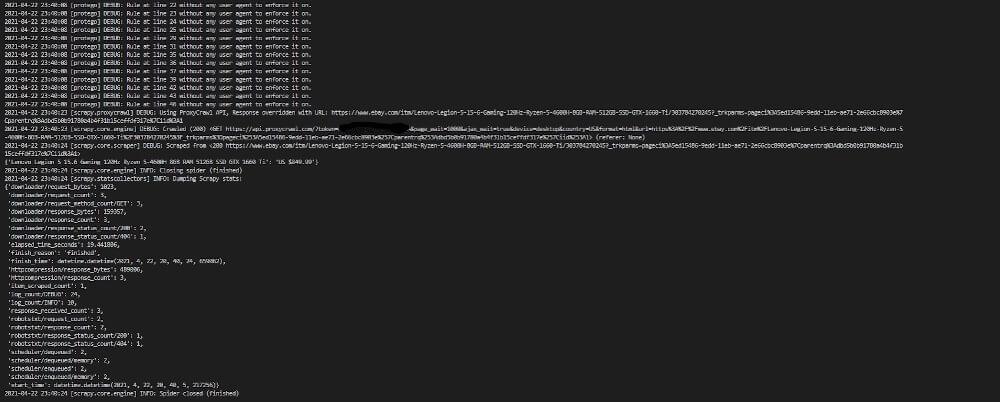

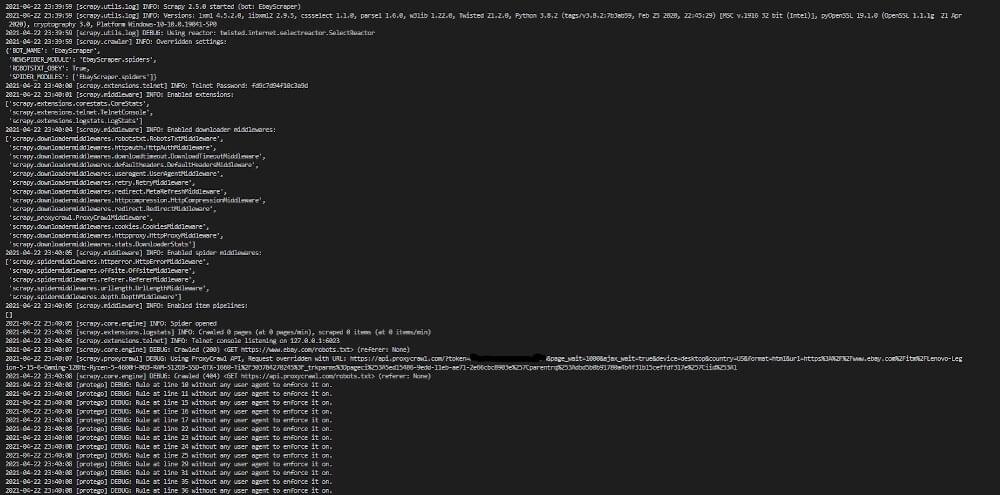

Running the “scrapy crawl ebay” command in the terminal produces output similar to the screenshots above.

This was just a basic illustration of what Crawlbase’s Scrapy middleware can do. You can experiment with different approaches and see what works in your case.

Conclusion

Web scraping offers benefits across many domains. It can extract data for marketing purposes, collect contact information, compare products, and more. It can be implemented either as a code-based solution built by an individual or an organization, or by using one of the available web scraping tools. Its relevance will only grow as new technologies emerge.

With Crawlbase, you can crawl and scrape the internet for many types of data, ranging from images, reviews, and detailed information about products and services to emails, phone numbers, and potential developers’ addresses.