A web scraper is a powerful data mining tool used mostly by data miners and analysts to gather large datasets from the World Wide Web. It automates the process of web scraping, which would be impractical to do manually at scale. As data collection through web scraping becomes more critical for businesses, so does the need for better web scrapers. In this article, we will cover why web scrapers matter and how you can use them in your projects, even without any technical or programming skills.

Data Mining in a Nutshell

Before we dive into web scrapers, let us briefly discuss data and data mining. The term “data mining,” also known as “knowledge discovery in databases,” came into common use in the early 1990s. It refers to digging through large volumes of data to identify patterns and relationships that can be used to predict future trends. Data mining draws on several scientific disciplines, including analytics and statistics, and as technology has advanced, artificial intelligence and machine learning have come to play a significant role in mining big data.

According to studies, the total amount of data created worldwide reached 64.2 zettabytes in 2020, and data creation is projected to keep growing and roughly triple over the following five years. This is why automation is essential in web scraping and data mining. Without it, gathering valuable data in a reasonable amount of time is hard, and you cannot take full advantage of the vast resources available online.
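Here is the arithmetic behind that projection, under one assumption of ours that the cited figure does not state: growth compounds at a constant yearly rate from 2020 to 2025.

```latex
V_{2025} \approx 3 \times 64.2\ \text{ZB} = 192.6\ \text{ZB},
\qquad
r = 3^{1/5} - 1 \approx 0.246
```

So tripling in five years works out to about 24.6% growth per year.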

Web Scraper - A Modern Tool for Mining

Today, you need data to do market research and build sound business strategies. Web scraping is the most efficient way to gather large amounts of data and reach relevant information. The term “web scraping” means extracting data from a target website. The collected information then serves many purposes, such as data analysis, market research, SEO campaigns, and more. A web scraper is simply a tool that automates web scraping.
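To make the definition concrete, here is a minimal sketch of what a scraper does under the hood. It uses only Python's standard library to download a page and pull structured fields out of its HTML. In this case the fields are the page title and the link targets. The function names and the User-Agent string are our own illustrative choices. A real scraper would usually extract fields specific to the site it targets.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkAndTitleParser(HTMLParser):
    """Collects the <title> text and every href found on <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Text may arrive in several chunks, so accumulate it.
        if self._in_title:
            self.title += data


def parse_page(html: str) -> dict:
    """Turn raw HTML into a small structured record."""
    parser = LinkAndTitleParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page. The User-Agent value is a hypothetical placeholder."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


if __name__ == "__main__":
    sample = "<html><head><title>Demo</title></head><body><a href='/a'>A</a></body></html>"
    print(parse_page(sample))
```

In practice you would call `parse_page(fetch_html(url))`. Keeping download and parsing in separate functions lets you test the parsing on saved HTML.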

This may sound straightforward, but scraping websites is not an easy task. Most websites do not want bots repeatedly accessing their content, and many now run some form of bot detection that blocks suspicious activity immediately. Web scrapers can easily trigger these defenses, so the scraper you choose has a major effect on your data mining success.
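A self-built scraper can reduce the chance of tripping these defenses by behaving politely: it respects the site's robots.txt rules and spaces its requests out. The sketch below does this with Python's standard `urllib.robotparser` module and assumes you have already downloaded the robots.txt text. The `Throttle` class is a hypothetical helper of ours and is not part of any library. The rules and URLs are placeholders.

```python
import time
from typing import Optional
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that was downloaded separately."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class Throttle:
    """Hypothetical helper: enforce a minimum gap between consecutive requests."""

    def __init__(self, min_interval: float):
        self.min_interval = min_interval
        self._last: Optional[float] = None

    def wait(self) -> None:
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /private/\nCrawl-delay: 2\n"
    checker = build_robots_checker(rules)
    agent = "example-scraper"
    # Use the site's Crawl-delay if it declares one, otherwise fall back to 1 second.
    throttle = Throttle(checker.crawl_delay(agent) or 1.0)
    for url in ["https://shop.example/products", "https://shop.example/private/data"]:
        if checker.can_fetch(agent, url):
            throttle.wait()
            print("would fetch", url)
        else:
            print("skipping (disallowed)", url)
```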

Choosing the right Web Scraping Tool - Things to Consider

If you want to extract valuable data from across the internet, you need a tool that can do the job efficiently. Web scraping is resource-intensive, and it can be tricky if you do not know which factors matter when choosing a scraping tool. Here are the main things to keep in mind.

Ease of use - A web scraper is only worth having if it makes your work easier. This makes ease of use one of the most important factors when comparing data mining tools. Pick one that does not waste your time on figuring out how to use it. It should be well-documented and straightforward.

Scalability - A highly scalable tool is a must if you plan to scrape large amounts of data for your projects. The amount of data available online only grows over time, so your data mining software should scale easily as your needs expand.
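In code, scaling a scraper often begins with fetching many pages concurrently instead of one at a time. Here is a minimal sketch using Python's `concurrent.futures`. It assumes `fetch` is any function you supply that downloads one URL and returns its content. The `scrape_many` name and the default worker count are our own choices.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, Union


def scrape_many(urls: Iterable[str], fetch: Callable[[str], str],
                max_workers: int = 8) -> Dict[str, Union[str, Exception]]:
    """Fetch many URLs in parallel. A failure is recorded instead of aborting the batch."""
    results: Dict[str, Union[str, Exception]] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # keep the failure so it can be retried later
                results[url] = exc
    return results
```

Threads fit this kind of I/O-bound work. Keep `max_workers` modest, because the extra request rate can itself set off bot detection.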

Quality of Proxies - As a data miner or analyst, you need to watch out for CAPTCHAs and other bot detection mechanisms that websites deploy, since they can keep you from reaching your goals. These defenses exist to block bots and web scrapers that send too many automated requests from a single source. Rotating proxies spread requests across many IP addresses and can help you avoid triggering them, so the quality of a tool's proxy pool matters.
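At its simplest, proxy rotation means cycling through a pool of proxy addresses so that consecutive requests go out from different IPs. The sketch below uses only Python's standard library and assumes you already have a list of proxy URLs from a provider. The addresses in the example are placeholders, and round-robin is only one possible rotation policy.

```python
from itertools import cycle
from typing import List
from urllib.request import OpenerDirector, ProxyHandler, build_opener


class ProxyRotator:
    """Hands out proxies round-robin so requests are spread across IPs."""

    def __init__(self, proxies: List[str]):
        if not proxies:
            raise ValueError("at least one proxy URL is required")
        self._pool = cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._pool)

    def opener(self) -> OpenerDirector:
        """Build a urllib opener that routes HTTP and HTTPS through the next proxy."""
        proxy = self.next_proxy()
        return build_opener(ProxyHandler({"http": proxy, "https": proxy}))


if __name__ == "__main__":
    rotator = ProxyRotator(["http://proxy1.example:8080", "http://proxy2.example:8080"])
    for _ in range(3):
        print(rotator.next_proxy())
    # rotator.opener().open(url) would send each request through the next proxy.
```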

Pricing transparency - As with any other service, the pricing structure is an important aspect to look at. Providers should disclose all fees to customers, and users should know about any added fees before signing up. Setting up your own web crawling tools is tedious and costly for most individuals, and most businesses prefer to buy proxy services from other companies for their web crawlers. Finding data mining software with fair pricing is therefore a must.

Customer Support - Support is not a feature of the tool itself, but it is an important part of the overall experience of using any product or service. Any business that wants its products to succeed needs to provide it. As a customer, you deserve good support. This matters especially for data mining tools like web scrapers, where most functions run in the backend and troubleshooting usually requires technical knowledge.

Popular Web Scraping Tools and Services for Beginners

You can build your own web scraper from scratch, but a ready-to-use web scraping tool will be far more convenient for most users. With that in mind, here are several well-known data mining tools from the scraping industry, in no particular order. All of them suit beginners and people who lack the technical skills to build their own scrapers.

- Apify - Provides ready-made tools for crawling and scraping websites. Apify can automate most tasks you would otherwise do manually in a web browser and scale them to your needs. It also offers a wide array of tools for specific use cases, such as scrapers for social media sites and Google SERPs, SEO audit tools, generic web scrapers, and more. Its pricing plans are fairly flexible and cover all use cases, including a subscription plan suited to freelancers, small project developers, and students.

- Bright Data’s Data Collector - A fully automated web data extraction tool. It has an intuitive, easy-to-understand user interface and collects data accurately from any website. You can use the pre-built collector templates or create custom collectors with the Chrome extension. However, this functionality costs more than most of the other tools listed here, though the price is still reasonable given the overall convenience of the product.

- ScrapeHero’s Web Crawling Service - If you want to take automation to the next level, this crawling service may be your best option. It is a fully managed web scraping service, so you do not need to set up any servers or software. You tell them what data you need, and they manage the whole process from data gathering to delivery. The service asks almost nothing of the user, so expect its pricing to sit at the higher end of the spectrum.

- Octoparse - New to coding and on a tight budget for your project? Octoparse’s data extraction tool might be the solution you are looking for. It is a downloadable app with a point-and-click interface, so you can extract various datasets from the web without writing any code. You can start harvesting data in three easy steps, and the competitive pricing includes generous access to all app features.

- Crawlbase’s Crawling API - One of the most affordable scraping tools on the market today. It is a simple data extraction API, built on top of thousands of rotating proxies, that can crawl, scrape, and deliver the data you need in seconds. However, unlike most of the tools mentioned here, the Crawling API has no native point-and-click UI for executing commands. Instead, it relies on a simple but effective approach to extracting data and integrates easily into any existing system.

Extracting Data using a Web Scraper

Now that we have covered how to pick the best data mining software for your needs, let us show you an example of using a scraper to extract data from a website. For this example, we will use Crawlbase’s Crawling API to demonstrate how straightforward the process is. The Crawling API does not require advanced coding knowledge to use effectively. It can be used on its own and called from a web browser or a terminal in three simple steps.

Step 1: Create your account and obtain an API key.

Create an account at Crawlbase to get your 1,000 free API requests. Go to your account’s dashboard afterward and copy your private token.

Step 2: Learn the basics of the Crawling API.

Making your first API call is very easy. You just need to remember the base endpoint of the API and where to insert the website URL you wish to scrape. To understand it better, let’s break it down into three parts:

The Crawling API endpoint:

https://api.crawlbase.com

Your private key or token:

?token=API_KEY

The target URL:

&url=encodedURL

If you are not familiar with URL encoding, you can use any online URL encoder/decoder tool.
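You can also do the encoding in code. The sketch below uses Python's standard `urllib.parse` module to percent-encode a target URL and then join the endpoint, token, and encoded URL into a single call. `API_KEY` and the example.com target are placeholders, and the helper names are ours.

```python
from urllib.parse import quote, unquote, urlencode

# Base endpoint from Step 2.
API_ENDPOINT = "https://api.crawlbase.com/"


def encode_target(url: str) -> str:
    """Percent-encode every reserved character, including ':' and '/'."""
    return quote(url, safe="")


def build_call(token: str, target_url: str) -> str:
    """Join endpoint + ?token=... + &url=<encoded target> into one call."""
    # quote_via=quote encodes spaces as %20 rather than '+'.
    query = urlencode({"token": token, "url": target_url}, quote_via=quote)
    return API_ENDPOINT + "?" + query


if __name__ == "__main__":
    target = "https://www.example.com/page?id=1&lang=en"
    encoded = encode_target(target)
    print(encoded)
    print(unquote(encoded) == target)  # decoding restores the original URL
    print(build_call("API_KEY", target))
```

Encoding the target matters because characters such as `?` and `&` inside it would otherwise be read as parameters of the API call itself.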

As a good practice, always read the product documentation before using it.

Step 3: Make your first API call.

With all the parts combined, you can now send your request to the API and let it do the rest. Below is the complete form of a Crawling API call, where API_KEY is your private token and encodedURL is the encoded URL of the target page:

https://api.crawlbase.com/?token=API_KEY&url=encodedURL

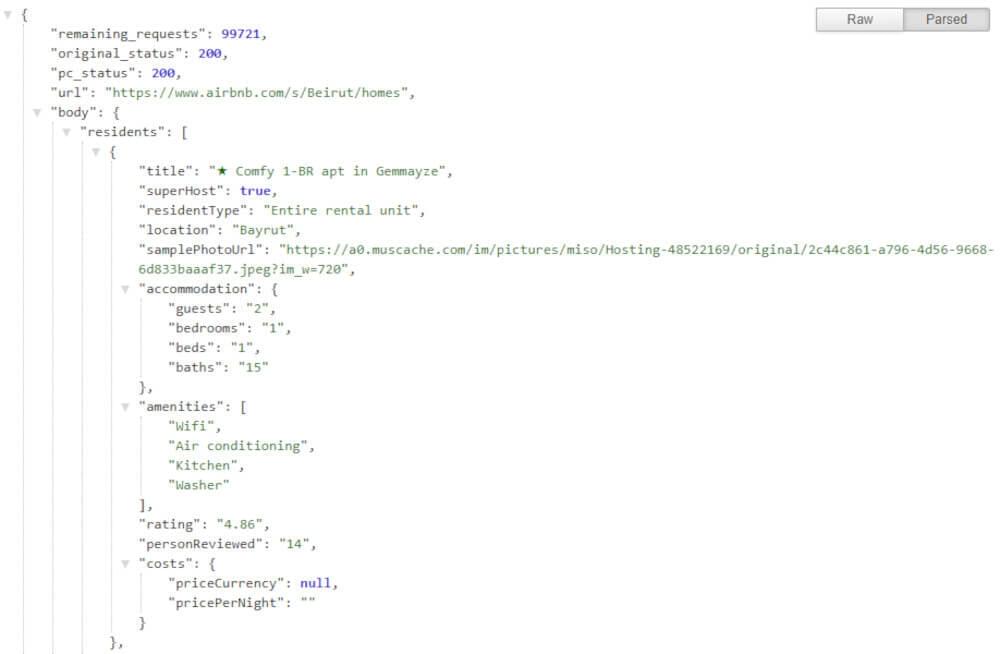

You can send the call in several ways, and your browser is the easiest. Copy the complete call, paste it into the address bar, and hit Enter. The API will return the content of the website within seconds.
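Sending the same call from a script instead of a browser is the first step toward automating it. The sketch below uses only Python's standard library. A few details are our own assumptions and are not required by the API: the token comes from an environment variable named `CRAWLBASE_TOKEN`, the timeout is 60 seconds, and the response is decoded as UTF-8.

```python
import os
from urllib.error import HTTPError
from urllib.parse import quote, urlencode
from urllib.request import urlopen

API_ENDPOINT = "https://api.crawlbase.com/"


def build_api_url(token: str, target_url: str) -> str:
    """Combine the endpoint, token, and percent-encoded target URL."""
    return API_ENDPOINT + "?" + urlencode({"token": token, "url": target_url}, quote_via=quote)


def crawl(target_url: str, token: str, timeout: float = 60.0) -> str:
    """Send one Crawling API request and return the body as text (assumes UTF-8)."""
    try:
        with urlopen(build_api_url(token, target_url), timeout=timeout) as response:
            return response.read().decode("utf-8", errors="replace")
    except HTTPError as err:
        raise RuntimeError(f"Crawling API request failed with HTTP {err.code}") from err


if __name__ == "__main__":
    token = os.environ.get("CRAWLBASE_TOKEN")  # variable name chosen for this sketch
    if token:
        print(crawl("https://www.example.com/", token)[:500])
    else:
        print("Set CRAWLBASE_TOKEN to your private token to run this example.")
```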

Since the Crawling API is highly scalable, it is easy to build a fully automated web scraper on top of it or to integrate it into an existing system or app. Crawlbase also provides a wide range of libraries and SDKs to help users extend or integrate the API.
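Automation built on an API like this should retry transient failures rather than halt the whole job. Below is a minimal, library-agnostic sketch of retries with exponential backoff. `with_retries` is a hypothetical helper of ours and not part of Crawlbase's SDKs, and its attempt count and delays are arbitrary defaults. It retries on `OSError` by default because urllib's `URLError`, `HTTPError`, and socket timeouts all subclass it.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(call: Callable[[], T], attempts: int = 4, base_delay: float = 1.0,
                 retry_on: Tuple[Type[BaseException], ...] = (OSError,)) -> T:
    """Run call(). On listed errors, wait base_delay * 2**n (plus jitter) and try again."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts - 1):
        try:
            return call()
        except retry_on:
            delay = base_delay * (2 ** attempt)
            time.sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronized retries
    return call()  # final attempt: any error propagates to the caller
```

For example, `with_retries(lambda: crawl(url, token))` would wrap a single API request.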

Use Cases of Simplified Data Mining for Your Business

Efficient, simplified data mining can help a wide range of industries and organizations, from businesses to government agencies and healthcare providers. Illumeo’s data mining course also covers how simplified data mining can help your business harness insights and make better decisions. Here are some applications of data mining:

Understanding Your Customers and Improving Service

Data mining helps companies analyze the information they hold about their customers to see what they like, how they shop, and how they behave. With that knowledge, companies can make better ads, develop new products, and keep customers happy so they keep coming back.
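As a toy illustration of seeing what customers like, the sketch below groups order records by customer. For each customer it reports the order count, the total spend, and the most frequently bought category. The `(customer_id, category, amount)` record layout is a hypothetical assumption made for the example. Real customer analytics would draw on far richer data.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

Order = Tuple[str, str, float]  # (customer_id, category, amount): a hypothetical layout


def customer_profiles(orders: Iterable[Order]) -> Dict[str, dict]:
    """Summarize each customer's orders, spend, and favorite category."""
    categories: Dict[str, Counter] = defaultdict(Counter)
    spend: Dict[str, float] = defaultdict(float)
    for customer, category, amount in orders:
        categories[customer][category] += 1
        spend[customer] += amount
    return {
        customer: {
            "orders": sum(counts.values()),
            "total_spend": round(spend[customer], 2),
            "favorite_category": counts.most_common(1)[0][0],
        }
        for customer, counts in categories.items()
    }
```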

Data Mining Helps Catch Fraud

Data harvesting tools act like detectives for spotting credit card fraud, insurance fraud, and identity theft. They examine how people use their cards and money and flag unusual activity. Companies can then catch fraudsters and stop fraud before it does more damage.
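A robust outlier test is one simple way to spot unusual amounts in a customer's transactions. The sketch below uses the modified z-score, which is built from the median and the median absolute deviation (MAD), with the commonly used cutoff of 3.5. We chose this textbook statistical technique for illustration. Production fraud systems rely on much richer features and models.

```python
from statistics import median
from typing import List, Sequence


def flag_outliers(amounts: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices of amounts whose modified z-score exceeds the threshold."""
    if not amounts:
        return []
    center = median(amounts)
    mad = median(abs(a - center) for a in amounts)
    if mad == 0:
        # At least half the values equal the median; flag anything that differs.
        return [i for i, a in enumerate(amounts) if a != center]
    return [i for i, a in enumerate(amounts)
            if 0.6745 * abs(a - center) / mad > threshold]
```

For example, `flag_outliers([20, 25, 22, 19, 24, 500])` returns `[5]` and singles out the 500 charge. A plain mean and standard-deviation z-score would miss that charge at a cutoff of 3, because the outlier inflates the standard deviation and its z-score comes out at only about 2.04.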

Improving Supply Chains

Data mining can make supply chains run even better. It helps companies find where processes are inefficient so they can improve them. The result is faster work at lower cost. Many data mining tools are now available to help companies put data mining to work for them.

Choosing the Right Business Locations

Data harvesting helps companies find the best sites for their stores, offices, and warehouses. It analyzes large volumes of data and applies “location intelligence” to identify the best places. For example, it can show where most customers order from, which tells companies where to set up their warehouses. It can also factor in how many people live in an area, how much they earn, and which other businesses are nearby to help pick the right location.
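The center-of-gravity method is a classic way to turn knowledge of where customers order from into a candidate warehouse site. It averages customer locations, weighting each location by its order volume. The minimal sketch below works on hypothetical coordinates on a flat grid. That is a simplification: real latitude and longitude data spread over large areas needs proper geographic treatment.

```python
from typing import Iterable, Tuple

Location = Tuple[float, float, float]  # (x, y, order_volume) on a flat grid


def center_of_gravity(points: Iterable[Location]) -> Tuple[float, float]:
    """Volume-weighted average of customer locations."""
    total = sx = sy = 0.0
    for x, y, volume in points:
        total += volume
        sx += x * volume
        sy += y * volume
    if total <= 0:
        raise ValueError("total order volume must be positive")
    return sx / total, sy / total
```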

Predicting the Future for Better Business

Every business needs to manage its resources carefully, because having too much or too little can be a big problem. Data analysis helps by predicting what a business will need in the future. Companies use data mining to pull the relevant information from past records and build models that forecast what will happen next. This helps them make smarter choices about what to do and where to invest their money.
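The simplest form of learning from the past to predict what comes next is to fit a straight-line trend to historical values and extend it forward. Below is a minimal least-squares sketch. It deliberately ignores seasonality and uncertainty, which real demand forecasting usually has to handle.

```python
from typing import Sequence


def linear_trend_forecast(history: Sequence[float], steps_ahead: int = 1) -> float:
    """Fit y = a + b*t by ordinary least squares (t = 0, 1, ...) and extrapolate."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_t = (n - 1) / 2
    mean_y = sum(history) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(history))
    var = sum((t - mean_t) ** 2 for t in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_t
    return intercept + slope * (n - 1 + steps_ahead)
```

For example, a history of `[10, 12, 14, 16]` has a slope of 2 per period, so the one-step forecast is 18.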

Conclusion

Data mining doesn’t have to be complicated. Many data mining tools on the market today can be used by anyone, regardless of technical skill. You just need to understand each product’s capabilities to find the one that best fits your needs.

If you still have doubts or want to learn more, we highly suggest trying these tools yourself. Many of them offer a free trial when you register, so why not test them out? Crawlbase provides 1,000 free requests just for signing up, which should be enough to get you started with your scraping endeavors.