Most top organizations crawl data from websites to stay ahead of competitors. While crawling is important, it can be challenging, especially when you are dealing with thousands or even millions of requests at the same time. Your server might start to malfunction and eventually get blacklisted by the sites you crawl.

One of the best ways to crawl data from websites is to leverage a reliable solution like Crawlbase. Our innovative features have helped countless businesses remain at the top. This blog post will explore how you can crawl data with our easy-to-use API.

As this is a hands-on tutorial, make sure you have a functional Crawlbase account before beginning. Go ahead and create one here; it’s free.

Extracting the URL

Before extracting the URL, you need to create an account at Crawlbase. Once you have one, you can crawl data from thousands of pages on the internet through our easy-to-use API.

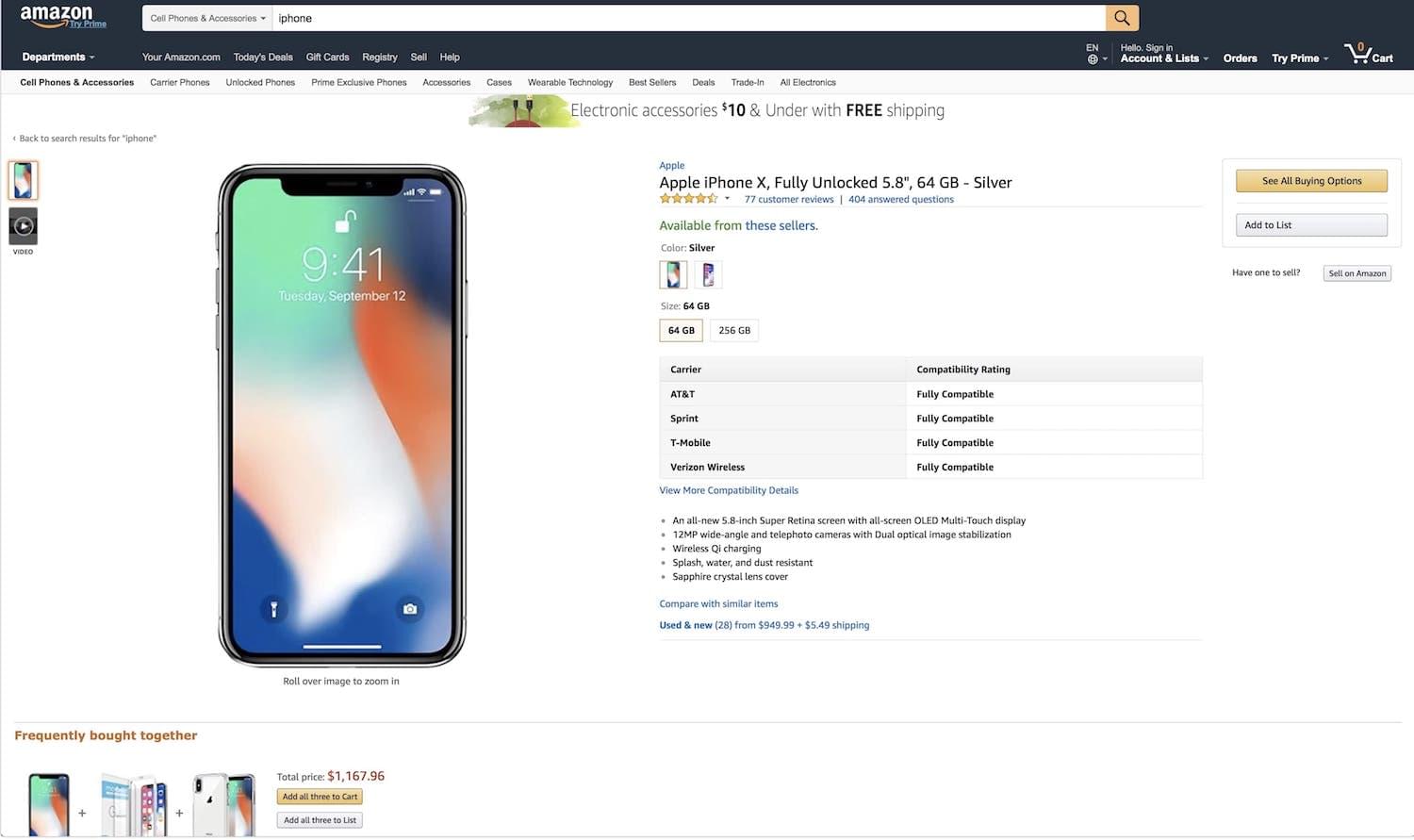

For this tutorial, we will crawl and scrape the iPhone X product page on Amazon’s marketplace. You can find it by searching for “iPhone X on Amazon” on Google, or load it directly with the following URL: https://www.amazon.com/Apple-iPhone-Fully-Unlocked-5-8/dp/B075QN8NDH/ref=sr_1_6?s=wireless&ie=UTF8&sr=1-6

How can we crawl Amazon securely with Crawlbase?

To get started, open the “My Account” page. Your dashboard lists the different crawling options, and it is also where you obtain the standard and JavaScript tokens you will need to crawl the Amazon page.

One of the best data crawling practices is knowing how the website you are crawling is built. Many sites are developed with JavaScript frameworks such as React or Vue, but Amazon’s website is built differently, so in this case we will use the standard token to extract iPhone X data from the marketplace.

The next step is to get a token. For this walkthrough we will use the demo token caA53amvjJ24; you can find your own tokens on the “My Account” page. When setting up your request, also make sure the target URL is correctly URL-encoded.

If you are working in Ruby, you can encode the URL with the standard `cgi` library:

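```ruby
require 'cgi' # CGI.escape percent-encodes reserved characters such as : / ? = &
puts CGI.escape('https://www.amazon.com/Apple-iPhone-Fully-Unlocked-5-8/dp/B075QN8NDH/ref=sr_1_6?s=wireless&ie=UTF8&sr=1-6')
```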

And you will get the following:

```text
https%3A%2F%2Fwww.amazon.com%2FApple-iPhone-Fully-Unlocked-5-8%2Fdp%2FB075QN8NDH%2Fref%3Dsr_1_6%3Fs%3Dwireless%26ie%3DUTF8%26sr%3D1-6
```

Great! We have our URL ready to be scraped with Crawlbase.

Scraping the content

The next important step is to send a request that scrapes the actual data from Amazon’s marketplace website. Requests to the API follow this format:

https://api.crawlbase.com/?token=YOUR_TOKEN&url=THE_URL

Replace YOUR_TOKEN with your token (caA53amvjJ24 in this instance) and THE_URL with the encoded URL from the previous step.
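As a minimal sketch, the token and target URL can also be combined programmatically with Ruby’s standard `URI.encode_www_form`, which percent-encodes each value so the target URL does not need to be escaped by hand. The helper name `crawlbase_request_url` and its `extra_params` argument are hypothetical illustrations, not part of any Crawlbase library.

```ruby
require 'uri'

# Hypothetical helper: builds a Crawling API request URL from a token and an
# unencoded target URL. URI.encode_www_form percent-encodes every value.
CRAWLBASE_ENDPOINT = 'https://api.crawlbase.com/'

def crawlbase_request_url(token, target_url, extra_params = {})
  query = URI.encode_www_form({ token: token, url: target_url }.merge(extra_params))
  "#{CRAWLBASE_ENDPOINT}?#{query}"
end

amazon_url = 'https://www.amazon.com/Apple-iPhone-Fully-Unlocked-5-8/dp/B075QN8NDH/ref=sr_1_6?s=wireless&ie=UTF8&sr=1-6'
puts crawlbase_request_url('caA53amvjJ24', amazon_url)
```

The printed URL contains the same encoded target as the `CGI.escape` output, prefixed with the endpoint and token. Anything passed in `extra_params` is appended to the query unchanged, so check the Crawlbase documentation before relying on any additional parameter.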

Let’s get started.

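```ruby
require 'net/http' # also loads URI; Net::HTTP.get returns the response body as a String
puts Net::HTTP.get(URI('https://api.crawlbase.com/?token=caA53amvjJ24&url=https%3A%2F%2Fwww.amazon.com%2FApple-iPhone-Fully-Unlocked-5-8%2Fdp%2FB075QN8NDH%2Fref%3Dsr_1_6%3Fs%3Dwireless%26ie%3DUTF8%26sr%3D1-6'))
```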

We have completed our first call using the Crawlbase API to scrape data from the Amazon marketplace. The response is the page’s HTML, which begins something like this:

```html
<!doctype html><html class="a-no-js" data-19ax5a9jf="dingo"><!-- sp:feature:head-start -->
```

How to Scrape Content from Websites

Crawlbase enables you to scrape multiple web pages from different programming languages safely and anonymously, without getting blocked. We have covered the different methods developers and non-developers can use to crawl and download website content.

To get the best out of your crawling process, it helps to read the scraping guide for the programming language you plan to use. Here are some resources to help you:

Scrape Website with Ruby

Scrape Website with Node

Scrape Website with Python

Overview of the Crawlbase API Features and Functionalities

We have created a powerful solution that guarantees a seamless crawling process for businesses and individuals. Our API offers you all you need to crawl data from websites.

Powerful Crawling Capability

The Crawlbase API’s robust features enable users to retrieve various data types from websites. Here are a few of its capabilities:

- Textual Data Extraction: You can extract text from web pages, such as descriptions, articles, and other text-based material.

- Image Extraction: Users can access pictures or graphical material by retrieving images that are present on websites.

- Link Collection: You can collate links on websites for easy navigation and content pulling.

Customizable Configuration

Our API is tailored to fit your specific needs. You can customize your crawling process through the following features:

- Crawl Depth Adjustment: You can adjust the crawl depth to specify how far from a starting page you want our API to follow links.

- Crawl Frequency Control: You can control how often a webpage is crawled by choosing a crawl frequency.

- Data Type Selection: You can choose the data types you want to extract from websites based on your preferences and needs.
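To illustrate what a crawl-depth limit means, here is a minimal breadth-first sketch: pages at the depth limit are recorded but their links are not followed. The method `crawl_to_depth` is a hypothetical illustration, not Crawlbase’s implementation, and the block stands in for a real fetch-and-parse step that returns a page’s links.

```ruby
require 'set'

# Breadth-first crawl that stops expanding links beyond max_depth.
# The block receives a URL and must return the links found on that page.
def crawl_to_depth(start_url, max_depth)
  visited = Set.new([start_url])
  queue = [[start_url, 0]]
  order = []

  until queue.empty?
    url, depth = queue.shift
    order << url
    next if depth >= max_depth # at the limit: record the page, skip its links

    yield(url).each do |link|
      next if visited.include?(link)

      visited << link
      queue << [link, depth + 1]
    end
  end

  order
end

# Usage with an in-memory link graph instead of live pages.
site = {
  '/' => ['/products', '/about'],
  '/products' => ['/products/iphone-x'],
  '/products/iphone-x' => ['/reviews'],
  '/about' => []
}
p crawl_to_depth('/', 1) { |url| site.fetch(url, []) } # => ["/", "/products", "/about"]
```

Breadth-first order is used because it guarantees every page is reached at its shallowest depth, so the limit is applied consistently.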

Structured Data Retrieval

With our API, you can retrieve structured data from web pages through the following options:

- Formatted Output: Results can be delivered as XML or JSON, which makes them easy to integrate with other applications.

- Data Organization: You can extract and organize data to simplify the integration into your system or processes.
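As a sketch of consuming JSON output in Ruby, the snippet below parses a response body into a small struct. It assumes the request asked for JSON output and that the response carries fields named `url`, `original_status`, `pc_status` and `body`; these names are assumptions to verify against the Crawlbase documentation, and `parse_crawl_response` is a hypothetical helper.

```ruby
require 'json'

# ASSUMPTION: the field names read below are illustrative; confirm the exact
# response shape in the Crawlbase documentation before relying on them.
CrawlResult = Struct.new(:url, :original_status, :pc_status, :body, keyword_init: true)

# Parses a JSON response body into a CrawlResult (hypothetical helper).
def parse_crawl_response(json_text)
  data = JSON.parse(json_text)
  CrawlResult.new(
    url: data['url'],
    original_status: data['original_status'].to_i, # may arrive as a string
    pc_status: data['pc_status'].to_i,
    body: data['body']
  )
end

# Usage with a hand-written sample, not a real API response.
sample = '{"original_status":"200","pc_status":200,' \
         '"url":"https://www.amazon.com/dp/B075QN8NDH","body":"<html>...</html>"}'
result = parse_crawl_response(sample)
puts "#{result.url} -> #{result.original_status}"
```

Normalizing status fields to integers up front keeps later checks (for example, `result.original_status == 200`) simple regardless of how the values were serialized.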

Additional Features

- Error Handling: The Crawlbase API helps reduce errors in your output by giving you a comprehensive report on your crawls.

- Secure Authentication: Like many reliable apps and websites, the Crawlbase API follows strict data integrity and privacy guidelines through a secure authentication process.

Advantages of Using the Crawlbase API for Website Crawling

There are several benefits of using the Crawlbase API to crawl data from websites. Many organizations trust our infrastructure to crawl websites; here are a few reasons why:

Personalized Solutions

We understand that each individual or business has unique needs. That’s why the Crawlbase API lets users customize their crawling activities based on their preferences. You can tune a range of parameters to get the best results.

Data Accuracy

Data privacy and integrity are important to every business, and our API addresses them through guidelines that ensure the data processed remains accurate, regardless of which website or data types you are pulling.

Increased Productivity

The Crawlbase API is built around the real scenarios behind crawling data from websites. It uses real-time learning to provide effective results quickly, without lagging.

Flexibility

Being able to handle multiple requests at the same time ensures that users get the optimal results from crawling websites. Also, users get to increase and decrease their rate limits based on their needs, ensuring scalable results.

Precise decision-making

Our API relies on real-world settings to provide data from crawling processes. This gives users accurate data that helps them prepare for trends and make better decisions.

Advanced Techniques with the Crawlbase API

Here are some of the advanced techniques that can be used to ensure a seamless website crawling process:

- Parallel Crawling: This option allows users to gather data from multiple websites simultaneously, speeding up data collection.

- Dynamic content techniques: Users can crawl content that is rendered with JavaScript by choosing the appropriate option, such as the JavaScript token.

- Customized selectors: This technique helps users get accurate data by selecting the specific components they want to retrieve from web pages.

- Incremental crawling: This feature improves data extraction and reduces duplication by crawling only new or modified content.
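Parallel and incremental crawling can be sketched together in plain Ruby. In this sketch, which assumes thread-based concurrency and content hashing (not necessarily how Crawlbase implements either feature), a fixed pool of threads caps how many fetches run at once, and a SHA-256 digest per URL flags only new or changed pages. `crawl_in_parallel` and `ChangeTracker` are hypothetical helpers, and the block passed to `crawl_in_parallel` stands in for a real API request.

```ruby
require 'digest'

# Parallel crawling: a fixed pool of worker threads pulls URLs from a shared
# queue, so at most `workers` fetches run at once.
def crawl_in_parallel(urls, workers: 4, &fetch)
  queue = Queue.new
  urls.each { |url| queue << url }
  queue.close # once drained, pop returns nil and the workers exit

  results = {}
  lock = Mutex.new
  threads = Array.new(workers) do
    Thread.new do
      while (url = queue.pop)
        page = fetch.call(url)
        lock.synchronize { results[url] = page }
      end
    end
  end
  threads.each(&:join)
  results
end

# Incremental crawling: remembers a SHA-256 digest of each page and reports
# only pages that are new or changed since they were last seen.
class ChangeTracker
  def initialize
    @digests = {}
  end

  def changed?(url, content)
    digest = Digest::SHA256.hexdigest(content)
    return false if @digests[url] == digest

    @digests[url] = digest
    true
  end
end

# Usage with a stub fetch block standing in for real API requests.
tracker = ChangeTracker.new
pages = crawl_in_parallel(%w[/a /b /c], workers: 2) { |url| "content of #{url}" }
fresh = pages.select { |url, html| tracker.changed?(url, html) }
puts "first run: #{fresh.size} new or changed pages"
fresh = pages.select { |url, html| tracker.changed?(url, html) }
puts "second run: #{fresh.size} new or changed pages"
```

This version still downloads every page and filters unchanged content afterwards; when a server supports it, HTTP conditional requests (`ETag` with `If-None-Match`, or `If-Modified-Since`) can skip the download itself.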

Use Cases of the Crawlbase API

Our API can achieve different business results depending on the set parameters. Over time, we have compiled the different use cases based on popular products that the Crawlbase API can cater to.

Here are some common use cases of the Crawlbase API to inspire you:

- Business intelligence: Large organizations use the Crawlbase API to gather specific data from various industries and inform their decisions.

- Market research: You can gather data from different market sources such as product information, reviews, prices and so on for your market analysis.

- Analyse competitors: You can get a glimpse of what your competitors are doing by crawling their web pages. This can give you a good understanding of industry activity and help your team spot patterns.

- Content aggregation: Blogs and news sites can take advantage of Crawlbase API to research and compile different content sources to create an extensive library of content for future needs.

- SEO optimization: Small-business owners and marketers can use the API to improve their searchability by crawling similar websites to know their performance across different metrics.

Strategies for Efficient Crawling using the Crawlbase API

To crawl data from websites effectively, you need to be aware of tactics that can increase your chances of getting the best possible data on the internet. We have compiled a few for you:

- Improve your crawling queries: When crawling data from websites, you need to optimize the queries to ensure the best output. Stating the precise data you want based on the parameters might be helpful in getting your desired outcome.

- Schedule your crawling: Since crawling can be automated, many users are tempted to do everything at once. You can schedule your crawling to limit how often a particular web page is crawled. This helps you achieve focused crawling while letting the crawler run more efficiently.

- Crawl gradually: You can reduce server load and duplication by taking it slow. Set your crawler to run at intervals; this also reduces the chance of re-crawling data you already have.

- Set rate limits: When crawling a website, set rate limits to avoid overloading its servers and triggering its security measures.
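Pacing and rate limits can be enforced client-side with a very small helper. The sketch below, a hypothetical `RateLimiter` class rather than a Crawlbase feature, guarantees a minimum interval between consecutive requests; the clock and sleep function are injectable so the timing logic can be tested without actually waiting.

```ruby
# Minimal rate limiter: guarantees at least `min_interval` seconds between
# consecutive requests. The clock and sleeper are injectable for testing.
class RateLimiter
  def initialize(min_interval,
                 clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) },
                 sleeper: ->(seconds) { sleep(seconds) })
    @min_interval = min_interval
    @clock = clock
    @sleeper = sleeper
    @last_request_at = nil
  end

  # Waits until the next request is allowed, then runs the block.
  def throttle
    if @last_request_at
      wait = @min_interval - (@clock.call - @last_request_at)
      @sleeper.call(wait) if wait.positive?
    end
    @last_request_at = @clock.call
    yield
  end
end

limiter = RateLimiter.new(1.0) # at most one request per second (tune per site)
%w[/page1 /page2 /page3].each do |path|
  limiter.throttle { puts "fetching #{path} at #{Time.now.strftime('%T.%L')}" }
end
```

A monotonic clock is used so that system clock adjustments cannot shorten or lengthen the wait. The class is not thread-safe; if several workers share one limiter, wrap `throttle` in a `Mutex`.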

Handling Diverse Challenges in the Crawling Process

Crawling websites generally comes with diverse challenges, but the Crawlbase API ensures a smooth process through refined features. Even so, it is best to devise an appropriate strategy to overcome these challenges easily.

- Dynamic Content: When scraping data from a dynamic site, it is important to use dynamic rendering techniques to extract content built on JavaScript.

- CAPTCHA and Anti-Scraping Mechanisms: CAPTCHAs are challenges designed to verify that a human is interacting with the website. For a smooth process, you will typically need proxies and CAPTCHA solvers when crawling such sites.

- Robust Error Handling: To cope with server problems such as intermittent loading failures and timeouts, you need an error-handling process that detects them and retries sensibly.

- Handling Complex Page Structures: You can ensure a smooth crawling process by customizing your crawlers to get around complex web pages.

- Avoiding IP Blocking: To avoid IP blocking or limitations from websites when crawling, put IP rotation into practice, for example by routing requests through a pool of proxies.
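For the error-handling point, a common approach is to retry transient failures with exponential backoff. The sketch below is a hypothetical `with_retries` helper; the set of errors treated as transient and the delay values are illustrative choices, not Crawlbase settings.

```ruby
require 'net/http'

# Retries a block on transient network errors with exponential backoff.
# The error list and delays are illustrative choices.
TRANSIENT_ERRORS = [Net::OpenTimeout, Net::ReadTimeout, Errno::ECONNRESET].freeze

def with_retries(max_attempts: 3, base_delay: 1.0, sleeper: ->(seconds) { sleep(seconds) })
  attempt = 0
  begin
    attempt += 1
    yield(attempt)
  rescue *TRANSIENT_ERRORS => e
    raise if attempt >= max_attempts # out of attempts: re-raise the last error

    delay = base_delay * (2**(attempt - 1)) # 1x, 2x, 4x the base delay
    warn "attempt #{attempt} failed (#{e.class}); retrying in #{delay}s"
    sleeper.call(delay)
    retry
  end
end

# Usage with a simulated flaky fetch; in practice the block would perform
# the real request, e.g. Net::HTTP.get(URI(request_url)).
page = with_retries(base_delay: 0.1) do |attempt|
  raise Net::ReadTimeout if attempt < 3 # fail the first two attempts
  '<html>...</html>'
end
puts page
```

Doubling the delay gives a struggling server progressively more room to recover, and capping the number of attempts ensures a persistent failure is surfaced instead of retried forever.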

What are the Best Ways to Crawl Data from a Website?

Crawlers are essential resources for crawling data from websites. Effective crawling is critical whether you’re developing a search engine, doing research, or monitoring competitors’ prices. But it’s crucial to do it effectively and ethically. Here’s how to find the right balance:

Respect Boundaries

Always begin by looking through the robots.txt file on the website. It tells you which parts of the website are safe to examine and which are off-limits, much like a handbook for crawlers. If you ignore it, your crawler may become blocked.

As in real life, showing civility always goes a long way. Refrain from sending too many queries at once to a website’s server. A little break (a few seconds) between requests shows respect, and the chance of overburdening the server decreases.
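Checking robots.txt before crawling can be sketched in a few lines of Ruby. This is a simplified illustration: it reads only the `User-agent: *` group and applies the longest-matching-rule principle from RFC 9309 (with Allow winning a tie), but it ignores wildcards (`*`, `$`) and bot-specific groups, so a complete parser is preferable in production. `robots_rules` and `allowed?` are hypothetical helpers, and the sample file below is made up.

```ruby
# Simplified robots.txt check for the "User-agent: *" group only.
def robots_rules(robots_txt)
  rules = []
  applies = false          # does the current group cover "*"?
  after_agent_line = false # consecutive User-agent lines share one group
  robots_txt.each_line do |raw|
    line = raw.sub(/#.*/, '').strip # drop comments and surrounding whitespace
    next if line.empty?

    key, value = line.split(':', 2)
    key = key.strip.downcase
    value = value.to_s.strip
    case key
    when 'user-agent'
      applies = false unless after_agent_line # a new group starts
      applies ||= (value == '*')
      after_agent_line = true
    when 'allow', 'disallow'
      after_agent_line = false
      rules << [key.to_sym, value] if applies && !value.empty?
    else
      after_agent_line = false
    end
  end
  rules
end

# A path is allowed unless the longest matching rule is a Disallow
# (an Allow wins a tie of equal length).
def allowed?(rules, path)
  matches = rules.select { |_, prefix| path.start_with?(prefix) }
  return true if matches.empty?

  best = matches.max_by { |type, prefix| [prefix.length, type == :allow ? 1 : 0] }
  best.first == :allow
end

robots_txt = <<~TXT
  User-agent: *
  Disallow: /checkout
  Allow: /checkout/help
TXT
rules = robots_rules(robots_txt)
p allowed?(rules, '/products/iphone-x') # => true
p allowed?(rules, '/checkout/cart')     # => false
p allowed?(rules, '/checkout/help')     # => true
```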

Prioritize and Adapt

Not every website is made equal. If you are short on time or resources, sort the pages by how important they are to your objective. For instance, concentrate on product pages rather than general “About Us” pages if you’re recording product information. Many contemporary websites also use JavaScript to load material dynamically. Make sure your crawler can handle this, or some of the data may be missed. Several libraries and tools are available to assist with this.
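Prioritizing pages can be as simple as scoring each URL in the crawl frontier and fetching the highest scores first. In the sketch below, the URL patterns, weights and example URLs are hypothetical and would need to match the structure of the site you are actually crawling.

```ruby
# Hypothetical URL patterns and weights for a product-data crawl:
# higher scores are fetched first; the first matching pattern wins.
PRIORITY_RULES = [
  [%r{/dp/|/product}, 10],  # product detail pages
  [%r{/reviews?/}, 5],      # review pages
  [%r{/about|/careers}, 0]  # general company pages
].freeze
DEFAULT_PRIORITY = 1

def priority(url)
  rule = PRIORITY_RULES.find { |pattern, _| url.match?(pattern) }
  rule ? rule.last : DEFAULT_PRIORITY
end

# Sorts the frontier by descending priority; the index tie-breaker keeps
# URLs with equal scores in their original order.
def prioritize(urls)
  urls.each_with_index.sort_by { |url, i| [-priority(url), i] }.map(&:first)
end

frontier = %w[
  https://example.com/about-us
  https://example.com/blog/launch-news
  https://example.com/dp/B075QN8NDH
  https://example.com/reviews/B075QN8NDH
]
puts prioritize(frontier)
```

For a frontier that grows during the crawl, the same scoring function could drive a priority queue instead of a one-off sort.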

Continuous Monitoring

Don’t just let your crawler go and forget about it; keep a close eye on it. Regularly check on its progress. Watch for problems such as timeouts, broken links, or structural changes to the website that may require you to modify your crawling plan.

The internet is constantly changing, and your crawler ought to, too. To ensure you’re continually gathering correct data, be ready to update your scripts or settings whenever websites change.

Crawl Ethically

- Show Consideration: Refrain from bombarding servers with too many queries. Pay attention when a website encourages you to slow down.

- Examine the fine print: Certain websites have clear terms of service that forbid crawling. Always double-check before beginning.

- Utilise Data with Caution: Observe users’ and website owners’ privacy. Don’t abuse the data that you gather.

Choosing Your Crawling Companion

When choosing the right crawling solution, you must consider the following:

- Scale: A basic tool may work fine for crawling small websites. However, a more robust solution is needed for large crawls.

- Customization: Is it necessary to extract certain data according to unique rules? Certain tools provide greater flexibility in this regard.

- Budget: Both paid and free choices are offered. Select one that meets the requirements of your project.

- Technical Proficiency: A script-based crawler may be ideal for those comfortable with code; for others, a visual interface could be more straightforward.

Your Guide to Efficient Data Gathering

Extracting data is a valuable tool for remaining competitive in the current business landscape. Most organizations rely on accurate data for different purposes. That’s why getting a reliable data crawling partner is important. At Crawlbase, we have built an intuitive API with powerful capabilities to handle the daunting task of crawling modern websites.

We have a record of helping organizations achieve their data scraping and crawling goals through infrastructure that accommodates personalized needs. Our product gives you the competitive edge needed to streamline your processes, whether you are a technical professional or not.

Let us help your business grow through web crawling. Sign up now.