Web scraping is an effective way to extract information from websites without entering data manually or relying on APIs. Web scraping tools are programs that browse the Internet looking for specific information on websites (web crawling) and then collect it automatically (web scraping). These tools mainly extract data from HTML documents, which hold most of the information on a typical website.

Most of the data on the Internet is in unstructured HTML format. Web scraping lets you convert that data into a structured format, such as a spreadsheet or database, for various uses. Extracting data from the websites on a domain makes it easier to analyze and use the information efficiently.

Companies seeking to learn about trends, and organizations looking for specific information on a topic of interest, can benefit significantly from this data. This guide introduces beginners to web scraping and explores how to find all URLs on a website.

What is Web Scraping?

Web scraping is the process of extracting information from web pages and web servers. In other words, it is a way of collecting data from websites automatically. It can serve many purposes, but organizations most often use it to collect data at a large scale.

Brief History of Web Scraping

The history of web scraping goes back almost to the birth of the World Wide Web. Soon after the Web was invented in 1989, a robot called the World Wide Web Wanderer was created. It had one humble goal: measuring how big this new thing called the Internet was.

The first instances of what we now call a web scraper date back to 1993, when such tools were used mainly for measurement.

JumpStation, launched in December 1993, was the first crawler-based web search engine. At that time there were few websites, and search sites relied on human administrators to collect links and edit them into a particular format. JumpStation innovated by being the first WWW search engine to rely on a robot, which made the process far more efficient.

The Internet was already becoming a common resource, and 2000 saw some of its defining moments. One came when Salesforce and eBay released web APIs that gave programmers easier access to public data. Since then, many other websites have started offering APIs, making information even more accessible!

Web scraping techniques for data analysis have become an integral part of data science and machine learning. It’s how we access and collect data from the Internet and use it in our algorithms and models, and it’s a skill that is constantly growing and improving. The rise of Python libraries such as Requests, BeautifulSoup, Selenium, and Scrapy has made web scraping more accessible and powerful than ever before.

Why is Web Scraping Important?

Web scraping can automate data collection at scale and unlock web data sources that add value to your business. It also helps you make better-informed decisions using the power of big data.

Web scraping is not a new discovery but an evolution of earlier techniques such as screen scrapers and user-agent sniffing software. These tools are still used today for specific purposes, such as Hypertext Transfer Protocol (HTTP) log parsing and conversion to machine-readable formats.

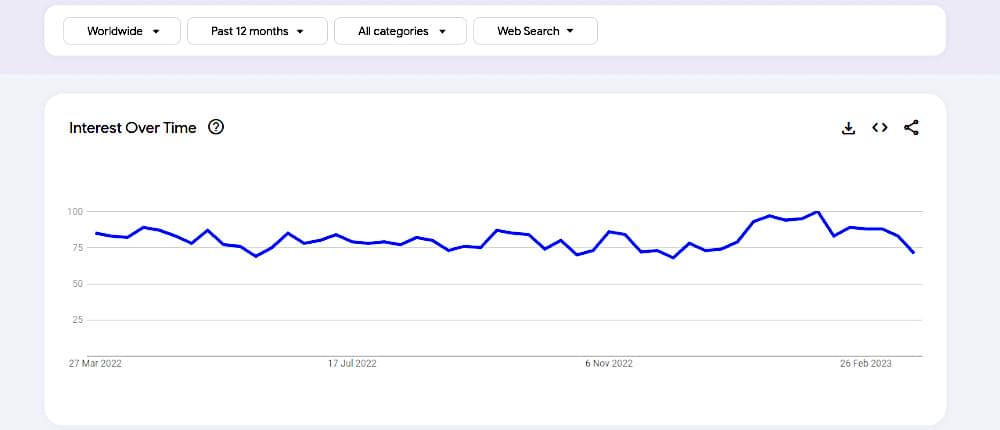

Advances in computing have given us powerful tools. Artificial intelligence can analyze billions of social media posts per day, and clustering techniques can analyze vast amounts of text within minutes. The Python Standard Library includes modules such as html.parser and csv, making web scraping and data processing more accessible for beginners and efficient for developers. These factors explain the steadily growing interest in web scraping shown by Google Trends over time.

How to Find All URLs on a Domain

Before scraping or analyzing a website, one of the first steps is to find all URLs on the domain so you know which pages to target. Those pages might be product listings, blog posts, or internal directories. Here are several methods to find all URLs on a domain:

1. Use a Website Crawling API

One of the most effective ways to find all URLs in a domain is by using a website crawler. Solutions like the Crawlbase Crawling API are designed to scan through websites and collect all available links. These crawlers automatically handle:

- JavaScript rendering

- Proxies and headers

- Link discovery across multiple levels of a site

This approach is ideal for finding all URLs across multiple domains, especially when dealing with dynamic content or paginated listings.

2. Check the Sitemap

Most websites offer a public XML sitemap that lists all important URLs they want search engines to index. You can usually find it at: https://example.com/sitemap.xml

Simply replace example.com with your target domain. You can fetch and parse this file manually or use a tool to extract all domain URLs programmatically.
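Here is a minimal sketch of the programmatic route. It uses only Python's standard library and assumes the sitemap is uncompressed XML that follows the sitemaps.org schema. The function names `parse_sitemap` and `fetch_sitemap_urls` are illustrative and not part of any library. A sitemap index (a sitemap that lists other sitemaps) is followed until `max_sitemaps` files have been read.

```python
import urllib.request
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text):
    """Return (page_urls, child_sitemap_urls) found in one sitemap document."""
    root = ET.fromstring(xml_text)
    # A <urlset> lists pages; a <sitemapindex> lists further sitemaps.
    pages = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]
    children = [loc.text.strip() for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS) if loc.text]
    return pages, children


def fetch_sitemap_urls(sitemap_url, max_sitemaps=50):
    """Follow sitemap index files and collect every page URL (needs network access)."""
    queue, seen, urls = [sitemap_url], set(), []
    while queue and len(seen) < max_sitemaps:
        current = queue.pop(0)
        if current in seen:
            continue
        seen.add(current)
        with urllib.request.urlopen(current, timeout=10) as resp:
            pages, children = parse_sitemap(resp.read())
        urls.extend(pages)
        queue.extend(children)
    return urls
```

For example, `fetch_sitemap_urls("https://example.com/sitemap.xml")` would return every page URL listed in that sitemap and in any sitemaps it points to.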

3. Use Google Search Operators

Google can offer a helpful peek into what's publicly indexed on a domain. For example, you can search: site:example.com

This returns a list of URLs from a website indexed by Google. While it’s not exhaustive, it’s useful for spotting key sections of a site, such as product pages, articles, or directories.

4. Recursive Web Scraping

If a sitemap is missing or incomplete, you can build your own recursive scraper using libraries like:

- Scrapy (Python)

- BeautifulSoup + Requests (Python)

- Puppeteer (JavaScript)

Start from the homepage, extract internal links, visit each one, and repeat the process until you’ve mapped out the whole structure of the website.
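The loop described above can be sketched with Python's standard library (`html.parser` and `urllib`) in place of Scrapy or BeautifulSoup, so it runs without extra installs. This breadth-first sketch rests on simple assumptions: pages are UTF-8 HTML, and only links on exactly the same host count as internal. The names `crawl_domain`, `fetch_html`, and `LinkParser` are hypothetical. The sketch does not execute JavaScript, so it will miss links rendered client-side.

```python
import time
import urllib.request
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_html(url):
    """Default fetcher: download a page and decode it as UTF-8 (needs network access)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl_domain(start_url, fetch=fetch_html, max_pages=100, delay=1.0):
    """Breadth-first crawl returning the sorted internal URLs reachable from start_url."""
    domain = urlparse(start_url).netloc
    queue, seen = deque([start_url]), {start_url}
    while queue:
        url = queue.popleft()
        try:
            html = fetch(url)
        except OSError:  # URLError, HTTPError and timeouts: skip this page
            continue
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page is counted once.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if (urlparse(absolute).netloc == domain
                    and absolute not in seen
                    and len(seen) < max_pages):
                seen.add(absolute)
                queue.append(absolute)
        time.sleep(delay)  # pause between requests to limit server load
    return sorted(seen)
```

Passing a custom `fetch` function keeps the crawl logic separate from the network layer. That makes it easy to swap in a different HTTP client, or a crawling API, later.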

5. Use Crawlbase to Automate the Process

Crawlbase provides a reliable and scalable method for discovering and extracting URLs. The Crawling API and Smart AI Proxy allow you to:

- Find all crawlable links from a starting point

- Bypass bot protection and captchas

- Handle dynamic, JavaScript-heavy sites

This means you can focus on extracting insights while Crawlbase handles the heavy lifting. You can also use our ready-to-use Google scraper to find all URLs on a domain.

Reminder: Always check the domain’s robots.txt file to understand what is and isn’t allowed for crawling. Be respectful of server load by applying proper delays and concurrency limits.
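Python's standard library can check these rules for you. The sketch below uses `urllib.robotparser.RobotFileParser`. The user-agent string `MyScraper` and the one-second fallback delay are illustrative assumptions, and `load_robots` and `polite_delay` are hypothetical helper names.

```python
import urllib.robotparser


def load_robots(robots_url=None, lines=None):
    """Build a robots.txt parser from a URL (fetched over the network) or from raw lines."""
    parser = urllib.robotparser.RobotFileParser()
    if lines is not None:
        parser.parse(lines)
    else:
        parser.set_url(robots_url)
        parser.read()
    return parser


def polite_delay(parser, user_agent, default=1.0):
    """Use the site's Crawl-delay for this user agent if it sets one, else a default."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


# Example with rules supplied inline instead of downloaded:
rules = ["User-agent: *", "Disallow: /private/", "Crawl-delay: 2"]
robots = load_robots(lines=rules)
print(robots.can_fetch("MyScraper", "https://example.com/blog"))          # True
print(robots.can_fetch("MyScraper", "https://example.com/private/page"))  # False
print(polite_delay(robots, "MyScraper"))                                   # 2.0
```

Against a live site you would call `load_robots("https://example.com/robots.txt")` instead. You would then check `can_fetch` before every request and sleep for `polite_delay(...)` seconds between requests.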

Advanced Web Scraping Techniques

Scientists are now harnessing AI to develop new information retrieval methods. One example is scraping data from web pages with computer vision that interprets a page the way a human would see it. Browser automation is also used to interact with dynamic content, enabling extraction from sites that rely heavily on JavaScript.

The more data a machine learning system has to work with, the better it can recognize patterns and make intelligent decisions. But gathering that data is usually time-consuming or expensive. So how can the process become easier?

Researchers are developing systems that use web searches to automatically find texts likely to contain relevant information. These systems then scrape the useful parts of those sources, including data presented in graphs or tables. Advanced web scraping frameworks can handle complex tasks such as large-scale data extraction, pagination, and crawling, making them suitable for operations well beyond simple scraping.

This approach saves time and resources while ensuring that researchers have everything they need at their fingertips! For advanced scraping scenarios, browser automation tools like Selenium are often used to extract data efficiently from complex websites.

What is Web Scraping Used for?

The Internet is a store of the world's information, whether text, media, or data in any other format. Every web page displays data in one form or another. Access to this data is crucial for the success of most businesses today. Unfortunately, most of this data is not openly available.

Web scraping is a way to collect data from websites that do not offer a way to download it. The initial output of web scraping is often raw data, which needs to be processed before use. Scraping is often the best solution for businesses and individuals who need specific product or service information. Web scraping services can be used in countless ways, so depending on your business needs, consider them when building your website.

The Internet provides quick and convenient access to many types of data, including videos, images, and articles. But what if the only way to get that data is to visit each page online? New technology has changed a lot, but some things have not caught up yet, such as how web pages handle saving content like video captures or screenshots.

Web scraping helps businesses learn crucial information about their competitors by collecting publicly available company profiles and related details, such as contact numbers. It is also useful for individuals looking for job openings across different companies. Scraped listings often include the salary range for each position, making it easier than ever to find potential employment opportunities! When scraping listings or product information, you will usually need to handle multiple pages to collect a comprehensive dataset.

Here are some of the ways you can use web scraping services in real-world scenarios:

- Price Monitoring

The e-commerce field faces intense competition, and you need a strategy to win. With web scraping, it's easier than ever for businesses to keep track of their competitors' pricing strategies.
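Here is a rough sketch of the monitoring step. It assumes you already scrape each competitor's price as text on a schedule. The Python below turns price strings into `Decimal` values and reports what changed between two snapshots. It assumes US-style formatting (comma thousands separators, dot decimals), and `parse_price` and `price_changes` are hypothetical helpers.

```python
import re
from decimal import Decimal


def parse_price(text):
    """Convert a scraped price string such as '$1,299.99' into a Decimal, or None."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    return Decimal(match.group().replace(",", "")) if match else None


def price_changes(previous, current):
    """Compare two {product: price_text} snapshots and return {product: (old, new)}."""
    changes = {}
    for product, price_text in current.items():
        old = parse_price(previous.get(product, ""))
        new = parse_price(price_text)
        if old is not None and new is not None and old != new:
            changes[product] = (old, new)
    return changes


yesterday = {"cpu": "$399.00", "gpu": "$699.99"}
today = {"cpu": "$379.00", "gpu": "$699.99", "ssd": "$89.99"}
print(price_changes(yesterday, today))  # {'cpu': (Decimal('399.00'), Decimal('379.00'))}
```

Using `Decimal` rather than `float` avoids rounding surprises when comparing currency values. Products that appear in only one snapshot (like `ssd` here) are skipped.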

- Lead Generation

Marketing is the heart of your business. That's why you need contact details for the people who want what you're offering, so you can bring them on board as customers and make more money! But how can you find all these phone numbers?

Web scraping offers many benefits here. It lets you collect large amounts of data and generate many leads with just a few clicks.

- Competitive Analysis

It's perfect for learning your competitors' strengths and weaknesses. It can automatically collect the data on their websites, so you don't have to spend time doing the research yourself!

- Fetching Images and Product Description

Most small businesses need a fast and efficient way to populate their online store with products. With the average product converting at only 8%, writing new descriptions for each one can be time-consuming and expensive.

Web scraping comes in handy here too! Use a web crawler to extract the most relevant information from retailer sites like Amazon or Target.

You'll see all sorts of benefits. You can work with the data offline in your spreadsheet program, and you save hours by eliminating manual entry, which typically introduces errors such as misspelled brand names or incorrect prices.

All it takes is a few simple commands typed on your computer. Press Enter once you're ready, and enjoy one less headache when creating content!
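To make the idea concrete, here is a minimal Python sketch that pulls a product's title, description, and image URL from a page. It then writes the results as CSV for a spreadsheet. It assumes the page exposes Open Graph `<meta property="og:...">` tags. Many retail pages do, but not all, and real sites may need site-specific selectors. `extract_product` and `products_to_csv` are hypothetical names.

```python
import csv
import io
from html.parser import HTMLParser

FIELDS = ["title", "description", "image"]


class ProductMetaParser(HTMLParser):
    """Collect Open Graph <meta property="og:..." content="..."> values."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        prop = attributes.get("property") or ""
        content = attributes.get("content")
        if prop.startswith("og:") and content is not None:
            # Keep the first value if a property appears more than once.
            self.meta.setdefault(prop[3:], content)


def extract_product(html):
    """Return title, description, and image URL; missing fields become empty strings."""
    parser = ProductMetaParser()
    parser.feed(html)
    return {field: parser.meta.get(field, "") for field in FIELDS}


def products_to_csv(products):
    """Serialize product dicts to CSV text that a spreadsheet program can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(products)
    return buffer.getvalue()
```

In practice you would call `extract_product` on each downloaded page and save the output of `products_to_csv(...)` to a `.csv` file.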

Best Web Scraping Tools in 2026

Many web scraping tools are available today, which makes choosing the right one for your business requirements challenging. To simplify your search, consider the following tools:

- Crawlbase

Thousands of companies around the world use Crawlbase as a scraping tool, including Fortune 500 enterprises. With Crawlbase Scraper, you can scrape the data you need from websites built with various languages and frameworks, such as JavaScript, Meteor, and Angular.

The Crawlbase Scraper API lets you gather data easily and receive it as an HTML file that you can analyze further. This way, you can scrape the pages you need quickly and easily.

With Crawlbase, you can develop an efficient web scraper using the most professional tool available. Besides scraping data from online sources, you can modify and use the scraped information within your system.

Features

- Keeps you safe from CAPTCHA blocks

- Helps you gather all the necessary information, such as categories and prices

- Makes real-time tracking of updates to target web pages possible through the Screenshot scraping API

- Uses dynamic algorithms and proxy servers to protect you from tracking

- Is simple to use and easy to navigate, with comprehensive documentation to guide each query

Tool Pricing

Crawlbase Scraper registration is free. We charge only a minimal fee for successful requests.

- BrightData (Formerly Luminati)

With BrightData (formerly Luminati Networks), you can access advanced features and innovative services while keeping full control over the data extraction process.

Features

- Easy to use even without a programming background.

- A straightforward framework for scraping data.

- Customer support available at all times.

- Intelligent data collection that adapts dynamically to the targeted websites.

- An open-source proxy API management system.

- Data scraping tailored to business requirements and market needs.

Tool Pricing

Pricing is dynamic and based on customer needs.

- ParseHub

Using ParseHub does not require programming knowledge. Anyone who needs data can use this tool, from data analysts, engineers, and scientists to writers and information researchers.

You can export the data in either Excel or JSON format. This tool has many useful features, including automatic IP rotation, scraping pages behind login walls, navigating dropdowns and tabs, and extracting data from tables and maps.

Moreover, the free plan lets users scrape up to 200 pages of data in 40 minutes. ParseHub offers desktop clients for Windows, macOS, and Linux, so you can run it on your computer regardless of its operating system.

Features

- Access to REST APIs for development purposes.

- Cloud-based infrastructure for automating processes.

- Information aggregation for scraping data from dynamic web sources.

- IP address rotation to avoid blocking.

- Data extraction on a customized schedule.

- Regular expressions (regex) for refining scraped data.

- Support for infinite scrolling on web pages.

- Webhook and API integrations for downloading data in Excel and JSON formats.

Tool Pricing

The Standard plan starts at $189 per month, the Professional plan costs $599 per month, and the Enterprise plan requires a quote. A free plan is also available. It provides data from 200 web pages in approximately 40 minutes and supports 5 public projects.

- Octoparse

With Octoparse, you can extract data from websites without coding, and its user-friendly interface makes data extraction easier. Octoparse's point-and-click screen scraping feature lets users scrape web pages that involve interaction, such as fill-in forms and login forms. For users who want to run scrapers in the cloud, Octoparse also offers a hosted solution.

Octoparse's free tier lets users build ten crawlers. Paid packages add fully customized, managed crawlers that deliver more accurate data automatically.

Features

- Runs scrapers in the cloud, with a site parser for parsing websites.

- Scrapes data professionally while avoiding blocks and restrictions set by site owners.

- Lets users scrape different pages of a website with the point-and-click screen scraper.

Tool Pricing

The free plan offers only a limited set of features. Paid plans start at $89 per month for the Standard plan and $249 per month for the Professional plan.

Considerations When Selecting Web Scraping Tools

Internet data is mostly unstructured. To extract meaningful insight from it, we must have systems in place.

Web scraping can be extremely resource-intensive, so it is best to start with the right tools. Before choosing a web scraping tool, keep the following factors in mind.

- Scalability

Your scraping needs will grow over time, so you need a scalable tool. The tool you choose must handle increasing data demands without slowing down.

- A Clear & Transparent Pricing Structure

Transparency is essential in the pricing structure of the tool you choose. The pricing should spell out every detail so that no hidden costs appear later. Look for a provider with a transparent model that doesn't mislead you about its features.

- Delivery of Data

To be on the safe side, choose a crawler that can deliver data in various formats. For example, you may want to narrow your search to crawlers that can deliver data in JSON format.

Sometimes you may have to deliver data in a format you are not familiar with. Versatility in data delivery ensures you are never caught out. Ideally, the tool should deliver XML, JSON, and CSV data via FTP, Google Cloud Storage, Dropbox, and similar services.
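If a tool only gives you one format, converting is usually simple. Here is a minimal sketch that uses Python's standard library to serialize the same scraped records as JSON and as XML. It assumes each record is a flat dictionary whose keys are valid XML tag names. `to_json` and `to_xml` are illustrative names, and CSV can be produced the same way with the `csv` module.

```python
import json
import xml.etree.ElementTree as ET


def to_json(records):
    """Serialize a list of flat dicts as pretty-printed JSON."""
    return json.dumps(records, indent=2, ensure_ascii=False)


def to_xml(records, root_tag="items", item_tag="item"):
    """Serialize a list of flat dicts as XML, one child element per field."""
    root = ET.Element(root_tag)
    for record in records:
        item = ET.SubElement(root, item_tag)
        for key, value in record.items():
            ET.SubElement(item, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


records = [{"name": "Widget", "price": "9.99"}]
print(to_xml(records))  # <items><item><name>Widget</name><price>9.99</price></item></items>
```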

- Managing Anti-Scraping Mechanisms

Many websites use anti-scraping measures. If you feel you've hit a wall, simple modifications to the crawler can often get you past them. Look for a web crawler with an efficient mechanism for overcoming these roadblocks.

- Customer Service

A good tool comes with good customer support, and the provider should treat it as a top priority. With excellent customer service, you won't have to worry if something goes wrong.

With good customer support, you can say goodbye to waiting for satisfactory answers and the frustration that comes with it. Before making a purchase, test how quickly the customer support team responds.

- Data Quality

Data scraped from the Internet is unstructured, so you must clean and organize it before you can use it. Look for a web scraping provider that offers the tools you need for this. Keep in mind that the quality of your data directly affects the quality of your analysis.
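A first cleaning pass can be quite small. The sketch below normalizes Unicode, collapses stray whitespace, and drops duplicate records. It assumes records are dictionaries and that a chosen set of fields (here `name`) identifies a duplicate. `clean_text` and `clean_records` are hypothetical helper names.

```python
import re
import unicodedata


def clean_text(value):
    """Normalize Unicode (e.g. non-breaking spaces), collapse whitespace, and trim."""
    value = unicodedata.normalize("NFKC", value)
    return re.sub(r"\s+", " ", value).strip()


def clean_records(records, key_fields):
    """Clean every string field and keep only the first record for each key."""
    seen, cleaned = set(), []
    for record in records:
        row = {k: clean_text(v) if isinstance(v, str) else v for k, v in record.items()}
        key = tuple(row.get(field) for field in key_fields)
        if key in seen:
            continue  # duplicate after cleaning
        seen.add(key)
        cleaned.append(row)
    return cleaned


raw = [
    {"name": "  Widget\n Pro ", "price": "9.99"},
    {"name": "Widget Pro", "price": "9.99"},
    {"name": "Gadget\u00a0X", "price": "19.99"},
]
print(clean_records(raw, ["name"]))
```

Deduplicating after cleaning matters: `"  Widget\n Pro "` and `"Widget Pro"` only match once whitespace has been normalized.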

Is Web Scraping Legal?

Web scraping is a process for extracting data from web pages. Its legality and ethics depend largely on how you plan to use the information you collect.

One way to avoid violating copyright laws is to do your research and request permission before you publish any data. This may seem straightforward, but staying within the law requires weighing several important factors. For example, businesses operating as a California LLC should be mindful of the California Consumer Privacy Act (CCPA), which places legal obligations on how personal data is collected and used. Regulations like this can affect what data can be scraped and whether user consent is required.

For example, checking whether a public website has a specific privacy policy can help you decide whether scraping it is appropriate or should be ruled out altogether.

You must also consider how much personal information you can gather from specific sites without infringing on individual rights. Sensitive data likely requires consent before you collect it. Examples include bank details used for credit checks during job interviews and medical records reviewed during fraud investigations.

Top Web Scraping Tips & Best Practices

Scraping websites is a great way to collect data, and businesses often use it for research and product development. Doing it well, however, can be an art.

Here are some of the best web scraping tips and practices:

- Respect the website, its creators, and its users

- Detect blocking when it occurs

- Avoid sending too many requests at once

- Continuously parse and verify extracted data

- Check whether the website offers an API

- Rotate IP addresses and proxy servers to avoid request throttling

- Respect robots.txt

- Make your browser fingerprint less unique

- Use headless browsers

- Choose your tools wisely

- Build web crawlers
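Two of these practices, avoiding too many requests at once and detecting blocking, can be sketched in Python with the standard library. Several values are assumptions to tune per site: the minimum interval, the status codes treated as signs of blocking (403, 429, 503), the CAPTCHA keyword check, and the user-agent string. `RateLimiter`, `looks_blocked`, and `polite_get` are hypothetical names.

```python
import time
import urllib.error
import urllib.request

# Status codes that often (not always) indicate throttling or blocking.
BLOCK_STATUSES = {403, 429, 503}


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self.last = None

    def wait(self):
        if self.last is not None:
            remaining = self.min_interval - (self.clock() - self.last)
            if remaining > 0:
                self.sleep(remaining)
        self.last = self.clock()


def looks_blocked(status, body):
    """Heuristic: a blocking status code or a CAPTCHA mention in the page."""
    return status in BLOCK_STATUSES or "captcha" in body.lower()


def polite_get(url, limiter, user_agent="example-scraper/0.1"):
    """Fetch url after waiting our turn; raise if the response looks like a block."""
    limiter.wait()
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=10) as resp:
            status = resp.status
            body = resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        status, body = err.code, ""
    if looks_blocked(status, body):
        raise RuntimeError(f"Possible block at {url} (HTTP {status}); slow down before retrying")
    return body
```

Creating `limiter = RateLimiter(2.0)` and reusing it for every `polite_get(url, limiter)` call keeps requests at least two seconds apart. The `clock` and `sleep` parameters let you test the limiter without real waiting.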

How to Web Scrape Information from Websites

There are two methods of web scraping. These are:

Scraping web data with ready-made web scraping tools: web scraping programs are built to extract data from web pages. A web scraper is usually a software program that copies parts of a web page and stores them on another device, such as your computer or mobile phone.

Some companies prefer a customized web scraper, sometimes built through nearshore software outsourcing, that is tailored to their specific needs and data requirements. Such scrapers follow their own sets of instructions to extract content like text, images, and PDFs from specific sites or regions. The results can then be stored in databases, folders on local hard drives, cloud storage services, and other digital media. If you decide to invest in one, consider using IT procurement services to help you choose a web scraping tool that aligns with your needs, budget, and compliance requirements.

Web scraping via programming languages: in this method, the user writes code (commonly in Python or JavaScript) to parse web pages, find the pieces of data they want, and sort them into an organized list or table.

How to Use Crawlbase for Web Scraping

Crawlbase provides business developers with a one-stop data scraping and crawling platform that doesn't require you to log in. It helps you bypass blocks and CAPTCHAs so that data flows smoothly back to your databases!

With Crawlbase, you don't need to manage your own browsers, infrastructure, or proxies to scrape high-quality data. It lets companies and developers anonymously extract small- and large-scale data from websites across the Internet.

Crawlbase scrapes pages quickly using its proprietary scraping technology. It works with any website without you having to worry about constraints such as hard drive space or server load times.

The Crawlbase solution handles CAPTCHAs and prevents your requests from being blocked. The app currently gives new users 1,000 free requests. Applications can start crawling websites immediately and collect data from well-known sites within minutes, including LinkedIn, Facebook, Yahoo, Google, Amazon, Glassdoor, Quora, and many more!

A simple scraper written in Python may not be enough without proxies. If you plan to crawl and scrape a specific website but aren't sure which programming language to use, Python is the best place to start. However, web scraping can be tricky because some websites block requests or even ban your IP. To scrape data on the Web reliably, you can use the Crawlbase Crawling API, which lets you scrape most websites while avoiding blocked requests and CAPTCHAs.

Web Scraping with Python

You will need your Crawlbase token, which serves as your authentication key for the Crawling API service.

To get started, install the library we will be using. In your computer's console, run the following command:

```bash
pip install crawlbase
```

Once everything is set up, it's time to write some code. First, import the Crawlbase API:

```python
from crawlbase import CrawlingAPI
```

Enter your authentication token and initialize the API:

```python
api = CrawlingAPI({'token': 'USER_TOKEN'})
```

After that, get the URL of your target website or any site you want to scrape. As an example, we will use Amazon in this guide.

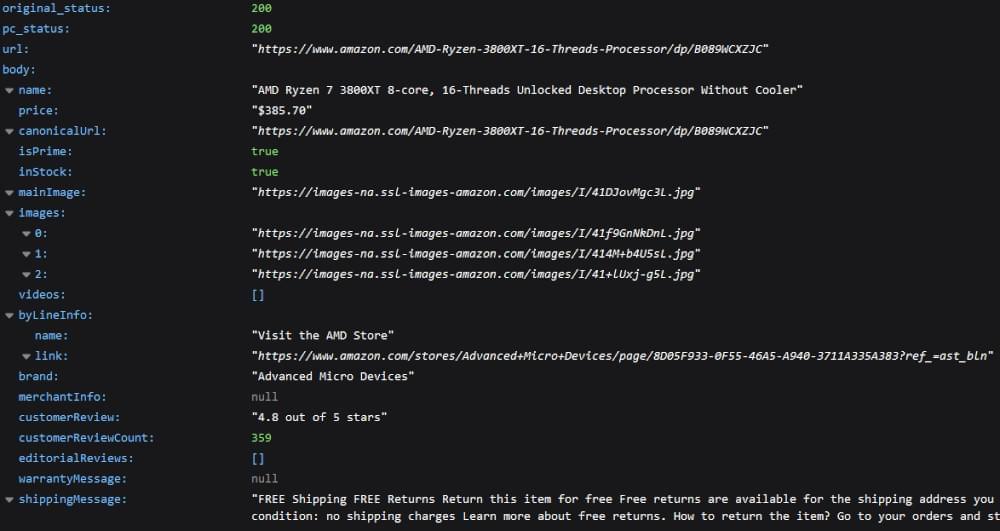

```python
targetURL = 'https://www.amazon.com/AMD-Ryzen-3800XT-16-Threads-Processor/dp/B089WCXZJC'
```

Next, we fetch the full HTML source code of the URL:

```python
response = api.get(targetURL)
```

Crawlbase returns a response for every request. You can only view the crawled HTML if the status is 200 (success). Any other status, such as 503 or 404, means the crawl has failed. The API uses thousands of proxies around the world, so the results should be as accurate as possible.
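A non-200 status means the crawl failed, so it can help to retry transient failures with a growing pause. Here is a minimal sketch. It assumes the response is a dictionary with a `status_code` key, as in the Crawlbase Python library's README examples. `get_with_retries` and the set of retryable codes are our own illustrative choices, not part of the library.

```python
import time

# Statuses worth retrying; a 404 will not fix itself, so it fails immediately.
RETRYABLE = {429, 500, 502, 503, 504}


def get_with_retries(get, url, attempts=3, backoff=2.0, sleep=time.sleep):
    """Call get(url) until it returns status 200, doubling the wait after each failure."""
    status = None
    for attempt in range(attempts):
        response = get(url)
        status = response.get("status_code")
        if status == 200:
            return response
        if status not in RETRYABLE or attempt == attempts - 1:
            break
        sleep(backoff * 2 ** attempt)  # waits 2s, then 4s, ... with the defaults
    raise RuntimeError(f"Request to {url} failed with status {status}")
```

With the client created earlier, you would call it as `get_with_retries(api.get, targetURL)`.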

We have now built a crawler, but what we need is a scraper. To scrape a website, we will use the method that returns parsed data in JSON format. The Crawling API includes built-in data scrapers for supported sites, and Amazon is one of them.

We pass the data scraper as a parameter in our GET request. The complete script starts with the same import:

```python
from crawlbase import CrawlingAPI
```
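Putting the steps together, a minimal sketch of the complete script might look like the following. Two parts are assumptions rather than details confirmed by this guide. The first is the scraper name `amazon-product-details`, so check Crawlbase's list of data scrapers for the current value. The second is that, with a scraper selected, the response `body` holds JSON text. `parse_scraper_response` and `main` are our own helper names.

```python
import json


def parse_scraper_response(response):
    """Return the JSON payload from a Crawling API response dict, or None if it failed."""
    if response.get("status_code") != 200:
        return None
    body = response.get("body", b"")
    if isinstance(body, bytes):  # handle both bytes and str bodies
        body = body.decode("utf-8")
    return json.loads(body)


def main():
    # Imported here so parse_scraper_response() can be reused without the package installed.
    from crawlbase import CrawlingAPI

    api = CrawlingAPI({'token': 'USER_TOKEN'})  # replace with your Crawlbase token
    targetURL = 'https://www.amazon.com/AMD-Ryzen-3800XT-16-Threads-Processor/dp/B089WCXZJC'
    # ASSUMPTION: scraper name; confirm it against Crawlbase's current documentation.
    response = api.get(targetURL, {'scraper': 'amazon-product-details'})
    data = parse_scraper_response(response)
    if data is None:
        print("Request failed with status", response.get("status_code"))
    else:
        print(json.dumps(data, indent=2))
```

Install the package, replace `USER_TOKEN` with your token, and call `main()` to run the request.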

You will receive a response similar to the following:

Web Scraping Examples

Here are a few examples of how you can use web scraping:

- Scraping of Real Estate Listings

Many real estate agents extract data to build databases of properties available for sale or rent.

Real estate agencies, for instance, scrape MLS listings to build APIs that automatically populate their websites with this information. When someone finds these listings on an agency's site, the agency acts as the agent for the property. Most listings on real estate websites are generated by an API.

- SEO (Search Engine Optimization)

Only some businesses consider web scraping for SEO, yet collecting the right data can increase your visibility on search engines. It can help you find keywords and backlink opportunities.

Scraping SERPs helps you find backlink opportunities, research competitors, and discover influencers!

- Lead Generation

Lead generation is one of the most popular uses of web scraping. Many companies use web scraping to collect contact information about potential clients or customers. This is especially common in the B2B space, where potential customers publicly disclose their business information online.

Final Thoughts

Web scraping is a powerful tool that can help you find valuable information on the Internet.

It is used for marketing, research, and more to understand what your customers are looking for online. But how do you scrape data from websites?

The best way is with Crawlbase, which scrapes web pages through proxy servers so that it looks like multiple users are visiting the site at once.

You don't need much programming experience, because Crawlbase handles all of this automatically behind the scenes! Get started today with our free trial, or keep learning about web scraping here so it becomes second nature when you start working with us.

Frequently Asked Questions (FAQs)

How Can I Find All URLs on a Domain?

You can find all URLs on a domain by using website scrapers like Crawlbase, checking the XML sitemap, or recursively scraping internal links. Learn more in our full guide.