Python has been around for more than 30 years and is now one of the most popular programming languages. It is a general-purpose, object-oriented, interpreted language, which means that many errors, such as type errors, only surface at runtime. Python is also open-source and high-level, and it is used for a wide array of tasks including web development, artificial intelligence, big data, scripting, and more.

You do not need years of experience to start using Python. It is considerably easy to comprehend, which is why many software engineers recommend it as a starting point if you wish to learn to code. Developed with the user experience in mind, Python produces code that programmers often find simpler to read, and it lets them accomplish tasks with fewer lines of code than other languages.

This article will explore the fundamentals of web scraping with Python and how developers can take advantage of essential techniques and tools for efficient website data extraction.

Java

    public class Sample {

Python

    print("Hello World!")

Why use Python for Web Scraping?

So, if you are planning to crawl and scrape a certain website but don’t know what programming language to use, scraping with Python is the best way to start. If you are still not convinced, here are some of the key features that make Python preferable for web scraping:

- More Tasks with Less Code: We cannot stress this enough. Writing code in Python is much simpler, and if you are looking to scrape large amounts of data, you do not want to spend extra time writing the scraper itself. With Python, you can do more with less.

- Community Support: Since Python is popular and widely considered a dependable language for scraping, you can easily get help from thousands of community members on forums and most social media platforms if you encounter any technical issues.

- A Multitude of Libraries: Python has a large selection of libraries, many of them built for web scraping, including Selenium, BeautifulSoup, and of course, Crawlbase.

- Dynamic Typing: Python allows you to use variables without specifying their data types, saving time and boosting efficiency in your tasks.

- Clear and Understandable Syntax: Python’s syntax is easily comprehensible due to its similarity to English statements. The code is expressive and readable, and the indentation helps distinguish between different code blocks or scopes effectively.
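As a small illustration of the last two points, the sketch below uses made-up product data (not taken from any real site) to show a name being rebound to a value of a different type, and indentation marking which statements belong to a loop and a conditional.

```python
# Dynamic typing: the same name can refer to values of different types.
price = "449.99"      # starts as a string, like text scraped from a page
price = float(price)  # now refers to a float; no type declaration needed

# Hypothetical sample data, used only for illustration.
products = [
    {"name": "CPU", "price": 449.99},
    {"name": "Cooler", "price": 39.50},
    {"name": "Thermal paste", "price": 7.25},
]

# Indentation alone marks which statements belong to the loop and the if-block.
affordable = []
for product in products:
    if product["price"] < 50:
        affordable.append(product["name"])

print(affordable)
```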

However, web scraping in Python can be tricky at times since some websites can block your requests or even ban your IP. Writing a simple web scraper in Python may not be enough without using proxies. So, to easily scrape a website using Python, you will need Crawlbase’s Crawling API which lets you avoid blocked requests and CAPTCHAs.

Scraping Websites with Python using Crawlbase

Now that we have provided you with some of the reasons why you should build a web scraper in Python with Crawlbase, let us continue with a guide on how to scrape a website using Python and build your scraping tool.

First, here are the prerequisites for our simple scraping tool:

- Crawlbase account

- PyCharm or any code editor that you prefer

- Python 3.x

- Crawlbase Python Library

Make sure to take note of your Crawlbase token, which will serve as your authentication key for the Crawling API service.

Let us start by installing the library that we will use for this project. You can run the following command on your console:

    pip install crawlbase

Once everything is set, it is now time to write some code. First, import the Crawlbase API:

    from crawlbase import CrawlingAPI

Then initialize the API and enter your authentication token:

    api = CrawlingAPI({'token': 'USER_TOKEN'})

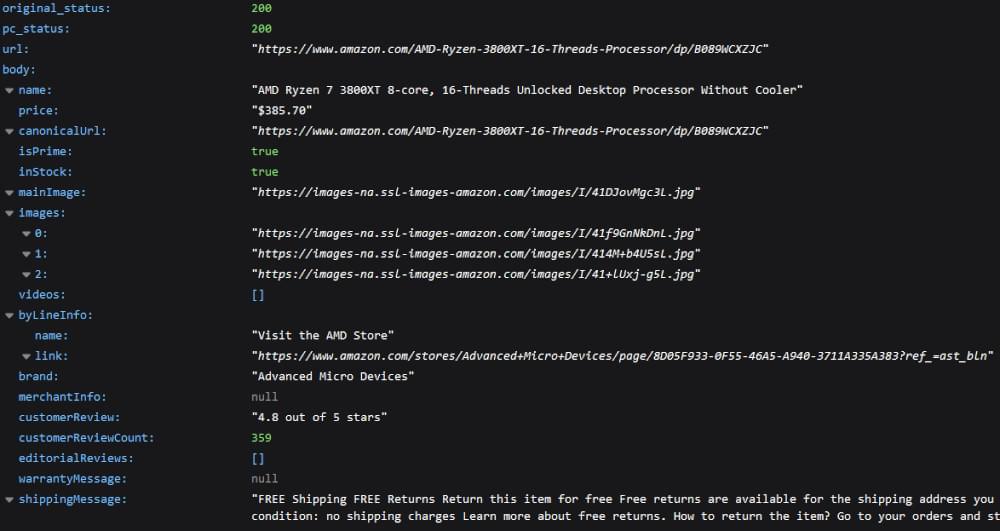

After that, get your target URL or any website that you would like to scrape. For this guide, we will use Amazon as an example.

    targetURL = 'https://www.amazon.com/AMD-Ryzen-3800XT-16-Threads-Processor/dp/B089WCXZJC'

The next part of our code will allow us to download the full HTML source code of the URL and if successful, will display the result on your console or terminal:

    response = api.get(targetURL)

As you can see, every request to Crawlbase comes with a response. Our code will only show you the crawled HTML if the status is 200, meaning success. Any other status, such as 503 or 404, means that the crawl failed. However, the API uses thousands of proxies worldwide, which should deliver the best data results possible.
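The status check described here can be sketched as a small helper. This is a minimal sketch, assuming the response is a dictionary exposing `status_code` and `body`; the function name `fetch_html` is hypothetical and not part of the Crawlbase library.

```python
def fetch_html(api, url):
    """Return the page HTML if the crawl succeeded, otherwise None.

    `api` is any object with a get(url) method that returns a dict with
    'status_code' and 'body' keys (assumed to match the CrawlingAPI client).
    """
    response = api.get(url)
    if response['status_code'] == 200:
        return response['body']
    # Any other status (for example 404 or 503) means the crawl failed.
    print('Crawl failed with status', response['status_code'])
    return None
```

With the client created earlier, this would be called as `html = fetch_html(api, targetURL)`, followed by `print(html)` when the result is not `None`.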

Now, we have successfully built a crawler. But what we want is a scraper tool, right? So, we will use the most convenient way to scrape a website that will return parsed data in JSON format.

One great feature of the Crawling API is that we can use the built-in data scrapers for supported sites, and luckily, Amazon is one of them.

To use the data scraper, simply pass it as a parameter on our GET request. Our full code should now look like this:

    from crawlbase import CrawlingAPI
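One way the scraper request might be organized is sketched below. It rests on several assumptions: that `api.get` accepts an options dictionary as a second argument, that the option is named `scraper`, and that the Amazon scraper is called `amazon-product-details`. Check the Crawling API documentation for the exact names. The helper `scrape_product` is hypothetical.

```python
import json


def scrape_product(api, url, scraper_name='amazon-product-details'):
    """Request parsed data for `url` and return it as a Python object.

    Assumptions: `api.get` takes an options dict as its second argument,
    and 'scraper' / 'amazon-product-details' are the option and scraper
    names. Verify both against the Crawling API documentation.
    """
    response = api.get(url, {'scraper': scraper_name})
    if response['status_code'] != 200:
        return None
    # With a data scraper enabled, the body is expected to be JSON text.
    return json.loads(response['body'])
```

With the real client, the call would be `data = scrape_product(api, targetURL)`. Returning `None` on failure keeps the caller's check simple.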

If everything goes well, the response body will contain the parsed product data in JSON format.

Building Python Web Scrapers with BeautifulSoup and Crawlbase

Now, what if you want to get more specific data, say, just the product name and price? As mentioned earlier, Python has a large collection of libraries, including some that are specifically meant for scraping. BeautifulSoup is one of them and is a popular Python package for parsing HTML and XML data. It is also simple to use, which makes it a good fit for beginners.

So, let us go ahead and try building a simple web scraper in Python using the Crawling API and BeautifulSoup this time. Since we are using Python 3.x, let us install the latest BeautifulSoup package, which is simply called BS4:

    pip install beautifulsoup4

Since we have already installed the Crawlbase library earlier, you can just create a new Python file and import both BS4 and Crawlbase.

    from bs4 import BeautifulSoup
    from crawlbase import CrawlingAPI

Then, same as before, initialize the API and use a GET request to crawl your target URL:

    api = CrawlingAPI({'token': 'USER_TOKEN'})

In this example, we will try to get the product name and price from an Amazon product page. The easiest way to do this is to use the find method and pass in arguments that identify the specific elements we need. To learn more about how to select a specific HTML element, you may check the BeautifulSoup documentation.

    b_soup = BeautifulSoup(response['body'], 'html.parser')  # parse the crawled HTML
    product_name = b_soup.find('span', class_='a-size-large product-title-word-break').text

After that, we simply need to write a command to print the output.

    print('name:', product_name)

The full code should now look like this:

    from bs4 import BeautifulSoup
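The parsing step can also be tried offline before wiring it to the API. The sketch below is a minimal example under stated assumptions: the HTML snippet and the function name `extract_product_name` are hypothetical, and the tag and class come from the article and may not match Amazon's current markup. Because `find` returns `None` when nothing matches, the function checks for that instead of calling `.text` directly.

```python
from bs4 import BeautifulSoup


def extract_product_name(html):
    """Return the product title text, or None if the element is missing."""
    b_soup = BeautifulSoup(html, 'html.parser')
    # Same tag and class as in the article; the site's markup may change.
    tag = b_soup.find('span', class_='a-size-large product-title-word-break')
    return tag.get_text(strip=True) if tag else None


# Hypothetical HTML snippet, used only to exercise the function offline.
sample_html = '''
<html><body>
  <span class="a-size-large product-title-word-break">
    Example Product Name
  </span>
</body></html>
'''

print('name:', extract_product_name(sample_html))
```

In the real script, `sample_html` would be replaced by `response['body']` from a successful crawl.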

Sample output:

sample-output.jpg

Best Python Web Scraping Libraries

For web scraping with Python, there are specific libraries for various tasks:

- Selenium: It serves as a web testing tool, automating browser tasks efficiently. You can install Selenium with the following pip command:

    pip install selenium

- BeautifulSoup: This Python library parses HTML and XML documents, generating parse trees for simplified data extraction. You can install Beautiful Soup with the following pip command:

    pip install beautifulsoup4

- Pandas: It is utilized for data manipulation and analysis. Pandas facilitates data extraction and storage in preferred formats. You can install Pandas with the following pip command:

    pip install pandas

Is Web Scraping Legal or Not?

When considering the legality of web scraping with Python, it’s important to note that permissions for scraping vary across websites. To determine a website’s stance on web scraping, you can refer to its “robots.txt” file. Access this file by adding “/robots.txt” to the URL you want to scrape.
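Checking a URL against robots.txt rules can be automated with Python's standard library. This is a minimal sketch: the rules, the user agent name `my-scraper`, and the helper `is_allowed` are all hypothetical, and the rules are passed in as text so the example runs without network access.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Check a URL against robots.txt rules supplied as text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules, used only for illustration.
sample_rules = """\
User-agent: *
Disallow: /private/
"""

print(is_allowed(sample_rules, 'my-scraper', 'https://example.com/products'))
print(is_allowed(sample_rules, 'my-scraper', 'https://example.com/private/data'))
```

Against a live site, `RobotFileParser` can fetch the file itself through `set_url()` followed by `read()`.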

While the act of scraping itself might be lawful, the data extracted could have legal restrictions on its use. Make sure that you are not infringing on:

- Copyrighted material: This is data protected by intellectual property laws. Using such content without authorization can be illegal.

- Personal information: Data that can identify individuals falls under privacy regulations such as the GDPR, which protects people in the EU. Without lawful grounds to store this data, it’s best to avoid collecting it.

In general, always review a website’s terms and conditions before scraping to ensure compliance with their policies. When uncertain, consider reaching out to the site owner to seek explicit consent before proceeding. Being aware of these legal aspects helps navigate web scraping with Python responsibly and within legal boundaries.

Web Scraping Challenges

For web scraping with Python, be prepared for a few challenges along the way. The internet is filled with diverse technologies and styles and is constantly evolving, making it a bit chaotic to scrape.

- Website Diversity: Each website is unique, demanding customized approaches for effective data extraction despite recurring patterns.

- Dynamic Nature: Websites undergo constant changes. Your Python web scraper might work flawlessly at first, but as websites evolve, it can encounter errors or struggle to navigate new structures.

- Adaptability: Despite changes, most website alterations are gradual. Updating your scraper with minor adjustments is often enough to accommodate these changes.

- Ongoing Maintenance: Due to the ever-evolving internet structure, expect that your scrapers will require regular updates and adjustments to remain functional and accurate.

Python Web Scraping Bonus Tips

When you’re scraping data with a Python web scraper, consider these helpful tips and tricks:

- Time Your Requests: Sending too many requests in a short time can trigger CAPTCHAs or even get your IP blocked. To avoid this, introduce delays between requests to create a more natural traffic flow. Python cannot bypass rate limits on its own, but spacing out requests reduces the chance of blocks or CAPTCHAs.

- Error Handling: When you scrape a website using Python, you should know that websites are dynamic and their structure can change unexpectedly. Implement error handling, like using the try-except syntax, especially if you frequently use the same web scraper. This approach proves beneficial when waiting for elements, extracting data, or making requests, helping manage changes in the website structure effectively.
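Both tips can be combined in one loop. The sketch below is a minimal example, assuming `fetch` is whatever callable your scraper uses to download a page; `fetch_all`, `delay_seconds`, and the injectable `sleep` argument are hypothetical names chosen for illustration.

```python
import time


def fetch_all(urls, fetch, delay_seconds=2.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing between requests and skipping failures.

    `fetch` is any callable that takes a URL and returns page content; it is
    a placeholder for whatever request function the scraper uses.
    """
    results = {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # space out requests to avoid bursts
        try:
            results[url] = fetch(url)
        except Exception as error:  # broad on purpose for this sketch
            # Record the failure and keep going instead of crashing mid-run.
            print(f'Skipping {url}: {error}')
            results[url] = None
    return results
```

Passing `sleep` as an argument keeps the delay easy to replace in tests. In a real scraper, catch narrower exception types that match the request library in use.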

Five Fun Facts About Python Web Scraping

Getting data from websites using Python is a handy skill, but there’s more to it than just its practical uses. Here are five interesting tidbits about Python web scraping that might catch you off guard, whether you’re just starting or you’ve been coding for years:

- Web Scraping Came Before the Web Took Off

Web scraping has its origins in the internet’s early days even before websites became commonplace. The first “scrapers” were early web crawlers such as World Wide Web Wanderer (created in 1993). These crawlers aimed to index the growing number of websites. In today’s world, Python’s BeautifulSoup and Scrapy libraries enable you to build modern web scrapers with minimal code. However, this technique has deep historical roots.

- Python’s BeautifulSoup Was Almost Named ‘CuteHTML’

The Python library BeautifulSoup, a standard tool for web scraping, has a fascinating origin story behind its name. Leonard Richardson, the creator, picked “Beautiful Soup” as a nod to a poem in Alice’s Adventures in Wonderland by Lewis Carroll. Before settling on BeautifulSoup, he thought about names like “CuteHTML”. The final choice reflects the library’s capability to “tidy up” cluttered HTML similar to making a delicious soup from tangled noodles.

- It’s Used in Competitive Data Science

Web scraping is not just about grabbing product prices or pulling out content; it is also a crucial skill in competitive data science. Many people who take part in data science contests (like Kaggle) use web scraping to gather extra data to boost their models. Whether they’re collecting news stories, social media updates, or open datasets, web scraping can give contestants a leg up in building more robust models.

- Web Scraping Has an Impact on AI Training

Web scraping isn’t just about gathering static data; it also has an impact on training AI models. The vast datasets needed to train machine learning and natural language processing models often come from scraping content available to the public, like social media platforms, blogs, or news sites. For example, large language models (such as GPT) learn from massive amounts of scraped web content, which allows them to produce text that sounds human-like.

- Many Sites Have ‘Scraping Shields’, and Python Can Get Around Them

Web scraping can be tricky because many sites have built-in “shields” to stop bots from gathering their data. These shields include CAPTCHAs, anti-bot systems, and content rendered by JavaScript. Python, though, has libraries like Selenium that let scrapers interact with websites as if they were actual users. By controlling a real web browser and mimicking human behavior, a scraper can load JavaScript-rendered content and is less likely to trigger these obstacles.

Conclusion

As simple as that. With just 12 lines of code, our Python web scraper tool is complete and is now ready to use. Of course, you can utilize what you have learned here however you want and it will provide all sorts of data already parsed. With the help of the Crawling API, you won’t need to worry about website blocks or CAPTCHAs, so you can focus on what is important for your project or business.

Remember that this is just a very basic scraping tool. A Python web scraper can be used in various ways and on a much larger scale. Go ahead and experiment with different applications and modules. Perhaps you may want to search and download Google images, monitor product pricing on shopping sites for changes every day, or even provide services to your clients that require data extraction.

The possibilities are endless, and using Crawlbase’s crawling and scraping API will ensure that your Python web scraper tool will stay effective and reliable at all times.