Google Finance is a valuable resource for real-time financial data, which is crucial for investors and analysts. Offering everything from stock quotes to market news, it serves as a go-to platform for tracking financial markets.

With millions of users accessing its features daily, Google Finance has become an indispensable tool for those seeking to stay informed about the latest developments in the financial world. However, manually monitoring and collecting data from Google Finance can be time-consuming and inefficient, especially for those dealing with large datasets or requiring frequent updates. This is where web scraping comes into play: Crawlbase offers a streamlined solution for automating the extraction of data from websites like Google Finance.

In this guide, we’ll walk through scraping Google Finance with Python. We’ll cover project setup and data extraction techniques, then look at advanced methods for overcoming the limitations of direct scraping. Let’s start!

Table of Contents

- Environment Setup for Scraping Google Finance

- Scraping Google Finance Prices

- Scraping Google Finance Stock Price Change (%)

- Scraping Google Finance Stock Title

- Scraping Google Finance Stock Description

- Complete Code

- Limitations of Direct Scraping

- Overcoming Limitations with Crawlbase Crawling API

- Final Thoughts

- Frequently Asked Questions

Why Scrape Google Finance?

Scraping Google Finance offers numerous benefits for investors, analysts, and financial enthusiasts. By automating the extraction of data from Google Finance, users gain access to real-time financial information, including stock quotes, market news, and historical data. This data can be invaluable for making informed investment decisions, tracking market trends, and conducting financial analysis.

Additionally, scraping Google Finance allows users to gather large volumes of data quickly and efficiently, saving time and effort compared to manual data collection methods.

Furthermore, by scraping Google Finance, users can customize the data they collect to suit their specific needs, whether it’s monitoring specific stocks, tracking market indices, analyzing sector performance, or gathering data related to CTR banking for compliance and risk analysis.

Overall, scraping Google Finance empowers users with the information they need to stay informed about the financial markets and make data-driven decisions.

What Data Does Google Finance Offer?

Real-time Stock Quotes: Google Finance provides up-to-date stock prices for various publicly traded companies, allowing users to monitor changes in stock prices throughout the trading day.

Market News: The platform offers news articles and updates related to financial markets, including company announcements, economic indicators, and industry developments, helping users stay informed about market trends and events.

Financial Metrics: Users can access key financial metrics such as market capitalization, earnings per share (EPS), price-to-earnings (P/E) ratio, and dividend yield for individual stocks, enabling them to evaluate the financial health and performance of companies.

Historical Data: Google Finance lets users view historical stock price data, including price movements over different time periods, which supports historical analysis and trend identification.

Stock Charts: The platform offers interactive stock charts with customizable timeframes and technical indicators, enabling users to visualize and analyze stock price movements effectively.

Company Profiles: Users can access comprehensive profiles for individual companies, including business descriptions, financial highlights, executive leadership, and contact information, providing valuable insights into companies’ operations and performance.

How to Scrape Google Finance in Python

Let’s start scraping Google Finance by setting up the Python environment and installing the necessary libraries.

Step 1: Environment Setup for Scraping Google Finance

Before you dive into scraping Google Finance, it’s essential to set up your environment properly. Let’s go through the steps:

Python Setup: Firstly, make sure Python is installed on your computer. You can check this by opening your terminal or command prompt and typing:

    python --version

If you don’t have Python installed, you can download and install the latest version from the official Python website.

Creating Environment: It’s a good idea to create a virtual environment to manage your project dependencies. Navigate to your project directory in the terminal and run:

    python -m venv google_finance_env

Once the virtual environment is created, you can activate it using the appropriate command for your operating system:

- On Windows:

    google_finance_env\Scripts\activate

- On macOS/Linux:

    source google_finance_env/bin/activate

Installing Libraries: With the virtual environment activated, install the necessary Python libraries for web scraping:

    pip install requests beautifulsoup4

Choosing IDE: Selecting the right Integrated Development Environment (IDE) can make your coding experience smoother. Consider popular options such as PyCharm, Visual Studio Code, or Jupyter Notebook. Install your preferred IDE and configure it to work with Python.

Once you’ve completed these steps, you’ll be all set to start scraping data from Google Finance.

Now that we have our project set up, let’s dive into extracting valuable data from Google Finance. We’ll cover four key pieces of information: collecting prices, getting the stock price change in percentage, retrieving the stock title, and extracting the stock description.

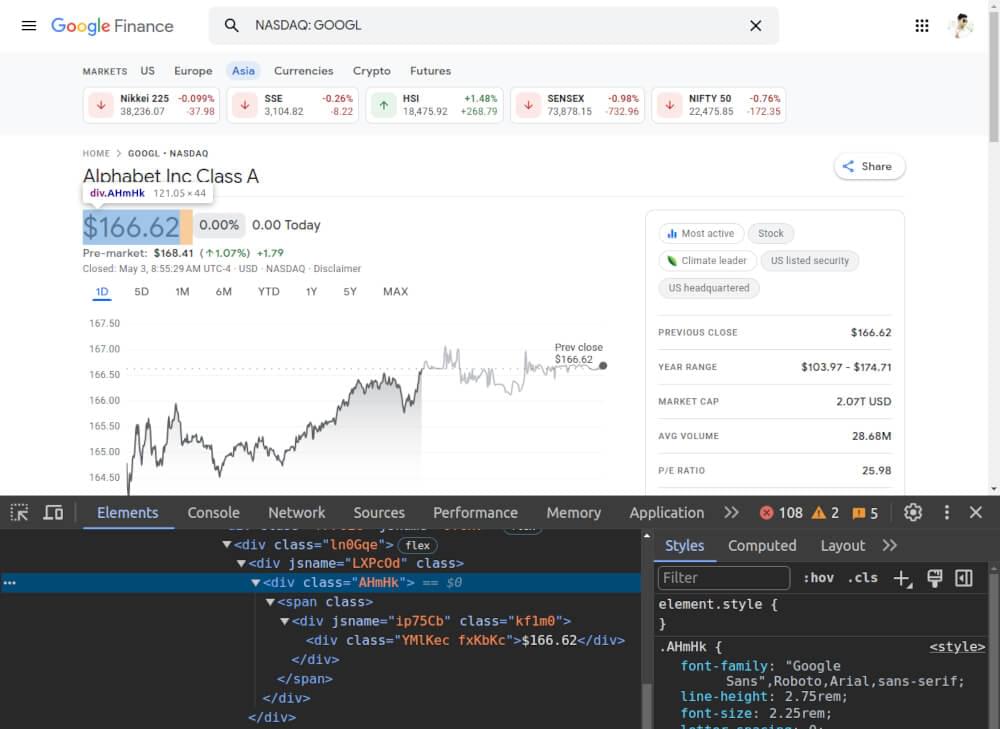

Step 2: Scraping Google Finance Website Prices

To collect prices from Google Finance, we need to identify the HTML elements that contain this information.

Here’s a simple Python code snippet using BeautifulSoup to extract the prices:

    from bs4 import BeautifulSoup
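As a minimal sketch of this step, the function below looks up a price element with a CSS selector and returns its text. The selector `div.YMlKec.fxKbKc`, the function name `get_price`, and the sample markup are assumptions made for illustration; Google Finance class names are not a stable interface, so check them in your browser’s developer tools before relying on them.

```python
from bs4 import BeautifulSoup

# Assumption: illustrative class names for the price element. They are not part
# of any public contract and may change, so verify them against the live page.
PRICE_SELECTOR = "div.YMlKec.fxKbKc"


def get_price(html, selector=PRICE_SELECTOR):
    """Return the text of the first element matching selector, or None."""
    soup = BeautifulSoup(html, "html.parser")
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element is not None else None


# Hypothetical markup with a made-up price, standing in for a downloaded page.
sample_html = '<div class="YMlKec fxKbKc">$170.10</div>'
print(get_price(sample_html))
```

Returning None when nothing matches keeps a layout change from crashing the script; the caller can log the miss and move on.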

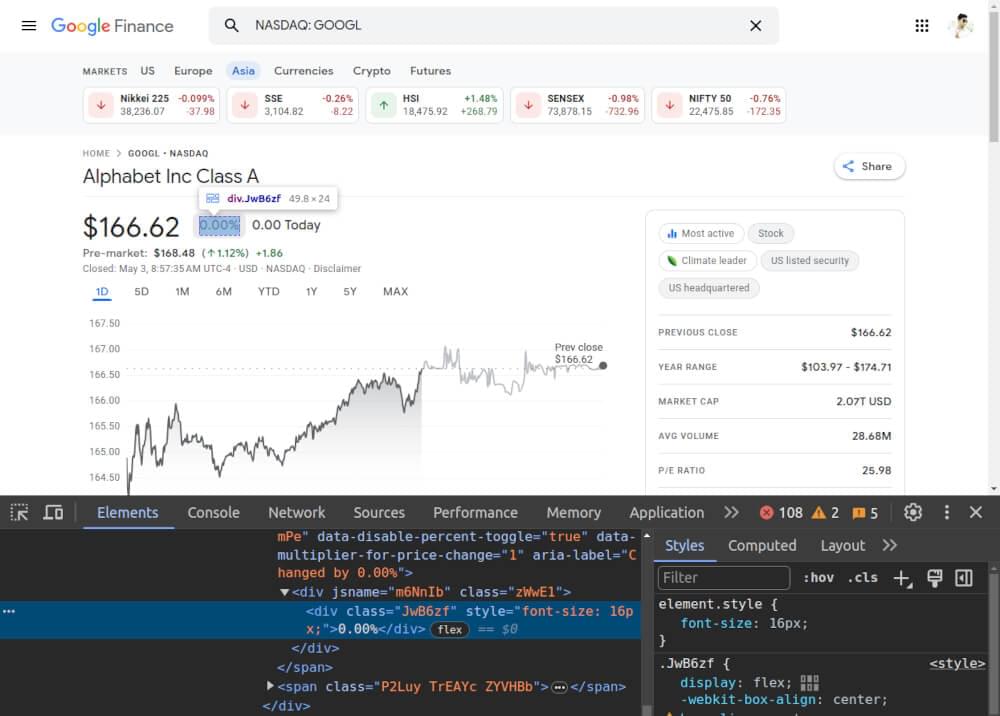

Step 3: Scraping Google Finance Stock Price Change (%)

Similarly, we can extract the stock price change percentage by locating the appropriate HTML element.

Here’s how you can do it:

    # Function to extract price change percentage from HTML
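One possible shape for such a function is sketched below. The selector `div.JwB6zf` and the helper `parse_percent` are assumptions for illustration, not confirmed parts of the page or of any library. The sketch also returns None when the element exists but is empty, which is what you would see if the value is only filled in by JavaScript.

```python
from bs4 import BeautifulSoup

# Assumption: illustrative class name for the percentage badge; verify it first.
CHANGE_SELECTOR = "div.JwB6zf"


def parse_percent(text):
    """Convert text such as '-1.25%' or '+0.40%' to a float, or None if not numeric."""
    # Normalize the typographic minus sign (U+2212) and drop thousands separators.
    cleaned = text.strip().rstrip("%").replace("\u2212", "-").replace(",", "")
    try:
        return float(cleaned)
    except ValueError:
        return None


def get_change_percentage(html, selector=CHANGE_SELECTOR):
    """Return the change percentage as a float, or None if missing or empty."""
    soup = BeautifulSoup(html, "html.parser")
    element = soup.select_one(selector)
    if element is None:
        return None
    return parse_percent(element.get_text(strip=True))


# Hypothetical markup with a made-up value.
print(get_change_percentage('<div class="JwB6zf">-1.25%</div>'))
```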

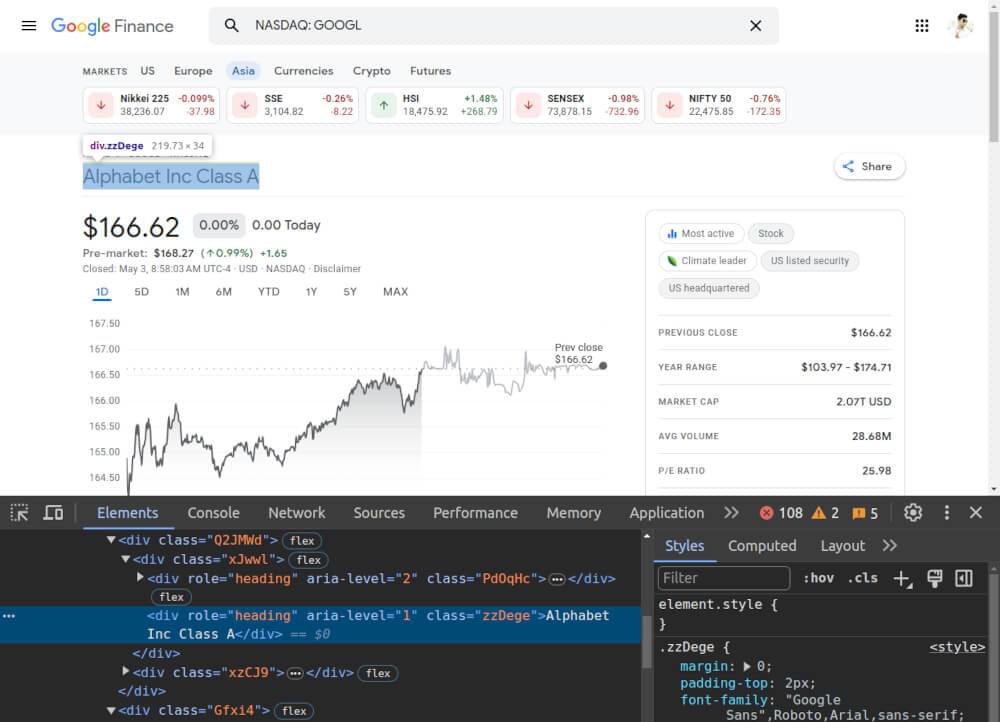

Step 4: Scraping Google Finance Stock Title

The title of the stock provides crucial identification information. We can scrape this data to obtain the names of the stocks listed on Google Finance.

Here’s a snippet to achieve this:

    # Function to extract stock title from HTML
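A title extractor can follow the same pattern. In this sketch the selector `div.zzDege` and the company name in the sample markup are assumptions; the whitespace handling is there because titles often contain nested tags.

```python
from bs4 import BeautifulSoup

# Assumption: illustrative class name for the stock title; verify it first.
TITLE_SELECTOR = "div.zzDege"


def get_stock_title(html, selector=TITLE_SELECTOR):
    """Return the stock title with whitespace collapsed, or None if not found."""
    soup = BeautifulSoup(html, "html.parser")
    element = soup.select_one(selector)
    if element is None:
        return None
    # Join nested text nodes with single spaces and collapse any extra whitespace.
    return " ".join(element.get_text(" ", strip=True).split())


# Hypothetical markup with a made-up company name.
sample_html = '<div class="zzDege">Example  <span>Corp</span></div>'
print(get_stock_title(sample_html))
```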

With these functions in place, you can efficiently extract prices, price change percentages, and stock titles from Google Finance pages using Python.

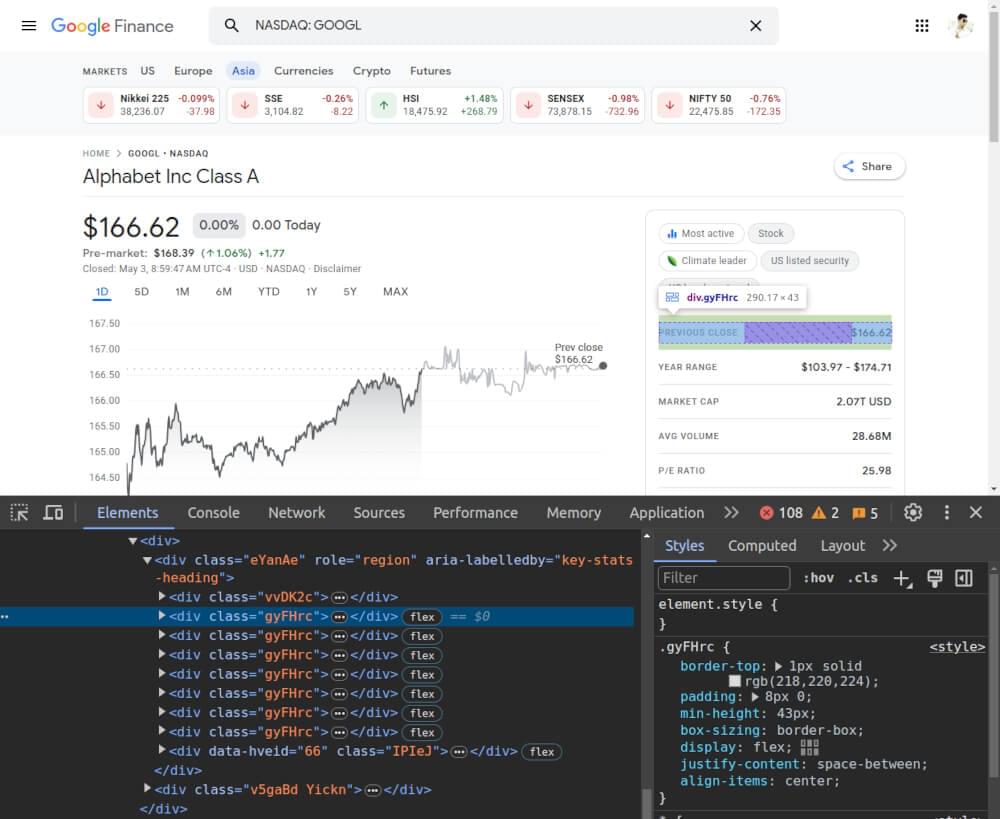

Step 5: Scraping Google Finance Stock Description

The stock description encompasses various attributes such as previous close, day range, market cap, and more.

Let’s scrape and compile these details into a comprehensive summary:

    # Function to extract stock description from HTML

With these functions in place, you can efficiently extract prices, price change percentages, stock titles, and stock descriptions from Google Finance pages using Python.
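Because the description is a set of label and value pairs, a dictionary is a natural way to hold it. The sketch below assumes each attribute sits in a row element (`div.gyFHrc`) containing a label (`div.mfs7Fc`) and a value (`div.P6K39c`); all three selectors and the sample values are illustrative assumptions.

```python
from bs4 import BeautifulSoup

# Assumptions: illustrative class names for a row, its label, and its value.
ROW_SELECTOR = "div.gyFHrc"
LABEL_SELECTOR = "div.mfs7Fc"
VALUE_SELECTOR = "div.P6K39c"


def get_stock_description(html):
    """Return a dict mapping labels such as 'Previous close' to their values."""
    soup = BeautifulSoup(html, "html.parser")
    details = {}
    for row in soup.select(ROW_SELECTOR):
        label = row.select_one(LABEL_SELECTOR)
        value = row.select_one(VALUE_SELECTOR)
        # Skip rows that are missing either half of the pair.
        if label is not None and value is not None:
            details[label.get_text(strip=True)] = value.get_text(strip=True)
    return details


# Hypothetical markup with made-up figures.
sample_html = """
<div class="gyFHrc"><div class="mfs7Fc">Previous close</div><div class="P6K39c">$169.30</div></div>
<div class="gyFHrc"><div class="mfs7Fc">Market cap</div><div class="P6K39c">2.61T USD</div></div>
<div class="gyFHrc"><div class="mfs7Fc">Day range</div></div>
"""
print(get_stock_description(sample_html))
```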

Step 6: Complete Code

Now that we’ve covered the steps to extract data from Google Finance, let’s put everything together into a complete Python script. Below is the complete code that incorporates functions to collect prices, get stock price change percentages, and retrieve stock titles from Google Finance pages.

    from bs4 import BeautifulSoup
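For orientation, here is a compact sketch of how such a script could be organized: fetch each page, parse three fields, and write the records to finance_data.json. It is not the original script. The selectors, the function names, and the User-Agent header are assumptions, and the fetch step is passed in as a parameter so the parsing can be tried without network access.

```python
import json

import requests
from bs4 import BeautifulSoup

# Assumptions: illustrative selectors; verify them against the live page.
SELECTORS = {
    "title": "div.zzDege",
    "price": "div.YMlKec.fxKbKc",
    "change_percentage": "div.JwB6zf",
}


def fetch_html(url, timeout=10):
    """Download a page with a plain HTTP request (no JavaScript is executed)."""
    headers = {"User-Agent": "Mozilla/5.0"}  # assumption: a generic browser-like header
    response = requests.get(url, headers=headers, timeout=timeout)
    response.raise_for_status()
    return response.text


def parse_stock_page(html, selectors=SELECTORS):
    """Return one record per page; fields that are missing or empty become None."""
    soup = BeautifulSoup(html, "html.parser")
    record = {}
    for field, selector in selectors.items():
        element = soup.select_one(selector)
        text = element.get_text(strip=True) if element is not None else ""
        record[field] = text or None
    return record


def scrape(urls, fetch=fetch_html):
    """Fetch and parse every URL, returning a list of records."""
    return [parse_stock_page(fetch(url)) for url in urls]


def save_json(records, path="finance_data.json"):
    """Write the records to a JSON file."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(records, handle, indent=2, ensure_ascii=False)
```

A run would look like `save_json(scrape(["https://www.google.com/finance/quote/AAPL:NASDAQ"]))`, with the list extended to whichever quote pages you need.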

This code fetches HTML content from Google Finance URLs, extracts relevant information such as prices, change percentages, and stock titles using BeautifulSoup, and stores the extracted data in a JSON file named “finance_data.json”. You can modify the URLs list to scrape data from different stock pages as needed.

finance_data.json file preview:

    [

Note: You may wonder why change_percentage is null in every object. The value is filled in by JavaScript after the page loads, and a plain HTTP request only returns the initial HTML without executing any scripts, so the element is still empty when BeautifulSoup parses it.

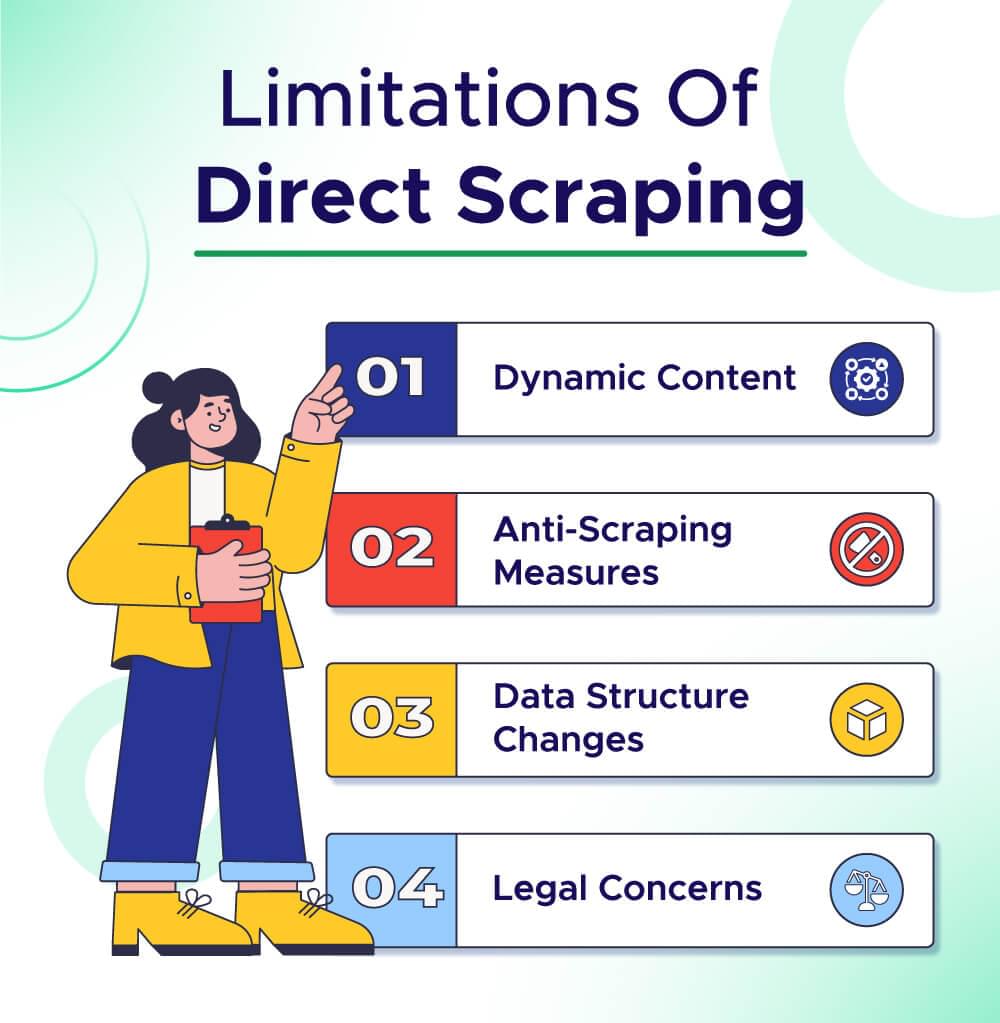

Limitations of Direct Scraping of Google’s Financial Website

While direct web scraping can be effective, it comes with certain limitations, especially when dealing with dynamic websites like Google Finance. Some of the main limitations include:

- Dynamic Content: Google Finance utilizes dynamic content loading techniques, such as JavaScript rendering, which makes it challenging to extract data using traditional scraping methods.

- Anti-Scraping Measures: Websites like Google Finance often implement anti-scraping measures to prevent automated data extraction. This can include IP blocking, CAPTCHA challenges, and rate limiting, making direct scraping less reliable and efficient.

- Data Structure Changes: Websites frequently update their structure and layout, which can break existing scraping scripts. Maintaining and updating scrapers to adapt to these changes can be time-consuming and resource-intensive.

- Legal Concerns: Scraping data from websites without permission may violate their terms of service or copyright policies, leading to legal repercussions. Google Finance, like many other websites, may have strict usage policies regarding automated data collection.

To overcome these limitations and ensure reliable and efficient data extraction from Google Finance, consider using a dedicated web scraping solution like Crawlbase Crawling API. This API handles dynamic content rendering, bypasses anti-scraping measures, and provides structured and reliable data in a format that’s easy to use for analysis and integration into your applications.

Overcoming Limitations with Crawlbase Crawling API

Crawlbase’s Crawling API offers a robust solution for scraping data from Google Finance while circumventing potential blocking measures. By integrating with Crawlbase’s Crawling API, you gain access to a vast pool of IP addresses, ensuring uninterrupted scraping operations. Its request parameters let you adapt each call to the page being scraped, for example by waiting for JavaScript-rendered content. Additionally, Crawlbase manages user-agent rotation and CAPTCHA solving, further optimizing the scraping process.

To get started with Crawlbase Crawling API, you can utilize the provided Python library, which simplifies the integration process. Begin by installing the Crawlbase library using the command pip install crawlbase. Once installed, obtain an access token by creating an account on the Crawlbase platform.

Below is the updated script with Crawlbase Crawling API:

    from bs4 import BeautifulSoup
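The sketch below shows one way the fetch step could be swapped for the Crawling API so that JavaScript-rendered values are present in the HTML. It reflects my understanding of the crawlbase Python library (a `CrawlingAPI` class whose `get` method returns a dictionary with `status_code` and `body`) and of the `ajax_wait` and `page_wait` parameters; treat those details, the selector, and the token placeholder as assumptions and confirm them in the API documentation.

```python
from bs4 import BeautifulSoup

# Assumption: illustrative class name for the percentage badge.
CHANGE_SELECTOR = "div.JwB6zf"

# Assumption: options asking the API to wait for JavaScript-rendered content.
DEFAULT_OPTIONS = {"ajax_wait": "true", "page_wait": 5000}


def make_api(token):
    """Create a Crawling API client; imported lazily so parsing works without it."""
    from crawlbase import CrawlingAPI  # installed with: pip install crawlbase
    return CrawlingAPI({"token": token})


def fetch_rendered_html(api, url, options=None):
    """Return the rendered HTML for url, or None if the request did not succeed."""
    response = api.get(url, options or DEFAULT_OPTIONS)
    if response.get("status_code") != 200:
        return None
    body = response["body"]
    # The body may arrive as bytes; decode it before parsing.
    return body.decode("utf-8") if isinstance(body, bytes) else body


def get_change_percentage(html, selector=CHANGE_SELECTOR):
    """Return the change percentage text, or None if it is missing or empty."""
    if html is None:
        return None
    element = BeautifulSoup(html, "html.parser").select_one(selector)
    text = element.get_text(strip=True) if element is not None else ""
    return text or None
```

With a real token, `get_change_percentage(fetch_rendered_html(make_api("YOUR_CRAWLBASE_TOKEN"), url))` would replace the plain-request path for any page whose values are rendered client-side.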

Note: The initial 1000 requests via Crawling API are free of charge, and no credit card information is required. You can refer to the API documentation for further details.

By leveraging Crawlbase Crawling API, you can execute scraping tasks with confidence, knowing that your requests closely resemble genuine user interactions. This approach enhances scraping efficiency while minimizing the risk of detection and blocking by Google Finance’s anti-scraping mechanisms.

Gain Financial Insights with Crawlbase

Scraping data from Google Finance can provide valuable insights for investors, financial analysts, and enthusiasts alike. With access to real-time stock quotes, financial news, and other pertinent data, Google Finance offers a wealth of information for decision-making in the finance world.

However, scraping Google Finance directly comes with limitations, including potential IP blocking and CAPTCHA challenges. Fortunately, leveraging tools like the Crawlbase Crawling API can help overcome these obstacles by providing access to a pool of residential IP addresses and handling JS rendering, user-agent rotation, and CAPTCHA solving.

If you’re looking to expand your web scraping capabilities, consider exploring the following guides on scraping other important websites.

📜 How to Scrape Yahoo Finance

📜 How to Scrape Zillow

📜 How to Scrape Airbnb

📜 How to Scrape Realtor.com

📜 How to Scrape Expedia

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions

Q. Is it Legal to Scrape Data from Google Finance?

Yes, it is generally legal to scrape publicly available data from Google Finance for personal or non-commercial use. However, it’s crucial to review Google’s terms of service and robots.txt file to ensure compliance with their usage policies. Some websites may have specific terms and conditions regarding automated access to their data, so it’s essential to respect these guidelines while scraping.
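Checking robots.txt can be automated with Python’s standard library. The sketch below parses robots.txt rules from a string and asks whether a URL may be fetched. The rules and URLs shown are hypothetical and are not Google’s actual rules; in practice you would load the real file from https://www.google.com/robots.txt.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules for illustration only.
sample_rules = """User-agent: *
Disallow: /private/
"""

print(is_allowed(sample_rules, "https://example.com/finance/quote/ABC"))
print(is_allowed(sample_rules, "https://example.com/private/report"))
```

A robots.txt check covers only crawler etiquette; the terms of service still need to be read separately.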

Q. What Data Can I Scrape from Google Finance?

You can scrape a wide range of market data from Google Finance, including real-time stock quotes, historical stock prices, company profiles, market news, analyst recommendations, earnings reports, and more. The platform provides comprehensive information on stocks, indices, currencies, cryptocurrencies, and other financial instruments, making it a valuable resource for investors, analysts, and researchers.

Q. How Often Can I Scrape Google Finance?

The frequency of scraping Google Finance depends on several factors, including the volume of data you’re extracting, the speed of your scraping process, and Google’s rate limits. Google does not publish an explicit limit on scraping frequency, so it’s essential to implement proper scraping techniques and respect Google’s guidelines to avoid triggering anti-scraping mechanisms. Excessive scraping or aggressive behavior may lead to IP blocking, CAPTCHA challenges, or other restrictions.
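One simple way to keep request frequency in check is to enforce a minimum gap between consecutive requests. The generator below is a minimal sketch of that idea; the name `throttled` and the interval are assumptions, and the right interval for Google Finance is not something the site documents.

```python
import time


def throttled(items, min_interval, sleep=time.sleep, clock=time.monotonic):
    """Yield items so that consecutive yields are at least min_interval seconds apart."""
    last = None
    for item in items:
        if last is not None:
            # Wait only for the part of the interval that has not already elapsed.
            wait = min_interval - (clock() - last)
            if wait > 0:
                sleep(wait)
        last = clock()
        yield item


# Hypothetical URLs; a very short interval keeps this demonstration quick.
urls = ["https://example.com/quote/A", "https://example.com/quote/B"]
for url in throttled(urls, min_interval=0.01):
    print("would fetch", url)
```

Because the time spent handling each item counts toward the interval, slow pages do not add extra delay on top of the pause.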

Q. What Tools Can I Use for Scraping Google Finance?

There are several tools and libraries available for scraping Google Finance, each offering unique features and capabilities. Popular options include BeautifulSoup, Scrapy, Selenium, and commercial scraping services like Crawlbase. BeautifulSoup and Scrapy are Python-based libraries known for their simplicity and flexibility, while Selenium is ideal for dynamic web scraping tasks. Commercial scraping services like Crawlbase provide dedicated APIs and infrastructure for scalable and reliable scraping operations, offering features like IP rotation, CAPTCHA solving, and data extraction customization. Ultimately, the choice of tool depends on your specific scraping requirements, technical expertise, and budget constraints.