Due to AliExpress’s tremendous growth over the years, the hunt for a reliable AliExpress proxy has become increasingly important for those who require valuable data for market research.

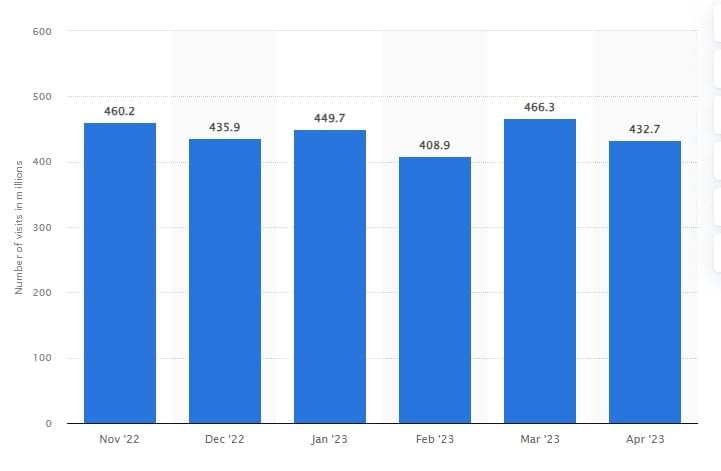

From November 2022 to April 2023, AliExpress pulled in a whopping 2.7 billion visits, and traffic never dipped below 432 million visits in any month. January 2023 took the crown with over 449 million visits from across the globe.

With such huge user demand, AliExpress stays ahead of the competition on pricing and trends, so scraping its data can provide valuable insights into both. But scraping AliExpress isn’t easy without a proxy.

That’s where our solution steps in. Crawlbase’s Smart AI Proxy is not your typical AliExpress Proxy service; it’s a versatile solution that utilizes rotating residential and data center proxies.

In this blog, we will guide you through the process of building an AliExpress web scraper using Python and Smart AI Proxy, providing you with a step-by-step approach. You’ll learn how to set up your coding environment, configure Smart AI Proxy, create a scraper, and handle common web scraping issues.

Let’s dive in and make the most of Smart AI Proxy’s capabilities. Here’s a video tutorial on it. If you prefer a written tutorial, scroll down and continue reading.

Table of Contents

I. Understanding the Smart AI Proxy

II. How Will Smart AI Proxy Help in Web Scraping AliExpress?

III. Basic Smart AI Proxy Usage with Curl Command

IV. Adding Parameters to Smart AI Proxy Requests

V. Creating AliExpress Web Scraper using Python

VI. Strategies for Scaling Up Your Python Project

VII. Create AliExpress Web Scraper with Crawlbase

VIII. Frequently Asked Questions

I. Understanding the Smart AI Proxy

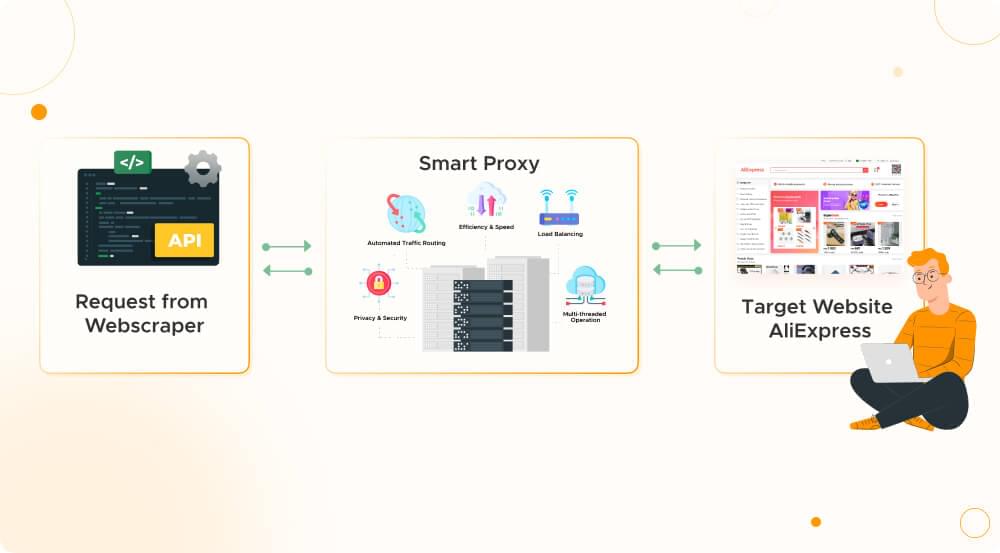

The Smart AI Proxy simplifies the complex process of web scraping by automating traffic routing through a range of proxy servers comprising millions of IPs. It is a powerful tool designed to facilitate web crawling and scraping for applications that are not built to interact with HTTP/S-based APIs like the Crawling API.

Instead of modifying your existing application, you can integrate the Smart AI Proxy to handle communication with the Crawling API. The process involves using a rotating proxy that forwards your requests to the Crawling API, simplifying the integration for developers.

This versatile tool is designed to make your web scraping projects smoother and more efficient. Here’s how it works:

Automated Traffic Routing

The Smart AI Proxy handles the intricacies of traffic routing for you. It’s a bit like having a smart traffic conductor for your web scraping operations. By distributing your requests across multiple proxy servers with millions of global IPs, it avoids overloading any single server, ensuring that your web scraping activities remain smooth and uninterrupted.

Multi-threaded Operation

Smart AI Proxy is equipped to make the most of multi-threading in the context of web scraping. When you initiate a scraping task, Smart AI Proxy Manager intelligently assigns threads to different requests. These threads run in parallel, fetching data concurrently from the target website.

Efficiency and Speed

One of the standout features of the Smart AI Proxy service is its remarkable speed. It ensures that there are no unnecessary delays in your requests. This is especially crucial for web scraping, where time is of the essence. With the proxy manager, your scraping tasks become not only faster but also more accurate.

Automated IP Rotation

Crawlbase’s Smart AI Proxy intelligently manages IP rotation. This means that the IP address your requests originate from changes at regular intervals. This feature is crucial as it allows you to effortlessly bypass restrictions, avoid IP bans, and significantly increase your web scraping speed. By constantly refreshing your IP address, you reduce the risk of encountering obstacles that can disrupt your scraping process.

Balancing the Load

Whether you’re performing ad verification, conducting market research, or analyzing your competitors, Smart AI Proxy maintains a balanced load. This ensures that you can carry out these tasks with ease. The balance prevents any single proxy server from becoming overwhelmed, further contributing to the efficiency of your web scraping activities.

Privacy and Security

At the core of Smart AI Proxy is a commitment to privacy and security. By distributing your requests through multiple proxy servers, your online identity remains well-guarded, allowing you to conduct web scraping activities with the utmost data privacy and security.

In summary, the Smart AI Proxy from Crawlbase streamlines web scraping by automating and optimizing the proxy server management process. Its efficient traffic routing, speed, automated IP rotation, multi-threaded operation, load balancing, and focus on privacy and security make it an invaluable tool for web scraping activities like ad verification, market research, and competitor analysis. With Smart AI Proxy, you can navigate the web scraping landscape with ease and confidence.

II. How Will Smart AI Proxy Help in Web Scraping AliExpress?

AliExpress, as one of the largest e-commerce platforms globally, offers valuable data for businesses and researchers on a massive scale. However, scraping data from AliExpress comes with its own set of challenges. These challenges include IP bans, CAPTCHAs, and the need to bypass bot detection mechanisms. Smart AI Proxy by Crawlbase is the solution that will empower you to overcome these obstacles and extract data from AliExpress effectively.

Bypassing IP Blocks and Restrictions

AliExpress employs IP blocking as a standard measure to prevent excessive scraping activities. By constantly rotating your IP address, Smart AI Proxy helps you bypass these restrictions effortlessly. This means you can scrape without the fear of getting your IP address blocked, ensuring uninterrupted data collection.

Outsmarting CAPTCHAs

CAPTCHAs are another hurdle you’ll encounter while scraping AliExpress. These security tests are designed to differentiate humans from bots. Smart AI Proxy’s IP rotation comes to the rescue here. When CAPTCHAs appear, Smart AI Proxy intelligently switches to a new IP address, ensuring that your scraping process remains smooth and continuous. You won’t be slowed down by these security checks.

Evading Bot Detection

AliExpress, like many online platforms, uses sophisticated bot detection mechanisms to identify and block automated scraping activities. Smart AI Proxy’s automated IP rotation significantly reduces the risk of being detected as a bot. By continuously changing IPs, Smart AI Proxy keeps your scraping activities discreet, allowing you to scrape data anonymously.

High-Speed Data Extraction

Efficiency is crucial in web scraping, and Smart AI Proxy excels in this aspect. It ensures that your requests are processed with minimal delays, enabling you to extract data from AliExpress rapidly. Moreover, with its multi-threaded operation, Smart AI Proxy can process multiple requests concurrently, further enhancing the speed and efficiency of your web scraping tasks.

Data Confidentiality and Protection

Smart AI Proxy not only enhances efficiency but also prioritizes your anonymity. By routing your requests through multiple proxy servers, it keeps your online identity discreet, allowing you to perform web scraping with the highest level of privacy and security.

Now that we have discussed the importance of Smart AI Proxy in scraping AliExpress, are you prepared to unlock its potential for your scraping needs? In the upcoming section of our blog, we’ll take you through the step-by-step process of writing code in Python and seamlessly integrating Smart AI Proxy to craft a highly efficient web scraper customized for AliExpress.

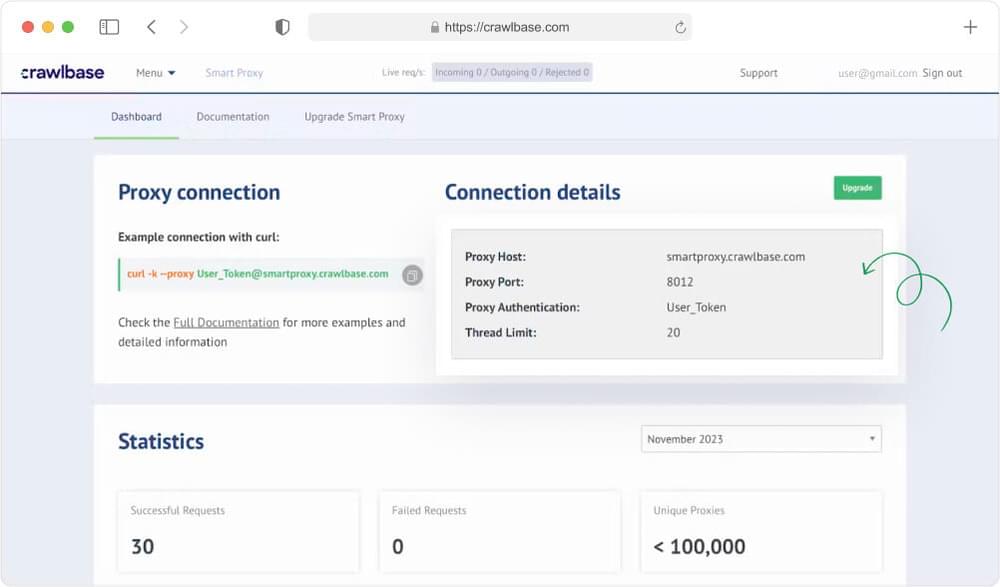

III. Basic Smart AI Proxy Usage with Curl Command

Before we set up our Python environment, let’s try to test Smart AI Proxy and fetch data from the AliExpress web page using a simple curl command. Your first step will be to create an account with Crawlbase and go to your Smart AI Proxy dashboard to obtain the Proxy Authentication token.

Once you have the token, open your command prompt or terminal, copy the command line below, replace USER_TOKEN with the token you’ve obtained earlier, and hit enter to execute the code.

    curl -x "http://USER_TOKEN:@smartproxy.crawlbase.com:8012" -k "https://aliexpress.com/w/wholesale-macbook-pro.html"

This curl command will make an HTTP request to your target URL through Crawlbase’s Smart AI Proxy. The proxy is set to run on smartproxy.crawlbase.com at port 8012, and the -k option tells curl to ignore SSL certificate verification. It is used when connecting to a server over HTTPS and you don’t want to verify the authenticity of the server’s certificate.

In the context of Crawlbase’s Smart AI Proxy, it’s crucial to disable SSL verification. Failure to do so may hinder the interaction between the Smart AI Proxy and your application.

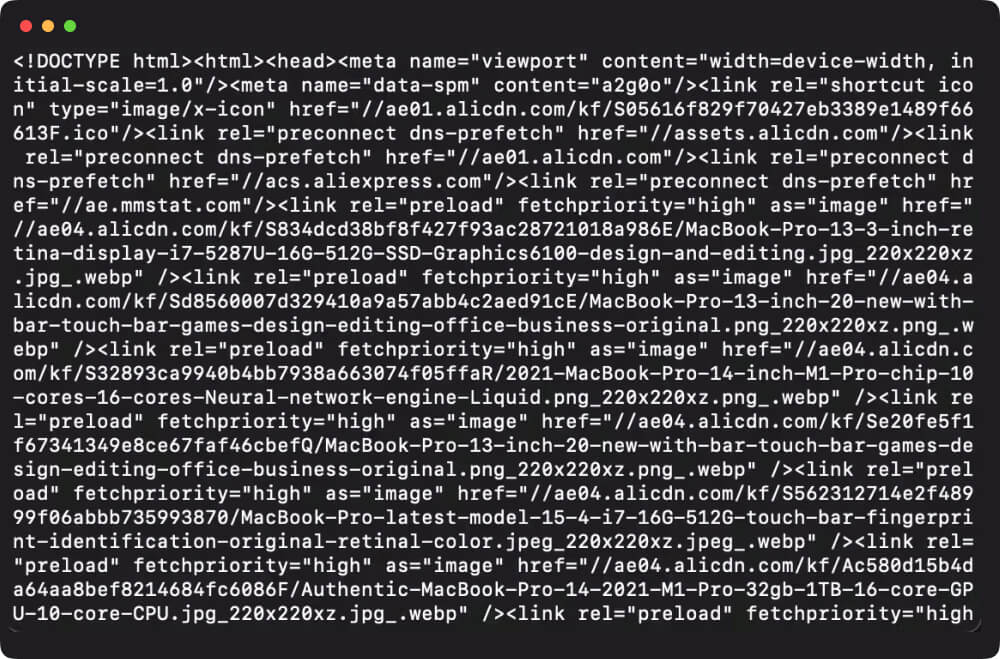

Upon successful execution, you should receive an HTML response from AliExpress similar to the one shown in this screenshot:

IV. Adding Parameters to Smart AI Proxy Requests

Since Smart AI Proxy forwards your requests to the Crawling API, it also benefits from most of the capabilities of the Crawling API. You can fine-tune your requests by sending specific instructions, known as parameters, through a special header called CrawlbaseAPI-Parameters.

This lets you tell Smart AI Proxy exactly how you want it to handle your request. You can customize it to fit your needs perfectly.

In this case, we will be using a parameter called scraper=aliexpress-serp. This tells Smart AI Proxy to extract the website’s response and organize it in a way that’s easy to understand. It’s like asking Smart AI Proxy to turn messy website data into neat and organized information.

    curl -H "CrawlbaseAPI-Parameters: scraper=aliexpress-serp" -x "http://USER_TOKEN:@smartproxy.crawlbase.com:8012" -k "https://aliexpress.com/w/wholesale-macbook-pro.html"

V. Creating AliExpress Web Scraper using Python

Step 1. Configuring your Python Project

Now that we’ve discussed the very basic details of how Smart AI Proxy operates, we’re ready to set up our Python environment.

Start by making sure you have Python installed on your machine. If this is your first time using Python, we recommend following our Python beginner guide, which walks you step by step through setting up Python properly on your system.

Step 2. Setting Up Project Directory

Once you have configured Python on your machine, the next step is to set up a new project. Open your console or terminal and execute the following command.

    mkdir crawlbase

- mkdir: This is a command that stands for “make directory.” It’s used to create a new directory.

- crawlbase: This is the name of the directory you want to create. In this case, it’s named “crawlbase,” but you could replace it with any other name you prefer.

Next, execute the command below.

    cd folder-name && touch crawlbase.py

- cd folder-name: This command stands for “change directory.” It is used to navigate to a specific folder. Replace “folder-name” with the name of the folder you want to enter (“crawlbase” if you used the mkdir command above as written).

- &&: This is a logical operator that means “and.” In the context of this command, it ensures that the second part of the command (touch crawlbase.py) is executed only if the first part (cd folder-name) is successful.

- touch crawlbase.py: The touch command is used to create an empty file. In this case, it creates a file named “crawlbase.py” in the directory specified by the preceding cd command.

So, when you run this command, it does two things:

- It changes the current directory to the one specified by “folder-name.”

- It creates a new, empty Python file named “crawlbase.py” in that directory.
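The touch command is not available in every shell (the default Windows command prompt, for example), so the same two steps can also be done from Python itself. This is only a minimal sketch using the standard pathlib module; the helper name create_project is an assumption made for illustration.

```python
from pathlib import Path


def create_project(parent: Path, folder_name: str = "crawlbase") -> Path:
    """Create the project folder and an empty crawlbase.py inside it."""
    project_dir = parent / folder_name
    # Equivalent of `mkdir`: exist_ok avoids an error if the folder already exists.
    project_dir.mkdir(parents=True, exist_ok=True)

    script = project_dir / "crawlbase.py"
    # Equivalent of `touch`: creates the file if it is missing and leaves
    # existing content untouched otherwise.
    script.touch(exist_ok=True)
    return script
```

Calling create_project(Path.cwd()) would create the folder next to wherever you run Python from and return the path of the new script.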

Step 3. Installation of Dependencies

To retrieve data from the AliExpress webpage and save it to a JSON file, we require two essential packages.

Requests: This package simplifies the process of sending HTTP/1.1 requests. You don’t have to manually add query strings to your URLs or encode your PUT & POST data, and the response object’s json() method decodes a JSON body for you.

JSON: Python has native support for JSON. It comes with a built-in package called json for encoding and decoding JSON data, eliminating the need to install an additional package.

Since json ships with Python, only Requests needs to be installed. Use the following command:

    pip install requests

This command ensures you have the necessary tools to fetch data from AliExpress and handle JSON operations in your Python script.

Step 4. Utilizing Smart AI Proxy with Python

We’ve reached the point where we can begin crafting our main Python code and integrate the Smart AI Proxy call.

In the previous section, we created a file called crawlbase.py. Open this file, copy the code below into it, and run it to retrieve the desired data.

    import requests

Importing the requests Library:

This line imports the requests library, which simplifies the process of making HTTP requests in Python.

Setting up Proxy Authentication:

Replace 'USER_TOKEN' with your actual Crawlbase user token. This token is used for authentication when making requests through the Smart AI Proxy. The proxy_auth variable is then formatted to include the username and an empty password, following the basic authentication format.

Defining the URL and Proxy URL:

- url: This is the target URL you want to scrape. In this case, it’s an AliExpress webpage related to MacBook Pro wholesale.

- proxy_url: This is the URL of the Smart AI Proxy server, including the authentication details. The URL format is http://username:password@proxy_host:proxy_port.

Setting up Proxies:

The proxies dictionary is created to specify the proxy settings. Both “http” and “https” are set to use the same proxy URL.

Making the Request:

- requests.get: This function initiates an HTTP GET request to the specified URL.

- proxies: The proxies parameter is set to use the configured proxy settings.

- verify=False: This parameter is set to False to ignore SSL certificate verification. In a production environment, it’s crucial to handle SSL verification properly.

Printing the Response Body:

This line prints the content of the response, which includes the HTML or data retrieved from the specified URL.
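Putting the pieces described above together, a minimal sketch of crawlbase.py might look like the following. It follows the walkthrough (token as username with an empty password, the same proxy URL for both schemes, verify=False), but the helper names build_proxies and fetch and the timeout value are assumptions made for illustration, not part of Crawlbase’s documentation.

```python
import requests

PROXY_HOST = "smartproxy.crawlbase.com"
PROXY_PORT = 8012


def build_proxies(user_token: str) -> dict:
    """Return a requests-style proxies mapping for the Smart AI Proxy."""
    # Basic-auth style credentials: the token is the username, the password is empty.
    proxy_auth = f"{user_token}:"
    proxy_url = f"http://{proxy_auth}@{PROXY_HOST}:{PROXY_PORT}"
    # Both plain HTTP and HTTPS traffic go through the same proxy URL.
    return {"http": proxy_url, "https": proxy_url}


def fetch(url: str, user_token: str) -> str:
    """Fetch a page through the proxy and return the response body."""
    response = requests.get(
        url,
        proxies=build_proxies(user_token),
        verify=False,  # SSL verification disabled, like -k in the curl example
        timeout=90,  # assumption: an arbitrary generous timeout
    )
    return response.text


# Usage (performs a real network request, so it is left commented out):
# print(fetch("https://aliexpress.com/w/wholesale-macbook-pro.html", "USER_TOKEN"))
```

Keeping the proxy setup in its own function makes it easy to reuse the same settings in the later steps.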

Step 5. Execute the Python Code

    python crawlbase.py

When the code runs successfully, it fetches the complete HTML source code of the AliExpress URL and displays it on your console. In most cases this raw HTML is not yet useful, as it is difficult to dissect. To get more sensible and easy-to-read data, we must parse this response and turn it into structured data, which we can then store in a database for easy retrieval and analysis.

Step 6. Parsing the Data using the AliExpress scraper

The Smart AI Proxy’s capability to automatically parse AliExpress data will be utilized in this step. To do this, we simply need to pass scraper=aliexpress-serp in the CrawlbaseAPI-Parameters header in our code. Edit your crawlbase.py file and paste the code below.

    import requests
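As a rough sketch of this step, the only change from the plain request is the extra CrawlbaseAPI-Parameters header. The function names build_headers and fetch_parsed and the timeout value are assumptions made for illustration.

```python
import requests

PROXY_URL_TEMPLATE = "http://{token}:@smartproxy.crawlbase.com:8012"


def build_headers(scraper: str = "aliexpress-serp") -> dict:
    """Build the header that asks Smart AI Proxy to parse the page."""
    return {"CrawlbaseAPI-Parameters": f"scraper={scraper}"}


def fetch_parsed(url: str, user_token: str) -> str:
    """Request the page through the proxy and return the parsed (JSON) body as text."""
    proxy_url = PROXY_URL_TEMPLATE.format(token=user_token)
    response = requests.get(
        url,
        headers=build_headers(),
        proxies={"http": proxy_url, "https": proxy_url},
        verify=False,  # SSL verification disabled, like -k in the curl example
        timeout=90,  # assumption: an arbitrary generous timeout
    )
    return response.text


# Usage (performs a real network request, so it is left commented out):
# print(fetch_parsed("https://aliexpress.com/w/wholesale-macbook-pro.html", "USER_TOKEN"))
```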

Once you execute this code, the response will be in JSON format as shown below:

    {

Step 7. Saving Parsed Data to a JSON file

Of course, we will not let the data go to waste. In this step, we will add a few lines to our code so we can store the scraped data safely for later use. Go back to your crawlbase.py file again and paste the code below.

    import requests

Processing and Saving the Response as JSON:

- json.loads(response.text): This converts the JSON-formatted text of the response into a Python dictionary.

- with open('scraped_data.json', 'w') as json_file: Opens a file named ‘scraped_data.json’ in write mode.

- json.dump(data, json_file): Writes the Python dictionary (converted JSON data) to the file.
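The three calls described above can be sketched as one small helper. The function name save_response_json is an assumption, and the indent, ensure_ascii, and encoding arguments are optional additions for readability rather than part of the steps described.

```python
import json


def save_response_json(response_text: str, path: str = "scraped_data.json") -> dict:
    """Decode a JSON response body and write it to a file."""
    # Convert the JSON-formatted text into a Python dictionary.
    data = json.loads(response_text)
    # Open the output file in write mode and dump the dictionary into it.
    with open(path, "w", encoding="utf-8") as json_file:
        json.dump(data, json_file, indent=2, ensure_ascii=False)
    return data
```

In the scraper, this would be called with response.text right after the request returns. If the body is not valid JSON (for example, an HTML error page), json.loads raises json.JSONDecodeError, which is worth catching in a longer-running script.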

VI. Strategies for Scaling Up Your Python Project

Scaling this web scraping project involves managing a large number of requests efficiently and ensuring that your project remains reliable and compliant with the website’s terms of service. Here are some strategies to consider:

Use Asynchronous Requests: Instead of making requests one by one, consider using asynchronous programming with libraries like asyncio and aiohttp. Asynchronous requests allow you to send multiple requests concurrently, significantly improving the speed of your scraping process.
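As a hedged illustration of the asynchronous approach, the sketch below caps the number of in-flight requests with a semaphore. The names fetch_all and fetch_pages and the default limit of 5 are assumptions; aiohttp is a third-party package (pip install aiohttp), and this has not been verified against the live proxy service.

```python
import asyncio


async def fetch_all(urls, fetch_one, max_concurrency=5):
    """Run fetch_one(url) for every URL, at most max_concurrency at a time."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:
            return await fetch_one(url)

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(url) for url in urls))


async def fetch_pages(urls, user_token):
    """Fetch all URLs through the Smart AI Proxy using aiohttp."""
    # Imported here so fetch_all can be used without aiohttp installed.
    import aiohttp

    proxy = "http://smartproxy.crawlbase.com:8012"
    auth = aiohttp.BasicAuth(user_token, "")  # token as username, empty password

    async with aiohttp.ClientSession() as session:

        async def fetch_one(url):
            # ssl=False mirrors verify=False in the requests version.
            async with session.get(url, proxy=proxy, proxy_auth=auth, ssl=False) as response:
                return await response.text()

        return await fetch_all(urls, fetch_one)
```

A script would start this with asyncio.run(fetch_pages(list_of_urls, "USER_TOKEN")).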

Parallel Processing: This strategy can be applied to handle multiple URLs concurrently, speeding up the overall data retrieval process. Python provides various mechanisms for parallel processing, and one common approach is to use the concurrent.futures module.
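A minimal sketch of the concurrent.futures approach is shown below. The helper name scrape_all and the default of 8 workers are assumptions; fetch stands for any function that takes a URL and returns its data, such as a requests-based call through the proxy.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_all(urls, fetch, max_workers=8):
    """Call fetch(url) for each URL in a thread pool and return {url: result}."""
    urls = list(urls)  # materialize once so the URLs can be iterated twice
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, so zip pairs them correctly.
        # An exception raised inside fetch is re-raised here when its result is read.
        return dict(zip(urls, pool.map(fetch, urls)))
```

Threads suit this workload because the time is spent waiting on the network rather than on computation.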

Use a Proxy Pool: When dealing with a large number of requests, consider using a pool of proxies to avoid IP bans and distribute requests. Crawlbase’s Smart AI Proxy solves this problem for you as it intelligently rotates through different proxies for each request to prevent detection.

Distributed Scraping: If the volume of URLs is extremely high, you might want to consider a distributed architecture. Break down the scraping task into smaller chunks and distribute the workload across multiple machines or processes.
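The “smaller chunks” idea can be sketched with a small generator; each chunk could then be handed to a separate process, machine, or queue. The name chunked is an assumption, and how the chunks are distributed is left open.

```python
from itertools import islice


def chunked(urls, size):
    """Yield successive lists of at most `size` URLs."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(urls)
    # islice pulls the next `size` items; an empty list means we are done.
    while chunk := list(islice(iterator, size)):
        yield chunk
```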

Handle Errors Gracefully: Implement error handling to manage network errors, timeouts, and other unexpected issues. This ensures that your scraping process can recover from failures without crashing.
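One common way to recover from transient failures is to retry with exponential backoff. The sketch below is an illustration only: the name with_retries, the three attempts, and the one-second base delay are assumptions, and real code would usually catch narrower exceptions (for example requests.RequestException) instead of Exception.

```python
import time


def with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying failed calls with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as error:  # narrow this in real code
            if attempt == attempts:
                raise  # out of attempts: let the caller see the last error
            # Delay doubles after each failure: base, 2x base, 4x base, ...
            delay = base_delay * 2 ** (attempt - 1)
            print(f"Attempt {attempt} for {url} failed ({error}); retrying in {delay}s")
            sleep(delay)
```

The sleep parameter is injectable only so the helper can be exercised without actually waiting.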

Optimize Code Efficiency: Review your code for any inefficiencies that may impact performance. Optimize loops, minimize unnecessary computations, and ensure that your code is as efficient as possible.

Database Optimization: Use a reliable database (e.g., PostgreSQL, MySQL) for storing scraped data. Implement proper indexing to speed up retrieval operations or batch insert data into the database to reduce overhead.
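To illustrate indexing and batch inserts without requiring a database server, the sketch below uses Python’s built-in sqlite3 module as a stand-in for PostgreSQL or MySQL. The table layout, column names, and the helper name store_products are assumptions made for illustration.

```python
import sqlite3


def store_products(connection, rows):
    """Batch-insert (title, price) rows and make sure the lookup index exists."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "id INTEGER PRIMARY KEY, title TEXT, price REAL)"
    )
    # An index on the column you filter by speeds up later lookups.
    connection.execute(
        "CREATE INDEX IF NOT EXISTS idx_products_title ON products (title)"
    )
    # The connection context manager commits on success and rolls back on error.
    with connection:
        # executemany sends all rows in one call instead of one INSERT per row.
        connection.executemany(
            "INSERT INTO products (title, price) VALUES (?, ?)", rows
        )
```

With a server database the same pattern applies through its own driver, although placeholder syntax and bulk-loading options differ between drivers.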

VII. Create AliExpress Web Scraper with Crawlbase

In this blog, we delved into the details of Smart AI Proxy and its instrumental role in enhancing the efficiency of AliExpress web scraping. We started by understanding the fundamentals of Smart AI Proxy, explored its applications in scraping AliExpress, and then walked through the practical aspects of using it with both Curl commands and a Python-based web scraper.

The step-by-step guide provided insights into configuring a Python project, setting up the project directory, installing dependencies, utilizing Smart AI Proxy seamlessly with Python, executing the code, and efficiently parsing the scraped data using the AliExpress web scraper. The final touch involved saving the parsed data to a structured JSON file.

As developers, we recognize the significance of robust and scalable projects. The concluding section extended our discussion to strategies for scaling up your Python project. Scaling isn’t just about handling more data; it’s about optimizing your code, architecture, and resources for sustainable growth.

If you are interested in other projects for the Smart AI Proxy, you can explore more topics from the links below:

Scraping Walmart with Firefox Selenium and Smart AI Proxy

Scraping Amazon ASIN with Smart AI Proxy

If you want to see more projects for AliExpress, browse the links below:

Scraping AliExpress SERP with Keywords

Scraping AliExpress with the Crawling API

We also offer a variety of tutorials covering data scraping from various E-commerce platforms like Walmart, eBay, and Amazon, or social media platforms like Instagram and Facebook.

Should you have any questions or require assistance, please don’t hesitate to reach out. Our support team would be delighted to assist you.

VIII. Frequently Asked Questions

Q: Is Smart AI Proxy capable of handling large-scale scraping tasks?

A: Absolutely. Smart AI Proxy is designed to efficiently manage both small and large-scale scraping tasks. Its multi-threaded operation and load-balancing capabilities ensure that you can scrape vast amounts of data from AliExpress with ease.

Q: Does Smart AI Proxy prioritize privacy and security during web scraping?

A: Yes, privacy and security are central to Smart AI Proxy’s design. By routing your requests through multiple proxy servers, it keeps your online identity anonymous, allowing you to perform web scraping with a high level of privacy and security.

Q: Can Smart AI Proxy be used for web scraping on other platforms besides AliExpress?

A: Smart AI Proxy is versatile and can be used for web scraping on most online platforms. It’s not limited to AliExpress; you can use it to enhance your scraping activities on a wide range of websites like Amazon, eBay, Facebook, Instagram, and more.

Q: What benefits does Smart AI Proxy offer over using a single static IP for web scraping?

A: Unlike a single static IP, Smart AI Proxy provides dynamic and rotating IP addresses, making it more resilient to IP bans and detection. It also improves scraping speed and efficiency, allowing you to extract data more rapidly, even on a massive scale.

Q: What advantages does using Python and Smart AI Proxy offer over other languages for web scraping?

A: Python is widely used in the web scraping community due to its readability, extensive libraries, and ease of learning. When combined with Smart AI Proxy, you also get its rotating proxy infrastructure, ensuring a streamlined and more secure web scraping experience.