In the online world, information is as valuable as gold, and scraping Etsy product listings puts that value to work for crafters and shoppers alike. It helps people make informed decisions and stay ahead. Whether you run an online store, do market research, or simply enjoy working with numbers, web scraping is a useful tool for gathering information from websites.

Etsy is an online store that’s similar to an art gallery. It’s filled with special, handmade items that crafters and shoppers adore. But getting information from Etsy can be challenging. That’s when web scraping can help.

This guide is all about web scraping product listings from Etsy. We will extract Etsy data using Python. What makes this guide special is that we’ll cover Crawlbase Crawling API, a powerful web scraping tool that makes scraping Etsy a breeze.

We’re going to take you through it step by step. From setting up your workspace to collecting the data you want, we’ve got it all covered. By the time you finish this guide, you’ll have the skills to scrape Etsy like a pro. So, let’s get started on this exciting journey!

Table Of Contents

- Installing Python and required libraries

- Choosing the Right Development IDE

- Signing up for Crawlbase Crawling API and obtaining API credentials

- Analyzing Etsy Search Page Structure

- Identifying the elements you want to scrape

- Introduction to Crawlbase Crawling API

- Benefits of Crawlbase Crawling API

- Crawlbase Python Library

- Crawling Etsy Search Page HTML

- Inspecting HTML to Get CSS Selectors

- Retrieving product listings data from HTML

- Handling pagination for multiple pages of results

- Storing scraped data in a CSV File

- Storing scraped data in a SQLite Database

Getting Started with Etsy

Etsy is a globally recognized online marketplace where artisans and crafters showcase their unique creations, ranging from handcrafted jewelry to vintage furniture and everything in between.

Etsy’s product listings hold a lot of information for both buyers and sellers. As a seller, you can gain a deeper understanding of the market by analyzing your competition, identifying product trends, and pricing your creations competitively. For buyers, the ability to monitor prices, discover one-of-a-kind items, and make well-informed purchase decisions is a game-changer.

However, extracting and analyzing data from Etsy manually can be a time-consuming and daunting task. That’s where web scraping comes in to streamline the process and provide you with a wealth of data that might otherwise remain hidden.

In this blog, we’ll show you how to scrape Etsy product listings using Python and the Crawlbase Crawling API. With this knowledge, you’ll be able to automate data collection and gain valuable insights from Etsy’s dynamic web pages, saving you time and effort.

The journey begins with understanding Etsy’s website structure and setting up your development environment.

Setting up Your Environment

Before we start scraping Etsy product listings, we have to make sure our setup is ready. This means we need to install the tools and libraries we’ll need, pick the right Integrated Development Environment (IDE), and get the important API credentials.

Installing Python and Required Libraries

The first step in setting up your environment is to ensure you have Python installed on your system. If you haven’t already installed Python, you can download it from the official website at python.org.

Once you have Python installed, the next step is to make sure you have the required libraries for this project. In our case, we’ll need three main libraries:

- Crawlbase Python Library: This library will be used to make HTTP requests to the Etsy search page using the Crawlbase Crawling API. To install it, you can use pip with the following command:

pip install crawlbase

- Beautiful Soup 4: Beautiful Soup is a Python library that makes it easy to scrape and parse HTML content from web pages. It’s a critical tool for extracting data from the web. You can install it using pip:

pip install beautifulsoup4

- Pandas: Pandas is a powerful data manipulation and analysis library in Python. We’ll use it to store and manage the scraped data. Install pandas with pip:

pip install pandas
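To confirm that the three installs above succeeded, a small check like the following can help. It is a minimal sketch using only the standard library; the helper name `missing_packages` is made up for illustration. Note that the `beautifulsoup4` package is imported under the module name `bs4`.

```python
from importlib.util import find_spec

# Map pip package names to the module names they are imported under.
REQUIRED_MODULES = {
    "crawlbase": "crawlbase",
    "beautifulsoup4": "bs4",
    "pandas": "pandas",
}


def missing_packages(required=REQUIRED_MODULES):
    """Return the pip package names whose import module cannot be found."""
    return [pkg for pkg, module in required.items() if find_spec(module) is None]


missing = missing_packages()
if missing:
    print("Missing packages, install with: pip install " + " ".join(missing))
else:
    print("All required packages are installed.")
```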

Choosing the Right Development IDE

An Integrated Development Environment (IDE) provides a coding environment with features like code highlighting, auto-completion, and debugging tools. While you can write Python code in a simple text editor, an IDE can significantly improve your development experience.

Here are a few popular Python IDEs to consider:

PyCharm: PyCharm is a robust IDE with a free Community Edition. It offers features like code analysis, a visual debugger, and support for web development.

Visual Studio Code (VS Code): VS Code is a free, open-source code editor developed by Microsoft. Its vast extension library makes it versatile for various programming tasks, including web scraping.

Jupyter Notebook: Jupyter Notebook is excellent for interactive coding and data exploration. It’s commonly used in data science projects.

Spyder: Spyder is an IDE designed for scientific and data-related tasks. It provides features like a variable explorer and an interactive console.

Signing up for Crawlbase Crawling API and Obtaining Correct Token

To use the Crawlbase Crawling API for making HTTP requests to the Etsy search page, you’ll need a Crawlbase account. Follow these steps to set one up:

- Visit the Crawlbase Website: Open your web browser and navigate to the Crawlbase website Signup page to begin the registration process.

- Provide Your Details: You’ll be asked to provide your email address and create a password for your Crawlbase account. Fill in the required information.

- Verification: After submitting your details, you may need to verify your email address. Check your inbox for a verification email from Crawlbase and follow the instructions provided.

- Login: Once your account is verified, return to the Crawlbase website and log in using your newly created credentials.

- Access Your API Token: You’ll need an API token to use the Crawlbase Crawling API. You can find your tokens here.

Note: Crawlbase provides two types of tokens: the Normal Token (TCP) for static websites and the JavaScript Token (JS) for dynamic or JavaScript-driven websites. Since Etsy relies heavily on JavaScript for dynamic content loading, we will opt for the JavaScript Token. To kick things off smoothly, Crawlbase generously offers an initial allowance of 1,000 free requests for the Crawling API.
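Once you have the JavaScript token, it is usually safer to keep it out of your source code. The sketch below reads it from an environment variable; the variable name `CRAWLBASE_JS_TOKEN` and the helper `load_token` are assumptions made for this example, not something Crawlbase requires.

```python
import os


def load_token(env_var="CRAWLBASE_JS_TOKEN"):
    """Read the Crawlbase token from an environment variable.

    The variable name is a convention chosen for this example.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Crawlbase JavaScript token."
        )
    return token
```

You would then pass `load_token()` wherever the later snippets use a hard-coded token string.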

With Python and the required libraries installed, the IDE of your choice set up, and your Crawlbase API credentials in hand, you’re well-prepared to start scraping Etsy product listings. In the next sections, we’ll dive deeper into understanding Etsy’s website structure and using the Crawlbase Crawling API effectively.

Understanding Etsy’s Website Structure

Before we start scraping Etsy’s product listings, it’s crucial to have a solid grasp of Etsy’s website structure. Understanding how the web page is organized and identifying the specific elements you want to scrape will set the stage for a successful scraping operation.

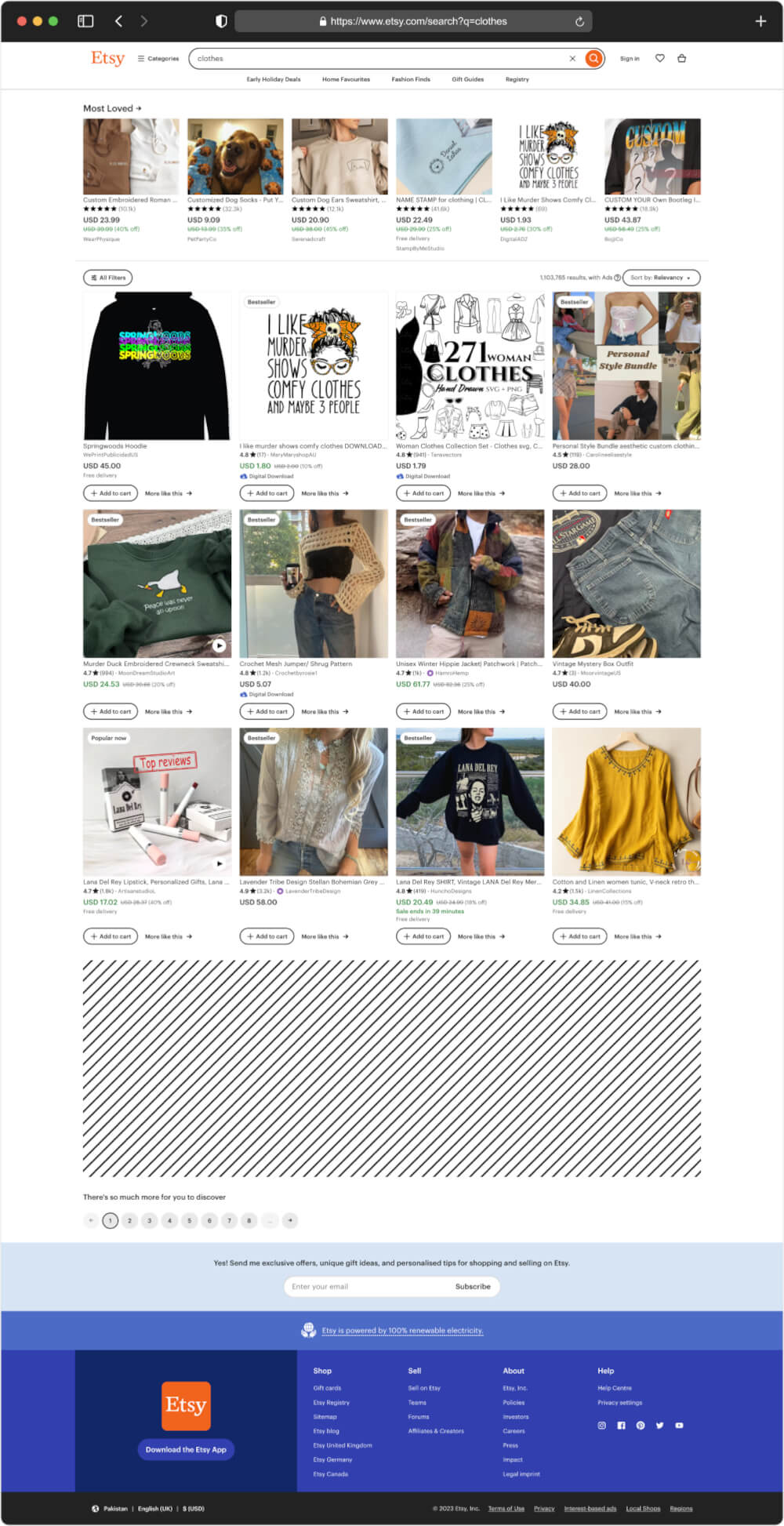

Components of Etsy’s Search Page

Etsy’s search page is where the magic happens. It’s the gateway to a vast array of product listings. But what does it actually look like under the hood? Let’s break down some of the key components:

- Search Bar: This is where users can input search queries to find specific items or categories. As a scraper, you can automate searches by sending HTTP requests with different search terms.

- Search Results: The search results are the heart of the page. They contain individual product listings, each with its own set of information. Understanding the structure of these listings is pivotal for efficient scraping.

- Pagination: Etsy often divides search results into multiple pages. To capture a comprehensive dataset, you’ll need to navigate through these pages, which is one of the challenges we’ll address in this guide.

- Product Listings: Each product listing typically includes details like the product’s title, price, description, seller information, and more. These are the elements we’ll be targeting for extraction.
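Automating searches with different terms, as mentioned for the search bar, mostly comes down to building the right URL. Here is a minimal sketch, assuming Etsy’s search URL takes the query in a `q` parameter and the page number in a `page` parameter (the form used later in this guide); `build_search_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode

ETSY_SEARCH_URL = "https://www.etsy.com/search"


def build_search_url(query, page=1):
    """Build an Etsy search URL for a query, adding a page number when needed."""
    params = {"q": query}
    if page > 1:
        params["page"] = page
    # urlencode escapes spaces and special characters in the search term.
    return f"{ETSY_SEARCH_URL}?{urlencode(params)}"


for term in ["clothes", "handmade jewelry"]:
    print(build_search_url(term))
```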

Identifying the Elements You Want to Scrape

Now that we know what Etsy’s search page looks like, let’s focus on the elements we want to scrape. The key elements of interest typically include:

- Product Title: This is the name or title of the product. It’s essential for identifying and categorizing listings.

- Price: The product’s price is crucial for both sellers and buyers. Scraping prices allows for price trend analysis and informed purchasing decisions.

- Product Description: The product description provides valuable information about the item, helping potential buyers make informed choices.

- Seller Information: Knowing who the seller is and where they are located can be relevant for both sellers and buyers. This information can be valuable for market analysis.

- Product Images: Images are an integral part of online shopping. Scraping image URLs allows you to visualize the products and use the images in your analysis or applications.

- Product Rating and Reviews: Ratings and reviews can provide insights into the quality of the product and the seller’s reputation. Scraping this data is valuable for assessing the market.

By identifying and understanding these elements, you’ll be well-prepared to craft your scraping strategy. In the next sections, we’ll dive into the technical aspects of using Python and the Crawlbase Crawling API to collect this data and ensure you have all the knowledge required to extract meaningful insights from Etsy’s dynamic website.
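One way to keep these elements organized is to give each scraped listing a fixed shape. The record below is only a sketch: the class name, field names, and sample values are illustrative, and every field except the title is optional because a search page may not expose all of them.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class ProductListing:
    """One scraped listing; fields mirror the elements listed above."""
    title: str
    price: Optional[str] = None
    description: Optional[str] = None
    seller: Optional[str] = None
    image_url: Optional[str] = None
    rating: Optional[str] = None


# Placeholder values, not real Etsy data.
listing = ProductListing(title="Example handmade mug", price="$18.00")
print(asdict(listing))
```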

Introduction to Crawlbase Crawling API

The Crawlbase Crawling API is a web crawling tool designed to handle complex web scraping scenarios like Etsy’s dynamic web pages. It provides a simplified way to access web content while bypassing common challenges such as JavaScript rendering, CAPTCHAs, and anti-scraping measures.

One of the notable features of the Crawlbase Crawling API is IP rotation, a technique that helps prevent IP blocks and CAPTCHA challenges. By rotating IP addresses, the API ensures that your web scraping requests appear as if they come from different locations, making it more challenging for websites to detect and block scraping activities.

With the Crawlbase Crawling API, you can send requests to websites and receive structured data in response. It takes care of rendering JavaScript, processing dynamic content, and returning the HTML content ready for parsing.

This API offers a straightforward approach to web scraping, making it an excellent choice for projects like ours, where the goal is to extract data from dynamic websites efficiently.

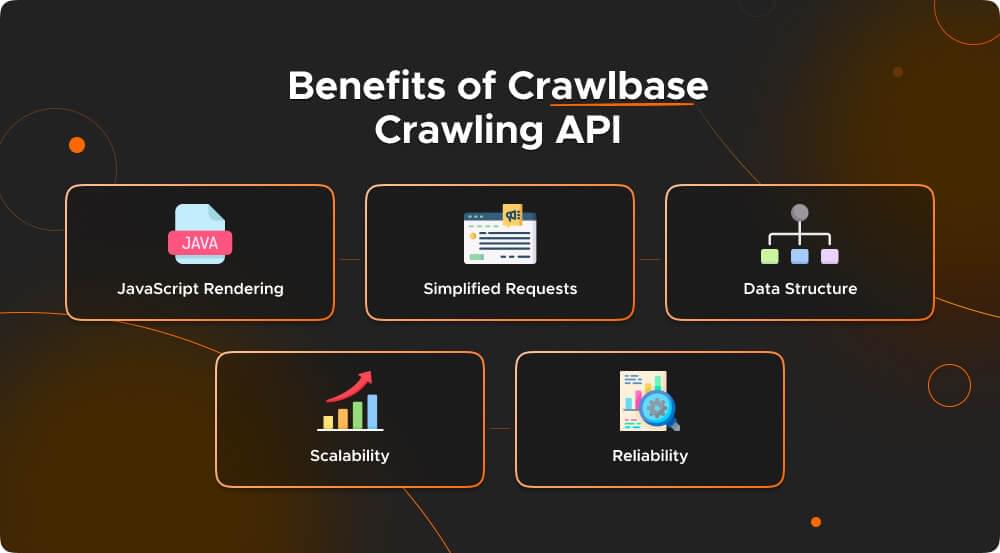

Benefits of Crawlbase Crawling API

The Crawlbase Crawling API offers several advantages, with IP rotation playing a significant role in overcoming common web scraping challenges:

- JavaScript Rendering: It handles websites relying heavily on JavaScript for rendering content. This is crucial for platforms like Etsy, where dynamic content is common.

- Simplified Requests: The API abstracts away the complexities of handling HTTP requests, cookies, and sessions. You can focus on crafting your scraping logic while the API takes care of the technical details.

- Data Structure: The data you receive from the API is typically well-structured, making it easier to parse and extract the information you need.

- Scalability: It allows for scalable web scraping by handling multiple requests simultaneously, which can be advantageous when dealing with large volumes of data.

- Reliability: The Crawlbase Crawling API is designed to be reliable and to provide consistent results, which is essential for any web scraping project.

Crawlbase Python Library

The Crawlbase Python library is a lightweight, dependency-free wrapper for Crawlbase APIs. It simplifies tasks like making HTTP requests to websites, handling IP rotation, and getting past web obstacles such as CAPTCHAs. To start using the library, follow these steps:

- Import: To use the Crawling API from the Crawlbase library, start by importing the CrawlingAPI class:

from crawlbase import CrawlingAPI

- Initialization: With your Crawlbase API token in hand, initialize the CrawlingAPI class. This connects your code to the Crawlbase service:

api = CrawlingAPI({ 'token': 'YOUR_CRAWLBASE_TOKEN' })

- Sending a Request: Once the CrawlingAPI instance is initialized with your token, you can send requests to your target websites. Here’s an example GET request for scraping iPhone listings from Etsy’s search page:

response = api.get('https://www.etsy.com/search?q=iphone')

With the Crawlbase Python library as your trusty companion, you can confidently embark on your web scraping odyssey. For a deeper dive into its capabilities, you can explore further details here.

In the upcoming sections, we’ll demonstrate how to leverage the Crawlbase Crawling API and the Python Library to crawl Etsy’s search page, extract product listings, and store the data for analysis.

Scraping Etsy Product Listings

With our environment set up and equipped with the Crawlbase Crawling API, it’s time to dive into the core of our web scraping adventure. In this section, we’ll explore the steps involved in scraping Etsy product listings, from crawling Etsy’s search page HTML to handling pagination for multiple pages of results.

Crawling Etsy Search Page HTML

The journey begins with making a request to Etsy’s search page using the Crawlbase Crawling API. By sending an HTTP request to Etsy’s search page, we retrieve the raw HTML content of the page. This is the starting point for our data extraction process.

The Crawlbase Crawling API takes care of JavaScript rendering, ensuring that we receive the fully loaded web page. This is essential because many elements of Etsy’s product listings are loaded dynamically using JavaScript. Below is the Python script to crawl the Etsy search page HTML for the search query “clothes.”

from crawlbase import CrawlingAPI

This Python code snippet demonstrates how to use the CrawlingAPI from the “crawlbase” library to retrieve data from an Etsy search page:

- You initialize the CrawlingAPI class with your API token.

- You set some options for the crawling API, including page and AJAX wait times.

- You construct the URL of the Etsy search page for clothes.

Using the API’s GET request, you fetch the page’s content. If the request is successful (status code 200), you decode the HTML content from the response and scrape it.
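Because the script above is shown only in part, here is a minimal sketch of the steps just described. It assumes that `api.get(url, options)` returns a dictionary with a `status_code` entry and a `body` entry holding the page as bytes. The option values (`page_wait` of 2000 milliseconds, `ajax_wait` set to `'true'`) and the function name `fetch_search_html` are choices made for this example. The `api` object is passed in so the function can be tried with any object that has a compatible `get` method.

```python
from urllib.parse import quote_plus


def fetch_search_html(api, query, options=None):
    """Fetch the rendered HTML of an Etsy search page, or return None on failure."""
    # Wait times give JavaScript-loaded listings a chance to render (assumed values).
    options = options or {"page_wait": 2000, "ajax_wait": "true"}
    url = f"https://www.etsy.com/search?q={quote_plus(query)}"
    try:
        response = api.get(url, options)
    except Exception as exc:
        print(f"API request error: {exc}")
        return None
    if response.get("status_code") != 200:
        print(f"Request failed with status code {response.get('status_code')}")
        return None
    body = response["body"]
    # The body is assumed to arrive as bytes; decode it before parsing.
    return body.decode("utf-8", errors="replace") if isinstance(body, bytes) else body


# Usage with the real client (requires a valid JavaScript token):
# from crawlbase import CrawlingAPI
# api = CrawlingAPI({"token": "YOUR_CRAWLBASE_JS_TOKEN"})
# html = fetch_search_html(api, "clothes")
```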

Example Output:

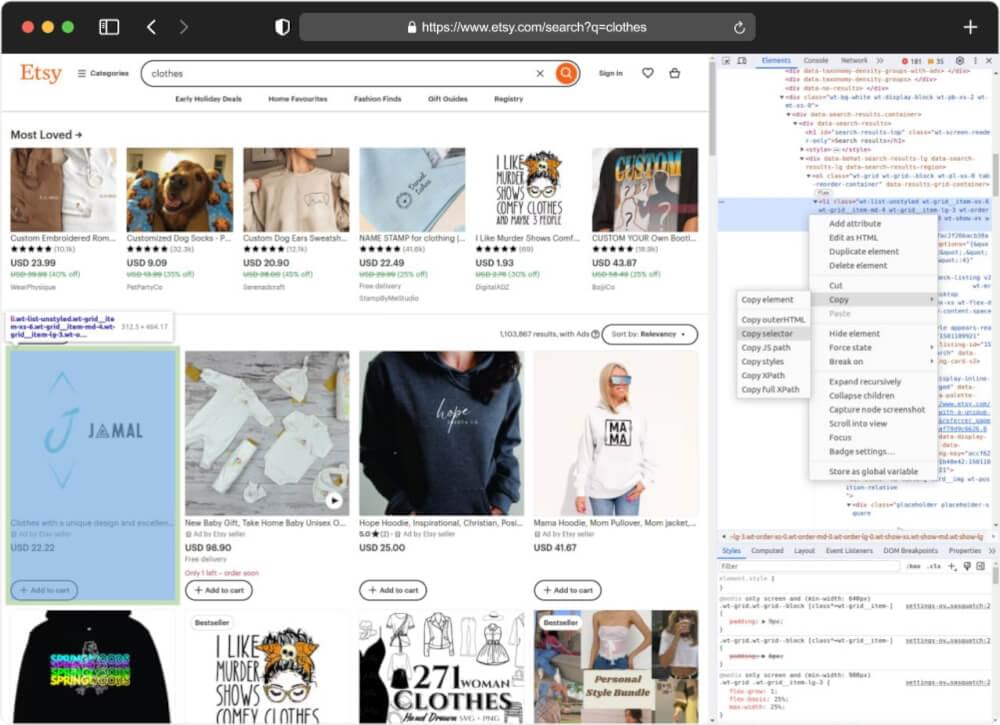

Inspecting HTML to Get CSS Selectors

Once we have the HTML content of the search page, the next step is to inspect the HTML structure to identify the CSS selectors for the elements we want to scrape. Your browser’s developer tools are the right instrument for this. Here’s how to inspect the HTML structure and find those CSS selectors:

- Access the Website: Head to the Etsy website and visit a search page that captures your interest.

- Right-Click and Inspect: Right-click on the element of the page you want to get information from. Choose “Inspect” or “Inspect Element” from the menu that appears. This will open developer tools in your browser.

- Discover the HTML Source: Look for the HTML source code in the developer tools. Move your mouse over different parts of the code, and the corresponding area on the webpage will light up.

- Identify CSS Selectors: To get CSS selectors for a specific element, right-click on it in the developer tools and choose “Copy” > “Copy selector.” This will copy the CSS selector on your clipboard, which you can use for web scraping.

Once you have the selectors, you can start collecting data from Etsy’s search page with your scraper. Keep in mind that the selectors we’re talking about here worked when we wrote this, but Etsy might update their site, which could make the code stop working later on.
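A selector copied from developer tools tends to describe the exact position of one element, which is part of why it breaks when a site changes. The sketch below contrasts a copied-style selector with a shorter class-based one. The HTML and class names are invented for illustration and are not Etsy’s real markup.

```python
from bs4 import BeautifulSoup

# Invented sample markup standing in for a search results page.
SAMPLE_HTML = """
<div id="results">
  <ul>
    <li class="listing-card"><h3 class="listing-title">Linen shirt</h3></li>
    <li class="listing-card"><h3 class="listing-title">Wool scarf</h3></li>
  </ul>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Selector in the style "Copy selector" produces: exact but position-dependent.
copied = "#results > ul > li:nth-child(1) > h3"
# Hand-shortened selector: matches every title regardless of position.
general = "li.listing-card h3.listing-title"

first_title = soup.select_one(copied).get_text(strip=True)
all_titles = [h3.get_text(strip=True) for h3 in soup.select(general)]
print(first_title)
print(all_titles)
```

In practice you would often copy a selector to locate the element, then trim it down to the class names that identify every listing.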

Retrieving Product Listings Data from HTML

With CSS selectors in hand, we can now write Python code to parse the HTML content and extract the desired data. We’ll use Beautiful Soup, a popular HTML parsing library, to traverse the HTML and gather information from the specified elements.

For example, you can extract product titles, prices, ratings, and other relevant details from the HTML content. The data retrieved is then structured and can be stored for further analysis or processing. Let us extend our previous script and scrape this information from HTML.

# Import necessary libraries

The script then focuses on extracting product details. It does so by identifying and selecting all product containers within the HTML structure. For each product container, it creates a dictionary to store information such as the product’s title, price, and rating. This information is extracted by selecting specific HTML elements that contain these details.

The scraped product details are aggregated into a list, and the script converts this list into a JSON representation with proper indentation, making the data structured and readable.

Example Output:

[
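The extraction step described above (select every product container, build a dictionary per product, then dump the list as indented JSON) can be sketched as follows. The sample HTML and the selectors `div.product-card`, `h3.title`, `span.price`, and `span.rating` are placeholders invented for this example; replace them with the selectors you found while inspecting the live page.

```python
import json

from bs4 import BeautifulSoup

# Invented sample markup; the second product deliberately has no rating.
SAMPLE_HTML = """
<div class="product-card">
  <h3 class="title">Linen shirt</h3>
  <span class="price">$35.00</span>
  <span class="rating">4.8</span>
</div>
<div class="product-card">
  <h3 class="title">Wool scarf</h3>
  <span class="price">$22.50</span>
</div>
"""


def _text(container, selector):
    """Return the stripped text of the first match, or None if it is missing."""
    node = container.select_one(selector)
    return node.get_text(strip=True) if node else None


def parse_listings(html):
    """Extract title, price, and rating from every product container."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for container in soup.select("div.product-card"):
        products.append({
            "title": _text(container, "h3.title"),
            "price": _text(container, "span.price"),
            "rating": _text(container, "span.rating"),
        })
    return products


print(json.dumps(parse_listings(SAMPLE_HTML), indent=2))
```

Returning `None` for a missing element keeps one incomplete listing from stopping the whole run.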

Handling Pagination for Multiple Pages of Results

Etsy’s search results are often divided across multiple pages, each containing a set of product listings. To ensure that we gather a comprehensive dataset, we need to handle pagination. This involves iterating through the results pages and making additional requests as needed. Handling pagination is essential for obtaining a complete view of Etsy’s product listings and ensuring your analysis is based on a comprehensive dataset. Let us update our previous script to handle pagination.

import json

This code handles pagination by first determining the total number of pages in the search results and then systematically scraping data from each page. The get_total_pages function retrieves the total number of pages by making an initial GET request to the Etsy search page and parsing the HTML to extract the total page count. It wraps the request and the parsing in error handling, so a failed request or an unexpected page layout does not crash the script.

The scrape_page function is responsible for scraping data from a single page. It also uses GET requests to fetch the HTML content of a specific page and then uses BeautifulSoup to parse the content. Product details are extracted from the product containers on the page, similar to the previous script. It also provides error handling to handle exceptions during the scraping process.

In the main function, the code first determines the total number of pages by calling get_total_pages, and then it iterates through each page using a for loop, constructing the URL for each page based on the page number. The scrape_page function is called for each page to extract product details, and these details are collected and appended to the all_product_details list. By doing this for all pages, the code effectively handles pagination, ensuring that data from each page is scraped and collected.

After all pages have been processed, you can further work with the accumulated all_product_details list as needed for analysis or storage. This approach allows for comprehensive data scraping from a paginated website, such as Etsy.
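The page loop described above can be sketched independently of how pages are fetched or parsed. In this minimal version the total page count is passed in rather than read from the HTML, and `fetch_html` and `parse_listings` are caller-supplied functions (hypothetical names). The `page` query parameter is an assumption about Etsy’s URL scheme.

```python
from urllib.parse import urlencode


def page_url(query, page):
    """Build the search URL for one results page (assumes a 'page' parameter)."""
    return "https://www.etsy.com/search?" + urlencode({"q": query, "page": page})


def scrape_all_pages(query, total_pages, fetch_html, parse_listings):
    """Fetch and parse each results page, collecting every product found."""
    all_product_details = []
    for page in range(1, total_pages + 1):
        try:
            html = fetch_html(page_url(query, page))
        except Exception as exc:
            # One failed page should not discard the pages already collected.
            print(f"Skipping page {page}: {exc}")
            continue
        if html:
            all_product_details.extend(parse_listings(html))
    return all_product_details
```

Passing the fetch and parse steps in as functions keeps the loop easy to try out with stand-ins before spending API requests.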

Storing the Scraped Data

After successfully scraping data from Etsy’s search pages, the next crucial step is storing this valuable information for future analysis and reference. In this section, we will explore two common methods for data storage: saving scraped data in a CSV file and storing it in an SQLite database. These methods allow you to organize and manage your scraped data efficiently.

Storing Scraped Data in a CSV File

CSV is a widely used format for storing tabular data. It’s a simple and human-readable way to store structured data, making it an excellent choice for saving your scraped Etsy product listing data.

We’ll extend our previous web scraping script to include a step for saving the scraped data into a CSV file using the popular Python library, pandas. Here’s an updated version of the script:

import pandas as pd

In this updated script, we’ve introduced pandas, a powerful data manipulation and analysis library. After scraping and accumulating the product listing details in the all_product_details list, we create a pandas DataFrame from this data. Then, we use the to_csv method to save the DataFrame to a CSV file named “etsy_product_data.csv” in the current directory. Setting index=False ensures that we don’t save the DataFrame’s index as a separate column in the CSV file.

You can easily work with and analyze your scraped data by employing pandas. This CSV file can be opened in various spreadsheet software or imported into other data analysis tools for further exploration and visualization.
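The CSV step on its own is short. The sketch below assumes `all_product_details` is a list of dictionaries with the same keys, as produced by the scraping step; the function name `save_to_csv` is made up for this example.

```python
import pandas as pd


def save_to_csv(all_product_details, path="etsy_product_data.csv"):
    """Write the scraped product dictionaries to a CSV file and return the DataFrame."""
    df = pd.DataFrame(all_product_details)
    # index=False keeps the DataFrame's row index out of the file.
    df.to_csv(path, index=False)
    return df


# Usage, once scraping has filled the list:
# save_to_csv(all_product_details)
```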

Storing Scraped Data in an SQLite Database

If you prefer a more structured and query-friendly approach to data storage, SQLite is a lightweight, serverless database engine that can be a great choice. You can create a database table to store your scraped data, allowing for efficient data retrieval and manipulation. Here’s how you can modify the script to store data in an SQLite database:

import json

In this updated code, we’ve added functions for creating the SQLite database and table (create_database) and saving the scraped data to the database (save_to_database). The create_database function checks if the database and table exist and creates them if they don’t. The save_to_database function inserts the scraped data into the ‘products’ table.

By running this code, you’ll store your scraped Etsy product listing data in an SQLite database named ‘etsy_products.db’. You can later retrieve and manipulate this data using SQL queries or access it programmatically in your Python projects.
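The two functions described above might look like the following. This is a sketch rather than the original script: the column layout (title, price, rating stored as text) and the choice to return the connection from `create_database` are assumptions made for this example.

```python
import sqlite3


def create_database(db_path="etsy_products.db"):
    """Open the database and create the products table if it does not exist."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS products (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            title TEXT,
            price TEXT,
            rating TEXT
        )
        """
    )
    conn.commit()
    return conn


def save_to_database(conn, products):
    """Insert scraped product dictionaries and return how many rows were added."""
    rows = [(p.get("title"), p.get("price"), p.get("rating")) for p in products]
    # Using the connection as a context manager commits on success.
    with conn:
        conn.executemany(
            "INSERT INTO products (title, price, rating) VALUES (?, ?, ?)", rows
        )
    return len(rows)
```

Parameter placeholders (`?`) let SQLite handle quoting, which matters because product titles often contain apostrophes and quotes.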

Final Words

This guide has provided the insights needed to scrape Etsy product listings effectively using Python and the Crawlbase Crawling API. If you want to extend your expertise to extracting product information from other e-commerce platforms such as Walmart, eBay, and AliExpress, we encourage you to consult the supplementary guides provided.

We understand that web scraping can present challenges, and it’s important that you feel supported. Therefore, if you require further guidance or encounter any obstacles, please do not hesitate to reach out. Our dedicated team is committed to assisting you throughout your web scraping endeavors.

Frequently Asked Questions

Q. What is web scraping, and is it legal for Etsy?

Web scraping is the automated process of extracting data from websites by fetching and parsing their HTML content. It can be a valuable tool for various purposes, including data analysis and market research.

When it comes to the legality of web scraping on platforms like Etsy, it depends on whether the practice aligns with the website’s terms and policies. While web scraping itself is not inherently illegal, websites may have terms of service that either allow or restrict scraping. Etsy, like many online platforms, has its terms of service and a robots.txt file that outlines rules for web crawlers and scrapers. It’s crucial to review and comply with these guidelines when scraping Etsy. Non-compliance can lead to legal consequences or being blocked from accessing the website.
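Checking a URL against robots.txt rules can be automated with Python’s standard library. The sketch below parses rules from a string, so it runs without network access. The sample rules and the `example.com` URLs are invented and do not reflect Etsy’s actual robots.txt, which you should fetch and read yourself.

```python
from urllib.robotparser import RobotFileParser

# Invented rules for illustration only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Allow: /search
"""


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text permits fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, "https://www.example.com/search?q=clothes"))
print(is_allowed(SAMPLE_ROBOTS_TXT, "https://www.example.com/cart"))
```

A robots.txt check covers only crawler rules; the site’s terms of service still need to be read separately.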

Q. How does IP rotation work in Crawlbase Crawling API, and why is it essential for web scraping?

IP rotation in the Crawlbase Crawling API involves dynamically changing the IP address used for each web scraping request. This process is essential for successful web scraping because it helps circumvent common challenges such as IP bans, blocks, and CAPTCHA challenges. By continually rotating IP addresses, the API makes it considerably more challenging for websites to identify and thwart scraping activities. This proactive approach ensures the reliability and success of your web scraping operations, allowing you to access and collect the data you need without interruptions or impediments.

Q. What are some common challenges when scraping dynamic websites like Etsy, and how does Crawlbase Crawling API address them?

Scraping dynamic websites like Etsy presents several challenges, primarily because these sites rely heavily on JavaScript to load and display content. Dynamic content loading can make data extraction difficult for traditional web scraping methods. The Crawlbase Crawling API is built to address this. It renders JavaScript and lets you include query parameters like “ajax_wait” or “page_wait.” These parameters control when the HTML is fetched after JavaScript rendering, so that you receive fully loaded web pages ready for parsing. By abstracting away the complexities of handling HTTP requests, cookies, and sessions, the API simplifies your scraping code, making it cleaner and more straightforward. Furthermore, it provides well-structured data in response to your requests, which significantly streamlines parsing and extraction and helps you draw meaningful insights from dynamic websites.

Q. How do you handle pagination when scraping Etsy product listings, and why is it necessary?

Effective pagination handling is a critical aspect of web scraping Etsy product listings. Etsy often divides search results into multiple pages to accommodate a large number of product listings. To obtain a comprehensive dataset that includes all relevant listings, you must adeptly handle pagination. This involves systematically iterating through the various results pages and making additional requests as needed. Pagination handling is essential because it ensures you capture the entirety of Etsy’s product listings, preventing any omissions or gaps in your data. Failing to address pagination can lead to incomplete or inaccurate data, compromising the quality and reliability of your scraping results.