Scraping Walmart Best Sellers is a strategic move for anyone interested in the latest market trends. By utilizing the Crawlbase Crawling API and JavaScript, you can easily extract information about the most popular products on Walmart’s online store.

This approach is particularly useful for retailers who need to keep their finger on the pulse of consumer demand or for shoppers keen on discovering trending items. The combination of JavaScript and the Crawlbase API simplifies the process, allowing you to automate data retrieval and consistently stay updated with the best-selling products on Walmart.

Our step-by-step guide is designed to help you efficiently collect the data you need, ensuring that you’re always informed and ready to make savvy decisions in the dynamic environment of online retail.

Table of Contents

- Understanding Walmart Best Sellers

  - What are Walmart Best Sellers?

  - The Significance of Scraping This Data

  - Identifying the Specific Data You Want to Extract

- Scrape Walmart Best Sellers: A Step-by-Step Guide

  - Setting Up the Environment

  - Fetching HTML using the Crawling API

  - Scrape meaningful data with Crawling API Parameters

  - Scrape Walmart Best Selling Products Details

- Data Extraction Tips: Strategies for Efficiently Scraping Walmart Best Sellers

- Final Words

- Frequently Asked Questions

Understanding Walmart Best Sellers

Before you start scraping Walmart’s Best Sellers, it’s essential to understand what this term means, why it’s important, and what kind of data you can extract from it.

1. What are Walmart Best Sellers?

Walmart Best Sellers are the products currently selling fastest on Walmart’s online platform. These are the top-selling items that are in high demand among Walmart’s customers. They can include a wide range of products, from electronics and clothing to home goods and more.

2. The Significance of Scraping This Data

- Market Insights: Scraping Walmart Best Sellers provides valuable market insights. It helps businesses and individuals understand what products are trending and in high demand, which can be crucial for making informed decisions in e-commerce and retail.

- Price Tracking: By monitoring Best Sellers, you can keep track of price changes, discounts, and promotions. This information can be used for competitive pricing strategies and finding the best deals.

- Product Research: Researchers and analysts use this data to study consumer preferences, identify emerging trends, and evaluate the performance of different product categories over time.

- Content Creation: Content creators, such as bloggers and vloggers, often use Best Sellers’ data to create engaging content, like product reviews and recommendations.

3. Identifying the Specific Data You Want to Extract

When scraping Walmart Best Sellers, the specific data you might want to extract includes:

- Product Names: The names of the top-selling products.

- Prices: The current prices of these products.

- Ratings: Customer ratings and reviews for each product.

- Descriptions: Descriptions or details about the products.

- URLs: Links to the product pages on Walmart’s website.

You can choose to extract all or some of this information based on your goals and the insights you want to gain. Having a clear plan for what data you need is essential, as this will guide your scraping efforts and help you use the information effectively.
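As a minimal sketch of that planning step, the snippet below keeps only the fields you have decided you need from a scraped record. The field names (`name`, `price`, `rating`, `description`, `url`) and the sample product are assumptions made for illustration; they simply mirror the list above and are not a fixed Walmart or Crawlbase schema.

```javascript
// Hypothetical field names for one best-seller record; adjust to your plan.
const ALL_FIELDS = ['name', 'price', 'rating', 'description', 'url'];

// Return a copy of `product` containing only the requested fields.
// Unknown field names are rejected so that typos fail loudly.
function pickFields(product, fields = ALL_FIELDS) {
  const picked = {};
  for (const field of fields) {
    if (!ALL_FIELDS.includes(field)) {
      throw new Error(`Unknown field: ${field}`);
    }
    // Missing values become null so every record has the same shape.
    picked[field] = product[field] ?? null;
  }
  return picked;
}

// Made-up sample record, used only to demonstrate the helper.
const sample = {
  name: 'Example Headphones',
  price: 49.99,
  rating: 4.5,
  description: 'Wireless over-ear headphones',
  url: 'https://www.walmart.com/ip/example',
};

// A price tracker, for instance, might only need these three fields.
console.log(pickFields(sample, ['name', 'price', 'url']));
```

Deciding on the field list up front keeps every saved record the same shape, which makes later comparison and analysis simpler.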

Understanding Walmart Best Sellers and their associated data is the first step in your scraping journey. With this knowledge, you can move on to using the Crawlbase Crawling API and JavaScript to collect the data you need for your specific purposes.

Scrape Walmart Best Sellers: A Step-by-Step Guide

Setting Up the Environment

Start by signing up for a free Crawlbase account. Once you are signed in, you can find your private token in the account documentation section of your Crawlbase account.

To install the Crawlbase Node.js library, follow these steps:

Make sure you have Node.js installed on your computer. You can download and install it from the official Node.js website if you don’t have it.

After you’ve confirmed that Node.js is installed, open your terminal and enter the following command:

    npm install crawlbase

This command will download and install the Crawlbase Node.js library on your system so you can use it for your web scraping project.

To create a file named walmart-scraper.js, you can use a text editor or an integrated development environment (IDE). Here’s how to make the file using a standard command-line approach:

Run this command:

    touch walmart-scraper.js

Executing this command will generate an empty walmart-scraper.js file in your current directory. You can then open this file with your preferred text editor and add your JavaScript code.
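One optional setup step, sketched below, is to keep your private token out of the script itself by reading it from an environment variable. The variable name `CRAWLBASE_TOKEN` and the helper `loadToken` are hypothetical choices for this sketch, not something Crawlbase requires.

```javascript
// Read the Crawlbase token from an environment variable instead of
// hard-coding it. CRAWLBASE_TOKEN is a hypothetical name for this sketch.
function loadToken(env = process.env) {
  const token = (env.CRAWLBASE_TOKEN || '').trim();
  if (!token) {
    throw new Error('Set the CRAWLBASE_TOKEN environment variable first.');
  }
  return token;
}
```

With this in place you could start the script as `CRAWLBASE_TOKEN=your_token node walmart-scraper.js` and call `loadToken()` wherever the token is needed.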

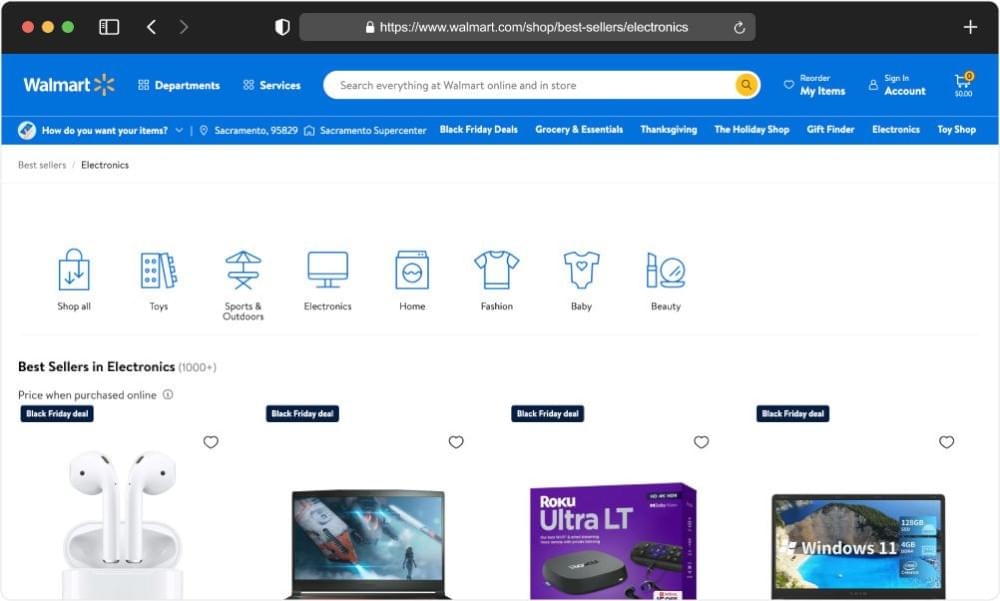

Fetching HTML using the Crawling API

You now have your API credentials, have installed the Crawlbase Node.js library, and have created a file called walmart-scraper.js. Next, pick the Walmart best sellers page you want to scrape. In this example, we’ve chosen the Walmart best sellers page for the electronics category.

To set up the Crawlbase Crawling API, follow a few simple steps:

- Ensure you’ve created the walmart-scraper.js file, as discussed in the previous part.

- Copy and paste the script below into that file.

- Run the script in your terminal using the command node walmart-scraper.js.

1 | // Import the Crawling API |

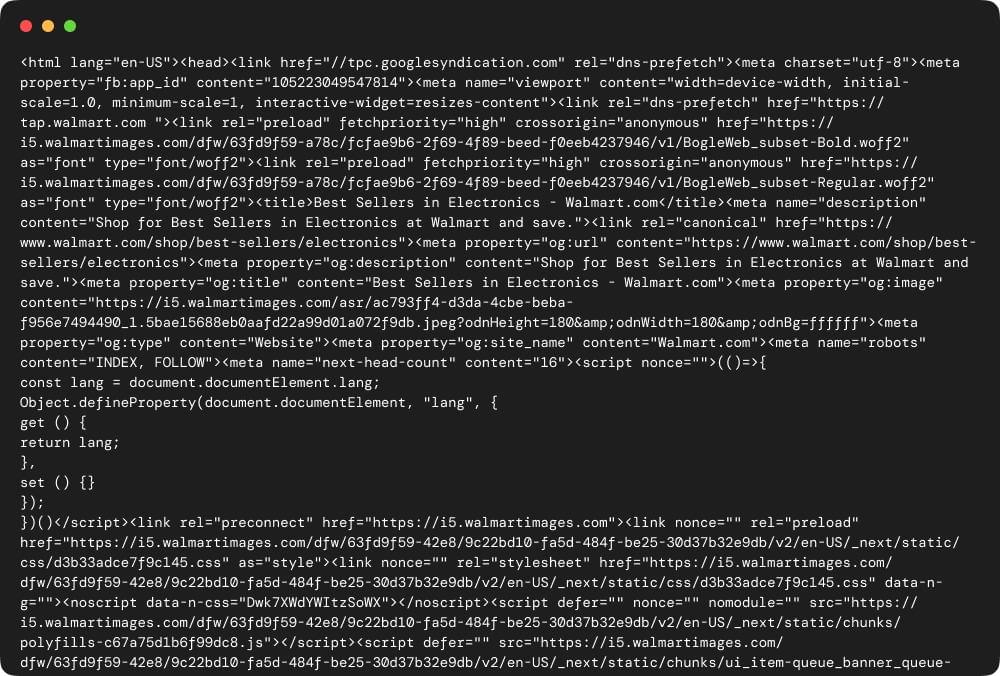

The instructions in the script above show you how to use Crawlbase’s Crawling API to get data from a Walmart best sellers page. You’ll need to set up the API token, specify the Walmart page you want to fetch, and then send a GET request. When you run this code, it will display the raw HTML content of the Walmart page on your console.
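The flow described above can be sketched roughly as follows. This is an illustration rather than the exact script: it assumes the `crawlbase` package exports a `CrawlingAPI` class whose `get(url)` resolves to an object with `statusCode` and `body`, and the helper name `fetchHtml` is made up for this sketch. The token and page URL are placeholders you need to fill in.

```javascript
// Fetch a page through a client that exposes get(url) and resolves to
// { statusCode, body }. This shape is assumed to match the CrawlingAPI
// client from the crawlbase package.
async function fetchHtml(api, pageUrl) {
  const response = await api.get(pageUrl);
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  return response.body;
}

// Intended usage (needs network access and a real token, so left commented):
// const { CrawlingAPI } = require('crawlbase');
// const api = new CrawlingAPI({ token: 'YOUR_CRAWLBASE_TOKEN' });
// fetchHtml(api, 'URL_OF_THE_BEST_SELLERS_PAGE')
//   .then((html) => console.log(html))
//   .catch((error) => console.error(error.message));
```

Passing the client in as an argument keeps the status check separate from the network call, so you can try the function with a stub before spending API requests.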

Scrape meaningful data with Crawling API Parameters

In the last example, we learned how to get the raw material for Walmart’s best-selling items: the HTML code from their website. However, raw HTML is rarely the end goal; what we usually need is the specific details on the page. The Crawlbase Crawling API has parameters that let us extract these key details from Walmart’s pages. To do this, use the “autoparse” parameter when calling the Crawling API. It returns the most important information from the page in JSON format. You can enable it by updating the walmart-scraper.js file. Let’s look at the next example to understand how it works.

1 | // Import the Crawling API |

JSON Response:

1 | { |
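As a hedged sketch of the autoparse request described in this section, the helper below asks for the parsed version of a page and decodes the JSON body. It assumes a client shaped like the `crawlbase` package's `CrawlingAPI`, where `get(url, options)` forwards `options` as request parameters and resolves to `{ statusCode, body }`; passing `autoparse` as the string `'true'` is also an assumption. The function name `fetchParsed` is hypothetical, and the structure of the returned JSON is not shown here because it depends on the page.

```javascript
// Request the auto-parsed JSON version of a page and decode it.
// `api` is assumed to behave like the crawlbase CrawlingAPI client:
// get(url, options) resolves to { statusCode, body }.
async function fetchParsed(api, pageUrl) {
  const response = await api.get(pageUrl, { autoparse: 'true' });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  try {
    // The body may arrive as a string or a Buffer; String() covers both.
    return JSON.parse(String(response.body));
  } catch (error) {
    throw new Error(`Response was not valid JSON: ${error.message}`);
  }
}

// Intended usage (needs network access and a real token, so left commented):
// const { CrawlingAPI } = require('crawlbase');
// const api = new CrawlingAPI({ token: 'YOUR_CRAWLBASE_TOKEN' });
// fetchParsed(api, 'URL_OF_THE_BEST_SELLERS_PAGE').then(console.log);
```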

Now that we have the JSON data from the Walmart best sellers page, let’s focus on extracting key details such as product titles, prices, and ratings. This step gives us a clearer picture of each product’s performance and of customer opinions. Let’s go ahead and see what useful information we can gather!

Scrape Walmart Best Selling Products Details

In this example, we’ll show you how to extract details of the best-selling products from the HTML content of the Walmart best-seller page that you scraped earlier. This involves two JavaScript modules: cheerio, a library commonly used to parse HTML for web scraping, and fs, the built-in Node.js module for file system operations.

The JavaScript code below uses the Cheerio library to scrape product details from a Walmart best-seller page. It reads HTML from a “walmart-scraper.js” file, loads it into Cheerio, and grabs info like product name, price, rating, reviews, and image URL. The script goes through each product container and saves the data in a JSON array.

1 | // Import the necessary libraries |

JSON Response:

1 | [ |
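Values pulled out of product containers usually arrive as display text, so a small clean-up pass can make the JSON array easier to analyze. The sketch below is an optional follow-up step, not part of the script above: the raw text formats in `rawProduct` are made-up examples, and `firstNumber` and `normalizeProduct` are hypothetical helper names.

```javascript
// Return the first number found in a piece of text, or null if none.
// Thousands separators are dropped first, so "1,234" is read as 1234.
function firstNumber(text) {
  const match = String(text ?? '').replace(/,/g, '').match(/\d+(?:\.\d+)?/);
  return match ? Number(match[0]) : null;
}

// Convert one scraped record (all strings) into typed values.
function normalizeProduct(raw) {
  return {
    name: String(raw.name ?? '').trim(),
    price: firstNumber(raw.price),
    rating: firstNumber(raw.rating),
    reviews: firstNumber(raw.reviews),
    image: raw.image ?? null,
  };
}

// Made-up example of text as it might appear on a page.
const rawProduct = {
  name: '  Example Tablet ',
  price: 'Now $1,299.00',
  rating: '4.5 out of 5 Stars',
  reviews: '1,234 reviews',
  image: 'https://example.com/tablet.jpg',
};

console.log(JSON.stringify([normalizeProduct(rawProduct)], null, 2));
```

Storing prices and ratings as numbers rather than strings lets you sort and compare products directly.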

Data Extraction Tips: Strategies for Efficiently Scraping Walmart Best Sellers

When scraping Walmart Best Sellers data, it’s essential to use effective strategies and follow best practices to ensure a smooth data collection process without encountering issues. Here are some key tips:

- Use Crawlbase Crawling API:

Leverage the Crawlbase Crawling API for structured data extraction. It simplifies the scraping process and provides reliable access to Walmart’s Best Sellers data.

- Implement Rate Limiting:

Introduce time delays between successive requests to Walmart’s website. This prevents overloading their servers and reduces the risk of getting blocked.

- Rotate User-Agents:

Vary the User-Agent headers in your requests to simulate different web browsers. This makes your scraping activity appear more like human browsing.

- Handle CAPTCHAs Gracefully:

Be prepared to deal with CAPTCHAs, which Walmart may use to verify if you’re a bot. Consider using CAPTCHA-solving services or automation techniques to address them.

- Keep Your Code Updated:

Regularly review and update your scraping code to accommodate any changes in Walmart’s website structure. This ensures the continued accuracy of your data extraction.

- Respect Robots.txt:

Comply with Walmart’s robots.txt file, which outlines guidelines for web crawling. Adhering to these rules helps you avoid legal and ethical concerns.

- Utilize Proxies:

Employ proxy servers to change your IP address, reducing the risk of IP bans and distributing your requests across multiple IPs.

- Verify Data Quality:

Consistently check the quality, accuracy, and currency of the data you scrape. Ensuring the reliability of your collected information is crucial.

- Ethical Data Handling:

Handle scraped data ethically, respecting user privacy and complying with copyright laws and terms of service.

- Test on Small Samples:

Before scaling up your scraping operations, test your code on a smaller sample to identify and address potential issues in a controlled environment.
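The rate-limiting tip above can be sketched as a loop that fetches pages one at a time, pauses between them, and retries a failed request with a growing delay. This is one possible approach under stated assumptions: `fetchOne` stands for whatever function you use to fetch a single page, and the name `fetchAllPolitely` and the default delay and retry values are illustrative, not recommendations from Walmart or Crawlbase.

```javascript
// Resolve after the given number of milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call fetchOne(url) for each URL in order, pausing delayMs between URLs.
// A failed request is retried up to maxRetries times, doubling the wait
// before each retry. Failures are recorded instead of aborting the run.
async function fetchAllPolitely(urls, fetchOne, { delayMs = 2000, maxRetries = 2 } = {}) {
  const results = [];
  for (let i = 0; i < urls.length; i += 1) {
    if (i > 0) await sleep(delayMs);
    let attempt = 0;
    for (;;) {
      try {
        results.push({ url: urls[i], ok: true, value: await fetchOne(urls[i]) });
        break;
      } catch (error) {
        if (attempt >= maxRetries) {
          results.push({ url: urls[i], ok: false, error: error.message });
          break;
        }
        await sleep(delayMs * 2 ** attempt);
        attempt += 1;
      }
    }
  }
  return results;
}
```

Starting with a short list of URLs, as the last tip suggests, is an easy way to check the delays and retry behavior before scaling up.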

Final Words

This tutorial has equipped you with the knowledge to efficiently scrape Walmart Best Sellers using JavaScript and the Crawlbase Crawling API. If you want to extend your data extraction skills to other major retail platforms such as Amazon, eBay, and AliExpress, our additional guides are at your disposal.

We recognize the complexities associated with web scraping and are committed to making your experience easier. Should you require further assistance or encounter any obstacles, the Crawlbase support team is on standby to provide expert help. We look forward to assisting you in your web scraping endeavors.

Frequently Asked Questions

What are Walmart’s best sellers?

Walmart’s best sellers are the products that are most popular and most in demand among its customers. These are the items that many people are buying at Walmart stores or online. Best sellers can include various products, from electronics to clothing, toys, and household essentials. By keeping an eye on Walmart’s best sellers, you can get a sense of what’s currently trending and see what other shoppers are enjoying. This information can help you make informed choices when you’re shopping at Walmart or looking for gift ideas.

How do I scrape data from Walmart?

To scrape data from Walmart, you can use JavaScript along with the Crawlbase Crawling API. This powerful combo allows you to automate the process of gathering information from Walmart’s website. You can extract product details, prices, ratings, and more. Start by writing a script in JavaScript that interacts with the Walmart website, and then utilize the Crawlbase Crawling API to access and collect the data. It’s a straightforward way to retrieve the information you need for price comparison, trend analysis, or any other purpose, making your data extraction tasks easier and more efficient.

Can I scrape data from Walmart?

Yes, you can scrape data from Walmart’s website. You can gather information like product details, prices, and more using web scraping tools and techniques. However, reviewing Walmart’s terms of service and robots.txt file is important to ensure you’re scraping within their guidelines and policies.

What is Walmart’s data strategy?

Walmart’s data strategy is all about using information to make better decisions. They collect data from in-store and online purchases and analyze it to understand customer preferences and improve their operations. By harnessing data, Walmart aims to offer customers what they want and streamline their business processes for efficiency.

What tools do I need for scraping Walmart Best Sellers?

You’ll need a programming environment, a web browser, Crawlbase Crawling API, and basic knowledge of JavaScript.