Ever found yourself browsing Flipkart and wondered how you could get insights from all those product listings? Or perhaps you’ve imagined tracking trends or comparing prices across various items. With over 200 million registered users and a vast array of electronics and fashion products, Flipkart is one of India’s leading e-commerce giants. According to recent statistics, it hosts over 150 million products across multiple categories, making it a treasure trove of data to explore. Web scraping offers a way to gather and analyze this information, and in this guide we will scrape Flipkart.

Scraping Flipkart might seem tricky, but with Python, a friendly and popular programming language, and the Crawlbase Crawling API, it becomes much simpler. From understanding the structure of Flipkart’s pages to extracting and paginating through product listings, this guide walks you through each step. By the end, you’ll know how to scrape Flipkart and how to store and analyze the data you gather.

Table Of Contents

- Installing Python and required libraries

- Choosing the Right Development IDE

- Crawlbase Registration and API Token

- Crafting the URL for Targeted Scraping

- Fetching HTML of Web Page

- Inspecting HTML to Get CSS Selectors

- Extracting Product Details

- Handling Pagination for Multiple Product Pages

- Storing Scraped Data in a CSV File

- Storing Scraped Data in an SQLite Database

- Visualizing Data using Python Libraries (Matplotlib, Seaborn)

- Drawing Insights from Scraped Data

Why Scrape Flipkart?

Here’s why you should scrape Flipkart:

A. Uncover Hidden Insights:

Thousands of products are listed, sold, and reviewed daily on Flipkart. By scraping this data, you can uncover trends, understand customer preferences, and identify emerging market demands. For businesses, this means making informed decisions about product launches, pricing strategies, and marketing campaigns.

B. Competitive Analysis:

Scraping allows you to keep a pulse on the competition. You can position your offerings more competitively by monitoring prices, product availability, and customer reviews. Furthermore, understanding your competitors’ strengths and weaknesses can pave the way for strategic advantages in the market.

C. Personalized Shopping Experiences:

For consumers, scraping can lead to a more personalized shopping journey. By analyzing product reviews, ratings, and descriptions, e-commerce platforms can offer tailored product recommendations, ensuring that customers find what they’re looking for faster and with greater satisfaction.

D. Enhanced Product Research:

For sellers and manufacturers, scraping Flipkart provides a wealth of product data. From understanding which features customers value the most to gauging market demand for specific categories, this data can be instrumental in guiding product development and innovation.

E. Stay Updated with Market Dynamics:

The e-commerce landscape is dynamic, with products being regularly added, sold out, or discounted. Scraping Flipkart ensures you’re constantly updated with these changes, allowing for timely responses and proactive strategies.

Key Data Points to Extract from Flipkart

When scraping Flipkart, specific data points stand out as crucial:

- Product Name: The name or title of the product.

- Price: The current price of the product listing.

- Rating: The average rating given by users for the product.

- Review Count: The number of reviews or ratings users have given the product.

- Product Category: The category or section to which the product belongs.

By understanding these elements and their arrangement on the search pages, you’ll be better equipped to extract the data you need from Flipkart.
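
As an illustration, the data points above can be modeled as a small record type. This is only a sketch: the class name `ProductListing`, the helpers `to_int` and `to_float`, and the sample values are hypothetical, and the helpers assume each raw string contains a single number, such as a price shown as "₹1,299".

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProductListing:
    """One scraped product, holding the data points listed above."""
    name: str
    price: Optional[int]         # price in rupees, e.g. 1299
    rating: Optional[float]      # average user rating, e.g. 4.3
    review_count: Optional[int]  # number of reviews or ratings
    category: Optional[str] = None


def to_int(text):
    """Keep only the digits of strings such as '₹1,299' or '(1,234)'."""
    digits = re.sub(r"\D", "", text or "")
    return int(digits) if digits else None


def to_float(text):
    """Return the first decimal number found in the text, or None."""
    match = re.search(r"\d+(?:\.\d+)?", text or "")
    return float(match.group()) if match else None


# Hypothetical sample values, for illustration only.
listing = ProductListing(
    name="Example Wireless Headphone",
    price=to_int("₹1,299"),
    rating=to_float("4.3"),
    review_count=to_int("(1,234)"),
    category="Headphones",
)
print(listing)
```

Storing numbers as numbers rather than display strings makes the later sorting, averaging, and plotting steps simpler.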

Setting up Your Environment

Before scraping Flipkart product listings, we must ensure our setup is ready. We must install the tools and libraries needed, pick the right IDE, and get the critical API credentials.

Installing Python and Required Libraries

The first step in setting up your environment is to ensure you have Python installed on your system. If you still need to install Python, download it from the official website at python.org.

Once you have Python installed, the next step is to make sure you have the required libraries for this project. In our case, we’ll need five libraries:

- Crawlbase Python Library: This library will be used to make HTTP requests to the Flipkart search page using the Crawlbase Crawling API. To install it, you can use pip with the following command:

pip install crawlbase

- Beautiful Soup 4: Beautiful Soup is a Python library that makes it easy to scrape and parse HTML content from web pages. It’s a critical tool for extracting data from the web. You can install it using pip:

pip install beautifulsoup4

- Pandas: Pandas is a powerful data manipulation and analysis library in Python. We’ll use it to store and manage the scraped data. Install pandas with pip:

pip install pandas

- Matplotlib: A fundamental plotting library in Python, it’s essential for visualizing data and creating various types of plots and charts. You can install it using pip:

pip install matplotlib

- Seaborn: Built on top of Matplotlib, Seaborn provides a high-level interface for creating attractive and informative statistical graphics. Enhance your visualizations further by installing it via pip:

pip install seaborn

Choosing the Right Development IDE

An Integrated Development Environment (IDE) provides a coding environment with features like code highlighting, auto-completion, and debugging tools. While you can write Python code in a simple text editor, an IDE can significantly improve your development experience. You can consider PyCharm, Visual Studio Code (VS Code), Jupyter Notebook and Spyder.

Crawlbase Registration and API Token

To use the Crawlbase Crawling API for making HTTP requests to the Flipkart search page, you must sign up for an account on the Crawlbase website. Follow these steps:

- Visit the Crawlbase Website: Open your web browser and navigate to the Crawlbase website Signup page to begin the registration process.

- Provide Your Details: You’ll be asked to provide your email address and create a password for your Crawlbase account. Fill in the required information.

- Verification: After submitting your details, you may need to verify your email address. Check your inbox for a verification email from Crawlbase and follow the instructions provided.

- Login: Once your account is verified, return to the Crawlbase website and log in using your newly created credentials.

- Access Your API Token: You’ll need an API token to use the Crawlbase Crawling API. You can find your tokens in your Crawlbase account once you are logged in.

Note: Crawlbase offers two types of tokens: a Normal token for static websites and a JavaScript token for dynamic or JavaScript-driven websites. Since we’re scraping Flipkart search pages, we’ll use the Normal token. Crawlbase offers an initial allowance of 1,000 free requests for the Crawling API, which is enough to follow along with this project.

With Python and the required libraries installed, the IDE of your choice set up, and your Crawlbase token in hand, you’re well-prepared to start scraping Flipkart products.

Scraping Flipkart Products

Let’s get into the details of how you can scrape Flipkart. Each step will be simplified with Python code examples provided to enhance clarity.

Crafting the URL for Targeted Scraping

Think of the URL as the address of the webpage you want to visit. To get the right products, you need the right address. Here’s a simple example of how you might create a Flipkart URL for searching headphones:

search_query = "headphone"

url = f"https://www.flipkart.com/search?q={search_query}"
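
If the search term contains spaces or special characters, it is safer to URL-encode it rather than paste it into the string directly. The following sketch uses only the standard library; the helper name `build_search_url` is our own choice for illustration.

```python
from urllib.parse import urlencode

BASE_SEARCH_URL = "https://www.flipkart.com/search"


def build_search_url(search_query):
    """Return a Flipkart search URL with the query safely URL-encoded."""
    return f"{BASE_SEARCH_URL}?{urlencode({'q': search_query})}"


print(build_search_url("headphone"))
# https://www.flipkart.com/search?q=headphone
print(build_search_url("wireless headphones"))
# https://www.flipkart.com/search?q=wireless+headphones
```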

Fetching HTML of Web Page

To extract data from a webpage, the first step is to fetch its HTML content. Using Python, particularly with the crawlbase library’s Crawling API, this process becomes straightforward. Here’s a simple example that demonstrates how to use the crawlbase library to fetch the HTML content of a webpage:

from crawlbase import CrawlingAPI
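
A minimal sketch of the fetch step might look like the following. It assumes that `CrawlingAPI.get(url)` returns a dictionary with a `status_code` and a `body`, as in the Crawlbase library’s README examples; the helper name `fetch_html` and the placeholder token are ours. The helper accepts the API object as a parameter so it can be exercised without network access.

```python
def fetch_html(api, url):
    """Return the page HTML as text, or None if the request failed.

    `api` is expected to behave like crawlbase.CrawlingAPI: api.get(url)
    returns a dict with 'status_code' and 'body' keys (an assumption
    based on the library's README examples).
    """
    response = api.get(url)
    if response.get("status_code") != 200:
        return None
    body = response["body"]
    # The body may arrive as bytes; decode it to text if so.
    return body.decode("utf-8") if isinstance(body, bytes) else body


# Real usage (requires `pip install crawlbase` and your own token):
#   from crawlbase import CrawlingAPI
#   api = CrawlingAPI({"token": "YOUR_CRAWLBASE_TOKEN"})
#   html = fetch_html(api, "https://www.flipkart.com/search?q=headphone")
#   print(html)
```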

To initiate the Flipkart scraping process, follow these straightforward steps:

- Create the Script: Begin by creating a new Python script file. Name it flipkart_scraping.py.

- Paste the Code: Copy the above code and paste it into your newly created flipkart_scraping.py file. Make sure you add your token.

- Execution: Open your command prompt or terminal.

- Run the Script: Navigate to the directory containing flipkart_scraping.py and execute the script using the following command:

python flipkart_scraping.py

Upon execution, the HTML content of the page will be displayed in your terminal.

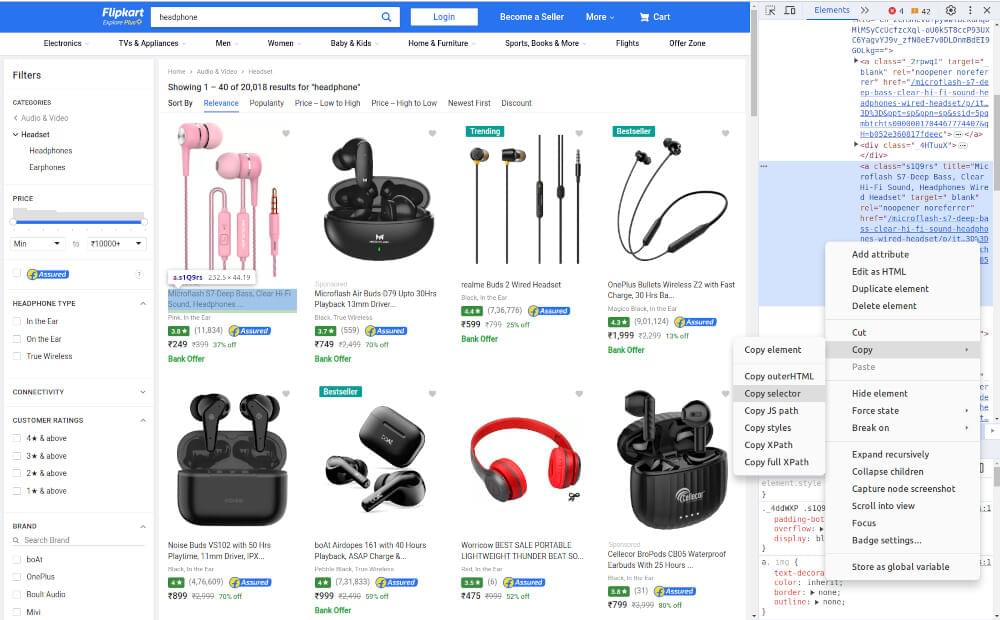

Inspecting HTML to Get CSS Selectors

With the HTML content obtained from the search page, the next step is to analyze its structure and pinpoint the location of the product data. This is where browser developer tools come to our rescue. Let’s outline how you can inspect the HTML structure and find the CSS selectors you need:

- Open the Web Page: Navigate to the Flipkart website and open a search results page that interests you.

- Right-Click and Inspect: Right-click on the page and choose “Inspect” or “Inspect Element” from the menu that appears. This will open developer tools in your browser.

- Locate the HTML Code: Look for the HTML source code in the developer tools. Move your mouse over different parts of the code, and the corresponding area on the webpage will light up.

- Identify CSS Selector: To get CSS selectors for a specific element, right-click on it in the developer tools and choose “Copy” > “Copy selector.” This will copy the CSS selector on your clipboard, which you can use for web scraping.

Once you have these selectors, you can proceed to structure your Flipkart scraper to extract the required information effectively.

Extracting Product Details

Once the initial HTML data is obtained, the next task is to derive valuable insights from it. This is where the utility of BeautifulSoup shines through. As a Python module, BeautifulSoup is adept at analyzing HTML and XML files, equipping users with methods to traverse the document structure and locate specific content.

With the assistance of BeautifulSoup, users can identify particular HTML components and retrieve pertinent details. In the following script, BeautifulSoup is employed to extract essential details like the product title, rating, review count, price, and URL link (Product Page URL) for every product listed on the specified Flipkart search page.

from crawlbase import CrawlingAPI

In the example above:

- We utilize the crawlbase library for fetching the webpage HTML content.

- After fetching the webpage content, we parse it using the BeautifulSoup library.

- We then identify the specific sections or elements that contain product listings.

- For each product listing, we extract relevant details such as the title, rating, review count, price, and URL link.
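
The parsing and extraction steps can be illustrated on a small hand-written HTML snippet. Note that the class names here (`product-card`, `title`, `rating`, and so on) are hypothetical placeholders, not Flipkart’s real selectors; Flipkart’s actual class names differ and change over time, so substitute the selectors you copied from the developer tools.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Hand-written sample markup with made-up class names, for illustration only.
SAMPLE_HTML = """
<div class="product-card">
  <a class="product-link" href="/item-a/p/itm1"><span class="title">Headphone A</span></a>
  <span class="rating">4.3</span>
  <span class="review-count">(1,234)</span>
  <span class="price">₹1,299</span>
</div>
<div class="product-card">
  <a class="product-link" href="/item-b/p/itm2"><span class="title">Headphone B</span></a>
  <span class="price">₹799</span>
</div>
"""


def text_or_none(card, selector):
    """Return the stripped text of the first match, or None if absent."""
    node = card.select_one(selector)
    return node.get_text(strip=True) if node is not None else None


def extract_products(html, base_url="https://www.flipkart.com"):
    """Parse the HTML and return one dict per product card."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select("div.product-card"):
        link = card.select_one("a.product-link")
        href = link.get("href") if link is not None else None
        products.append({
            "title": text_or_none(card, ".title"),
            "rating": text_or_none(card, ".rating"),
            "review_count": text_or_none(card, ".review-count"),
            "price": text_or_none(card, ".price"),
            # Product links are usually relative, so join them to the site root.
            "url": urljoin(base_url, href) if href else None,
        })
    return products


products = extract_products(SAMPLE_HTML)
for product in products:
    print(product)
```

Returning None for a missing element, as the second card shows for its rating, keeps one incomplete listing from stopping the whole run.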

Output:

[

Handling Pagination for Multiple Product Pages

Flipkart’s search results are often divided across multiple pages, each containing a set of product listings. To gather a comprehensive dataset, we need to handle pagination, which means iterating through the results pages and making an additional request for each one. Let us update our previous script to handle pagination.

from crawlbase import CrawlingAPI
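
A minimal sketch of the pagination loop is shown below. It assumes that Flipkart’s search URL accepts a `page` query parameter, and it takes the per-page scraping step as a function argument (here called `fetch_page`, a hypothetical name) so the loop itself stays independent of how pages are fetched and parsed.

```python
from urllib.parse import urlencode


def page_url(query, page):
    """Build the search URL for a given results page (assumes a `page` parameter)."""
    return "https://www.flipkart.com/search?" + urlencode({"q": query, "page": page})


def scrape_all_pages(query, fetch_page, max_pages=5):
    """Collect products page by page.

    fetch_page(url) should return a list of product dicts for that page.
    The loop stops at the first empty page or after max_pages pages.
    """
    all_product_details = []
    for page in range(1, max_pages + 1):
        products = fetch_page(page_url(query, page))
        if not products:
            break
        all_product_details.extend(products)
    return all_product_details
```

Capping the loop with `max_pages` is a deliberate choice: it bounds the number of API requests even if the site keeps returning results.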

Storing the Scraped Data

After successfully scraping data from Flipkart’s search pages, the next crucial step is storing this valuable information for future analysis and reference. In this section, we will explore two common methods for data storage: saving scraped data in a CSV file and storing it in an SQLite database. These methods allow you to organize and manage your scraped data efficiently.

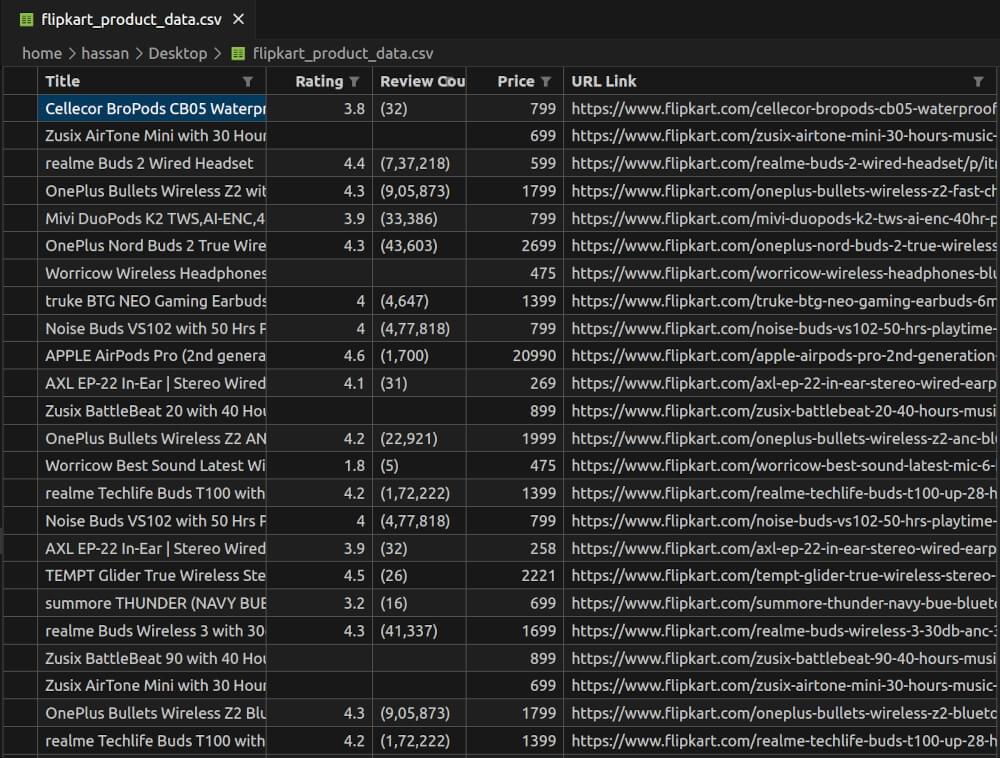

Storing Scraped Data in a CSV File

CSV is a widely used format for storing tabular data. It’s a simple and human-readable way to store structured data, making it an excellent choice for saving your scraped Flipkart product listing data.

We’ll extend our previous web scraping script to include a step for saving the scraped data into a CSV file using the popular Python library, pandas. Here’s an updated version of the script:

from crawlbase import CrawlingAPI

In this updated script, we’ve introduced pandas, a powerful data manipulation and analysis library. After scraping and accumulating the product listing details in the all_product_details list, we create a pandas DataFrame from this data. Then, we use the to_csv method to save the DataFrame to a CSV file named “flipkart_product_data.csv” in the current directory. Setting index=False ensures that we don’t save the DataFrame’s index as a separate column in the CSV file.
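
The saving step described above can be sketched in isolation as follows. The helper name `save_to_csv` is our own for illustration, and the dictionary keys depend on what your scraper actually collects.

```python
import pandas as pd


def save_to_csv(all_product_details, path="flipkart_product_data.csv"):
    """Write the scraped product dicts to a CSV file and return the DataFrame."""
    df = pd.DataFrame(all_product_details)
    # index=False keeps the DataFrame's row index out of the CSV file.
    df.to_csv(path, index=False)
    return df
```

For example, `save_to_csv([{"title": "Headphone A", "price": 1299}])` would write a two-line file: a header row followed by one product row.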

flipkart_product_data.csv Preview:

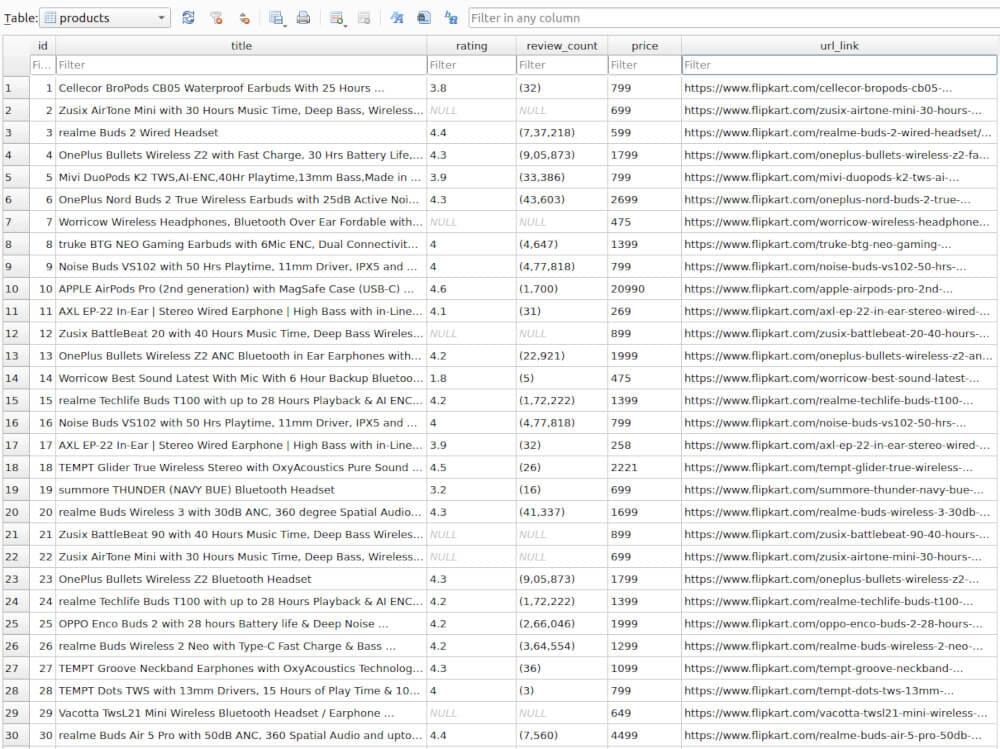

Storing Scraped Data in an SQLite Database

If you prefer a more structured and query-friendly approach to data storage, SQLite is a lightweight, serverless database engine that can be a great choice. You can create a database table to store your scraped data, allowing for efficient data retrieval and manipulation. Here’s how you can modify the script to store data in an SQLite database:

from crawlbase import CrawlingAPI

In this script, we’ve added functions for creating the SQLite database and table (create_database) and saving the scraped data to the database (save_to_database). The create_database function checks if the database and table exist and creates them if they don’t. The save_to_database function inserts the scraped data into the ‘products’ table in an SQLite database named ‘flipkart_products.db’.
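
A minimal sketch of these two functions, using Python’s built-in sqlite3 module, could look like this. The column names and types are assumptions chosen to match the fields scraped earlier, and in this sketch create_database returns the open connection so that save_to_database can reuse it.

```python
import sqlite3


def create_database(db_path="flipkart_products.db"):
    """Open (or create) the database and make sure the products table exists."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS products (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            title TEXT,
            rating REAL,
            review_count INTEGER,
            price INTEGER,
            url TEXT
        )
        """
    )
    conn.commit()
    return conn


def save_to_database(conn, products):
    """Insert a list of product dicts into the products table."""
    rows = [
        (p.get("title"), p.get("rating"), p.get("review_count"), p.get("price"), p.get("url"))
        for p in products
    ]
    # Parameter placeholders (?) let sqlite3 handle quoting safely.
    conn.executemany(
        "INSERT INTO products (title, rating, review_count, price, url) VALUES (?, ?, ?, ?, ?)",
        rows,
    )
    conn.commit()
```

A typical call sequence would be `conn = create_database()`, then `save_to_database(conn, all_product_details)`, and finally `conn.close()`.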

products Table Preview:

How to Analyze Flipkart Trends

Extracting data is merely the first step. The true value emerges when this data is transformed into actionable insights. This section delves into how we can harness the scraped data from Flipkart to visualize trends and draw meaningful conclusions.

How to Visualize Data using Python Libraries (Matplotlib, Seaborn)

Python offers an extensive suite of visualization tools, with Matplotlib and Seaborn standing out as prominent choices. These libraries empower analysts to craft compelling visuals that succinctly represent intricate data patterns.

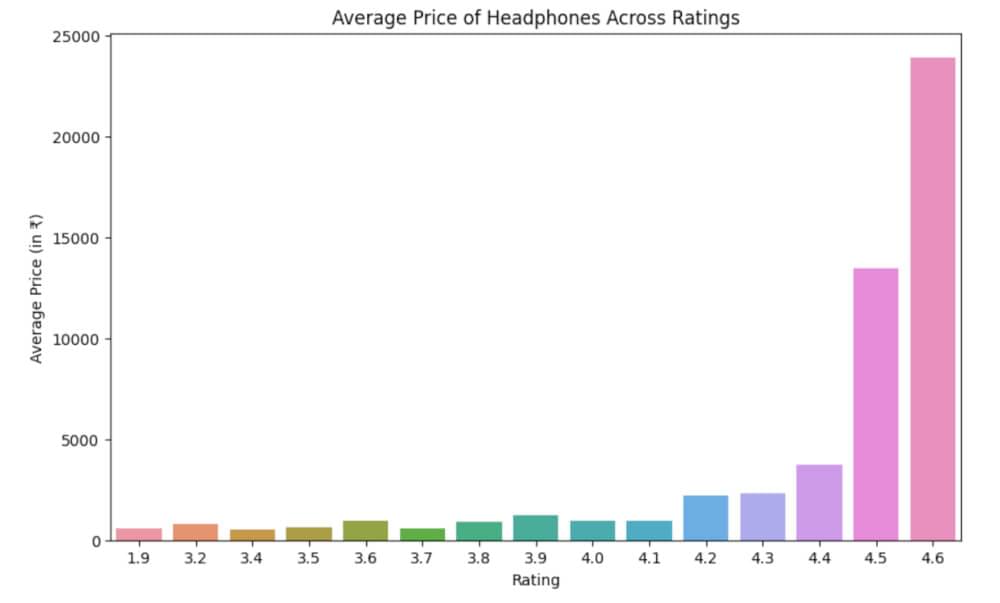

Let’s consider an illustrative example where we focus on the ‘Price’ and ‘Rating’ attributes from our scraped dataset. By plotting the average price of headphones against their respective ratings, we can discern potential correlations and market preferences. We can update our previous script as below.

from crawlbase import CrawlingAPI
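
The plotting step can be sketched on its own with a few made-up rows. This sketch assumes the scraped data has numeric `rating` and `price` columns and uses plain Matplotlib for the bar chart; the sample values are hypothetical and carry no meaning about real Flipkart prices.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so no display is required

import matplotlib.pyplot as plt
import pandas as pd


def average_price_by_rating(df):
    """Return the mean price for each distinct rating value."""
    return df.groupby("rating")["price"].mean()


def plot_average_price(avg_price):
    """Draw a bar chart of average price per rating and return the figure."""
    fig, ax = plt.subplots(figsize=(8, 5))
    ax.bar([str(rating) for rating in avg_price.index], avg_price.values)
    ax.set_xlabel("Rating")
    ax.set_ylabel("Average Price")
    ax.set_title("Average Price of Headphones by Rating")
    return fig


# Made-up sample rows, for illustration only.
df = pd.DataFrame({"rating": [4.0, 4.0, 4.5], "price": [1000, 2000, 3000]})
avg_price = average_price_by_rating(df)
fig = plot_average_price(avg_price)
```

Calling `fig.savefig("average_price_by_rating.png")` afterwards would write the chart to disk.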

Output Graph:

Drawing Insights from Scraped Data

From the visualization above, we observe that headphones with higher ratings tend to have a higher average price, suggesting that customers may be willing to pay a premium for better-rated products. However, it’s essential to consider other factors, such as brand reputation, features, and customer reviews, before drawing conclusive insights.

In addition to the above insights, further analysis could include:

- Correlation between ‘Review Count’ and ‘Rating’ to understand if highly-rated products also have more reviews.

- A price distribution analysis to identify the most common price range for headphones on Flipkart.
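
Both follow-up analyses can be sketched with pandas. The column names, the sample rows, and the price bin edges below are hypothetical and chosen only to show the calls involved.

```python
import pandas as pd

# Made-up sample rows, for illustration only.
df = pd.DataFrame({
    "rating": [3.8, 4.0, 4.2, 4.4],
    "review_count": [100, 200, 300, 400],
    "price": [500, 1500, 1800, 3000],
})

# Pearson correlation between review count and rating (ranges from -1 to 1).
correlation = df["review_count"].corr(df["rating"])

# Count how many products fall into each price range.
price_bins = [0, 1000, 2000, 5000]
price_distribution = pd.cut(df["price"], bins=price_bins).value_counts().sort_index()

print(f"Correlation: {correlation:.2f}")
print(price_distribution)
```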

By combining data scraping with visualization techniques, businesses and consumers can make more informed decisions and gain a deeper understanding of market dynamics.

Final Words

This guide has provided the necessary insights to scrape Flipkart products utilizing Python and the Crawlbase Crawling API. Should you wish to further your expertise in extracting product information from additional e-commerce platforms such as Amazon, Walmart, eBay, and AliExpress, we encourage you to consult the supplementary guides provided.

Here are some other web scraping Python guides you might want to look at:

📜 How to scrape Images from DeviantArt

📜 How to Build a Reddit Scraper

📜 Instagram Proxies to Scrape Instagram

We understand that web scraping can present challenges, and it’s important that you feel supported. Therefore, if you require further guidance or encounter any obstacles, please do not hesitate to reach out. Our dedicated team is committed to assisting you throughout your web scraping endeavors.

Frequently Asked Questions

Q. Is it legal to scrape data from Flipkart?

Web scraping Flipkart exists in a legal gray area. While the act of scraping itself might not be explicitly illegal, the use and dissemination of the scraped data can raise legal concerns. It’s imperative to meticulously review Flipkart’s terms of service and the directives in their robots.txt file. These documents often provide guidelines about permissible activities and data usage restrictions. Furthermore, scraping should not violate any copyright laws or infringe upon Flipkart’s intellectual property rights. Before engaging in any scraping activities, it’s wise to seek legal counsel to ensure compliance with local regulations and to mitigate potential legal risks.
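
As one practical aid, Python’s standard library can evaluate robots.txt rules for a given URL. In the sketch below, the rules are a made-up sample, not Flipkart’s actual robots.txt, and the user agent name `my-scraper` is hypothetical; in practice you would load the site’s real file and review it alongside the terms of service.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules for illustration; this is NOT Flipkart's real robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout
"""


def allowed_by_robots(robots_txt, user_agent, url):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed_by_robots(SAMPLE_ROBOTS_TXT, "my-scraper", "https://www.flipkart.com/search?q=headphone"))
print(allowed_by_robots(SAMPLE_ROBOTS_TXT, "my-scraper", "https://www.flipkart.com/checkout/cart"))
```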

Q. Why is scraping Flipkart search pages beneficial?

Scraping Flipkart’s search pages provides businesses with valuable insights into the e-commerce landscape. Here’s why it’s advantageous:

- Product Trends: Monitoring Flipkart’s search data reveals emerging product trends, helping businesses align their offerings with market demands.

- Pricing Intelligence: By analyzing product prices on Flipkart, businesses can refine their pricing strategies, ensuring competitiveness without compromising profitability.

- Consumer Insights: Search patterns on Flipkart offer a glimpse into consumer behavior, guiding businesses in product development and marketing efforts.

- Competitive Edge: Access to real-time data from Flipkart gives businesses a competitive advantage, enabling swift, informed decisions in a fast-paced market.

In summary, scraping Flipkart search pages equips businesses with actionable insights, fostering informed strategies and enhancing market responsiveness.

Q. How often should I update my Flipkart scraping script?

Flipkart, like many e-commerce platforms, undergoes frequent updates to enhance user experience, introduce new features, or modify its website structure. These changes can inadvertently disrupt your scraping process if your script is not regularly maintained. To maintain the integrity and efficiency of your scraping efforts, it’s recommended to monitor Flipkart’s website for any changes and adjust your script accordingly. Periodic reviews, perhaps on a monthly or quarterly basis, coupled with proactive script adjustments, can ensure that your data extraction remains accurate and uninterrupted.
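
One lightweight way to notice such changes is to check, after each run, whether the fields you rely on are still being filled in. The sketch below is an illustration under our own assumptions: the function name, the required fields, and the 80% threshold are hypothetical choices, not a standard.

```python
def selectors_look_healthy(products, required_fields=("title", "price"), min_fill_ratio=0.8):
    """Return False when results are empty or a required field is mostly missing.

    A sudden drop in filled fields is a common sign that the page layout,
    and therefore the CSS selectors, have changed.
    """
    if not products:
        return False
    for field in required_fields:
        filled = sum(1 for product in products if product.get(field))
        if filled / len(products) < min_fill_ratio:
            return False
    return True
```

Running this check on every scrape and alerting when it returns False can catch layout changes sooner than a fixed monthly or quarterly review.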

Q. How can I handle potential IP bans or restrictions while scraping Flipkart?

Facing IP bans or restrictions is a common hurdle for web scrapers, especially when dealing with platforms as stringent as Flipkart. To navigate these challenges and ensure uninterrupted scraping:

- Implement Delays: Introduce random or systematic delays between your scraping requests to mimic human behavior and reduce the load on the server.

- Use Proxies: Use rotating proxies to mask your IP address and distribute requests, making it harder for websites to track and block your scraping activity.

- Rate Limiting Tools: Consider integrating middleware or tools designed to manage and respect rate limits, adjusting your scraping speed dynamically based on server responses.
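
The delay idea above can be sketched as a small retry helper with exponential backoff and random jitter. The function name and default values are our own assumptions; `fetch` stands for any callable that returns the page content, or None when the request fails.

```python
import random
import time


def fetch_with_backoff(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it returns a result or max_attempts is reached.

    After each failed attempt the wait doubles (1s, 2s, 4s, ...), plus a
    random jitter so that requests do not arrive at a fixed rhythm.
    """
    for attempt in range(max_attempts):
        result = fetch(url)
        if result is not None:
            return result
        if attempt < max_attempts - 1:
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
    return None
```

The `sleep` parameter exists only so the waiting behavior can be replaced in tests; in normal use the default `time.sleep` applies.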

Lastly, for those seeking a more structured and efficient approach, specialized services like Crawlbase’s Crawling API for Flipkart can be invaluable. These platforms provide pre-optimized solutions designed to handle potential restrictions, offering a seamless and compliant scraping experience.