LinkedIn scraping unlocks valuable data for recruitment, sales, and market research. This guide shows you how to extract LinkedIn profiles, company pages, and feeds using Python and Crawlbase’s Crawling API.

Table of Contents

- Why Scrape LinkedIn?

- What Data Can We Scrape from LinkedIn?

- Scraping Challenges & Solutions

- Getting Started with Crawlbase

- Scraping LinkedIn Profiles

- Retrieving Data from Crawlbase Cloud Storage

- Scraping Company Pages

- Retrieving Data from Crawlbase Cloud Storage

- Scraping LinkedIn Feeds

- Retrieving Data from Crawlbase Cloud Storage

- Supercharge Your Career Goals with Crawlbase

- Frequently Asked Questions (FAQs)

Why Scrape LinkedIn?

LinkedIn data extraction offers powerful advantages:

- Talent Acquisition: Automate candidate sourcing and find qualified professionals faster.

- Sales & Lead Generation: Scrape profiles to gather leads, track prospects for cold outreach, and build targeted outreach strategies.

- Market Research: Monitor competitors, industry trends, and market benchmarks.

- Job Market Analysis: Track hiring patterns, salary trends, and in-demand skills.

- Academic Research: Gather datasets on professional networking and career trajectories.

What Data Can We Scrape from LinkedIn?

LinkedIn Profiles:

- Personal Information: Names, job titles, current and past positions, education, skills, endorsements, and recommendations.

- Contact Details: Emails, phone numbers (if publicly available), and social media profiles.

- Engagement: Posts, articles, and other content shared or liked by the user.

Company Pages:

- Company Details: Name, industry, size, location, website, and company description.

- Job Postings: Current openings, job descriptions, requirements, and application links.

- Employee Information: List of employees, their roles, and connections within the company.

- Updates and News: Company posts, articles, and updates shared on their page.

LinkedIn Feeds:

- Activity Feed: Latest updates, posts, and articles from users and companies you are interested in.

- Engagement Metrics: Likes, comments, shares, and the overall engagement of posts.

- Content Analysis: Types of content being shared, trending topics, and user engagement patterns.

Scraping Challenges & Solutions

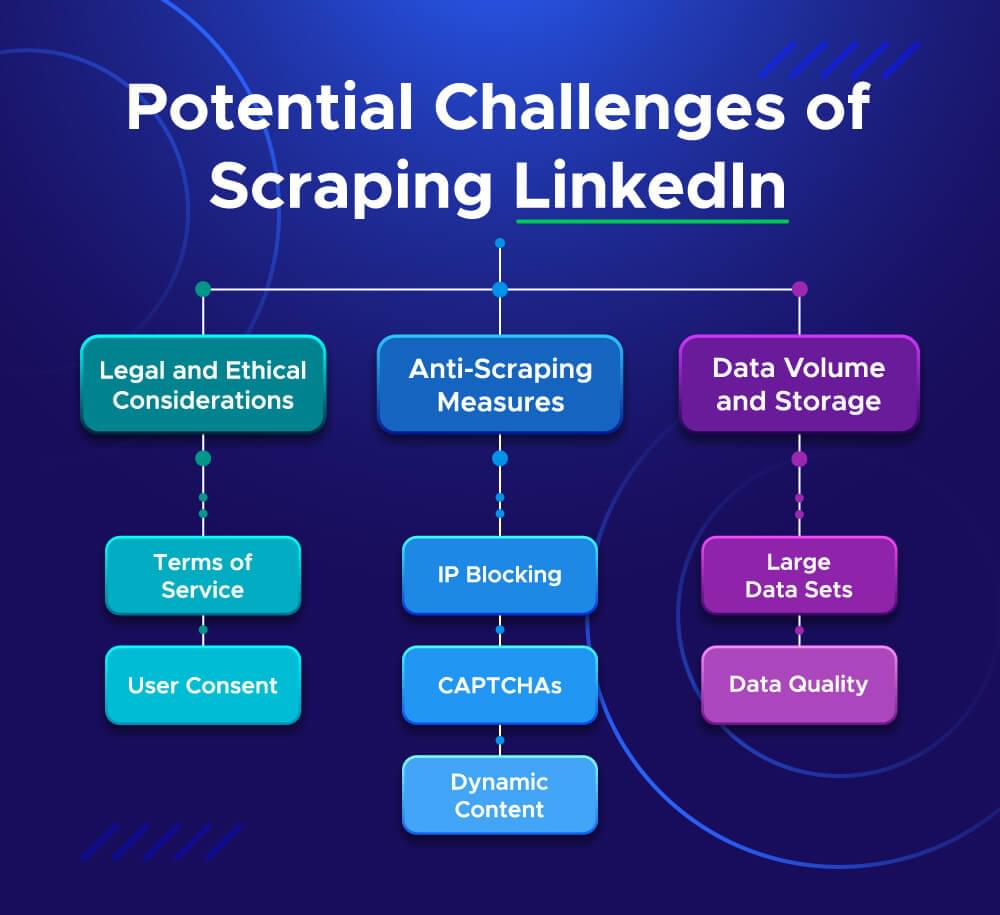

Scraping LinkedIn yields valuable data, but it also poses several challenges.

Anti-Scraping Measures

- Challenge: IP blocking and CAPTCHAs

- Solution: Crawlbase provides rotating proxies and CAPTCHA handling

Dynamic Content

- Challenge: JavaScript-rendered pages

- Solution: Use headless browsers or Crawlbase’s rendering engine

Legal Compliance

- Challenge: LinkedIn’s Terms of Service restrictions

- Solution: Focus on public data only and respect privacy laws

Data Volume

- Challenge: Processing large datasets

- Solution: Asynchronous requests and structured storage
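The "structured storage" half of that solution can be as simple as a JSON Lines file (one JSON document per line), which can be appended to incrementally during a large run and read back as a stream. Below is a minimal sketch using only the standard library, assuming each scraped result is a JSON-serializable dict; `append_results` and `read_results` are hypothetical helpers, not part of Crawlbase.

```python
import json
from pathlib import Path
from typing import Iterable, Iterator


def append_results(path: Path, results: Iterable[dict]) -> int:
    """Append each result to a JSON Lines file; return how many were written."""
    count = 0
    with path.open("a", encoding="utf-8") as fh:
        for result in results:
            # One JSON document per line; ensure_ascii=False keeps non-ASCII names readable
            fh.write(json.dumps(result, ensure_ascii=False) + "\n")
            count += 1
    return count


def read_results(path: Path) -> Iterator[dict]:
    """Yield results one at a time instead of loading the whole file into memory."""
    with path.open(encoding="utf-8") as fh:
        for line in fh:
            if line.strip():  # tolerate blank lines
                yield json.loads(line)
```

Appending after each batch means an interrupted run keeps everything written so far, and `read_results` can process a very large file line by line.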

Getting Started with Crawlbase

To scrape LinkedIn with Crawlbase’s Crawling API, you first need to set up your Python environment. Before getting started, review Crawlbase’s pricing for LinkedIn scraping.

1. Install Python:

Download and install Python from the official website. Ensure that you add Python to your system’s PATH during installation.

2. Create a Virtual Environment:

Open your terminal or command prompt and navigate to your project directory. Create a virtual environment by running:

```
python -m venv venv
```

Activate the virtual environment:

On Windows:

```
.\venv\Scripts\activate
```

On macOS/Linux:

```
source venv/bin/activate
```

3. Install Crawlbase Library:

With the virtual environment activated, install the Crawlbase library using pip:

```
pip install crawlbase
```

Scraping LinkedIn Profiles

Start by importing the necessary libraries and initializing the Crawlbase API with your access token. Define the URL of the LinkedIn profile you want to scrape and set the scraping options.

```python
from crawlbase import CrawlingAPI
```

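This script initializes the Crawlbase API, defines the URL of the LinkedIn profile to scrape, and selects the linkedin-profile scraper. Because the request is asynchronous, the JSON response it prints contains a request identifier (rid) rather than the profile data itself; the profile data is then retrieved from Crawlbase Cloud Storage.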
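The listing above shows only its import line. Here is a minimal sketch of the full script the paragraph describes, assuming the `crawlbase` package's `CrawlingAPI({'token': ...})` client and its `get(url, options)` method, which is assumed to return a dict with `status_code` and `body` keys (the body holding JSON such as a `rid` field in async mode). The helper names, the `CRAWLBASE_TOKEN` environment variable, and the profile URL are hypothetical placeholders. The helpers take the client as a parameter so they can be exercised without network access.

```python
import json
import os


def parse_rid(response: dict) -> str:
    """Pull the request identifier (rid) out of an async Crawling API response."""
    if response.get("status_code") != 200:
        raise RuntimeError(f"Request failed with status {response.get('status_code')}")
    body = response["body"]
    if isinstance(body, bytes):  # assumption: the client may return raw bytes
        body = body.decode("utf-8")
    payload = json.loads(body)
    if "rid" not in payload:
        raise ValueError(f"No rid in response: {payload}")
    return payload["rid"]


def submit_profile(api, profile_url: str) -> str:
    """Queue one LinkedIn profile for async scraping and return its rid."""
    options = {
        "scraper": "linkedin-profile",  # scraper named in this guide
        "async": "true",  # result is saved to Crawlbase Cloud Storage
    }
    return parse_rid(api.get(profile_url, options))


if __name__ == "__main__":
    token = os.environ.get("CRAWLBASE_TOKEN")  # hypothetical env var holding your token
    if not token:
        print("Set CRAWLBASE_TOKEN to run this example.")
    else:
        from crawlbase import CrawlingAPI  # imported here so the helpers run without it

        crawling_api = CrawlingAPI({"token": token})
        # Hypothetical placeholder URL: replace with a real public profile
        rid = submit_profile(crawling_api, "https://www.linkedin.com/in/example-profile")
        print(json.dumps({"rid": rid}, indent=2))
```

Keep the printed rid: it is the only handle you have for fetching the stored profile later.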

Example Output:

```json
{
  …
}
```

Retrieving Data from Crawlbase Cloud Storage

When using asynchronous requests, Crawlbase Cloud Storage saves the response and provides a request identifier (rid). You need to use this rid to retrieve the data.

```python
from crawlbase import StorageAPI
```

This script retrieves the stored response using the rid and prints the JSON data.
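The listing above stops after the import. Here is a minimal sketch of the retrieval step, assuming the `crawlbase` package's `StorageAPI({'token': ...})` client, whose `get()` is assumed to accept a rid and return a dict with `status_code` and `body`, and assuming the stored body is the scraper's JSON output. It also assumes a missing result comes back as a non-200 `status_code` rather than an exception. `fetch_stored_json`, `CRAWLBASE_TOKEN`, and `YOUR_RID` are hypothetical.

```python
import json
import os


def fetch_stored_json(storage_api, rid: str) -> dict:
    """Retrieve a stored response by rid and decode its JSON body."""
    response = storage_api.get(rid)
    if response.get("status_code") != 200:
        # Not stored yet, expired, or an error: let the caller decide what to do
        raise LookupError(f"No stored data for rid {rid!r} (status {response.get('status_code')})")
    body = response["body"]
    if isinstance(body, bytes):  # assumption: the client may return raw bytes
        body = body.decode("utf-8")
    return json.loads(body)


if __name__ == "__main__":
    token = os.environ.get("CRAWLBASE_TOKEN")  # hypothetical env var holding your token
    if not token:
        print("Set CRAWLBASE_TOKEN to run this example.")
    else:
        from crawlbase import StorageAPI

        storage_api = StorageAPI({"token": token})
        data = fetch_stored_json(storage_api, "YOUR_RID")  # rid from the async request
        print(json.dumps(data, indent=2))
```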

Example Output:

```json
{
  …
}
```

Scraping Company Pages

Use the linkedin-company scraper to gather organizational data:

```python
from crawlbase import CrawlingAPI
```

This script initializes the Crawlbase API, sets the URL of the LinkedIn company page you want to scrape, and specifies the linkedin-company scraper. It then makes an asynchronous request and prints the JSON response, which contains a request identifier (rid) for retrieving the company data from Crawlbase Cloud Storage.
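The listing above shows only its import line. Here is a minimal sketch of the company-page request, assuming `CrawlingAPI.get(url, options)` returns a dict with `status_code` and a JSON `body` containing a `rid` in async mode. Only the `scraper` option differs from a profile request. The `linkedin.com/company/` check is an assumption about what company page URLs look like, and `submit_company`, `CRAWLBASE_TOKEN`, and the example URL are hypothetical.

```python
import json
import os


def submit_company(api, company_url: str) -> str:
    """Queue a LinkedIn company page for async scraping and return the rid."""
    if "linkedin.com/company/" not in company_url:
        # Assumed URL shape: guards against passing a profile or feed URL here
        raise ValueError(f"Not a LinkedIn company URL: {company_url}")
    response = api.get(company_url, {"scraper": "linkedin-company", "async": "true"})
    if response.get("status_code") != 200:
        raise RuntimeError(f"Request failed with status {response.get('status_code')}")
    body = response["body"]
    payload = json.loads(body.decode("utf-8") if isinstance(body, bytes) else body)
    return payload["rid"]


if __name__ == "__main__":
    token = os.environ.get("CRAWLBASE_TOKEN")  # hypothetical env var holding your token
    if not token:
        print("Set CRAWLBASE_TOKEN to run this example.")
    else:
        from crawlbase import CrawlingAPI

        api = CrawlingAPI({"token": token})
        # Hypothetical placeholder URL: replace with a real company page
        print(submit_company(api, "https://www.linkedin.com/company/example-company"))
```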

Example Output:

```json
{
  …
}
```

Retrieving Data from Crawlbase Cloud Storage

As with profile scraping, asynchronous requests will return a rid. You can use this rid to retrieve the stored data.

```python
from crawlbase import StorageAPI
```

This script retrieves and prints the stored company data using the rid.
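Asynchronous results take some time to be stored, so a retrieval attempt made right after submitting may find nothing yet. Below is a minimal polling sketch with exponential backoff. It assumes `StorageAPI.get(rid)` returns a dict whose `status_code` is 200 once the data is stored and something else before that. The attempt count and delays are arbitrary illustrative values, and `wait_for_stored_json` is a hypothetical helper.

```python
import json
import time


def wait_for_stored_json(storage_api, rid: str, attempts: int = 5,
                         initial_delay: float = 2.0, sleep=time.sleep) -> dict:
    """Poll Cloud Storage for a rid, doubling the delay after each miss."""
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        response = storage_api.get(rid)
        if response.get("status_code") == 200:
            body = response["body"]
            return json.loads(body.decode("utf-8") if isinstance(body, bytes) else body)
        if attempt < attempts:
            sleep(delay)  # back off before the next attempt
            delay *= 2
    raise TimeoutError(f"rid {rid!r} not available after {attempts} attempts")
```

Passing `sleep` as a parameter keeps the function testable without real waiting. In normal use, leave it at its `time.sleep` default.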

Example Output:

```json
{
  …
}
```

Scraping LinkedIn Feeds

Monitor activity streams with the linkedin-feed scraper:

```python
from crawlbase import CrawlingAPI
```
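The listing above shows only its import line. Here is a minimal sketch of a feed request that also picks the scraper from the URL, assuming the same `CrawlingAPI.get(url, options)` interface and response shape (`status_code`, JSON `body` with a `rid`). The path prefixes (`/in/` for profiles, `/company/` for company pages, `/feed/` for feed updates) are assumptions about typical LinkedIn URLs, not rules documented by Crawlbase. `pick_scraper`, `submit_feed`, `CRAWLBASE_TOKEN`, and the example URL are hypothetical.

```python
import json
import os
from urllib.parse import urlparse

# Assumed mapping from LinkedIn URL path prefixes to the scrapers used in this guide
SCRAPER_BY_PREFIX = {
    "/in/": "linkedin-profile",
    "/company/": "linkedin-company",
    "/feed/": "linkedin-feed",
}


def pick_scraper(url: str) -> str:
    """Choose a scraper name from the URL path."""
    path = urlparse(url).path
    for prefix, scraper in SCRAPER_BY_PREFIX.items():
        if path.startswith(prefix):
            return scraper
    raise ValueError(f"Unrecognized LinkedIn URL: {url}")


def submit_feed(api, feed_url: str) -> str:
    """Queue a LinkedIn feed URL for async scraping and return the rid."""
    scraper = pick_scraper(feed_url)
    if scraper != "linkedin-feed":
        raise ValueError(f"Expected a feed URL, got one for {scraper}")
    response = api.get(feed_url, {"scraper": scraper, "async": "true"})
    if response.get("status_code") != 200:
        raise RuntimeError(f"Request failed with status {response.get('status_code')}")
    body = response["body"]
    return json.loads(body.decode("utf-8") if isinstance(body, bytes) else body)["rid"]


if __name__ == "__main__":
    token = os.environ.get("CRAWLBASE_TOKEN")  # hypothetical env var holding your token
    if not token:
        print("Set CRAWLBASE_TOKEN to run this example.")
    else:
        from crawlbase import CrawlingAPI

        api = CrawlingAPI({"token": token})
        # Hypothetical placeholder URL: replace with a real feed update URL
        print(submit_feed(api, "https://www.linkedin.com/feed/update/urn:li:activity:YOUR_ACTIVITY_ID"))
```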

Example Output:

```json
{
  …
}
```

Retrieving Data from Crawlbase Cloud Storage

As with profile and company page scraping, asynchronous requests will return a rid. You can use this rid to retrieve the stored data.

```python
from crawlbase import StorageAPI
```

This script retrieves and prints the stored feed data using the rid.
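When monitoring several feeds, you end up with one rid per request. Here is a minimal sketch that checks each rid once and separates finished results from pending ones. It assumes `StorageAPI.get(rid)` returns a dict with `status_code` (200 when stored) and a JSON `body`, and it uses only that single-rid call. `collect_stored` is a hypothetical helper.

```python
import json


def collect_stored(storage_api, rids):
    """Split rids into ready results and rids that are not in storage yet."""
    ready, pending = {}, []
    for rid in rids:
        response = storage_api.get(rid)
        if response.get("status_code") == 200:
            body = response["body"]
            if isinstance(body, bytes):  # assumption: the client may return raw bytes
                body = body.decode("utf-8")
            ready[rid] = json.loads(body)
        else:
            pending.append(rid)  # retry these on the next pass
    return ready, pending
```

Call it periodically, passing the `pending` list from the previous pass, until that list is empty.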

Example Output:

```json
{
  …
}
```

Supercharge Your Career Goals with Crawlbase

Scraping LinkedIn data can provide valuable insights for applications ranging from job market analysis to competitive research. Crawlbase automates the process of gathering LinkedIn data, so you can focus on analyzing and using the information. With Crawlbase’s Crawling API and Python, you can efficiently scrape LinkedIn profiles, company pages, and feeds.

If you’re looking to expand your web scraping capabilities, consider exploring the following guides on scraping other popular websites.

📜 How to Scrape Indeed Job Posts

📜 How to Scrape Emails from LinkedIn

📜 How to Scrape Airbnb

📜 How to Scrape Realtor.com

📜 How to Scrape Expedia

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions (FAQs)

Q. Is scraping LinkedIn legal?

Whether scraping LinkedIn is legal depends on your jurisdiction, the data you collect, and how you use it. Collecting only publicly available data lowers the risk, but automated scraping can still conflict with LinkedIn’s terms of service. Review LinkedIn’s policies and ensure that your scraping activities comply with legal and ethical guidelines. Always respect privacy and data protection laws, and consider using officially provided APIs when available.

Q. How to scrape LinkedIn?

To scrape LinkedIn, you can use Crawlbase’s Crawling API. First, set up your Python environment and install the Crawlbase library. Choose the appropriate scraper for your needs (profile, company, or feed) and make asynchronous requests. Then retrieve the results from Crawlbase Cloud Storage using the request identifier (rid) that each request returns.

Q. What are the challenges in scraping LinkedIn?

Scraping LinkedIn involves several challenges. LinkedIn uses strong anti-scraping measures, such as IP blocking and CAPTCHAs, that can stop your requests. Its JavaScript-rendered, dynamic content makes it difficult to extract data consistently. Additionally, you must comply with legal and ethical standards, as violating LinkedIn’s terms of service can lead to account bans or legal action. A reliable tool like Crawlbase can mitigate some of these challenges by rotating proxies, handling CAPTCHAs, and rendering dynamic pages.

Q. What’s the best scraper for recruitment?

The LinkedIn profile scraper is ideal for recruitment, allowing you to extract candidate information including work history, skills, and education. Combine it with the company scraper to research potential employers.

Q. Can I scrape multiple profiles at once?

Yes, use asynchronous requests to scrape multiple profiles efficiently. Crawlbase supports up to 20 requests per second, and the Storage API lets you retrieve all results using their unique request identifiers (rid).
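Here is a minimal sketch of submitting many profiles while staying under the 20 requests-per-second figure quoted above. It assumes the same `CrawlingAPI.get(url, options)` interface, returning a dict with `status_code` and a JSON `body` containing a `rid`. A thread pool provides concurrency, and a simple limiter spaces request starts at least 1/`rate` seconds apart. `RateLimiter` and `submit_many` are hypothetical helpers, not Crawlbase APIs, and the limit that applies to your account may differ.

```python
import json
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class RateLimiter:
    """Spaces out call starts so at most `rate` begin per second (thread-safe)."""

    def __init__(self, rate: float, clock=time.monotonic, sleep=time.sleep):
        self.interval = 1.0 / rate
        self.clock = clock
        self.sleep = sleep
        self.lock = threading.Lock()
        self.next_start = clock()

    def wait(self) -> None:
        with self.lock:  # reserve the next free slot under the lock
            now = self.clock()
            start = max(now, self.next_start)
            self.next_start = start + self.interval
        delay = start - now
        if delay > 0:
            self.sleep(delay)  # sleep outside the lock so other threads can reserve slots


def submit_many(api, urls, scraper="linkedin-profile", rate=20.0, workers=5):
    """Submit URLs asynchronously; return {url: rid, or the exception raised}."""
    limiter = RateLimiter(rate)

    def submit(url):
        limiter.wait()
        response = api.get(url, {"scraper": scraper, "async": "true"})
        if response.get("status_code") != 200:
            raise RuntimeError(f"status {response.get('status_code')}")
        body = response["body"]
        return json.loads(body.decode("utf-8") if isinstance(body, bytes) else body)["rid"]

    results = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # dict.fromkeys drops duplicate URLs while keeping their order
        futures = {url: pool.submit(submit, url) for url in dict.fromkeys(urls)}
        for url, future in futures.items():
            try:
                results[url] = future.result()
            except Exception as exc:  # record the failure and keep going
                results[url] = exc
    return results
```

The returned rids can then be passed to the Storage API. Failed URLs appear in the result with their exception, so you can resubmit just those.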