Just Eat, one of the world’s leading online food delivery platforms, connects millions of consumers to their favorite restaurants. With detailed information about restaurant listings, menus, ratings, and reviews, the platform is a goldmine for businesses, researchers, and developers looking to analyze food delivery trends or build data-driven solutions.

In 2023, Just Eat generated €5.2 billion in revenue and had over 60 million active users worldwide. It partners with over 374,000 restaurants globally, offering a huge range of cuisines. Its UK site ranks #1 in the Restaurants and Delivery category, making it a market leader.

In this blog, we’ll be scraping Just Eat using Python and the Crawlbase Crawling API. Here’s what you’ll learn:

- Extracting restaurant and menu data.

- Handling scroll-based pagination.

- Saving and structuring the scraped data.


Table of Contents

- Why Scrape Just Eat Data?

- Key Data Points to Extract from Just Eat

- Crawlbase Crawling API for Just Eat Scraping

- Installing the Crawlbase Python Library

- Installing Python and Required Libraries

- Choosing the Right IDE for Web Scraping

- Inspecting the HTML to Identify Selectors

- Writing the Just Eat Search Listings Scraper

- Handling Scroll-Based Pagination

- Storing Scraped Data in a JSON File

- Complete Python Code Example

- Inspecting the Menu Page HTML for Selectors

- Writing the Menu Scraper

- Handling Pagination for Menus

- Storing Menu Data in a JSON File

- Complete Python Code Example

Why Scrape Just Eat Data?

Just Eat is a giant in the food delivery industry and a treasure trove of data that can be used for many purposes. The image below shows some of the reasons to scrape Just Eat:

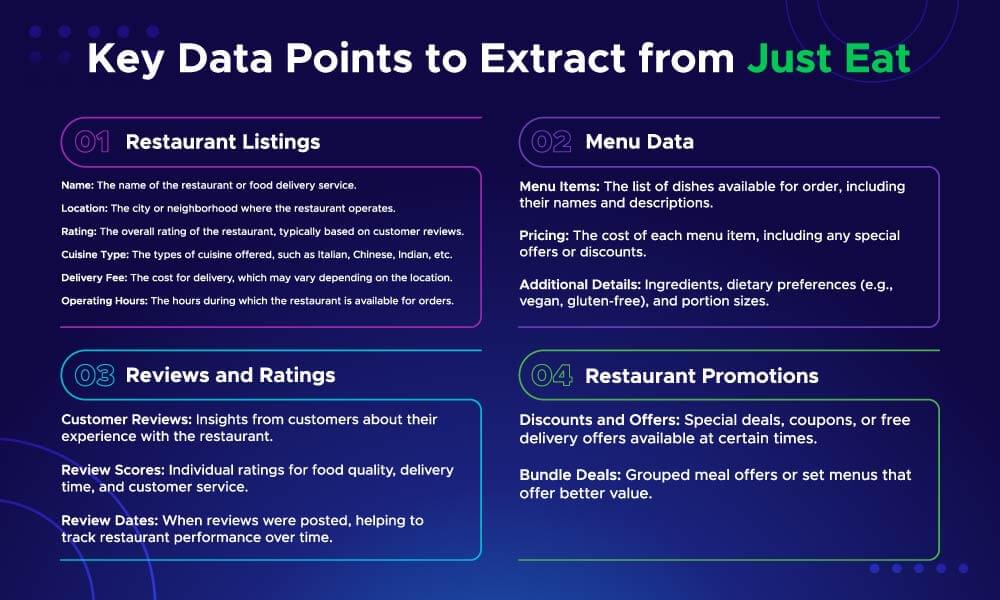

Key Data Points to Extract from Just Eat

When scraping data from Just Eat, focus on the most valuable and relevant information. The image below shows what you can extract from the platform:

Crawlbase Crawling API for Just Eat Scraping

The Crawlbase Crawling API makes scraping Just Eat easy and straightforward. Here’s why it is well suited to the job:

- Handles JavaScript-Rendered Content: Just Eat uses JavaScript to display restaurant details and menus. Crawlbase makes sure all content is fully loaded before scraping.

- IP Rotation: Crawlbase rotates IPs to avoid blocks, so you can scrape multiple pages with far less risk of hitting rate limits or CAPTCHAs.

- Customizable Requests: You can customize headers, cookies, and other parameters to fit your needs.

- Scroll-based Pagination: Just Eat uses infinite scroll to load more results. The Crawling API’s `scroll` option keeps the page scrolling for a configurable interval, so more results load before the HTML is returned.

Crawlbase Python Library

Crawlbase provides a Python library for its products, which makes using the Crawling API easy. To get started, you’ll need your Crawlbase access token, which you can get by signing up for the service.

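Crawlbase provides two types of tokens: a normal token for static sites and a JS token for JavaScript-rendered sites. Because Just Eat renders its content with JavaScript, use the JS token for this tutorial. Crawlbase offers 1,000 free requests for its Crawling API. See the documentation for more details.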

In the next section, we will go over how to set up your Python environment for Just Eat scraping.

Setting Up Your Python Environment

Before we start scraping Just Eat data, you need to set up your Python environment. A good environment means your scripts will run smoothly.

Installing Python and Required Libraries

First, make sure you have Python installed on your system. You can download the latest version of Python from the official Python website. Once installed, check the installation by running the following command in your terminal or command prompt:

```bash
python --version
```

Then, install the required libraries with pip. For this tutorial, you’ll need:

- **crawlbase**: For interacting with the Crawlbase Crawling API.

- **beautifulsoup4**: For parsing HTML and extracting data.

Run the following command to install all dependencies:

```bash
pip install crawlbase beautifulsoup4
```

Choosing the Right IDE for Web Scraping

Choosing the right IDE (Integrated Development Environment) makes coding life easier. Here are some popular ones for Python:

- VS Code: Light, powerful, and has great Python extensions.

- PyCharm: Full-featured, with advanced debugging and testing tools.

- Jupyter Notebook: Good for exploratory data analysis and step-by-step code execution.

Pick one that fits your workflow. For this blog, we recommend VS Code for simplicity.

Scraping Just Eat Restaurant Listings

In this section, we’ll scrape restaurant listings from Just Eat using Python and the Crawlbase Crawling API. We’ll go through finding HTML selectors, writing the scraper, handling scroll-based pagination, and storing the data in a JSON file.

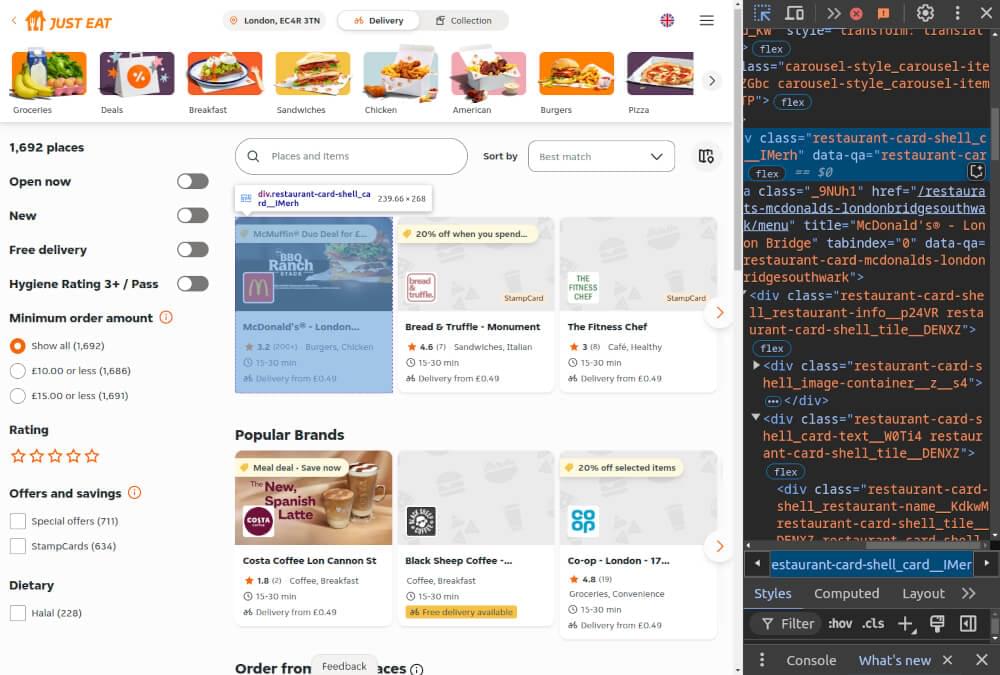

Inspecting the HTML to Identify Selectors

To scrape data, you first need to understand the structure of the Just Eat website. Here’s how you can inspect the HTML:

- Open the Website: Navigate to the Just Eat search results page for a specific area, e.g., the Just Eat listings for the London Bridge area.

- Open Developer Tools: Right-click anywhere on the page and select “Inspect” (or press `Ctrl + Shift + I` on Windows or `Cmd + Option + I` on Mac).

- Locate Key Elements:

  - Restaurant Name: Extract from the `<div>` with `data-qa="restaurant-info-name"`.

  - Cuisine Type: Extract from the `<div>` with `data-qa="restaurant-cuisine"`.

  - Rating: Extract from the `<div>` with `data-qa="restaurant-ratings"`.

  - Restaurant Link: Extract the `href` from the `<a>` tag inside the restaurant card and prefix it with `https://www.just-eat.co.uk`.

Writing the Just Eat Search Listings Scraper

Now that you’ve identified the selectors, you can write the scraper. Below is a sample Python script to scrape restaurant listings using Crawlbase and BeautifulSoup:

```python
from crawlbase import CrawlingAPI
```
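As a starting point, the selectors identified above can be turned into a parsing function. This is a minimal sketch and not the exact script. It assumes each restaurant sits in a container matched by a hypothetical `div[data-qa="restaurant-card"]` selector, so check the real wrapper element in DevTools. It also expects HTML that has already been rendered, for example by the Crawling API.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.just-eat.co.uk"
# Hypothetical card selector: confirm the real container element in DevTools.
CARD_SELECTOR = 'div[data-qa="restaurant-card"]'


def _text(node):
    """Return the stripped text of a node, or None when the selector matched nothing."""
    return node.get_text(strip=True) if node else None


def parse_restaurant_listings(html: str) -> list[dict]:
    """Extract name, cuisine, rating and link from each restaurant card."""
    soup = BeautifulSoup(html, "html.parser")
    restaurants = []
    for card in soup.select(CARD_SELECTOR):
        link = card.find("a", href=True)
        restaurants.append({
            "name": _text(card.select_one('div[data-qa="restaurant-info-name"]')),
            "cuisine": _text(card.select_one('div[data-qa="restaurant-cuisine"]')),
            "rating": _text(card.select_one('div[data-qa="restaurant-ratings"]')),
            # Card links are relative, so join them onto the site root.
            "link": urljoin(BASE_URL, link["href"]) if link else None,
        })
    return restaurants
```

Keeping parsing separate from fetching lets you test the selectors against a saved HTML file before spending API requests.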

Handling Scroll-Based Pagination

Just Eat uses scroll-based pagination to load more results as you scroll down. The Crawlbase Crawling API supports automatic scrolling, so you don’t have to manage it manually.

By setting the `scroll` and `scroll_interval` options in the API request, you let the page load more listings before the HTML is returned. You don’t need to add `page_wait`, because `scroll_interval` already gives the page time to load.

```python
options = {
```
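You can collect the scroll settings in a small helper so that every request uses the same values. In this sketch, the 20-second default is only an illustration. The 60-second cap reflects our understanding of the documented maximum for `scroll_interval`. Confirm both values against the current Crawlbase docs. The helper returns a dict that you pass as the options argument of `CrawlingAPI.get`.

```python
def build_scroll_options(scroll_interval: int = 20) -> dict:
    """Return Crawling API options that make the rendering browser scroll.

    'scroll' and 'scroll_interval' are the parameters named in the text; the
    60-second cap is an assumption about the documented maximum.
    """
    if scroll_interval < 1:
        raise ValueError("scroll_interval must be at least 1 second")
    # Clamp to the assumed maximum instead of sending a value the API may reject.
    interval = min(scroll_interval, 60)
    # Options travel as query parameters, so plain strings are the safest choice.
    return {"scroll": "true", "scroll_interval": str(interval)}
```

Usage: `api.get(search_url, build_scroll_options(30))`, where `search_url` is the results page you opened in your browser.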

Storing Scraped Data in a JSON File

Once you’ve scraped the data, store it in a JSON file for further analysis. Here’s how you can save the results:

```python
def save_to_json(data, filename='just_eat_restaurants.json'):
```
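The following is a minimal body for this function, assuming the standard-library `json` module:

```python
import json


def save_to_json(data, filename='just_eat_restaurants.json'):
    """Write the scraped records to a UTF-8 JSON file with readable indentation."""
    with open(filename, 'w', encoding='utf-8') as f:
        # ensure_ascii=False keeps characters such as "é" or "£" readable in the file.
        json.dump(data, f, indent=4, ensure_ascii=False)
    print(f"Saved {len(data)} records to {filename}")
```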

Complete Python Code Example

Below is the complete script combining all the steps:

```python
from crawlbase import CrawlingAPI
```
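Below is an end-to-end sketch that combines fetching, parsing and saving. It illustrates the approach under several assumptions and is not the original script:

- The card selector is hypothetical.
- The token and search URL come from environment variables whose names we chose ourselves (`CRAWLBASE_JS_TOKEN`, `JUST_EAT_SEARCH_URL`).
- The 20-second scroll interval is an arbitrary example.

```python
import json
import os
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.just-eat.co.uk"
# Hypothetical card selector: confirm the real container element in DevTools.
CARD_SELECTOR = 'div[data-qa="restaurant-card"]'


def _text(node):
    """Stripped text of a node, or None when the selector matched nothing."""
    return node.get_text(strip=True) if node else None


def parse_restaurant_listings(html):
    """Turn a rendered search results page into a list of restaurant dicts."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for card in soup.select(CARD_SELECTOR):
        link = card.find("a", href=True)
        results.append({
            "name": _text(card.select_one('div[data-qa="restaurant-info-name"]')),
            "cuisine": _text(card.select_one('div[data-qa="restaurant-cuisine"]')),
            "rating": _text(card.select_one('div[data-qa="restaurant-ratings"]')),
            "link": urljoin(BASE_URL, link["href"]) if link else None,
        })
    return results


def save_to_json(data, filename="just_eat_restaurants.json"):
    """Write records as pretty-printed UTF-8 JSON."""
    with open(filename, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=4, ensure_ascii=False)


def main():
    # Both environment variable names are our own convention.
    token = os.environ.get("CRAWLBASE_JS_TOKEN")
    search_url = os.environ.get("JUST_EAT_SEARCH_URL")  # a results URL copied from your browser
    if not token or not search_url:
        print("Set CRAWLBASE_JS_TOKEN and JUST_EAT_SEARCH_URL before running.")
        return

    # Imported here so the parsing helpers above can be reused without the SDK.
    from crawlbase import CrawlingAPI

    api = CrawlingAPI({"token": token})
    # Scroll options load extra listings; the 20-second interval is illustrative.
    response = api.get(search_url, {"scroll": "true", "scroll_interval": "20"})
    if response["status_code"] != 200:
        print("Request failed with status", response["status_code"])
        return

    body = response["body"]
    html = body.decode("utf-8") if isinstance(body, bytes) else body
    restaurants = parse_restaurant_listings(html)
    save_to_json(restaurants)
    print(f"Saved {len(restaurants)} restaurants")


if __name__ == "__main__":
    main()
```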

Example Output:

```json
[
```

With this script, you can scrape restaurant listings from Just Eat. In the next section, we’ll scrape restaurant menus for more details.

Scraping Restaurant Menus on Just Eat

Scraping restaurant menus on Just Eat gives you detailed information about menu offerings, prices, and meal customization options. In this section, we’ll show you how to find the HTML structure of menu pages, write the scraper, handle pagination, and store the menu data in a JSON file.

Inspecting the Menu Page HTML for Selectors

Before writing the scraper, inspect the menu page’s HTML structure to locate the key elements:

- Open the Menu Page: Click on a restaurant listing to access its menu page.

- Inspect the HTML: Right-click and select “Inspect” (or press `Ctrl + Shift + I` / `Cmd + Option + I`) to open Developer Tools.

- Locate Key Elements:

  - Category: Found in the `<section>` with `data-qa="item-category"`. The name is in the `<h2>` with `data-qa="heading"`.

  - Dish Name: Inside the `<h2>` with `data-qa="heading"`.

  - Dish Price: Inside the `<span>` with a class starting with `"formatted-currency-style"`.

  - Dish Description: Inside the `<div>` with a class starting with `"new-item-style_item-description"`.

Writing the Menu Scraper

After identifying the HTML selectors, write a Python script to scrape the menu details. Here’s a sample implementation:

```python
from crawlbase import CrawlingAPI
```
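Here is a minimal menu parser built from the selectors listed above. The text does not name the element that wraps a single dish, so `div[data-qa="item"]` is a hypothetical placeholder; replace it after inspecting the page. The class-prefix matches use regular expressions because BeautifulSoup tests them against each individual class name. That suits class names with generated suffixes.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical wrapper for one dish; the other selectors come from the text above.
ITEM_SELECTOR = 'div[data-qa="item"]'
PRICE_CLASS = re.compile(r"^formatted-currency-style")
DESCRIPTION_CLASS = re.compile(r"^new-item-style_item-description")


def _text(node):
    """Return the stripped text of a node, or None when nothing matched."""
    return node.get_text(strip=True) if node else None


def parse_menu(html: str) -> list[dict]:
    """Group dishes (name, price, description) under their menu category."""
    soup = BeautifulSoup(html, "html.parser")
    menu = []
    for section in soup.select('section[data-qa="item-category"]'):
        # Assumes the category heading is the first matching <h2> in the section.
        category = _text(section.select_one('h2[data-qa="heading"]'))
        dishes = []
        for item in section.select(ITEM_SELECTOR):
            dishes.append({
                "name": _text(item.select_one('h2[data-qa="heading"]')),
                "price": _text(item.find("span", class_=PRICE_CLASS)),
                "description": _text(item.find("div", class_=DESCRIPTION_CLASS)),
            })
        menu.append({"category": category, "dishes": dishes})
    return menu
```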

Handling Pagination for Menus

Like the search results page, the Just Eat menu page uses scroll-based pagination. The Crawlbase Crawling API can handle this when you enable its scroll options:

```python
options = {
```

Adjust the scroll interval to the length of the menu so that all menu items load before the page is captured.
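One way to apply these options is a small fetch helper that adds the scroll parameters and checks the response before parsing. The sketch assumes the client behaves like `crawlbase.CrawlingAPI`, whose `get(url, options)` returns a dict with `status_code` and a bytes `body`. The 30-second default is only an example.

```python
def fetch_rendered_html(api, url: str, scroll_interval: int = 30) -> str:
    """Fetch a page through the Crawling API with scrolling enabled.

    `api` is expected to behave like crawlbase.CrawlingAPI: get(url, options)
    returns a dict with 'status_code' and a 'body'.
    """
    options = {"scroll": "true", "scroll_interval": str(scroll_interval)}
    response = api.get(url, options)
    if response["status_code"] != 200:
        raise RuntimeError(f"Crawling API returned status {response['status_code']} for {url}")
    body = response["body"]
    # The SDK returns raw bytes; decode so BeautifulSoup receives text.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

Because the client is passed in, you can test the helper with a stub object and raise the interval only for long menus.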

Storing Menu Data in a JSON File

Once menu data is scraped, save it in a JSON file for easy access and analysis. Here’s how:

```python
def save_menu_to_json(data, filename='just_eat_menu.json'):
```
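The sketch below shows one possible body for this function. It assumes data shaped like `[{'category': ..., 'dishes': [...]}]`, which is our own choice of structure. Writing with `ensure_ascii=False` keeps currency symbols readable in the file.

```python
import json


def save_menu_to_json(data, filename='just_eat_menu.json'):
    """Save the category/dish structure as pretty-printed UTF-8 JSON."""
    with open(filename, 'w', encoding='utf-8') as f:
        # Without ensure_ascii=False, "£9.99" would be written as "\u00a39.99".
        json.dump(data, f, indent=4, ensure_ascii=False)
    dish_count = sum(len(category.get('dishes', [])) for category in data)
    print(f"Saved {len(data)} categories ({dish_count} dishes) to {filename}")
```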

Complete Python Code Example

Here’s the complete script for scraping menus:

```python
from crawlbase import CrawlingAPI
```
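Putting the pieces together, a self-contained menu scraper could look like the sketch below. It rests on a few assumptions:

- The dish wrapper selector is hypothetical.
- The environment variable names (`CRAWLBASE_JS_TOKEN`, `JUST_EAT_MENU_URL`) are our own.
- The 30-second scroll interval is only an illustration.

```python
import json
import os
import re

from bs4 import BeautifulSoup

# Hypothetical wrapper for one dish; the other selectors come from the text above.
ITEM_SELECTOR = 'div[data-qa="item"]'
PRICE_CLASS = re.compile(r"^formatted-currency-style")
DESCRIPTION_CLASS = re.compile(r"^new-item-style_item-description")


def _text(node):
    return node.get_text(strip=True) if node else None


def parse_menu(html):
    """Group dishes under their category heading."""
    soup = BeautifulSoup(html, "html.parser")
    menu = []
    for section in soup.select('section[data-qa="item-category"]'):
        dishes = [
            {
                "name": _text(item.select_one('h2[data-qa="heading"]')),
                "price": _text(item.find("span", class_=PRICE_CLASS)),
                "description": _text(item.find("div", class_=DESCRIPTION_CLASS)),
            }
            for item in section.select(ITEM_SELECTOR)
        ]
        # Assumes the category heading precedes the dish headings in the section.
        category = _text(section.select_one('h2[data-qa="heading"]'))
        menu.append({"category": category, "dishes": dishes})
    return menu


def save_menu_to_json(data, filename="just_eat_menu.json"):
    with open(filename, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=4, ensure_ascii=False)


def main():
    token = os.environ.get("CRAWLBASE_JS_TOKEN")
    menu_url = os.environ.get("JUST_EAT_MENU_URL")  # e.g. a link from the listings output
    if not token or not menu_url:
        print("Set CRAWLBASE_JS_TOKEN and JUST_EAT_MENU_URL before running.")
        return

    from crawlbase import CrawlingAPI  # imported here so parse_menu works without the SDK

    api = CrawlingAPI({"token": token})
    # Long menus may need a longer interval; 30 seconds is illustrative.
    response = api.get(menu_url, {"scroll": "true", "scroll_interval": "30"})
    if response["status_code"] != 200:
        print("Request failed with status", response["status_code"])
        return

    body = response["body"]
    html = body.decode("utf-8") if isinstance(body, bytes) else body
    menu = parse_menu(html)
    save_menu_to_json(menu)
    print(f"Saved {len(menu)} menu categories")


if __name__ == "__main__":
    main()
```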

Example Output:

```json
[
```

Final Thoughts

Scraping Just Eat data with Python and the Crawlbase Crawling API is a great way to get valuable insights for businesses, developers, and researchers. From restaurant listings to menu data, this approach makes collecting and organizing data for analysis or app development straightforward.

Ensure your scraping practices comply with ethical guidelines and the website’s terms of service. With the right approach, you can leverage web data to make informed decisions and create impactful solutions.

If you want to do more web scraping, check out our guides on scraping other key websites.

📜 How to Scrape Amazon

📜 How to Scrape Walmart

📜 How to Scrape Monster.com

📜 How to Scrape Groupon

📜 How to Scrape TechCrunch

📜 How to Scrape Hotel Data from Agoda

Contact our support if you have any questions. Happy scraping!

Frequently Asked Questions

Q. Is it legal to scrape data from Just Eat?

Web scraping legality depends on the website’s T&Cs and your intended use of the data. Make sure you review Just Eat’s T&Cs and don’t breach them. Always ensure your scraping activities are ethical and comply with local data privacy regulations.

Q. How do I handle dynamic content and pagination on Just Eat?

Just Eat uses JavaScript to render content and relies on scroll-based pagination. With the Crawlbase Crawling API and a JS token, pages are fully rendered, so you can scrape dynamic content. Crawlbase’s scroll parameters let you handle infinite scrolling efficiently.

Q. Can I extract menu information for specific restaurants on Just Eat?

Yes. With the right selectors and tools, you can get menu information for individual restaurants, including dish names, descriptions, and prices. The Crawlbase Crawling API ensures all dynamic content, including menu details, is fully rendered for scraping.

Q. How can I prevent my scraper from being blocked?

To avoid getting blocked, use techniques such as IP rotation, request delays, and realistic user-agent headers to mimic real users. The Crawlbase Crawling API handles much of this for you through IP rotation, user-agent management, and anti-bot measures.
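Request delays can be implemented with the standard library alone. This helps if you send some requests outside the Crawling API or simply want to be gentler on the site. Here is a minimal sketch of a randomized pause between requests. The 2 to 5 second default range is our own illustrative choice, not a Just Eat or Crawlbase limit.

```python
import random
import time


def polite_pause(min_seconds: float = 2.0, max_seconds: float = 5.0) -> float:
    """Sleep for a random interval so requests don't arrive at a fixed rhythm.

    Returns the delay actually used, which is handy for logging.
    """
    if min_seconds < 0 or max_seconds < min_seconds:
        raise ValueError("expected 0 <= min_seconds <= max_seconds")
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay
```

Calling `polite_pause()` between iterations of a loop over restaurant URLs spreads the requests out without a fixed, easily detected interval.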