If you’re looking for great deals on products, experiences, and coupons, Groupon is a top platform. With millions of active users and thousands of daily deals, Groupon helps people save money while enjoying activities like dining, travel, and shopping. By scraping Groupon, you can access valuable data on these deals, helping you stay updated on the latest offers or even build your own deal-tracking application.

In this blog, we’ll explore how to build a powerful Groupon Scraper in Python to find the hottest deals and coupons. Given that Groupon uses JavaScript to dynamically render its content, simple scraping methods won’t work efficiently. To handle this, we’ll leverage the Crawlbase Crawling API, which seamlessly deals with JavaScript rendering and other challenges.


Table of Contents

- Why Scrape Groupon Deals and Coupons?
- Key Data Points to Extract from Groupon
- Crawlbase Crawling API for Groupon Scraping
  - Why Use the Crawlbase Crawling API?
  - Crawlbase Python Library
- Setting Up Your Python Environment
  - Installing Python
  - Setting Up a Virtual Environment
  - Installing Required Libraries
  - Choosing the Right IDE
- Scraping Groupon Deals
  - Inspecting the HTML Structure
  - Writing the Groupon Scraper
  - Handling Pagination
  - Storing Data in a JSON File
  - Complete Code Example
- Scraping Groupon Coupons
  - Inspecting the HTML Structure
  - Writing the Groupon Coupon Scraper
  - Storing Data in a JSON File
  - Complete Code Example
- Final Thoughts
- Frequently Asked Questions

Why Scrape Groupon Deals and Coupons?

Scraping Groupon deals and coupons helps you keep track of the newest discounts and offers. Groupon posts many deals each day, making it hard to check them all by hand. A good Groupon Scraper does this job for you, gathering and studying offers in areas like food, travel, electronics, and more.

Through Groupon Scraping, you can pull out essential info such as what the deal is, how much it costs, how big the discount is, and when it ends. This benefits businesses that want to monitor what their competitors offer, developers building a deal-listing site, and anyone who just wants to find the best bargains.

We aim to scrape Groupon deals and coupons efficiently, extracting all the essential info while handling obstacles like dynamically loaded content. Because Groupon relies on JavaScript to render its content, a plain HTTP request may return HTML that lacks the rendered deals. This is where our solution, powered by the Crawlbase Crawling API, comes in handy: it gets around these common roadblocks so we can collect deals with little effort.

In the following parts, we’ll look at the key pieces of info to pull from Groupon and get our setup ready for a smooth data collection process.

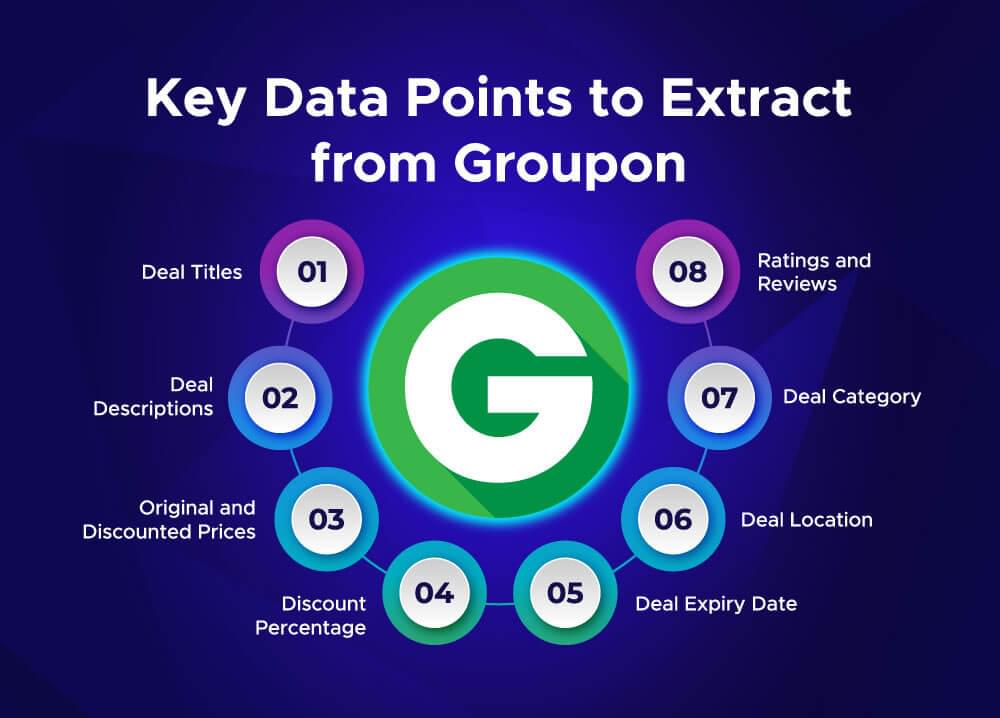

Key Data Points to Extract from Groupon

When you’re using a Groupon Scraper, you need to pinpoint the critical data that makes your scraping work count. Groupon has tons of deals in different categories, and pulling out the correct info can help you get the most from your scraping project. Here’s what you should focus on when you scrape Groupon:

- Deal Titles: The name or title of a deal grabs attention first. It gives a quick idea of what’s on offer.

- Deal Descriptions: In-depth descriptions offer more details about the product or service, helping people understand what the offer includes.

- Original and Discounted Prices: These play a crucial role in understanding the available savings. By getting both the original price and the discounted price, you can work out the percentage of savings.

- Discount Percentage: Many Groupon deals show the percentage of discounts right away. Getting this data point saves you time in figuring out the savings yourself.

- Deal Expiry Date: Knowing when a deal ends helps to filter out old offers. Getting the expiry date makes sure you look at active deals.

- Deal Location: Certain offers apply to specific areas. Getting location info lets you sort deals by region, which helps a lot with local marketing efforts.

- Deal Category: Groupon puts deals into groups like food, travel, electronics, and so on. Grabbing category details makes it simple to break down the deals for study or display.

- Ratings and Reviews: What customers say and how they score deals shows how popular and trustworthy an offer is. This info proves helpful in judging the quality of deals.
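Prices on Groupon arrive as display strings, so computing the savings yourself (or filtering out expired offers) means parsing them first. Here's a minimal sketch, assuming prices look like "$1,299.99"; the `Deal` class, its field names, and the `parse_price` helper are hypothetical illustrations, not part of Groupon's markup or any library.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Optional


def parse_price(text: str) -> Optional[float]:
    """Turn a price string such as "$1,299.99" into a float; return None if no number is found."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text or "")
    if match is None:
        return None
    return float(match.group().replace(",", ""))


@dataclass
class Deal:
    # Hypothetical record covering some of the data points listed above.
    title: str
    original_price: Optional[float] = None
    discounted_price: Optional[float] = None
    expiry: Optional[date] = None
    location: Optional[str] = None
    category: Optional[str] = None

    def discount_percent(self) -> Optional[float]:
        """Savings as a percentage of the original price, rounded to one decimal place."""
        if not self.original_price or self.discounted_price is None:
            return None
        saved = self.original_price - self.discounted_price
        return round(saved / self.original_price * 100, 1)

    def is_active(self, today: Optional[date] = None) -> bool:
        """Treat a deal with no known expiry date as active."""
        if self.expiry is None:
            return True
        return self.expiry >= (today or date.today())
```

For example, a deal listed at $120 and offered at $78 gives `Deal("Spa Day", 120.0, 78.0).discount_percent()` equal to `35.0`. Where Groupon already shows a discount percentage, you can use this calculation to cross-check it.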

By zeroing in on these key bits of data, you can make sure your Groupon Scraping produces information that is relevant and usable. The next parts will show you how to set up your tools and build a scraper that pulls deals from Groupon reliably.

Crawlbase Crawling API for Groupon Scraping

Building a Groupon Scraper can be tough because the site loads much of its content dynamically. Groupon's website uses a lot of JavaScript to display deals and offers, so simple HTTP requests won't get you the data you want. This is where the Crawlbase Crawling API comes in handy: it lets you extract data from Groupon without running into problems with JavaScript rendering, CAPTCHAs, or IP blocking.

Why Use the Crawlbase Crawling API?

- Handle JavaScript Rendering: The biggest hurdle when scraping Groupon deals is handling content that JavaScript generates. Crawlbase's API renders that JavaScript before returning the page, so the deal data is present in the HTML you receive.

- Avoid IP Blocking and CAPTCHAs: If you send too many requests, Groupon may block your IP or show CAPTCHAs. Crawlbase rotates IPs automatically and handles CAPTCHAs, so you can keep collecting Groupon data without interruption.

- Easy Integration: You can add the Crawlbase Crawling API to your Python code without much trouble. This lets you focus on getting the data you need, while the API handles the tricky stuff in the background.

- Scalable Scraping: Crawlbase offers flexible choices to handle Groupon scraping projects of any size. You can use it to gather small datasets or to carry out large-scale data collection efforts.

Crawlbase Python Library

Crawlbase offers its own Python library to help its customers. You need an access token to authenticate when you use it. You can get this token after you create an account.

Here’s an example function that shows how to use the Crawling API from the Crawlbase library to send requests.

```python
from crawlbase import CrawlingAPI
```

Note: Crawlbase offers two token types: a Normal Token for static sites and a JavaScript (JS) Token for dynamic or browser-based requests. For Groupon, you’ll need a JS Token. You can start with 1,000 free requests, no credit card needed. Check out the Crawlbase Crawling API docs here.
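As a minimal sketch of that request flow, the helpers below assume that `CrawlingAPI.get(url, options)` returns a dict with `status_code` and `body` keys, with the body as bytes, which is how the Crawlbase Python library's examples use it. The names `make_api` and `fetch_html` are hypothetical, and `YOUR_JS_TOKEN` is a placeholder for your own JS token.

```python
from typing import Any, Dict, Optional


def make_api(token: str) -> Any:
    """Create a Crawlbase client. The import sits inside the function so this
    module can be loaded even when the crawlbase package is not installed."""
    from crawlbase import CrawlingAPI
    return CrawlingAPI({"token": token})


def fetch_html(api: Any, url: str, options: Optional[Dict[str, Any]] = None) -> Optional[str]:
    """Fetch a page through the Crawling API and return its HTML, or None on failure."""
    response = api.get(url, options or {})
    if response.get("status_code") != 200:
        print(f"Request failed with status {response.get('status_code')}")
        return None
    body = response["body"]
    # The body typically comes back as bytes; decode it so it can be parsed as HTML.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

Usage: `html = fetch_html(make_api("YOUR_JS_TOKEN"), "https://www.groupon.com/local/washington-dc")`. Passing the client in as an argument, rather than creating it inside `fetch_html`, makes it easy to swap in a stub when testing your parsing code offline.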

Next up, we'll walk you through setting up Python and building Groupon scrapers that use the Crawlbase Crawling API to handle JavaScript and other scraping challenges. Let's jump into the setup process.

Setting Up Your Python Environment

Before we start writing the Groupon Scraper, we need a solid Python setup. Follow these steps.

Installing Python

First, you’ll need Python on your computer to scrape Groupon. You can get the newest version of Python from python.org.

Setting Up a Virtual Environment

We suggest using a virtual environment to keep different projects from clashing. To make a virtual environment, run these commands:

```bash
# Create a virtual environment
```

This keeps your project’s dependencies separate and makes them easier to manage.

Installing Required Libraries

Now, install the required libraries inside the virtual environment:

```bash
pip install crawlbase beautifulsoup4
```

Here’s a brief overview of each library:

- crawlbase: The main library for sending requests using the Crawlbase Crawling API, which handles JavaScript rendering needed to scrape Groupon.

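- pandas (optional, not included in the command above): Useful for storing and managing the scraped data; install it with pip install pandas if you need it.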

- beautifulsoup4: To parse and navigate through the HTML structure of Groupon pages.

Choosing the Right IDE

You can write your code in any text editor, but using an Integrated Development Environment (IDE) can make coding easier. Some popular IDEs include VS Code, PyCharm, and Jupyter Notebook. These tools have features that help you code better, like highlighting syntax, completing code, and finding bugs. These features come in handy when you’re building a Groupon Scraper.

Now that you’ve set up your environment and have your tools ready, you can start writing the scraper. In the next section, we’ll create a Groupon deals scraper.

Scraping Groupon Deals

In this part, we'll explain how to get deals from Groupon with Python and the Crawlbase Crawling API. Groupon uses JavaScript rendering and button-based ("Load more") pagination, so simple scraping methods don't work. We'll use Crawlbase's Crawling API, which handles both JavaScript rendering and this pagination without a hitch.

The URL we’ll scrape is: https://www.groupon.com/local/washington-dc

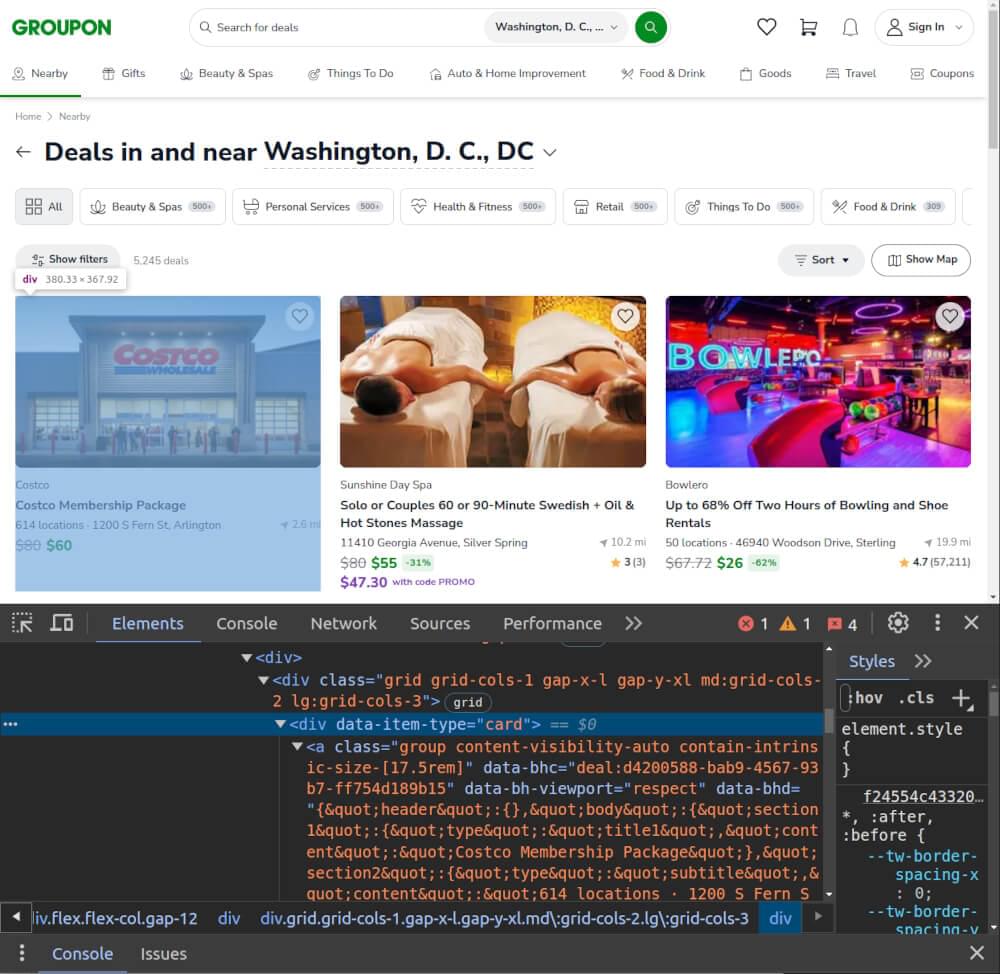

Inspecting the HTML Structure

Before writing the code, it’s crucial to inspect the HTML structure of Groupon’s deals page. This helps you determine the correct CSS selectors needed to extract the data.

Visit the URL: Open the URL in your browser.

Open Developer Tools: Right-click and select “Inspect” to open Developer Tools.

Identify Key Elements: Groupon deal listings are typically found within `<div>` elements with the class `cui-content`. Each deal has the following details:

- Merchant: Found inside the second `<div>` child of the `<a>` element.

- Title: Found within an `<h2>` tag with the class `text-dealCardTitle`.

- Link: Contained in the `href` attribute of the `<a>` tag.

- Original Price: Displayed in a `<div>` with the attribute `data-testid="strike-through-price"`.

- Discount Price: Displayed in a `<div>` with the attribute `data-testid="green-price"`.

- Location: Optional, usually in a `<span>` inside a `<div>` that sits next to the title `<h2>` element.
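The selectors listed above can be turned into a parsing function with BeautifulSoup. This is a minimal sketch, assuming the markup matches that description: it reads "next to the title" as the `<div>` that is the title's next sibling, and it treats every field as optional so a missing element yields `None` instead of an exception. The `parse_deals` function and its output keys are hypothetical names, and Groupon's class names may change over time.

```python
from bs4 import BeautifulSoup


def _text(tag):
    """Return the stripped text of a tag, or None when the tag is missing."""
    return tag.get_text(strip=True) if tag else None


def parse_deals(html: str) -> list:
    """Extract deal fields from each div.cui-content card in rendered Groupon HTML."""
    soup = BeautifulSoup(html, "html.parser")
    deals = []
    for card in soup.select("div.cui-content"):
        link = card.find("a")
        title = card.select_one("h2.text-dealCardTitle")
        # Merchant: the second <div> that is a direct child of the <a> element.
        link_divs = link.find_all("div", recursive=False) if link else []
        merchant = _text(link_divs[1]) if len(link_divs) > 1 else None
        # Location (optional): a <span> inside the <div> that follows the title <h2>.
        location_div = title.find_next_sibling("div") if title else None
        location = _text(location_div.find("span")) if location_div else None
        deals.append({
            "merchant": merchant,
            "title": _text(title),
            "link": link.get("href") if link else None,
            "original_price": _text(card.select_one('div[data-testid="strike-through-price"]')),
            "discount_price": _text(card.select_one('div[data-testid="green-price"]')),
            "location": location,
        })
    return deals
```

The prices come back as display strings (for example "$78"), which keeps the parser simple; convert them to numbers in a separate step if you need to compute savings.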

Writing the Groupon Scraper

We’ll begin by coding a simple function to get the deal info from the page. We’ll use the Crawlbase Crawling API to handle dynamic content loading because Groupon relies on JavaScript for rendering.

Here’s the code:

```python
from crawlbase import CrawlingAPI
```

The options parameter includes settings like ajax_wait, which waits for asynchronous (AJAX) content to finish loading, and page_wait, which waits 5 seconds before capturing the page so all elements have time to load (page_wait is given in milliseconds, so 5 seconds is 5000). You can read about Crawlbase Crawling API parameters here.

Handling Pagination

Groupon uses button-based pagination to load additional deals dynamically. To capture all the deals, we’ll leverage the css_click_selector parameter in the Crawlbase Crawling API. We have to pass a valid CSS selector of the “Load more” button as a value for this parameter. Read more about this parameter here.

Here’s how you can integrate it:

```python
def scrape_groupon_with_pagination(url):
```

In this function, we've added "Load more" button handling through the css_click_selector option, so deals loaded by the button are included in the captured page.
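The Crawling API options used for the deals page can be assembled in one place. This is a minimal sketch: `build_crawl_options` is a hypothetical helper, it converts a wait time in seconds to the milliseconds that page_wait expects, and `button.load-more` in the usage note is a placeholder selector, not Groupon's real one. Inspect the actual "Load more" button to find a valid CSS selector.

```python
from typing import Any, Dict, Optional


def build_crawl_options(page_wait_seconds: float = 5,
                        click_selector: Optional[str] = None) -> Dict[str, Any]:
    """Assemble Crawling API options for a JavaScript-rendered Groupon page."""
    options: Dict[str, Any] = {
        # Wait for AJAX requests to finish before the HTML is captured.
        "ajax_wait": "true",
        # page_wait is expressed in milliseconds.
        "page_wait": int(page_wait_seconds * 1000),
    }
    if click_selector:
        # CSS selector of an element (such as a "Load more" button) to click before capture.
        options["css_click_selector"] = click_selector
    return options
```

Usage: `api.get(url, build_crawl_options(click_selector="button.load-more"))`, where `api` is a `CrawlingAPI` instance created with your JS token. Omitting `click_selector` gives the plain rendering options used in the first scraper.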

Storing Data in a JSON File

Once you’ve collected the data, it’s easy to store it in a JSON file:

```python
import json
```
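A minimal sketch of such a save step, assuming the scraped deals are a list of dictionaries; `save_to_json` and its default filename `groupon_deals.json` are hypothetical choices for illustration.

```python
import json
from pathlib import Path
from typing import Any, List


def save_to_json(data: List[Any], filename: str = "groupon_deals.json") -> Path:
    """Write scraped records to a UTF-8 JSON file and return its path."""
    path = Path(filename)
    with path.open("w", encoding="utf-8") as f:
        # ensure_ascii=False keeps characters such as "é" readable in the file.
        json.dump(data, f, ensure_ascii=False, indent=2)
    return path
```

Writing with `indent=2` makes the file easy to inspect by hand; drop it if file size matters more than readability.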

Complete Code Example

Here’s the full code combining everything discussed:

```python
from crawlbase import CrawlingAPI
```

Test the Scraper:

Create a new file named groupon_deals_scraper.py, copy the code provided into this file, and save it. Run the script using the following command:

```bash
python groupon_deals_scraper.py
```

You should see an output similar to the example below in the JSON file.

```json
[
```

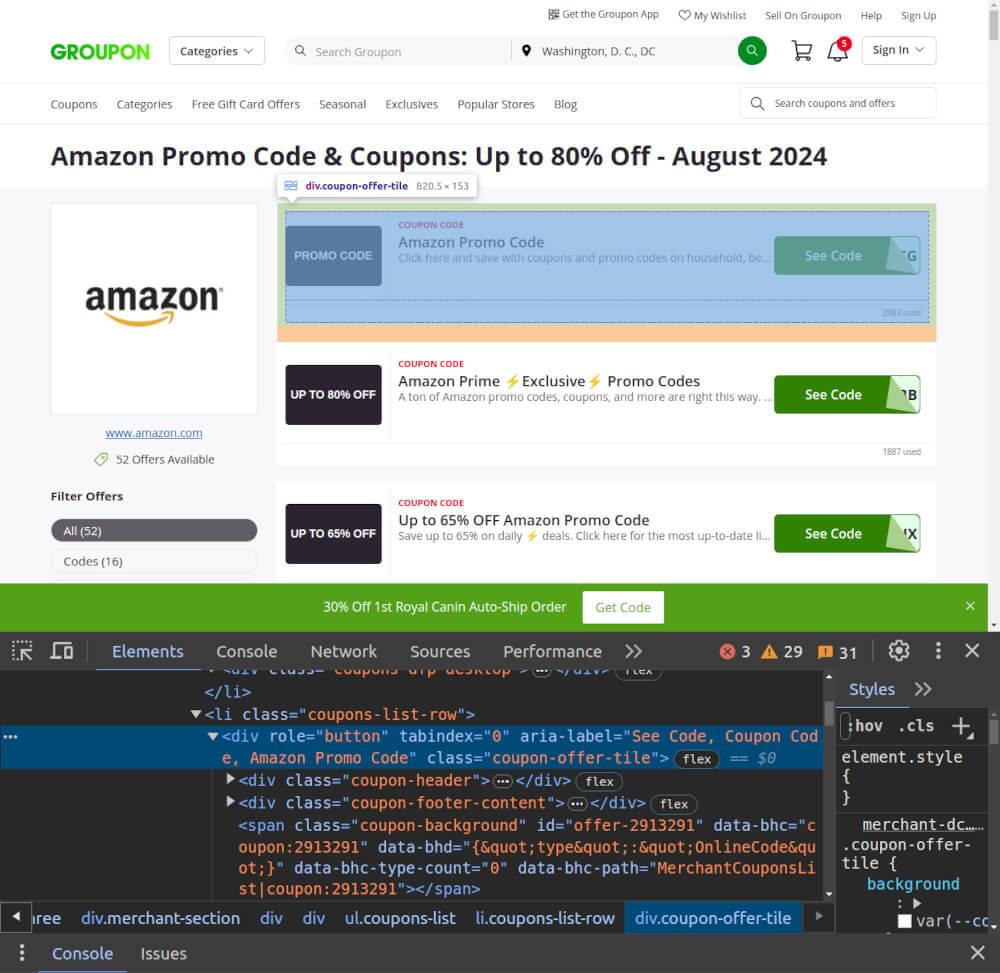

Scraping Groupon Coupons

In this part, we'll learn how to get coupons from Groupon with Python and the Crawlbase Crawling API. Groupon's coupon page is structured differently from its deals page, so we need to inspect its HTML separately. We'll use the Crawlbase API to get coupon titles, descriptions, expiration details, and links.

We’ll scrape this URL: https://www.groupon.com/coupons/amazon

Inspecting the HTML Structure

To scrape Groupon coupons effectively, it’s essential to identify the key HTML elements that hold the data:

Visit the URL: Open the URL in your browser.

Open Developer Tools: Right-click on the webpage and choose “Inspect” to open the Developer Tools.

Locate the Coupon Containers: Groupon's coupon listings are usually within `<div>` tags with the class `coupon-offer-tile`. Each coupon block contains:

- Title: Found inside an `<h2>` element with the class `coupon-tile-title`.

- Callout: Found within the `<div>` element with the class `coupon-tile-callout`.

- Description: Usually found in a `<p>` with the class `coupon-tile-description`.

- Coupon Type: Found inside a `<span>` tag with the class `coupon-tile-type`.
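The coupon selectors listed above map directly onto BeautifulSoup CSS selectors. This is a minimal sketch, assuming the markup matches that description; `parse_coupons` and its output keys are hypothetical names, and any missing element yields `None`.

```python
from bs4 import BeautifulSoup


def parse_coupons(html: str) -> list:
    """Extract coupon fields from each div.coupon-offer-tile in rendered HTML."""
    soup = BeautifulSoup(html, "html.parser")

    def text_of(tile, selector):
        # Return the stripped text of the first match, or None if nothing matches.
        tag = tile.select_one(selector)
        return tag.get_text(strip=True) if tag else None

    return [
        {
            "title": text_of(tile, "h2.coupon-tile-title"),
            "callout": text_of(tile, "div.coupon-tile-callout"),
            "description": text_of(tile, "p.coupon-tile-description"),
            "type": text_of(tile, "span.coupon-tile-type"),
        }
        for tile in soup.select("div.coupon-offer-tile")
    ]
```

Because each selector is scoped to a single tile, a field missing from one coupon doesn't shift values from neighboring coupons into the wrong record.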

Writing the Groupon Coupon Scraper

We’ll write a function that uses the Crawlbase Crawling API to handle dynamic content rendering and pagination while scraping the coupon data. Here’s the implementation:

```python
from crawlbase import CrawlingAPI
```

Storing Data in a JSON File

Once you have the coupon data, you can store it in a JSON file for easy access and analysis:

```python
def save_coupons_to_json(data, filename='groupon_coupons.json'):
```

Complete Code Example

Here is the complete code for scraping Groupon coupons:

```python
from crawlbase import CrawlingAPI
```

Test the Scraper:

Save the code to a file named groupon_coupons_scraper.py. Run the script using the following command:

```bash
python groupon_coupons_scraper.py
```

After running the script, you should find the coupon data saved in a JSON file named groupon_coupons.json.

```json
[
```

Final Thoughts

Building a Groupon scraper helps you stay on top of the best deals, promo codes, and coupons. With Python and the Crawlbase Crawling API, you can scrape Groupon pages, handle dynamic content, and pull out useful data without much trouble.

This guide showed you how to set up your environment, write the Groupon deals and coupons scraper, deal with pagination, and save your data. A well-designed Groupon scraper can automate the process if you want to track deals in a specific place or find the newest coupons.

If you're looking to expand your web scraping capabilities, consider exploring the following guides on scraping other important websites.

📜 How to Scrape Google Finance

📜 How to Scrape Google News

📜 How to Scrape Google Scholar Results

📜 How to Scrape Google Search Results

📜 How to Scrape Google Maps

📜 How to Scrape Yahoo Finance

📜 How to Scrape Zillow

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions

Q. Is scraping Groupon legal?

Scraping publicly available Groupon pages for personal use is generally acceptable as long as you stick to what the site allows. Still, review Groupon's terms of use to confirm that what you're doing is permitted. If you want to use Groupon data for commercial purposes, ask Groupon for permission first so you don't get into trouble.

Q. Why use the Crawlbase Crawling API instead of simpler methods?

Groupon relies heavily on JavaScript to display content. A plain HTTP request (for example, with requests) fetches the page before that JavaScript runs, so parsing the response with BeautifulSoup alone can miss deals that load dynamically. The Crawlbase Crawling API gets around this: it renders the page and can click the "Load more" button, so you can capture deals and coupons that only appear after JavaScript runs.

Q. How can I store scraped Groupon data?

You can store scraped Groupon data in different formats, such as JSON, CSV, or a database. In this guide, we've focused on saving data in a JSON file because it's easy to handle and works well for most projects. JSON also keeps the structure of the data intact, which makes it simple to analyze later.
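If you'd rather have a spreadsheet-friendly file, Python's standard csv module can write the same list of dictionaries the JSON examples use. A minimal sketch; `save_to_csv` is a hypothetical helper, and it assumes each record is a flat dictionary (no nested lists or dicts).

```python
import csv
from typing import Dict, List


def save_to_csv(records: List[Dict[str, object]], filename: str) -> None:
    """Write a list of flat dicts (such as scraped deals) to a CSV file."""
    if not records:
        return
    # Collect every key that appears in any record, preserving first-seen order,
    # so optional fields like "location" still get a column.
    fieldnames: List[str] = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)
    with open(filename, "w", newline="", encoding="utf-8") as f:
        # Missing keys are written as empty cells (DictWriter's default restval is "").
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(records)
```

Unlike JSON, CSV flattens everything to text, so numbers and missing values come back as strings when you read the file again.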