“What kind of marketing methodology do I need to learn more about my target market and its prospects?” “How many emails do I need to send to get responses from my prospects and learn how I can sell to them?” “How much money should I invest in someone who can gather customer data for me?”

These are probably just a few of your many questions while thinking of ways to get relevant customer data for your business. You might have resorted to the conventional way of paying someone to search for customer data.

Now, we all know that this method takes a long time and a huge amount of money, and the hardest part is that it doesn’t give you enough results. It is inefficient and a waste of time, money, and some hope. Businesses use many other ways to gather data, and each has its advantages over the others. But nowadays, efficiency and quality while staying within the budget are the difference makers. We will talk about this in this article.

Running a business is not only about selling but also about knowing what to sell, how to market it, who your audience is, whom to target, and so on. That is why big data is so important.

However, collecting huge amounts of data is not always easy and often comes with risk, so tools like Crawlbase come in handy.

This blog contains everything you need to know about big data web scraping. We will discuss five websites where you can find big data and how they can help your business. We will also introduce you to Crawlbase and show you simple steps to crawl websites with Node for big data using Crawlbase.

Amazon Scraping

Getting data from Amazon can help you in many ways. For most e-commerce businesses, it means understanding their competitors. Big data scraping from Amazon gives you real-time prices and lets you collect Amazon reviews for product research, so you can improve how your products perform once they go on sale. A big data web crawler for Amazon is extremely important for growth nowadays.

Download our Amazon Scraping Guide

Facebook Scraping

Let’s face it, you are freaking worried about your privacy, but if you are on Facebook, many things about you are exposed to the internet. That is why public Facebook profiles can be collected with tools like Crawlbase. Why would a company need this data? Risk assessment is one example: before giving a loan, a bank can scrape Facebook profiles to see what it can learn about you. Likewise, before hiring new employees, companies can anonymously crawl Facebook to learn about their future colleagues.

Instagram Scraping

Like with Facebook, you can gather data from different profiles by crawling an influencer database using an Instagram big data scraper. Scraping Instagram data is one of the most requested big-data trends nowadays, as you can easily learn about a brand or an influencer by crawling and scraping millions of Instagram pictures and profiles.

eBay Scraping

Again, this is crucial for e-commerce market research. Know about your competitors so you can beat them. eBay is a vast marketplace where big data scraping for prices, reviews, descriptions, and other data is a must if you want to stay afloat in the competitive e-commerce business world.

Google Scraping

Do you want to bring traffic to your website? Then you must work on your SEO, but not only that: you must also scrape Google to know where you rank compared to your competitors. Crawling Google allows you to get different kinds of data, which can help you stay ahead. With big data analysis, you can outperform your market and learn what products you should create and whom you must target.

These five scraping examples can take your business to the next level, but it doesn’t end here. With Crawlbase, you can crawl and scrape millions of websites and get real-time data for your projects. You should try it, as the first 1,000 requests are free of charge.

What is Crawlbase?

Crawlbase is an all-in-one data crawling and scraping platform for businesses and business developers. It lets you crawl public websites and conveniently scrape data from the web, extracting millions or even billions of records without breaking a sweat. Crawlbase’s Crawling API lets you scrape big data automatically with very high success rates.

When you crawl and scrape web data, you encounter blocks like IP bans and CAPTCHAs. These are some of the many things you’ll face when scraping data from web pages for your business. With Crawlbase big data crawler, you no longer have to worry about these blocks.

We understand you care about your identity while scraping. While it is not illegal, especially since Crawlbase only crawls and scrapes public websites, business owners and developers still want to extract data while keeping their anonymity secure. Crawlbase does that for you: scrape millions or even billions of records anonymously!

How Much Does Crawlbase Cost?

Crawlbase understands that every business has its own project scale and budget. Smaller businesses need a smaller amount of data and should only invest in proportion to their project size, so the cost of crawling web pages and scraping data from the web depends on the scale of your project.

How Do I Get Started?

Crawlbase will extract all the data you need from almost any website as long as it is public. You don’t need a developer to work on the behind-the-scenes code. Crawlbase is user-friendly, and even a little coding knowledge is enough for you to begin big data web scraping with us.

Get the data you need for your business from the web by signing up on Crawlbase’s website, and get 1,000 free requests!

How To Crawl Websites With Node For Big Data

Are you ready for big data scraping? Do you need to crawl and scrape data in massive amounts? At Crawlbase, we have the tools and resources for this job. Continue reading this post to learn how to quickly build crawlers that load millions of pages daily.

We will first need a Crawlbase account.

Once your account is ready and your billing details are added (a prerequisite for using our big data crawler), head over to the Crawlers section to create your first crawler.

Here is the control panel for your crawlers, where you can see, stop, start, delete, and create your Crawlbase crawlers.

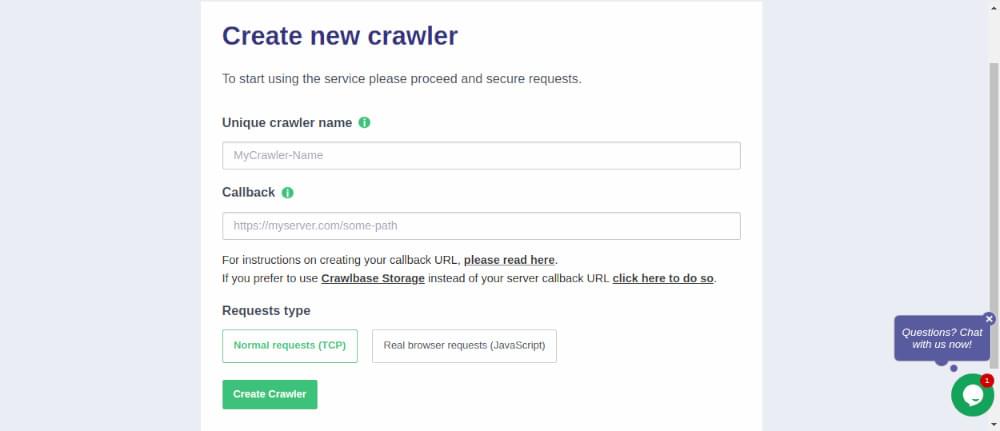

Creating Your First Crawler

Creating a crawler is very easy. Once you are in the Crawlers section (see above), click “Create new TCP crawler” if you want to load websites without JavaScript, or “Create new JS crawler” if you want to crawl JavaScript-enabled websites (like the ones made with React, Angular, Backbone, etc.).

You will see something like the following:

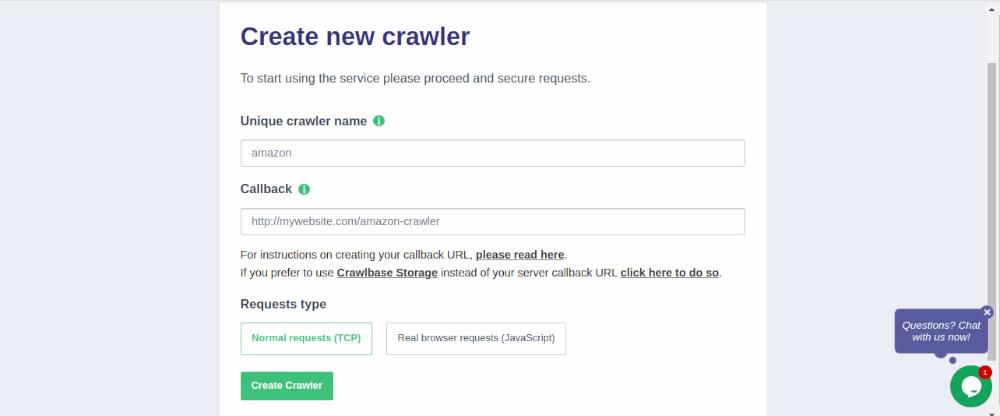

First, give your Node.js crawler a name. For this example, let’s call it “amazon”, as we will be crawling Amazon pages.

The next field is the callback URL. This is your server, which we will implement in Node for this example, but you can use any language: Ruby, PHP, Go, Node, Python, etc. For demo purposes, our Node server will live at the following URL: http://mywebsite.com/amazon-crawler

So our settings will look like the following:

Now let’s save the crawler with “Create crawler” and build our Node server.

Building a Node Scraping Server

Let’s start with the basic code for a Node server. Create a server.js file with the following contents:

const http = require('http');

This is a basic server running on port 80. We will build our response handling in the handleRequest function. If your server runs on a different port, for example 4321, make sure to update the callback URL in your crawler accordingly. For example: http://mywebsite.com:4321/amazon-crawler
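Since only the first line of server.js is shown above, here is a minimal sketch of what such a server could look like. It is not the original listing: the `startServer` helper and the placeholder body of `handleRequest` are assumptions made for illustration, and only Node’s built-in `http` module is used.

```javascript
const http = require('http');

// Placeholder handler (assumption): the real logic is built in the
// "Request Handling Function" section.
function handleRequest(request, response) {
  response.writeHead(200, { 'Content-Type': 'text/plain' });
  response.end('OK');
}

// Hypothetical helper: creates the server, starts listening on the given
// port, and returns the server so it can be closed later.
function startServer(port) {
  const server = http.createServer(handleRequest);
  server.listen(port, () => {
    console.log(`Crawler callback server listening on port ${port}`);
  });
  return server;
}

// To run on port 80 as described in this post, uncomment the next line.
// startServer(80);
```

Passing the port as an argument keeps the callback URL and the server in sync: if you call `startServer(4321)`, the crawler’s callback URL needs the same port.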

Request Handling Function

Crawlbase crawlers send the HTML responses to your server via POST. So we basically have to check that the request method is POST and then read the content of the body, which will be the page HTML. To keep it simple, this is going to be the code for our request handler:

function handleRequest(request, response) {

With this function, you can already start pushing requests to the crawler you just created before, and you should start seeing responses in your server.
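The handler listing above is cut off after its first line, so the following is only a sketch of the behavior described in this section, not the original code. It assumes the body arrives as plain UTF-8 HTML, as this post states; the 405 response for non-POST requests and the log message are illustrative choices.

```javascript
// Sketch of a callback handler: accept POST only, collect the body,
// and treat it as the page HTML.
function handleRequest(request, response) {
  if (request.method !== 'POST') {
    response.writeHead(405, { Allow: 'POST' });
    response.end('Method Not Allowed');
    return;
  }

  const chunks = [];
  // The body arrives in pieces; keep every chunk until the stream ends.
  request.on('data', (chunk) => chunks.push(chunk));
  request.on('end', () => {
    const html = Buffer.concat(chunks).toString('utf8');
    console.log(`Received ${html.length} characters of HTML`);
    // Acknowledge the delivery with a 200 status.
    response.writeHead(200);
    response.end();
  });
}
```

Collecting chunks in an array and joining them once at the end avoids corrupting multi-byte characters that happen to be split across two chunks.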

Let’s try running the following command in your terminal (make sure to replace YOUR_API_TOKEN with your real API token, which you can find in the API docs):

curl "https://api.crawlbase.com/?token=YOUR_API_TOKEN&url=https%3A%2F%2Fwww.amazon.com&crawler=amazon&callback=true"

Run that command several times, and you will start seeing the logs in your server.
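If you would rather build that URL in Node than type it by hand, a small helper along these lines may be useful. The function name `buildPushUrl` is made up for this sketch; the query parameters (`token`, `url`, `crawler`, `callback`) are the ones used in the curl command above.

```javascript
// Hypothetical helper: builds the same URL as the curl command, taking
// care of percent-encoding the page URL.
function buildPushUrl(token, pageUrl, crawlerName) {
  const params = new URLSearchParams({
    token: token,
    url: pageUrl,
    crawler: crawlerName,
    callback: 'true',
  });
  return `https://api.crawlbase.com/?${params.toString()}`;
}

console.log(buildPushUrl('YOUR_API_TOKEN', 'https://www.amazon.com', 'amazon'));
```

Letting `URLSearchParams` do the encoding means page URLs that carry their own query strings are escaped correctly as well.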

Please note that this is a basic implementation. For real-world usage, you will have to consider other things, such as better error handling, logging, and status codes.
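As one possible direction, the sketch below adds a body size cap, handling for stream errors, and explicit status codes. The names `collectBody` and `handleRequestSafely`, the 10 MB default limit, and the chosen status codes are all assumptions for illustration, not Crawlbase requirements.

```javascript
// Hypothetical helper: reads a request body into a string, rejecting if
// the body exceeds maxBytes or the stream reports an error.
function collectBody(request, maxBytes = 10 * 1024 * 1024) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    let received = 0;
    request.on('data', (chunk) => {
      received += chunk.length;
      if (received > maxBytes) {
        const error = new Error('Payload too large');
        error.statusCode = 413;
        reject(error);
        return;
      }
      chunks.push(chunk);
    });
    // If the promise was already rejected, this resolve is a no-op.
    request.on('end', () => resolve(Buffer.concat(chunks).toString('utf8')));
    request.on('error', reject);
  });
}

// Hypothetical hardened handler: logs failures and answers with a
// status code that reflects what went wrong.
async function handleRequestSafely(request, response) {
  if (request.method !== 'POST') {
    response.writeHead(405, { Allow: 'POST' });
    response.end();
    return;
  }
  try {
    const html = await collectBody(request);
    console.log(`Received ${html.length} characters of HTML`);
    response.writeHead(200);
  } catch (error) {
    console.error(`Failed to read callback body: ${error.message}`);
    response.writeHead(error.statusCode || 500);
  }
  response.end();
}
```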

Scraping Big Data from Amazon

Now it’s time to get the actual data from the HTML. We already have a blog post that explains in detail how to do it with Node, so why not jump over to it to learn about scraping with Node? The interesting part starts in the “Scraping Amazon reviews” section. You can apply the same code to your server, and you will have a running Crawlbase crawler. Easy, right?
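To give a feel for that last step without repeating the other post, here is a deliberately tiny sketch that pulls the page title out of the HTML your server receives. It is not the Amazon review scraper from that post: `extractTitle` is a made-up name, and a regular expression is used only to keep the example dependency-free. For real scraping, an HTML parser is the safer choice.

```javascript
// Illustrative only: returns the trimmed text of the first <title>
// element, or null when the HTML has none.
function extractTitle(html) {
  const match = /<title[^>]*>([\s\S]*?)<\/title>/i.exec(html);
  return match ? match[1].trim() : null;
}

const sampleHtml = '<html><head><title> Sample Page </title></head></html>';
console.log(extractTitle(sampleHtml));
```

You could call a function like this inside your request handler once the full body has been collected.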

Who Uses Big Data Nowadays?

Before we go further, let us talk about why big data is essential in today’s business. Can big data web scraping benefit all kinds of businesses and investors? The answer is an absolute “Yes!” Data is the new gold today. It fuels many businesses by giving their stakeholders enough knowledge about market trends, challenges, and opportunities.

Raw data can be turned into predictive data, which insurance companies, e-commerce businesses, manufacturers, service industries, and many more commonly use. E-commerce and manufacturing companies use a big data crawler for social media to learn more about demographics in their target locations. They also use this information to capture customers’ possible interests from their hashtags, shared content, and commonly used and liked comments, so they will know what’s “HOT” and what’s “NOT.”

They also crawl websites like Amazon and many other retail e-commerce websites to capture pricing information, dimensions, and even product reviews so they can come up with ideas for their own product/service innovations and development. Even the Real Estate industry utilizes a big data scraper to look for prospects and good properties for their listings.

How Much Data Do I Need?

Many people ask this question, but the answer depends on the type of business, its products and services, and how far the business wants to go with its data usage. There are several factors to consider, including but not limited to the following:

- With the business that I have, what kind of data do I need?

- Is there a specific service or product I need to create, develop, or market, and what kind of data would I need to boost it?

- How much should I invest in big data web scraping?

- And one of the most important questions is, “WHERE and HOW do I get it?”

WHERE and HOW Do I Get Big Data?

Okay, now that we have given you some idea of what data is, why it is important, and who uses it, remember when we said, “Efficiency and quality while staying within the budget are the difference makers”? Let us talk about it now.

Data. Something available on the internet. Just sit, boot up your computer, and search for anything in Google; you will find almost anything you are looking for. Easy? Of course! You can probably do a few searches a day, right? Yeah, sure.

Let’s say you run a retail business, and you want to get data from e-commerce websites to study product details, pricing, dimensions, reviews, and availability per region. You can have someone spend some time browsing through the websites and collecting the needed data. Is it possible? Yeah, why wouldn’t it be? But what if you’re looking at websites with BILLIONS of pages and BILLIONS of different products? You’ll need more than just a team.

There are a lot of companies and providers who can help you with big data scraping. But if you are looking for a company that can give you data and quality while keeping the process and the budget simple, there should be no one in the market who can beat Crawlbase.