Major cities around the world have recently reported spikes in house prices for several reasons. Property data is among the most sought-after information today as more people turn to technology to navigate this market. Homes.com is a useful resource in the real estate sector, with a vast database of property listings across the United States. Most prospective buyers use the website to gather important information such as prices, locations, and other specifics at their convenience.

However, browsing through hundreds of pages on Homes.com can be a daunting task. That’s why scraping homes.com is a good opportunity for buyers, investors, and sellers to gain valuable insights into housing prices in the United States.

This blog will teach you how to scrape homes.com using Python and Crawlbase. It covers everything from setting up your environment to handling anti-scraping measures, so you can build a working homes.com scraper.

Here’s a detailed tutorial to scrape property data on Homes.com:

Table of Contents

- Why Scrape homes.com Property Data?

- What can we Scrape from homes.com?

- Bypass Homes.com Blocking with Crawlbase

- Overview of Homes.com Anti-Scraping Measures

- Using Crawlbase Crawling API for Smooth Scraping

- Environment Setup for homes.com Scraping

- How to Scrape homes.com Search Pages

- How to Scrape homes.com Property Pages

- Final Thoughts

- Frequently Asked Questions (FAQs)

Why Scrape homes.com Property Data?

There are many reasons you might want to scrape homes.com. If you are a real estate professional or analyst, you can gather homes.com data to stay ahead of the market and gain insight into property values, rent prices, neighborhood statistics, and more. This information is crucial for making investment decisions and shaping marketing strategies.

If you are a developer or a data scientist, scraping homes.com with Python allows you to construct a powerful application that uses data as the foundation. By creating a homes.com scraper, you can automate the process of collecting and analyzing property data, saving time and effort. Additionally, having access to up-to-date property listings can help you identify emerging trends and opportunities in the real estate market.

Overall, scraping homes.com can bring many benefits to anyone who works in the real estate industry, whether investors, agents, data scientists, or developers.

What can we Scrape from homes.com?

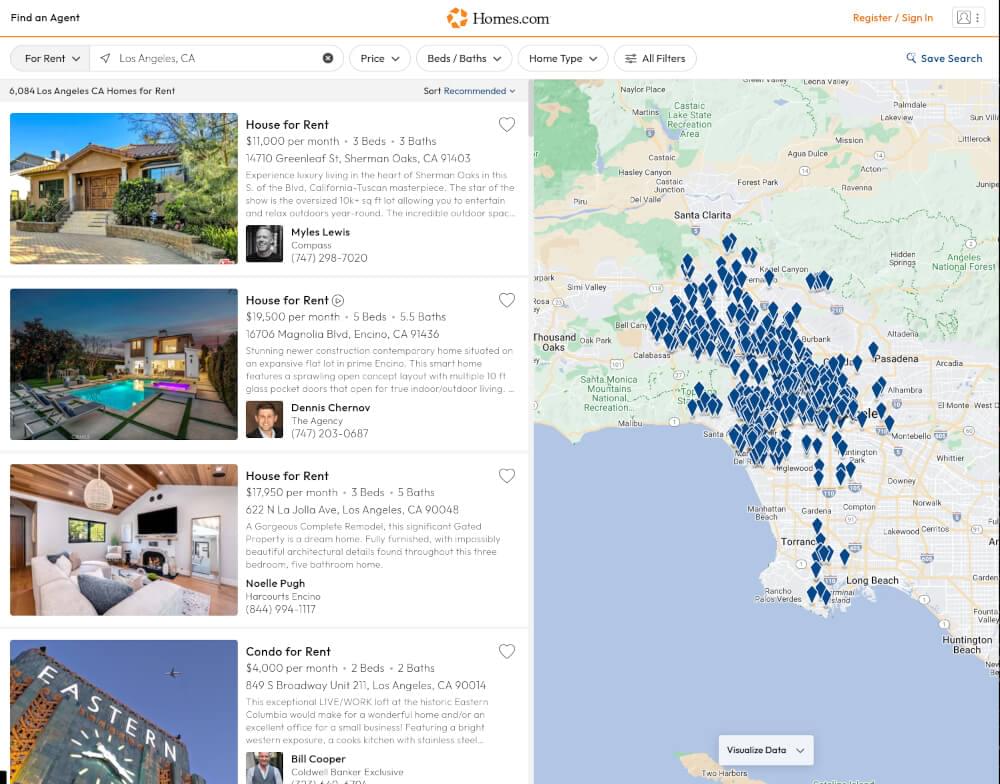

Here’s a glimpse of what you can scrape from homes.com:

An image showing the different types of data that can be scraped from Homes.com

- Property Listings: Homes.com property listings provide information about available homes, apartments, condos, and more. Scraping these listings provides data about important features, amenities, and images of properties.

- Pricing Information: Knowledge of the real estate market price trends is key to being in an advantageous position. Scraping pricing information from homes.com allows you to analyze price variations over time and across different locations.

- Property Details: Beyond the basics, homes.com provides specifics about each property, including square footage, number of bedrooms and bathrooms, property type, and so forth. You can scrape all of this information for a better understanding of each listing.

- Location Data: Location plays a significant role in real estate. Scraping location data from homes.com provides insights into neighborhood amenities, schools, transportation options, and more, helping you evaluate the desirability of a property.

- Market Trends: By scraping homes.com regularly, you can track market trends and fluctuations in supply and demand. This data enables you to identify emerging patterns and predict future market movements.

- Historical Data: Historical data is useful for studying past trends and patterns in real estate. With historical listing and pricing data scraped from homes.com, you can conduct longitudinal studies and understand long-term trends.

- Comparative Analysis: Using Homes.com data, you can compare properties within the same neighborhood, across town, or across the multiple locations where you want to buy or sell. This helps you quickly see who your competition is and inform your pricing strategy.

- Market Dynamics: Understanding market dynamics is essential for navigating the real estate landscape. Scraping data from homes.com allows you to monitor factors such as inventory levels, time on market, and listing frequency, providing insights into market health and stability.

Bypass Homes.com Blocking with Crawlbase

Homes.com, like many other websites, employs JavaScript rendering and anti-scraping measures to prevent automated bots from accessing and extracting data from its pages.

Overview of Homes.com Anti-Scraping Measures

Here’s what you need to know about how Homes.com tries to stop scraping:

- JS Rendering: Homes.com, like many other websites, uses JavaScript (JS) rendering to dynamically load content, making it more challenging for traditional scraping methods that rely solely on HTML parsing.

- IP Blocking: Homes.com may block access to its website from specific IP addresses if it suspects automated scraping activity.

- CAPTCHAs: To verify that users are human and not bots, Homes.com may display CAPTCHAs, which require manual interaction to proceed.

- Rate Limiting: Homes.com may limit the number of requests a user can make within a certain time frame to prevent scraping overload.

These measures make it challenging to scrape data from Homes.com using traditional methods.
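To illustrate why rate limiting matters for a hand-rolled scraper, here is a minimal sketch of retrying with exponential backoff. It is a generic pattern, not something specific to Homes.com; `fetch` is a hypothetical callable that returns the page HTML or `None` on failure, and the retry counts and delays are illustrative values.

```python
import time


def fetch_with_backoff(fetch, url, max_retries=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponentially growing delays on failure.

    `fetch` is a hypothetical callable returning HTML text, or None on failure.
    """
    for attempt in range(max_retries + 1):
        html = fetch(url)
        if html is not None:
            return html
        if attempt < max_retries:
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            sleep(base_delay * (2 ** attempt))
    return None
```

Passing `sleep` as a parameter keeps the helper easy to test without real waiting.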

Use Crawlbase Crawling API for Smooth Scraping

Crawlbase offers a reliable solution for scraping data from Homes.com while bypassing its blocking mechanisms. By utilizing Crawlbase’s Crawling API, you gain access to a pool of residential IP addresses, which reduces the chance of interruptions from IP blocking. Its request parameters let you adapt to common scraping problems such as dynamically loaded content.

The Crawling API can handle JavaScript rendering, which allows you to scrape dynamic content that wouldn’t be accessible with simple requests. Moreover, Crawlbase manages user-agent rotation and CAPTCHA solving, further improving the scraping process.

Crawlbase provides its own Python library for easy integration. The following steps demonstrate how you can use the Crawlbase library in your Python projects:

- Installation: Install the Crawlbase Python library by running the following command.

pip install crawlbase

- Authentication: Obtain an access token by creating an account on Crawlbase. This token will be used to authenticate your requests. For homes.com, we need the JS token.

Here’s an example function demonstrating the usage of the Crawling API from the Crawlbase library to send requests:

from crawlbase import CrawlingAPI
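A minimal sketch of such a request function follows. It assumes that `CrawlingAPI.get(url, options)` returns a dictionary with `headers` and `body` keys and that a `pc_status` header of `'200'` signals success; check the API documentation to confirm. The function name `fetch_html` and the injected `api` argument are illustrative choices, not part of the library.

```python
def fetch_html(api, url, options=None):
    """Fetch a page through a Crawling API client and return its HTML, or None.

    `api` is expected to be a crawlbase.CrawlingAPI instance, created with
    CrawlingAPI({'token': 'YOUR_CRAWLBASE_JS_TOKEN'}).
    """
    response = api.get(url, options or {})
    # Assumption: Crawlbase reports its own status in the pc_status header.
    pc_status = str(response.get('headers', {}).get('pc_status', ''))
    if pc_status != '200':
        print(f'Request failed for {url} (pc_status: {pc_status or "unknown"})')
        return None
    body = response.get('body', b'')
    # Decode the body if it arrives as bytes so it can be parsed as text.
    return body.decode('utf-8') if isinstance(body, bytes) else body
```

Taking the client as an argument keeps the function usable with any object that exposes a compatible `get` method, which also makes it simple to test offline.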

Note: The first 1000 requests through the Crawling API are free of cost, and no credit card is required. You can refer to the API documentation for more details.

Environment Setup for homes.com Scraping

Before diving into scraping homes.com, it’s essential to set up your environment to ensure a smooth and efficient process. Here’s a step-by-step guide to help you get started:

- Install Python: First, make sure you have Python installed on your computer. You can download and install the latest version of Python from the official website.

- Virtual Environment: It’s recommended to create a virtual environment to manage project dependencies and avoid conflicts with other Python projects. Navigate to your project directory in the terminal and execute the following command to create a virtual environment named “homes_scraping_env”:

python -m venv homes_scraping_env

Activate the virtual environment by running the appropriate command based on your operating system:

On Windows:

homes_scraping_env\Scripts\activate

On macOS/Linux:

source homes_scraping_env/bin/activate

- Install Required Libraries: Next, install the necessary libraries for web scraping. You’ll need libraries like BeautifulSoup and Crawlbase to scrape homes.com efficiently. You can install these libraries using pip, the Python package manager. Simply open your command prompt or terminal and run the following commands:

pip install beautifulsoup4
pip install crawlbase

- Code Editor: Choose a code editor or Integrated Development Environment (IDE) for writing and running your Python code. Popular options include PyCharm, Visual Studio Code, and Jupyter Notebook. Install your preferred code editor and ensure it’s configured to work with Python.

- Create a Python Script: Create a new Python file in your chosen IDE where you’ll write your scraping code. You can name this file something like “homes_scraper.py”. This script will contain the code to scrape homes.com and extract the desired data.

By following these steps, you’ll have a well-configured environment for scraping homes.com efficiently. With the right tools and techniques, you’ll be able to gather valuable data from homes.com to support your real estate endeavors.

How to Scrape homes.com Search Pages

Scraping property listings from Homes.com can give you valuable insights into the housing market.

In this section, we will show you how to scrape Homes.com search pages using Python with a straightforward approach.

Importing Libraries

We need to import the required libraries: CrawlingAPI for making HTTP requests and BeautifulSoup for parsing HTML content.

from crawlbase import CrawlingAPI

Initialize Crawling API

Get your JS token from Crawlbase and initialize the CrawlingAPI class with it.

# Initialize Crawlbase API with your access token
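One way to do this without hard-coding the token is sketched below. The environment variable name `CRAWLBASE_JS_TOKEN` and the helper names `load_token` and `build_api` are assumptions made for illustration; the `CrawlingAPI({'token': ...})` constructor call follows the library's documented usage.

```python
import os


def load_token(env=os.environ, var_name='CRAWLBASE_JS_TOKEN'):
    """Read the Crawlbase JS token from an environment variable (hypothetical name)."""
    token = env.get(var_name, '').strip()
    if not token:
        raise RuntimeError(f'Set the {var_name} environment variable to your JS token')
    return token


def build_api(token):
    """Create the Crawling API client.

    crawlbase is imported here so load_token can be used on its own.
    """
    from crawlbase import CrawlingAPI
    return CrawlingAPI({'token': token})
```

In a script this would be used as `crawling_api = build_api(load_token())`.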

Defining Constants

Set the base URL for Homes.com search pages and the output JSON file. To overcome the JS rendering issue, we can use the ajax_wait and page_wait parameters provided by the Crawling API. We can also provide a custom user_agent, as in the options below. We will also set a limit on the number of pages to scrape from the pagination.

BASE_URL = 'https://www.homes.com/los-angeles-ca/homes-for-rent'
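As a hedged sketch of these constants: `BASE_URL` is taken from the article, while the output file name, the page limit, the user agent string, the `page_wait` value, and the `/p<N>/` pagination pattern used by the hypothetical `page_url` helper are assumptions for illustration. `ajax_wait`, `page_wait`, and `user_agent` are the Crawling API parameters named above.

```python
BASE_URL = 'https://www.homes.com/los-angeles-ca/homes-for-rent'
OUTPUT_FILE = 'properties.json'  # hypothetical output file name
MAX_PAGES = 2                    # illustrative pagination limit

# Crawling API options: wait for AJAX content and give the page time to render.
options = {
    'ajax_wait': 'true',
    'page_wait': 10000,  # milliseconds; an illustrative value
    'user_agent': (
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
        '(KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36'
    ),
}


def page_url(page):
    """Build the URL for a results page, assuming a /p<N>/ pagination suffix."""
    return f'{BASE_URL}/p{page}/'
```

Verify the pagination pattern in your browser before relying on it, since the site's URL scheme may differ or change.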

Scraping Function

Create a function to scrape property listings from Homes.com. This function will loop through the specified number of pages, make requests to Homes.com, and parse the HTML content to extract property details.

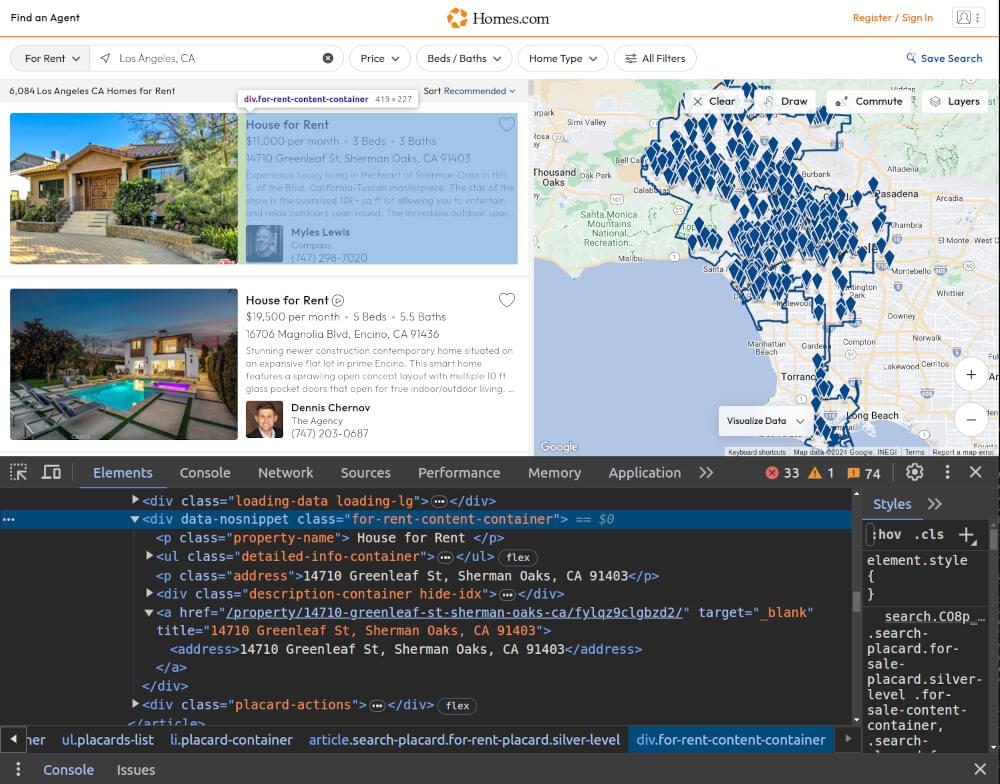

We have to inspect the page and find a CSS selector that matches all the listing elements.

Each listing is inside a div with class for-rent-content-container.

def scrape_listings():
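A minimal sketch of how such a function might look is shown here. It assumes the Crawling API client returns a dictionary with `headers` (including `pc_status`) and `body`, and that result pages follow a `/p<N>/` URL pattern; both are assumptions to verify. The `api`, `options`, and `max_pages` parameters are illustrative, while the `for-rent-content-container` class comes from the article.

```python
from bs4 import BeautifulSoup

BASE_URL = 'https://www.homes.com/los-angeles-ca/homes-for-rent'


def scrape_listings(api, options, max_pages=2):
    """Collect the listing elements from the first `max_pages` search pages."""
    all_listings = []
    for page in range(1, max_pages + 1):
        url = f'{BASE_URL}/p{page}/'  # assumed pagination pattern
        response = api.get(url, options)
        if str(response['headers'].get('pc_status')) != '200':
            print(f'Skipping page {page}: request failed')
            continue
        soup = BeautifulSoup(response['body'], 'html.parser')
        # Each listing sits in a div with class for-rent-content-container.
        all_listings.extend(soup.select('div.for-rent-content-container'))
    return all_listings
```

A failed page is skipped rather than aborting the run, so one blocked request does not discard the listings already collected.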

Parsing Data

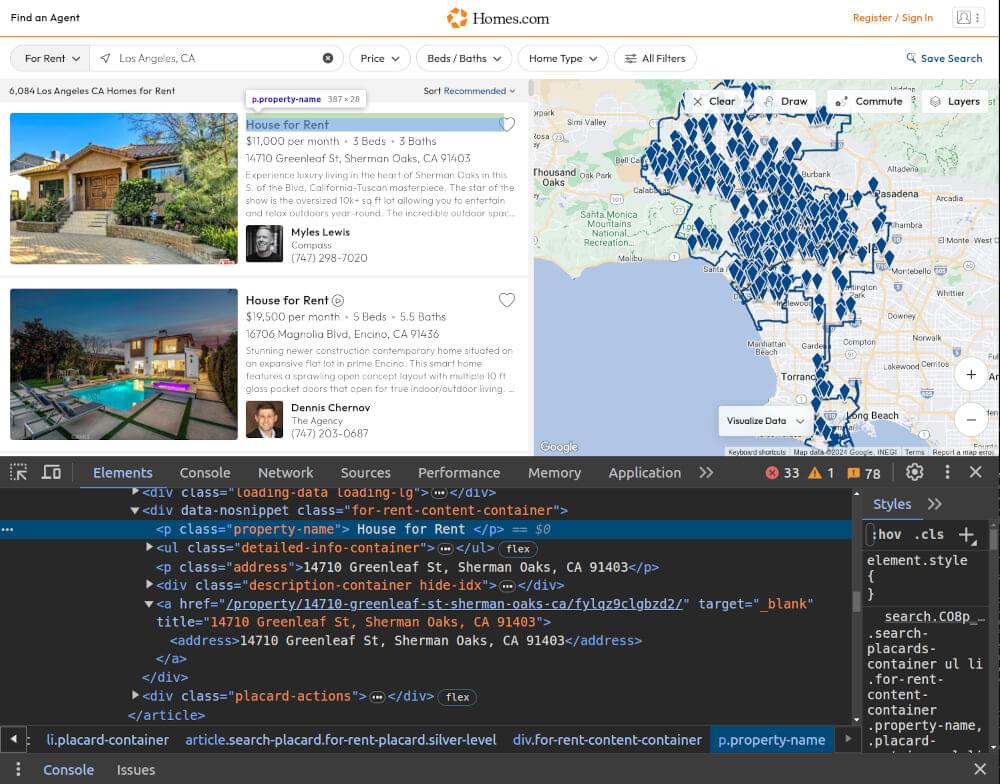

To extract relevant details from the HTML content, we need a function that processes the soup object and retrieves specific information. We can inspect the page and find the selectors of elements which hold the information we need.

In the image above, you can see that the title is inside a p element with class property-name, so we can use these as a selector to target the title element. Similarly, we can find selectors for the other elements that hold the information we need.

def parse_property_details(properties):
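The parsing step could be sketched as follows. Only the `p.property-name` selector comes from the article; the `address` and `price` selectors are hypothetical placeholders that you would replace with the ones you find when inspecting the page, and `text_or_none` is an illustrative helper.

```python
from bs4 import BeautifulSoup

# Only 'p.property-name' comes from the article; the other selectors are
# placeholders to replace with what you find in the browser's Inspect tool.
SELECTORS = {
    'title': 'p.property-name',
    'address': 'p.address',        # hypothetical
    'price': 'p.price-container',  # hypothetical
}


def text_or_none(element, selector):
    """Return the stripped text of the first match, or None if nothing matches."""
    match = element.select_one(selector)
    return match.get_text(strip=True) if match else None


def parse_property_details(properties):
    """Turn a list of listing elements into a list of dictionaries."""
    return [
        {field: text_or_none(prop, selector) for field, selector in SELECTORS.items()}
        for prop in properties
    ]
```

Returning `None` for missing fields keeps one incomplete listing from raising an exception and stopping the whole run.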

This function processes the list of property elements and extracts relevant details. It returns a list of dictionaries containing the property details.

Storing Data

Next, we need a function to store the parsed property details into a JSON file.

import json
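A minimal sketch of such a storage function, using only the standard library. The function name `save_to_json` and the default file name are assumptions for illustration.

```python
import json


def save_to_json(data, filename='properties.json'):
    """Write the scraped records to a JSON file."""
    with open(filename, 'w', encoding='utf-8') as f:
        # indent makes the file readable; ensure_ascii=False keeps
        # non-ASCII characters in addresses and names intact.
        json.dump(data, f, indent=2, ensure_ascii=False)
```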

This function writes the collected property data to a JSON file for easy analysis.

Running the Script

Finally, combine the scraping and parsing functions, and run the script to start collecting data from Homes.com search pages.

if __name__ == '__main__':
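The wiring of the three steps can be sketched like this. Here `main` receives the scraping, parsing, and storing functions as arguments, which is an illustrative design choice rather than the article's exact code.

```python
def main(scrape_listings, parse_property_details, save_to_json):
    """Run the scrape, parse, and store steps in order and return the records."""
    properties = scrape_listings()
    records = parse_property_details(properties)
    save_to_json(records)
    print(f'Saved {len(records)} listings')
    return records
```

Inside the `if __name__ == '__main__':` guard, this would be called with the three functions from the sections above, with any extra arguments bound beforehand, for example via `functools.partial`.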

Complete Code

Below is the complete code for scraping property listings from homes.com search pages.

from bs4 import BeautifulSoup

Example Output:

[

How to Scrape Homes.com Property Pages

Scraping Homes.com property pages can provide detailed insights into individual listings.

In this section, we will guide you through the process of scraping specific property pages using Python.

Importing Libraries

We need to import the required libraries: crawlbase for making HTTP requests and BeautifulSoup for parsing HTML content.

from crawlbase import CrawlingAPI

Initialize Crawling API

Initialize the CrawlingAPI class using your Crawlbase JS token, as shown below.

# Initialize Crawlbase API with your access token

Defining Constants

Set the target URL for the property page you want to scrape and define the output JSON file. To overcome the JS rendering issue, we can use the ajax_wait and page_wait parameters provided by the Crawling API. We can also provide a custom user_agent, as in the options below.

URL = 'https://www.homes.com/property/14710-greenleaf-st-sherman-oaks-ca/fylqz9clgbzd2/'

Scraping Function

Create a function to scrape the details of a single property from Homes.com. This function will make a request to the property page, parse the HTML content, and extract the necessary details.

def scrape_property(url):
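A hedged sketch of this function is given below. Unlike the one-argument signature above, this version takes the API client, the options, and the extraction function as parameters so that it is self-contained; that is an illustrative choice. It assumes the client returns a dictionary with `headers` (including `pc_status`) and `body`.

```python
from bs4 import BeautifulSoup


def scrape_property(api, url, options, extract):
    """Request one property page and return the details produced by `extract`.

    `extract` is a callable that takes a BeautifulSoup object and returns a dict.
    Returns None when the request does not succeed.
    """
    response = api.get(url, options)
    pc_status = str(response['headers'].get('pc_status'))
    if pc_status != '200':
        print(f'Failed to fetch {url} (pc_status: {pc_status})')
        return None
    soup = BeautifulSoup(response['body'], 'html.parser')
    return extract(soup)
```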

Extracting Property Details

Create a function to extract specific details from the property page. This function will parse the HTML and extract information such as the title, address, price, number of bedrooms, bathrooms, and description.

We can use the “Inspect” tool in the browser to find the CSS selectors of the elements holding the information we need, as we did in the previous section.

def extract_property_details(soup):
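The extraction step might look like the sketch below. Every selector in `PROPERTY_SELECTORS` is a hypothetical placeholder, since the article's actual selectors are not shown here; replace them with the ones you find using the Inspect tool.

```python
from bs4 import BeautifulSoup

# All selectors are hypothetical placeholders; replace each with the
# selector you find for that field on the live property page.
PROPERTY_SELECTORS = {
    'title': 'h1.property-title',
    'address': 'div.property-address',
    'price': 'span.property-price',
    'bedrooms': 'span.beds',
    'bathrooms': 'span.baths',
    'description': 'div.property-description',
}


def extract_property_details(soup):
    """Extract each configured field from a property page, or None if absent."""
    details = {}
    for field, selector in PROPERTY_SELECTORS.items():
        element = soup.select_one(selector)
        # Join nested text nodes with single spaces and trim whitespace.
        details[field] = element.get_text(' ', strip=True) if element else None
    return details
```

Keeping the selectors in one dictionary means a markup change on the site only requires editing that mapping.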

Storing Data

Create a function to store the scraped data in a JSON file. This function takes the extracted property data and saves it into a JSON file.

import json

Running the Script

Combine the functions and run the script to scrape multiple property pages. Provide the property IDs you want to scrape in a list.

if __name__ == '__main__':
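Looping over several property pages can be sketched as follows. `scrape_one` is a hypothetical callable that takes a URL and returns a dictionary of details, or `None` when the page could not be scraped.

```python
def scrape_properties(urls, scrape_one):
    """Scrape each URL in turn and collect the pages that succeeded."""
    results = []
    for url in urls:
        details = scrape_one(url)
        if details is not None:
            results.append(details)
    return results
```

Skipping `None` results means a single failed page does not end up as an empty entry in the output file.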

Complete Code

Below is the complete code for scraping a homes.com property page.

from crawlbase import CrawlingAPI

Example Output:

{

Optimize Homes.com Scraping with Crawlbase

Scraping data from Homes.com is useful for market research, investment analysis, and marketing strategies. Using Python with libraries like BeautifulSoup or services like Crawlbase, you can efficiently collect data from Homes.com listings.

Crawlbase’s Crawling API handles scraping tasks reliably, making your requests resemble genuine user interactions. This approach improves scraping efficiency while reducing the risk of detection and blocking by Homes.com’s anti-scraping measures.

If you’re interested in learning how to scrape data from other real estate websites, check out our helpful guides below.

📜 How to Scrape Realtor.com

📜 How to Scrape Zillow

📜 How to Scrape Airbnb

📜 How to Scrape Booking.com

📜 How to Scrape Redfin

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Remember to follow ethical guidelines and respect the website’s terms of service. Happy scraping!

Frequently Asked Questions (FAQs)

Q. Is scraping data from Homes.com legal?

Yes, scraping data from Homes.com is legal as long as you abide by their terms of service and do not engage in any activities that violate their policies. It’s essential to use scraping responsibly and ethically, ensuring that you’re not causing any harm or disruption to the website or its users.

Q. Can I scrape Homes.com without getting blocked?

While scraping Homes.com without getting blocked can be challenging due to its anti-scraping measures, there are techniques and tools available to help mitigate the risk of being blocked. Leveraging APIs like Crawlbase, rotating IP addresses, and mimicking human behavior can help improve your chances of scraping smoothly without triggering blocking mechanisms.

Q. How often should I scrape data from Homes.com?

The frequency of scraping data from Homes.com depends on your specific needs and objectives. It’s essential to strike a balance between gathering timely updates and avoiding overloading the website’s servers or triggering anti-scraping measures. Regularly monitoring changes in listings, market trends, or other relevant data can help determine the optimal scraping frequency for your use case.