Gumtree is one of the most popular online classifieds websites, where users can buy and sell products or services locally. Whether you’re looking for cars, furniture, property, electronics, or even jobs, Gumtree has millions of listings that update regularly. With over 15 million unique monthly visitors and more than 1.5 million active ads at any time, Gumtree provides a wealth of data that can be used for price comparison, competitor analysis, or tracking trends.

In this blog, we will walk you through how to scrape Gumtree search listings and individual product pages using Python. We will also show how to store the data in CSV files for easy analysis. At the end, we’ll discuss how to optimize the process using Crawlbase Smart AI Proxy to avoid issues like IP blocking.

Let’s dive in!

Table of Contents

- Installing Python and Required Libraries

- Choosing an IDE

- Inspecting the HTML for CSS Selectors

- Writing the Search Listings Scraper

- Handling Pagination in Gumtree

- Storing Data in a CSV File

- Complete Code Example

- Inspecting the HTML for CSS Selectors

- Writing the Product Page Scraper

- Storing Data in a CSV File

- Complete Code Example

- Benefits of Crawlbase Smart AI Proxy

- Integrating Crawlbase Smart AI Proxy

Why Scrape Gumtree Data?

Scraping Gumtree data is useful for many things. As the leading online classifieds platform, Gumtree connects buyers and sellers for a wide range of products. Here are some reasons to scrape Gumtree:

- Market Trend Analysis: See product prices and availability to track the market.

- Competitor Research: Monitor competitors’ listings and pricing to stay ahead.

- Identify Popular Products: Find trending items and high-demand products.

- Informed Business Decisions: Use data to make buying and selling choices.

- Price Tracking: Track price changes over time to find deals or trends.

- User Behavior Insights: Analyse listings to see what users want.

- Enhanced Marketing Strategies: Refine your marketing based on current trends.

In the following sections, we will show you how to effectively scrape Gumtree search listings and product pages.

Key Data Points to Extract from Gumtree

Before writing a scraper, decide which fields you need. These are the key data points to focus on:

- Product Title: The title of the product is usually in the main heading of the listing. This is the most important part.

- Price: The listing price is what the seller is asking for the product. Monitoring prices will help you work out the market value.

- Location: The location of the seller is usually in the listing. This is useful for understanding regional demand and supply.

- Description: The product description covers the item’s condition, features, and specs.

- Image URL: The image URL points to the listing’s photos, which help you judge the condition and appeal of the product.

- Listing URL: The direct link to the product page is needed to get more details or contact the seller.

- Date Listed: The date the listing was posted helps you track how long the item has been available and can indicate demand.

- Seller’s Username: The name of the seller can give you an idea of trustworthiness and reliability, especially if you’re comparing multiple listings.

Setting Up Your Python Environment

Before you can start scraping Gumtree, you need to set up your Python environment. This involves installing Python and the required libraries. This will give you the tools to send requests, extract data and store it for analysis.

Installing Python and Required Libraries

First, make sure you have Python installed on your machine. If you don’t, you can download it from the official Python website. Once it is installed, open a terminal or command prompt and install the required libraries with pip.

Here’s a list of the key libraries needed for scraping Gumtree:

- Requests: To send HTTP requests and receive responses.

- BeautifulSoup: For parsing HTML and extracting data.

- Pandas: For organizing and saving data in CSV format.

Run the following command to install these libraries:

pip install requests beautifulsoup4 pandas

Choosing an IDE

An Integrated Development Environment (IDE) makes coding easier and more efficient. Here are some popular IDEs for Python:

- PyCharm: A powerful, full-featured IDE with smart code assistance and debugging tools.

- Visual Studio Code: A lightweight code editor with a wide range of extensions for Python development.

- Jupyter Notebook: Ideal for running code in smaller chunks, making it easier to test and debug.

Once your environment is set up, you can start scraping Gumtree listings. Next, we’ll look at the HTML structure to find the CSS selectors of the elements holding the data we need.

Scraping Gumtree Search Listings

In this section, we will learn how to scrape search listings from Gumtree. We’ll inspect the HTML structure, write the scraper, handle pagination, and store the data in a CSV file.

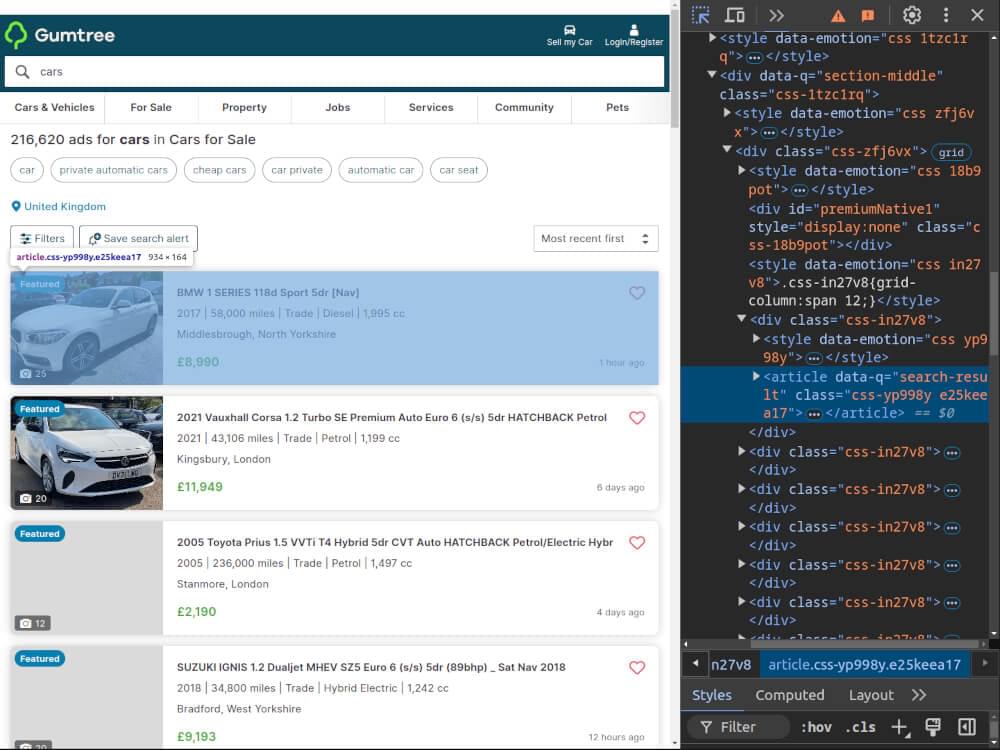

Inspecting the HTML for CSS Selectors

To get data from Gumtree, we first need to find the HTML elements that contain the information. Open your browser’s Developer Tools and inspect a listing.

Here are some key selectors:

- Title: Found in a <div> tag with the attribute data-q="tile-title".

- Price: Located in a <div> tag with the attribute data-testid="price".

- Location: Found in a <div> tag with the attribute data-q="tile-location".

- URL: The product link is in the href attribute of the <a> tag identified by the attribute data-q="search-result-anchor".

We will use these CSS selectors to extract the required data.
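Before sending any requests, it can help to check the selectors offline. The sketch below runs them against a hand-written HTML fragment; the fragment, the SELECTORS dictionary, and the sample values are illustrative assumptions, and the real Gumtree markup is more deeply nested and may change over time.

```python
from bs4 import BeautifulSoup

# Hand-written fixture that mimics one search result (not real Gumtree markup).
SAMPLE_HTML = """
<article>
  <a data-q="search-result-anchor" href="/p/bicycles/example-bike/123">
    <div data-q="tile-title">Example bike</div>
    <div data-testid="price">£120</div>
    <div data-q="tile-location">Leeds, West Yorkshire</div>
  </a>
</article>
"""

# CSS attribute selectors built from the attributes listed above.
SELECTORS = {
    "title": 'div[data-q="tile-title"]',
    "price": 'div[data-testid="price"]',
    "location": 'div[data-q="tile-location"]',
    "link": 'a[data-q="search-result-anchor"]',
}

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
title = soup.select_one(SELECTORS["title"]).get_text(strip=True)
price = soup.select_one(SELECTORS["price"]).get_text(strip=True)
location = soup.select_one(SELECTORS["location"]).get_text(strip=True)
href = soup.select_one(SELECTORS["link"])["href"]

print(title, price, location, href)
```

If a selector stops matching on the live site, select_one returns None, so re-inspect the page in Developer Tools and update the dictionary.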

Writing the Search Listings Scraper

Let’s write a function that sends a request to Gumtree, extracts the required data, and returns it.

import requests

This function extracts titles, prices, locations, and URLs from the search results page.
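A minimal sketch of such a function might look like the following. It assumes that the title, price, and location elements sit inside each search-result anchor, which you should confirm in Developer Tools. The names parse_search_listings, scrape_gumtree_search, BASE, and HEADERS, as well as the User-Agent string, are illustrative choices rather than anything Gumtree requires.

```python
import requests
from bs4 import BeautifulSoup
from urllib.parse import urljoin

BASE = "https://www.gumtree.com"
# A browser-like User-Agent; the exact string is an arbitrary example.
HEADERS = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36"}


def _text(node):
    """Return the stripped text of a tag, or None when the tag is missing."""
    return node.get_text(strip=True) if node else None


def parse_search_listings(html):
    """Extract title, price, location and URL for every result in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    listings = []
    # Assumption: each result's details are nested inside its anchor tag.
    for anchor in soup.select('a[data-q="search-result-anchor"]'):
        listings.append({
            "title": _text(anchor.select_one('div[data-q="tile-title"]')),
            "price": _text(anchor.select_one('div[data-testid="price"]')),
            "location": _text(anchor.select_one('div[data-q="tile-location"]')),
            # Relative links are resolved against the site root.
            "url": urljoin(BASE, anchor.get("href", "")),
        })
    return listings


def scrape_gumtree_search(url):
    """Fetch one search results page; return its listings, or [] on failure."""
    try:
        response = requests.get(url, headers=HEADERS, timeout=15)
        response.raise_for_status()
    except requests.RequestException as exc:
        print(f"Request failed for {url}: {exc}")
        return []
    return parse_search_listings(response.text)
```

Keeping the parsing step separate from the HTTP request makes it possible to test the selectors against saved HTML without touching the network.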

Handling Pagination in Gumtree

To scrape multiple pages, we need to handle pagination. The URL for subsequent pages usually contains a page parameter, such as ?page=2. We can modify the scraper to fetch data from multiple pages.

def scrape_gumtree_multiple_pages(base_url, max_pages):

This function iterates through a specified number of pages and collects the listings from each page.
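One way to sketch this loop is shown below. It assumes the page number is carried in a page query parameter, as described above. The helper build_page_url and the extra scrape_page argument (any function that takes a URL and returns a list of listings) are additions made here so the example is self-contained; they are not part of the original signature.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def build_page_url(base_url, page):
    """Return base_url with its `page` query parameter set to `page`."""
    parts = urlparse(base_url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query["page"] = str(page)  # overwrite any existing page value
    return urlunparse(parts._replace(query=urlencode(query)))


def scrape_gumtree_multiple_pages(base_url, max_pages, scrape_page):
    """Collect listings from pages 1..max_pages using `scrape_page(url)`."""
    all_listings = []
    for page in range(1, max_pages + 1):
        listings = scrape_page(build_page_url(base_url, page))
        if not listings:
            # An empty page usually means we ran past the last page.
            break
        all_listings.extend(listings)
    return all_listings
```

Pass your single-page scraper as scrape_page. Stopping at the first empty page avoids requesting pages that do not exist when max_pages is larger than the number of result pages.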

Storing Data in a CSV File

To store the scraped data, we’ll use the pandas library to write the results into a CSV file.

import pandas as pd

This function takes a list of listings and saves it into a CSV file with the specified filename.
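A minimal sketch of save_to_csv, assuming each listing is a dictionary whose keys become the column names. The boolean return value and the utf-8-sig encoding are choices made for this example.

```python
import pandas as pd


def save_to_csv(listings, filename):
    """Write a list of dicts to `filename`, one row per listing."""
    if not listings:
        print("No listings to save.")
        return False
    df = pd.DataFrame(listings)
    # utf-8-sig keeps characters such as the pound sign readable in Excel.
    df.to_csv(filename, index=False, encoding="utf-8-sig")
    print(f"Saved {len(df)} rows to {filename}")
    return True
```

Calling save_to_csv(listings, "gumtree_listings.csv") writes one row per listing, with a header row taken from the dictionary keys.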

Complete Code Example

Here’s the complete code to scrape Gumtree search listings, handle pagination, and save the results to a CSV file.

import requests

This script scrapes Gumtree search listings for a product, handles pagination, and saves the data in a CSV file for further analysis.

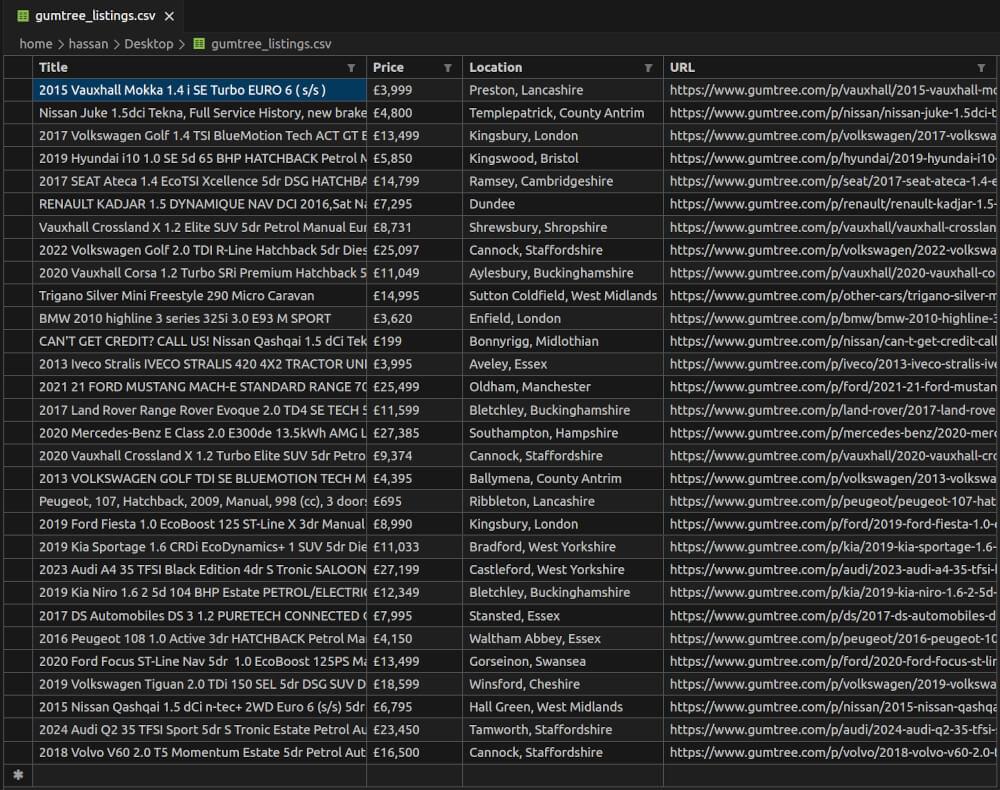

gumtree_listings.csv Snapshot:

Scraping Gumtree Product Pages

Now that we’ve scraped the search listings, the next step is to scrape individual product pages for more information. We will inspect the HTML structure of product pages, write the scraper, and save the data in a CSV file.

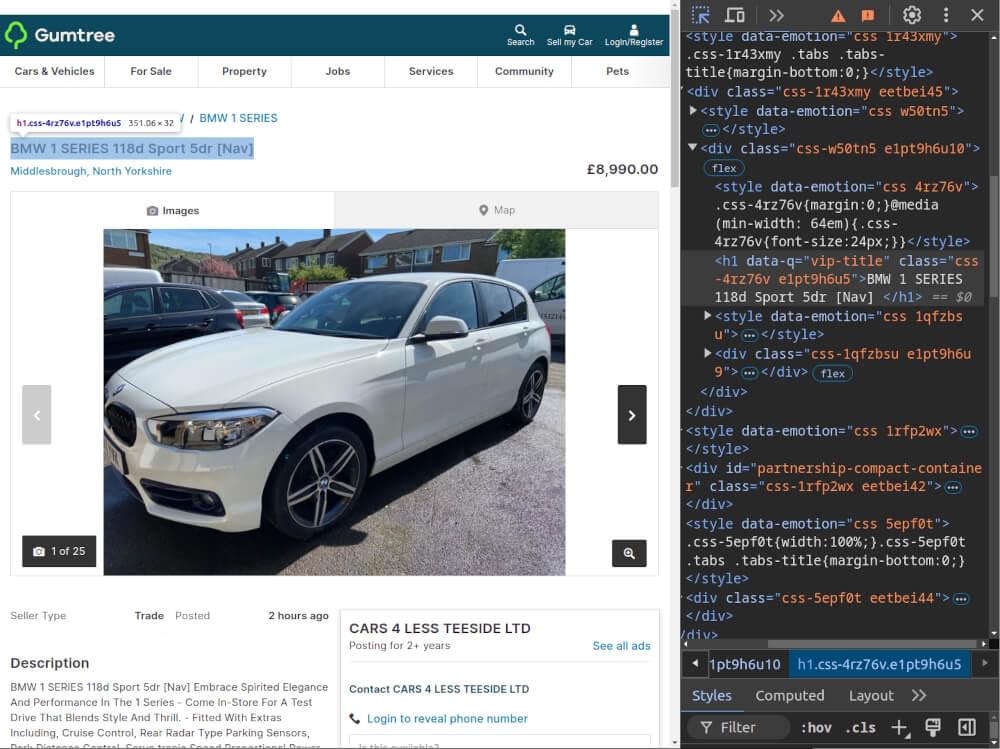

Inspecting the HTML for CSS Selectors

First, inspect the Gumtree product pages to find the HTML elements that contain the data. Open a product page in your browser and use the Developer Tools to find:

- Product Title: Located in an <h1> tag with the attribute data-q="vip-title".

- Price: Found inside an <h3> tag with the attribute data-q="ad-price".

- Description: Located in a <p> tag with the attribute itemprop="description".

- Seller Name: Inside an <h2> tag with the class seller-rating-block-name.

- Product Image URL: Found in <img> tags within an element that has the attribute data-testid="carousel", with the image URL stored in the src attribute.

Writing the Product Page Scraper

We’ll now create a function that takes a product page URL, fetches the page’s HTML content, and extracts the required information.

def scrape_gumtree_product_page(url):

This function sends a request to the product page URL, parses the HTML, and extracts the title, price, description, seller name, and product image URL.
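The following is a sketch of how such a function could be written, not a definitive implementation. The helper parse_product_page, the HEADERS value, and the image_urls key are assumptions introduced here. The carousel container is matched by its data-testid attribute alone, since its tag name is not given above.

```python
import requests
from bs4 import BeautifulSoup

# A browser-like User-Agent; the exact string is an arbitrary example.
HEADERS = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36"}


def _text(node):
    """Return the stripped text of a tag, or None when the tag is missing."""
    return node.get_text(strip=True) if node else None


def parse_product_page(html):
    """Extract the product details from a product page's HTML."""
    soup = BeautifulSoup(html, "html.parser")
    # Every <img> with a src attribute inside the carousel container.
    images = [img["src"] for img in soup.select('[data-testid="carousel"] img[src]')]
    return {
        "title": _text(soup.select_one('h1[data-q="vip-title"]')),
        "price": _text(soup.select_one('h3[data-q="ad-price"]')),
        "description": _text(soup.select_one('p[itemprop="description"]')),
        "seller_name": _text(soup.select_one("h2.seller-rating-block-name")),
        "image_urls": images,
    }


def scrape_gumtree_product_page(url):
    """Fetch a product page and return its parsed details."""
    response = requests.get(url, headers=HEADERS, timeout=15)
    response.raise_for_status()
    return parse_product_page(response.text)
```

Missing elements come back as None (or an empty list for images) instead of raising an error, so one incomplete listing does not stop a longer run.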

Storing Data in a CSV File

Once we have scraped the data, we will store it in a CSV file. We can reuse the save_to_csv function we used earlier for search listings.

import pandas as pd

Complete Code Example

Here’s the complete code to scrape product pages, extract the required details, and store them in a CSV file.

import requests

This script scrapes product details from individual Gumtree product pages and saves the extracted information in a CSV file. You can add more product URLs to the product_urls list to scrape multiple pages.

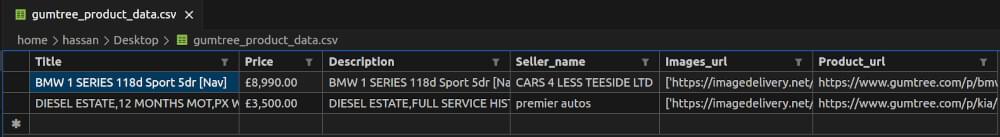

gumtree_product_data.csv Snapshot:

Optimizing Scraping with Crawlbase Smart AI Proxy

When scraping websites like Gumtree, you may run into rate limits or IP bans. To keep your scraper running smoothly, you can route requests through Crawlbase Smart AI Proxy, a service that helps you get past these restrictions.

Benefits of Crawlbase Smart AI Proxy

- Avoid IP Blocking: Crawlbase rotates IP addresses, so your requests do not all come from one address and are less likely to be blocked.

- CAPTCHA Handling: It handles CAPTCHA challenges for you so you can scrape without interruptions.

- Faster Scraping: By using multiple IPs you can make requests quickly and gather data faster.

- Geolocation: Choose proxies from specific locations to scrape localized data and get more relevant results.

Integrating Crawlbase Smart AI Proxy

To use Crawlbase Smart AI Proxy in your Gumtree scraper, set up your requests to route through the proxy. Here’s an example of how to do this:

import requests

In this code snippet, replace '_USER_TOKEN_' with your actual Crawlbase token. You can get one by creating an account on Crawlbase. The proxies dictionary routes your requests through the Crawlbase Smart AI Proxy, helping you avoid blocks and maintain fast scraping speeds.
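As a rough sketch of that setup, the proxies dictionary can be built as shown below. The host, port, token-as-username URL format, and the verify=False setting are assumptions here, so confirm them against your Crawlbase dashboard and documentation before use. The function names build_proxies and fetch_via_proxy are hypothetical.

```python
import requests

# Assumed Smart AI Proxy endpoint; verify host and port in your dashboard.
PROXY_HOST = "smartproxy.crawlbase.com"
PROXY_PORT = 8012


def build_proxies(token, host=PROXY_HOST, port=PROXY_PORT):
    """Return a requests-style proxies dict that authenticates with `token`."""
    # Assumption: the token is passed as the proxy username with no password.
    proxy_url = f"http://{token}:@{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url, token):
    """Fetch `url` through the proxy and return the response body as text."""
    response = requests.get(
        url,
        proxies=build_proxies(token),
        timeout=60,
        # Assumption: certificate verification is disabled because the proxy
        # sits in the middle of the HTTPS connection.
        verify=False,
    )
    response.raise_for_status()
    return response.text
```

A call such as fetch_via_proxy(page_url, "_USER_TOKEN_") then returns the page HTML, which can be passed to the same parsing code used without a proxy.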

By optimizing your Gumtree scraping process with Crawlbase Smart AI Proxy, you can gather data more effectively and handle larger volumes without facing common web scraping issues.

Optimize Gumtree Scraping with Crawlbase

Scraping Gumtree data can be very useful for your projects. In this blog, we have shown how to scrape search listings and product pages using Python. By inspecting the HTML and using the Requests and BeautifulSoup libraries, you can extract useful data such as titles, prices, and descriptions.

Make sure your scraping runs smoothly by using tools like Crawlbase Smart AI Proxy. It will help you avoid IP blocks and maintain fast scraping speeds so you can focus on getting the data you need.

If you’re interested in exploring scraping from other e-commerce platforms, feel free to explore the following comprehensive guides.

📜 How to Scrape Amazon

📜 How to scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Houzz Data

📜 How to Scrape Tokopedia

Contact our support if you have any questions. Happy scraping.

Frequently Asked Questions

Q. Is it legal to scrape data from Gumtree?

Yes, it is generally legal to scrape Gumtree data as long as you comply with their terms of service. Check the website’s policies before you start, and use the scraped data responsibly and ethically.

Q. What data can I scrape from Gumtree?

You can scrape various types of data from Gumtree, including product titles, prices, descriptions, images, and seller information. This data can help you analyze market trends or compare prices across different listings.

Q. How can I avoid getting blocked while scraping?

To avoid getting blocked while scraping, consider using a rotating proxy service like Crawlbase Smart AI Proxy. This will help you manage IP addresses so your scraping looks like regular user behavior. Also, implement delays between requests to reduce the chances of getting blocked.
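The delay advice can be sketched as a small helper that pauses for a random interval between requests. The function name fetch_all_with_delay, the default delay range, and the fetch argument (any function that takes a URL) are illustrative assumptions; tune the range to the site and your own needs.

```python
import random
import time


def fetch_all_with_delay(urls, fetch, min_delay=2.0, max_delay=5.0, sleep=time.sleep):
    """Call `fetch(url)` for each URL, pausing a random interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Random jitter makes the request pattern less regular
            # than a fixed pause would.
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results
```

The sleep parameter exists only so the pause can be swapped out in tests; in normal use, leave it at its default.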