Google Shopping stands out as one of the most data-rich e-commerce platforms. Its vast collection of products, prices, and retailers makes it a goldmine for companies and data enthusiasts alike.

Google Shopping plays a crucial role for online buyers and sellers. As of 2024, it lists millions of items from numerous retailers across the globe, giving shoppers a wide range of choices and bargains. When you extract data from Google Shopping, you gain insights into product prices, stock levels, and competitor offerings, which helps you make decisions based on facts.

This post will show you how to scrape Google Shopping data with Python. We’ll use the Crawlbase Crawling API to get around restrictions and collect the information.

Here’s a detailed tutorial on how to scrape Google Shopping results:

Table of Contents

- Benefits of scraping Google Shopping

- Key Data Points of Google Shopping

- Setting Up Your Python Environment

- Installing Required Libraries

- Key Elements of Google Shopping SERP

- Inspecting the HTML Structure

- Writing Google Shopping SERP Scraper

- Handling Pagination

- Saving Data to JSON File

- Complete Code

- Key Elements of Google Shopping Product Page

- Inspecting the HTML Structure

- Writing Google Shopping Product Page Scraper

- Saving Data to JSON File

- Complete Code

Why Scrape Google Shopping?

Scraping Google Shopping gives you insights that help shape your business plan, improve your products, and set the right prices. In this part, we’ll look at the benefits of collecting data from Google Shopping and the key data points you can extract.

Benefits of Scraping Google Shopping

Competitive Pricing Analysis

Pricing is one of the key factors in a customer’s purchase decision. By scraping Google Shopping, you can see your competitors’ prices in near real time and adjust your pricing accordingly. This keeps your prices competitive, which helps attract more customers and sales.

Product Availability Monitoring

Product availability is key to inventory management and meeting customer demand. Scraping Google Shopping lets you see which products are in stock, out of stock or on sale. This will help you optimize your inventory so you have the right products at the right time.

Trend Analysis and Market Insights

Staying on top of the trends is vital for any e-commerce business. By scraping Google Shopping, you can see emerging trends, popular products, and shifting customer behavior. This will help your product development, marketing strategies and business decisions.

Improving Product Listings

Detailed and beautiful product listings are the key to converting browsers into buyers. By looking at successful listings on Google Shopping, you can get ideas for your product descriptions, images, and keywords. This will help your rankings and visibility.

What Can You Scrape from Google Shopping?

When scraping Google Shopping, you can extract the following data points:

- Product Titles and Descriptions: See how competitors are presenting their products and refine your product listings to get more customers.

- Prices and Discounts: Extract helpful information on prices, including discounts and special offers, to monitor competitors’ pricing strategies. You can use this data to adjust your prices to stay competitive and sell more.

- Product Ratings and Reviews: Customer ratings and reviews give insight into customer satisfaction and product quality. You can analyze their feedback to see the strengths and weaknesses of your products.

- Retailer Information: Extract information about retailers selling similar products to identify the key players in your market as well as potential partners.

- Product Categories and Tags: See how products are categorized and tagged to improve your product organization and search engine optimization (SEO) so customers can find your products.

- Images and Visual Content: Images are crucial for capturing customer interest. By examining visual content from top-performing listings, you can enhance the quality of your product images to improve engagement.

Collecting and analyzing these data points enables you to make informed decisions that drive your business forward. In the next section, we’ll discuss how to overcome challenges in web scraping using the Crawlbase Crawling API.

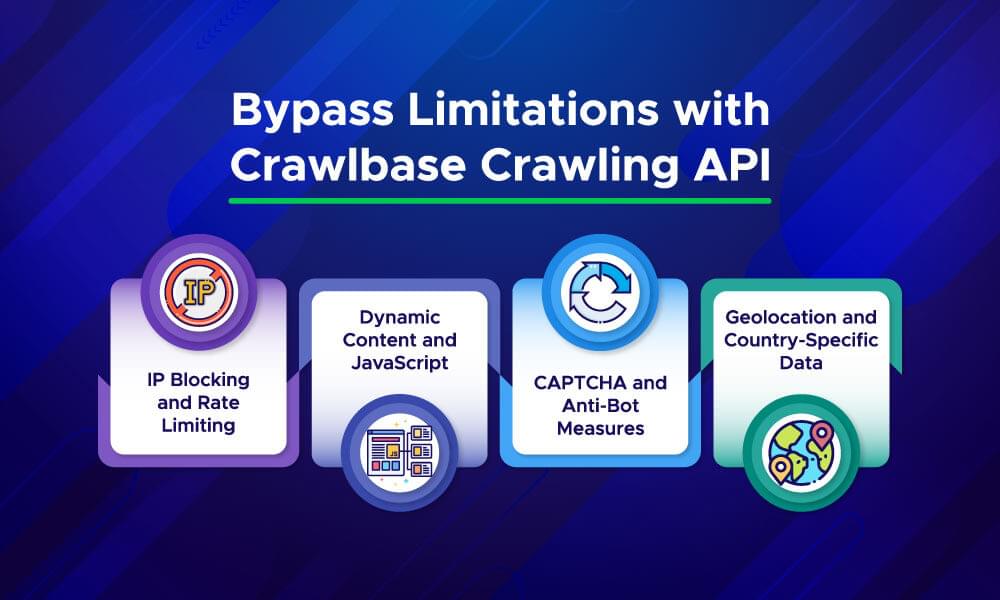

Bypass Limitations with Crawlbase Crawling API

Web scraping is a powerful tool for gathering data, but it comes with challenges like IP blocking, rate limits, dynamic content, and regional differences. The Crawlbase Crawling API helps overcome these issues, making the scraping process smoother and more effective.

IP Blocking and Rate Limiting

Websites may throttle or block IP addresses that send too many requests in a short period. Crawlbase Crawling API helps by rotating IP addresses and controlling request speeds, enabling you to scrape data without interruptions.

Dynamic Content and JavaScript

Many websites use JavaScript to load content after the page has initially loaded. Traditional scraping methods might miss this dynamic data. The Crawlbase Crawling API can handle JavaScript, ensuring you get all the content on the page, even the elements that appear later.

CAPTCHA and Anti-Bot Measures

To prevent automated scraping, websites often use captchas and other anti-bot measures. The Crawlbase Crawling API can get past these barriers, allowing you to keep collecting data without running into these obstacles.

Geolocation and Country-Specific Data

Websites sometimes show different content based on the user’s location. The Crawlbase Crawling API lets you choose the country for your requests so you can get data specific to a particular region, which is helpful for localized product information and pricing.

The Crawlbase Crawling API handles these common web scraping challenges, so you can collect valuable data from Google Shopping with fewer interruptions. In the next section, we’ll discuss what you need to set up your Python environment for scraping.

Prerequisites

Before you begin scraping Google Shopping data, you need to set up your Python environment and install the necessary libraries. This section will walk you through the essential steps to get everything ready for your web scraping project.

Setting Up Your Python Environment

Install Python

Ensure that Python is installed on your computer. Python is a popular programming language used for web scraping and data analysis. If you don’t have Python installed, download it from the official Python website. Follow the installation instructions for your operating system.

Create a Virtual Environment

Creating a virtual environment is a good practice to keep your project dependencies organized and avoid conflicts with other projects. To create a virtual environment, open your command line or terminal and run:

python -m venv myenv

Replace myenv with a name for your environment. To activate the virtual environment, use the following command:

On Windows:

myenv\Scripts\activate

On macOS and Linux:

source myenv/bin/activate
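To confirm that the environment is active before installing anything, one quick check is to compare `sys.prefix` with `sys.base_prefix`; the two differ inside an environment created with `venv`. This is a small sketch rather than part of the original setup steps, and the helper name `in_virtualenv` is hypothetical.

```python
import sys


def in_virtualenv():
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("Virtual environment active:", in_virtualenv())
```

If this prints `False` right after activation, the shell is probably still using the system interpreter.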

Installing Required Libraries

With your virtual environment set up, you need to install the following libraries for web scraping and data processing:

BeautifulSoup4

The BeautifulSoup4 library helps with parsing HTML and extracting data. It works well with the Crawlbase library for efficient data extraction. Install it by running:

pip install beautifulsoup4

Crawlbase

The Crawlbase library allows you to interact with the Crawlbase products. It helps handle challenges like IP blocking and dynamic content. Install it by running:

pip install crawlbase

Note: To access the Crawlbase Crawling API, you need a token. You can get one by creating an account on Crawlbase. Crawlbase provides two types of tokens: a Normal Token for static websites and a JavaScript (JS) Token for handling dynamic or browser-based requests. For Google Shopping, you need the Normal Token. The first 1,000 requests are free to get you started, with no credit card required.
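As a rough sketch of how the token might be wired in, the helpers below read it from an environment variable and wrap a single request. Several things here are assumptions rather than details from this guide: the variable name `CRAWLBASE_TOKEN`, the helper names `make_api` and `fetch_html`, and the response shape (a dict with `headers` and `body`, where a `pc_status` header of `'200'` signals success). Check the Crawlbase documentation before relying on them.

```python
import os


def make_api(token=None):
    """Build a CrawlingAPI client, reading the token from the environment."""
    # Imported lazily so fetch_html can be exercised without the package.
    from crawlbase import CrawlingAPI

    # CRAWLBASE_TOKEN is a hypothetical variable name chosen for this sketch.
    token = token or os.environ["CRAWLBASE_TOKEN"]
    return CrawlingAPI({"token": token})


def fetch_html(api, url, options=None):
    """Fetch a page through the API and return its HTML, or None on failure."""
    response = api.get(url, options or {})
    # Assumption: the client returns a dict with 'headers' and 'body', and
    # a 'pc_status' header of '200' means the crawl succeeded.
    if response.get("headers", {}).get("pc_status") != "200":
        return None
    body = response.get("body", b"")
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

A call could then look like `fetch_html(make_api(), url, {"country": "US"})`, where the `country` option is assumed to be how the API selects a request location. Keeping the token out of the source file also makes it harder to commit by accident.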

With these libraries installed, you’re ready to start scraping Google Shopping data. In the next section, we’ll dive into the structure of the Google Shopping search results page and how to identify the data you need to extract.

Google Shopping SERP Structure

Knowing the structure of the Google Shopping Search Engine Results Page (SERP) is key to web scraping. This helps you find and extract the data you need.

Key Elements of Google Shopping SERP

1. Product Listings

Each product listing has:

- Product Title: The name of the product.

- Product Image: The image of the product.

- Price: The price of the product.

- Retailer Name: The store or retailer selling the product.

- Ratings and Reviews: Customer ratings and review counts, if available.

2. Pagination

Google Shopping results are often spread across multiple pages. Pagination links allow you to get to more product listings, so you need to scrape data from all pages for full results.

3. Filters and Sorting Options

Users can refine search results by applying filters like price range, brand, or category. These will change the content displayed and are important for targeted data collection.

4. Sponsored Listings

Some products are marked as sponsored or ads and are displayed prominently on the page. If you only want non-sponsored products, you need to be able to tell the difference between sponsored and organic listings.

Next up, we’ll show you how to write a scraper for the Google Shopping SERP and save the data to a JSON file.

Scraping Google Shopping SERP

In this section, we’ll go through how to scrape the Google Shopping Search Engine Results Page (SERP) for product data. We’ll cover inspecting the HTML, writing the scraper, pagination and saving the data to a JSON file.

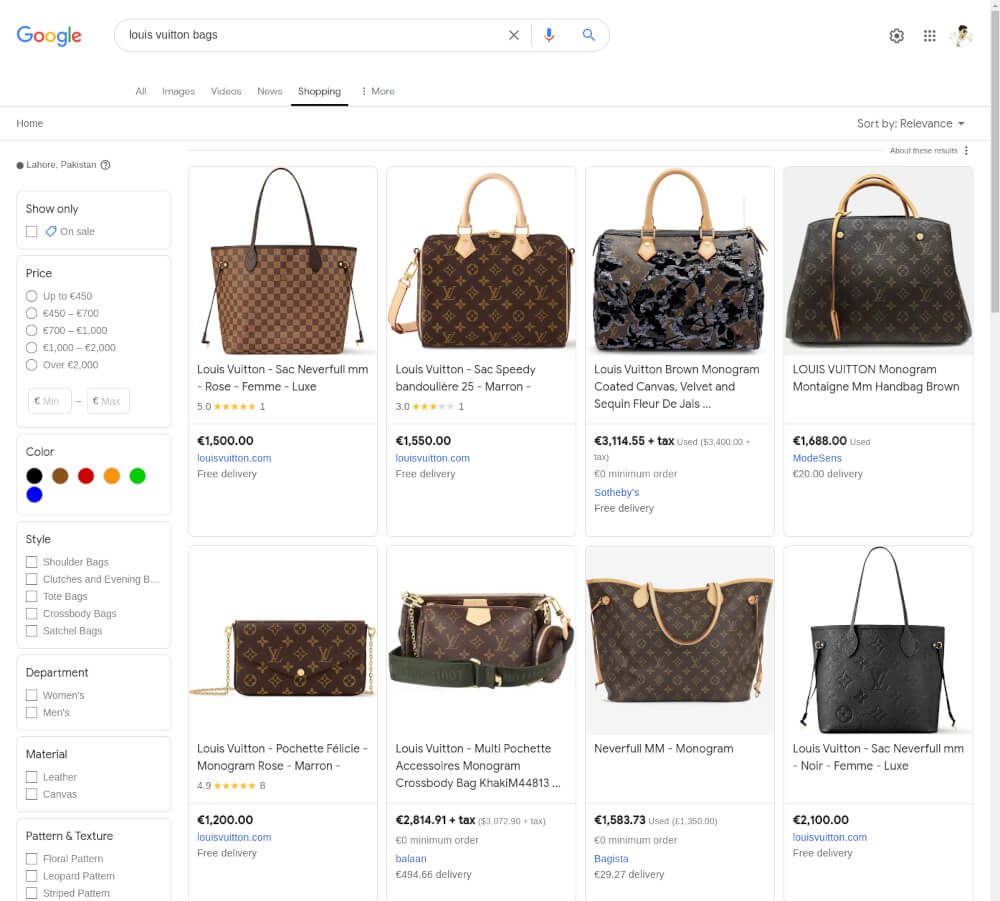

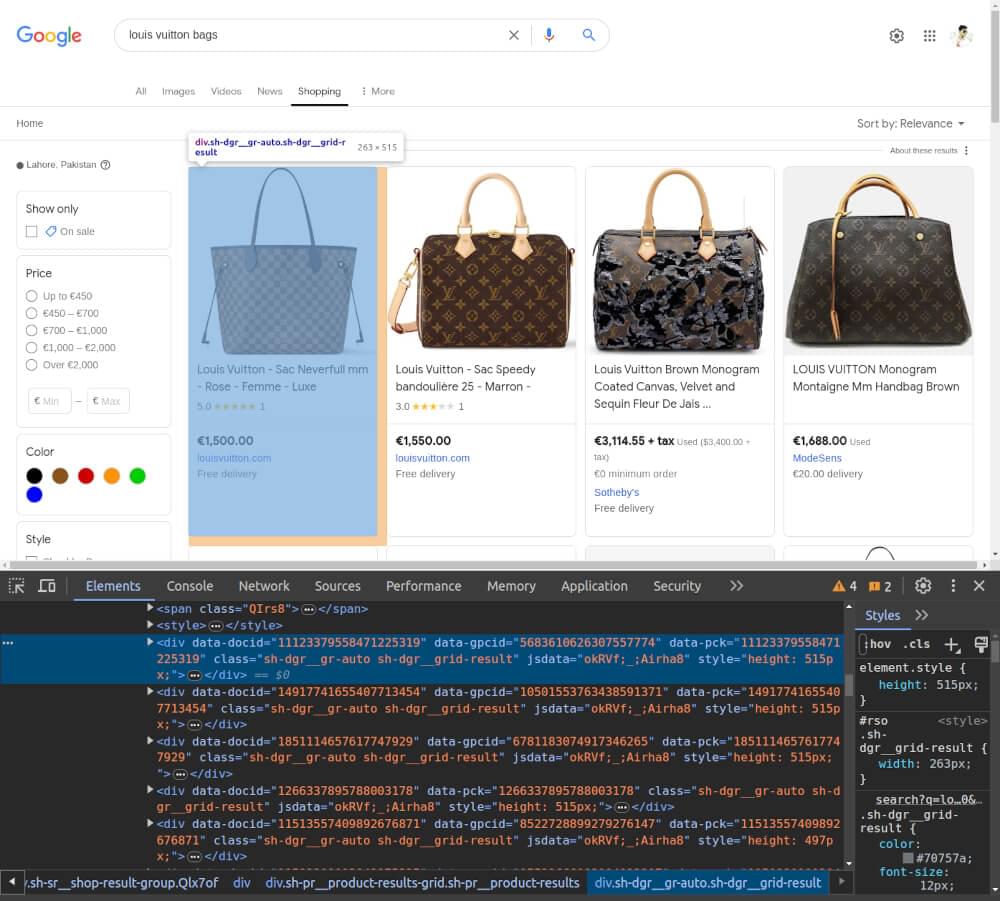

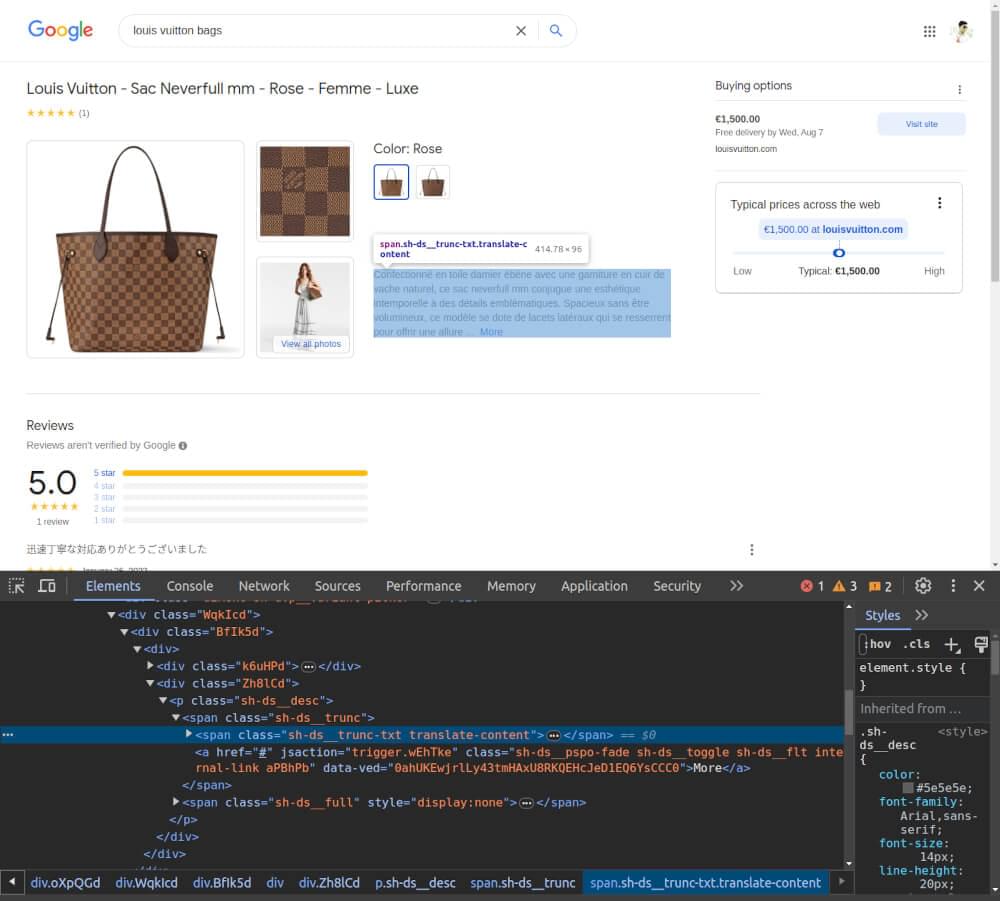

Inspecting the HTML Structure

Before you write your scraper, use your browser’s Developer Tools to inspect the Google Shopping SERP.

- Right-click on a product listing and select “Inspect” to open the Developer Tools.

- Hover over elements in the “Elements” tab to see which part of the page they correspond to.

- Identify the CSS selectors for elements like product title, price, and retailer name.

Writing Google Shopping SERP Scraper

To start scraping, we’ll use the Crawlbase Crawling API to fetch the HTML content. Below is an example of how to set up the scraper for the search query “louis vuitton bags”:

from crawlbase import CrawlingAPI
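As a rough sketch of the parsing step, the snippet below runs BeautifulSoup over a small hand-written HTML sample. The class names (`.product-card`, `.product-title`, and so on) and the function names are hypothetical placeholders, not Google's real markup; swap in the selectors you identified in Developer Tools.

```python
from bs4 import BeautifulSoup

# Hypothetical selectors for illustration only; Google's real class names
# differ and change often, so take them from Developer Tools.
SELECTORS = {
    "title": ".product-title",
    "price": ".product-price",
    "retailer": ".product-retailer",
}

SAMPLE_HTML = """
<div class="product-card">
  <a href="/shopping/product/1"><h3 class="product-title">Example Bag</h3></a>
  <span class="product-price">$1,500.00</span>
  <span class="product-retailer">Example Store</span>
</div>
<div class="product-card">
  <h3 class="product-title">Bag Without Price</h3>
</div>
"""


def text_or_none(node, selector):
    """Return the stripped text of the first match, or None if absent."""
    match = node.select_one(selector)
    return match.get_text(strip=True) if match else None


def parse_serp(html, item_selector=".product-card"):
    """Extract one dict per product listing from SERP HTML."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select(item_selector):
        product = {name: text_or_none(card, css) for name, css in SELECTORS.items()}
        link = card.select_one("a[href]")
        product["url"] = link["href"] if link else None
        products.append(product)
    return products


for product in parse_serp(SAMPLE_HTML):
    print(product)
```

Returning `None` for a missing element, instead of raising, keeps one incomplete listing from aborting the whole page.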

Handling Pagination

To scrape multiple pages, you need to modify the start parameter in the URL. This parameter controls the starting index for the results. For instance, to scrape the second page, set start=20, the third page start=40, and so on.

def scrape_multiple_pages(base_url, pages=3):
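One way to sketch the pagination logic is to compute the `start` value from a zero-based page index and stop once a page returns no products. The `page_url` helper and the `scrape_page` callback are hypothetical additions for this example. The callback stands in for whatever function fetches and parses a single page, which is why the signature differs from the one above.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def page_url(base_url, page, page_size=20):
    """Return base_url with its start parameter set for a zero-based page."""
    parts = urlparse(base_url)
    query = dict(parse_qsl(parts.query))
    # Page 0 -> start=0, page 1 -> start=20, page 2 -> start=40, ...
    query["start"] = str(page * page_size)
    return urlunparse(parts._replace(query=urlencode(query)))


def scrape_multiple_pages(base_url, scrape_page, pages=3):
    """Collect products from several pages; scrape_page(url) returns a list."""
    all_products = []
    for page in range(pages):
        products = scrape_page(page_url(base_url, page))
        if not products:
            # An empty page usually means there are no further results.
            break
        all_products.extend(products)
    return all_products
```

Rewriting the query string with `urllib.parse` avoids duplicate `start` parameters when the base URL already contains one.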

Saving Data to JSON File

After extracting the data, you can save it to a JSON file for further analysis or processing:

def save_to_json(data, filename='products.json'):
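A minimal sketch of such a function, using only the standard `json` module, might look like the following. The body is an assumption about what the helper does; only the name and default filename come from this guide.

```python
import json


def save_to_json(data, filename="products.json"):
    """Write scraped data to a UTF-8 encoded JSON file."""
    with open(filename, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps currency symbols and accented
        # characters readable; indent=2 pretty-prints the file.
        json.dump(data, f, ensure_ascii=False, indent=2)
```

For example, `save_to_json([{"title": "Example Bag", "price": "€1,500"}])` writes a file that other tools can load back with `json.load`.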

Complete Code

Here’s the complete code to scrape Google Shopping SERP, handle pagination, and save the data to a JSON file:

from crawlbase import CrawlingAPI

Example Output:

[

In the next sections, we’ll explore how to scrape individual Google Shopping product pages for more detailed information.

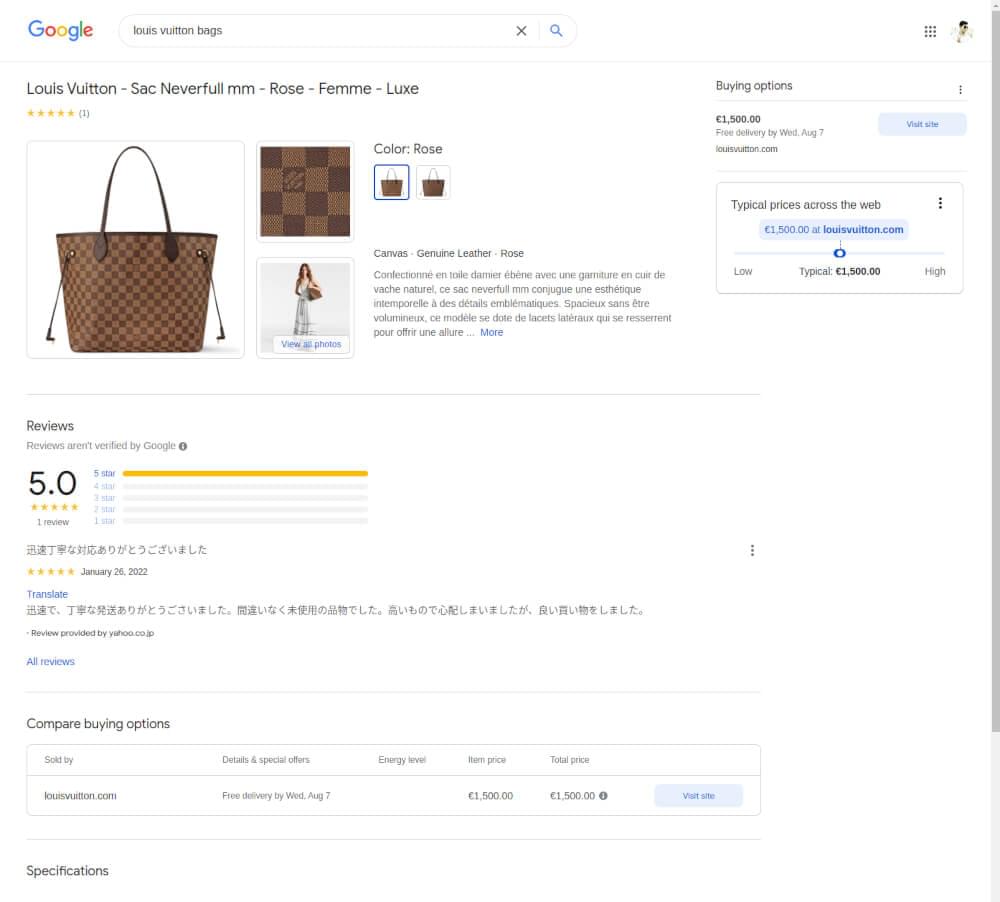

Google Shopping Product Page Structure

Once you’ve found products on the Google Shopping SERP, you can drill down into individual product pages to get more info. Understanding the structure of these pages is key to getting the most value.

Key Elements of a Google Shopping Product Page

- Product Title and Description

The product title and description give you the main features and benefits of the product.

- Price and Availability

Detailed pricing, including any discounts, plus an availability status that shows whether the product is in stock or sold out.

- Images and Videos

Images and videos show the product from different angles so you can see what it looks like.

- Customer Reviews and Ratings

Reviews and ratings give you an idea of customer satisfaction and product performance so you can gauge quality.

- Specifications and Features

Specifications like size, color, and material help you make an informed decision.

- Retailer Information

Information about the retailer, including store name and contact info, shows who sells the product. It may also include shipping and return policies.

In the next section, we’ll write a scraper for Google Shopping product pages and save the scraped data to a JSON file.

Scraping Google Shopping Product Page

In this section, we will walk you through scraping individual Google Shopping product pages. This includes inspecting the HTML, writing a scraper and saving the extracted data to a JSON file.

Inspecting the HTML Structure

Before writing your scraper, use the browser’s Developer Tools to inspect the HTML structure of a Google Shopping product page.

- Right-click on a product listing and select “Inspect” to open the Developer Tools.

- Hover over elements in the “Elements” tab to see which part of the page they correspond to.

- Identify the tags and classes containing the data you want to extract, such as product titles, prices, and reviews.

Writing Google Shopping Product Page Scraper

To scrape a Google Shopping product page, we’ll use the Crawlbase Crawling API to retrieve the HTML content. Here’s how you can set up the scraper:

from crawlbase import CrawlingAPI

Saving Data to JSON File

Once you have extracted the product data, you can save it to a JSON file for analysis or further processing:

def save_to_json(data, filename='product_details.json'):

Complete Code

Below is the complete code to scrape a Google Shopping product page and save the data to a JSON file:

from crawlbase import CrawlingAPI

Example Output:

{

In the next section, we’ll wrap up our discussion with final thoughts on scraping Google Shopping.

Scrape Google Shopping with Crawlbase

Scraping data from Google Shopping helps you understand product trends, prices, and what customers think. Using the Crawlbase Crawling API can help you avoid problems like IP blocking and content that changes often, making data collection easier. By using Crawlbase to get the data, BeautifulSoup to parse the HTML, and JSON to store the data, you can effectively gather detailed and valuable information.

As you implement these techniques, remember to adhere to ethical guidelines and legal standards to ensure your data collection practices are responsible and respectful.

If you’re interested in exploring scraping from other e-commerce platforms, feel free to explore the following comprehensive guides.

📜 How to Scrape Amazon

📜 How to Scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Flipkart

📜 How to Scrape Etsy

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Thank you for following this guide. Happy scraping!

Frequently Asked Questions

Q. Is scraping Google Shopping data legal?

Scraping Google Shopping data can be legal, but it’s essential to review and follow the website’s terms of service and to scrape with care. If unsure, seek professional legal advice. Using official APIs when available is also an excellent way to get data without legal issues. Always scrape responsibly and within the guidelines.

Q. What data can I extract from Google Shopping product pages?

When scraping Google Shopping product pages, you can extract the product title to identify the product, prices to show the current price and any discount, and the description to show product features. You can also get images for visual representation, ratings and reviews for customer feedback, and specifications like size and color for technical details. This data is useful for market analysis, price comparison, and understanding customer opinions.

Q. How can I handle websites that block or limit scraping attempts?

Websites block scraping by using IP blocking, rate limiting, and CAPTCHAs. To handle these issues, use IP rotation services like the Crawlbase Crawling API to avoid IP blocks. Rotate user agents to mimic different browsers and reduce detection risk. Implement request throttling to space out your requests and avoid rate limits. For CAPTCHAs, some APIs, including Crawlbase, can help you get past these hurdles and keep data extraction running.
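Request throttling can be sketched as a retry loop with exponential backoff. The function name `fetch_with_retries` and the convention that `fetch(url)` returns `None` on a blocked or failed request are assumptions made for this example.

```python
import random
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it returns a result, backing off between tries."""
    for attempt in range(max_attempts):
        result = fetch(url)
        if result is not None:
            return result
        if attempt < max_attempts - 1:
            # Exponential backoff with a little random jitter so that
            # retries are spaced out: roughly 1s, 2s, 4s, ...
            delay = base_delay * (2 ** attempt) + random.uniform(0, 0.5)
            sleep(delay)
    return None
```

The `sleep` parameter exists only so the delay can be replaced in tests; in normal use the default `time.sleep` applies.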

Q. What should I do if the product page structure changes?

If the Google Shopping product page structure changes, you will need to update your scraping code to adapt to the new layout. Here’s how to handle it:

- Regular Monitoring: Monitor the product page regularly to detect any updates or changes in the HTML structure.

- Update Selectors: Update your scraping code to reflect new tags, classes or IDs used on the page.

- Test Scrapers: Test your updated code to make sure it extracts the required data with the new structure.

- Handle Exceptions: Implement error handling in your code to handle scenarios where expected elements are missing or altered. Being proactive about such changes keeps your data extraction accurate.
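The monitoring and error-handling steps above can be sketched as a simple health check on scraped records: if a large share of them suddenly lack a required field, the selectors have probably gone stale. The function names, field names, and the 50% threshold are hypothetical choices for illustration.

```python
def missing_field_rates(records, required=("title", "price")):
    """Return, per required field, the share of records lacking a value."""
    if not records:
        # Getting no records at all is itself a sign the layout changed.
        return {field: 1.0 for field in required}
    return {
        field: sum(1 for record in records if not record.get(field)) / len(records)
        for field in required
    }


def layout_probably_changed(records, threshold=0.5, required=("title", "price")):
    """Flag a likely layout change when any field is missing too often."""
    rates = missing_field_rates(records, required)
    return any(rate > threshold for rate in rates.values())
```

Running a check like this after each scrape gives an early warning before bad data reaches downstream analysis.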

Q. Does Google Shopping have APIs?

Yes, Google Shopping offers APIs that developers can use to integrate Google Shopping features into their applications. Google Shopping APIs help businesses streamline their e-commerce operations by automating tasks related to product listing management, advertising campaigns, and performance monitoring.