Scraping search engine results is a common way for researchers, developers, and analysts to gather large amounts of data for insights or applications. Bing search results in particular give you access to the information in Bing’s extensive index.

This guide takes a practical approach to scraping Bing search results using Puppeteer, a JavaScript library for browser automation, and the Crawlbase Crawling API. We’ll look at how Puppeteer interacts with Bing’s search engine and how the Crawlbase Crawling API helps you access Bing results while handling common scraping issues.

Join us in exploring Bing SERP scraping as we master advanced web scraping techniques to unlock Microsoft Bing’s full potential as a valuable data source.

Table of Contents

I. Understanding Bing’s Search Page Structure

- Bing SERP Structure

- Data to Scrape

II. Prerequisites

III. Setting Up Puppeteer

- Prepare the Coding Environment

- Scraping Bing SERP using Puppeteer

IV. Setting Up Crawlbase’s Scraper

- Obtain API Credentials

- Prepare the Coding Environment

- Scraping Bing SERP using Scraper

V. Puppeteer vs Crawlbase Scraper

- Pros and Cons

- Conclusion

VI. Frequently Asked Questions (FAQ)

I. Understanding Bing’s Search Page Structure

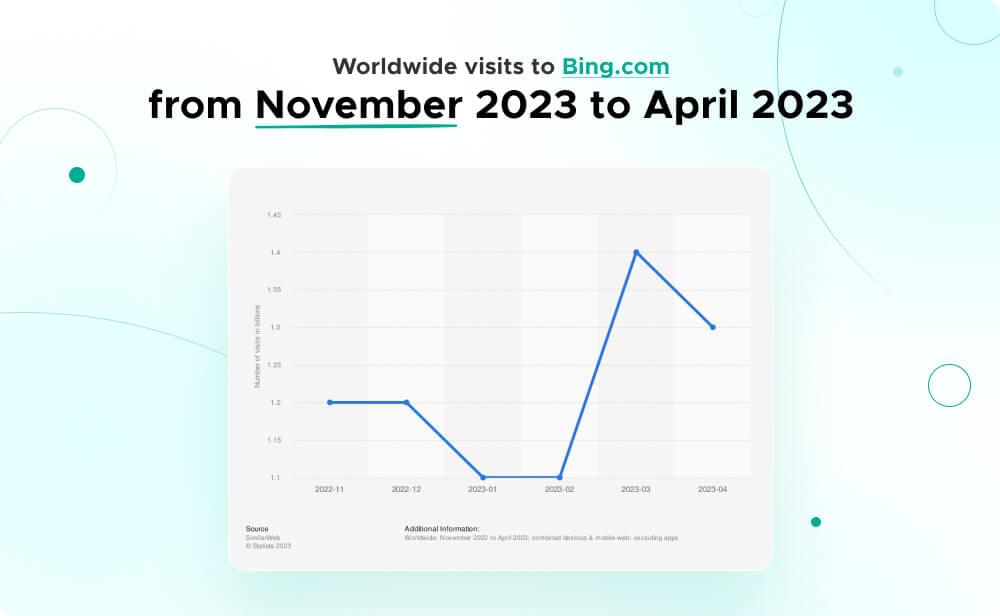

Search engines play a pivotal role in helping users navigate the vast amount of information on the internet. With its distinctive features and growing user base, Microsoft’s Bing is a significant player in web search. As of April 2024, Bing.com had close to 1.3 billion unique global visitors, a testament to its widespread use. Although that is a slight decline from the previous month’s 1.4 billion visitors, and Bing remains far behind Google, it is still a relevant source of search results.

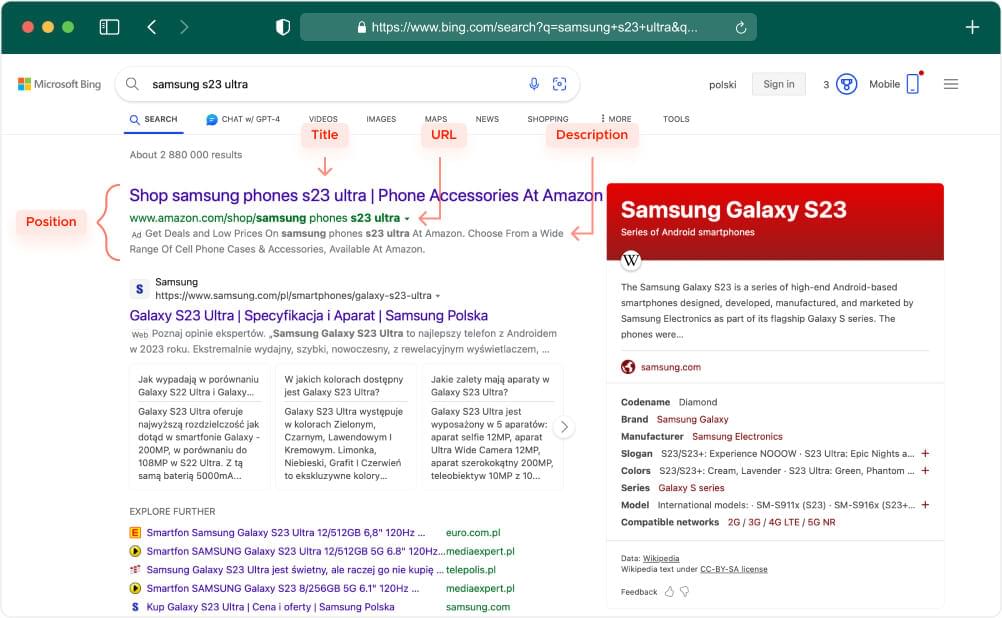

Before we start working on our scraper, it’s important to understand the layout of a Bing SERP (Search Engine Results Page), such as the target URL for this guide. Bing presents search results as a set of distinct elements, and you can extract valuable information from them using web scraping techniques. Here’s an overview of the structure and the data you can scrape:

Bing SERP Structure

1. Search Results Container

- Bing displays search results in a container, usually in a list format, with each result having a distinct block.

2. Individual Search Result Block

- Each search result block contains information about a specific webpage, including the title, description, and link.

3. Title

- The search result’s title is the clickable headline representing the webpage. It helps users quickly judge the relevance of the result.

4. Description

- The description provides a brief summary or snippet of the content found on the webpage. It offers additional context to users about what to expect from the linked page.

5. Link

- The link is the URL of the webpage associated with the search result. Clicking on the link directs users to the respective webpage.

6. Result Videos

- Bing may include video results directly in the search results. These can be videos from various sources like YouTube, Vimeo, or other video platforms.

Data to Scrape:

1. Titles

- Extract each search result’s title to understand the main topic or theme of the web page.

2. Descriptions

- Scrape the descriptions to gather concise information about the content of each webpage. This can be useful for creating summaries or snippets.

3. Links

- Capture the URLs of the web pages associated with each search result. These links are essential for navigating to the source pages.
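As a rough illustration of the three fields listed above, the sketch below tidies a list of scraped records into a uniform shape. The function name `normalizeResults` and the sample records are hypothetical and not part of any library. The sketch only assumes that each raw record may carry `title`, `description`, and `link` strings.

```javascript
// Hypothetical helper: tidy raw scraped records into { title, description, link }.
function normalizeResults(rawResults) {
  const seen = new Set();
  const cleaned = [];
  for (const raw of rawResults) {
    const title = (raw.title || '').trim();
    const description = (raw.description || '').trim();
    const link = (raw.link || '').trim();
    // A result without a title or a link is not useful, so skip it.
    if (!title || !link) continue;
    // Skip links that have already been recorded.
    if (seen.has(link)) continue;
    seen.add(link);
    cleaned.push({ title, description, link });
  }
  return cleaned;
}

// Sample input (made-up values, for illustration only).
const sample = [
  { title: '  Example Domain ', description: 'An example page.', link: 'https://example.com/' },
  { title: 'Duplicate', description: '', link: 'https://example.com/' },
  { title: '', description: 'No title', link: 'https://example.org/' },
];
console.log(normalizeResults(sample));
```

Keeping the cleanup in a separate function means the same code can be reused whether the raw records come from Puppeteer or from an API response.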

We’ll show you how easy it is to use the Crawling API to scrape the data mentioned above. Also, we’ll use the method page.evaluate in Puppeteer to execute a function within the context of the page being controlled by Puppeteer. This function runs in the browser environment and can access the DOM (Document Object Model) and JavaScript variables within the page. Here is an example:

1 | const results = await page.evaluate(() => { |
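To make the idea more concrete, here is a minimal sketch of the kind of function that could be passed to page.evaluate. The selectors `li.b_algo`, `h2 a`, and `.b_caption p` are assumptions about Bing’s markup and may need adjusting, and `extractResults` is a hypothetical name chosen for this example.

```javascript
// Hypothetical extractor. In the browser it falls back to the global `document`;
// a stand-in object can be passed instead to exercise it outside a browser.
function extractResults(doc = document) {
  // Assumed selector: each organic result is an <li class="b_algo">.
  const blocks = Array.from(doc.querySelectorAll('li.b_algo'));
  return blocks.map((block) => {
    const anchor = block.querySelector('h2 a'); // assumed title link
    const snippet = block.querySelector('.b_caption p'); // assumed description
    return {
      title: anchor ? anchor.textContent.trim() : '',
      link: anchor ? anchor.href : '',
      description: snippet ? snippet.textContent.trim() : '',
    };
  });
}

// With Puppeteer, the function is serialized and executed inside the page:
//   const results = await page.evaluate(extractResults);

// Tiny stand-in for a DOM, used only to try the function in Node.js.
const fakeBlock = {
  querySelector(selector) {
    if (selector === 'h2 a') {
      return { textContent: ' Example Domain ', href: 'https://example.com/' };
    }
    if (selector === '.b_caption p') {
      return { textContent: 'An example page.' };
    }
    return null;
  },
};
const fakeDoc = { querySelectorAll: () => [fakeBlock] };
console.log(extractResults(fakeDoc));
```

Because the function only returns plain objects with string fields, its result can be passed back from the browser context to the Node.js script.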

Let’s proceed to the main part of our guide, where we’ll walk you through the process of using Puppeteer and Crawling API step by step to scrape Bing SERP data.

II. Prerequisites

Before getting started, ensure that you have the following prerequisites:

- Node.js: Make sure Node.js is installed on your machine. You can download it from the official Node.js website.

- npm (Node Package Manager): npm is typically included with Node.js installation. Check if it’s available by running the following command in your terminal:

`npm -v`

If the version is displayed, npm is installed. If not, ensure Node.js is correctly installed, as npm is bundled with it.

Having Node.js and npm installed ensures a smooth experience as you proceed with setting up your environment for web scraping with Puppeteer or Crawling API.
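If you prefer to check the Node.js version from a script, the following sketch compares the running version against a minimum. The minimum of 18 is an assumption made for this example, so confirm the actual requirement in the documentation of the packages you install. `majorVersion` is a hypothetical helper name.

```javascript
// Assumed minimum major version; confirm it against the package documentation.
const MIN_NODE_MAJOR = 18;

// Extract the major version number from a string such as "18.17.1".
function majorVersion(version) {
  return Number.parseInt(version.split('.')[0], 10);
}

// process.versions.node holds the running version without a leading "v".
const current = majorVersion(process.versions.node);
if (current < MIN_NODE_MAJOR) {
  console.warn(`Node.js ${process.versions.node} detected; ${MIN_NODE_MAJOR}+ is assumed here.`);
} else {
  console.log(`Node.js ${process.versions.node} looks fine.`);
}
```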

III. Setting Up Puppeteer

Puppeteer is a powerful Node.js library developed by the Chrome team at Google. It provides a high-level API to control headless or full browsers over the DevTools Protocol, making it an excellent choice for tasks such as web scraping and automated testing. Before diving into the project with Puppeteer, let’s set up a Node.js project and install the Puppeteer package.

Prepare the Coding Environment

- Create a Node.js Project

Open your terminal and run the following command to create a basic Node.js project with default settings:

`npm init -y`

This command generates a package.json file, which includes metadata about your project and its dependencies.

- Install Puppeteer:

Once the project is set up, install the Puppeteer package using the following command:

`npm i puppeteer`

This command downloads and installs the Puppeteer library, enabling you to control browsers programmatically.

- Create an Index File:

To write your web scraper’s code, create an index.js file. Use the following command to generate the file:

`touch index.js`

This command creates an empty index.js file where you’ll write the Puppeteer script for scraping Bing SERP data. You can use any filename you like.

Scraping Bing SERP using Puppeteer

With your Node.js project initialized, Puppeteer installed, and an index.js file ready, you’re all set to harness the capabilities of Puppeteer for web scraping. Copy the code below and save it to your index.js file.

1 | // Import required modules |

Let’s execute the above code by using a simple command:

`node index.js`

If successful, you’ll get the result in JSON format as shown below:

1 | { |

IV. Setting Up Crawlbase’s Scraper

Now that we’ve covered the steps for Puppeteer, let’s explore the Scraper. Here’s what you need to do if it’s your first time using the Scraper:

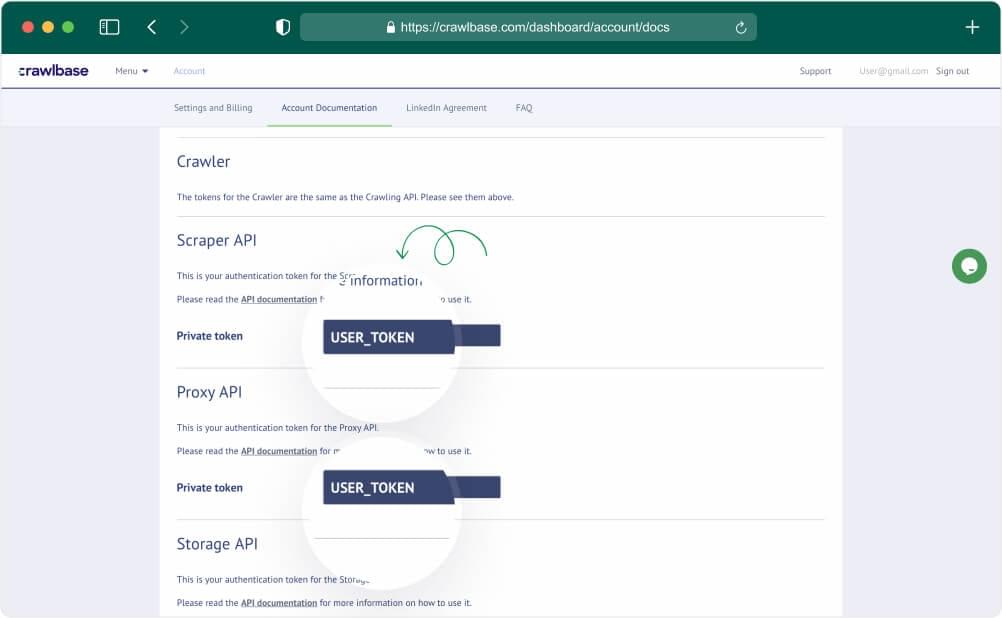

Obtain API Credentials:

- Sign Up for Scraper:

- Begin by signing up on the Crawlbase website to obtain access to the Scraper.

- Access API Documentation:

- Consult the Crawlbase API documentation to gain a comprehensive understanding of endpoints and parameters.

- Retrieve API Credentials:

- Find your API credentials (e.g., API key) either in the documentation or on your account dashboard. These credentials are crucial for authenticating your requests to the Scraper.
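One way to keep the token out of your source files is to read it from an environment variable. The sketch below assumes a variable named `CRAWLBASE_TOKEN`, which is a name chosen for this example rather than something the service requires. `getToken` is likewise a hypothetical helper.

```javascript
// Read the API token from an environment variable instead of hard-coding it.
// CRAWLBASE_TOKEN is a hypothetical variable name chosen for this sketch.
function getToken(env = process.env) {
  const token = (env.CRAWLBASE_TOKEN || '').trim();
  if (!token) {
    throw new Error('Set the CRAWLBASE_TOKEN environment variable first.');
  }
  return token;
}

// Example with an explicit object standing in for process.env.
console.log(getToken({ CRAWLBASE_TOKEN: 'example-token' }));
```

Failing early with a clear message is usually easier to debug than an authentication error returned by a later request.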

Prepare the Coding Environment

To set up the environment for your Crawlbase Scraper project, run the following commands:

- Create Project Folder

`mkdir bing-serp`

This command creates an empty folder named “bing-serp” to organize your scraping project.

- Navigate to Project Folder

`cd bing-serp`

Use this command to enter the newly created directory and prepare for writing your scraping code.

- Create JS File

`touch index.js`

This command generates an index.js file where you’ll write the JavaScript code for your scraper.

- Install Crawlbase Package

`npm install crawlbase`

The Crawlbase Node package is used for interacting with the Crawlbase APIs including the Scraper, allowing you to fetch HTML without getting blocked and scrape content from websites efficiently.

Scraping Bing SERP using Scraper

With the coding environment set up, we can start integrating the Scraper into our script.

Copy the code below and make sure you replace "Crawlbase_TOKEN" with your actual Crawlbase API token for proper authentication.

1 | // import Crawlbase Scraper API package |

Execute the above code by using a simple command:

`node index.js`

The result should be in JSON format as shown below:

1 | { |

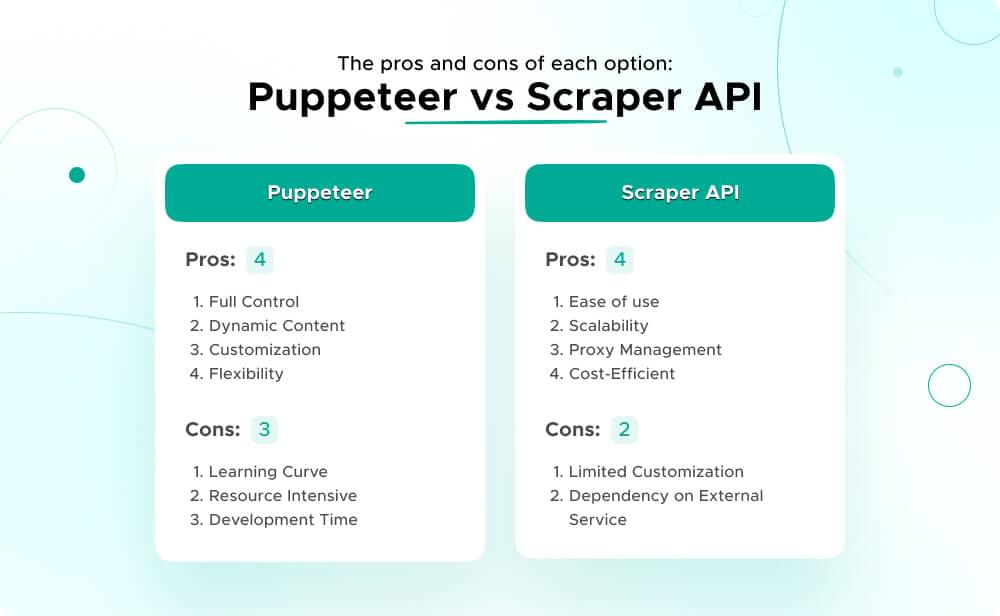

V. Puppeteer vs Crawlbase Scraper

When deciding between Puppeteer and Crawlbase’s Scraper for scraping Bing Search Engine Results Pages (SERP) in JavaScript, there are several factors to consider. Let’s break down the pros and cons of each option:

Puppeteer:

Pros:

- Full Control: Puppeteer is a headless browser automation library that provides full control over the browser, allowing you to interact with web pages just like a user would.

- Dynamic Content: Puppeteer is excellent for scraping pages with dynamic content and heavy JavaScript usage, as it renders pages and executes JavaScript.

- Customization: You can customize your scraping logic extensively, adapting it to specific website structures and behaviors.

- Flexibility: Puppeteer is not limited to scraping. It can also be used for automated testing, taking screenshots, generating PDFs, and more.

Cons:

- Learning Curve: Puppeteer might have a steeper learning curve, especially for beginners, as it involves understanding how browsers work and interacting with them programmatically.

- Resource Intensive: Running a headless browser can be resource-intensive, consuming more memory and CPU compared to simpler scraping solutions.

- Development Time: Creating and maintaining Puppeteer scripts may require more development time, potentially increasing overall project costs.

Crawlbase’s Scraper:

Pros:

- Ease of Use: Crawlbase API is designed to be user-friendly, making it easy for developers to get started quickly without the need for extensive coding or browser automation knowledge.

- Scalability: Crawlbase API is a cloud-based solution, offering scalability and eliminating the need for you to manage infrastructure concerns.

- Proxy Management: Crawlbase API handles proxies and IP rotation automatically, which can be crucial for avoiding IP bans and improving reliability.

- Cost-Efficient: Depending on your scraping needs, using a service like the Crawlbase API might be more cost-efficient, especially if you don’t require the extensive capabilities of a headless browser.

Cons:

- Limited Customization: Crawlbase API might have limitations in terms of customization compared to Puppeteer. It may not be as flexible if you need highly specialized scraping logic.

- Dependency on External Service: Your scraping process relies on an external service, which means you are subject to their service availability and policies.

Conclusion:

Choose Puppeteer if:

- You need full control and customization over the scraping process.

- You can accept a longer development time, which may increase costs.

- You are comfortable managing a headless browser and are willing to invest time in learning.

Choose Crawlbase API if:

- You want a quick and easy-to-use solution without the need for in-depth browser automation knowledge.

- Scalability and proxy management are crucial for your scraping needs.

- You prefer a managed service and a simple solution for quick project deployment.

- You aim for a more cost-efficient solution considering potential development time and resources.

Ultimately, the choice between Puppeteer and Crawlbase API depends on your specific requirements, technical expertise, and preferences in terms of control and ease of use.

If you like this guide, check out other scraping guides from Crawlbase. See our recommended “how-to” guides below:

How to Scrape Flipkart

How to Scrape Yelp

How to Scrape Glassdoor

VI. Frequently Asked Questions (FAQ)

Q. Can I use the Crawlbase API for other websites?

Yes, the Crawlbase API is compatible with other websites, especially popular ones like Amazon, Google, Facebook, LinkedIn, and more. Check the Crawlbase API documentation for the full list.

Q. Is there a free trial for the Crawlbase API?

Yes, the first 1,000 requests are free of charge for regular requests. If you need JavaScript rendering, you can subscribe to any of the paid packages.

Q. Can the Crawlbase API hide my IP address to avoid blocks or IP bans?

Yes. The Crawlbase API uses millions of proxies and hides your IP address on each request, which helps it bypass common scraping problems like bot detection, CAPTCHAs, and IP blocks.

If you have other questions or concerns about this guide or the API, our product experts will be glad to assist. Please do not hesitate to contact our support team. Happy Scraping!