Looking to scrape Zalando? You’re in the right place. Zalando is one of the top online fashion retailers, with a huge range of products from clothing to accessories. Whether you’re doing market research or building a fashion app, knowing how to pull good data straight from the site can be handy.

In this blog, we’ll show you how to build a reliable Zalando scraper with Puppeteer, a popular Node.js library for controlling a headless browser. You’ll learn how to pull out product details such as prices, sizes, and stock levels. We’ll also share tips on dealing with CAPTCHAs and IP blocking, and on scaling your scraper with Crawlbase Smart Proxy.

Let’s get started!

Table of Contents

- Why Scrape Zalando for Product Data?
- Key Data Points to Extract from Zalando
- Setting Up Your Node.js Environment
  - Installing Node.js
  - Installing Required Libraries
  - Choosing an IDE
- Scraping Zalando Product Listings
  - Inspecting the HTML for Selectors
  - Writing the Zalando Product Listings Scraper
  - Handling Pagination
  - Storing Data in a JSON File
- Scraping Zalando Product Details
  - Inspecting the HTML for Selectors
  - Writing the Zalando Product Details Scraper
  - Storing Data in a JSON File
- Optimizing with Crawlbase Smart Proxy
  - What is Crawlbase Smart Proxy?
  - How to Use Crawlbase Smart Proxy with Puppeteer
  - Benefits of Using Crawlbase Smart Proxy
- Optimize your Zalando Scraper with Crawlbase
- Frequently Asked Questions

Why Scrape Zalando for Product Data?

Scraping Zalando is a great way to get product data for a variety of purposes. Whether you’re monitoring prices, tracking product availability, or analyzing fashion trends, having access to this data gives you an edge. Zalando is one of the largest online fashion platforms in Europe, with a wide range of products from shoes and clothing to accessories.

By scraping Zalando, you can extract product names, prices, reviews, and availability. This data can be used to compare prices, create data-driven marketing strategies, or even build an automated price tracker. If you run an eCommerce business or just want to keep an eye on the latest fashion trends, scraping Zalando’s product data will help you stay ahead.

Using a scraper to collect data from Zalando saves you the time and effort of searching for and copying product information by hand. With the right setup, you can gather details for thousands of products automatically, which makes your data collection far more efficient.

Key Data Points to Extract from Zalando

When scraping Zalando, you can extract several important pieces of product information. These details are useful for tracking trends, understanding pricing, or analyzing market behavior. Below are the main data points to focus on:

- Product Name: The name of the product helps you identify and categorize what is being sold.

- Product Price: Knowing the price, including discounts, is essential for monitoring price trends and comparing competitors.

- Product Description: This gives specific information about the product, such as material, style, and other key features.

- Product Reviews: Reviews provide information about product quality and popularity and are useful for sentiment analysis.

- Product Availability: Checking if a product is in stock helps you understand demand and how quickly items are selling.

- Product Images: Images give a clear view of the product, which is important for understanding fashion trends and styles.

- Brand Name: Knowing the brand allows for better analysis of brand performance and comparison across different brands.
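Prices are easier to compare once they are stored as numbers rather than display strings. The sketch below shows one way to normalize a scraped item into the fields listed above. The raw field names (`name`, `brand`, `price`, and so on) are assumptions, and so are the price formats it accepts (`€49.95`, `49,95 €`). Adjust both to match what you actually see on the pages you scrape.

```javascript
// Hypothetical helpers: the raw field names and price formats are assumptions,
// not Zalando's actual markup.

/**
 * Converts a displayed price such as "€49.95" or "49,95 €" into a number.
 * Whichever separator appears last is treated as the decimal separator.
 * Returns null when no digits are found (e.g. "Sold out").
 */
function parsePrice(text) {
  if (typeof text !== 'string') return null;
  // Keep only digits and separators, e.g. "1.299,95 €" -> "1.299,95"
  const cleaned = text.replace(/[^\d.,]/g, '');
  if (!/\d/.test(cleaned)) return null;
  const decimalSep = cleaned.lastIndexOf(',') > cleaned.lastIndexOf('.') ? ',' : '.';
  const thousandsSep = decimalSep === ',' ? '.' : ',';
  // Drop thousands separators, then switch the decimal separator to "."
  const normalized = cleaned.split(thousandsSep).join('').replace(decimalSep, '.');
  const value = Number.parseFloat(normalized);
  return Number.isNaN(value) ? null : value;
}

/** Normalizes one raw scraped item into the data points listed above. */
function toProductRecord(raw) {
  // Trimmed non-empty string, otherwise null
  const clean = (value) => (typeof value === 'string' && value.trim()) || null;
  return {
    name: clean(raw.name),
    brand: clean(raw.brand),
    price: parsePrice(raw.price),
    description: clean(raw.description),
    reviews: Array.isArray(raw.reviews) ? raw.reviews : [],
    inStock: typeof raw.inStock === 'boolean' ? raw.inStock : null,
    images: Array.isArray(raw.images) ? raw.images : [],
  };
}

module.exports = { parsePrice, toProductRecord };
```

Missing fields become `null` or an empty array instead of `undefined`. This keeps every record the same shape when you later save the data to JSON.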

Setting Up Your Node.js Environment

In order to efficiently scrape Zalando, you will need to configure your Node.js environment. This process involves installing Node.js, the necessary libraries, and choosing a suitable Integrated Development Environment (IDE). Here’s how to do it step by step:

Installing Node.js

- Download Node.js: Go to the official Node.js website to get its latest version for your operating system. Node.js comes with npm (Node Package Manager), which you’ll use to install other libraries.

- Install Node.js: Follow the installation instructions for your operating system. You can verify the installation by opening your terminal or command prompt and typing:

```bash
node -v
```

This command should display the installed version of Node.js.

Installing Required Libraries

- Create a New Project Folder: Create a folder for your scraping project. Open the terminal inside this folder.

- Initialize npm: Inside your project folder, run:

```bash
npm init -y
```

This command creates a package.json file that keeps track of your project’s dependencies.

- Install Required Libraries: You’ll need a few libraries to make scraping easier. Install Puppeteer and any other libraries you may require:

```bash
npm install puppeteer axios
```

- Create the Main File: In your project folder, create a file named scraper.js. This file will contain your scraping code.

Choosing an IDE

Selecting an IDE can make coding easier. Some of the popular ones include:

- Visual Studio Code: Popular editor with lots of extensions for working with JavaScript.

- WebStorm: A powerful IDE designed specifically for JavaScript and web development; it requires a paid license for commercial use.

- Atom: A hackable, customizable, and user-friendly text editor. Note that GitHub archived Atom in December 2022, so it no longer receives updates.

Now that your environment is set up and scraper.js is created, let’s start scraping Zalando product listings.

Scraping Zalando Product Listings

After setting up the environment, we can start creating the scraper for Zalando product listings. We will scrape the handbags section from this URL:

https://en.zalando.de/catalogue/?q=handbags

We’ll extract the product page URL, title, store name, price, and image URL from each listing. We will also handle pagination to go through multiple pages.

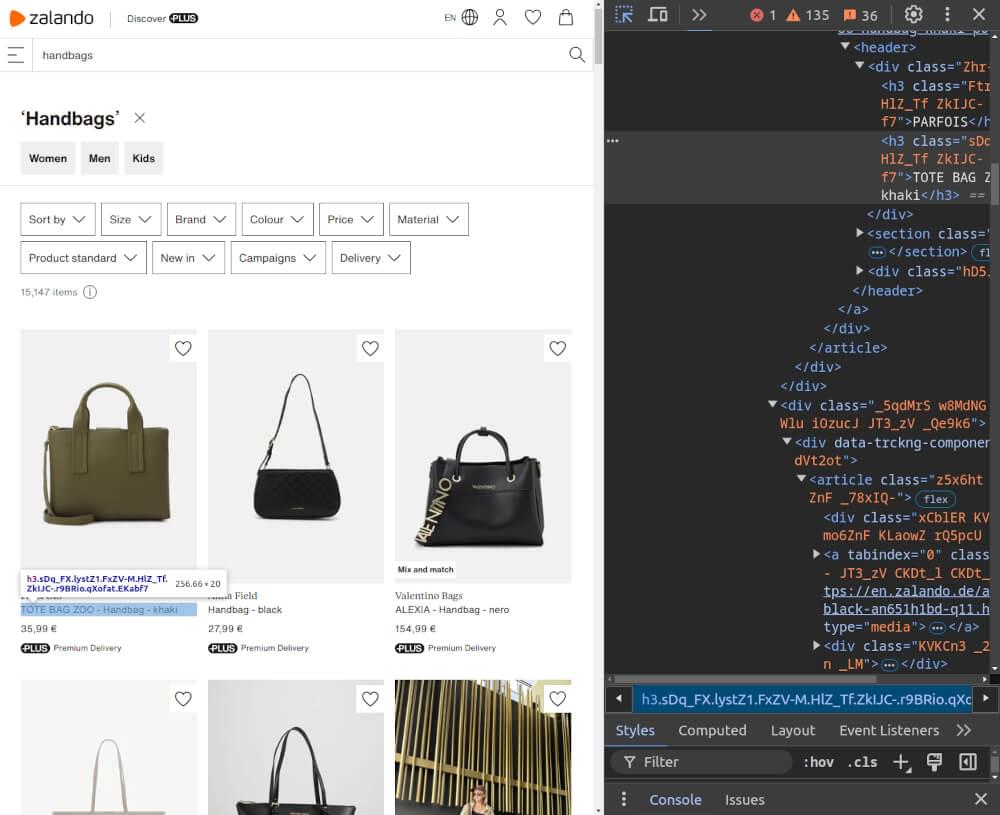

Inspecting the HTML for Selectors

First, inspect the HTML of the product listings page to find the correct selectors. Open your browser’s developer tools and navigate to the handbag listings.

You’ll typically look for elements like:

- Product Page URL: The link to the individual product page.

- Product Title: Usually in an `<h3>` tag within a `<div>` element.

- Brand Name: This may be found in an `<h3>` tag within a `<div>` element.

- Price: Found in a `<span>` tag with a price class.

- Image URL: Contained in the `<img>` tag within each product card.

Writing the Zalando Product Listings Scraper

Now that you have the selectors, you can write a scraper to collect product listings. Here’s an example code snippet using Puppeteer:

```javascript
const puppeteer = require('puppeteer');
```

Code Explanation:

- `scrapeProductListings` Function: This function navigates to the Zalando product listings page with the navigation timeout disabled, and extracts the product title, price, URL, and image URL.

- Data Collection: The function returns an array of product objects containing the scraped information.

Example Output:

```
Product Listings: [
```

Handling Pagination

To gather more listings, you need to handle pagination. Zalando uses the &p= parameter in the URL to navigate between pages. Here’s how to modify your scraper to handle multiple pages:

```javascript
async function scrapeAllProductListings(page, totalPages) {
```

Code Explanation:

- `scrapeAllProductListings` Function: This function loops through the specified number of pages, constructs the URL for each page, and calls the `scrapeProductListings` function to gather data from each page.

- Pagination Handling: Products from all pages are combined into a single array.
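Here is one way to sketch the pagination loop. This hypothetical `scrapeAllProductListings` has a different signature from the one above: it takes the base URL and the per-page scraper (for example, `scrapeProductListings`) as arguments. It builds each page URL with the `p` parameter and pauses between pages. The 2-second default delay is an arbitrary assumption, not a documented Zalando limit.

```javascript
// Builds the URL for one results page via the `p` query parameter,
// e.g. ...?q=handbags&p=2. Page 1 is requested without `p`.
function buildPageUrl(baseUrl, pageNumber) {
  const url = new URL(baseUrl);
  if (pageNumber > 1) {
    url.searchParams.set('p', String(pageNumber));
  } else {
    url.searchParams.delete('p');
  }
  return url.toString();
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical signature: the per-page scraper (e.g. scrapeProductListings)
// is passed in, and delayMs is an assumed pause between page requests.
async function scrapeAllProductListings(page, baseUrl, totalPages, scrapePage, delayMs = 2000) {
  const allProducts = [];
  for (let pageNumber = 1; pageNumber <= totalPages; pageNumber++) {
    const products = await scrapePage(page, buildPageUrl(baseUrl, pageNumber));
    allProducts.push(...products);
    // An empty page most likely means we went past the last page of results
    if (products.length === 0) break;
    if (pageNumber < totalPages) await sleep(delayMs);
  }
  return allProducts;
}

module.exports = { buildPageUrl, scrapeAllProductListings };
```

For example, `scrapeAllProductListings(page, 'https://en.zalando.de/catalogue/?q=handbags', 3, scrapeProductListings)` requests pages 1 to 3 and returns one combined array. Passing the page scraper in as an argument keeps the loop independent of your selectors.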

Storing Data in a JSON File

Finally, it’s useful to store the scraped data in a JSON file for later analysis. Here’s how to do that:

```javascript
const puppeteer = require('puppeteer');
```

Code Explanation:

- `saveDataToJson` Function: This function saves the scraped product listings to a JSON file (zalando_product_listings.json) so you can easily access the data later.
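Below is a minimal `saveDataToJson` sketch that uses Node's built-in `fs` module. It pretty-prints the output with a 2-space indent. It assumes `data` is an array, such as the one returned by the pagination loop.

```javascript
const fs = require('fs');
const path = require('path');

// Writes `data` as pretty-printed JSON (2-space indent), creating the
// parent folder first if it does not exist yet.
function saveDataToJson(data, filePath = 'zalando_product_listings.json') {
  fs.mkdirSync(path.dirname(path.resolve(filePath)), { recursive: true });
  fs.writeFileSync(filePath, JSON.stringify(data, null, 2), 'utf8');
  console.log(`Saved ${data.length} items to ${filePath}`);
}

module.exports = { saveDataToJson };
```

Call it once with the combined array, for example `saveDataToJson(allProducts)`, after all pages have been scraped.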

Next, we’ll cover how to scrape product data from individual product pages.

Scraping Zalando Product Details

Now that you have scraped the listings, the next step is to gather data from individual product pages. This allows you to get more specific data like product descriptions, material details, and customer reviews, which are not available on the listing pages.

To scrape the product details, we’ll first inspect the structure of the product page and identify the relevant HTML elements that contain the data we need.

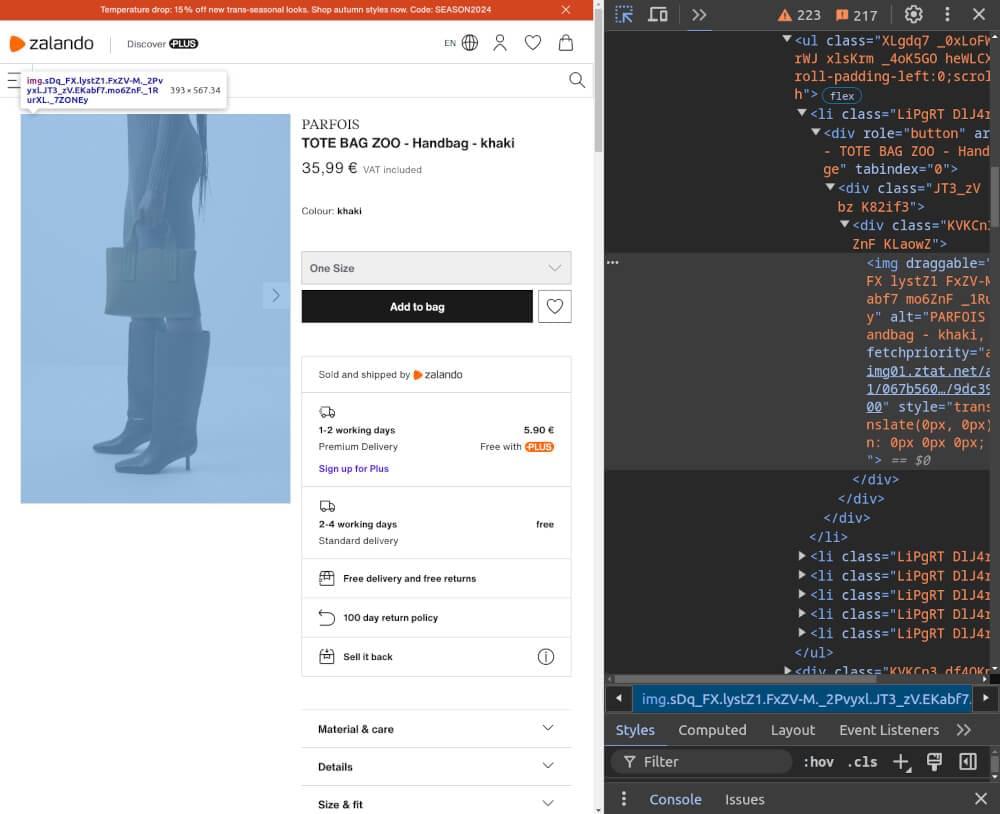

Inspecting the HTML for Selectors

Visit any individual product page from Zalando and use your browser’s developer tools to inspect the HTML structure.

You’ll typically need to find elements like:

- Product Title: Usually within a `<span>` tag with classes like `EKabf7 R_QwOV`.

- Brand Name: Usually within a `<span>` tag with classes like `z2N-Fg yOtBvf`.

- Product Details: Located in a `<div>` with `data-testid="pdp-accordion-details"`.

- Price: In a `<span>` tag with classes like `dgII7d Km7l2y`.

- Available Sizes: Often listed in a `<div>` with `data-testid="pdp-accordion-size_fit"`.

- Image URLs: Contained in the `<img>` tags within a `<ul>` with classes like `XLgdq7 _0xLoFW`.

Writing the Zalando Product Details Scraper

Once you have the correct selectors, you can write a scraper to collect product details like the title, description, price, available sizes, and image URLs.

Here’s example code to scrape Zalando product details using Puppeteer:

```javascript
const puppeteer = require('puppeteer');
```

Code Explanation:

- `scrapeProductDetails` Function: This function navigates to the product URL, waits for the content to load, and scrapes the product title, description, price, available sizes, and image URLs. To access the relevant content, the function first waits for the “Details” and “Sizes” buttons to become visible using `await page.waitForSelector()`, then clicks them with `await page.click()`. This expands the respective sections so their content can be extracted.

- Product URLs Array: This array contains the product page URLs you want to scrape.

Example Output:

```
Product details scraped successfully: [
```

Storing Data in a JSON File

After scraping the product details, it’s a good idea to save data in a JSON file. This makes it easier to access and analyze later. Here’s how to save the scraped product details to a JSON file.

```javascript
const fs = require('fs');
```

Code Explanation:

- `saveDataToJson` Function: This function writes the scraped product details to a JSON file (zalando_product_details.json), formatted for easy reading.

- Data Storage: After scraping the details, the data is passed to the function to be saved in a structured format.
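To connect the two stages, you can read the product URLs back from `zalando_product_listings.json` instead of hard-coding the product URLs array. This sketch assumes each saved listing has a `url` field; that key is hypothetical, so use whatever key your listings scraper writes. The function also drops duplicate URLs and can optionally cap how many it returns.

```javascript
const fs = require('fs');

/**
 * Reads the listings file written earlier and returns unique product URLs,
 * optionally capped at `limit`. Assumes each listing has a `url` field
 * (hypothetical key; rename it to match your listings scraper).
 */
function loadProductUrls(filePath = 'zalando_product_listings.json', limit = Infinity) {
  const listings = JSON.parse(fs.readFileSync(filePath, 'utf8'));
  const urls = listings
    .map((item) => item && item.url)
    .filter((url) => typeof url === 'string' && url.startsWith('http'));
  // A Set keeps the first occurrence of each URL, preserving order
  return [...new Set(urls)].slice(0, limit);
}

module.exports = { loadProductUrls };
```

For example, `loadProductUrls('zalando_product_listings.json', 20)` returns at most 20 unique URLs to pass to the details scraper.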

In the next section, we’ll look at how you can optimize your scraper using Crawlbase Smart Proxy to avoid getting blocked while scraping.

Optimizing with Crawlbase Smart Proxy

When scraping Zalando, you might get blocked or throttled. To reduce this risk, use a proxy service. Crawlbase Smart Proxy routes your requests through rotating IP addresses, which helps you scrape more reliably. Here’s how to integrate it into your Zalando scraper.
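A proxy reduces blocks but will not eliminate failed requests, so it can also help to retry them. The sketch below wraps any async task in a retry loop with exponential backoff. The names `withRetry` and `gotoOrThrow` are for illustration only, as is the choice to treat HTTP 403 and 429 as retryable; none of these are part of the original scraper.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

/**
 * Runs `task` up to `attempts` times. Between failures it waits baseDelayMs,
 * then twice that, then four times that, and so on (exponential backoff).
 * The last error is rethrown if every attempt fails.
 */
async function withRetry(task, { attempts = 3, baseDelayMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await task(attempt);
    } catch (err) {
      lastError = err;
      if (attempt < attempts) {
        const delay = baseDelayMs * 2 ** (attempt - 1);
        console.warn(`Attempt ${attempt} failed (${err.message}); retrying in ${delay} ms`);
        await sleep(delay);
      }
    }
  }
  throw lastError;
}

// Hypothetical task: treats HTTP 403 and 429 as failures so they get retried.
async function gotoOrThrow(page, url) {
  const response = await page.goto(url, { waitUntil: 'networkidle2' });
  if (response && [403, 429].includes(response.status())) {
    throw new Error(`Blocked or throttled: HTTP ${response.status()}`);
  }
  return response;
}

module.exports = { withRetry, gotoOrThrow };
```

For example, `await withRetry(() => gotoOrThrow(page, url), { attempts: 4 })` waits 1, 2, and 4 seconds between its four attempts.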

How to Use Crawlbase Smart Proxy with Puppeteer

Integrating Crawlbase Smart Proxy into your Puppeteer script is straightforward. You’ll need your Crawlbase token to get started.

Here’s how to set it up:

- Sign Up for Crawlbase: Go to the Crawlbase website and create an account. After signing up, you’ll get an API token.

- Update Your Puppeteer Script: Modify your existing scraper to use the Crawlbase proxy.

Here’s an updated version of your Zalando product scraper with Crawlbase Smart Proxy:

```javascript
const puppeteer = require('puppeteer');
```

Code Explanation:

- Proxy Setup: Replace `_USER_TOKEN_` with your actual Crawlbase token. This tells Puppeteer to use the Crawlbase proxy for all requests.

- Browser Launch Options: The `args` parameter in the `puppeteer.launch()` method specifies the proxy server to use, so all your requests go through the Crawlbase proxy.

Optimize your Zalando Scraper with Crawlbase

Scraping Zalando can provide useful data for your projects. In this blog, we showed you how to set up your Node.js environment and scrape both product listings and product details. Always review Zalando’s terms of service to make sure your scraping stays within their rules.

Using Puppeteer with Crawlbase Smart Proxy makes your scraper more robust and less likely to be blocked. Storing your data in JSON makes it easy to manage and analyze. Remember that website layouts change, so keep your selectors and scrapers up to date.

If you’d like to scrape other e-commerce platforms, check out the following comprehensive guides:

📜 How to Scrape Amazon

📜 How to Scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Flipkart

📜 How to Scrape Etsy

If you have any questions or feedback, our support team is always available to help. Good luck with your web scraping journey!

Frequently Asked Questions

Q. Is scraping Zalando legal?

Scraping data from Zalando can have legal implications. Make sure to review the website’s terms of service to see what they say about data scraping. Some websites explicitly prohibit scraping, while others allow it under certain conditions. Following the website’s rules helps you avoid legal issues and scrape ethically.

Q. What tools do I need to scrape Zalando?

To scrape Zalando, you need specific tools because the site uses JavaScript rendering. First, install Node.js, which allows you to run JavaScript code outside a browser. Then, use Puppeteer, a powerful library that controls a headless Chrome browser so you can interact with JavaScript-rendered content. Also, consider using Crawlbase Crawling API, which can help with IP rotation and bypassing blocks. Together, these tools will help you extract data from Zalando’s dynamic pages.

Q. Why use Crawlbase Smart Proxy while scraping Zalando?

Crawlbase Smart Proxy is useful for Zalando scraping for several reasons. It rotates IP addresses to mimic regular user behavior, which makes it much less likely that the website blocks you. As a result, your scraping is more effective and you can collect data continuously with fewer interruptions. By cutting down on blocked requests and retries, it can also help you collect data faster.