This blog is a step-by-step guide to scraping Amazon PPC ad data with Python. Amazon PPC ads, or Sponsored Products, have become a pivotal component of Amazon’s vast advertising ecosystem. These are the ads you see when you perform a search on Amazon, often labeled as “Sponsored” or “Ad.” Scraping competitors’ sponsored ads data gives you a lot more than a competitive edge. Scroll down to learn how Amazon ads can benefit your business, or head straight to the step-by-step section on scraping Amazon ads data.

Our ready-to-use Amazon scraper is a comprehensive solution for scraping all kinds of Amazon data. You can try it now.

Table Of Contents

- Why Scrape Amazon PPC Ad Data?

- Crawlbase Python Library

- The Data You Want to Scrape

- Setting Up Your Development Environment

- Installing Required Libraries

- Creating a Crawlbase Account

- Getting the Correct Crawlbase Token

- Setting up Crawlbase Crawling API

- Handling Dynamic Content

- Extracting Ad Data And Saving into SQLite Database

1. Getting Started

Amazon’s marketplace is large and still expanding, with over 2.5 million sellers. A company can do everything it can to raise awareness of its brand and product, but in the early stages, it often needs to utilize someone else’s brand to build its own. Smaller shops that rely on platforms like Amazon to reach customers would struggle to gain that exposure on their own. Amazon sells to almost 200,000 enterprises with annual sales of $100,000 or higher. On the marketplace, around 25,000 vendors earn more than $1 million.

Let’s explore why you should scrape Amazon ads.

Why Scrape Amazon Sponsored Ads Data?

Scraping Amazon PPC ad data might not be the first idea that comes to mind, but it holds immense potential for e-commerce businesses. Here’s why you should consider diving into the world of scraping Amazon PPC ad data:

- Competitive Analysis: By scraping data from Amazon PPC ads, you can gain insights into your competitors’ advertising strategies. You can monitor their keywords, ad copy, and bidding strategies to stay ahead in the game.

- Optimizing Your Ad Campaigns: Accessing data from your own Amazon PPC campaigns allows you to analyze their performance in detail. You can identify what’s working and what’s not, helping you make data-driven decisions to optimize your ad spend.

- Discovering New Keywords: Scraping ad data can uncover valuable keywords that you might have missed in your initial research. These new keywords can be used to enhance your organic listings as well.

- Staying Informed: Amazon’s ad system is dynamic. New products, new keywords, and changing trends require constant monitoring. Scraping keeps you informed about these changes and ensures your advertising strategy remains relevant.

- Research and Market Insights: Beyond your own campaigns, scraping Amazon PPC ad data provides a broader perspective on market trends and customer behavior. You can identify rising trends and customer preferences by analyzing ad data at scale.

In the subsequent sections of this guide, you’ll delve into the technical aspects of scraping Amazon PPC ad data, unlocking the potential for a competitive advantage in the e-commerce world.

2. Getting Started with Crawlbase Crawling API

Whether you’re new to web scraping or experienced in the field, you’ll find that the Crawlbase Crawling API simplifies the process of extracting data from websites, including Amazon search pages. Before we go into the specifics of using this API, let’s take a moment to understand why it’s essential and how it can benefit you.

Crawlbase Python Library

To harness the power of Crawlbase Crawling API, you can use the Crawlbase Python library. This library simplifies the integration of Crawlbase into your Python projects, making it accessible to Python developers of all levels of expertise.

First, initialize the Crawling API class.

    from crawlbase import CrawlingAPI

    api = CrawlingAPI({'token': 'YOUR_CRAWLBASE_TOKEN'})

Pass the URL that you want to scrape by using the following function.

    api.get(url, options={})

Example:

    response = api.get('https://www.facebook.com/britneyspears')

You can pass any options from the ones available in the API documentation.

Example:

    response = api.get('https://www.reddit.com/r/pics/comments/5bx4bx/thanks_obama/', {'format': 'json'})

There are many other functionalities provided by Crawlbase Python library. You can read more about it here.
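As a minimal sketch of how a call and its status check might fit together, the helper below wraps `api.get` and returns the page body only when the request succeeds. It assumes the response is a dictionary with `status_code` and `body` keys and that the body may arrive as bytes. `fetch_body` and `FakeAPI` are hypothetical names used only for illustration; `FakeAPI` stands in for the real client so the example runs offline.

```python
def fetch_body(api, url, options=None):
    """Return the page body as text, or None if the request did not succeed.

    `api` is any object exposing get(url, options), such as a CrawlingAPI instance.
    """
    response = api.get(url, options or {})
    # Assumption: the response is a dict carrying 'status_code' and 'body'.
    if response.get('status_code') != 200:
        return None
    body = response.get('body')
    # The body may arrive as bytes, so decode defensively.
    if isinstance(body, bytes):
        body = body.decode('utf-8', errors='replace')
    return body


class FakeAPI:
    """Offline stand-in (hypothetical) that mimics the assumed response dict."""

    def get(self, url, options=None):
        return {'status_code': 200, 'body': b'<html>ok</html>'}


print(fetch_body(FakeAPI(), 'https://www.example.com'))
```

With the real library, you would pass a `CrawlingAPI` object in place of `FakeAPI()`, along with any options you need.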

In the following sections, we will guide you through harnessing the capabilities of the Crawlbase Crawling API to scrape Amazon search pages effectively. We’ll use Python, a versatile programming language, to demonstrate the process step by step. Let’s explore Amazon’s wealth of information and learn how to unlock its potential.

3. Understanding Amazon PPC Ads

Before delving into the technical aspects of scraping Amazon PPC ad data, it’s crucial to understand Amazon sponsored ads, their different types, and the specific data you’ll want to scrape. Let’s start by decoding Amazon’s advertising system.

The Data You Want to Scrape

Now that you have an understanding of Amazon’s advertising, let’s focus on the specific data you want to scrape from Amazon PPC ads. When scraping Amazon PPC ad data, the key information you’ll typically aim to extract includes:

- Ad Campaign Information: This data provides insights into the overall performance of your ad campaigns. It includes campaign names, IDs, start and end dates, and budget details.

- Keyword Data: Keywords are the foundation of PPC advertising. You’ll want to scrape keyword information, including the keywords used in your campaigns, their match types (broad, phrase, exact), and bid amounts.

- Ad Group Details: Ad groups help you organize your ads based on common themes. Scraping ad group data allows you to understand the structure of your campaigns.

- Ad Performance Metrics: Essential metrics include the number of clicks, impressions, CTR, conversion rate, total spend, and more. These metrics help you evaluate the effectiveness of your ads.

- Product Information: Extracting data about the advertised products, such as ASIN, product titles, prices, and image URLs, is vital for optimizing ad content.

- Competitor Analysis: In addition to your own ad data, you might want to scrape competitor ad information to gain insights into their strategies and keyword targeting.

Understanding these core elements and the specific data you aim to scrape will be instrumental as you progress in scraping Amazon PPC ad data using Python and the Crawlbase Crawling API. In the subsequent sections, you’ll learn how to turn this understanding into actionable technical processes.
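One way to keep the product-level fields organized is a small record type. The sketch below is only an illustration: the `SponsoredAd` class, its field names, and the sample values (including the placeholder ASIN) are assumptions, not part of any Amazon or Crawlbase schema.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class SponsoredAd:
    """Hypothetical container for one sponsored product seen on a search page."""
    asin: str
    title: str
    price: Optional[float] = None
    image_url: Optional[str] = None
    search_keyword: Optional[str] = None  # the query that surfaced the ad


# Placeholder values for illustration only.
ad = SponsoredAd(
    asin='B000000000',
    title='Example wireless mouse',
    price=19.99,
    search_keyword='wireless mouse',
)
print(asdict(ad))
```

Keeping the search keyword next to each ad makes it easier to group results by query later on.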

4. Prerequisites

Before we embark on our web scraping journey, let’s ensure that you have all the necessary tools and resources ready. In this chapter, we’ll cover the prerequisites needed for successful web scraping of Amazon search pages using the Crawlbase Crawling API.

Setting Up Your Development Environment

You’ll need a suitable development environment to get started with web scraping. Here’s what you’ll require:

Python:

Python is a versatile programming language widely used in web scraping. Ensure that you have Python installed on your system. You can download the latest version of Python from the official website here.

Code Editor or IDE:

Choose a code editor or integrated development environment (IDE) for writing and running your Python code. Popular options include PyCharm and Jupyter Notebook. You can also use Google Colab. Select the one that best suits your preferences and workflow.

Installing Required Libraries

Web scraping in Python is made more accessible using libraries that simplify tasks like making HTTP requests, parsing HTML, and handling data. Install the following libraries using pip, Python’s package manager:

    pip install pandas crawlbase beautifulsoup4

- Pandas: Pandas is a powerful data manipulation library that will help you organize and analyze the scraped data efficiently.

- Crawlbase: A lightweight, dependency-free Python class that acts as a wrapper for the Crawlbase API.

- Beautiful Soup: Beautiful Soup is a Python library that makes it easy to parse HTML and extract data from web pages.
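To confirm the installation worked, a quick check like the following can help. It is a sketch that uses only the standard library. The mapping of pip package names to import names (`beautifulsoup4` imports as `bs4`) is stated explicitly, and `missing_packages` is a hypothetical helper name.

```python
import importlib.util

# pip package name -> module name used in import statements
REQUIRED = {
    'pandas': 'pandas',
    'crawlbase': 'crawlbase',
    'beautifulsoup4': 'bs4',
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose modules cannot be found."""
    return [
        pip_name
        for pip_name, module_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]


missing = missing_packages()
print('Missing packages:', ', '.join(missing) if missing else 'none')
```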

Creating a Crawlbase Account

To access the Crawlbase Crawling API, you’ll need a Crawlbase account. If you don’t have one, follow these steps to create an account:

- Click here to create a new Crawlbase Account.

- Fill in the required information, including your name, email address, and password.

- Verify your email address by clicking the verification link sent to your inbox.

- Once your email is verified, you can access your Crawlbase dashboard.

Now that your development environment is set up and you have a Crawlbase account ready, let’s proceed to the next steps, where we’ll get your Crawlbase token and start making requests to the Crawlbase Crawling API.

5. Amazon PPC Ad Scraping - Step by Step

Now that we’ve established the groundwork, it’s time to dive into the technical process of scraping Amazon PPC ad data step by step. This section will guide you through the entire journey, from making HTTP requests to Amazon and navigating search result pages to structuring your scraper for extracting ad data. We’ll also explore handling pagination to unearth more ads.

Getting the Correct Crawlbase Token

We must obtain an API token before we can unleash the power of the Crawlbase Crawling API. Crawlbase provides two types of tokens: the Normal Token (TCP) for static websites and the JavaScript Token (JS) for dynamic or JavaScript-driven websites. Given that Amazon relies heavily on JavaScript for dynamic content loading, we will opt for the JavaScript Token.

    from crawlbase import CrawlingAPI
    api = CrawlingAPI({'token': 'YOUR_CRAWLBASE_JS_TOKEN'})

You can get your Crawlbase token here after creating an account.
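Rather than hardcoding the token in a script, you may prefer to read it from an environment variable. The sketch below shows one way to do that. The variable name `CRAWLBASE_JS_TOKEN` and the helper `load_token` are assumptions chosen for this example, and the demonstration passes a plain dictionary so it runs without touching your real environment.

```python
import os


def load_token(env_var='CRAWLBASE_JS_TOKEN', environ=os.environ):
    """Read the token from the environment and fail early if it is missing."""
    token = environ.get(env_var, '').strip()
    if not token:
        raise RuntimeError(f'Set the {env_var} environment variable to your Crawlbase token.')
    return token


# Demonstration with a placeholder mapping instead of the real environment.
print(load_token(environ={'CRAWLBASE_JS_TOKEN': 'YOUR_CRAWLBASE_JS_TOKEN'}))
```

The returned string can then be passed as the `token` value when initializing `CrawlingAPI`.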

Setting up Crawlbase Crawling API

Armed with our JavaScript token, we’re ready to set up the Crawlbase Crawling API. But before we proceed, let’s look at the structure of the output response. The response you receive can come in two formats: HTML or JSON. The default choice for the Crawling API is HTML format.

HTML response:

    Headers:

To get the response in JSON format you have to pass a parameter “format” with the value “json”.

JSON Response:

    {
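As a rough sketch of working with the JSON format, the snippet below parses a sample payload and pulls out the HTML body. The field names (`original_status`, `pc_status`, `url`, `body`) are assumptions about the response shape and should be checked against the Crawling API documentation. The sample values, including the search URL, are placeholders.

```python
import json

# Placeholder payload; field names are assumed, not confirmed by this article.
SAMPLE_JSON = json.dumps({
    'original_status': 200,
    'pc_status': 200,
    'url': 'https://www.amazon.com/s?k=headphones',
    'body': '<html><body>sample</body></html>',
})


def parse_json_response(raw):
    """Turn a JSON-format response into a small dict with the parts we need."""
    if isinstance(raw, bytes):
        raw = raw.decode('utf-8')
    payload = json.loads(raw)
    return {
        'ok': int(payload.get('pc_status', 0)) == 200,
        'url': payload.get('url'),
        'html': payload.get('body', ''),
    }


result = parse_json_response(SAMPLE_JSON)
print(result['ok'], result['url'])
```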

You can read more about the Crawling API response here. For this example, we will go with the default option. We’ll utilize the initialized API object to make requests. Specify the URL you intend to scrape using the api.get(url, options={}) function.

    from crawlbase import CrawlingAPI

In the provided code snippet, we save the acquired HTML content to an HTML file. This step confirms that the targeted HTML was retrieved successfully. We can then open the file to inspect the content of the crawled HTML.
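The two supporting steps here, building the search URL and writing the body to disk, can be sketched with the standard library alone. The helper names `build_search_url` and `save_html` are hypothetical, and the use of Amazon’s `k` and `page` query parameters is an assumption about the search URL format rather than something this guide specifies.

```python
from pathlib import Path
from urllib.parse import urlencode


def build_search_url(keyword, page=1):
    """Build an Amazon search URL (assumes the 'k' and 'page' query parameters)."""
    params = {'k': keyword}
    if page > 1:
        params['page'] = page
    return 'https://www.amazon.com/s?' + urlencode(params)


def save_html(body, path='output.html'):
    """Write the crawled body to a file and return the number of characters saved."""
    text = body.decode('utf-8', errors='replace') if isinstance(body, bytes) else body
    Path(path).write_text(text, encoding='utf-8')
    return len(text)


print(build_search_url('wireless mouse', page=2))
```

After a successful request, calling `save_html(response['body'])` would produce the output.html file described above.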

output.html Preview:

As you can see above, no useful information is present in the crawled HTML. This is because Amazon loads its important content dynamically using JavaScript and Ajax.

Handling Dynamic Content

Much like numerous contemporary websites, Amazon’s search pages employ dynamic content loading through JavaScript rendering and Ajax calls. This dynamic behavior can present challenges when attempting to scrape data from these pages. Nonetheless, thanks to the Crawlbase Crawling API, these challenges can be effectively addressed. We can leverage the following query parameters provided by the Crawling API to tackle this issue.

Incorporating Parameters

When using the JavaScript token in conjunction with the Crawlbase API, you have the capability to define specific parameters that ensure the accurate capture of dynamically rendered content. Several pivotal parameters include:

- page_wait: An optional parameter that sets how long, in milliseconds, the browser waits before capturing the resulting HTML. Use it when a page needs extra time to render or when AJAX requests must finish loading before the HTML is captured.

- ajax_wait: Another optional parameter for the JavaScript token. It tells the API whether to wait for AJAX requests to finish before returning the HTML response, which is useful when content depends on those requests.

For using these parameters in our example, we can update our code like this:

    from crawlbase import CrawlingAPI
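A small helper can make the options dictionary explicit before it is passed as the second argument to `api.get`. This is a sketch: `build_js_options` is a hypothetical name, the 2000 ms default is an arbitrary illustrative value, and sending `ajax_wait` as the lowercase string `'true'` is an assumption about how the value should appear in the query string.

```python
def build_js_options(page_wait_ms=2000, ajax_wait=True):
    """Build the options dict for a JavaScript-token request."""
    if page_wait_ms < 0:
        raise ValueError('page_wait_ms must be zero or greater')
    return {
        'page_wait': page_wait_ms,  # milliseconds to wait before capturing HTML
        # Assumption: the API expects lowercase 'true'/'false' in the query string.
        'ajax_wait': 'true' if ajax_wait else 'false',
    }


print(build_js_options())
```

If the first attempt still returns incomplete HTML, raising `page_wait_ms` is usually the first thing to try.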

Crawling API provides many other important parameters. You can read about them here.

Extracting Ad Data And Saving into SQLite Database

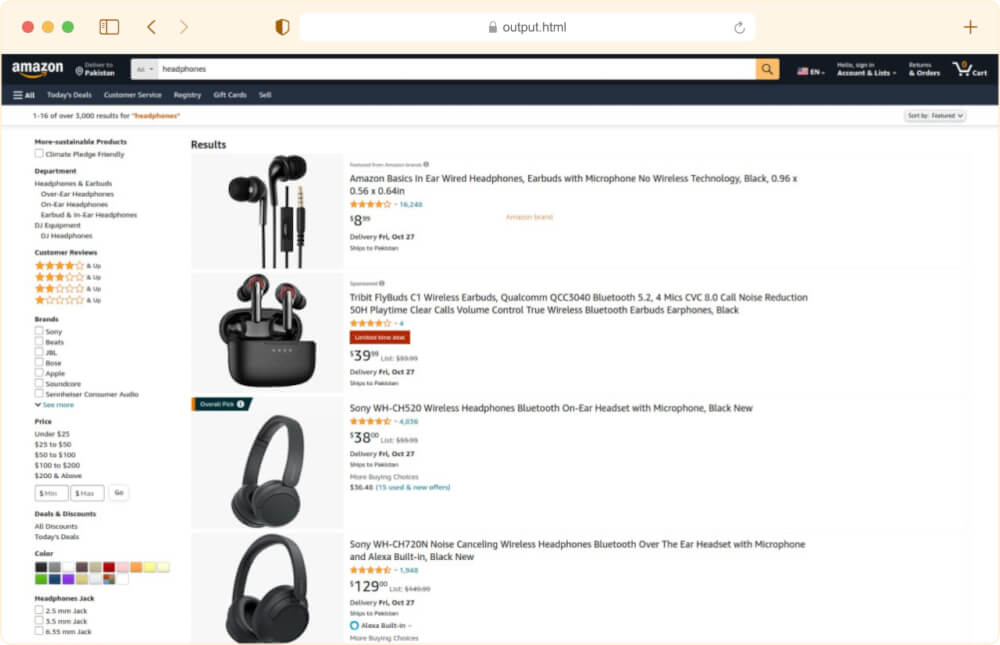

Now that we have successfully acquired the HTML content of Amazon’s dynamic search pages, it’s time to extract the valuable data for Amazon PPC ads from the retrieved content. For this example, we will extract the title and price of each ad.

After extracting this data, it’s prudent to store it systematically. For this purpose, we’ll employ SQLite, a lightweight and efficient relational database system that seamlessly integrates with Python. SQLite is an excellent choice for local storage of structured data, and in this context, it’s a perfect fit for preserving the scraped Amazon PPC ad data.

    import sqlite3

Example Output:

This Python script demonstrates the process of scraping Amazon’s search page for PPC ads. It begins by initializing an SQLite database, creating a table to store the scraped data, including the ad ID, price, and title. The insert_data function is defined to insert the extracted data into this database. The script then sets up the Crawlbase API for web crawling, specifying options for page and AJAX waiting times to handle dynamically loaded content effectively.
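The database portion described above can be sketched on its own with Python’s built-in `sqlite3` module. The table name `ppc_ads`, the default file name `amazon_ads.db`, and the exact column types are assumptions for illustration. The demonstration uses an in-memory database so nothing is written to disk.

```python
import sqlite3


def initialize_database(path='amazon_ads.db'):
    """Open the database and create the ads table if it does not exist yet."""
    conn = sqlite3.connect(path)
    conn.execute(
        '''CREATE TABLE IF NOT EXISTS ppc_ads (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               price TEXT,
               title TEXT
           )'''
    )
    conn.commit()
    return conn


def insert_data(conn, price, title):
    """Insert one ad row, using placeholders to avoid SQL injection."""
    conn.execute('INSERT INTO ppc_ads (price, title) VALUES (?, ?)', (price, title))
    conn.commit()


conn = initialize_database(':memory:')  # in-memory database for the demo
insert_data(conn, '$19.99', 'Example wireless mouse')
print(conn.execute('SELECT id, price, title FROM ppc_ads').fetchall())
conn.close()
```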

After successfully retrieving the Amazon search page using the Crawlbase API, the script utilizes BeautifulSoup for parsing the HTML content. It specifically targets PPC ad elements on the page. For each ad element, the script extracts the price and title information. It verifies the existence of these details and cleans them before inserting them into the SQLite database using the insert_data function. The script concludes by properly closing the database connection. In essence, this script showcases the complete process of web scraping, data extraction, and local storage, essential for various data analysis and usage scenarios.
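The parsing step might look roughly like the sketch below, which runs against a tiny hand-written HTML sample instead of a live page. The CSS selectors (`div.AdHolder`, `h2 span`, `span.a-price span.a-offscreen`) are assumptions about Amazon’s markup, which changes often, so verify them against the HTML you actually receive. `extract_ads` is a hypothetical helper name.

```python
from bs4 import BeautifulSoup

# Hand-written sample markup; real Amazon pages are far larger and may differ.
SAMPLE_HTML = '''
<div class="AdHolder" data-asin="B000000000">
  <h2><span>  Example Wireless Mouse  </span></h2>
  <span class="a-price"><span class="a-offscreen">$19.99</span></span>
</div>
<div class="AdHolder" data-asin="B000000001">
  <h2><span>Ad without a price</span></h2>
</div>
'''


def extract_ads(html):
    """Return title and price for every ad element that has both."""
    soup = BeautifulSoup(html, 'html.parser')
    ads = []
    for element in soup.select('div.AdHolder'):
        title_tag = element.select_one('h2 span')
        price_tag = element.select_one('span.a-price span.a-offscreen')
        # Skip ads that are missing either detail.
        if title_tag is None or price_tag is None:
            continue
        ads.append({
            'title': title_tag.get_text(strip=True),
            'price': price_tag.get_text(strip=True),
        })
    return ads


print(extract_ads(SAMPLE_HTML))
```

Each dictionary returned here can be passed to an insert function such as insert_data to store the row.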

6. Final Words

That covers scraping Amazon sponsored ads. If you’re interested in more guides like this one, check out the links below:

📜 How to Scrape Amazon Reviews

📜 How to Scrape Amazon Search Pages

📜 How to Scrape Amazon Product Data

For additional help and support, check out the guides on scraping Amazon ASIN, Amazon reviews in Node, Amazon Images, and Amazon data in Ruby.

We have also written guides on other e-commerce sites, such as scraping product data from Walmart, eBay, and AliExpress, just in case you’re scraping them ;).

Feel free to reach out to us here for questions and queries.

7. Frequently Asked Questions

Q. What is Amazon PPC advertising?

Amazon PPC advertising allows sellers and advertisers to promote their products on the Amazon platform. These ads are displayed within Amazon’s search results and product detail pages, helping products gain enhanced visibility. Advertisers pay a fee only when a user clicks on their ad. It’s a cost-effective way to reach potential customers who are actively searching for products.

Q. Why is scraping Amazon PPC ad data important?

Scraping Amazon data helps leverage data-driven insights to enhance the performance of PPC campaigns, boost visibility, and maximize ROI. Firstly, it enables businesses to gain insights into their competitors’ advertising strategies, such as keywords, ad copy, and bidding techniques. Secondly, it allows advertisers to optimize their own ad campaigns by analyzing performance metrics. Additionally, scraping can uncover valuable keywords for improving organic listings. Moreover, it keeps businesses informed about changes in Amazon’s ad system and provides broader market insights, helping them stay ahead in the dynamic e-commerce landscape.

Q. What is the Crawlbase Crawling API?

The Crawlbase Crawling API is a sophisticated web scraping tool that simplifies the process of extracting data from websites at scale. It offers developers and businesses an automated and user-friendly means of gathering information from web pages. One of its noteworthy features is automatic IP rotation, which enhances data extraction by dynamically changing the IP address for each request, reducing the risk of IP blocking or restrictions. Users can send requests to the API, specifying the URLs to scrape, along with query parameters, and in return, they receive the scraped data in structured formats like HTML or JSON. This versatile tool is invaluable for those seeking to collect data from websites efficiently and without interruption.
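To make the "URL plus query parameters" idea concrete, the sketch below builds a request URL by hand. The endpoint `https://api.crawlbase.com/` and the `token` and `url` parameter names are assumptions about the HTTP interface and should be confirmed in the API documentation. `build_request_url` is a hypothetical helper, and the target URL is a placeholder.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm against the Crawling API documentation.
API_ENDPOINT = 'https://api.crawlbase.com/'


def build_request_url(token, target_url, **params):
    """Combine the token, the percent-encoded target URL, and extra parameters."""
    query = {'token': token, 'url': target_url}
    query.update(params)
    return API_ENDPOINT + '?' + urlencode(query)


print(build_request_url(
    'YOUR_CRAWLBASE_TOKEN',
    'https://www.amazon.com/s?k=games',
    format='json',
))
```

The Python library performs this kind of encoding for you, so building the URL manually is mainly useful for debugging or for calling the API from other tools.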

Q. How can I get started with web scraping using Crawlbase and Python?

To get started with web scraping using Crawlbase and Python, follow these steps:

- Ensure you have Python installed on your system.

- Choose a code editor or integrated development environment (IDE) for writing your Python code.

- Install necessary libraries, such as BeautifulSoup4 and the Crawlbase library, using pip.

- Create a Crawlbase account to obtain an API token.

- Set up the Crawlbase Python library and initialize the Crawling API with your token.

- Make requests to the Crawlbase Crawling API to scrape data from websites, specifying the URLs and any query parameters.

- Save the scraped data and analyze it as needed for your specific use case.