It all started with the release of the first version of ChatGPT in 2022. The use of AI has grown rapidly since then, with an increasing number of people integrating it into their daily tasks at work and in their personal lives. At this point, if you’re not taking advantage of it, you’re missing out.

So in this blog, we’ll demonstrate a few of the many things you can accomplish when you combine Crawlbase scraping output with generative AI tools like ChatGPT. We’ll walk you through how to automatically summarize web data with AI and create visualizations, such as pie charts, bar charts, and line graphs, for your data reporting workflow.

Generative AI and Its Capabilities

Generative AI is not just about analyzing or organizing data; it can perform far more complex tasks, such as creating new content in various forms, including text, images, code, audio, and even videos. This is possible because it learns and recognizes structures and patterns from the data it’s trained on, allowing it to generate results that resemble those of a real person.

Traditional web scrapers, on the other hand, rely on static rules and selectors such as CSS classes and IDs to extract data from websites. These rule-based tools often struggle with dynamic, JavaScript-heavy sites and require frequent manual adjustments. In contrast, generative AI and AI-powered tools can adapt to changing website structures and handle dynamic content more effectively, overcoming the limitations of traditional web scrapers.

For example, when trained on large amounts of written text, a generative model can write blog posts, summarize articles, answer questions, or even write stories and poems. When trained on images, it can create artwork that rivals that of real artists and produce realistic photos of people or objects that don’t actually exist.

Generative AI is already being used in many fields. Marketers use it for generating content ideas, and developers use it to write and improve code. Researchers use it to explore complex problems or simulate data when real data isn’t available.

What’s really exciting is how easily AI now integrates with Python and other coding tools. With just a few lines of script, you can have the AI not only summarize your data but also plot bar charts, generate pie charts, or automate routine analysis. This transforms the AI from a virtual assistant into a full-on data analyst, especially when combined with Crawlbase.

Why Summarize Web Data with AI

If you’re only looking at a small amount of web data, you might be able to summarize it yourself without too much trouble. However, once the data becomes larger or more complex, and you’re dealing with more than just a few web pages, doing it all by hand becomes exhausting and time-consuming, and mistakes start to creep in. With advances in generative AI, you no longer have to go through all that trouble. The benefits are pretty hard to ignore.

- Speed and Scale: AI can go through thousands of data points in just a few seconds. Something that would take you or even a whole team hours or an entire day can be done almost instantly.

- Consistency: People can get tired, make mistakes, and sometimes see the same data in different ways. AI applies the same instructions and criteria to every document, so your results stay largely consistent from one run to the next.

- Recognizing Patterns: AI models are not only fast, but they also excel at pattern recognition. They can find trends, patterns, or outliers in your data that you probably would not notice right away. For instance, they might pick up on a slight change in customer sentiment before it impacts your product.

- Automatic Reporting: One of the biggest perks is that AI can compile clear summaries and generate easy-to-understand visuals, such as charts and graphs. That way, you can see what is happening in your data right away.

Regardless of your field, if you need to extract valuable insights from a large volume of web data without spending endless hours in spreadsheets, using AI to summarize your data is an innovative solution. AI can automatically identify and extract relevant data from large datasets, allowing you to focus solely on the most meaningful information. With AI-generated summaries and visualizations, you can quickly transform raw data into actionable insights.

How to Combine Crawlbase with Generative AI

AI is only as good as the data it is given. This is why Crawlbase and Generative AI, such as ChatGPT, complement each other perfectly. Crawlbase lets you scrape web data at scale, whether it’s product information, reviews, or any public content online. Web scraping tools, especially AI web scraping tools like Crawlbase, are designed to extract data efficiently from a wide range of websites. Think of it as the engine that collects all the information, while ChatGPT is the brain that makes sense of it.

When you combine the two, you get an end-to-end system that can do some pretty amazing things:

- Crawlbase fetches the data you need, clean, structured, and fast.

- Python scripts organize that data into something usable, like a Pandas DataFrame.

- ChatGPT (or any LLM) then reads through it all and gives you a natural-language summary, trend analysis, or even formatted reports.

- Finally, you can generate visuals and export files (charts, graphs, CSVs) with just a few more lines of code.

AI web scrapers can handle complex website structures and automate the data extraction process, making it easier to extract data from sites that use dynamic content or anti-scraping measures.

If you can write Python scripts, you can start using Crawlbase and ChatGPT together to automate insights that used to take an entire team. Here’s how you can do it.

Setting Up Crawlbase and an OpenAI account

Step 1. Start by creating a free account on Crawlbase and logging into your dashboard. Once you’re in, you’ll automatically receive 1,000 free API requests, allowing you to start testing right away. Alternatively, add your billing details before you start testing to receive an extra 9,000 free credits.

Step 2. Go to the Account Documentation section and copy your Normal Request Token, as you’ll need it later when we start writing the code.

Step 3. Sign up or log in at OpenAI. They offer free trial credits when you first sign up, but this offer isn’t guaranteed and is subject to change.

Step 4. Under Organization on the left side of your screen, click API keys and you should see an option there to “Create new secret key”.

Note: If you didn’t receive any free credits, check with your company or organization to see if they have a paid OpenAI account and can provide you with an API key.
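Rather than pasting either credential directly into a script, one option is to read both from environment variables. The following is a minimal sketch of that idea; the helper name `load_credential` and the variable name `CRAWLBASE_TOKEN` are assumptions made for illustration, while `OPENAI_API_KEY` is the name the official OpenAI Python client looks for by default.

```python
import os


def load_credential(name, env=None):
    """Return the credential stored in the environment variable `name`.

    Raises a clear error instead of letting an empty token reach an API.
    `env` defaults to os.environ; passing a dict makes the helper testable.
    """
    env = os.environ if env is None else env
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before running.")
    return value
```

In a script you might then call `load_credential("CRAWLBASE_TOKEN")` and `load_credential("OPENAI_API_KEY")` once at startup, so a missing key fails early with a readable message.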

Prepare your Python Environment

With your Crawlbase credentials ready, let’s focus on setting up your coding environment. Follow the steps below.

Step 1. Download and install Python 3 from python.org.

Step 2. Select a location on your computer and create a new folder to store all the files for this project.

Step 3. Set up your dependencies. Inside your project folder, create a file named requirements.txt and add the following lines:

    requests
    pandas
    matplotlib
    openai

Step 4. Open a terminal or command prompt, navigate to your project folder, and run:

    python -m pip install -r requirements.txt

This will install the necessary libraries for data collection, analysis, visualization, and working with ChatGPT.

Fetching Data Using Crawlbase

In this example, we’ll use Crawlbase to extract a list of best-selling electronics products from Amazon.

We’ll be using the Amazon Best Sellers scraper, which returns clean, structured JSON so you don’t have to worry about messy HTML parsing.

Step 1. Create a new file called web_data.py. This script will be responsible for fetching product data and handling pagination.

Step 2. Save the following code inside web_data.py:

    from requests.exceptions import RequestException
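The complete script lives in the project’s GitHub repository. As a rough idea of its shape, here is a minimal sketch of a single-page fetch. It rests on several assumptions that you should check against your Crawlbase dashboard documentation: the endpoint `https://api.crawlbase.com/`, the query parameters `token`, `url`, and `scraper`, and a JSON response whose products sit under `body.products`. The function names are hypothetical, and pagination is left out.

```python
from urllib.parse import urlencode

# Assumed Crawling API endpoint; confirm it in your dashboard documentation.
CRAWLBASE_ENDPOINT = "https://api.crawlbase.com/"


def build_request_url(token, target_url, scraper="amazon-best-sellers"):
    """Compose the API URL; urlencode escapes the target URL for us."""
    query = urlencode({"token": token, "url": target_url, "scraper": scraper})
    return f"{CRAWLBASE_ENDPOINT}?{query}"


def fetch_products(http_get, token, target_url):
    """Fetch one page of products.

    `http_get` is any callable shaped like `requests.get`, which keeps the
    function easy to exercise without network access.
    """
    response = http_get(build_request_url(token, target_url), timeout=30)
    response.raise_for_status()
    payload = response.json()
    # Assumed response shape: {"body": {"products": [...]}}.
    return payload.get("body", {}).get("products", [])
```

With the `requests` library installed, a call would look like `fetch_products(requests.get, token, best_sellers_url)`, where `token` is your Normal request token and `best_sellers_url` is the Amazon page you want to scrape.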

Step 3. To run the script, just open a terminal, navigate to your project folder, and run:

    python web_data.py

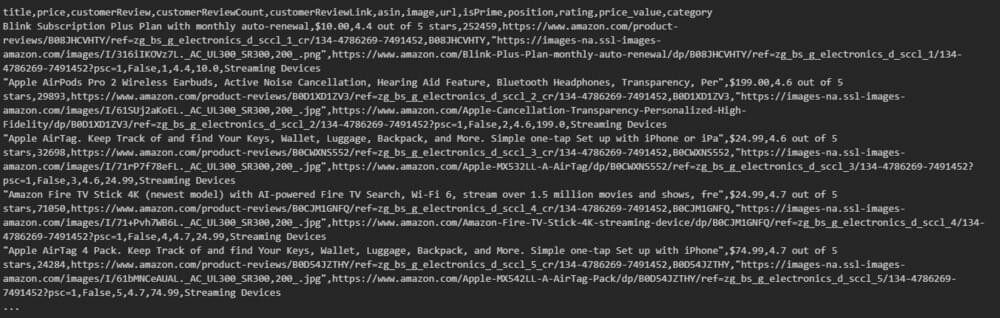

In a few seconds, you’ll see a JSON array of products printed to your terminal. Here’s a shortened example of what it looks like:

    [

This data is now ready to be passed to a Pandas DataFrame, summarized with ChatGPT, and visualized using charts. We’ll show you how to do that in the next section.

Using ChatGPT with Python Libraries

Our next goal is to clean up and organize the raw product data we gathered using Crawlbase. To do this, we’ll use the pandas library, which organizes the data into a structured table that we can efficiently filter, sort, and pass to ChatGPT for analysis.

Step 1. Take the product JSON returned by Crawlbase and load it into a Pandas DataFrame. Create a new file called data_frame.py and add the following code:

    from web_data import crawl_amazon_best_sellers_products

This script extracts product data from Crawlbase, parses the review rating and price into numeric values, and adds a simple category column (which you can modify later).
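To illustrate the parsing step, here is a minimal sketch of how price and rating strings could be turned into numbers before the rows go into a DataFrame. The field names `title`, `price`, and `rating`, the function names, and the default category value are all assumptions for illustration; adjust them to match the JSON you actually receive.

```python
import re


def parse_number(text):
    """Extract the first number from strings like "$1,299.99" or "4.6 out of 5 stars"."""
    if text is None:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", str(text))
    if match is None:
        return None
    # Drop thousands separators before converting.
    return float(match.group().replace(",", ""))


def normalize_product(raw, category="Electronics"):
    """Map one scraped product (a dict) to a flat row with numeric fields."""
    return {
        "title": raw.get("title"),          # hypothetical field name
        "price": parse_number(raw.get("price")),
        "rating": parse_number(raw.get("rating")),
        "category": category,               # simple placeholder column
    }
```

A list of such rows can be handed straight to pandas, for example `pandas.DataFrame([normalize_product(p) for p in products])`. Values that cannot be parsed come back as `None`, which pandas treats as missing.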

Step 2. Open your terminal and run:

    python data_frame.py

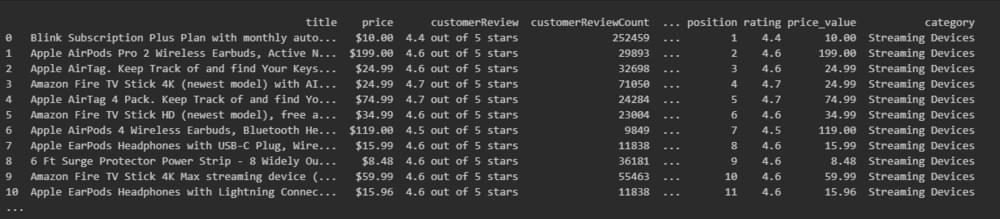

You’ll see a structured table printed to the console. Here’s a sample snippet of the output:

Step 3. Use OpenAI’s GPT model to analyze and summarize the trends from your data. Create a new file called summary.py and paste the following code:

    from openai import OpenAI, OpenAIError, APIStatusError, RateLimitError, BadRequestError, APIConnectionError, Timeout

Make sure to replace <OpenAI API Key> with your actual API key from OpenAI.
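As a rough illustration of the summarization step, the sketch below builds a prompt from product rows and sends it through the `chat.completions.create` method of the official OpenAI Python client. The function names, the row fields, the prompt wording, and the model name `gpt-4o-mini` are assumptions for this example, not necessarily what summary.py uses.

```python
def build_summary_prompt(rows, max_rows=20):
    """Turn product rows into a compact text prompt for the model."""
    lines = [
        f"- {row['title']} | price: {row['price']} | rating: {row['rating']}"
        for row in rows[:max_rows]  # cap the rows to keep the prompt small
    ]
    return (
        "Summarize the main trends in these best-selling products "
        "in three short bullet points:\n" + "\n".join(lines)
    )


def summarize(client, rows, model="gpt-4o-mini"):
    """Ask a chat model for a trend summary.

    `client` is expected to be an `openai.OpenAI` instance. The model name
    is an assumption; use any chat model your account can access.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are a concise data analyst."},
            {"role": "user", "content": build_summary_prompt(rows)},
        ],
    )
    return response.choices[0].message.content
```

Passing the client in as an argument keeps the API key out of the function and makes it straightforward to swap in a stub while testing.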

Step 4. From your terminal, run:

    python summary.py

The output will look something like this:

    AI-Generated Trend Summary:

How to Generate Visualizations from AI Web Data

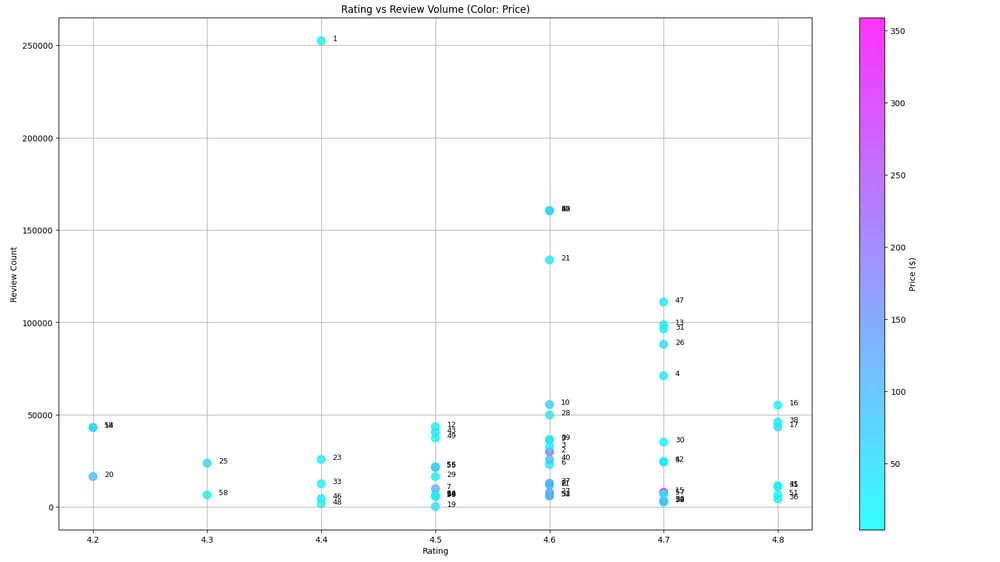

After structuring our product data into a Pandas DataFrame, we can take it one step further by creating visualizations that highlight trends, patterns, and outliers in a more digestible way.

For our next goal, we’ll use the Matplotlib library for Python to create data visualization charts from the Amazon best seller data we scraped earlier.

Step 1. Create a new file and name it visualization.py, then add the following code:

    from data_frame import generate_data_frame

The code in visualization.py has three parts:

- Loads the product data into a Pandas DataFrame.

- Creates two charts: a bar chart showing review counts per product and a scatter plot showing the relationship between ratings, review volume, and price.

- Saves the data as a CSV file for future use or reporting.
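To make the charting part concrete, here is a minimal sketch of the bar chart step, working on plain dictionaries rather than a DataFrame to keep it short. The `title` and `reviews` field names and both function names are assumptions; the output file name matches the one listed for this tutorial.

```python
def top_by_reviews(rows, n=10):
    """Return the n rows with the most reviews, highest first."""
    rated = [row for row in rows if row.get("reviews") is not None]
    return sorted(rated, key=lambda row: row["reviews"], reverse=True)[:n]


def save_reviews_bar_chart(rows, path="reviews_bar_chart.png", n=10):
    """Draw a horizontal bar chart of review counts and save it as a PNG."""
    # Imported here so the helper above still works without Matplotlib installed.
    import matplotlib
    matplotlib.use("Agg")  # render to a file without opening a window
    import matplotlib.pyplot as plt

    top = top_by_reviews(rows, n)
    fig, ax = plt.subplots(figsize=(8, 5))
    ax.barh([row["title"] for row in top], [row["reviews"] for row in top])
    ax.invert_yaxis()  # put the most-reviewed product at the top
    ax.set_xlabel("Review count")
    ax.set_title("Reviews per product")
    fig.tight_layout()
    fig.savefig(path)
    plt.close(fig)
    return path
```

Limiting the chart to the top `n` products keeps the labels readable; with a DataFrame, `df.to_dict("records")` yields rows in the shape this sketch expects.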

Step 2. Run the script.

    python visualization.py

This will generate three outputs:

- reviews_bar_chart.png

- rating_vs_reviews.png

- amazon_best_sellers_summary.csv

Congratulations! You have successfully generated summaries and charts that will make it easier to spot trends, compare performance, and back up your analysis with clear, data-driven visuals.

Note: You can access the entire codebase on GitHub.

Extra Tips for Automating Data Reporting

Schedule Automated Data Collection

Use a tool like cron (on Mac or Linux) or Task Scheduler (on Windows). This way, your code can run itself daily, weekly, or whenever suits you without you having to lift a finger. Great if you’re interested in trends or want to see fresh data every morning.

Use Pre-Prompted AI Instructions

Instead of typing a new prompt every time, save reusable AI prompts in your script. This helps generate consistent summaries, trend reports, or even plain-English explanations that non-technical team members can read.
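One way to do this is to keep named prompt templates in a small dictionary and fill in the variable parts at run time. The sketch below uses `string.Template` from the standard library; the template names and wording are hypothetical examples.

```python
from string import Template

# Hypothetical prompt library; edit the wording to match your reports.
PROMPTS = {
    "trend_summary": Template(
        "Summarize the key trends in the following $category data "
        "in at most $max_points bullet points:\n$data"
    ),
    "plain_english": Template(
        "Explain the following $category data to a non-technical reader "
        "in one short paragraph:\n$data"
    ),
}


def render_prompt(name, **fields):
    """Fill a saved template; raises KeyError if a placeholder is missing."""
    return PROMPTS[name].substitute(**fields)
```

For example, `render_prompt("trend_summary", category="electronics", max_points=3, data=table_text)` produces the same instructions on every run, with only the data changing.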

Add Data Quality Checks

Always include a few safety checks before saving or visualizing the data.

- Did your product list actually load, or is it empty?

- Are important numbers, such as prices or ratings, missing?

- Is the data smaller than normal?

Websites often change their layouts without warning. These checks can save you from hours of confusion.
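The three questions above can be turned into a small validation function that runs before anything is saved or plotted. This is a minimal sketch; the field names and the thresholds (`min_rows`, `max_missing`) are assumptions you would tune to your own data.

```python
def check_products(rows, required=("price", "rating"), min_rows=10, max_missing=0.2):
    """Return a list of human-readable problems; an empty list means the data passed."""
    if not rows:
        return ["no products were loaded"]
    problems = []
    # Fewer rows than usual often signals a layout change or a blocked request.
    if len(rows) < min_rows:
        problems.append(f"only {len(rows)} rows, expected at least {min_rows}")
    # Flag any required field that is missing in too large a share of rows.
    for field in required:
        missing = sum(1 for row in rows if row.get(field) is None)
        if missing / len(rows) > max_missing:
            problems.append(f"{field} missing in {missing} of {len(rows)} rows")
    return problems
```

A script can stop early when the returned list is not empty, for instance by printing the problems and skipping the chart and CSV steps for that run.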

Take Full Advantage of Crawlbase Features

The Crawling API from Crawlbase is a reliable tool for data extraction, ensuring dependable and accurate results for your projects. Make sure you take advantage of the following:

- Normal and JavaScript requests - You can use two types of tokens. Use the Normal token for websites that don’t rely on JavaScript to render content. Use the JavaScript token when the content you need is generated via JavaScript, either because the site is built with a framework like React or Angular, or because the data only appears after the page has fully loaded in the browser.

- Data Scrapers - In this blog, we used ‘amazon-best-sellers’. But Crawlbase offers many more scrapers tailored to specific websites and data types. If you plan to expand this project to other platforms, explore the complete list of available data scrapers on your Crawlbase dashboard.

- Get Extra Free Credits - As mentioned earlier, you can get a total of 10,000 credits for free by signing up and immediately adding your billing details. This is a great way to explore Crawlbase’s full potential and run large-scale tests before making any long-term commitment.

For enterprise or complex scraping needs, dedicated support is available to assist with setup, custom solutions, and ongoing maintenance. Sign up for Crawlbase now!

Frequently Asked Questions

Q: Do I need a paid OpenAI or Crawlbase account?

A: Not to get started. Crawlbase gives new accounts free requests, and OpenAI may offer trial credits when you first sign up, although that offer isn’t guaranteed. For higher limits or advanced features, you can upgrade to paid plans, which include custom pricing options tailored to enterprise needs.

Q: Can I scrape websites other than Amazon?

A: Yes. Crawlbase supports scraping any public web page. You can modify the url parameter and even customize your scraping strategy depending on the site’s structure.

Q: What if I want to summarize non-product data, like blog posts or reviews?

A: That works too. As long as you can extract the text, you can feed it into ChatGPT and get summaries, highlights, or category suggestions.

Q: Can I use this in a business setting?

A: Yes. This setup suits business use cases such as market research, competitive analysis, price monitoring, tracking job postings, and extracting data from Google Search and Google Maps. Data analysts use these web scraping tools to automate complex workflows, navigate intricate websites, and manage large-scale data scraping projects.

Q: What technical features and technologies are supported for AI web scraping?

A: These platforms leverage AI web technologies, including machine learning, large language models, and natural language processing, to automate the extraction process and adapt to website changes. They can scrape data, emulate human behavior to bypass IP blocking, and support automating data extraction from multiple URLs. Crawlbase is designed to handle data scraping from complex websites, manage complex workflows, and output structured formats for further analysis.