Tokopedia, one of Indonesia’s biggest e-commerce platforms, has 90+ million active users and 350 million monthly visits. The platform offers a wide range of products, from electronics and fashion to groceries and personal care. For businesses and developers, scraping Tokopedia data can provide insights into product trends, pricing strategies, and customer preferences.

Because Tokopedia renders its content with JavaScript, traditional scraping methods that only download the raw HTML don’t work. The Crawlbase Crawling API solves this by handling JavaScript-rendered content. In this tutorial, you’ll learn how to use Python and Crawlbase to scrape Tokopedia search listings and product pages for product names, prices, store names, and other details.

Let’s get started!

Table of Contents

- Why Scrape Tokopedia Data?
- Key Data Points to Extract from Tokopedia
- Crawlbase Crawling API for Tokopedia Scraping
  - Crawlbase Python Library
- Setting Up Your Python Environment
  - Installing Python and Required Libraries
  - Selecting an IDE
- Scraping Tokopedia Search Listings
  - Inspecting the HTML Structure
  - Writing the Search Listings Scraper
  - Handling Pagination in Tokopedia
  - Storing Data in a JSON File
  - Complete Code Example
- Scraping Tokopedia Product Pages
  - Inspecting the HTML for CSS Selectors
  - Writing the Product Page Scraper
  - Storing Data in a JSON File
  - Complete Code Example
- Optimize Tokopedia Scraping with Crawlbase
- Frequently Asked Questions

Why Scrape Tokopedia Data?

Scraping Tokopedia data can benefit businesses and developers alike. As one of Indonesia’s biggest e-commerce platforms, Tokopedia holds a lot of information about products, prices, and customer behavior. By extracting this data, you can gain an edge in the online market.

There are many reasons to scrape data from Tokopedia:

- Market Research: Tracking current demand helps with inventory and marketing planning, and broader trends reveal new opportunities.

- Price Comparison: Scraping prices for products across categories lets you compare your prices with the market and adjust them to stay competitive.

- Competitor Analysis: Collecting data on competitors’ products helps you understand how they position themselves and where their weak points are.

- Customer Insights: Product reviews and ratings show the main strengths and weaknesses of products from the customer’s point of view.

- Product Availability: Monitoring stock levels tells you when popular products are running low, so you can restock in time to keep customers satisfied.

In the next section, we’ll look at which data points to scrape from Tokopedia.

Key Data Points to Extract from Tokopedia

When scraping Tokopedia, focus on the data points that give actionable insights for your business or research. Here are the key ones to grab:

- Product Name: Identifies the product.

- Price: Used for price monitoring and competitor analysis.

- Ratings and Reviews: Reveal user experience and product usability.

- Availability: Shows stock levels and whether a product can be bought.

- Seller Information: Details on third-party vendors, including seller ratings and location.

- Product Images: Show what the product looks like.

- Product Description: Gives the details of the product.

- Category and Tags: Help organize products for category-level analysis.

Focusing on these data points lets you collect insights from Tokopedia that support better decisions. Next, we’ll look at how the Crawlbase Crawling API helps with scraping Tokopedia.

Crawlbase Crawling API for Tokopedia Scraping

The Crawlbase Crawling API makes scraping Tokopedia fast and straightforward. Tokopedia loads much of its data dynamically with JavaScript, which makes it hard to scrape with traditional HTTP requests. The Crawlbase Crawling API renders pages like a real browser, so you can access the fully loaded data.

Here’s why the Crawlbase Crawling API works well for scraping Tokopedia:

- Handles Dynamic Content: Crawlbase renders JavaScript-heavy pages, so all product data is fully loaded and ready to scrape.

- IP Rotation: Crawlbase automatically rotates IPs to avoid getting blocked by Tokopedia’s security systems, reducing the risk of rate limits and bans.

- Fast Performance: Crawlbase lets you scrape large amounts of data efficiently, saving time and resources.

- Customizable Requests: You can customize headers and cookies and control other request settings to fit your needs.

With these features, the Crawlbase Crawling API makes scraping Tokopedia easier and more efficient.

Crawlbase Python Library

Crawlbase also provides a Python library to make web scraping even easier. To use this library, you need an access token, which you get by signing up for Crawlbase.

Here’s an example function to send a request to Crawlbase Crawling API:

```python
from crawlbase import CrawlingAPI
```

Note: Crawlbase provides two types of tokens: a Normal Token for static sites and a JavaScript (JS) Token for dynamic, browser-rendered content. Scraping Tokopedia requires the JS Token. Crawlbase offers 1,000 free requests to help you get started, and you can sign up without a credit card. For more details, refer to the Crawlbase Crawling API documentation.
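To show what such a request involves without installing the SDK, here is a minimal sketch that calls the Crawling API’s HTTP endpoint (`https://api.crawlbase.com/` with `token` and `url` query parameters) directly through Python’s standard library instead of the crawlbase package. The function names `build_crawlbase_url` and `make_crawlbase_request` are illustrative, and the sketch treats any HTTP or network error as a failure without inspecting Crawlbase’s response headers.

```python
import urllib.parse
import urllib.request

# HTTP endpoint of the Crawlbase Crawling API.
CRAWLBASE_ENDPOINT = "https://api.crawlbase.com/"


def build_crawlbase_url(token, target_url, **options):
    """Build the Crawling API URL that fetches `target_url` with `token`.

    Extra keyword arguments are added as query parameters (API options).
    """
    params = {"token": token, "url": target_url, **options}
    # urlencode percent-encodes the target URL so its own query string
    # (e.g. ?q=headset) stays separate from the API's parameters.
    return CRAWLBASE_ENDPOINT + "?" + urllib.parse.urlencode(params)


def make_crawlbase_request(token, target_url, timeout=90, **options):
    """Return the rendered HTML of `target_url`, or None on failure."""
    request_url = build_crawlbase_url(token, target_url, **options)
    try:
        with urllib.request.urlopen(request_url, timeout=timeout) as response:
            return response.read().decode("utf-8")
    except OSError as error:  # URLError, HTTPError and timeouts subclass OSError
        print(f"Crawlbase request failed: {error}")
        return None
```

Usage, with `YOUR_JS_TOKEN` as a placeholder for your own JavaScript token: `html = make_crawlbase_request("YOUR_JS_TOKEN", "https://www.tokopedia.com/search?q=headset")`. Extra keyword arguments become API options; the Crawling API documentation describes JS-token options such as `page_wait`, so confirm the names and values there before relying on them.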

In the next section, we’ll learn how to set up the Python environment for Tokopedia scraping.

Setting Up Your Python Environment

To start scraping Tokopedia, you need to set up your Python environment. Follow these steps to get started:

Installing Python and Required Libraries

Make sure Python is installed on your machine. You can download it from python.org. After installation, run the following command to install the necessary libraries:

```bash
pip install crawlbase beautifulsoup4
```

- Crawlbase: For interacting with the Crawlbase Crawling API to handle dynamic content.

- BeautifulSoup: For parsing and extracting data from HTML.

These tools are essential for scraping Tokopedia’s data efficiently.

Selecting an IDE

Choose an IDE for seamless development:

- Visual Studio Code: Lightweight and frequently used.

- PyCharm: A full-featured IDE with powerful Python capabilities.

- Jupyter Notebook: Ideal for interactive coding and testing.

Once your environment is set up, you can begin scraping Tokopedia. Next, we’ll cover how to build a scraper for Tokopedia search results (SERP).

Scraping Tokopedia Search Listings

Now that you have your Python environment ready, we can start scraping Tokopedia’s search listings. In this section, we’ll guide you through inspecting the HTML, writing the scraper, handling pagination and storing the data in a JSON file.

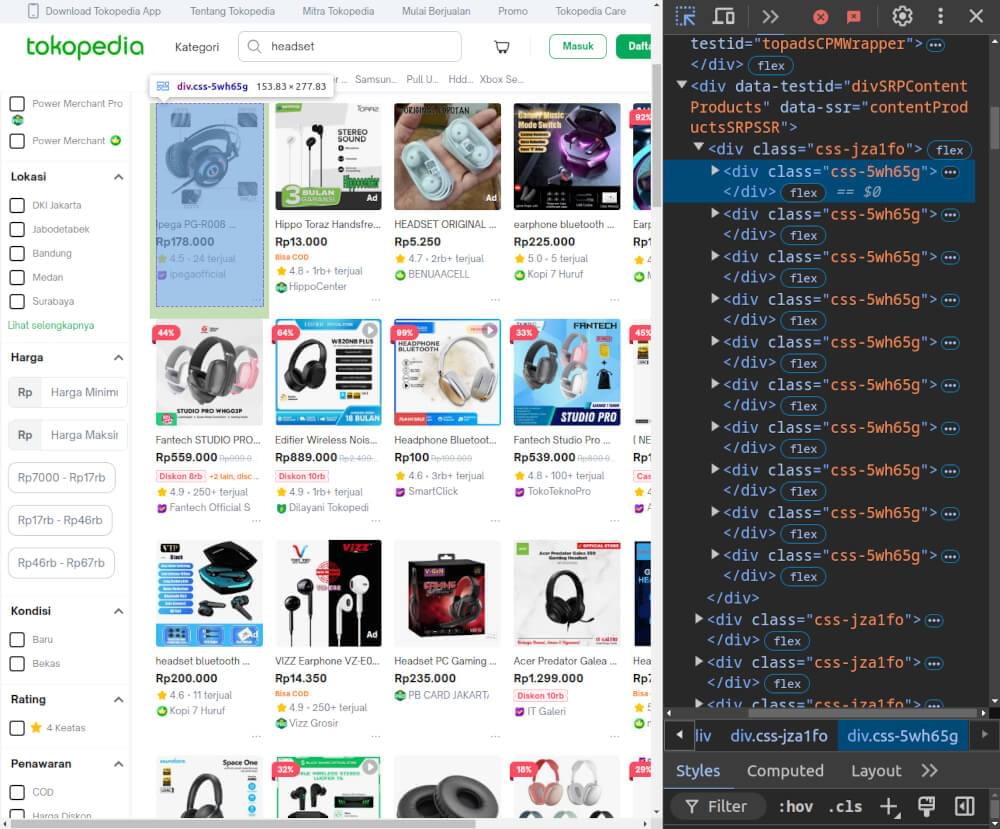

Inspecting the HTML Structure

First, you need to inspect the HTML of the Tokopedia search results page from which you want to scrape product listings. For this example, we’ll be scraping headset listings from the following URL:

```
https://www.tokopedia.com/search?q=headset
```

Open the developer tools in your browser and navigate to this URL.

Here are some key selectors to focus on:

- Product Title: Found in a `<span>` tag with class `OWkG6oHwAppMn1hIBsC3pQ==`, which contains the name of the product.

- Price: In a `<div>` tag with class `ELhJqP-Bfiud3i5eBR8NWg==` that displays the product price.

- Store Name: Found in a `<span>` tag with class `X6c-fdwuofj6zGvLKVUaNQ==`.

- Product Link: The product page link is in an `<a>` tag with class `Nq8NlC5Hk9KgVBJzMYBUsg==`, accessible via the `href` attribute.
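These selectors can be turned into a small parser. The sketch below swaps BeautifulSoup for Python’s built-in `html.parser` so it runs without extra packages, and it matches the class names as plain strings: because they end in `==`, using them in a CSS selector would require escaping the `=` characters. It assumes every product card contains all four fields and keeps only the first text node of each matched element. The names `SearchListingParser` and `parse_search_listings` are illustrative, and the obfuscated class names are likely to change, so re-check them in DevTools.

```python
from html.parser import HTMLParser

# Obfuscated class names observed on the search results page. They are
# likely to change when Tokopedia redeploys, so re-check them in DevTools.
FIELD_CLASSES = {
    "OWkG6oHwAppMn1hIBsC3pQ==": "product_name",
    "ELhJqP-Bfiud3i5eBR8NWg==": "price",
    "X6c-fdwuofj6zGvLKVUaNQ==": "store_name",
}
LINK_CLASS = "Nq8NlC5Hk9KgVBJzMYBUsg=="


class SearchListingParser(HTMLParser):
    """Collect each field's values in document order."""

    def __init__(self):
        super().__init__()
        self.values = {field: [] for field in FIELD_CLASSES.values()}
        self.values["product_url"] = []
        self._pending_field = None  # field waiting for its first text node

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        classes = (attributes.get("class") or "").split()
        if tag == "a" and LINK_CLASS in classes:
            self.values["product_url"].append(attributes.get("href"))
        for cls in classes:
            if cls in FIELD_CLASSES:
                self._pending_field = FIELD_CLASSES[cls]

    def handle_data(self, data):
        # Keep only the first non-empty text node after a matched tag.
        if self._pending_field and data.strip():
            self.values[self._pending_field].append(data.strip())
            self._pending_field = None


def parse_search_listings(html):
    """Return one dict per product card found in a search results page."""
    parser = SearchListingParser()
    parser.feed(html)
    fields = list(parser.values)
    # Pair values by position; assumes every card has all four fields.
    return [dict(zip(fields, row)) for row in zip(*parser.values.values())]
```

Usage: `products = parse_search_listings(html)`, where `html` is the rendered page returned by the Crawling API.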

Writing the Search Listings Scraper

We’ll write a function that makes a request to the Crawlbase Crawling API, retrieves the HTML, and then parses the data using BeautifulSoup.

Here’s the code to scrape the search listings:

```python
from crawlbase import CrawlingAPI
```

This function first fetches the HTML using the Crawlbase Crawling API and then parses the data using BeautifulSoup to extract the product information.
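If the price is extracted as display text such as "Rp49.000", it needs converting to a number before you can use it for price comparison. A minimal sketch, assuming prices appear as Rupiah amounts prefixed with "Rp" and using "." as the thousands separator (check a few real values first); `parse_rupiah` is a hypothetical helper, not part of the scraper above.

```python
import re

# "Rp" followed by digits that may use "." as the thousands separator.
_RUPIAH_AMOUNT = re.compile(r"Rp\s*(\d[\d.]*)")


def parse_rupiah(price_text):
    """Return the first Rupiah amount in `price_text` as an int, else None."""
    match = _RUPIAH_AMOUNT.search(price_text)
    if match is None:
        return None
    # Drop the thousands separators: "1.250.000" -> 1250000.
    return int(match.group(1).replace(".", ""))
```

Taking only the first match means a price range such as "Rp49.000 - Rp59.000" yields its lower bound; adjust that rule if you need both ends.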

Handling Pagination in Tokopedia

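Tokopedia’s search results are spread across multiple pages. To scrape all listings, we need to handle pagination. Each subsequent page can be accessed by adding a page parameter to the URL’s query string. Because the search URL already contains ?q=headset, the parameter is appended with &, for example https://www.tokopedia.com/search?q=headset&page=2.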

Here’s how to handle pagination:

```python
# Function to scrape multiple pages of search listings
```

This function loops through the search result pages, scrapes the product listings from each page, and aggregates the results.
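A minimal sketch of the same idea, assuming the `q` and `page` query parameters described above: `build_search_url` builds each page URL with `urllib.parse.urlencode`, which also encodes spaces in multi-word queries, and `scrape_multiple_pages` accepts any function that scrapes a single page (for example, the search listings scraper above) and returns a list of products. Both names are illustrative. Stopping at the first empty page is an assumption; note that a failed request that returns no products also ends the loop.

```python
import urllib.parse


def build_search_url(query, page=1):
    """Return a Tokopedia search URL; page 1 omits the `page` parameter."""
    params = {"q": query}
    if page > 1:
        params["page"] = page
    return "https://www.tokopedia.com/search?" + urllib.parse.urlencode(params)


def scrape_multiple_pages(query, max_pages, scrape_page):
    """Scrape up to `max_pages` result pages with `scrape_page(url) -> list`.

    Stops early at the first page that yields no products and returns all
    products in page order.
    """
    all_products = []
    for page in range(1, max_pages + 1):
        products = scrape_page(build_search_url(query, page))
        if not products:
            break
        all_products.extend(products)
    return all_products
```

Passing the single-page scraper in as a parameter keeps the pagination loop independent of how pages are fetched and parsed, which also makes it easy to test with a fake scraper.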

Storing Data in a JSON File

After scraping the data, you can store it in a JSON file for easy access and future use. Here’s how you can do it:

```python
# Function to save data to a JSON file
```
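Product names and descriptions on Tokopedia are mostly Indonesian text and may include emoji or other non-ASCII symbols. A minimal sketch of a JSON writer that keeps such characters readable in the output file; `save_to_json` is an illustrative name rather than the article’s own function.

```python
import json


def save_to_json(data, filename):
    """Write `data` to `filename` as indented UTF-8 JSON."""
    # ensure_ascii=False writes characters such as emoji as-is instead of
    # \uXXXX escapes; the explicit UTF-8 encoding makes that safe everywhere.
    with open(filename, "w", encoding="utf-8") as file:
        json.dump(data, file, ensure_ascii=False, indent=4)
```

Usage: `save_to_json(all_products, "tokopedia_search_results.json")`, where the filename is only an example.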

Complete Code Example

Below is the complete code to scrape Tokopedia search listings for headsets, including pagination and saving the data to a JSON file:

```python
from crawlbase import CrawlingAPI
```

Example Output:

```json
[
```

In the next section, we’ll cover scraping individual product pages on Tokopedia to get detailed information.

Scraping Tokopedia Product Pages

Now that we have scraped the search listings, let’s move on to scraping product details from individual product pages. In this section, we will scrape the product name, price, store name, description, and image URL from a Tokopedia product page.

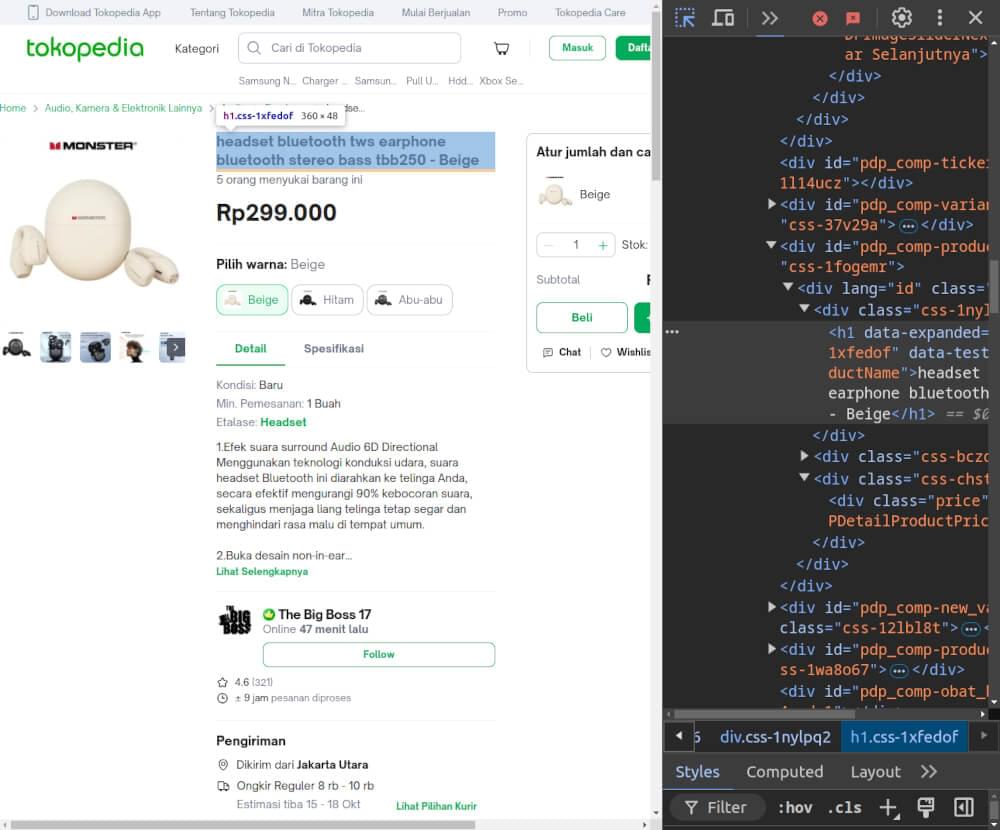

Inspecting the HTML for CSS Selectors

Before we write the scraper, we need to inspect the HTML structure of the product page to find the correct CSS selectors for the data we want to scrape. For this example, we’ll scrape the product page from the following URL:

```
https://www.tokopedia.com/thebigboss/headset-bluetooth-tws-earphone-bluetooth-stereo-bass-tbb250-beige-8d839
```

Open the developer tools in your browser and navigate to this URL.

Here’s what we need to focus on:

- Product Name: Found in an `<h1>` tag with the attribute `data-testid="lblPDPDetailProductName"`.

- Price: Located in a `<div>` tag with the attribute `data-testid="lblPDPDetailProductPrice"`.

- Store Name: Inside an `<a>` tag with the attribute `data-testid="llbPDPFooterShopName"`.

- Product Description: Located in a `<div>` tag with the attribute `data-testid="lblPDPDescriptionProduk"`, which contains detailed information about the product.

- Image URL: The main product image is found within a `<button>` tag with the attribute `data-testid="PDPImageThumbnail"`, and the `src` attribute of the nested `<img>` tag (class `css-1c345mg`) contains the image link.
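The `data-testid` attributes above can be matched directly. The sketch below uses Python’s built-in `html.parser` in place of BeautifulSoup so it runs without extra packages; `ProductPageParser` and `parse_product_page` are illustrative names. It captures all text inside each element carrying one of the listed `data-testid` values, tracking nesting depth so that, for example, a store-name link that wraps other tags is still read completely, and it collects the `src` of every `<img>` inside a `PDPImageThumbnail` button. Joining text fragments with newlines is a design choice so that line breaks in the description survive.

```python
from html.parser import HTMLParser

# data-testid values identified in the product page inspection.
TEXT_FIELDS = {
    "lblPDPDetailProductName": "product_name",
    "lblPDPDetailProductPrice": "price",
    "llbPDPFooterShopName": "store_name",
    "lblPDPDescriptionProduk": "description",
}
IMAGE_BUTTON_TESTID = "PDPImageThumbnail"
# Elements without closing tags; they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ProductPageParser(HTMLParser):
    """Collect product fields by data-testid plus thumbnail image URLs."""

    def __init__(self):
        super().__init__()
        self.product = {"images": []}
        self._field = None      # field whose text is being captured
        self._depth = 0         # open elements inside that field
        self._pieces = []       # text fragments of the current field
        self._in_thumbnail = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        testid = attributes.get("data-testid")
        if tag == "button" and testid == IMAGE_BUTTON_TESTID:
            self._in_thumbnail = True
        if tag == "img" and self._in_thumbnail and attributes.get("src"):
            self.product["images"].append(attributes["src"])
        if tag in VOID_TAGS:
            return
        if self._field:
            self._depth += 1
        elif testid in TEXT_FIELDS:
            self._field, self._depth, self._pieces = TEXT_FIELDS[testid], 1, []

    def handle_endtag(self, tag):
        if tag == "button":
            self._in_thumbnail = False
        if tag in VOID_TAGS or not self._field:
            return
        self._depth -= 1
        if self._depth == 0:
            # The element that carried the data-testid has closed.
            self.product[self._field] = "\n".join(self._pieces)
            self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self._pieces.append(data.strip())


def parse_product_page(html):
    """Return a dict of product details parsed from a product page."""
    parser = ProductPageParser()
    parser.feed(html)
    return parser.product
```

Fields whose `data-testid` is missing from the page are simply absent from the returned dict, so check for them with `product.get(...)`.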

Writing the Product Page Scraper

Now that we have inspected the page, we can start writing the scraper. Below is a Python function that uses the Crawlbase Crawling API to fetch the HTML and BeautifulSoup to parse the content.

```python
from crawlbase import CrawlingAPI
```

Storing Data in a JSON File

After scraping the product details, it’s good practice to store the data in a structured format like JSON. Here’s how to write the scraped data into a JSON file.

```python
def store_data_in_json(data, filename='tokopedia_product_data.json'):
```

Complete Code Example

Here’s the complete code that scrapes the product page and stores the data in a JSON file.

```python
from crawlbase import CrawlingAPI
```

Example Output:

```json
{
```

This complete example shows how to extract product details from a Tokopedia product page and save them to a JSON file. Because the Crawling API renders JavaScript, this approach works well for JavaScript-rendered pages.

Optimize Tokopedia Scraping with Crawlbase

Scraping Tokopedia gives you product data for research, price comparison, or market analysis. With the Crawlbase Crawling API, you can handle dynamic websites and extract data quickly, even from JavaScript-heavy pages.

In this blog, we covered how to set up the environment, find CSS selectors in the HTML, and write Python code to scrape product listings and product pages from Tokopedia. With this method, you can collect useful information such as product names, prices, descriptions, and images from Tokopedia and store it in a structured format like JSON.

If you’re interested in scraping other e-commerce platforms, explore the following comprehensive guides:

📜 How to Scrape Amazon

📜 How to Scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Zalando

📜 How to Scrape Costco

Contact our support if you have any questions. Happy scraping.

Frequently Asked Questions

Q. Is it legal to scrape data from Tokopedia?

Scraping data from Tokopedia can be legal as long as you follow their terms of service and use the data responsibly. Always review the website’s rules and avoid scraping sensitive or personal data. It’s important to use the data for ethical purposes, like research or analysis, without violating Tokopedia’s policies.

Q. Why should I use Crawlbase Crawling API for scraping Tokopedia?

Tokopedia loads its content dynamically with JavaScript, which makes it harder to scrape with traditional methods. The Crawlbase Crawling API simplifies this by rendering the website in a real browser. It also handles IP rotation to reduce the risk of blocks, making scraping more effective and dependable.

Q. What key data points can I extract from Tokopedia product pages?

When scraping Tokopedia product pages, you can extract several important data points, including the product title, price, description, ratings, and image URLs. These details are useful for analysis, price comparison or building a database of products to understand market trends.