Temu is a fast-growing e-commerce platform known for its huge selection of products at competitive prices. Covering everything from electronics to fashion and home goods, Temu has become a go-to destination for online shoppers. Its dynamic, JavaScript-rendered pages make data scraping challenging with traditional methods, but with the right tools, it’s still achievable.

In this guide, we’ll show you how to scrape data from Temu using the Crawlbase Crawling API, which is designed to handle CAPTCHAs and JavaScript-rendered pages. Whether you’re gathering product information for analysis, price comparison, or market research, this guide covers the essential steps to extract data effectively. You’ll learn how to set up your Python environment, create Temu scrapers, handle pagination on Temu’s search results pages, and store the data in a CSV file for easy access.

By the end of this article, you’ll have working scrapers that collect data from Temu’s search listings and product pages. Let’s get started!

Table of Contents

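- Why Scrape Temu?
- Key Data Points to Extract from Temu
- Crawlbase Crawling API for Temu Scraping
  - Crawlbase Python Library
- Setting Up Your Python Environment
  - Installing Python and Required Libraries
  - Choosing an IDE
- Scraping Temu Search Listings
  - Inspecting the HTML for CSS Selectors
  - Writing the Search Listings Scraper
  - Handling Pagination in Temu
  - Storing Data in a CSV File
  - Complete Code Example
- Scraping Temu Product Pages
  - Inspecting the HTML for CSS Selectors
  - Writing the Product Page Scraper
  - Storing Data in a CSV File
  - Complete Code Example
- Final Thoughts
- Frequently Asked Questions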

Why Scrape Temu?

Scraping Temu is useful for businesses, analysts, and developers. Temu has everything from cheap electronics to clothing and household items, so it’s a great source for market research, price tracking, and competitor analysis. By extracting product data like prices, descriptions, ratings, and availability, businesses can stay competitive and keep up with the market.

For example, scraping Temu can help online retailers and resellers find popular products, understand pricing trends, and monitor stock availability. For personal projects or academic purposes, Temu’s data can be used to create price comparison tools, study consumer trends, or look at product performance over time.

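Temu protects its pages with CAPTCHAs and loads much of its content with JavaScript, so traditional scrapers that fetch raw HTML often miss the data. The Crawlbase Crawling API renders these pages and handles the CAPTCHA challenges, returning complete HTML that you can then parse into structured data.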

Key Data Points to Extract from Temu

When scraping Temu, you’ll want to collect the most important product details to support your goals, whether it’s for market analysis, product tracking, or building a database. Here are the data points you can extract from Temu:

- Product Name: The name helps to identify each product and category.

- Price: Price is important for trend monitoring and comparing similar products.

- Rating and Reviews: Reviews give insight into product quality and customer satisfaction, and ratings give overall customer opinion.

- Product Description: Descriptions give context to a product’s features, material, and unique selling points.

- Image URL: Images are important for a visual database and can be used on any site or app you’re building.

- Discounts and Offers: These can show competitive pricing and trending products.
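The fields above can be pinned down as a small record type so that every scraper produces the same shape. The `TemuProduct` class below is a hypothetical schema of our own, not part of Crawlbase or Temu. Most fields are optional because listing cards and product pages expose different subsets, and prices are kept as the displayed text rather than parsed numbers.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class TemuProduct:
    """Hypothetical record for the data points listed above."""
    name: str
    price: Optional[str] = None         # displayed price text, e.g. "$12.99"
    rating: Optional[float] = None      # average star rating, if shown
    review_count: Optional[int] = None  # number of reviews, if shown
    description: Optional[str] = None
    image_urls: List[str] = field(default_factory=list)
    discount: Optional[str] = None      # offer text such as "-40%"
    url: Optional[str] = None           # product page URL


# Example record built from values you might scrape from a listing card.
example = TemuProduct(name="Wireless Earbuds", price="$12.99")
row = asdict(example)  # plain dict; list fields such as image_urls stay lists
```

Calling `asdict()` gives a plain dictionary that is convenient for CSV or database storage; list fields like `image_urls` need to be joined before they fit in a single CSV cell.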

Crawlbase Crawling API for Temu Scraping

Temu relies on JavaScript for much of its content, which makes traditional scraping methods difficult. The Crawlbase Crawling API solves this by rendering web pages like a real browser, so you receive the fully loaded HTML and scraping Temu becomes efficient and straightforward.

Here’s why Crawlbase Crawling API is ideal for scraping Temu:

- Handles Dynamic Content: Crawlbase renders JavaScript-heavy pages so that Temu’s product data is loaded and ready for scraping.

- IP Rotation: Crawlbase rotates IPs automatically to get past Temu’s security checks, which helps you avoid rate limits and reduces the chance of being blocked.

- Fast and Efficient: Crawlbase lets you scrape large volumes of data quickly, saving you time and resources.

- Customizable Requests: You can control headers, cookies, and other request parameters to fit your scraping needs.

Crawlbase Python Library

The Crawlbase Python library further simplifies your scraping setup. To use the library, you’ll need an access token, which you can get by signing up for Crawlbase.

Here’s a sample function to request the Crawlbase Crawling API:

```python
from crawlbase import CrawlingAPI
```

Note: For scraping JavaScript-rendered content like Temu’s, you’ll need a JavaScript (JS) Token from Crawlbase. Crawlbase offers 1,000 free requests to help you get started, and no credit card is required for sign-up. For more guidance, check the official Crawlbase Crawling API documentation.
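A minimal sketch of such a request function follows. It assumes the response shape commonly returned by the `crawlbase` library's `CrawlingAPI.get(url, options)`: a dict whose `headers` include `pc_status` (Crawlbase’s own status) and whose `body` is raw bytes. The option values `ajax_wait` and `page_wait` are Crawling API parameters for JavaScript pages, but the specific values here are starting-point assumptions; check the Crawlbase documentation for current behavior. The function name `fetch_html` is our own.

```python
from typing import Any, Mapping, Optional

# Crawling API parameters often used for JavaScript-heavy pages (values are strings).
# page_wait is in milliseconds; tune both for your pages.
JS_PAGE_OPTIONS = {"ajax_wait": "true", "page_wait": "5000"}


def fetch_html(api: Any, url: str, options: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Fetch a page through a Crawlbase client and return its HTML, or None on failure.

    `api` is assumed to behave like crawlbase.CrawlingAPI: get(url, options)
    returns a dict with a 'headers' dict (containing 'pc_status') and a bytes 'body'.
    """
    response = api.get(url, dict(options or {}))
    status = str(response.get("headers", {}).get("pc_status", ""))
    if status != "200":
        print(f"Request failed for {url}: pc_status={status or 'missing'}")
        return None
    body = response.get("body", b"")
    # Decode the raw bytes; invalid sequences are replaced instead of raising.
    return body.decode("utf-8", errors="replace") if isinstance(body, bytes) else str(body)
```

In a real script the client comes from the `crawlbase` package, for example `api = CrawlingAPI({'token': 'YOUR_JS_TOKEN'})`, followed by `html = fetch_html(api, product_url, JS_PAGE_OPTIONS)`. Passing the client in as an argument, rather than creating it inside the function, also makes the function easy to test with a stand-in object.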

In the next section, we’ll walk through setting up your Python environment for scraping Temu.

Setting Up Your Python Environment

To start scraping Temu, you need to set up your Python environment. This means installing Python and the required libraries and choosing an Integrated Development Environment (IDE) to write your code.

Installing Python and Required Libraries

First, make sure you have Python installed on your computer. You can download Python from the official website. Follow the installation instructions for your OS.

Now that you have Python installed, you need a few libraries to help with scraping: requests, crawlbase, and beautifulsoup4 (which provides BeautifulSoup, used later to parse the HTML). Here’s how to install them using pip:

- Open your command prompt or terminal.

- Type the following command and press Enter:

```shell
pip install requests crawlbase beautifulsoup4
```

This command downloads and installs the libraries you need. The requests library helps you make web requests, the crawlbase library lets you interact with the Crawlbase Crawling API, and beautifulsoup4 parses the HTML that Crawlbase returns.
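Before moving on, you can confirm that the packages are importable. Note that one pip name differs from its import name: beautifulsoup4 is imported as `bs4`, which provides the BeautifulSoup parser used later in this guide. The helper below is a small hypothetical check built on the standard library’s `importlib.util.find_spec`.

```python
import importlib.util
from typing import Iterable, List

# Import names, not pip names: beautifulsoup4 installs the `bs4` module.
REQUIRED_MODULES = ["requests", "crawlbase", "bs4"]


def missing_packages(modules: Iterable[str]) -> List[str]:
    """Return the top-level modules that cannot be found in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_MODULES)
    print("All set!" if not missing else f"Missing: {', '.join(missing)}")
```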

Choosing an IDE

Now, you need to choose an IDE for coding. An IDE is a program that helps you write, edit and manage your code. Here are a few options:

- PyCharm: A full Python IDE with code completion and debugging tools; a free Community Edition is available.

- Visual Studio Code (VS Code): A lightweight editor with Python support through extensions and a huge user base.

- Jupyter Notebook: Great for data analysis and testing; code runs in the browser, which makes notebooks easy to share.

Each has its advantages, so pick one that suits you. Now that your Python environment is set up, you’re ready to start scraping Temu’s search listings.

Scraping Temu Search Listings

Scraping Temu search results involves understanding the page’s HTML structure, writing a script to collect product info, managing pagination with the “See More” button, and saving the scraped data in a structured way. Let’s break it down.

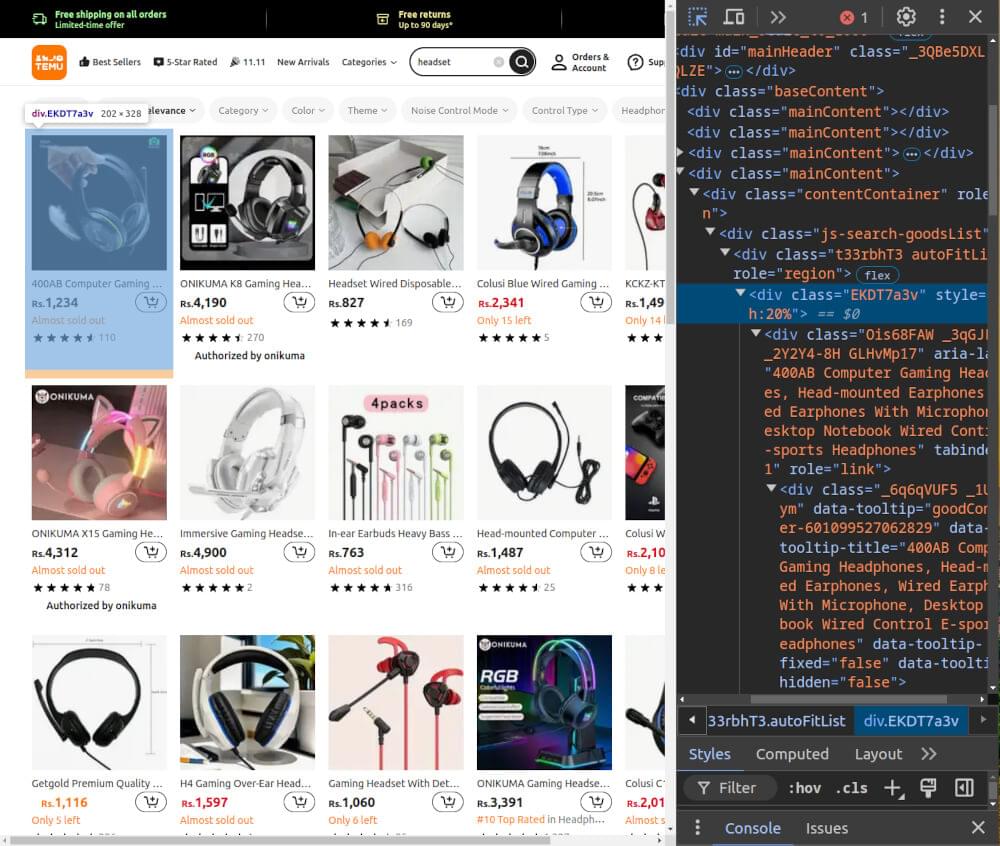

Inspecting the HTML for CSS Selectors

To start scraping, inspect the HTML structure of Temu’s search results page. Open Temu’s site in your browser, right-click on a product listing, and choose “Inspect” to see the HTML code.

Here are the key elements you’ll need:

- Product Name: Found within an `h2` tag with the class `_2BvQbnbN`.

- Price: Located within a `span` tag with the class `_2de9ERAH`.

- Image URL: Located in the `src` attribute of an `img` tag with the class `goods-img-external`.

- Product URL: Found in the `href` attribute of an `a` tag with the class `_2Tl9qLr1`.

By identifying these selectors, you’ll have the basic structure needed to scrape each product’s details from Temu’s search listings.

Writing the Search Listings Scraper

Now that we know the selectors, let’s write the scraper. We’ll use Python along with Crawlbase Crawling API to handle dynamic content. Here’s a function to start scraping product information:

```python
from crawlbase import CrawlingAPI
```

This function fetches the HTML, processes it with BeautifulSoup, and extracts product details based on the selectors. It returns a list of product information.
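The sketch below covers the parsing half of that function, assuming BeautifulSoup with Python’s built-in `html.parser`. It applies the selectors from the inspection step inside each product card. The text does not name the card container, so `card_selector` is a parameter you must fill in after inspecting the page; any example value such as `div.card` is hypothetical. Resolving relative links against `https://www.temu.com` is also an assumption about how Temu formats its `href` values.

```python
from typing import Dict, List, Optional
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.temu.com"

# Selectors from the inspection step; Temu's generated class names change often.
SEARCH_SELECTORS = {
    "name": "h2._2BvQbnbN",
    "price": "span._2de9ERAH",
    "image": "img.goods-img-external",
    "link": "a._2Tl9qLr1",
}


def _text(card, selector: str) -> Optional[str]:
    """Return the stripped text of the first match inside the card, or None."""
    element = card.select_one(selector)
    return element.get_text(strip=True) if element is not None else None


def parse_search_listings(html: str, card_selector: str) -> List[Dict[str, Optional[str]]]:
    """Extract name, price, image URL and product URL from each product card.

    card_selector is a hypothetical container selector (one element per product)
    that you must look up yourself with the browser's Inspect tool.
    """
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select(card_selector):
        image = card.select_one(SEARCH_SELECTORS["image"])
        link = card.select_one(SEARCH_SELECTORS["link"])
        href = link.get("href") if link is not None else None
        products.append({
            "name": _text(card, SEARCH_SELECTORS["name"]),
            "price": _text(card, SEARCH_SELECTORS["price"]),
            "image_url": image.get("src") if image is not None else None,
            # Listing links may be relative, so resolve them against the site root.
            "product_url": urljoin(BASE_URL, href) if href else None,
        })
    return products
```

Fields that are missing on a card come back as `None` instead of raising, so one unusual card does not stop the whole page.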

Handling Pagination in Temu

Temu uses a “See More” button to load additional listings. We can simulate clicks on this button with Crawlbase’s css_click_selector to access more pages:

```python
# Function to scrape listings with pagination
```

This code collects listings from multiple pages by “clicking” the “See More” button each time it loads a new batch of results.
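A sketch of two pieces this kind of pagination needs, under stated assumptions: an options dictionary for the Crawling API that waits for scripts and sets `css_click_selector`, and a merge step that drops repeated products. The selector value `div.see-more-button` is a hypothetical placeholder, not Temu’s real class; the exact option values, and how many clicks a single request performs, should be checked against the Crawlbase documentation. Deduplicating by `product_url` assumes each listing record carries that key, since a page reloaded after a “See More” click can still include results you already collected.

```python
from typing import Dict, Iterable, List, Optional

# Hypothetical placeholder: replace with the real "See More" button selector
# you find with your browser's Inspect tool.
SEE_MORE_SELECTOR = "div.see-more-button"


def listing_options(click_selector: str = SEE_MORE_SELECTOR) -> Dict[str, str]:
    """Build Crawling API options that wait for scripts and click "See More"."""
    return {
        "ajax_wait": "true",
        "page_wait": "5000",  # milliseconds to wait after the page loads
        "css_click_selector": click_selector,
    }


def merge_unique(
    batches: Iterable[List[Dict[str, Optional[str]]]], key: str = "product_url"
) -> List[Dict[str, Optional[str]]]:
    """Combine product batches, keeping the first occurrence of each key.

    Products without the key cannot be deduplicated and are skipped.
    """
    seen = set()
    merged = []
    for batch in batches:
        for product in batch:
            identifier = product.get(key)
            if identifier is None or identifier in seen:
                continue
            seen.add(identifier)
            merged.append(product)
    return merged
```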

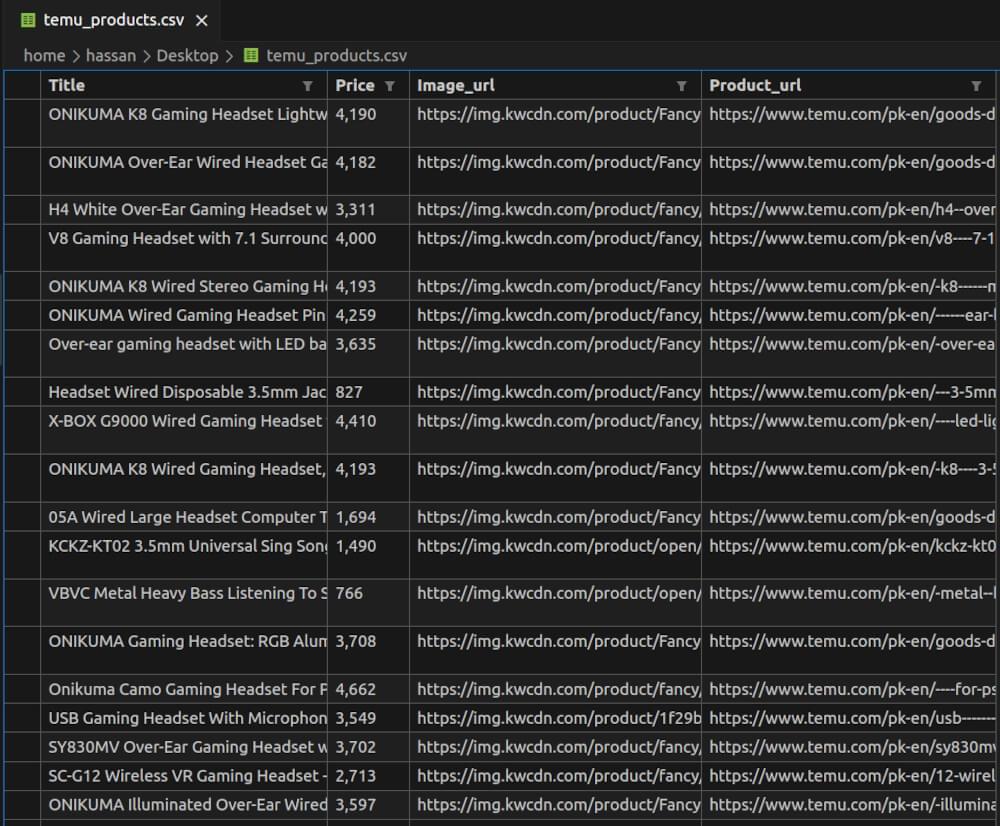

Storing Data in a CSV File

To store the scraped data in a CSV file, we’ll write each product’s information into rows, creating a structured file format for easy analysis.

```python
import csv
```

This code creates a CSV file with columns for each data point, making it easy to analyze Temu listings offline.
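A minimal version of this CSV step, using `csv.DictWriter` from the standard library. The function name `save_to_csv` and the column list are assumptions chosen to match the four listing fields identified earlier (name, price, image URL, product URL).

```python
import csv
from typing import Dict, List, Optional, Sequence

LISTING_FIELDS = ["name", "price", "image_url", "product_url"]


def save_to_csv(
    products: List[Dict[str, Optional[str]]],
    path: str,
    fieldnames: Sequence[str] = LISTING_FIELDS,
) -> None:
    """Write one row per product with a header row of column names."""
    # newline="" is what the csv docs recommend; otherwise extra blank lines
    # can appear on Windows.
    with open(path, "w", newline="", encoding="utf-8") as f:
        # Missing keys become empty cells (restval), None is written as an
        # empty string, and keys outside fieldnames are ignored.
        writer = csv.DictWriter(f, fieldnames=list(fieldnames), extrasaction="ignore")
        writer.writeheader()
        writer.writerows(products)
```

For example, `save_to_csv(products, "temu_products.csv")` writes the listing results to the file used in the complete example.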

Complete Code Example

Here’s the full script for scraping Temu search listings, handling pagination, and saving data to CSV:

```python
from crawlbase import CrawlingAPI
```

temu_products.csv Snapshot:

Scraping Temu Product Pages

After collecting a list of product URLs from Temu’s search listings, the next step is to scrape details from each product page. This lets us gather more specific information, such as the full title, price, detailed description, and product images. Here’s how to do it.

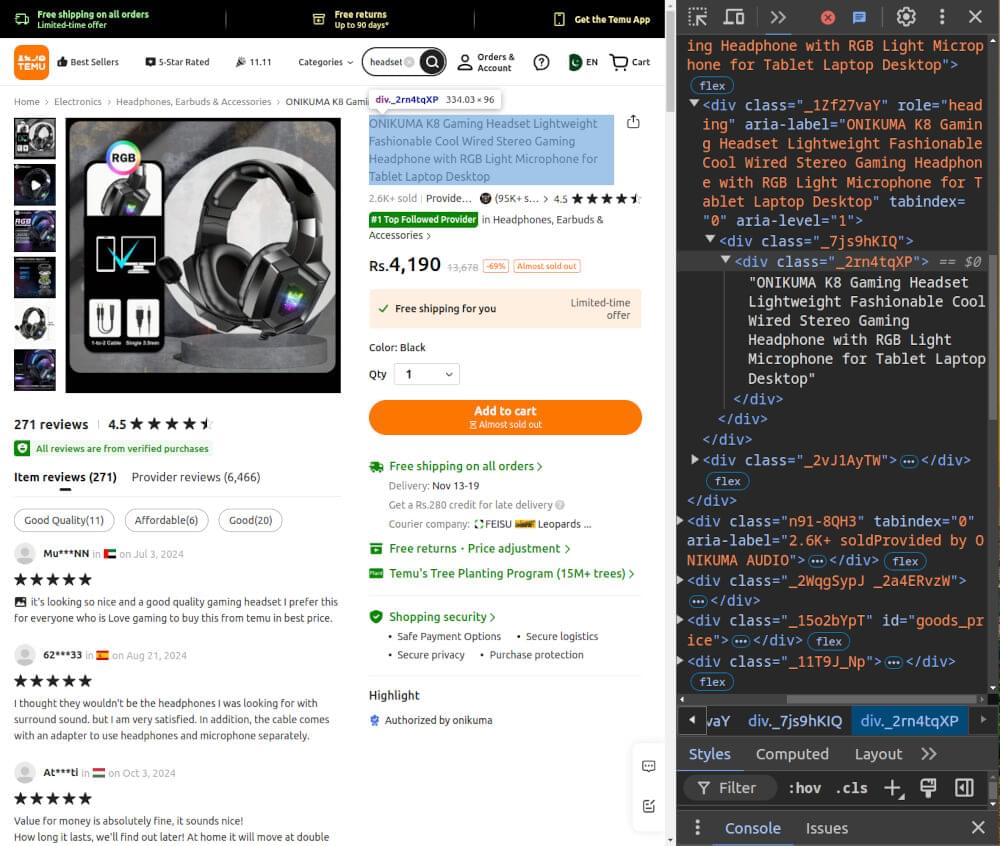

Inspecting the HTML for CSS Selectors

To start, inspect the HTML structure on a Temu product page. Open a product URL in your browser, right-click on the elements you want to scrape, and select “Inspect”.

Key elements to look for include:

- Product Title: Typically found in a `div` tag with a class like `_2rn4tqXP`.

- Price: Usually inside a `div` tag with a class like `_1vkz0rqG`; the actual price is in its last `span` child.

- Product Description: Often in a `div` tag with a class like `B_OB3uj0`, which holds the details about the product.

- Image URLs: Typically located in the `src` attribute of `img` tags within a `div` with `role="button"` and a class like `wxWpAMbp`.

Identifying these selectors makes it easier to extract the data we need for each product.

Writing the Product Page Scraper

With the CSS selectors noted, we can write the scraper to gather details from each product page. We’ll use Python, along with the Crawlbase Crawling API, to handle dynamic content.

Here’s an example function to scrape product information from a Temu product page:

```python
from crawlbase import CrawlingAPI
```

In this function, we use BeautifulSoup to parse the HTML and locate each element using the selectors identified. This returns a dictionary with the product details.
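Here is one way such a function could look, assuming BeautifulSoup with the built-in `html.parser`. The selectors are the ones noted during inspection; because Temu’s class names are generated and change over time, treat them as examples to re-check rather than fixed values. The function name `parse_product_page` and the returned keys are our own choices.

```python
from typing import Dict, List

from bs4 import BeautifulSoup

PRODUCT_SELECTORS = {
    "title": "div._2rn4tqXP",
    "price": "div._1vkz0rqG",
    "description": "div.B_OB3uj0",
    "images": 'div[role="button"].wxWpAMbp img',
}


def parse_product_page(html: str) -> Dict[str, object]:
    """Extract title, price, description and image URLs from a product page."""
    soup = BeautifulSoup(html, "html.parser")

    title = soup.select_one(PRODUCT_SELECTORS["title"])
    description = soup.select_one(PRODUCT_SELECTORS["description"])

    # Per the inspection step, the actual price is the last direct span child.
    price = None
    price_box = soup.select_one(PRODUCT_SELECTORS["price"])
    if price_box is not None:
        spans = price_box.find_all("span", recursive=False)
        if spans:
            price = spans[-1].get_text(strip=True)

    # Collect every gallery image that has a src attribute.
    images: List[str] = [
        img["src"] for img in soup.select(PRODUCT_SELECTORS["images"]) if img.get("src")
    ]

    return {
        "title": title.get_text(strip=True) if title is not None else None,
        "price": price,
        # Join the description's text pieces with single spaces.
        "description": description.get_text(" ", strip=True) if description is not None else None,
        "images": images,
    }
```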

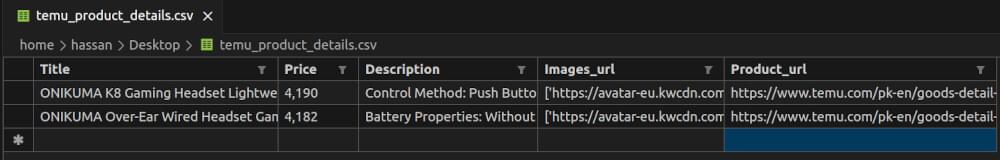

Storing Data in a CSV File

Once we’ve gathered product information, we can save it in a CSV file. This helps keep our data organized and easy to review or analyze later.

```python
import csv
```

This function writes each product’s information to a CSV file with columns for each detail.
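One detail the product CSV needs that the listing CSV does not: a product page can have several image URLs, but a CSV cell holds a single value. The sketch below (hypothetical function `save_product_details`) joins list values with a separator before writing; the `" | "` separator and the column names are assumptions.

```python
import csv
from typing import Dict, List

PRODUCT_FIELDS = ["title", "price", "description", "images"]


def save_product_details(products: List[Dict[str, object]], path: str, separator: str = " | ") -> None:
    """Write product details to CSV, joining list values (such as image URLs) into one cell."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=PRODUCT_FIELDS, extrasaction="ignore")
        writer.writeheader()
        for product in products:
            # Flatten list values; other values (including None) pass through unchanged.
            row = {
                key: separator.join(value) if isinstance(value, list) else value
                for key, value in product.items()
            }
            writer.writerow(row)
```

Pick a separator that cannot appear inside a URL, so the cell can be split back into a list later.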

Complete Code Example

Here’s the full script to scrape multiple product pages from Temu, using the URLs from the search listings, and save the data into a CSV file.

```python
from crawlbase import CrawlingAPI
```

temu_product_details.csv Snapshot:
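When looping over many product URLs, it helps to pause between requests and to keep going when a single page fails. The sketch below takes the fetch and parse steps as functions, so you can plug in your own Crawlbase request helper and BeautifulSoup parser. The name `scrape_product_pages` and the 2-second default delay are illustrative assumptions, not values recommended by Crawlbase or Temu.

```python
import time
from typing import Callable, Dict, Iterable, List, Optional


def scrape_product_pages(
    urls: Iterable[str],
    fetch: Callable[[str], Optional[str]],
    parse: Callable[[str], Dict[str, object]],
    delay_seconds: float = 2.0,
) -> List[Dict[str, object]]:
    """Fetch and parse each product URL in turn, pausing between requests.

    Failed fetches (fetch returns None) are skipped so one bad page does not
    stop the whole run. Each parsed record gets its source URL attached.
    """
    results = []
    for index, url in enumerate(urls):
        if index and delay_seconds > 0:
            time.sleep(delay_seconds)  # space out requests to keep the load light
        html = fetch(url)
        if html is None:
            continue
        results.append({**parse(html), "url": url})
    return results
```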

Final Thoughts

Scraping product data from Temu helps with analyzing market trends, tracking competitors, and studying pricing changes. This guide covered setting up a scraper for search listings and product pages, handling pagination, and saving data to a CSV file.

The Crawlbase Crawling API handles JavaScript-heavy content, which simplifies data collection. Remember to review Temu’s terms of service to avoid issues, and keep your request rate modest, as excessive scraping can strain their servers.

Test and update your code regularly, as website structures can change, requiring adjustments in CSS selectors or logic. If you’re interested in exploring scraping from other e-commerce platforms, feel free to explore the following comprehensive guides.

📜 How to Scrape Amazon

📜 How to Scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Zalando

📜 How to Scrape Costco

Contact our support if you have any questions. Happy scraping!

Frequently Asked Questions

Q. Is Temu safe and legit to scrape?

Scraping Temu’s data for personal research, analysis, or educational purposes is generally acceptable, but it’s crucial to follow Temu’s terms of service. Avoid heavy scraping that could impact their servers or violate policies. Always check their latest terms to stay compliant and consider ethical data scraping practices.

Q. How often should I update my scraping code for Temu?

Websites can change their structure, especially the HTML and CSS selectors, which can break your scraper. It’s a good idea to test your scraper regularly—at least once a month or when you notice that data isn’t being collected correctly. If your scraper stops working, inspect the site for updated selectors and adjust your code.
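A quick way to notice layout changes is to check whether each selector still matches anything on a freshly fetched page. The hypothetical helper below does that with BeautifulSoup. An empty result means every selector found at least one element; a non-empty result names the fields to re-inspect, or may indicate that the page did not render fully.

```python
from typing import Dict, List

from bs4 import BeautifulSoup


def missing_selectors(html: str, selectors: Dict[str, str]) -> List[str]:
    """Return the names of selectors that match nothing in the given HTML."""
    soup = BeautifulSoup(html, "html.parser")
    return [name for name, css in selectors.items() if soup.select_one(css) is None]
```

Running it against the selector dictionaries you use for listings and product pages before each large scrape turns a silent data gap into an explicit warning.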

Q. Can I store Temu data in a database instead of a CSV file?

Yes, storing scraped data in a database (like MySQL or MongoDB) is a good option for bigger projects. Databases make it easier to query and analyze data over time. You can replace the CSV storage step in your code with database commands and have a more efficient and scalable setup.
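As a small illustration, the sketch below uses SQLite through Python’s built-in `sqlite3` module as a stand-in for MySQL or MongoDB, since it needs no server. It stores each scrape as a new snapshot row so price changes can be queried over time. The table and function names are hypothetical, and prices are kept as the scraped text.

```python
import sqlite3
from typing import Dict, Iterable, List, Optional, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS product_snapshots (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    product_url TEXT NOT NULL,
    name        TEXT,
    price       TEXT,
    scraped_at  TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""


def save_snapshots(conn: sqlite3.Connection, products: Iterable[Dict[str, Optional[str]]]) -> int:
    """Append one row per product that has a URL; return the number of rows written."""
    conn.execute(SCHEMA)
    rows = [
        (p["product_url"], p.get("name"), p.get("price"))
        for p in products
        if p.get("product_url")
    ]
    conn.executemany(
        "INSERT INTO product_snapshots (product_url, name, price) VALUES (?, ?, ?)", rows
    )
    conn.commit()
    return len(rows)


def price_history(conn: sqlite3.Connection, product_url: str) -> List[Tuple[str, Optional[str]]]:
    """Return (scraped_at, price) pairs for one product, oldest first."""
    cursor = conn.execute(
        "SELECT scraped_at, price FROM product_snapshots WHERE product_url = ? ORDER BY id",
        (product_url,),
    )
    return cursor.fetchall()
```

With a file path such as `sqlite3.connect("temu.db")` the data persists between runs; moving to MySQL mostly means swapping the connection and replacing the `?` placeholders with the style your driver uses (often `%s`).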