Rottentomatoes.com is a popular website for movie ratings and reviews. It covers movies and TV shows with both critic and audience opinions, which makes its data valuable for movie lovers, researchers, and developers.

This blog will show you how to scrape Rotten Tomatoes to get movie ratings using Python. Since Rotten Tomatoes uses JavaScript rendering, we will use the Crawlbase Crawling API to handle the dynamic content loading. By the end of this blog, you will know how to extract key movie data like ratings, release dates, and reviews and store it in a structured format like JSON.

Here’s a detailed tutorial on how to scrape Rotten Tomatoes:

Table of Contents

- Why Scrape Rotten Tomatoes for Movie Ratings?
- Key Data Points to Extract from Rotten Tomatoes
- Crawlbase Crawling API for Rotten Tomatoes Scraping
- Setting Up Your Python Environment
  - Installing Python and Required Libraries
  - Setting Up a Virtual Environment
  - Choosing an IDE
- Scraping Rotten Tomatoes Movie Listings
  - Inspecting the HTML Structure
  - Writing the Rotten Tomatoes Movie Listings Scraper
  - Handling Pagination
  - Storing Data in a JSON File
  - Complete Code Example
- Scraping Rotten Tomatoes Movie Details
  - Inspecting the HTML Structure
  - Writing the Rotten Tomatoes Movie Details Scraper
  - Storing Movie Details in a JSON File
  - Complete Code Example
- Scrape Movie Ratings on Rotten Tomatoes with Crawlbase
- Frequently Asked Questions

Why Scrape Rotten Tomatoes for Movie Ratings?

Rotten Tomatoes is a reliable source for movie ratings and reviews, so it’s a good website to scrape for movie data. Whether you’re a movie lover, a data analyst, or a developer, scraping Rotten Tomatoes can give you insights into movie trends, audience preferences, and critic ratings.

Here are a few reasons why you should scrape Rotten Tomatoes for movie ratings.

- Access to Critic Reviews: Rotten Tomatoes provides critic reviews, allowing you to see how individual professionals perceive the movie.

- Audience Scores: Get audience ratings, which show how the general public feels about a movie.

- Film Details: Rotten Tomatoes offers data such as titles, genres, release dates, and more.

- Popularity Tracking: By scraping ratings over time, you can monitor trends in genres, directors, or actors’ popularity.

- Create a Personal Movie Database: Gather ratings and reviews to build a custom database for research, recommendations, or personal projects.

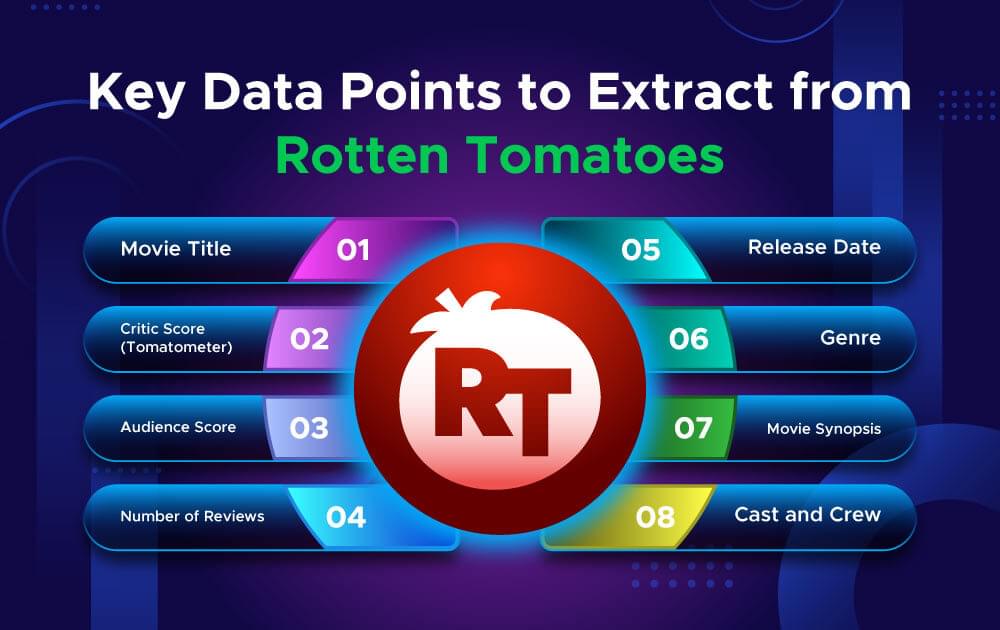

Key Data Points to Extract from Rotten Tomatoes

When scraping movie ratings from Rotten Tomatoes, focus on the most important data points. Together they describe a movie’s reception, popularity, and performance. Here are the data points you should extract:

- Movie Title: The title identifies each movie, so you can organize your scraped data by film.

- Critic Score (Tomatometer): Rotten Tomatoes calls its critics score the ‘Tomatometer’. It is computed from reviews by approved critics and gives an overall picture of a movie’s critical consensus.

- Audience Score: The audience score reflects how the general public rates a movie, which makes it useful for comparing professional and public opinion across movies.

- Number of Reviews: Critic and audience scores are calculated from the reviews available, so the number of reviews tells you how reliable a score is. Extract it alongside the scores.

- Release Date: The release date lets you compare movies from different years and analyze changes over time.

- Genre: Rotten Tomatoes classifies movies by genre, such as drama, action, or comedy, so you can group ratings according to viewers’ interests.

- Movie Synopsis: The brief synopsis gives you background on the movie’s storyline and themes.

- Cast and Crew: Rotten Tomatoes lists the cast and crew of each movie. This data is useful for tracking the movies of a particular director, actor, or writer.
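To keep these data points consistent between the listing scraper and the detail scraper, it can help to define them once. Below is a minimal sketch using a Python dataclass; the class name `MovieRecord` and its field names are hypothetical choices, not something Rotten Tomatoes or Crawlbase defines. Scores are kept as strings because they are scraped as text (for example "91%").

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class MovieRecord:
    """One movie's data points (hypothetical schema for illustration)."""
    title: str
    critics_score: Optional[str] = None   # Tomatometer, scraped as text
    audience_score: Optional[str] = None  # audience score, scraped as text
    review_count: Optional[int] = None    # number of reviews behind the score
    release_date: Optional[str] = None
    genres: List[str] = field(default_factory=list)
    synopsis: Optional[str] = None
    cast_and_crew: List[str] = field(default_factory=list)


record = MovieRecord(title="Example Movie", critics_score="91%", genres=["Drama"])
print(asdict(record)["genres"])  # ['Drama']
```

`asdict` turns a record into a plain dictionary, which `json.dump` can write directly.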

Crawlbase Crawling API for Rotten Tomatoes Scraping

We will use the Crawlbase Crawling API to get movie ratings and other data from Rotten Tomatoes. Scraping Rotten Tomatoes with simple HTTP requests is hard because the website loads its content dynamically with JavaScript. The Crawlbase Crawling API is designed to handle dynamic, JavaScript-heavy websites, which makes it a fast and easy way to scrape Rotten Tomatoes.

Why Scrape Rotten Tomatoes with Crawlbase?

Rotten Tomatoes pages use JavaScript to load dynamic content, including audience scores, reviews, and ratings. Standard web scraping libraries like requests struggle with such websites because they only download the initial HTML and cannot execute JavaScript. The Crawlbase Crawling API solves this by rendering the JavaScript server-side and returning the fully loaded HTML, complete with all the necessary data.

Here’s why Crawlbase is a solid choice for scraping Rotten Tomatoes:

- JavaScript Rendering: It automatically deals with pages that depend on JavaScript to load content, like ratings or reviews.

- Built-in Proxies: Crawlbase includes rotating proxies to avoid IP blocks and captchas, keeping your scraping smooth.

- Customizable Parameters: You can adjust API parameters like `ajax_wait` and `page_wait` to make sure every piece of content is fully loaded before you start scraping.

- Reliable and Fast: Designed for efficiency, Crawlbase lets you scrape large datasets from Rotten Tomatoes quickly, with minimal interruptions.

Crawlbase Python Library

Crawlbase provides its own Python library to simplify requests. To use it, you’ll need an access token from Crawlbase, which you can obtain by registering an account.

Here’s a sample function to send requests with the Crawlbase Crawling API:

```python
from crawlbase import CrawlingAPI
```
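A minimal sketch of such a request helper, assuming the `crawlbase` package’s `CrawlingAPI` class, its `get(url, options)` method, and a dictionary response with `status_code` and `body` keys. `YOUR_JS_TOKEN` is a placeholder, and the option values are illustrative defaults rather than recommended settings. The optional `api` argument lets you pass in a stand-in object, so the function can be tried without network access.

```python
from typing import Any, Optional

# Placeholder: replace with your own Crawlbase JS token.
CRAWLBASE_TOKEN = "YOUR_JS_TOKEN"

# Wait for AJAX requests and give the page extra render time (milliseconds).
DEFAULT_OPTIONS = {"ajax_wait": "true", "page_wait": 5000}


def fetch_html(url: str, options: Optional[dict] = None, api: Any = None) -> Optional[str]:
    """Fetch a rendered page through the Crawling API; return HTML or None."""
    if api is None:
        # Imported lazily so this module loads even where crawlbase is absent.
        from crawlbase import CrawlingAPI
        api = CrawlingAPI({"token": CRAWLBASE_TOKEN})

    response = api.get(url, options if options is not None else DEFAULT_OPTIONS)
    if response.get("status_code") != 200:
        print(f"Request failed for {url}: {response.get('status_code')}")
        return None

    body = response.get("body", b"")
    # Decode bytes to str if needed so BeautifulSoup receives text.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

Returning `None` on failure keeps the caller’s check simple: skip the page or retry it.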

Note: For static sites, use the Normal Token. For dynamic sites like Rotten Tomatoes, use the JS Token. Crawlbase offers 1,000 free requests to get started, with no credit card needed. For more details, refer to the Crawlbase Crawling API documentation.

In the next sections we will cover how to set up your Python environment and the code to scrape movie data using Crawlbase Crawling API.

Setting Up Your Python Environment

To scrape data from Rotten Tomatoes, the first thing you’ll need to do is set up your Python environment. This includes installing Python, creating a virtual environment, and ensuring all the libraries you need are in place.

Installing Python

The first step is making sure Python is installed on your system. Head over to the official Python website to download the latest version. Remember to choose the version that matches your operating system (Windows, macOS or Linux).

Setting Up a Virtual Environment

Using a virtual environment is a smart way to manage your project dependencies. It helps you keep things clean and prevents conflicts with other projects. Here’s how you can do it:

1. Open your terminal or command prompt.

2. Go to your project folder.

3. Run the following command to create a virtual environment:

```bash
python -m venv myenv
```

4. Activate the virtual environment:

On Windows:

```bat
myenv\Scripts\activate
```

On macOS/Linux:

```bash
source myenv/bin/activate
```

Installing Required Libraries

Next, install the required libraries: Crawlbase for fetching pages and BeautifulSoup for parsing HTML. Run the following command in your terminal:

```bash
pip install crawlbase beautifulsoup4
```

- Crawlbase: The client library for Crawlbase products, including the Crawling API.

- BeautifulSoup: For parsing HTML and extracting the required data.

Choosing an IDE

To write and run your Python scripts smoothly, use a good IDE (Integrated Development Environment). Here are a few:

- Visual Studio Code: Lightweight, highly customizable, and popular with many developers.

- PyCharm: A full-featured IDE built specifically for Python.

- Jupyter Notebook: For interactive coding and quick tests.

Now that your environment is set up, let’s start scraping Rotten Tomatoes for movie ratings with Python. The next section covers the code that extracts movie ratings and other information from Rotten Tomatoes.

Scraping Rotten Tomatoes Movie Listings

Here we will scrape movie listings from Rotten Tomatoes. We will inspect the HTML, write the scraper, handle pagination, and save the data, using the Crawlbase Crawling API to render the JavaScript-driven content.

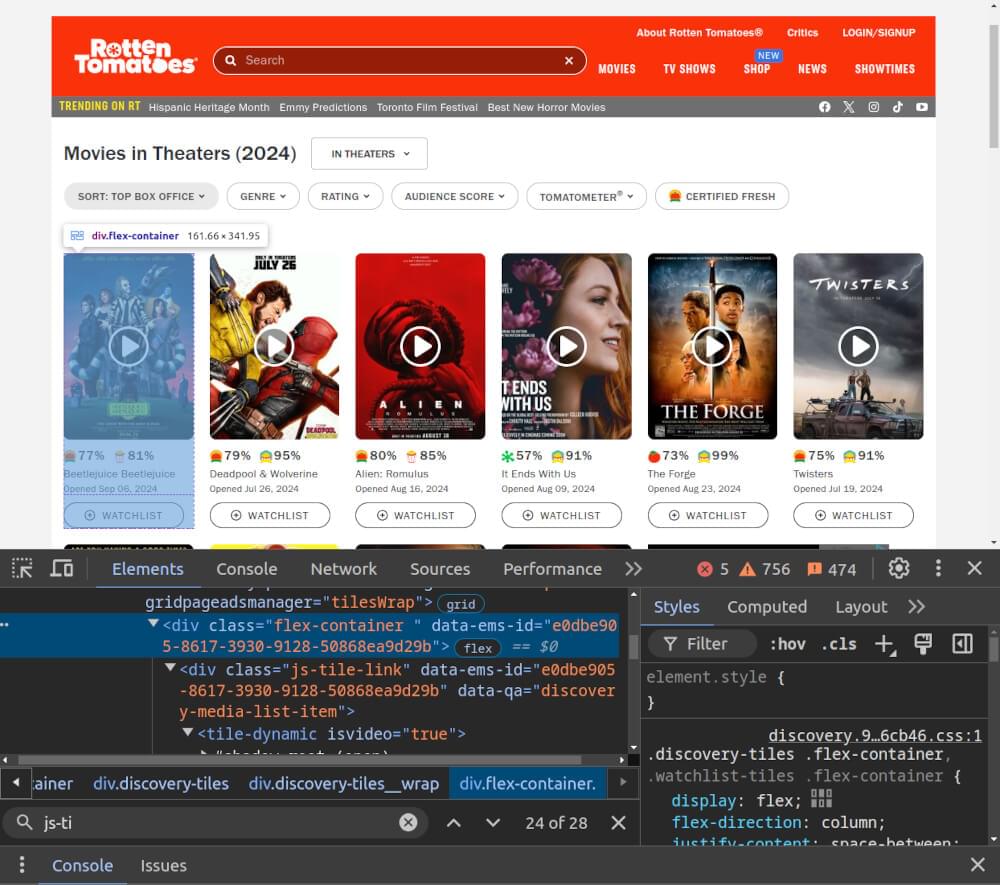

Inspecting the HTML Structure

Before we write the scraper, we need to inspect the Rotten Tomatoes page to see how it is structured, so we know which elements to target.

- Open the Rotten Tomatoes page: Go to the page you want to scrape. In this example, we scrape the Top Box Office movie list.

- Open Developer Tools: Right-click on the page and select “Inspect” or press `Ctrl+Shift+I` (Windows) or `Cmd+Option+I` (Mac).

- Identify the Movie Container: Movies on Rotten Tomatoes are usually inside elements with the class `flex-container`, which sit inside a parent element with the attribute `data-qa="discovery-media-list"`.

- Locate Key Data:

  - Title: Usually inside a `<span>` with an attribute like `data-qa="discovery-media-list-item-title"`. This is the movie title.

  - Critics Score: Inside an `rt-text` element with `slot="criticsScore"`. This is the critics score for the movie.

  - Audience Score: Also inside an `rt-text` element, but with `slot="audienceScore"`. This is the audience score for the movie.

  - Link: The movie link is usually inside an `<a>` tag whose `data-qa` attribute starts with `discovery-media-list-item`. Extract the `href` attribute from this element to get the link to the movie’s page.

Writing the Rotten Tomatoes Movie Listings Scraper

Now that we know the structure, let’s write the scraper. We will use the Crawlbase Crawling API to fetch the HTML and BeautifulSoup to parse the page and extract titles, ratings, and links.

```python
from crawlbase import CrawlingAPI
```
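A minimal sketch of the parsing step, assuming the rendered HTML has already been fetched through the Crawling API. The selectors mirror the structure described in the inspection step and may break if Rotten Tomatoes changes its markup. The names `parse_movie_listings`, `_text`, and `BASE_URL` are hypothetical.

```python
from typing import Dict, List, Optional
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.rottentomatoes.com"


def _text(tile, selector: str) -> Optional[str]:
    """Return the stripped text of the first match inside a tile, or None."""
    element = tile.select_one(selector)
    return element.get_text(strip=True) if element else None


def parse_movie_listings(html: str) -> List[Dict[str, Optional[str]]]:
    """Extract title, scores, and link from each movie tile on a listing page."""
    soup = BeautifulSoup(html, "html.parser")
    movies = []
    for tile in soup.select('[data-qa="discovery-media-list"] .flex-container'):
        link = tile.select_one('a[data-qa^="discovery-media-list-item"]')
        href = link.get("href") if link else None
        movies.append({
            "title": _text(tile, 'span[data-qa="discovery-media-list-item-title"]'),
            "critics_score": _text(tile, 'rt-text[slot="criticsScore"]'),
            "audience_score": _text(tile, 'rt-text[slot="audienceScore"]'),
            # Links may be relative (e.g. /m/...), so resolve them against the site root.
            "link": urljoin(BASE_URL, href) if href else None,
        })
    return movies
```

Missing elements become `None` instead of raising, so a tile without an audience score does not stop the whole page from parsing.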

Handling Pagination

Rotten Tomatoes uses button-based pagination for its movie listings: more movies appear when you click the “Load More” button. The Crawlbase Crawling API can click that button for us through its `css_click_selector` parameter.

```python
def fetch_html_with_pagination(url):
    ...
```
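A minimal sketch of such a function, assuming the `crawlbase` package’s `CrawlingAPI.get(url, options)` and the `css_click_selector` parameter mentioned above. The button selector `button[data-qa="dlp-load-more-button"]` is an assumption; confirm the real selector in Developer Tools before relying on it. `YOUR_JS_TOKEN` is a placeholder, and `pagination_options` is a hypothetical helper.

```python
from typing import Any, Optional

CRAWLBASE_TOKEN = "YOUR_JS_TOKEN"  # placeholder: your Crawlbase JS token

# Assumed selector for the "Load More" button; verify it in DevTools.
LOAD_MORE_SELECTOR = 'button[data-qa="dlp-load-more-button"]'


def pagination_options(click_selector: str = LOAD_MORE_SELECTOR) -> dict:
    """Crawling API options that click a button and allow time for rendering."""
    return {
        "ajax_wait": "true",
        "page_wait": 5000,  # extra render time (ms) before the HTML is captured
        "css_click_selector": click_selector,
    }


def fetch_html_with_pagination(url: str, api: Any = None) -> Optional[str]:
    """Fetch a listing page after the API clicks the "Load More" button."""
    if api is None:
        from crawlbase import CrawlingAPI  # lazy import
        api = CrawlingAPI({"token": CRAWLBASE_TOKEN})
    response = api.get(url, pagination_options())
    if response.get("status_code") != 200:
        print(f"Failed to fetch {url}: {response.get('status_code')}")
        return None
    body = response.get("body", b"")
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

Keeping the options in a separate function makes it easy to swap in a different selector if the button’s markup changes.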

This code will click the “Load More” button to load more movie listings before scraping the data.

Storing Data in a JSON File

After scraping the movie data, we can save it to a file in a structured format like JSON.

```python
import json
```
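A minimal sketch of the saving step using only the standard `json` module. The function name `save_to_json` is hypothetical. `ensure_ascii=False` keeps non-ASCII characters, such as accented titles, readable in the file, and `indent=4` makes the output easy to inspect.

```python
import json
from typing import Any


def save_to_json(data: Any, filename: str) -> None:
    """Write scraped data to a UTF-8 JSON file with readable indentation."""
    with open(filename, "w", encoding="utf-8") as f:
        json.dump(data, f, ensure_ascii=False, indent=4)
```

For example, `save_to_json(movies, "rotten_tomatoes_movies.json")` writes a list of movie dictionaries; the filename is only an example.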

Complete Code Example

Here is the full code that brings it all together, fetching the HTML, parsing the movie data, handling pagination and saving the results to a JSON file.

```python
from crawlbase import CrawlingAPI
```
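A self-contained sketch of the full listing scraper, assuming the `crawlbase` package’s `CrawlingAPI.get(url, options)` with a dictionary response, the selectors from the inspection step, and an assumed “Load More” selector (`button[data-qa="dlp-load-more-button"]`) that you should verify in Developer Tools. The function names and `YOUR_JS_TOKEN` are placeholders, not part of any official example.

```python
import json
from typing import Any, Dict, List, Optional
from urllib.parse import urljoin

from bs4 import BeautifulSoup

CRAWLBASE_TOKEN = "YOUR_JS_TOKEN"  # placeholder: your Crawlbase JS token
BASE_URL = "https://www.rottentomatoes.com"
# Assumed "Load More" button selector; verify it in DevTools.
LOAD_MORE_SELECTOR = 'button[data-qa="dlp-load-more-button"]'


def fetch_html_with_pagination(url: str, api: Any = None) -> Optional[str]:
    """Fetch the rendered listing page after clicking "Load More"."""
    if api is None:
        from crawlbase import CrawlingAPI  # lazy import
        api = CrawlingAPI({"token": CRAWLBASE_TOKEN})
    options = {
        "ajax_wait": "true",
        "page_wait": 5000,
        "css_click_selector": LOAD_MORE_SELECTOR,
    }
    response = api.get(url, options)
    if response.get("status_code") != 200:
        print(f"Failed to fetch {url}: {response.get('status_code')}")
        return None
    body = response.get("body", b"")
    return body.decode("utf-8") if isinstance(body, bytes) else body


def parse_movie_listings(html: str) -> List[Dict[str, Optional[str]]]:
    """Extract title, critics score, audience score, and link per movie tile."""
    soup = BeautifulSoup(html, "html.parser")
    movies = []
    for tile in soup.select('[data-qa="discovery-media-list"] .flex-container'):
        title = tile.select_one('span[data-qa="discovery-media-list-item-title"]')
        critics = tile.select_one('rt-text[slot="criticsScore"]')
        audience = tile.select_one('rt-text[slot="audienceScore"]')
        link = tile.select_one('a[data-qa^="discovery-media-list-item"]')
        movies.append({
            "title": title.get_text(strip=True) if title else None,
            "critics_score": critics.get_text(strip=True) if critics else None,
            "audience_score": audience.get_text(strip=True) if audience else None,
            "link": urljoin(BASE_URL, link["href"]) if link and link.get("href") else None,
        })
    return movies


def save_to_json(data: Any, filename: str) -> None:
    """Write data to a UTF-8 JSON file."""
    with open(filename, "w", encoding="utf-8") as f:
        json.dump(data, f, ensure_ascii=False, indent=4)


def scrape_listings(url: str, filename: str, api: Any = None) -> List[Dict[str, Optional[str]]]:
    """Fetch, parse, and save one listing page; return the parsed movies."""
    html = fetch_html_with_pagination(url, api=api)
    movies = parse_movie_listings(html) if html else []
    save_to_json(movies, filename)
    return movies
```

To run it, call `scrape_listings(url, "rotten_tomatoes_movies.json")` with the listing URL you inspected; for the Top Box Office list this is assumed to be `https://www.rottentomatoes.com/browse/movies_in_theaters/sort:top_box_office`, so check it in your browser first.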

Example Output:

1 | [ |

In the next section, we will discuss scraping individual movie details.

Scraping Rotten Tomatoes Movie Details

Now, we’ll move on to scraping individual movie details from Rotten Tomatoes. Once you have the movie listings, you can extract detailed information for each movie, such as its release date, director, and genre. This part walks you through inspecting the HTML of a movie page, writing the scraper, and saving the data to a JSON file.

Inspecting the HTML Structure

Before we start writing the scraper, we need to inspect the HTML structure of a specific movie’s page.

- Open Movie Page: Go to a movie’s page from the list you scraped earlier.

- Open Developer Tools: Right-click on the page and select “Inspect” or press `Ctrl+Shift+I` (Windows) or `Cmd+Option+I` (Mac).

- Locate Key Data:

  - Title: Usually in an element with a `slot="titleIntro"` attribute.

  - Synopsis: In an element with the class `synopsis-wrap`, inside the `rt-text` element that does not have the `key` class.

  - Movie Details: These are in a list format with `<dt>` elements (keys) and `<dd>` elements (values). The values are usually in `rt-link` and `rt-text` tags.

Writing the Rotten Tomatoes Movie Details Scraper

Now that we know the HTML structure, we can write the scraper to get the details from each movie page.

```python
from crawlbase import CrawlingAPI
```
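A minimal sketch of the parsing half of such a scraper, assuming the movie page HTML has already been fetched through the Crawling API with a JS token. The name `parse_movie_details` is hypothetical, and the selectors follow the structure described above, which may change. Instead of hard-coding fields like director or genre, it collects every `<dt>`/`<dd>` pair, so those fields appear under whatever labels the page uses.

```python
from typing import Dict, List

from bs4 import BeautifulSoup


def parse_movie_details(html: str) -> Dict[str, object]:
    """Extract title, synopsis, and the <dt>/<dd> detail pairs from a movie page."""
    soup = BeautifulSoup(html, "html.parser")

    title = soup.select_one('[slot="titleIntro"]')
    # The synopsis text sits in the rt-text element without the "key" class.
    synopsis = soup.select_one(".synopsis-wrap rt-text:not(.key)")

    details: Dict[str, List[str]] = {}
    for dt in soup.find_all("dt"):
        dd = dt.find_next_sibling("dd")
        if dd is None:
            continue
        key = dt.get_text(strip=True).rstrip(":")
        values = [el.get_text(strip=True) for el in dd.find_all(["rt-link", "rt-text"])]
        # Drop empty strings and bare commas, in case separators have their own tags.
        values = [v for v in values if v not in ("", ",")]
        details[key] = values or [dd.get_text(strip=True)]

    return {
        "title": title.get_text(strip=True) if title else None,
        "synopsis": synopsis.get_text(strip=True) if synopsis else None,
        "details": details,
    }
```

If a page labels its rows “Director” and “Genre”, those values end up under `result["details"]["Director"]` and `result["details"]["Genre"]`.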

The `fetch_movie_details` function fetches the movie title, release date, director, and genres from a movie URL. It uses BeautifulSoup to parse the HTML and structures the data into a dictionary.

Storing Movie Details in a JSON File

After scraping the movie details, you will want to save the data in a structured format like JSON. Here is the code to save the movie details to a JSON file.

```python
import json
```
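When details are scraped one movie at a time, appending each result to a JSON array on disk means earlier results survive if a later request fails. A minimal sketch using only the standard library; the function name `append_movie_details` is hypothetical. Rewriting the whole file on every call is fine for a few hundred movies but becomes slow for large datasets.

```python
import json
import os
from typing import Any, Dict, List


def append_movie_details(details: Dict[str, Any], filename: str) -> List[Dict[str, Any]]:
    """Add one movie's details to a JSON array file, creating it if needed."""
    records: List[Dict[str, Any]] = []
    if os.path.exists(filename):
        with open(filename, encoding="utf-8") as f:
            records = json.load(f)
    records.append(details)
    with open(filename, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=4)
    return records
```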

Complete Code Example

Here is the complete code to scrape movie details from Rotten Tomatoes and save them to a JSON file.

```python
from crawlbase import CrawlingAPI
```
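A self-contained sketch of the full details scraper, assuming the `crawlbase` package’s `CrawlingAPI.get(url, options)` with a dictionary response and the selectors described in the inspection step. It visits each movie URL, pauses between requests, and writes all results to one JSON file. The function names, the placeholder token, and the two-second default delay are illustrative choices, not Crawlbase requirements.

```python
import json
import time
from typing import Any, Dict, Iterable, List, Optional

from bs4 import BeautifulSoup

CRAWLBASE_TOKEN = "YOUR_JS_TOKEN"  # placeholder: your Crawlbase JS token


def fetch_html(url: str, api: Any) -> Optional[str]:
    """Fetch a rendered page; return HTML or None on a non-200 status."""
    response = api.get(url, {"ajax_wait": "true", "page_wait": 5000})
    if response.get("status_code") != 200:
        print(f"Failed to fetch {url}: {response.get('status_code')}")
        return None
    body = response.get("body", b"")
    return body.decode("utf-8") if isinstance(body, bytes) else body


def parse_movie_details(html: str) -> Dict[str, Any]:
    """Extract title, synopsis, and <dt>/<dd> detail pairs."""
    soup = BeautifulSoup(html, "html.parser")
    title = soup.select_one('[slot="titleIntro"]')
    synopsis = soup.select_one(".synopsis-wrap rt-text:not(.key)")
    details: Dict[str, List[str]] = {}
    for dt in soup.find_all("dt"):
        dd = dt.find_next_sibling("dd")
        if dd is None:
            continue
        values = [el.get_text(strip=True) for el in dd.find_all(["rt-link", "rt-text"])]
        values = [v for v in values if v not in ("", ",")]  # skip bare separators
        details[dt.get_text(strip=True).rstrip(":")] = values or [dd.get_text(strip=True)]
    return {
        "title": title.get_text(strip=True) if title else None,
        "synopsis": synopsis.get_text(strip=True) if synopsis else None,
        "details": details,
    }


def scrape_movie_details(urls: Iterable[str], filename: str,
                         api: Any = None, delay: float = 2.0) -> List[Dict[str, Any]]:
    """Scrape each movie URL, pausing between requests, and save all results."""
    if api is None:
        from crawlbase import CrawlingAPI  # lazy import
        api = CrawlingAPI({"token": CRAWLBASE_TOKEN})
    results = []
    for i, url in enumerate(urls):
        if i:
            time.sleep(delay)  # throttle so requests are spaced out
        html = fetch_html(url, api)
        if html:
            movie = parse_movie_details(html)
            movie["url"] = url
            results.append(movie)
    with open(filename, "w", encoding="utf-8") as f:
        json.dump(results, f, ensure_ascii=False, indent=4)
    return results
```

You can feed it the `link` values collected by the listing scraper, for example `scrape_movie_details(urls, "movie_details.json")`. Failed pages are skipped rather than stopping the run.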

Example Output:

1 | { |

Scrape Movie Ratings on Rotten Tomatoes with Crawlbase

Scraping movie ratings from Rotten Tomatoes has many uses, from data analysis and research to personal projects, and it shows you what’s popular. Because the Crawlbase Crawling API handles dynamic content, you can get the data you need, whether that’s public opinion, box office stats, or movie details for any project you like.

This blog showed you how to scrape movie listings and ratings and get details like release dates, directors, and genres. We used Python, Crawlbase, BeautifulSoup, and JSON to gather and organize the data so you can use and study it.

If you want to do more web scraping, check out our guides on scraping other key websites.

📜 How to Scrape Monster.com

📜 How to Scrape Groupon

📜 How to Scrape TechCrunch

📜 How to Scrape Clutch.co

If you have any questions or feedback, our support team can help you with web scraping. Happy scraping!

Frequently Asked Questions

Q. How do I scrape Rotten Tomatoes if the site changes its layout?

If Rotten Tomatoes alters its layout or HTML, your scraper may stop working. To address this:

- Keep an eye on the site for any changes.

- Examine the new HTML and revise your CSS selectors.

- Make changes to your scraper’s code.

Q. What should I keep in mind when scraping Rotten Tomatoes?

When you scrape Rotten Tomatoes or similar websites:

- Check robots.txt: Make sure the site allows scraping by checking its `robots.txt` file.

- Use throttling: Add some time between requests to avoid overwhelming the server and reduce your chances of getting blocked.

- Deal with errors: Include ways to handle request failures or changes in how the site is built.
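The throttling and error-handling advice above can be combined in a small retry wrapper. A minimal sketch, assuming `fetch` is any function you supply that returns the page HTML or `None` (for example a Crawling API wrapper); the name `fetch_with_retries` and the delay values are hypothetical. It catches all exceptions for simplicity; in practice you may want to catch only network-related ones.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def fetch_with_retries(fetch: Callable[[], Optional[T]], retries: int = 3,
                       base_delay: float = 2.0) -> Optional[T]:
    """Call `fetch` until it returns a non-None result, backing off after failures."""
    for attempt in range(retries):
        try:
            result = fetch()
            if result is not None:
                return result
        except Exception as exc:  # e.g. timeouts or connection errors
            print(f"Attempt {attempt + 1} failed: {exc}")
        if attempt < retries - 1:
            # Exponential backoff: base_delay, then 2x, then 4x, ...
            time.sleep(base_delay * (2 ** attempt))
    return None
```

Wrapping a call as `fetch_with_retries(lambda: my_fetch(url))` retries a failed page a few times before giving up, with the wait doubling after each failure.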

Q. How do I manage pagination with Crawlbase Crawling API while scraping Rotten Tomatoes?

Rotten Tomatoes may use different ways to show more content, like “Load More” buttons or endless scrolling.

- For Buttons: Use the `css_click_selector` parameter in the Crawlbase Crawling API to click the “Load More” button.

- For Infinite Scrolling: Use the `page_wait` or `ajax_wait` parameter in the Crawlbase Crawling API to wait for all content to load before you capture the page.