Farfetch is one of the biggest luxury fashion platforms, with thousands of high-end clothing, shoes, and accessories from top brands around the world. Whether you are doing market research, analyzing luxury fashion trends, or building your e-commerce database, scraping data from Farfetch can be very useful.

However, like many other websites, Farfetch uses JavaScript to load its content, so traditional scrapers that only fetch static HTML can’t reach the retail data you need. That’s where the Crawlbase Crawling API comes in. It makes scraping easy by handling JavaScript content, managing proxies, and bypassing anti-bot mechanisms – all with just a few lines of code in Python.

In this blog, we’ll scrape Farfetch SERP and product pages with the Crawlbase Crawling API in Python.

Table of Contents

- Benefits of Farfetch Data Scraping

- Key Data Points to Extract from Farfetch

- Crawlbase Crawling API for Farfetch Scraping

  - Crawlbase Python Library

- How to Set Up Your Python Environment

  - Installing Python and Required Libraries

  - Choosing an IDE

- Scraping Farfetch Search Results

  - Inspecting the HTML Structure

  - Writing the Search Listings Scraper

  - Handling Pagination in Farfetch

  - Storing Data in a CSV File

  - Complete Code Example

- Scraping Farfetch Product Pages

  - Inspecting the HTML for CSS Selectors

  - Writing the Product Page Scraper

  - Storing Data in a CSV File

  - Complete Code Example

- Final Thoughts

- Frequently Asked Questions

Benefits of Farfetch Data Scraping

One of the largest players in the luxury fashion market, Farfetch connects consumers with shops and high-end brands. Farfetch is a treasure trove for companies, academics, and developers, with thousands of products ranging from high-end clothing to accessories.

Scraping Farfetch will give you insights into:

- Pricing Trends: How luxury products are priced across brands, categories, and regions.

- Product Availability: Track stock levels and availability to see what’s selling fast and what brands are popular.

- Market Trends: Find fashion trends, seasonal drops, and new brands.

- Competitor Analysis: Compare pricing, product descriptions and discounts with competitors.

- Building Databases: Build a clean database of products with titles, descriptions, prices, images and more.

Key Data Points to Extract from Farfetch

When scraping Farfetch, focus on these data points:

- Blurb: A short description to help you identify the product.

- Brand: Track and identify the luxury brands on the platform.

- Price: Get both original and discounted prices.

- Product Description: Collect info on materials and features for cataloging.

- Sizes and Availability: Monitor stock status and demand for popular sizes.

- Categories: Analyze trends within specific product segments.

- Images: Extract URLs of product images for visual databases.

- Ratings and Reviews: Understand customer preferences and assess product quality.

- Regional Pricing: Compare prices across various currencies and regions.

- Delivery Options: Evaluate shipping times and costs.

By collecting these data points, you can get insights for market research and business growth. Now, let’s see how to scrape Farfetch with Crawlbase Crawling API.

Crawlbase Crawling API for Farfetch Scraping

The Crawlbase Crawling API is a web scraping tool that makes data extraction from Farfetch easy. It handles JavaScript rendering, proxies and CAPTCHA solving so you can focus on building your scraper without the technical headaches.

Crawlbase Python Library

Crawlbase also has a Python library to make API integration easy. Once you sign up, you will receive an access token for authentication. Here’s a quick example of how to use it:

    from crawlbase import CrawlingAPI

Key Points:

- Crawlbase has separate tokens for static and dynamic content scraping.

- Use a JavaScript (JS) token for scraping Farfetch dynamic content.

- Crawlbase Crawling API takes care of JavaScript rendering and proxies for you.
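As a rough illustration of the points above, the sketch below wraps a Crawling API call in a small helper. It assumes the client's `get(url, options)` method returns a dictionary with `status_code` and `body` keys; `make_client`, `fetch_html`, and the `YOUR_CRAWLBASE_JS_TOKEN` placeholder are names invented here for illustration, not part of the library.

```python
def make_client(js_token):
    """Create a Crawlbase client (requires `pip install crawlbase`)."""
    # Imported lazily so fetch_html can be exercised without the package.
    from crawlbase import CrawlingAPI
    return CrawlingAPI({"token": js_token})


def fetch_html(client, url, options=None):
    """Return the page HTML as text, or None if the request did not succeed.

    Assumption: `client.get` returns a dict with 'status_code' and 'body'.
    """
    response = client.get(url, options or {})
    if response.get("status_code") != 200:
        return None
    body = response.get("body", "")
    # The body may arrive as bytes; decode it so it can be parsed as text.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

With a real token, a call might look like `fetch_html(make_client("YOUR_CRAWLBASE_JS_TOKEN"), url, {"ajax_wait": "true"})`. Passing the client in as an argument keeps the helper easy to test with a stand-in object.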

How to Set Up Your Python Environment

Before you start scraping Farfetch, you need to set up your Python environment. This section walks you through installing Python, setting up the required libraries, and choosing an IDE that fits your needs.

Installing Python and Required Libraries

- Install Python:

  - Go to python.org and download the latest version of Python.

  - Make sure to check the option “Add Python to PATH” during the installation.

- Install Required Libraries:

  - Open a terminal or command prompt and run `pip install crawlbase beautifulsoup4`.

  - These libraries are crucial for web scraping and utilizing the Crawlbase Crawling API.
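To confirm the installation worked, a quick check like the following can help. It is only a sketch: `missing_packages` and `REQUIRED_MODULES` are hypothetical names, and the one fact it relies on is that the `beautifulsoup4` package is imported as `bs4`.

```python
import importlib.util

# Import names can differ from pip names: beautifulsoup4 is imported as bs4.
REQUIRED_MODULES = {"crawlbase": "crawlbase", "bs4": "beautifulsoup4"}


def missing_packages(required=REQUIRED_MODULES):
    """Return the pip package names whose import module cannot be found."""
    return [
        pip_name
        for module_name, pip_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]
```

Calling `missing_packages()` returns an empty list when both libraries are available, or the names to pass to `pip install` otherwise.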

Choosing an IDE

To write and manage your code, you’ll want an IDE or code editor. Here are some options:

- PyCharm: A full-featured IDE with advanced debugging and code navigation tools.

- Visual Studio Code: A lightweight and customizable editor with extensions for Python.

- Jupyter Notebook: For testing and running code snippets interactively.

Choose the IDE that suits you, and you’ll be good to go. In the next section, we’ll get into scraping Farfetch’s search listings.

Scraping Farfetch Search Results

Now that you have your Python environment set up, let’s get into scraping the search results from Farfetch. This section will guide you through how to inspect the HTML, create a scraper, manage pagination, and save the data into a CSV file.

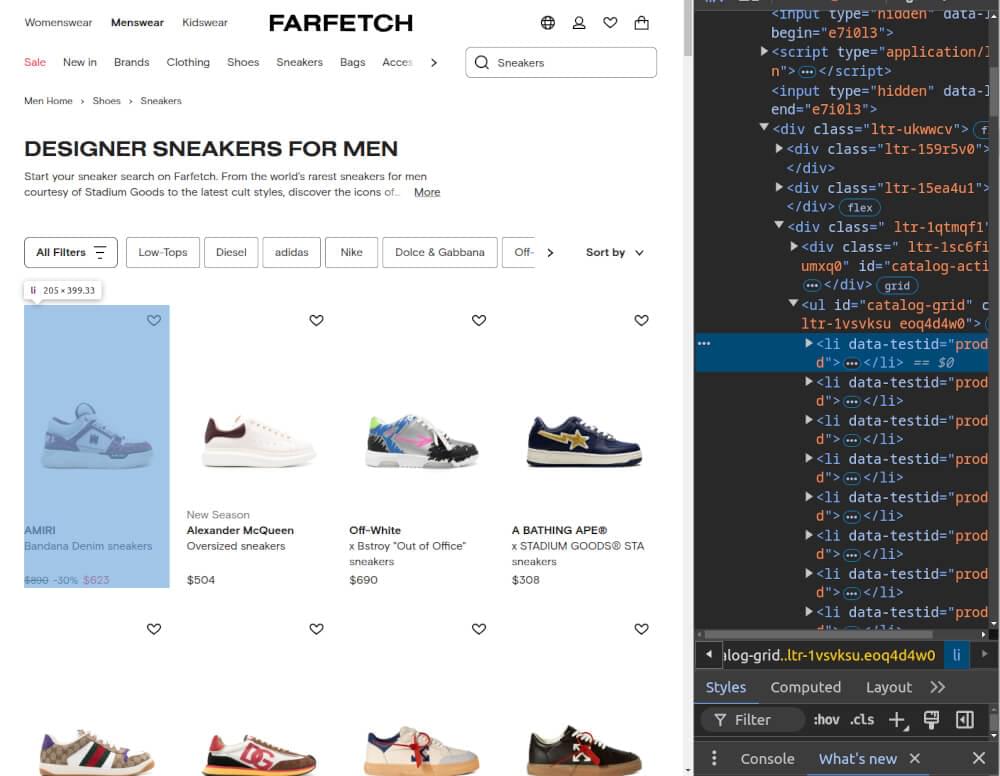

Inspecting the HTML Structure

Before we write the scraper, we need to inspect the HTML of Farfetch’s search results to find the product titles, prices, and links. For this example, we’ll be using a category like “men’s sneakers” from the following URL.

    https://www.farfetch.com/pk/shopping/men/trainers-2/items.aspx

- Open Developer Tools: Go to the URL and press Ctrl + Shift + I (or Cmd + Option + I on Mac) to open your browser’s developer tools.

- Inspect Product Elements: Hover over the product titles, prices, and links to find their corresponding tags and CSS classes.

Key selectors for Farfetch search listings:

- Brand: Found in a `<p>` tag with the `data-component="ProductCardBrandName"` attribute.

- Description: Found in a `<p>` tag with the `data-component="ProductCardDescription"` attribute.

- Price: Found in a `<p>` tag with the `data-component="Price"` or `data-component="PriceFinal"` attribute.

- Discount: Found in a `<p>` tag with the `data-component="PriceDiscount"` attribute.

- Product Link: Found in an `<a>` tag within the product container. The `href` attribute provides the product link, prefixed with `https://www.farfetch.com`.

Writing the Search Listings Scraper

Here’s a Python script to scrape product data using Crawlbase and BeautifulSoup libraries:

    from crawlbase import CrawlingAPI

This code defines a function, scrape_farfetch_listings, to scrape product details from Farfetch search results. It sends a request to the Crawlbase Crawling API to get the Farfetch SERP HTML. It uses the ajax_wait and page_wait parameters provided by the Crawlbase Crawling API to handle JS content. You can read about these parameters in the Crawlbase Crawling API documentation.

If the request is successful, the function uses BeautifulSoup to parse the returned HTML and extract product details for each product card. The extracted data is stored as dictionaries in a list, and the function returns the list of products.
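The flow described above could be sketched roughly as follows. Several details are assumptions rather than confirmed specifics: the product-card container selector (`li[data-testid="productCard"]`), the `page_wait` value of 5000, and the shape of the response (`status_code` and `body` keys). The client is passed in as an argument so the parsing logic can be tried against saved HTML.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.farfetch.com"
# Assumption: each product card is an <li data-testid="productCard"> element.
CARD_SELECTOR = 'li[data-testid="productCard"]'


def _text(card, selector):
    """Return the stripped text of the first match inside `card`, or 'N/A'."""
    node = card.select_one(selector)
    return node.get_text(strip=True) if node else "N/A"


def parse_listings(html):
    """Extract brand, description, price, discount, and link per product card."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select(CARD_SELECTOR):
        link = card.select_one("a[href]")
        products.append({
            "brand": _text(card, 'p[data-component="ProductCardBrandName"]'),
            "description": _text(card, 'p[data-component="ProductCardDescription"]'),
            "price": _text(card, 'p[data-component="Price"], p[data-component="PriceFinal"]'),
            "discount": _text(card, 'p[data-component="PriceDiscount"]'),
            # Relative hrefs are resolved against the site root.
            "link": urljoin(BASE_URL, link["href"]) if link else "N/A",
        })
    return products


def scrape_farfetch_listings(client, url):
    """Fetch a listings page through a Crawlbase-style client and parse it."""
    # Assumed values: wait for AJAX calls, then 5000 ms for rendering.
    options = {"ajax_wait": "true", "page_wait": "5000"}
    response = client.get(url, options)
    if response.get("status_code") != 200:
        print(f"Request failed with status {response.get('status_code')}")
        return []
    body = response["body"]
    html = body.decode("utf-8") if isinstance(body, bytes) else body
    return parse_listings(html)
```

Missing fields fall back to `"N/A"` so that one incomplete card does not abort the whole page.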

Handling Pagination in Farfetch

Farfetch lists products across multiple pages. To scrape all listings, iterate through each page by appending the page parameter to the URL (e.g., ?page=2).

    def scrape_multiple_pages(base_url, total_pages):
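A minimal sketch of this page loop is shown below. Unlike the two-argument signature above, this version takes the per-page scraper as a third argument, `scrape_page`; that is an assumption made here so the loop can be run without network access. `build_page_url` is likewise a hypothetical helper.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def build_page_url(base_url, page):
    """Return `base_url` with its `page` query parameter set to `page`."""
    parts = urlsplit(base_url)
    # Keep existing query parameters, dropping any previous `page` value.
    query = [(k, v) for k, v in parse_qsl(parts.query) if k != "page"]
    query.append(("page", str(page)))
    return urlunsplit(parts._replace(query=urlencode(query)))


def scrape_multiple_pages(base_url, total_pages, scrape_page):
    """Collect results from pages 1..total_pages using `scrape_page(url)`.

    `scrape_page` is any callable that returns a list of product dicts.
    """
    all_products = []
    for page in range(1, total_pages + 1):
        products = scrape_page(build_page_url(base_url, page))
        if not products:
            break  # stop early when a page comes back empty
        all_products.extend(products)
    return all_products
```

Stopping at the first empty page avoids spending requests on page numbers past the end of the category.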

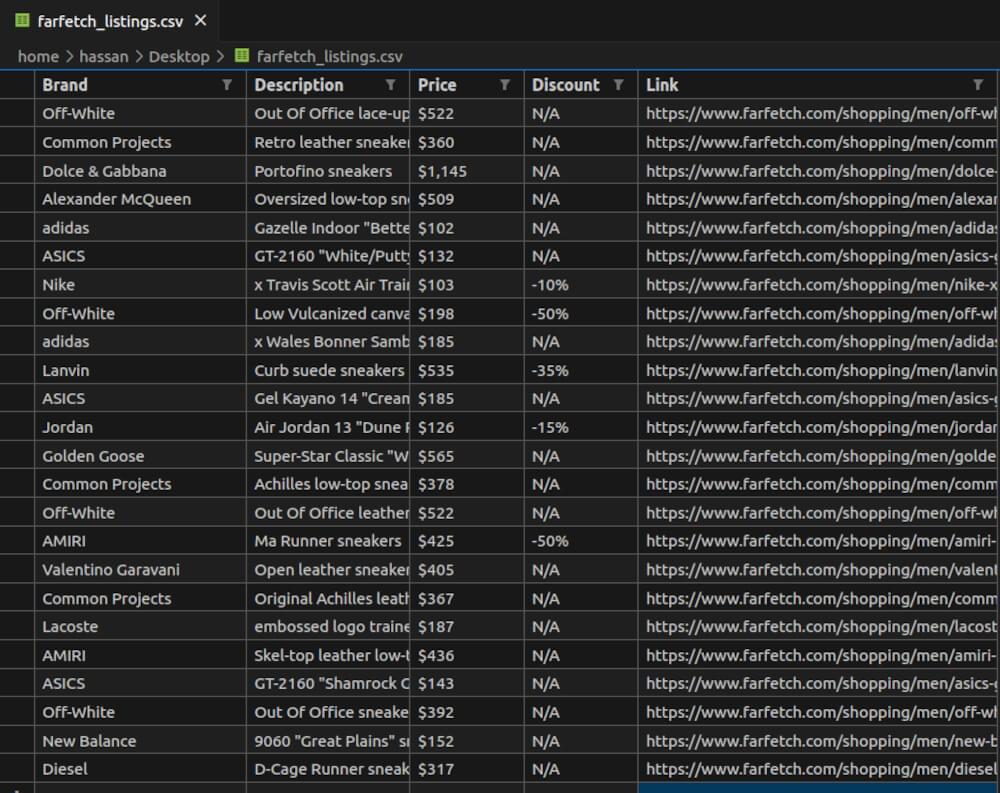

Storing Data in a CSV File

After scraping, save the data into a CSV file for further analysis.

    import csv
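One way to write the listings to disk, sketched with the standard `csv` module. The column names mirror the fields discussed earlier (brand, description, price, discount, link); the `save_to_csv` name and its return value are choices made for this example.

```python
import csv

FIELDNAMES = ["brand", "description", "price", "discount", "link"]


def save_to_csv(products, filename="farfetch_listings.csv"):
    """Write a list of product dicts to `filename` with a header row."""
    # newline="" avoids blank rows on Windows; utf-8 keeps currency symbols intact.
    with open(filename, "w", newline="", encoding="utf-8") as f:
        # Keys outside FIELDNAMES are ignored; missing keys become empty cells.
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(products)
    return len(products)
```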

Complete Code Example

Below is the complete script to scrape Farfetch’s search listings using Crawlbase Crawling API, handle pagination, and save the data to a CSV file:

    from crawlbase import CrawlingAPI

farfetch_listings.csv file Snapshot:

In the next section, we’ll explore scraping individual product pages for more detailed data.

Scraping Farfetch Product Pages

Now that you have the product listings scraped, the next step is to scrape individual product pages to get product descriptions, sizes, materials, and more. Here, we will show you how to inspect the HTML, write a scraper for product pages, and store the data in a CSV file.

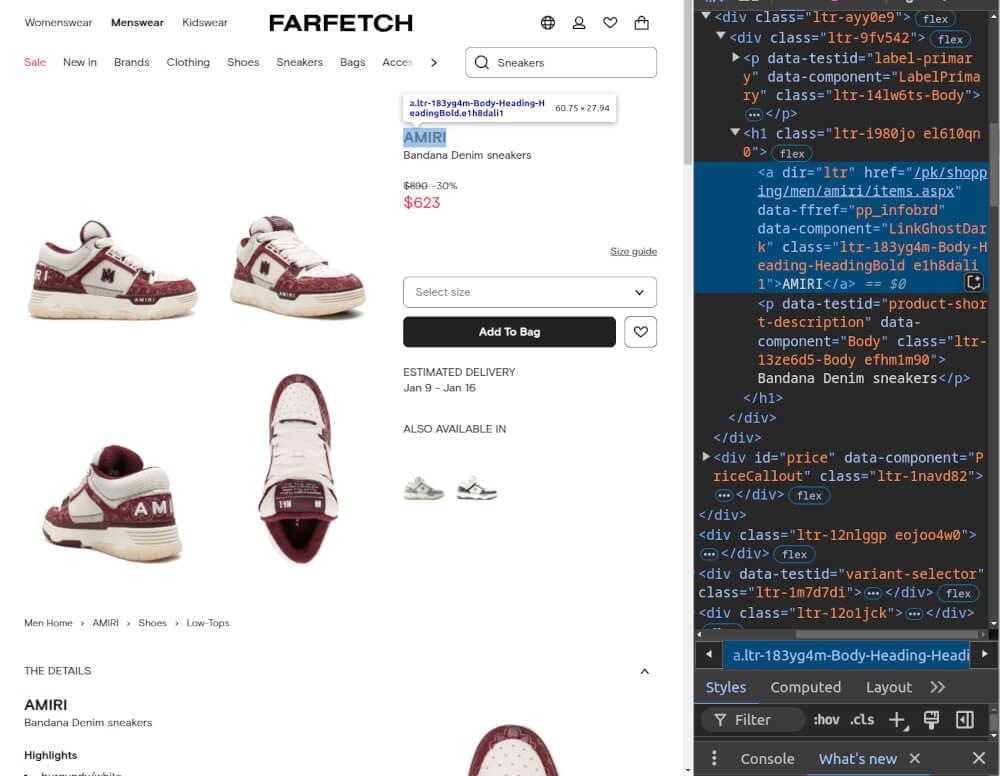

Inspecting the HTML for CSS Selectors

Visit a Farfetch product page, for example:

    https://www.farfetch.com/pk/shopping/men/gucci-screener-sneakers-item-27582236.aspx

Open the developer tools in your browser (Ctrl + Shift + I or Cmd + Option + I on Mac) and inspect the key elements you want to scrape.

Key selectors for Farfetch product pages:

- Blurb: Located in a `<p>` tag with `data-testid="product-short-description"`.

- Brand Name: Located in an `<a>` tag with `data-component="LinkGhostDark"`.

- Price: Located in a `<div>` tag with `id="price"`.

- Description: Located in a nested `<div>` with `data-component="AccordionPanel"`, which is inside a `<div>` with `data-testid="product-information-accordion"`.

Writing the Product Page Scraper

Here’s a Python script to scrape product details using Crawlbase and BeautifulSoup:

    from crawlbase import CrawlingAPI

The scrape_product_page function makes an HTTP request to the given URL with options to render JavaScript. It then uses BeautifulSoup to parse the HTML and extract the blurb, brand, price, description, and sizes. The data is returned as a dictionary. If the request fails, it prints an error.
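The parsing step can be sketched with the selectors listed earlier. This is an illustration, not the full scraper: `parse_product_page` is a hypothetical helper that works on HTML already fetched, and sizes are left out because no selector for them is given here.

```python
from bs4 import BeautifulSoup


def _first_text(soup, selector):
    """Return the text of the first match, pieces joined by spaces, or 'N/A'."""
    node = soup.select_one(selector)
    return node.get_text(" ", strip=True) if node else "N/A"


def parse_product_page(html):
    """Extract blurb, brand, price, and description from product page HTML."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        "blurb": _first_text(soup, 'p[data-testid="product-short-description"]'),
        "brand": _first_text(soup, 'a[data-component="LinkGhostDark"]'),
        "price": _first_text(soup, "div#price"),
        "description": _first_text(
            soup,
            'div[data-testid="product-information-accordion"] '
            'div[data-component="AccordionPanel"]',
        ),
    }
```

Keeping the parser separate from the HTTP request makes it possible to test selector changes against a saved copy of the page.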

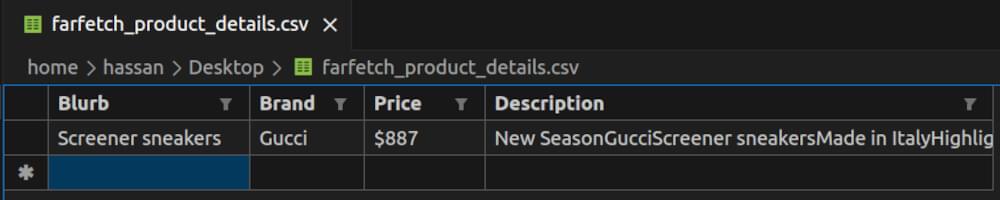

Storing Data in a CSV File

Once you’ve scraped product details, you can save them into a CSV file for easier access.

    import csv
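When product pages are scraped one at a time, appending rows can be more convenient than rewriting the file. The sketch below assumes each product is a dictionary with blurb, brand, price, and description keys; `append_product_to_csv` is a name chosen for this example.

```python
import csv
import os

FIELDNAMES = ["blurb", "brand", "price", "description"]


def append_product_to_csv(product, filename="farfetch_product_details.csv"):
    """Append one product dict as a row, writing the header only for a new file."""
    is_new_file = not os.path.exists(filename) or os.path.getsize(filename) == 0
    with open(filename, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES, extrasaction="ignore")
        if is_new_file:
            writer.writeheader()
        writer.writerow(product)
```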

Complete Code Example

Here’s the full script for scraping a Farfetch product page using Crawlbase Crawling API and saving the data to a CSV file:

    from crawlbase import CrawlingAPI

farfetch_product_details.csv file Snapshot:

Final Thoughts

Scraping Farfetch can give you valuable data for market research and pricing analysis, and help you stay ahead in the fashion game. Using Crawlbase Crawling API and libraries like BeautifulSoup, you can scrape product details, automate data collection, and streamline your workflow.

Remember to respect the website’s terms of service and to scrape ethically. With the code and guidance provided in this blog, you can easily scrape Farfetch search and product pages. Want to scrape more websites? Check out our other guides.

📜 How to Scrape Monster.com

📜 How to Scrape Groupon

📜 How to Scrape TechCrunch

📜 How to Scrape X.com Tweet Pages

📜 How to Scrape Clutch.co

If you have questions or want to give feedback, our support team can help with web scraping. Happy scraping!

Frequently Asked Questions

Q. How do I handle JavaScript content when scraping Farfetch?

When scraping dynamic sites like Farfetch, JavaScript content may not load immediately. Use the Crawlbase Crawling API, which supports JavaScript rendering. This will ensure the page is fully loaded, including dynamic content, before you extract the data. You can set the ajax_wait option to true in the API request to allow enough time for JavaScript to render the page.

Q. Can I scrape product details from multiple pages on Farfetch?

Yes, you can scrape product details from multiple pages on Farfetch. To do this, you need to handle pagination. You can adjust the URL to include a page number parameter and scrape listings from each page in a loop. Because the Crawlbase Crawling API manages proxies for you, scraping multiple pages through it lowers the risk of getting blocked.

Q. How do I store the scraped data?

After scraping data from Farfetch, it’s essential to store it in an organized format. You can save the data in CSV or JSON files for easy access and future use. For example, the code can write the scraped product details to a CSV file, ensuring that the information is saved in a structured manner, which is ideal for analysis or sharing.
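For the JSON option, a short sketch using the standard `json` module might look like this. The `save_to_json` name and the default file name are hypothetical.

```python
import json


def save_to_json(products, filename="farfetch_products.json"):
    """Write a list of product dicts to `filename` as readable JSON."""
    with open(filename, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps currency symbols readable in the file.
        json.dump(products, f, ensure_ascii=False, indent=2)
```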