Houzz is a platform where homeowners, designers and builders come together to find products, inspiration and services. It’s one of the top online platforms for home renovation, interior design and furniture shopping. With over 65 million unique users and 10 million product listings, Houzz is a treasure trove of data for businesses, developers and researchers. The platform offers insights that can be used to build an e-commerce store, do market research or analyze design trends.

In this blog, we’ll walk you through how to scrape Houzz search listings and product pages using Python. We’ll show you how to optimize your scraper using Crawlbase Smart AI Proxy so you can scrape smoothly and efficiently even from websites with anti-scraping measures.

Let’s get started!

Table of Contents

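- Why Scrape Houzz Data?

- Key Data Points to Extract from Houzz

- Setting Up Your Python Environment

  - Installing Python and Required Libraries

  - Choosing an IDE

- Scraping Houzz Search Listings

  - Inspecting the HTML Structure

  - Writing the Houzz Search Listings Scraper

  - Handling Pagination

  - Storing Data in a JSON File

  - Complete Code Example

- Scraping Houzz Product Pages

  - Inspecting the HTML Structure

  - Writing the Houzz Product Page Scraper

  - Storing Data in a JSON File

  - Complete Code Example

- Optimizing with Crawlbase Smart AI Proxy

  - Why Use Crawlbase Smart AI Proxy?

  - How to Add It to Your Scraper?

- Optimize Houzz Scraper with Crawlbase

- Frequently Asked Questions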

Why Scrape Houzz Data?

Scraping Houzz data is useful for a variety of reasons. With its large collection of home products, furniture, and decor, Houzz offers data that can help businesses and individuals make informed decisions. Here are some of the main reasons to scrape it:

- Market Research: If you’re in the home decor or furniture industry, you can analyze product trends, pricing strategies and customer preferences by scraping product details and customer reviews from Houzz.

- Competitor Analysis: For e-commerce businesses, scraping Houzz will give you competitor pricing, product availability and customer ratings so you can stay competitive.

- Product Data Aggregation: If you’re building a website or app that compares products across multiple platforms, scrape Houzz to include its massive product catalog in your data.

- Customer Sentiment Analysis: Collect reviews and ratings to analyze customer sentiment about specific products or brands. This can help brands improve their offerings and help buyers make better decisions.

- Data-Driven Decisions: Scrape Houzz to make informed decisions on what products to stock, how to price them and what customers are looking for.

Key Data Points to Extract from Houzz

When scraping Houzz, you can target data about both products and the businesses behind them. The key data points are:

- Name: The product name.

- Price: The product price.

- Description: Full details on features and materials.

- Images: High-resolution images of the product.

- Ratings and Reviews: Customer feedback on the product.

- Specifications: Dimensions, materials, etc.

- Seller: Information on the seller or store.

- Company: Business name.

- Location: Business location.

- Phone: Business phone number.

- Website: Business website.

- Email: Business email (if listed on the website).
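One way to keep these fields consistent across a scraper is to collect them in a small record type. The sketch below is only an illustration: the class name `ProductRecord` and its field names are assumptions made for this example, not something Houzz or this guide’s scraper defines.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class ProductRecord:
    """Hypothetical container for the product data points listed above."""
    name: str
    price: Optional[str] = None        # kept as displayed text, e.g. "$499.00"
    description: Optional[str] = None
    images: List[str] = field(default_factory=list)
    rating: Optional[str] = None
    specifications: dict = field(default_factory=dict)
    seller: Optional[str] = None


record = ProductRecord(name="Example Vanity", price="$499.00")
record.images.append("https://example.com/vanity.jpg")

# asdict() turns the record into a plain dict that json.dump can serialize.
print(asdict(record))
```

Fields that a page does not provide simply stay at their defaults, which keeps every scraped item in the same shape.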

Setting Up Your Python Environment

To get started scraping Houzz data you need to set up your Python environment. This involves installing Python, the necessary libraries and an Integrated Development Environment (IDE) to make coding easier.

Installing Python and Required Libraries

First, install Python on your computer. You can download the latest version from python.org. After installing, open a terminal or command prompt and confirm that Python is available by typing:

    python --version

Next, you’ll need to install the libraries for web scraping. The two main ones are requests for fetching web pages and BeautifulSoup for parsing the HTML. Install these by typing:

    pip install requests beautifulsoup4

These libraries are essential for extracting data from Houzz’s HTML structure and making the process smooth.

Choosing an IDE

An IDE makes writing and managing your Python code easier. Some popular options include:

- Visual Studio Code: A lightweight, free editor with great extensions for Python development.

- PyCharm: A dedicated Python IDE with many built-in features for debugging and code navigation.

- Jupyter Notebook: Great for interactive coding and seeing your results immediately.

Choose the IDE that suits you and your coding style. Once your environment is set up you’ll be ready to start building your Houzz scraper.

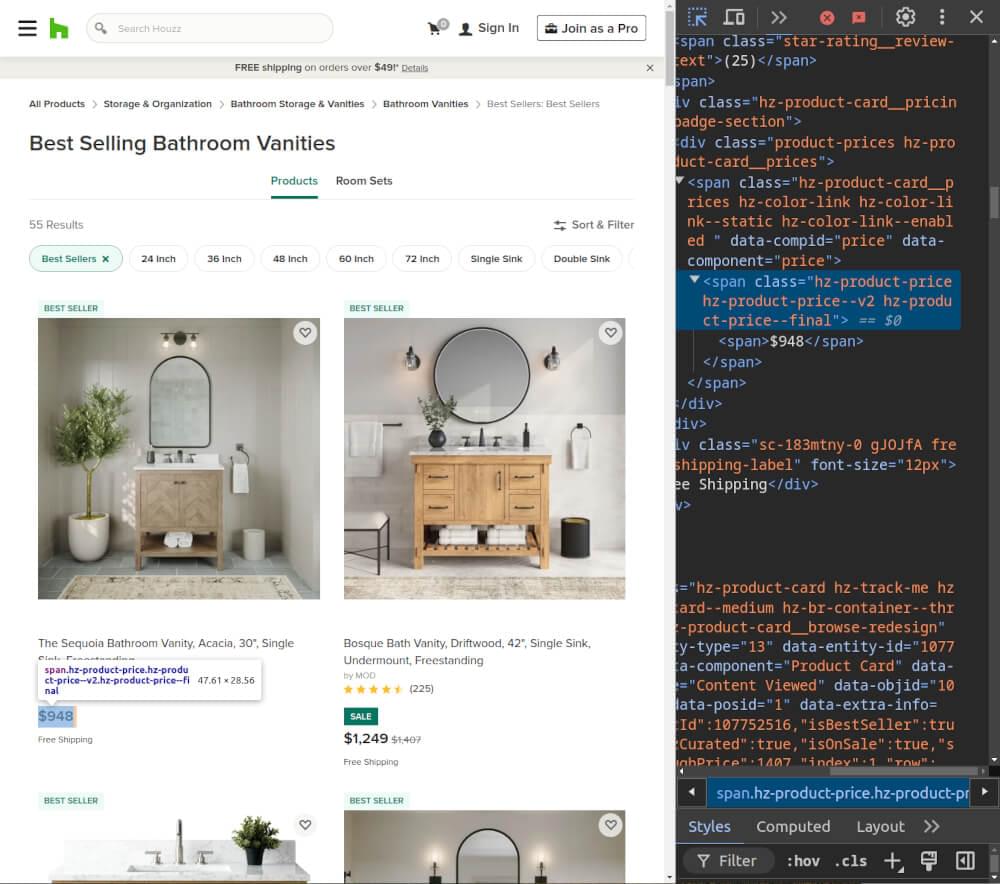

Scraping Houzz Search Listings

In this section, we will focus on scraping Houzz search listings, which display all the products on the site. We will cover how to find CSS selectors by inspecting the HTML, write a scraper to extract data, handle pagination, and store the data in a JSON file.

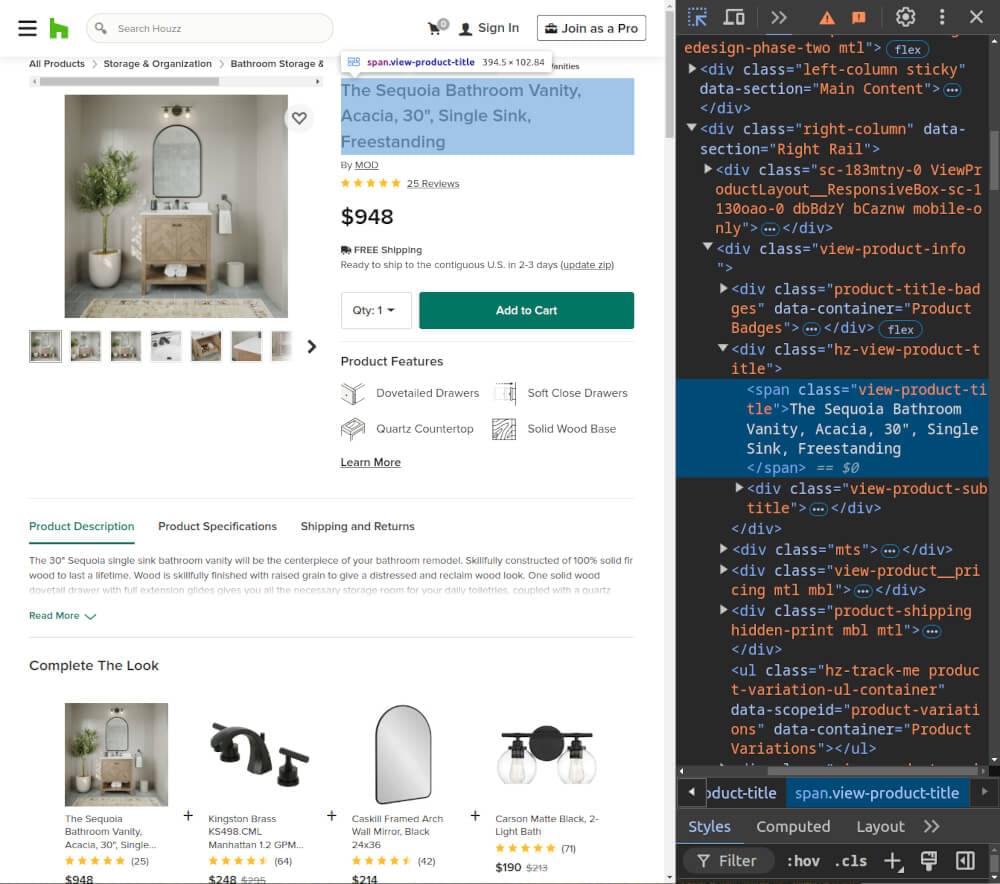

Inspecting the HTML Structure

First of all, you need to inspect the HTML of the Houzz page from which you want to scrape product listings. For example, to scrape bathroom vanities and sink consoles, use the URL:

    https://www.houzz.com/products/bathroom-vanities-and-sink-consoles/best-sellers--best-sellers

Open the developer tools in your browser and navigate to this URL.

Here are some key selectors to focus on:

- Product Title: Found in an `<a>` tag with class `hz-product-card__product-title`, which contains the product name.

- Price: In a `<span>` tag with class `hz-product-price`, which displays the product price.

- Rating: In a `<span>` tag with class `star-rating`, which shows the product’s average rating (accessible via the `aria-label` attribute).

- Image URL: The product image is in an `<img>` tag, and you can get the URL from the `src` attribute.

- Product Link: Each product links to its detailed page in an `<a>` tag, which can be accessed via the `href` attribute.

By looking at these selectors you can target the data you need for your scraper.

Writing the Houzz Search Listings Scraper

Now that you know where the data is located, let’s write the scraper. The following code uses the requests library to fetch the page and BeautifulSoup to parse the HTML.

    import requests
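A minimal sketch of such a scraper, built on the selectors described above, might look like this. The card container selector `div.hz-product-card`, the function names, and the `User-Agent` value are assumptions for illustration; verify the selectors against the live page before relying on them.

```python
import requests
from bs4 import BeautifulSoup


def parse_listings(html):
    """Extract product cards from listing-page HTML.

    The container selector div.hz-product-card is an assumption; the
    title, price, and rating classes are the ones named in this guide.
    """
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select("div.hz-product-card"):
        title = card.select_one("a.hz-product-card__product-title")
        price = card.select_one("span.hz-product-price")
        rating = card.select_one("span.star-rating")
        image = card.select_one("img")
        products.append({
            "title": title.get_text(strip=True) if title else None,
            "price": price.get_text(strip=True) if price else None,
            "rating": rating.get("aria-label") if rating else None,
            "image_url": image.get("src") if image else None,
            "link": title.get("href") if title else None,
        })
    return products


def scrape_houzz_listings(url):
    """Fetch one listing page and return its parsed products."""
    headers = {"User-Agent": "Mozilla/5.0"}  # browser-like UA; adjust as needed
    response = requests.get(url, headers=headers, timeout=30)
    response.raise_for_status()
    return parse_listings(response.text)


# Offline check against hand-written markup (not real Houzz HTML).
sample_html = """
<div class="hz-product-card">
  <a class="hz-product-card__product-title" href="/products/example~1">Example Vanity</a>
  <span class="hz-product-price">$499</span>
  <span class="star-rating" aria-label="Average rating: 4.5 out of 5 stars"></span>
  <img src="https://example.com/vanity.jpg">
</div>
"""
sample_products = parse_listings(sample_html)
print(sample_products)
```

Keeping the parsing in its own function means you can test the selectors on saved HTML without sending a request each time.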

Handling Pagination

To scrape multiple pages, we need to implement a separate function that will handle pagination logic. This function will check if there is a “next page” link and return the URL for that page. We can then loop through all the listings.

Here’s how you can write the pagination function:

    def get_next_page_url(soup):
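As a rough sketch, the function could look like the following. The selector `a.hz-pagination-link--next` and the `base_url` parameter are assumptions for this example, so confirm the actual next-page markup in your browser’s developer tools.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def get_next_page_url(soup, base_url="https://www.houzz.com"):
    """Return the absolute URL of the next page, or None on the last page.

    The selector a.hz-pagination-link--next is an assumption about the
    page markup, not a confirmed Houzz class name.
    """
    next_link = soup.select_one("a.hz-pagination-link--next")
    if next_link is None or not next_link.get("href"):
        return None
    # urljoin leaves absolute hrefs untouched and resolves relative ones.
    return urljoin(base_url, next_link["href"])


# Offline check against hand-written markup (not real Houzz HTML).
with_next = BeautifulSoup(
    '<a class="hz-pagination-link--next" href="/products/vanities/p/36">Next</a>',
    "html.parser",
)
without_next = BeautifulSoup("<div>Last page</div>", "html.parser")

print(get_next_page_url(with_next))
print(get_next_page_url(without_next))
```

Returning `None` when no link is found gives the calling loop a simple stop condition.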

We will call this function in our main scraping function to continue fetching products from all available pages.

Storing Data in a JSON File

Next, we’ll create a function to save the scraped data into a JSON file. This function can be called after retrieving the listings.

    def save_to_json(data, filename='houzz_products.json'):
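A minimal version of this function, using only the standard library, could be written as follows. The encoding and indentation settings are choices made for this sketch rather than requirements.

```python
import json


def save_to_json(data, filename="houzz_products.json"):
    """Write scraped data to a UTF-8 encoded JSON file."""
    with open(filename, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps non-ASCII characters readable in the file.
        json.dump(data, f, ensure_ascii=False, indent=4)
    print(f"Saved {len(data)} items to {filename}")
```

Calling `save_to_json(products)` after scraping writes the whole list in one step, overwriting any earlier file with the same name.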

Complete Code Example

Now, let’s combine everything, including pagination, into a complete code snippet.

    import requests
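The loop that ties the pieces together can be sketched on its own. In the version below, `scrape_all_pages`, `fetch_page`, `max_pages`, and `delay_seconds` are hypothetical names chosen for this example; in a real scraper, `fetch_page` would wrap the request, the listing parser, and the next-page lookup.

```python
import time


def scrape_all_pages(start_url, fetch_page, max_pages=5, delay_seconds=1.0):
    """Follow next-page links from start_url and collect every product.

    fetch_page(url) must return (products, next_url), with next_url set
    to None on the last page. max_pages caps the crawl so that a broken
    pagination selector cannot loop forever.
    """
    all_products = []
    url = start_url
    pages_done = 0
    while url and pages_done < max_pages:
        products, url = fetch_page(url)
        all_products.extend(products)
        pages_done += 1
        if url:
            time.sleep(delay_seconds)  # pause between requests
    return all_products


# Offline check with a fake three-page site instead of real HTTP requests.
fake_site = {
    "page1": ([{"title": "A"}], "page2"),
    "page2": ([{"title": "B"}], "page3"),
    "page3": ([{"title": "C"}], None),
}
results = scrape_all_pages("page1", fake_site.get, delay_seconds=0)
print([p["title"] for p in results])
```

Passing the fetcher in as an argument keeps the loop testable without network access, and the page cap gives you a predictable upper bound on requests.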

This complete scraper will extract product listings from Houzz, handling pagination smoothly.

Example Output:

    [

Next, we will explore how to scrape individual product pages for more detailed information.

Scraping Houzz Product Pages

After scraping the search listings, the next step is to gather more detail from individual product pages. These pages provide additional information about each product, including specs and extra images. In this section, we will look at the HTML of a product page, write a scraper to extract the data and then store that data in a JSON file.

Inspecting the HTML Structure

To scrape product pages, you first need to look at the HTML structure of a specific product page, for example:

    https://www.houzz.com/products/the-sequoia-bathroom-vanity-acacia-30-single-sink-freestanding-prvw-vr~170329010

Open the developer tools in your browser and navigate to this URL.

Here are some key selectors to focus on:

- Product Title: Within a `span` with class `view-product-title`.

- Price: Within a `span` with class `pricing-info__price`.

- Description: Within a `div` with class `vp-redesign-description`.

- Images: Additional images within `img` tags within `div.alt-images__thumb`.

These selectors tell your scraper where each field lives on the page.

Writing the Houzz Product Page Scraper

Now that we know where to find the data, we can create a function to scrape the product page. Here’s how you can write the code to extract the necessary details:

    import requests
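A minimal sketch of a product page scraper, using the selectors listed earlier in this section, might look like this. The function names and the `User-Agent` value are assumptions for illustration, and the selectors should be checked against the live page.

```python
import requests
from bs4 import BeautifulSoup


def parse_product_page(html):
    """Extract title, price, description, and thumbnail URLs from product HTML."""
    soup = BeautifulSoup(html, "html.parser")

    def text_of(selector):
        # Return the stripped text of the first match, or None if absent.
        node = soup.select_one(selector)
        return node.get_text(" ", strip=True) if node else None

    images = [
        img["src"]
        for img in soup.select("div.alt-images__thumb img")
        if img.get("src")
    ]
    return {
        "title": text_of("span.view-product-title"),
        "price": text_of("span.pricing-info__price"),
        "description": text_of("div.vp-redesign-description"),
        "images": images,
    }


def scrape_product_page(url):
    """Fetch a product page and return its parsed details."""
    headers = {"User-Agent": "Mozilla/5.0"}  # browser-like UA; adjust as needed
    response = requests.get(url, headers=headers, timeout=30)
    response.raise_for_status()
    return parse_product_page(response.text)


# Offline check against hand-written markup (not real Houzz HTML).
sample_html = """
<span class="view-product-title">Example Vanity</span>
<span class="pricing-info__price">$499</span>
<div class="vp-redesign-description"><p>Solid acacia.</p><p>Single sink.</p></div>
<div class="alt-images__thumb"><img src="https://example.com/a.jpg"></div>
<div class="alt-images__thumb"><img src="https://example.com/b.jpg"></div>
"""
sample_product = parse_product_page(sample_html)
print(sample_product)
```

Missing elements come back as `None` instead of raising an error, so one changed class name does not stop the whole run.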

Storing Data in a JSON File

Just like the search listings, we can save the data we scrape from the product pages into a JSON file for easy access and analysis. Here’s a function that takes the product data and saves it in a JSON file:

    def save_product_to_json(product_data, filename='houzz_product.json'):

Complete Code Example

To combine everything we’ve discussed, here’s a complete code example that includes both scraping individual product pages and saving that data to a JSON file:

    import requests

This code will scrape detailed information from a single Houzz product page and save it to a JSON file.

Example Output:

    {

In the next section, we will discuss how to optimize your scraping process with Crawlbase Smart AI Proxy.

Optimizing with Crawlbase Smart AI Proxy

When scraping sites like Houzz, IP blocks and CAPTCHAs can slow you down. Crawlbase Smart AI Proxy helps bypass these issues by rotating IPs and handling CAPTCHAs automatically. This allows you to scrape data without interruptions.

Why Use Crawlbase Smart AI Proxy?

- IP Rotation: Avoid IP bans by using a pool of thousands of rotating proxies.

- CAPTCHA Handling: Crawlbase automatically bypasses CAPTCHAs, so you don’t have to solve them manually.

- Increased Efficiency: Scrape data faster by making requests without interruptions from rate limits or blocks.

- Global Coverage: You can scrape data from any location by selecting proxies from different regions worldwide.

How to Add It to Your Scraper?

To integrate Crawlbase Smart AI Proxy, modify your request URL to route through their API:

    import requests
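One common way to send traffic through a proxy with the `requests` library is its `proxies` argument. The sketch below shows that pattern under stated assumptions: the proxy URL is a placeholder, not a real Crawlbase endpoint, and the helper names are hypothetical. Take the actual host, port, and token format from your Crawlbase dashboard or documentation.

```python
import requests


def build_proxies(proxy_url):
    """Map both URL schemes to the same proxy endpoint for requests."""
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url, proxy_url, verify=True, timeout=60):
    """Fetch url through the given proxy and return the response body.

    verify controls TLS certificate checking. Some proxy services ask you
    to disable it; follow your provider's documentation on this point.
    """
    response = requests.get(
        url,
        proxies=build_proxies(proxy_url),
        verify=verify,
        timeout=timeout,
    )
    response.raise_for_status()
    return response.text


# Placeholder only: replace with the endpoint and token from your account.
PROXY_URL = "http://YOUR_ACCESS_TOKEN@proxy.example.com:8012"
print(build_proxies(PROXY_URL))
```

With this in place, a call such as `fetch_via_proxy(listing_url, PROXY_URL)` can stand in for a direct `requests.get` in the scrapers above.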

This will ensure your scraper can run smoothly and efficiently while scraping Houzz.

Optimize Houzz Scraper with Crawlbase

Houzz provides valuable insights for your projects. You can explore home improvement trends and analyze market prices. By following the steps in this blog, you can easily gather important information like product details, prices, and customer reviews.

Using Python libraries like Requests and BeautifulSoup simplifies the scraping process. Plus, using Crawlbase Smart AI Proxy helps you access the data you need without facing issues like IP bans or CAPTCHAs.

If you’re interested in scraping other e-commerce platforms, take a look at the following comprehensive guides.

📜 How to Scrape Amazon

📜 How to Scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Zalando

📜 How to Scrape Costco

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Good luck!

Frequently Asked Questions

Q. Is it legal to scrape product data from Houzz?

Scraping product data from Houzz is acceptable only as long as you follow their terms of service. Read Houzz’s TOS and respect their robots.txt file so that you scrape responsibly and ethically.
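The robots.txt check can be automated with Python’s standard library. The sketch below parses a set of rules and asks whether a URL may be fetched; the rules shown are hypothetical and are not Houzz’s actual robots.txt, and `is_allowed` is a helper name made up for this example.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, url, user_agent="*"):
    """Return True if the given robots.txt rules permit fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


# Hypothetical rules for illustration only.
example_rules = [
    "User-agent: *",
    "Disallow: /private/",
]
print(is_allowed(example_rules, "https://www.example.com/products/vanity"))
print(is_allowed(example_rules, "https://www.example.com/private/data"))
```

To check a live site, you can instead call `set_url()` with the site’s robots.txt address and then `read()` on the parser before calling `can_fetch()`.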

Q. Why should I use a proxy like Crawlbase Smart AI Proxy for scraping Houzz?

Using a proxy like Crawlbase Smart AI Proxy helps prevent IP bans, which can happen if you make too many requests to a website in a short span of time. Proxies can also help you get past CAPTCHA challenges and geographic restrictions so you can scrape data from Houzz or any other website smoothly.

Q. Can I scrape both product listings and product details from Houzz?

Yes, you can scrape both. In this blog, we’ve demonstrated how to extract essential information from Houzz’s search listings and individual product pages. By following similar steps, you can extend your scraper to gather various data points, such as pricing, reviews, specifications, and even business contact details.