Web scraping is a great way to collect data from search engines, but major search engines like Google, Bing, and Yahoo use measures to detect and block scrapers. They monitor traffic patterns, track IP addresses, and rely on browser fingerprinting and CAPTCHAs to prevent automated access.

If your scraper gets blocked, it can disrupt data collection and make it hard to get insights. But if you understand how search engines detect scrapers and use the right techniques, you can avoid getting blocked and keep collecting data.

In this post, we’ll go through how search engines detect scrapers, the methods they use to block them, and proven ways to bypass them. Let’s get started!

Table of Contents

- Unusual Traffic Patterns

- IP Tracking and Blocking

- Browser Fingerprinting

- CAPTCHA Challenges

- JavaScript and Bot Detection

- Rate Limiting and Request Throttling

- Blocking Known Proxy and VPN IPs

- Analyzing User Behavior

- Dynamic Content Loading

- Using Rotating Proxies and User-Agents

- Implementing Headless Browsers and Human-like Interactions

- Slowing Down Requests to Mimic Real Users

- Leveraging CAPTCHA Solving Services

- Using Crawlbase Crawling API for Seamless Scraping

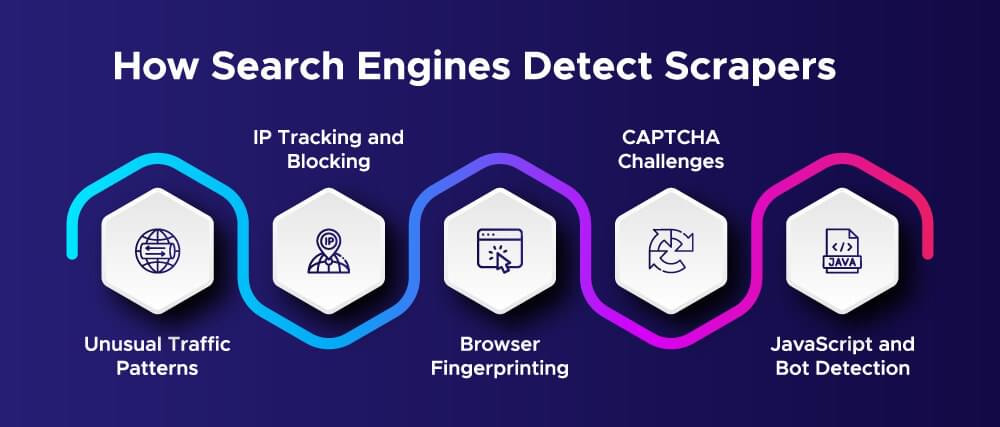

How Search Engines Detect Scrapers

Search engines use several techniques to detect scrapers and block their access. If you understand them, you can build a scraper that behaves more like a human and is less likely to be detected.

- Unusual Traffic Patterns

Search engines monitor traffic for unusual activity. An IP that sends too many requests in a short time is a red flag: rapid requests from the same IP often indicate a bot, and that IP can get blocked or be served a CAPTCHA.
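To make the "too many requests in a short time" signal concrete, here is a minimal sketch of how a server might count requests per IP over a sliding time window. It is illustrative only: the class name `SlidingWindowDetector` and its thresholds are hypothetical, and real search engines combine a counter like this with many other signals.

```python
from collections import defaultdict, deque


class SlidingWindowDetector:
    """Flag an IP that sends more than `max_requests` within `window_seconds`.

    Hypothetical illustration only; the default thresholds are arbitrary.
    """

    def __init__(self, max_requests: int = 10, window_seconds: float = 1.0) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Per-IP queue of recent request timestamps (in seconds).
        self._history = defaultdict(deque)

    def is_suspicious(self, ip: str, timestamp: float) -> bool:
        """Record one request from `ip` and report whether it is over the limit."""
        window = self._history[ip]
        window.append(timestamp)
        # Evict timestamps that have slid out of the time window.
        while window and timestamp - window[0] >= self.window_seconds:
            window.popleft()
        return len(window) > self.max_requests


if __name__ == "__main__":
    detector = SlidingWindowDetector(max_requests=3, window_seconds=1.0)
    for t in (0.0, 0.1, 0.2, 0.3):
        # The fourth request within one second is the first one flagged.
        print(t, detector.is_suspicious("203.0.113.7", t))
```

Because old timestamps are evicted as time moves on, an IP that pauses long enough drops back under the threshold, which is one reason spacing out requests matters.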

- IP Tracking and Blocking

Search engines log IP addresses to track user behavior. If they see an IP sending automated requests, they’ll block it or show a verification challenge. Shared or data center IPs are more likely to get flagged than residential IPs.

- Browser Fingerprinting

Browser fingerprinting collects data about a user’s device, operating system, screen resolution, and installed plugins. If a scraper’s fingerprint doesn’t look like a real user’s, search engines can identify and block it. Headless browsers are often flagged unless they are properly configured.

- CAPTCHA Challenges

Google and other search engines use CAPTCHAs to distinguish humans from bots. When they see unusual behavior, they show a reCAPTCHA or an image verification to confirm that a real user is present. Common triggers include high request rates, missing browser headers, and IPs known to be used by bots.

- JavaScript and Bot Detection

Modern websites (including search engines) use JavaScript to track user interactions such as mouse movements, scrolling, and other behavioral signals. Scrapers that don’t execute JavaScript are easy to detect because they produce none of these signals.

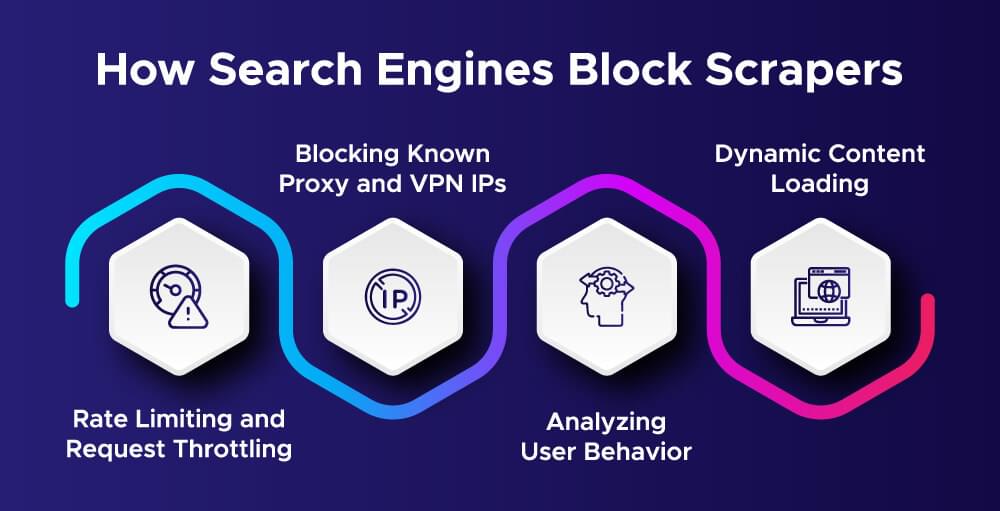

How Search Engines Block Scrapers

Search engines use several methods to block web scrapers and prevent data extraction. Knowing these will help you scrape data without getting blocked.

- Rate Limiting and Request Throttling

Search engines track the number of requests from an IP within a given time frame. If too many requests arrive in a short period, they slow down or block access. That’s why pacing your requests and adding delays between them is key to web scraping.
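When a server throttles a client, it commonly responds with HTTP 429 (Too Many Requests) and may include a `Retry-After` header containing either a number of seconds or an HTTP date. Below is a minimal sketch of how a scraper could decide how long to wait before retrying, assuming you pass in the header value yourself. The function name `retry_delay` and its `base`/`cap` defaults are hypothetical choices, not part of any library.

```python
import random
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_delay(
    attempt: int,
    retry_after: Optional[str] = None,
    base: float = 1.0,
    cap: float = 60.0,
    now: Optional[datetime] = None,
) -> float:
    """Seconds to wait before retrying a throttled (e.g. HTTP 429) request.

    Honors a Retry-After value (delta-seconds or HTTP-date) when present;
    otherwise uses exponential backoff with "full jitter":
    a random wait in [0, min(cap, base * 2**attempt)].
    """
    if retry_after:
        value = retry_after.strip()
        if value.isascii() and value.isdigit():
            return float(value)  # e.g. "Retry-After: 120"
        try:
            when = parsedate_to_datetime(value)  # e.g. an HTTP-date
        except (TypeError, ValueError):
            when = None  # Unparseable header: fall back to backoff below.
        if when is not None:
            if when.tzinfo is None:
                when = when.replace(tzinfo=timezone.utc)
            if now is None:
                now = datetime.now(timezone.utc)
            return max(0.0, (when - now).total_seconds())
    return random.uniform(0.0, min(cap, base * 2 ** attempt))
```

For example, `retry_delay(0, "120")` returns `120.0`, while `retry_delay(3)` returns a random value between 0 and 8 seconds. Honoring `Retry-After` when it is present, and backing off with randomized exponential delays when it is not, keeps retries from hammering a server that has already asked you to slow down.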

- Blocking Known Proxy and VPN IPs

Google and other search engines maintain lists of data center, proxy, and VPN IPs that are commonly used for automation. If your scraper uses one of these IPs, it is likely to be flagged and blocked quickly. Using residential or rotating proxies can help you avoid detection.

- Analyzing User Behavior

Search engines track user interactions like mouse movements, scrolling, and clicking patterns. Bots that don’t mimic these natural behaviors will get detected easily. Using headless browsers with human-like behavior can reduce the chances of getting flagged.

- Dynamic Content Loading

Many search engines now use JavaScript and AJAX to load search results dynamically. Simple scrapers that don’t execute JavaScript may miss important data. Browser automation tools like Selenium or Puppeteer can render JavaScript-heavy pages so you can extract complete, accurate data.

Effective Ways to Bypass Scraper Detection

To scrape search engines without getting blocked, you need to combine several techniques to avoid detection. Below are some of the most effective methods:

- Using Rotating Proxies and User-Agents

Search engines track IP addresses and browser headers to detect automated requests. Rotating proxies makes your requests look like they come from different IPs. Rotating user agents (browser identifiers) makes requests look like they come from different devices and browsers.

- Implementing Headless Browsers and Human-like Interactions

Browser automation tools like Puppeteer or Selenium can drive headless browsers and simulate human behavior such as scrolling, clicking, and mouse movements. These interactions make it less likely that search engines will flag your scraper as a bot.

- Slowing Down Requests to Mimic Real Users

Sending too many requests in a short time is a red flag for search engines. Introduce random delays between requests. This makes your scraper behave like a real user and reduces the chances of getting blocked.
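The random delays described above can be sketched as a small generator that yields one item (for example, a query or URL) at a time and sleeps a random interval in between. The name `paced` and the default range of 2 to 6 seconds are hypothetical; tune them to your own workload.

```python
import random
import time
from typing import Callable, Iterable, Iterator, Optional, TypeVar

T = TypeVar("T")


def paced(
    items: Iterable[T],
    min_delay: float = 2.0,
    max_delay: float = 6.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> Iterator[T]:
    """Yield items one by one, pausing a random interval between consecutive items."""
    if not 0 <= min_delay <= max_delay:
        raise ValueError("expected 0 <= min_delay <= max_delay")
    if rng is None:
        rng = random.Random()
    for index, item in enumerate(items):
        if index > 0:
            # Uniform jitter so the gaps between requests are irregular.
            sleep(rng.uniform(min_delay, max_delay))
        yield item


if __name__ == "__main__":
    recorded = []  # Collect delays instead of sleeping, for the demo.
    for query in paced(["query one", "query two", "query three"], sleep=recorded.append):
        print("would fetch:", query)  # Replace with your actual HTTP request.
    print("delays:", [round(d, 2) for d in recorded])
```

Passing `sleep` and `rng` in as parameters keeps the function easy to test without actually waiting.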

- Leveraging CAPTCHA Solving Services

When search engines detect suspicious activity, they trigger CAPTCHA challenges to verify human presence. Services like 2Captcha and Anti-Captcha can solve these challenges for you so your scraper can continue to run smoothly.

- Using Crawlbase Crawling API for Seamless Scraping

The Crawlbase Crawling API handles IP rotation, CAPTCHA solving, and JavaScript rendering for you. You can extract SERP data easily without worrying about bans or restrictions. It’s the best solution for hassle-free web scraping.

By following these tips, you can scrape search engine data more effectively and with less detection and blocking.

Final Thoughts

Scraping search engines is hard because of anti-bot measures, but with the right strategies, you can get the data without getting blocked. Rotating proxies, headless browsers, randomized request timing, and CAPTCHA-solving services all help you bypass detection.

For hassle-free, reliable scraping, the Crawlbase Crawling API has you covered: it takes care of proxies, JavaScript rendering, and CAPTCHA bypassing for you. By following best practices, you can keep scraping search engines over the long term with minimal interruptions.

Frequently Asked Questions

Q. How do search engines detect scrapers?

Search engines use multiple techniques to detect scrapers, such as monitoring unusual traffic patterns, tracking IP addresses, fingerprinting browsers, and using CAPTCHA challenges. They also analyze user behavior to differentiate between bots and real users.

Q. What is the best way to avoid getting blocked while scraping?

The best way to avoid getting blocked is by using rotating proxies, changing user agents, implementing headless browsers, slowing down requests, and solving CAPTCHAs automatically. Services like Crawlbase Crawling API make this process seamless by handling these for you.

Q. Can I scrape search engines legally?

Scraping search engines is a legal gray area. Public data is accessible, but scraping must follow terms of service and ethical guidelines. Don’t make excessive requests, respect robots.txt rules, and ensure you’re not violating any data protection laws.
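Python’s standard library includes `urllib.robotparser` for checking robots.txt rules before fetching a URL. Below is a minimal sketch that uses a hypothetical robots.txt body and user-agent string so it runs offline; against a live site you would call `set_url()` and `read()` on the parser instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /about
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if `robots_txt` permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    agent = "ExampleScraper/1.0"  # Hypothetical user-agent string.
    for url in ("https://example.com/search?q=test", "https://example.com/about"):
        # Prints False for the /search URL and True for /about.
        print(url, is_allowed(SAMPLE_ROBOTS_TXT, agent, url))
```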